A neural network scoring engine in PL/SQL for handwritten digit recognition

Dear colleagues, we have something to please everyone who cares about science-intensive tasks. Today we present a translation of an interesting publication by database experts from CERN on training and running neural networks with Python and Oracle PL/SQL-based tools.

In this article you will find an example of building and deploying a basic mechanism for counting an artificial neural network using PL / SQL . The article is intended for educational purposes, in particular for Oracle practitioners who want to get acquainted with neural networks with a concrete example.

Currently, machine learning and neural networks are current topics in data processing. Many tools and platforms are now available for work and experiments with neural networks and deep learning (see links at the end of this article). Recognition of handwritten numbers, in particular, using the MNIST database , Jan Lekun, and others, is now an introductory example for familiarizing with neural networks.

')

In this article, you will see how to build and deploy a simple neural network counting mechanism to recognize handwritten numbers using Oracle and PL / SQL. The end result is a small PL / SQL package with an accuracy of about 98%. The neural network is created and trained using TensorFlow , and then transferred to Oracle for maintenance.

One of the ideas that this article aims to illustrate is that counting neural networks is much simpler than their training: the operations necessary to maintain a trained network can be implemented relatively easily in many languages / programming environments. Discussions on these topics are usually centered around platforms for “Big data” (for example, Spark and MLlib ). Interestingly, neural networks can also be successfully applied in the world of RDBMS. This can be useful since a large amount of valuable data is currently stored in relational databases . In the case of Oracle, the implementation of the counting mechanism is also simplified due to the presence of a mature PL / SQL environment with a package for linear algebra : UTL_NLA .

Let's start from the end: how to deploy the MNIST PL / SQL package and recognize handwritten numbers in Oracle

One short PL / SQL package and two tables are all you need to play the next example (you can find the detailed code on Github ). Tables:

The mechanism for counting the neural network from the example is in a package called MNIST . It has an INIT procedure that loads the neural network components from the tensors_array table into PL / SQL variables and the SCORE function, which takes an image as input and returns a number — the predicted value of the digit.

Here is an example of its use, where the first picture in the testdata_array table is checked and correctly recognized as an image of the number 7 (the image label is consistent with the prediction MNIST.SCORE):

Figure 1 : This is a raster display of the test image used in the example. It confirms that the MNIST.SCORE prediction is correct and indeed the picture is an image of the number 7, handwritten and encoded in a grid of 28x28 gray-scale pixels.

Processing of all test images is also performed with a simple SQL command. In the example in fig. 2, it takes 2 minutes to process 10,000 test images, an average of about 12 ms per image. The accuracy of the evaluation function is about 98% . It is calculated as follows: according to data labels, 9787 out of 10,000 images are estimated correctly. Note also that the set of test images does not overlap with the images used to train the neural network. Therefore, we can expect that the MNIST package has a digit recognition accuracy of about 98%, including when used on general input.

The complete PL / SQL code and datapump dump file with the corresponding tables can be found on Github . In the following paragraphs, I will explain how to build and train a neural network.

Figure 2 : The accuracy of the PL / SQL MNIST.SCORE evaluation function on a test set of 10K images is about 98%. Processing takes about 12 ms per image.

Neural network

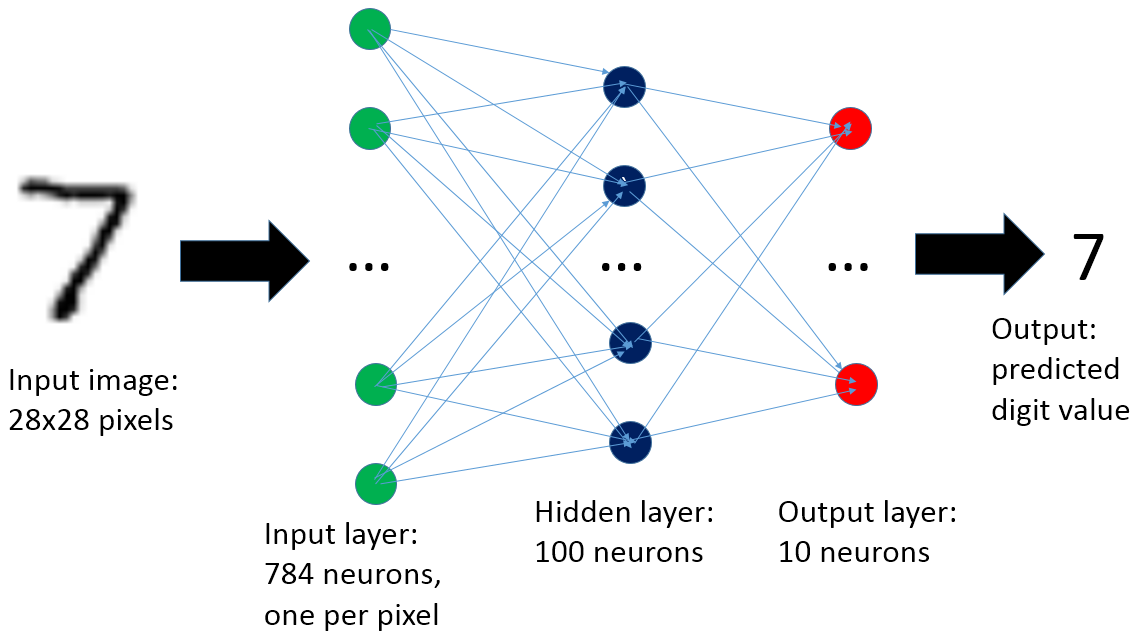

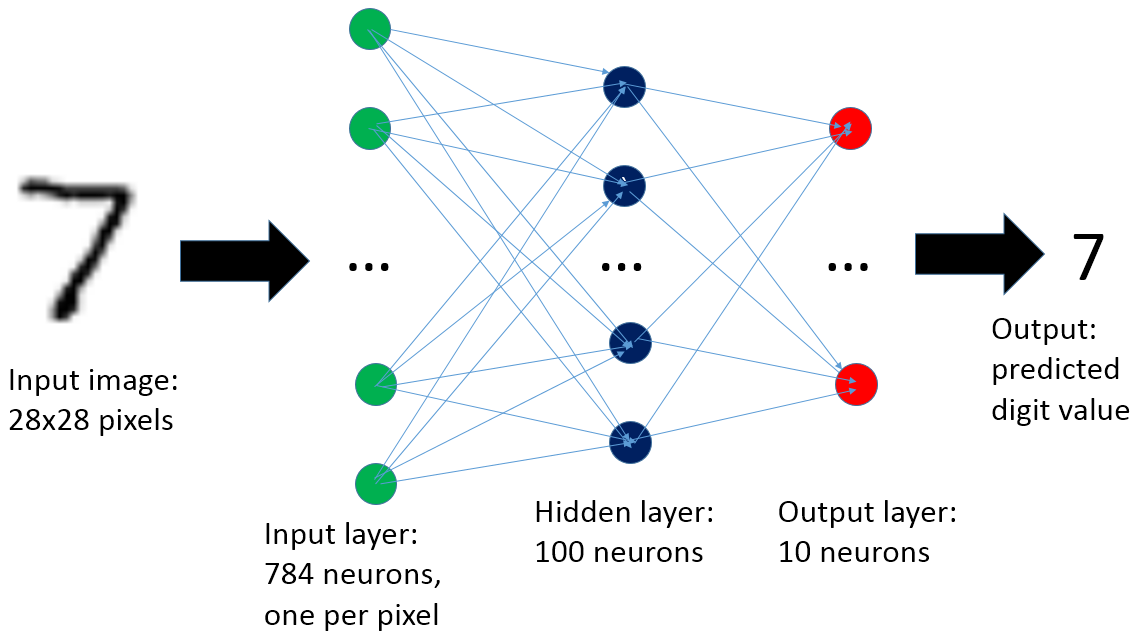

The neural network used in this article consists of three layers (see Fig. 3): one input layer, one hidden layer and one output layer. If this topic is new to you, I recommend reading additional literature (see References) and, in particular, the book “Neural Networks and Deep Learning” by Michael Nielsen , which will provide an excellent introduction to the topic and a series of step-by-step examples regarding the problem of recognizing handwritten numbers.

Figure 3 : The artificial neural network used in this article consists of three layers. The input layer contains 784 neurons, one per pixel of the incoming image. To improve accuracy, a hidden layer of 100 neurons is added. The output layer has 10 neurons, one for each possible output value (that is, numbers from 0 to 9).

Get training and test data, build and train a neural network.

Another important step for deploying neural networks is learning . For this you need data , if possible a lot. You also need an engine to do the necessary calculations. Fortunately, there are many platforms available for working with neural networks that are free and relatively used for deployment (see References). In this article, you will see how to use TensorFlow from Google and the Python environment. TensorFlow comes with instructions for recognizing handwritten numbers in the MNIST database . The instruction includes training and test data with labels , as well as code samples.

You can find the code I used to train the neural network on Github . Some highlights and code snippets are discussed below.

Data Import : The sample data set that comes with TensorFlow includes 55,000 images for training and 10,000 images for testing. They initially proceed from the work of Jan Lekun and colleagues. Having a lot of high-quality data is very important for the success of the process. In addition, the images have labels : they indicate how many are shown in the picture, and this information is very important because the exercise is to implement supervised learning .

Definition of a neural network : there are four tensors in the network (in this case, a vector and matrices): W0, W1, b0 and b1. They are defined in the following code snippet. To better understand their role, as well as the value of cross-entropy and the optimizer of gradient descent for neural network training, see the links, namely “Neural Networks and Deep Learning” and the TensorFlow instruction.

Neural network training: training takes place with several stages of optimization. It is performed using 55,000 tagged images. More than 30,000 iterations are performed using “mini-portions” with a size of 100 images. At each step, the gradient descent algorithm calculates the update of weights and offsets (W0, W1, bo, and b1) in order to minimize the loss function (cross_entropy). Corresponding code snippet:

Result : as a result, the trained network has an accuracy of about 98% in predicting the images in the test set. Please note that the test set consists of 10,000 images and does not match the set of images used for training (the training set contains 55,000 images).

Higher accuracy can be obtained with more complex neural network configurations (see Links for details), but this is beyond the scope of this article.

Manual counting of a neural network, example in Python

The main result of the training operations is that the tensors (in this case, the matrices and vectors) that make up the neural network are now filled with useful values. I believe that a good way to understand how it all works is to “start the network manually”, that is, to start as an example the transition from the image of a handwritten digit to the prediction of its value by a trained neural network. As a first step, we extract the values of the trained tensors from our model into numpy arrays for further processing:

An example of "manual" network management in Python is as follows:

W0_matrix, b0_array, W1_matrix and b1_array are the tensors that make up the neural network after learning, testimage is the input layer, sigmoid () is used as an activation function, hidden_layer represents the hidden network layer, predicted is the output layer, and softmax () is a function that used to normalize output as a probability distribution. At the end of the calculation, the predicted [n] array contains the prediction that the input image represents the digit “n”. The argmax () function finds the value “n” for which predicted [n] is maximum.

The code shown above predicts a value of 7 for the test image. The forecast is confirmed as the correct value of the label, and can also be visually confirmed by a raster display of the test image (see Fig. 1).

Migrate Test Data and Network to Oracle

The example from the previous paragraph on how to manually start the counting mechanism shows that the maintenance of a neural network can be straightforward, in some cases it is just a matter of performing some basic calculations with matrices. This contrasts with the complexity of learning neural network models, where a specialized engine is often required, high quality of training data, and in more complex cases, more specialized equipment, such as a GPU card.

The topic of the previous paragraph also set the stage for the next development: this is the movement of the neural network tensors and test data to Oracle and the implementation of the service mechanism there.

There are many ways to export Python numpy arrays. One of them is to save the arrays in text format . Here you will see a method intended for direct export to Oracle using cx_Oracle, the Python library for interacting with Oracle. For more examples and links on how to use cx_Oracle, I advise you to read the notes "Oracle and Python and cx_Oracle" .

The code can be found on Github , here are some relevant snippets:

- Create tables for placement of definitions of tensors and test data:

- From Python, open a connection to Oracle:

- An example of how to transfer the matrix W0 to the Oracle tensors table:

Finally, you can export the testdata and tensors tables for later use. In the Github repository you can find the dump file obtained using the following command (run as Oracle):

Oracle Optimizations for Linear Algebra

From the Oracle documentation: "The UTL_NLA package provides a subset of BLAS and LAPACK operations (version 3.0) for vectors and matrices represented as VARRAYS." This is very useful for performing the calculations necessary to service the neural network from this article.

Below is a snippet of MNIST code to see how this works in practice. The code performs the calculation v_Y0 = v_Y0 + g_W0_matrix * p_testimage_array , where g_W0_matrix is the 784x100 matrix, p_testimage_array is the vector of 784 elements (encodes 28x28 images), and v_Y0 is the vector of 100 elements.

To use UTL_NLA, tensors that make a neural network and test images must be stored in varrays from binary_float or, rather, be declared with the data type UTL_NLA_ARRAY.

For this reason, it is also convenient to post-process the tensors and testdata tables as follows:

The final step that brings you back to the discussion in the section “Let's start at the end: how to deploy the MNIST PL / SQL package and recognize the handwritten numbers in Oracle” is to create the MNIST PL / SQL package that loads the tensors and performs the operations necessary to calculate the neural network, detailed code can be viewed on Github .

Conclusion and comments

This article describes an example of the implementation of the counting mechanism for an artificial neural network using a relational DBMS Oracle and PL / SQL. This is a simple example implementation for familiarizing with neural networks: recognizing handwritten numbers in the MNIST database . The network is trained using TensorFlow and then exported to Oracle. The end result is a small PL / SQL package that provides digit recognition with an accuracy of around 98% .

In the near future, we can expect an increase in the deployment of neural networks near sources and data warehouses . The example in this article of how to implement a neural network maintenance mechanism in an Oracle database shows that this is not only possible, but also easy to implement .

Maintenance of neural networks is much easier than their learning. Although training requires specialized software / platforms, knowledge of the subject area and a large amount of training data, trained networks can be imported and run on target systems, which in many cases requires low utilization of computational resources.

This article was conceived as an educational material : instead of a more productive convolutional network, a simple neural network of direct propagation is used (see References). Moreover, moving data from TensorFlow to Oracle and implementing the servicing engine in PL / SQL is a kind of “crutch” in the current state, and it is not intended for use in production.

The code accompanying this article is available on Github .

Notes on how to create a test environment

The main components and tools for testing scripts in this article are:

Python environment (on Linux with CentOS 7) installed using Anaconda 4.1: Python 2.7, Jupyter Ipython notebook.

TensorFlow , version 0.9 (last at the time of writing), installed in accordance with the instructions https://www.tensorflow.org/versions/r0.9/get_started/os_setup.html

Oracle's relational database engine running on Linux. Oracle scripts were tested on Oracle 11.2.0.4 and 12.1.0.2

Links and thanks

An excellent introduction to neural networks and inspiration for this article was the book by Michael Nielsen (Michael Nielsen) "Neural Networks and Deep Learning" .

The neural network learning code used in this article is an extension of Google's TensorFlow MNIST instruction .

I also recommend: the TensorFlow tutorial by Nartin Gorner ,

"Basic techniques for TensorFlow" Aaron Schumacher (Aaron Schumacher),

MNIST Databases by Yann LeCun,

MNIST Visualization by Christopher Olah,

"Machine learning Python" Sebastian Raschka (Sebastian Raschka).

Other popular frameworks for working with neural networks and deep learning, in addition to TensorFlow , include Theano and Torch among many others, see also this page on Wikipedia .

In the summer we invite all fans of science-intensive tasks and computer science to visit the database section of the same name and computer science at PG Day'17 Russia . We will discuss blockchains, data modeling and visualization, DBMS profiling theory, data mining and some more interesting topics :)

And do not forget that this year a section for specialists working with Oracle and other commercial databases will be held as part of PG Day. First-class reports are expected from experts from major technology companies and a full-fledged master class on the performance diagnostics of the Oracle Database .

In this article you will find an example of how to build and deploy a basic scoring engine for an artificial neural network using PL/SQL. The article is intended for educational purposes, in particular for Oracle practitioners who want to get acquainted with neural networks through a concrete example.

Machine learning and neural networks are currently hot topics in data processing. Many tools and platforms are now available for working and experimenting with neural networks and deep learning (see the references at the end of this article). Recognizing handwritten digits, in particular with the MNIST database by Yann LeCun et al., has become a standard introductory example for getting acquainted with neural networks.


In this article, you will see how to build and deploy a simple neural network scoring engine that recognizes handwritten digits using Oracle and PL/SQL. The end result is a small PL/SQL package with an accuracy of about 98%. The neural network is built and trained with TensorFlow and then transferred to Oracle for serving.

One of the ideas this article aims to illustrate is that scoring with a neural network is much simpler than training it: the operations needed to serve a trained network can be implemented relatively easily in many languages and programming environments. Discussions of these topics usually center on “Big Data” platforms (for example, Spark and MLlib). Interestingly, neural networks can also be applied successfully in the RDBMS world. This can be useful, since a large amount of valuable data is currently stored in relational databases. In the case of Oracle, implementing the scoring engine is further simplified by the mature PL/SQL environment, which includes a linear algebra package: UTL_NLA.

Let's start from the end: how to deploy the MNIST PL/SQL package and recognize handwritten digits in Oracle

One short PL/SQL package and two tables are all you need to run the following example (you can find the detailed code on GitHub). Tables:

- TENSORS_ARRAY: This table contains the numerical values of the vectors and matrices (tensors) that make up the neural network. There are 79,510 floating-point numbers in total, encoded in four tensors using the UTL_NLA_ARRAY_FLT data type.

- TESTDATA_ARRAY: This table contains the test images. There are 10K images, each consisting of 28 * 28 = 784 pixels. The images are also encoded using the UTL_NLA_ARRAY_FLT data type.
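As a quick check of the figure of 79,510 values, here is the arithmetic in Python. The tensor shapes are an assumption inferred from the layer sizes in Figure 3 (784 inputs, 100 hidden neurons, 10 outputs) and from the 784x100 size of W0 given later in the article.

```python
# Assumed tensor shapes, inferred from the layer sizes (784 -> 100 -> 10).
TENSOR_SHAPES = {
    "W0": (784, 100),  # input-to-hidden weights
    "b0": (100,),      # hidden-layer biases
    "W1": (100, 10),   # hidden-to-output weights
    "b1": (10,),       # output-layer biases
}


def num_values(shape):
    """Number of scalar values stored in a tensor of the given shape."""
    count = 1
    for dim in shape:
        count *= dim
    return count


total = sum(num_values(shape) for shape in TENSOR_SHAPES.values())
print(total)  # 79510
```

The weight matrix W0 alone accounts for 78,400 of these values; each test image, by comparison, holds 28 * 28 = 784 values.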

The neural network scoring engine from the example lives in a package called MNIST. It has an INIT procedure that loads the neural network components from the tensors_array table into PL/SQL variables, and a SCORE function that takes an image as input and returns a number: the predicted value of the digit.

Here is an example of its use, where the first image in the testdata_array table is scored and correctly recognized as the digit 7 (the image label agrees with the MNIST.SCORE prediction):

```sql
SQL> exec mnist.init

PL/SQL procedure successfully completed.

SQL> select mnist.score(image_array), label from testdata_array where rownum=1;

MNIST.SCORE(IMAGE_ARRAY)      LABEL
------------------------ ----------
                       7          7
```

Figure 1: A raster display of the test image used in the example. It confirms that the MNIST.SCORE prediction is correct: the image is indeed a handwritten 7, encoded in a 28x28 grid of grayscale pixels.
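The 784 values of an image only become a picture once they are laid out as a 28x28 grid. The sketch below assumes the pixels are stored row by row (so element k sits at row k // 28, column k % 28) with intensities scaled to the range 0 to 1; the helper names and the 0.5 threshold are illustrative choices, not part of the article's code.

```python
def to_grid(pixels, width=28):
    """Split a flat, row-major list of pixel values into rows of `width` values."""
    if len(pixels) % width != 0:
        raise ValueError("pixel count is not a multiple of the row width")
    return [pixels[start:start + width] for start in range(0, len(pixels), width)]


def render(pixels, width=28, threshold=0.5):
    """Crude text raster: '#' for pixels brighter than `threshold`, '.' otherwise."""
    return "\n".join(
        "".join("#" if value > threshold else "." for value in row)
        for row in to_grid(pixels, width)
    )


# Usage: a synthetic 28x28 image with a single vertical stroke in column 14.
image = [0.0] * 784
for r in range(4, 24):
    image[r * 28 + 14] = 1.0
print(render(image))
```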

All test images can also be processed with a single SQL statement. In the example in Fig. 2, processing the 10,000 test images takes 2 minutes, an average of about 12 ms per image. The accuracy of the scoring function is about 98%: according to the data labels, 9,787 of the 10,000 images are classified correctly. Note also that the test images do not overlap with the images used to train the neural network, so we can expect the MNIST package to recognize digits with an accuracy of about 98% on new input of the same kind as well.
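The accuracy and timing figures quoted above follow from simple arithmetic. The `accuracy` helper below is a hypothetical illustration of how such a figure is computed from predictions and labels; the inputs are the numbers reported in the text.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that agree with the corresponding labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


# Figures reported in the text.
correct, total = 9787, 10_000
elapsed_seconds = 2 * 60

print(f"accuracy: {correct / total:.2%}")                          # accuracy: 97.87%
print(f"time per image: {elapsed_seconds * 1000 / total:.0f} ms")  # time per image: 12 ms
```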

The complete PL/SQL code and a Data Pump dump file with the corresponding tables can be found on GitHub. In the following sections, I will explain how the neural network was built and trained.

Figure 2: The accuracy of the PL/SQL MNIST.SCORE function on a test set of 10K images is about 98%. Processing takes about 12 ms per image.

Neural network

The neural network used in this article consists of three layers (see Fig. 3): an input layer, a hidden layer and an output layer. If this topic is new to you, I recommend additional reading (see References), in particular the book “Neural Networks and Deep Learning” by Michael Nielsen, which provides an excellent introduction to the topic and a series of step-by-step examples built around recognizing handwritten digits.

Figure 3: The artificial neural network used in this article has three layers. The input layer contains 784 neurons, one per pixel of the input image. To improve accuracy, a hidden layer of 100 neurons is added. The output layer has 10 neurons, one for each possible output value (the digits 0 to 9).

Getting training and test data, building and training the neural network

Another important step in deploying neural networks is training. For this you need data, ideally a lot of it. You also need an engine to do the necessary computations. Fortunately, many free platforms for working with neural networks are available and are relatively easy to deploy (see References). In this article, you will see how to use Google's TensorFlow with a Python environment. TensorFlow comes with a tutorial on recognizing handwritten digits in the MNIST database. The tutorial includes labeled training and test data as well as code samples.

You can find the code I used to train the neural network on GitHub. Some highlights are discussed below.

Data import: The sample data set that comes with TensorFlow includes 55,000 images for training and 10,000 images for testing. They originally come from the work of Yann LeCun and colleagues. Having a lot of high-quality data is very important for success. In addition, the images are labeled: each label indicates which digit is shown in the picture. This information is essential because the exercise is an instance of supervised learning.

Neural network definition: the network consists of four tensors (here, two matrices and two vectors): W0, W1, b0 and b1. They are defined in the training code on GitHub. To better understand their role, as well as the cross-entropy loss and the gradient descent optimizer used to train the network, see the references, namely “Neural Networks and Deep Learning” and the TensorFlow tutorial.
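To make the loss concrete: the output layer's raw values are turned into probabilities with softmax, and cross-entropy measures how little probability the network assigns to the correct digit. The plain-Python sketch below restates that math for a single image; it is not TensorFlow's implementation, and the one-hot label encoding used here is an assumption for illustration.

```python
import math


def softmax(logits):
    """Turn raw output values into probabilities (shifted by the max for numerical stability)."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def one_hot(label, num_classes=10):
    """Encode a digit label as a vector with 1.0 at the label's position."""
    return [1.0 if k == label else 0.0 for k in range(num_classes)]


def cross_entropy(target, probs):
    """Cross-entropy between a one-hot target and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0)


# With all-zero outputs every digit gets probability 0.1, so the loss is ln(10).
uniform = softmax([0.0] * 10)
print(round(cross_entropy(one_hot(7), uniform), 4))  # 2.3026
```

A confident, correct prediction drives the loss toward 0; training adjusts W0, W1, b0 and b1 to lower its average over the training images.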

Neural network training: training proceeds in a series of optimization steps using the 55,000 labeled images. It is performed over 30,000 iterations, each on a “mini-batch” of 100 images. At each step, the gradient descent algorithm computes an update of the weights and biases (W0, W1, b0 and b1) that reduces the loss function (cross_entropy). The corresponding code is in the GitHub repository.
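The mini-batch schedule can be illustrated without TensorFlow. The sketch below uses a hypothetical helper, not the tutorial's batching code: it reshuffles the training indices on every pass and hands out batches of 100. It also shows that 30,000 iterations of 100 images amount to about 54.5 passes over the 55,000 training images.

```python
import random


def minibatches(n_examples, batch_size, rng):
    """Endlessly yield lists of example indices, reshuffling at the start of every pass."""
    if batch_size > n_examples:
        raise ValueError("batch size larger than the data set")
    while True:
        order = list(range(n_examples))
        rng.shuffle(order)
        # Any incomplete batch at the end of a pass is dropped.
        for start in range(0, n_examples - batch_size + 1, batch_size):
            yield order[start:start + batch_size]


def passes_over_data(iterations, batch_size, n_examples):
    """How many times the schedule sweeps through the training set."""
    return iterations * batch_size / n_examples


batches = minibatches(55_000, 100, random.Random(0))
first = next(batches)
print(len(first))                                       # 100
print(round(passes_over_data(30_000, 100, 55_000), 1))  # 54.5
```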

Result: the trained network predicts the images in the test set with an accuracy of about 98%. Note that the test set consists of 10,000 images and does not overlap with the images used for training (the training set contains 55,000 images).

Higher accuracy can be obtained with more complex neural network configurations (see References for details), but this is beyond the scope of this article.

Scoring a neural network by hand: an example in Python

The main result of training is that the tensors (here, the matrices and vectors) that make up the neural network are now filled with useful values. I believe a good way to understand how it all works is to “run the network by hand”, that is, to walk through the steps that take the image of a handwritten digit to the trained network's prediction of its value. As a first step, we extract the values of the trained tensors from the model into numpy arrays for further processing.

Scoring the network “by hand” in Python then takes only a few lines of numpy code.

W0_matrix, b0_array, W1_matrix and b1_array are the tensors that make up the trained neural network; testimage is the input layer; sigmoid() is the activation function; hidden_layer is the hidden layer of the network; predicted is the output layer; and softmax() is the function used to normalize the output into a probability distribution. At the end of the calculation, predicted[n] holds the predicted probability that the input image shows the digit n. The argmax() function finds the value n for which predicted[n] is largest.

Applied to the first test image, this calculation predicts the value 7. The prediction matches the label and can also be confirmed visually from the raster display of the test image (see Fig. 1).
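The scoring procedure described above can be sketched in plain Python, without numpy, using the same variable names. This is an illustration rather than the author's notebook code: it assumes W0_matrix has one row per input pixel (784x100) and W1_matrix one row per hidden neuron (100x10), and the tiny weights in the usage example are made up so the result is easy to check by hand.

```python
import math


def sigmoid(z):
    """Logistic activation used in the hidden layer."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits):
    """Normalize output values into a probability distribution."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def vec_mat(x, matrix):
    """Row vector x (length n) times an n x k matrix given as a list of rows."""
    return [sum(xi * row[j] for xi, row in zip(x, matrix)) for j in range(len(matrix[0]))]


def score(testimage, W0_matrix, b0_array, W1_matrix, b1_array):
    """Return the digit with the highest predicted probability."""
    hidden_layer = [sigmoid(v + b) for v, b in zip(vec_mat(testimage, W0_matrix), b0_array)]
    predicted = softmax([v + b for v, b in zip(vec_mat(hidden_layer, W1_matrix), b1_array)])
    return max(range(len(predicted)), key=lambda n: predicted[n])  # argmax


# Usage with made-up toy sizes: 3 "pixels", 2 hidden neurons, 3 output classes.
W0 = [[10.0, -10.0], [0.0, 0.0], [0.0, 0.0]]
b0 = [0.0, 0.0]
W1 = [[0.0, 5.0, 0.0], [0.0, 0.0, 5.0]]
b1 = [0.0, 0.0, 0.0]
print(score([1.0, 0.0, 0.0], W0, b0, W1, b1))  # 1
```

With real data, testimage would hold 784 pixel values and the result would be a digit from 0 to 9.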

Migrating the Test Data and the Network to Oracle

The “manual” scoring example in the previous section shows that serving a neural network can be straightforward: in some cases it is just a matter of a few basic matrix calculations. This contrasts with the complexity of training neural network models, which often requires a specialized engine, high-quality training data and, in more complex cases, specialized hardware such as a GPU card.

The previous section also sets the stage for the next step: moving the neural network tensors and the test data to Oracle and implementing the scoring engine there.

There are many ways to export Python numpy arrays. One of them is to save the arrays in text format. Here you will see a method for exporting directly to Oracle using cx_Oracle, the Python library for interacting with Oracle. For more examples and references on using cx_Oracle, see the notes “Oracle and Python and cx_Oracle”.
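Whatever the transport, exporting a matrix to a relational table amounts to flattening it into (val_id, val) pairs. The sketch below is a hypothetical helper that needs no database; it produces the pairs in the same row-major order as the nested loop used later in this section to insert W0, so element [r][c] of a matrix with k columns gets val_id r * k + c.

```python
def flatten_rows(matrix):
    """Flatten a matrix (list of rows) into (val_id, val) pairs in row-major order."""
    pairs = []
    val_id = 0
    for row in matrix:
        for element in row:
            pairs.append((val_id, float(element)))
            val_id += 1
    return pairs


# Usage: a 2x3 matrix; element [1][2] ends up with val_id 1 * 3 + 2 = 5.
print(flatten_rows([[1, 2, 3], [4, 5, 6]]))
# [(0, 1.0), (1, 2.0), (2, 3.0), (3, 4.0), (4, 5.0), (5, 6.0)]
```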

The code can be found on GitHub; here are some relevant snippets:

- Create tables to hold the tensor definitions and the test data:

```sql
SQL> create table tensors (name varchar2(20), val_id number, val binary_float, primary key(name, val_id));
SQL> create table testdata (image_id number, label number, val_id number, val binary_float, primary key(image_id, val_id));
```

- From Python, open a connection to Oracle:

```python
import cx_Oracle

# Connect as user mnist (password mnist) using the ORCL connect identifier.
ora_conn = cx_Oracle.connect('mnist/mnist@ORCL')
cursor = ora_conn.cursor()
```

- An example of how to transfer the matrix W0 to the Oracle tensors table:

```python
# Insert every element of W0 as a (val_id, val) row; val_id numbers the
# elements in row-major order, starting from 0.
i = 0
sql = "insert into tensors values ('W0', :val_id, :val)"
for row in W0_matrix:
    array_values = []
    for element in row:
        array_values.append((i, float(element)))
        i += 1
    cursor.executemany(sql, array_values)  # one executemany batch per matrix row
ora_conn.commit()
```

Finally, you can export the testdata and tensors tables for later use. The GitHub repository contains the dump file obtained with the following command (run as the oracle OS user):

```shell
$ expdp mnist/mnist tables=testdata,tensors directory=DATA_PUMP_DIR dumpfile=MNIST_tables.dmp
```

Oracle Optimizations for Linear Algebra

From the Oracle documentation: "The UTL_NLA package provides a subset of BLAS and LAPACK operations (version 3.0) for vectors and matrices represented as VARRAYs." This is very useful for performing the calculations needed to serve the neural network in this article.
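BLAS GEMV, the operation used in the snippet that follows, computes y := alpha * A * x + beta * y. The plain-Python model below is a sketch of those semantics (trans 'N', unit strides), not of UTL_NLA internals. It assumes pack 'C' means the matrix is stored column by column, so element A[i][j] sits at position i + j * lda. Under that assumption, a 784x100 matrix flattened row by row and read as a 100x784 column-major matrix with lda 100 is exactly the transpose of W0, which is why the call produces a 100-element result.

```python
def gemv_colmajor(m, n, alpha, a, lda, x, beta, y):
    """Return alpha * A @ x + beta * y for an m x n matrix A stored column-major in a.

    A[i][j] is read from a[i + j * lda]; unlike BLAS, y is not modified in place.
    """
    if lda < m or len(a) < lda * (n - 1) + m:
        raise ValueError("array a is too small for the declared matrix")
    return [
        alpha * sum(a[i + j * lda] * x[j] for j in range(n)) + beta * y[i]
        for i in range(m)
    ]


# A 3x2 matrix W (3 inputs, 2 hidden units) flattened row by row, as in the export loop.
W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
a = [value for row in W for value in row]  # [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x = [1.0, 1.0, 1.0]
y = [0.5, -1.0]                            # e.g. a preloaded bias vector
print(gemv_colmajor(2, 3, 1.0, a, 2, x, 1.0, y))  # [9.5, 11.0]
```

The result equals the row vector x times W plus y, i.e. the same hidden-layer pre-activation that the Python version computes with a row vector times W0.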

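Below is a snippet of the MNIST package code that shows how this works in practice. The code computes v_Y0 = v_Y0 + g_W0_matrix * p_testimage_array, where g_W0_matrix holds the 784x100 matrix W0, p_testimage_array is a vector of 784 elements (encoding a 28x28 image), and v_Y0 is a vector of 100 elements. Because the call declares the matrix as 100x784 (m => 100, n => 784), the product is, strictly speaking, the transpose of W0 times the image vector, which yields one value per hidden neuron.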

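```sql
-- BLAS GEMV: v_Y0 := alpha * A * p_testimage_array + beta * v_Y0,
-- where A is the 100x784 matrix stored in g_W0_matrix.
utl_nla.blas_gemv(
    trans => 'N',
    m     => 100,
    n     => 784,
    alpha => 1.0,
    a     => g_W0_matrix,
    lda   => 100,
    x     => p_testimage_array,
    incx  => 1,
    beta  => 1.0,   -- accumulate into the current contents of v_Y0
    y     => v_Y0,
    incy  => 1,
    pack  => 'C'    -- matrix packed column by column
);
```

To use UTL_NLA, the tensors that make up the neural network and the test images must be stored in VARRAYs of BINARY_FLOAT, that is, declared with the UTL_NLA_ARRAY_FLT data type.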

For this reason, it is also convenient to post-process the tensors and testdata tables as follows:

```sql
SQL> create table testdata_array as
     select a.image_id, a.label,
            cast(multiset(select val from testdata where image_id=a.image_id order by val_id) as utl_nla_array_flt) image_array
     from (select distinct image_id, label from testdata) a
     order by image_id;

SQL> create table tensors_array as
     select a.name,
            cast(multiset(select val from tensors where name=a.name order by val_id) as utl_nla_array_flt) tensor_vals
     from (select distinct name from tensors) a;
```

The final step, which brings us back to the beginning of this article, is to create the MNIST PL/SQL package that loads the tensors and performs the operations needed to score the neural network. The detailed code is available on GitHub.
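The post-processing queries shown earlier in this section rebuild each tensor (and each image) from its rows by grouping on the name and ordering by val_id. As a hedged illustration, the same transformation can be checked outside the database with a few lines of Python; `to_arrays` is a hypothetical helper, not part of the article's code.

```python
from collections import defaultdict


def to_arrays(rows):
    """Group (name, val_id, val) rows into {name: [val, ...]} ordered by val_id.

    This mirrors what CAST(MULTISET(... ORDER BY val_id) AS utl_nla_array_flt) does per name.
    """
    grouped = defaultdict(list)
    for name, val_id, val in rows:
        grouped[name].append((val_id, val))
    return {name: [val for _, val in sorted(pairs)] for name, pairs in grouped.items()}


# Rows may come back in any order; the grouping restores the original layout.
rows = [("b1", 1, 0.2), ("W1", 0, 3.0), ("b1", 0, 0.1), ("W1", 1, 4.0)]
print(to_arrays(rows))  # {'b1': [0.1, 0.2], 'W1': [3.0, 4.0]}
```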

Conclusion and comments

This article describes an example of implementing a scoring engine for an artificial neural network using the Oracle RDBMS and PL/SQL. It is a simple example intended as an introduction to neural networks: recognizing handwritten digits from the MNIST database. The network is trained with TensorFlow and then exported to Oracle. The end result is a small PL/SQL package that recognizes digits with an accuracy of around 98%.

In the near future, we can expect more neural networks to be deployed close to data sources and data stores. The example in this article, which implements a neural network scoring engine in an Oracle database, shows that this is not only possible but also easy to do.

Serving neural networks is much easier than training them. While training requires specialized software and platforms, domain knowledge and a large amount of training data, trained networks can be imported into and run on target systems, in many cases with only modest use of computational resources.

This article is meant as educational material: instead of a more powerful convolutional network, it uses a simple feed-forward neural network (see References). Moreover, moving data from TensorFlow to Oracle and implementing the scoring engine in PL/SQL is, in its current form, something of a workaround and is not intended for production use.

The code accompanying this article is available on GitHub.

Notes on how to set up a test environment

The main components and tools used to test the scripts in this article are:

A Python environment (on Linux, CentOS 7) installed with Anaconda 4.1: Python 2.7, Jupyter/IPython notebook.

TensorFlow version 0.9 (the latest at the time of writing), installed following the instructions at https://www.tensorflow.org/versions/r0.9/get_started/os_setup.html

The Oracle relational database engine running on Linux. The Oracle scripts were tested on Oracle 11.2.0.4 and 12.1.0.2.

References and acknowledgments

An excellent introduction to neural networks, and the inspiration for this article, is the book “Neural Networks and Deep Learning” by Michael Nielsen.

The neural network training code used in this article is an extension of Google's TensorFlow MNIST tutorial.

I also recommend: the TensorFlow tutorial by Martin Görner,

“Basic techniques for TensorFlow” by Aaron Schumacher,

the MNIST database by Yann LeCun,

“Visualizing MNIST” by Christopher Olah,

“Python Machine Learning” by Sebastian Raschka.

Other popular frameworks for neural networks and deep learning besides TensorFlow include Theano and Torch, among many others; see also the relevant page on Wikipedia.

This summer, we invite all fans of science-intensive computing and computer science to the section of the same name at PG Day'17 Russia. We will discuss blockchains, data modeling and visualization, DBMS profiling theory, data mining and other interesting topics :)

And don't forget that this year PG Day will also host a section for specialists working with Oracle and other commercial databases. First-class talks from experts at major technology companies are expected, as well as a full-fledged master class on Oracle Database performance diagnostics.

Source: https://habr.com/ru/post/328824/
