Multi-armed bandit in the task of finding objects in the video stream

On Habré, the topic of the use of so-called “bandits” for data mining has already been repeatedly touched. In contrast to the already familiar learning of machines on precedents, which is very often used in recognition tasks, a multi-armed gangster is used to build in some sense “recommendatory” systems. On Habré, the idea of a multi-armed gangster and its applicability to the task of recommending Internet content is already very detailed and accessible. In our next post, we wanted to tell you about the symbiosis of learning from precedents and reinforcement learning in video stream recognition tasks.

On Habré, the topic of the use of so-called “bandits” for data mining has already been repeatedly touched. In contrast to the already familiar learning of machines on precedents, which is very often used in recognition tasks, a multi-armed gangster is used to build in some sense “recommendatory” systems. On Habré, the idea of a multi-armed gangster and its applicability to the task of recommending Internet content is already very detailed and accessible. In our next post, we wanted to tell you about the symbiosis of learning from precedents and reinforcement learning in video stream recognition tasks.Consider the problem of detecting a geometrically rigid object in a video stream. In the distant first year of 2001, Paul Viola and Michael Jones proposed an detection algorithm (hereinafter referred to as the method of Viola and Jones [1]), with which you can search for such objects in individual images in a fraction of a second. Although the algorithm was originally developed to solve the problem of searching for individuals, today it is successfully used in various areas of computer vision where real-time detection is necessary.

But what to do when in a video stream you want to search for several different types of objects? In such cases, several different Viola and Jones detectors are usually trained and applied independently on the frames of the video stream, thereby slowing down the search procedure a number of times proportional to the number of objects. Fortunately, in practice, quite often a situation arises when, despite a large number of permissible objects, at each moment in time there is no more than one desired object on the frame. As an example, we will present the real problem from our practice, acquired in the process of painstakingly creating a Smart IDReader . A bank clerk registers bank cards issued to customers using a webcam, and it is required to identify the type of card and recognize the number using the printed logo. Despite the fact that currently there are more than ten different types of payment systems (and therefore more than 10 different logos), the operator shows only one card at a time.

The task of the multi-armed gang

The initial form of the n-hand bandit problem is formulated as follows. Suppose you have to repeatedly select one of the n different alternatives (options for action). Each choice entails receiving a certain reward, depending on the chosen action. The average reward for choosing this action is called the action value. The goal of the workflow is to maximize the expected total reward for a given period of time. Often each such attempt is called a game.

')

Although the exact values of action values are not known, their estimates can be obtained with each new game. Then at each moment of time there will be at least one action for which such an assessment will be greatest (such actions are called greedy). Under the application refers to the choice of greedy action in the next game. Under the study means the choice of one of the non-greedy actions in this game in order to more accurately assess the value of non-greedy actions.

Let V t (a) denote the estimated value of the action value a in the t-th game. If by the time t of the beginning of the t-th game, action a was chosen k a times, which resulted in successive rewards r 1 , r 2 , ..., r ka , then the value of the action for all k a > 0 will be evaluated by the formula:

Despite the fact that the easiest way in each game is to choose an action that has the maximum estimated value, this approach does not lead to success due to the lack of a research procedure. There are many ways to get rid of this problem. Some of them are very simple and quickly come to the mind of every math. The part from them has already been stated here on Habré. In any case, we believe that it would be more correct not to compress a mathematically beautiful theory into the scales and formats of Habr, but to provide the dear reader with a link to the available literature [2].

The task of choosing a recognizing detector

So back to our task of finding objects in the video stream. Let there be a video fragment containing T frames. On each frame of a video sequence there can be no more than one object to be detected. There are a total of N different types of objects, and for each type, Viola and Jones' own cascade is trained. Objects in a video sequence appear and disappear naturally, there is no instant appearance or disappearance of objects (for example, in the task of registering bank cards with a webcam described in the introduction, naturalness lies in the smooth sequential appearance and disappearance of the card in the frame). It is necessary to construct an algorithm for selecting a detector (we will denote it by the letter a ) for each frame of a video clip, which ensures the most accurate detection of objects.

In order to be able to compare various detector selection algorithms, it is necessary to determine the quality functional Q (a) . The better our algorithm “picks up” the recognition detector for the next frame, the higher should be the value of the functional. Then, omitting the mathematics (it can always be found in our full version of the article [3]), we will calculate the functional as the number of correctly recognized frames of the video sequence.

Greedy Detector Algorithm

Let us proceed directly to the description of the algorithm, which, we recall, operates with a machine learning tool with reinforcement.

If in the problem of a multi-armed gang, remuneration arises naturally, then in the problem of searching for objects, the concept of “reward” requires a separate definition. It is intuitively clear that the reward for choosing an action (recognizing cascade) must be positive if it was possible to correctly find the object, and zero otherwise. However, at the time of the game there is no reliable information about the objects in the frames, otherwise the task of searching for objects in the image does not make sense. Then, assuming that the target object detectors have good accuracy and completeness, we will encourage the selection of the corresponding detector only for the objects found in the image (that is, the reward in the next game is 1 when the object is on the frame and 0 otherwise).

Now consider a way to calculate the value of an action. The formula presented in the description of a multi-armed gangster is well suited only for stationary tasks (when the system does not change with time). In non-stationary tasks, later awards have a higher priority than earlier ones. The most common way to achieve this is to use an exponential average. Then the value of the action a when receiving the next reward r k + 1 is determined by the following recursive formula:

where α is the step size (the larger the α value, the greater the weight in the value of the action is the new reward). From a physical point of view, the parameter α controls how quickly the current action becomes greedy.

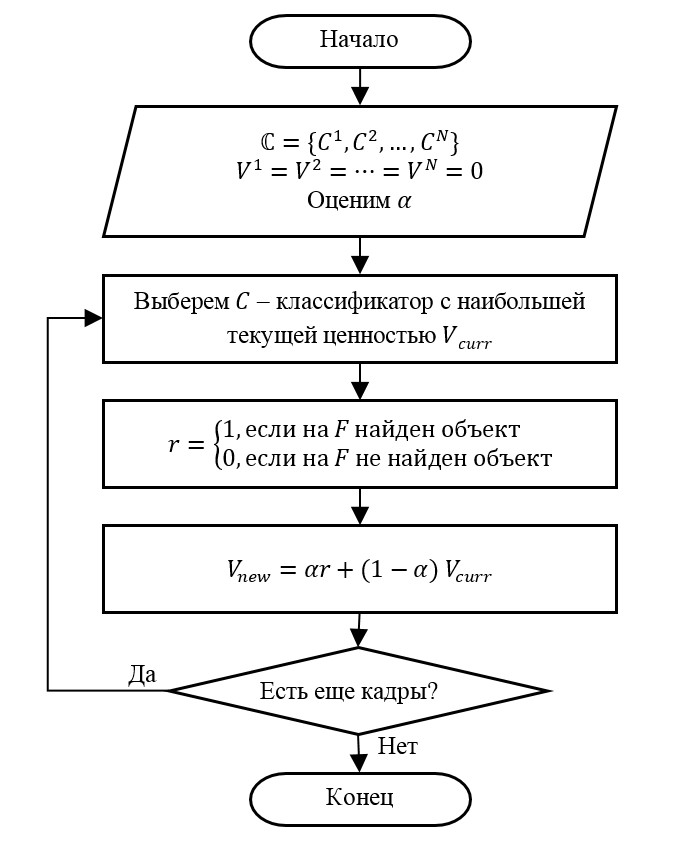

In view of the above, the algorithm for adaptive detector selection is presented in the form of the following sequence of steps.

Step 1 (Initialization). Let there be N detectors. Let us set the initial value of the value for the detector V 1 = V 2 = = V N = 0. Choose the value of the parameter α.

Step 2. Select the detector C with the maximum current value of the value V of curr . If there are several such detectors, choose one of them arbitrarily.

Step 3. Apply on the next frame F the selected detector C. We determine the gain of the detector r in accordance with the rule introduced (that is, r = 1 if there is an object on F ).

Step 4. Update the value of V new for detector C using the above recursive formula.

Step 5. Go to step 2, if there are still frames to search for objects. Otherwise, we finish processing.

Experimental Results

As we said at the very beginning, we used this algorithm to solve the problem of determining the type of bank card in a video stream. The operator presents a bank card to the webcam, and the recognition system must determine the type of card by the existing logo.

To assess the quality of the proposed algorithm, we prepared a test video fragment containing 29 views of bank cards of the following five types: VISA, MasterCard, American Express, Discover and UnionPay. The total number of frames of a video clip was 1116. The frame of the test video clip is shown in the table. We previously trained the logos of the payment system logos using the Viola and Jones method (of course, on completely different images).

| Object type | Number of cards | Total number of frames |

|---|---|---|

| VISA | sixteen | 302 |

| Mastercard | eight | 139 |

| American express | 2 | 37 |

| Unionpay | one | 17 |

| Discovery | 2 | 34 |

| Frames without a card | - | 587 |

| TOTAL | 29 | 1116 |

The effectiveness of the proposed detector selection algorithm (AED) was compared with the sequential algorithm (trained logo detectors are applied to frames in turn). Such an alternate algorithm is quite meaningful and implements the idea of accelerating detection through decimation. The table shows the value of the quality functional Q , as well as the ratio of the quality functional to the total number of processed frames Q r for individual interval points.

| Method | 300 | 600 | 900 | 1116 |

|---|---|---|---|---|

| Alternate | 191 (0.64) | 379 (0.63) | 547 (0.61) | 676 (0.61) |

| AED | 216 (0.72) | 446 (0.74) | 682 (0.76) | 852 (0.76) |

A graphical representation of the presented tabular data is shown in the following figure.

Despite the fact that the described detector selection algorithm provides a better detection quality compared to the sequential method, the ideal quality is still not achieved. This is partly due to the inherent quality parameters of the used Viola and Jones cascades (determined by accuracy and completeness). To confirm this, we conducted an experiment on the simultaneous application of all logo detectors to each frame (of course, not paying attention to time in this case). As a result of this experiment, the maximum possible value of the quality functional Q * = 1030 ( Q r * = 0.92) was obtained, which essentially indicates the need to train new, more accurate detectors :-)

Conclusion

In the tasks of searching for objects in a video stream, the processing speed of an individual frame is important. There are tasks for which even the application of fast algorithms, such as the method of Viola and Jones, does not provide the necessary speed. In such cases, sometimes a combination of different ways of machine learning (such as precedent training and reinforcement training) allows you to elegantly solve the problem, without resorting to over-optimization of the algorithms and unplanned upgrade of hardware.

References

- Viola P., Jones M. Robust Real-time Object Detection // International Journal of Computer Vision. 2002

- Sutton R.S., Barto E.G. Training with reinforcements. M .: BINOM. Laboratory of Knowledge, 2012. 399 p.

- Usilin S.A. Using the pursuit method to improve the performance of the multi-class object detection algorithm in a video stream using Viola-Jones cascades // Proceedings of the Institute for Systems Analysis of the Russian Academy of Sciences (ISA RAS). 2017. № 1 (67). C. 75–82.

Source: https://habr.com/ru/post/328764/

All Articles