The delay in online broadcasts from a webcam, you heartless bitch

In this article we will talk about the delay that arises when broadcasting online from a webcam in a browser: why it arises, how to avoid it, and how to make an online broadcast truly real-time.

Next, using a WebRTC implementation as an example, we will show what happens to the delay and how WebRTC makes it possible to keep the delay low enough for comfortable communication.


Delay

A delay of 1-3 seconds already causes slight discomfort in communication. The lag is clearly noticeable and you have to adapt to it. Knowing that there is a lag, you speak as if over a walkie-talkie and wait until your words reach the far side and the answer comes back. Such a delay can be called a Roman-Tatyana delay, in honor of two journalists talking over a video link from the scene.

- Roman, can you hear me? Roman?

- 3 seconds passed

- I hear you perfectly. Tatyana?

- 3 seconds passed ...

- Roman, it's [beep] ... what's going on here. Roman?

Common delay myths

Below are three common misconceptions about delay and the quality of video transmission on the Web.

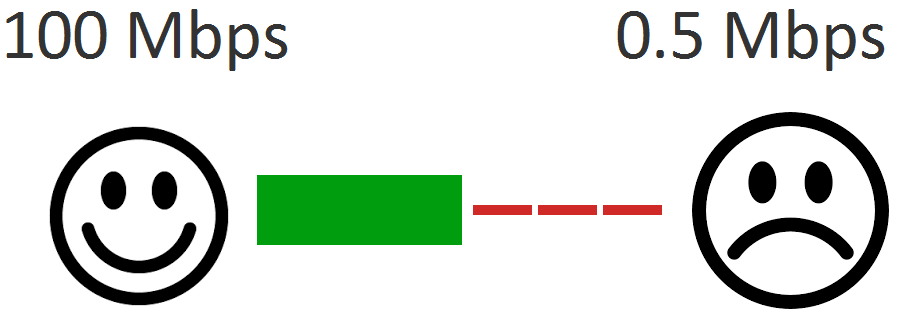

I have 100 Mbps

1. I have 100 megabits, so there should be no problems.

In fact, it does not matter how many megabits the provider printed in its advertising booklet. What matters is the actual bandwidth between your device and the remote server or device, across all the nodes the traffic passes through. The provider cannot physically guarantee 100 Mbps to any arbitrary node on the Internet. Even 1 Mbps is not guaranteed. Suppose your interlocutor is located in a remote Brazilian province and you are broadcasting from a Moscow data center. No matter how wide and fast your advertised channel is, it will not be the same all the way to the final destination.

This leads to an unpleasant fact: you do not know the actual bandwidth of the channel between you and your interlocutor, and neither does your provider, because it is a value that fluctuates from moment to moment and differs for each host you exchange data with.

In addition to bandwidth, the regularity of packet arrival (the absence of jitter) is also important. You can download torrents at high speeds or see good results in Speedtest, but for real-time playback with minimal delay it is important to receive all the packets on time.

It would be ideal if packets arrived exactly when they need to be decoded and displayed on the screen, millisecond for millisecond. But the network is not perfect and it has jitter. Packets arrive irregularly: sometimes they are late, sometimes they come in bursts, which requires dynamic buffering for smooth playback. If you drop a lot, the quality deteriorates. If you buffer a lot, the delay increases.
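
The irregularity described above can be put into a number. The sketch below estimates interarrival jitter with the smoothing formula from RFC 3550 (section 6.4.1), using milliseconds instead of RTP timestamp units. The packet fields `sentMs` and `arrivedMs` and the sample values are assumptions made for illustration.

```javascript
// Interarrival jitter estimate in the style of RFC 3550, section 6.4.1.
// Each packet is { sentMs, arrivedMs }; both field names are illustrative.
function interarrivalJitter(packets) {
  let jitter = 0;
  for (let i = 1; i < packets.length; i++) {
    const prev = packets[i - 1];
    const cur = packets[i];
    // D: change in transit time between two consecutive packets.
    const d = (cur.arrivedMs - cur.sentMs) - (prev.arrivedMs - prev.sentMs);
    // Exponential smoothing with gain 1/16, as in the RFC.
    jitter += (Math.abs(d) - jitter) / 16;
  }
  return jitter;
}

// Made-up samples: one stream with constant transit time, one with a late packet.
const steadyStream = [
  { sentMs: 0, arrivedMs: 50 },
  { sentMs: 33, arrivedMs: 83 },
  { sentMs: 66, arrivedMs: 116 },
];
const burstyStream = [
  { sentMs: 0, arrivedMs: 50 },
  { sentMs: 33, arrivedMs: 123 }, // 40 ms late
  { sentMs: 66, arrivedMs: 124 }, // arrives right behind the previous packet
];
console.log(interarrivalJitter(steadyStream)); // 0
console.log(interarrivalJitter(burstyStream)); // 4.34375
```

A receiver can size its playback buffer from such an estimate: the larger the jitter, the more it has to buffer, and the higher the delay.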

Therefore, if someone says that they have a good, fast network (in the context of real-time video transmission), do not believe it. Any node can at any time apply restrictions and begin to drop video packets or delay them in its queues, and nothing can be done about it. You cannot send a message to all the nodes on the packet path saying “Hey, don't drop my packets. I need minimum delay.” More precisely, you can do this by marking the packets in a special way, but there is no guarantee that the network nodes the packet passes through will honor the marking.

I have a LAN

2. I am on a local network, so there definitely should be no delay.

On a local network, delay is indeed less likely, simply because traffic passes through fewer nodes: at least three, namely the sender's device, the router, and the receiver's device.

These three devices have their own operating systems, buffers, and network stacks. What happens if, for example, the sender's device is actively seeding torrents? Or if the server's network stack or CPU is loaded with other tasks, or if the office has a wireless network and several employees are simultaneously watching YouTube in 720p?

With a sufficiently heavy (high-bitrate) video stream, around 10 Mbps, a router or another node may well begin to drop or delay some packets.

Thus, delay is also possible on a local network, and it depends on the bitrate of the video stream and on the processing capacity of the nodes of that network.

It is worth noting that such problems are much less common on an average local network than on the global network, and are most often caused by network overload or by hardware or software faults.

As a result, we state that no network, including a local one, is ideal: video packets may be delayed and dropped in it and, in general, we cannot directly influence how often and how many packets are dropped.

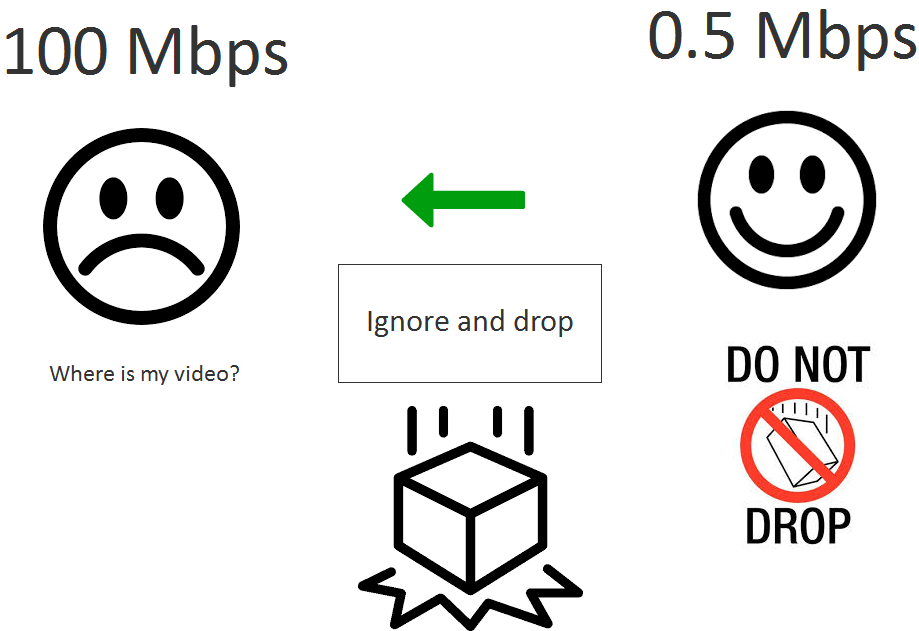

I have UDP

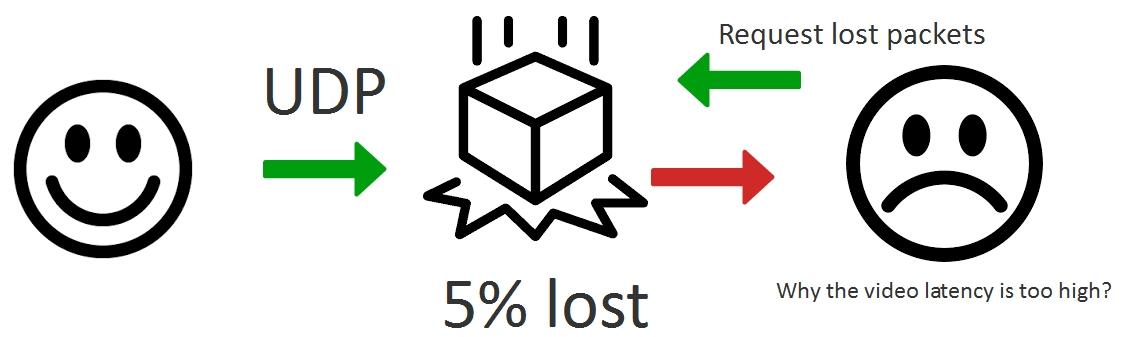

3. I use UDP, and with UDP there are no delays.

Packets sent over UDP can also be delayed or lost at network nodes, and if the packets that did arrive are not enough to assemble and decode the video, the application can request the missing ones again, which delays playback.

Protocols

While spacecraft ply the expanses of the universe, we note that there are only two transport protocols that browsers work with on the web: TCP and UDP.

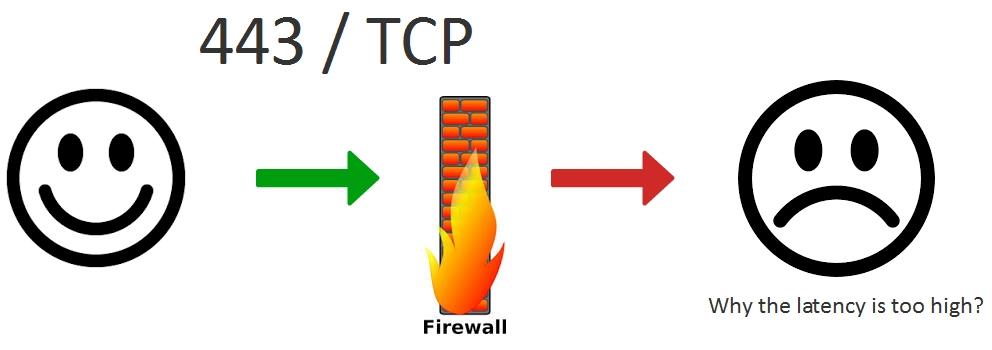

TCP is a protocol with guaranteed delivery. This means that sending a packet to the network is an irreversible operation. Once you send data to the network, it will travel there until it reaches its destination or until the TCP connection times out. This is the main reason for delay when using TCP.

Indeed, if a packet is delayed or dropped, it will be sent again and again until the remote side sends an acknowledgment of its arrival and that acknowledgment reaches the sender.

The following higher-level protocols and technologies used to deliver live video on the web are based on TCP:

- RTSP (interleaved mode)

- RTMP

- HTTP (HLS)

- WebRTC over TLS

- DASH

All of these protocols guarantee high latency when network problems occur. It is important to note that these problems may well be not on the sending or the receiving side, but on any of the intermediate nodes. Therefore, when trying to find the cause of such a delay, it is often useless to check the sender's network and the receiver's network. Both can be fine, and there will still be a delay of more than 5 seconds caused by something in the middle.

Given the statement above (no network is perfect, and video packets can be delayed and dropped in it), we conclude that to obtain a guaranteed minimum delay you must completely abandon TCP-based protocols.

It is not always easy to give up TCP. In some cases there is no alternative. For example, if all ports except 443 (HTTPS) are closed on the corporate firewall, the only way to transmit video is to tunnel it through HTTPS, that is, to carry video packets over TCP-based HTTPS. In this case you will have to put up with an unpredictable delay, but the video will be delivered anyway.

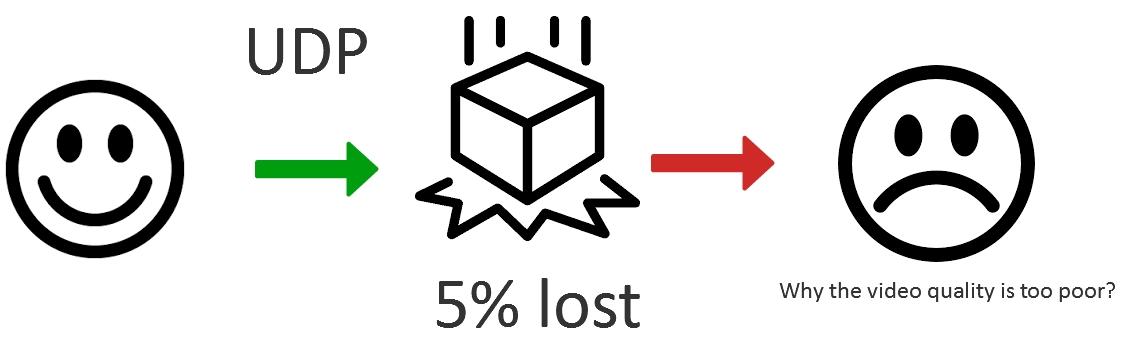

UDP is a protocol with non-guaranteed packet delivery. This means that if you send a packet to the network, it may be lost or delayed, but this will not prevent you from sending other packets afterwards, and the recipient will receive and process them.

The advantage of this approach is that a packet is not sent again and again, as in TCP, waiting for a guaranteed acknowledgment from the recipient at the protocol level. The recipient decides whether to wait until all packets have been assembled in the right order or to work with what it has. In TCP, the recipient has no such freedom.
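
The difference can be shown with a toy model. In the sketch below, one packet is lost and only arrives after a long retransmission. With TCP-like in-order delivery, every later packet waits behind it. With UDP-like handling, the late packet is simply skipped. The arrival times, the deadlines and both function names are invented for the example and do not model any real network stack.

```javascript
// TCP-like: packet i is handed to the application only after packets 0..i-1,
// so one late packet holds back everything behind it.
function inOrderDelivery(arrivalsMs) {
  let latest = -Infinity;
  return arrivalsMs.map((t) => {
    latest = Math.max(latest, t);
    return latest;
  });
}

// UDP-like: each packet is used if it arrives by its own deadline,
// otherwise it is skipped (null) and playback moves on.
function useOrSkip(arrivalsMs, deadlinesMs) {
  return arrivalsMs.map((t, i) => (t <= deadlinesMs[i] ? t : null));
}

// Illustrative numbers: packet 2 was lost and arrived only at 900 ms.
const arrivalTimes = [40, 73, 900, 139, 172];
const playDeadlines = [240, 273, 306, 339, 372];
console.log(inOrderDelivery(arrivalTimes));          // [ 40, 73, 900, 900, 900 ]
console.log(useOrSkip(arrivalTimes, playDeadlines)); // [ 40, 73, null, 139, 172 ]
```

In the first case the delay of all subsequent video grows to 900 ms. In the second case one packet is missing, and the application decides what to do about it.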

There are not many web protocols for transmitting video over UDP:

- WebRTC over UDP

- RTMFP

Here, by WebRTC we mean the entire UDP-based protocol stack of this technology (STUN, ICE, DTLS, SRTP), which works over UDP and ultimately delivers video over SRTP.

Thus, using UDP we can deliver packets quickly, with partial losses, for example losing or permanently delaying 5% of the packets sent. The advantage is that we ourselves, at the application level, can decide whether the 95% of packets received on time are enough to display the video correctly and, if necessary, request the missing packets again at the application level, as many times as needed to a) achieve the required video quality and b) keep the delay at an acceptably low level.
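
A minimal sketch of such an application-level decision: a missing packet is requested again only if the retransmission can still arrive before the packet is needed for playback. The function name, its parameters and the timing model (one round trip per retransmission, no sequence number wraparound) are simplifying assumptions, not a description of how any particular WebRTC implementation does it.

```javascript
// Decide which missing packets are still worth requesting again.
// received: Set of sequence numbers that have arrived
// firstSeq..lastSeq: range of sequence numbers expected so far
// playoutMs(seq): time at which the packet is needed for playback
// nowMs, rttMs: current time and measured round-trip time
function selectNacks(received, firstSeq, lastSeq, playoutMs, nowMs, rttMs) {
  const nacks = [];
  for (let seq = firstSeq; seq <= lastSeq; seq++) {
    if (received.has(seq)) continue;
    // A retransmission needs roughly one round trip to come back.
    if (nowMs + rttMs <= playoutMs(seq)) nacks.push(seq);
  }
  return nacks;
}

// Illustrative data: packets 102 and 104 are missing, frames play every 33 ms.
const got = new Set([100, 101, 103, 105]);
const playout = (seq) => 300 + (seq - 100) * 33;
console.log(selectNacks(got, 100, 105, playout, 250, 90));  // [ 102, 104 ]
console.log(selectNacks(got, 100, 105, playout, 250, 150)); // [ 104 ]
```

With a longer round trip, packet 102 is no longer requested: it could not arrive in time, so asking for it would only add traffic.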

As a result, UDP does not relieve us of the need to resend packets, but it lets us implement resending more flexibly, balancing between video quality, which depends on packet loss, and latency. This cannot be done in TCP, because guaranteed delivery is built in by design.

Congestion control

Earlier we stated that no network is perfect: video packets may be delayed and dropped in it, and we cannot influence this.

The overview of the protocols did not make the smiley on the right any happier, but we concluded that low latency requires UDP and a packet resending scheme that balances video quality against delay.

In fact, if our packets are dropped or delayed in the network, perhaps we are sending too many of them per unit of time. And if we cannot issue a command that gives our packets high priority, we can at least reduce the load on the intermediate nodes by sending them less traffic.

Thus, an abstract low-latency streaming application has two main objectives:

- Delay less than 500 ms

- Maximum possible quality for this delay

And these goals are achieved in the following ways:

| Goals | |
| --- | --- |
| Delay less than 500 ms | Highest possible quality |

The left column lists ways to reduce latency, and the right one shows things that improve video quality.

Thus, a video stream that requires low latency cannot have static, constant quality: it has to react flexibly to network changes over time, requesting packets again when needed and lowering or raising the bitrate depending on the current state of the network.
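
As an illustration of lowering and raising the bitrate, here is a minimal loss-based controller. The 2% and 10% thresholds and the step sizes are modeled on the loss-based controller described in the Google Congestion Control draft for WebRTC. The function name and the bitrate limits are assumptions for the example, and a real implementation also uses delay-based signals.

```javascript
// Loss-based bitrate adjustment, called once per feedback report.
// lossFraction is the share of packets reported lost (0..1).
// minBps and maxBps are illustrative limits, not values from any standard.
function nextBitrate(currentBps, lossFraction, minBps = 100000, maxBps = 2000000) {
  let next = currentBps;
  if (lossFraction > 0.10) {
    // Heavy loss: back off in proportion to the loss.
    next = currentBps * (1 - 0.5 * lossFraction);
  } else if (lossFraction < 0.02) {
    // Almost no loss: probe upward gently.
    next = currentBps * 1.05;
  }
  // Between 2% and 10% loss the bitrate is held.
  return Math.round(Math.min(maxBps, Math.max(minBps, next)));
}

console.log(nextBitrate(1000000, 0.20)); // 900000
console.log(nextBitrate(1000000, 0.05)); // 1000000
console.log(nextBitrate(1000000, 0.00)); // 1050000
```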

Here is one of the most common questions: “Can I stream 720p live video with a delay of 500 ms?” This question, in general, does not make sense, because 1280x720 video at a bitrate of 2 Mbps and at a bitrate of 0.5 Mbps are two completely different pictures. Although both have a resolution of 720p, one will be sharp and the other heavily smeared with macroblocks.

The correct question would be: “Can I stream high-quality 720p video with a delay of less than 500 ms and a bitrate of 2 Mbps?” The answer is yes, you can, if there is a real dedicated 2 Mbps band between you and the destination (not the band advertised by the provider). If there is no such guaranteed band, the bitrate and picture quality will float in order to fit into the specified delay, adjusting every second to the available band.
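
The gap between the two 720p streams can be estimated with simple arithmetic: how many encoded bits are available per pixel per frame. The sketch assumes 30 FPS, which is an assumption made for the example. The helper name is hypothetical.

```javascript
// Average number of encoded bits available per pixel of each frame.
function bitsPerPixel(bitrateBps, width, height, fps) {
  return bitrateBps / (width * height * fps);
}

// 1280x720 at an assumed 30 FPS.
console.log(bitsPerPixel(2000000, 1280, 720, 30).toFixed(3)); // 0.072
console.log(bitsPerPixel(500000, 1280, 720, 30).toFixed(3));  // 0.018
```

At 0.5 Mbps the encoder has a quarter of the bits for the same number of pixels, which is why the picture falls apart into macroblocks even though the resolution is nominally the same.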

As you can see, the smiley is smiling but asks itself, “Am I happy?” Indeed, a floating bitrate, adapting the resolution to the bandwidth, and partial resending of packets are compromises that make it impossible to achieve near-zero delay and true Full HD quality at the same time on an arbitrary network. But this approach keeps the quality close to the maximum at each moment and keeps the delay under control at a low level.

WebRTC

Many people criticize WebRTC for being supposedly immature and overcomplicated. However, if you dig deeper into the implementation, it turns out that the technology is quite usable and does its job well, that is, it provides real-time audio and video delivery with low latency.

Above, we wrote that because the network is heterogeneous, maintaining low latency requires constantly adjusting stream parameters such as bitrate, FPS and resolution. All this work can be clearly seen in an ordinary Chrome browser, on the chrome://webrtc-internals tab.

It all starts with a webcam. Suppose the camera is good and produces stable video at 30 FPS. Here is what can happen to the real video stream:

As the graph shows, although the camera delivers 30 FPS, the actual frame rate during transmission fluctuates in a range of roughly 25-31 FPS and can drop to 21-22 FPS at local minima.

The bitrate drops together with the FPS. Indeed, the fewer frames are encoded, the fewer frames and packets are sent to the network and the lower the overall bitrate of the video stream.

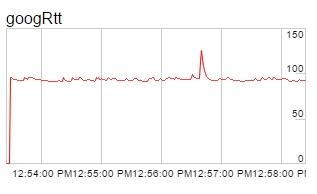

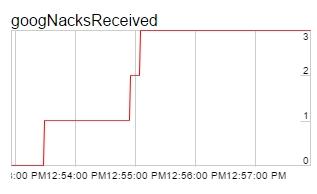

Auxiliary metrics include RTT, NACK and PLI, which affect the behavior of the browser (WebRTC) and the resulting bitrate and stream quality.

RTT is the round-trip time, that is, the “ping” to the receiver.

NACK is a message about lost packets, sent by the recipient of the stream to its sender.

PLI (Picture Loss Indication) is a message reporting a lost keyframe and requesting that a keyframe be sent.

Based on the number and share of lost packets and on RTT, you can draw conclusions about the quality of the network at any given moment and dynamically adjust the intensity of the video stream so that it does not exceed the capacity of the particular network and does not clog the channel. In WebRTC, this is already implemented and works.
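
These metrics can also be read programmatically. The sketch below summarizes RTT, loss, NACK and PLI counters from a stats report. It assumes the field names from the W3C "Identifiers for WebRTC's Statistics API" (`nackCount`, `pliCount`, `roundTripTime`, `fractionLost`). Some browsers may omit some of them, and the function name `summarizeVideoStats` is hypothetical.

```javascript
// Collect RTT, loss, NACK and PLI figures for video from a stats report.
// `report` is anything with forEach(stat => ...), for example the
// RTCStatsReport that RTCPeerConnection.getStats() resolves to, or a Map.
function summarizeVideoStats(report) {
  const summary = { rttMs: null, fractionLost: null, nackCount: 0, pliCount: 0 };
  report.forEach((stat) => {
    if (stat.kind !== 'video') return;
    if (stat.type === 'outbound-rtp') {
      // Feedback messages received from the remote side for the stream we send.
      summary.nackCount += stat.nackCount || 0;
      summary.pliCount += stat.pliCount || 0;
    } else if (stat.type === 'remote-inbound-rtp') {
      // What the remote receiver reported back; roundTripTime is in seconds.
      if (typeof stat.roundTripTime === 'number') summary.rttMs = stat.roundTripTime * 1000;
      if (typeof stat.fractionLost === 'number') summary.fractionLost = stat.fractionLost;
    }
  });
  return summary;
}
```

In a browser this could be called as `pc.getStats().then(summarizeVideoStats)`, where `pc` is an existing RTCPeerConnection (a hypothetical variable here). Polling it once a second gives roughly the same picture as the graphs in chrome://webrtc-internals.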

Testing 720p WebRTC video stream

To begin, let's test WebRTC video streaming at a resolution of 1280x720 (720p) and measure the delay. We will test broadcasting through the WebRTC media server Web Call Server 5. The test server is hosted at DigitalOcean in a Frankfurt data center. Ping to the server is 90 ms. The ISP connection speed is 50 Mbps.

Test parameters:

| Parameter | Value |
| --- | --- |
| Server | Web Call Server 5, DO, Frankfurt DC, ping 90 ms, 2 cores, 2 GB RAM |
| Stream resolution | 1280x720 |
| System | Windows 8.1, Chrome 58 |
| Test | Echo test: video is sent to the server from one browser window and received in another |

For testing, we used the standard media_devices.html example located at this link. The source code of the example is here.

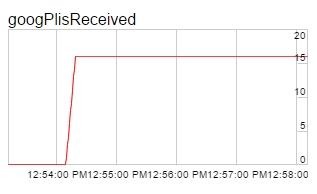

To set the stream resolution to 720p, select the camera and set 1280x720 in the Size settings. In addition, we set Play / Video / Quality to 0 so that transcoding is not used.

Thus, we send a 720p video stream to the remote server and play it in the window on the right. Meanwhile, the page displays the PUBLISHING status as confirmation.

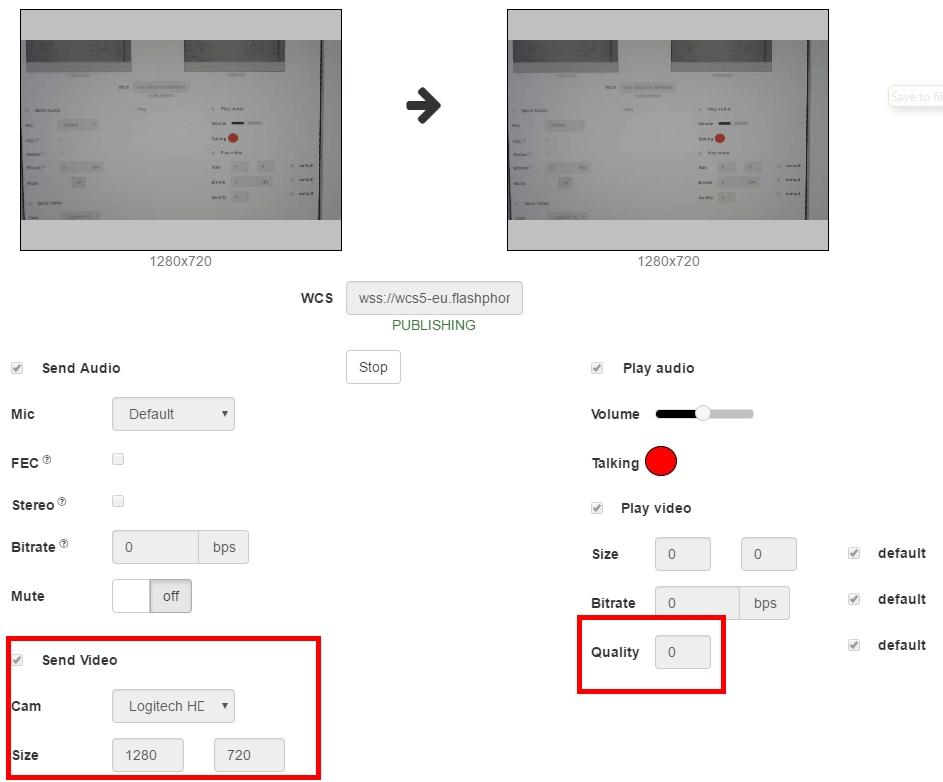

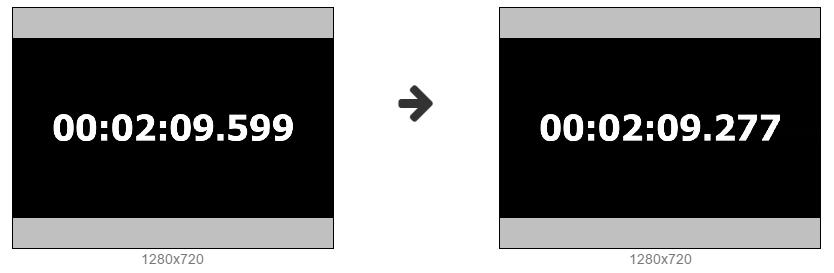

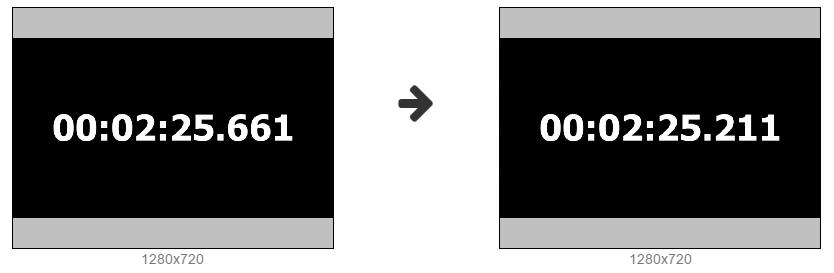

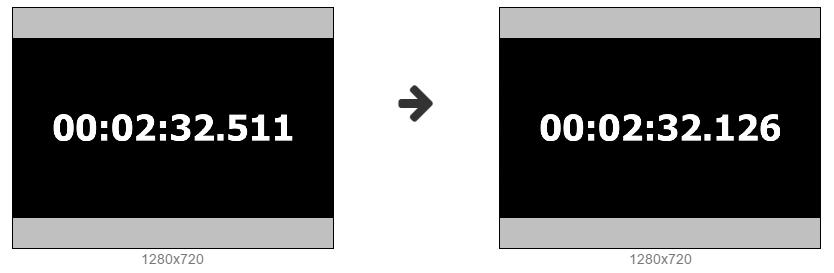

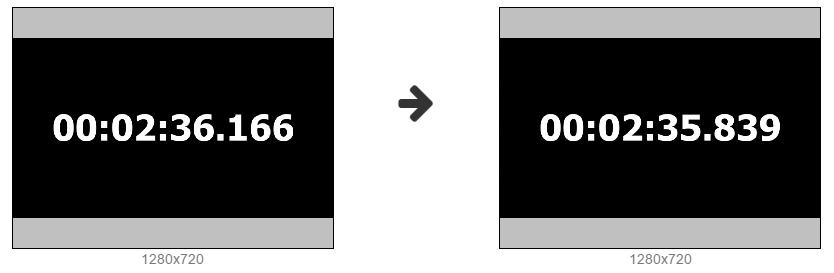

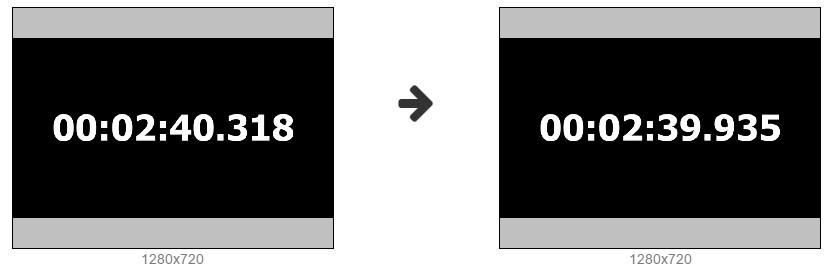

Next, we start a millisecond timer in a virtual camera and take several screenshots to measure the actual delay.

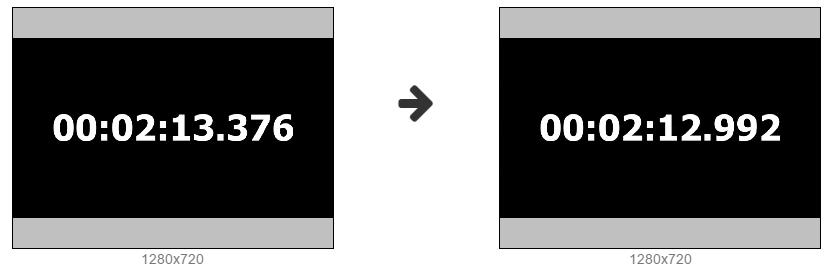

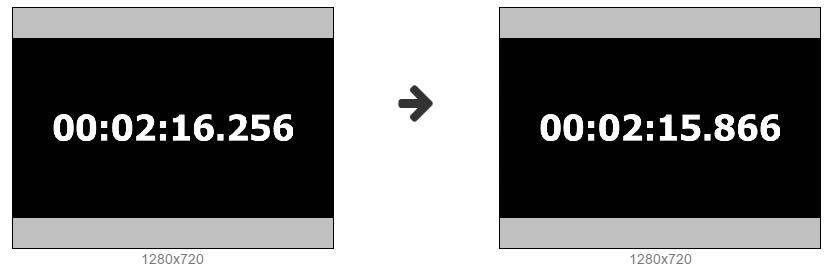

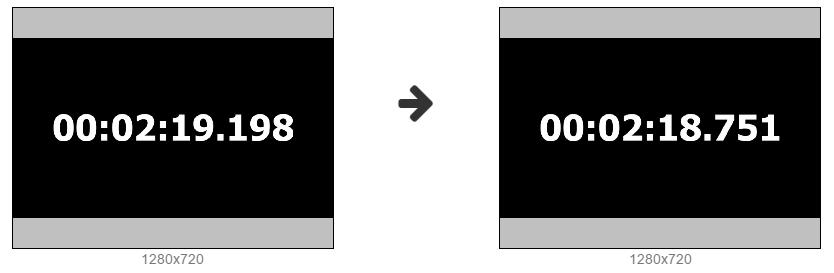

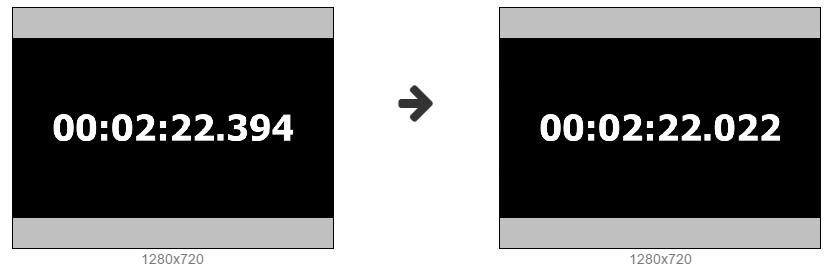

Screen 1

Screen 2

Screen 3

Screen 4

Screen 5

Screen 6

Screen 7

Screen 8

Screen 9

Screen 10

Test results and delay

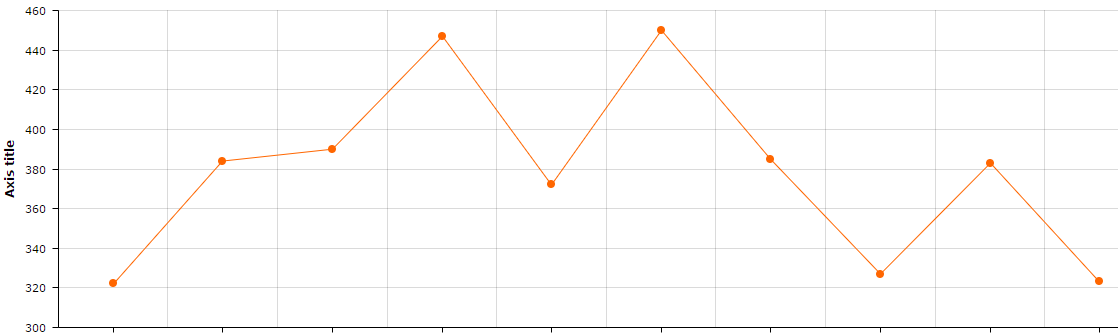

As a result, we get the following table with the measurement results in milliseconds:

| # | Captured | Displayed | Latency |
| --- | --- | --- | --- |
| 1 | 09599 | 09277 | 322 |
| 2 | 13376 | 12992 | 384 |
| 3 | 16256 | 15866 | 390 |
| 4 | 19198 | 18751 | 447 |
| 5 | 22394 | 22022 | 372 |
| 6 | 25661 | 25211 | 450 |
| 7 | 32511 | 32126 | 385 |
| 8 | 36166 | 35839 | 327 |
| 9 | 40318 | 39935 | 383 |
| 10 | 45310 | 44987 | 323 |

We get the following delay graph for the 720p stream over our one-minute test:

The 720p test showed a fairly good result, with a visual delay of 300-450 milliseconds.
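
The figures can be rechecked from the measurement table: the latency is the difference between the captured and displayed timer values. The helper name `latencyStats` is hypothetical, and the data is copied from the table.

```javascript
// [captured, displayed] timer values in milliseconds, from the table above.
const measurements = [
  [9599, 9277], [13376, 12992], [16256, 15866], [19198, 18751], [22394, 22022],
  [25661, 25211], [32511, 32126], [36166, 35839], [40318, 39935], [45310, 44987],
];

// Minimum, maximum and average latency over a list of timer pairs.
function latencyStats(pairs) {
  const latencies = pairs.map(([captured, displayed]) => captured - displayed);
  const sum = latencies.reduce((a, b) => a + b, 0);
  return {
    min: Math.min(...latencies),
    max: Math.max(...latencies),
    avg: sum / latencies.length,
  };
}

console.log(latencyStats(measurements)); // { min: 322, max: 450, avg: 378.3 }
```

The average over the ten screenshots is about 378 ms, inside the 500 ms target.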

WebRTC Bit Rate Charts

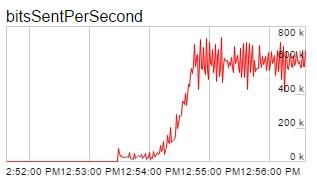

Let's see what is happening to the video stream at the WebRTC level at this moment. To do this, instead of the timer we play a high-resolution cartoon to see how WebRTC copes with a high bitrate.

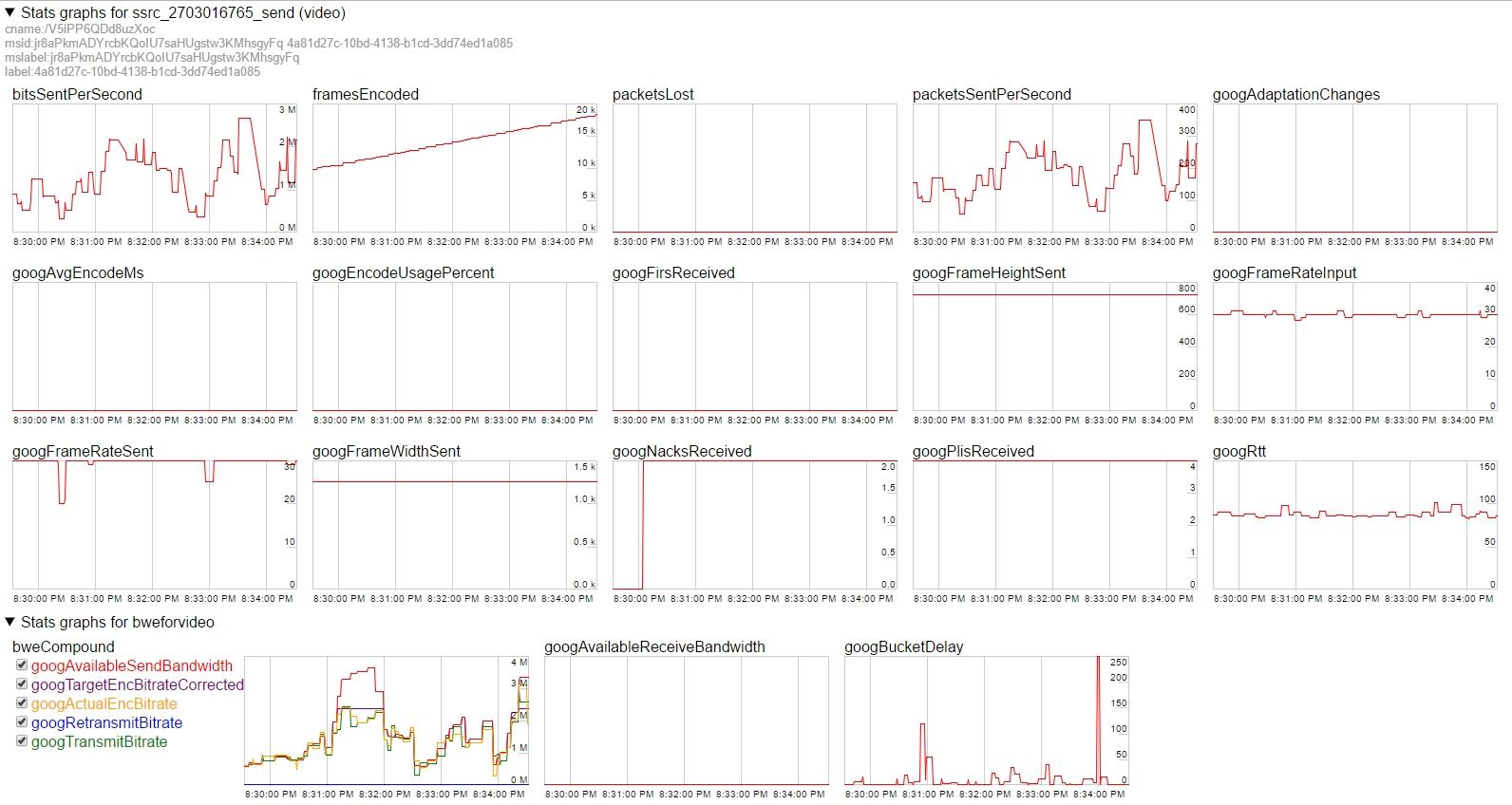

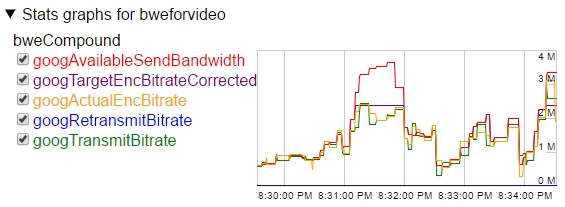

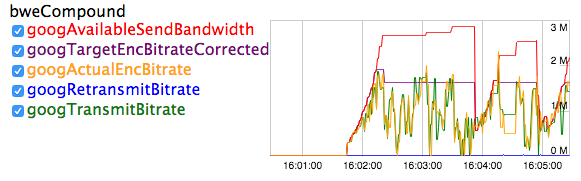

Below are graphs of this WebRTC broadcast.

The graphs show that the stream bitrate changes dynamically in the range of 1-2 Mbps. This is because the server automatically detects that the channel is insufficient and from time to time asks Chrome to lower the bitrate. The bitrate ceiling changes dynamically and is shown on the chart in red as googAvailableSendBandwidth. The green line is the actual bitrate, googTransmitBitrate.
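
A bitrate curve like this can also be derived from two consecutive stats samples. The sketch assumes the standard `bytesSent` and `timestamp` (milliseconds) fields of an outbound-rtp stats entry. It is not how Chrome computes the legacy googTransmitBitrate value, and the function name is hypothetical.

```javascript
// Average send bitrate (bits per second) between two stats samples
// of the same outbound stream.
function sendBitrateBps(prev, cur) {
  const seconds = (cur.timestamp - prev.timestamp) / 1000;
  if (seconds <= 0) return 0; // samples out of order or identical
  return (8 * (cur.bytesSent - prev.bytesSent)) / seconds;
}

// Illustrative samples taken one second apart.
const sampleA = { bytesSent: 1000000, timestamp: 10000 };
const sampleB = { bytesSent: 1187500, timestamp: 11000 };
console.log(sendBitrateBps(sampleA, sampleB)); // 1500000
```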

This is how congestion control works on the server side. To avoid network congestion and packet loss, the server constantly adjusts the bitrate, and the browser follows the server's commands.

Meanwhile, everything is stable on the width and height graphs. The sent width is 1280 and the height is 720. That is, the sent resolution does not change, and the bitrate is controlled without changing the resolution, by lowering the video encoding bitrate.

CPU overload control

To keep the resolution from changing, we disabled the CPU overuse detector (googCpuOveruseDetection) in Google Chrome for these tests.

The CPU detector monitors the CPU load and, when a certain threshold is reached, triggers events that cause Chrome to lower the resolution. By disabling this feature, we allowed the processor to be overloaded and fixed the resolution.

mediaConnectionConstraints: {"mandatory": {googCpuOveruseDetection: false}}

With the CPU detector enabled, the graphs look smoother, but the resolution of the video stream constantly switches up and down.

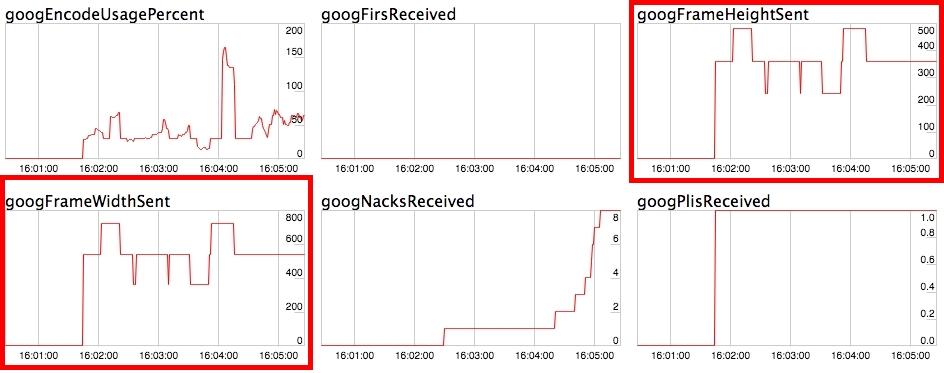

To test adaptation, we choose a weaker machine: a 2011 Mac Mini with a 1.7 GHz Core i5 and Chrome 58. We use the same cartoon as the test video.

Note that at the very beginning of streaming, the video resolution dropped to 540x480.

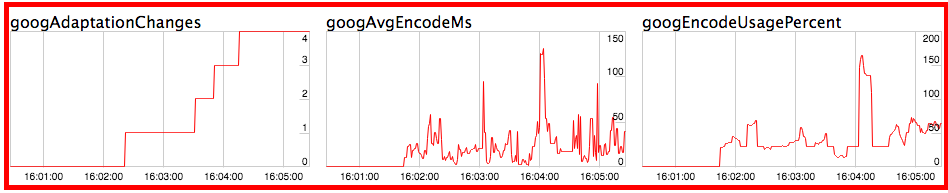

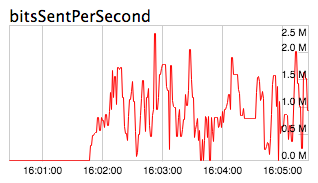

As a result, we have the following graphs:

On these graphs you can see how the width and height of the image, i.e. the video resolution, change:

And these graphs show the changes against which the width and height decreased and increased.

The googAdaptationChanges parameter shows the number of events (adaptations) that Chrome initiated during streaming. The more adaptations occur, the more often the video resolution and bitrate change during streaming.

As for the bitrate, its graph turned out more sawtooth-shaped, even though the server did not raise the upper limit.

This aggressive change in bitrate is due to two things:

- Adaptations (googAdaptationChanges) being enabled on the Google Chrome side and triggered by increased CPU load.

- The use of the H.264 codec, which encodes differently from VP8 and can sharply drop the encoding bitrate depending on the content of the scene.

Conclusion

As a result, we did the following:

- We measured the delay of a WebRTC broadcast through a remote server and determined its average values.

- We showed how the bitrate of a 720p video stream in the VP8 codec behaves when CPU adaptation is disabled, and how the bitrate adapts to network conditions.

- We saw the bitrate ceiling set dynamically by the server.

- We tested the WebRTC broadcast on a less powerful client machine with CPU adaptation and the H.264 codec and saw the dynamic adjustment of the video stream resolution.

- We showed the CPU metrics that affect resolution changes and the number of adaptations.

Thus, you can answer a few questions that were implied at the beginning of the article:

Question: What should be done to make a WebRTC broadcast with minimal delay?

Answer: Just start broadcasting. The resolution and bitrate of the stream are automatically adjusted to values that provide the minimum delay. For example, if you set 1280x720, the bitrate can go down to 1 Mbps and the resolution to 950x540.

Question: What should be done to make a WebRTC broadcast with minimal latency at a stable resolution of 720p?

Answer: For this, the user's channel must actually provide at least 1 Mbps, and CPU adaptation must be disabled. In this case the resolution will not drop, and adjustment will happen only through the bitrate.

Question: What will happen to a 720p video stream on a 200 kbps band?

Answer: The picture will be smeared with macroblocks and the FPS will be low (about 10). The delay will remain low, but the video quality will be visually very poor.

Links

Media Devices - the WebRTC broadcast test example that was used to measure latency.

Source - the source code of the broadcast test example.

Web Call Server - WebRTC server

chrome://webrtc-internals - WebRTC graphs

Source: https://habr.com/ru/post/328286/
