Writing a script to synchronize folders with Google Drive, and learning the Google Drive API along the way

In this article we will look at the basic tools for working with the Google Drive REST API, implement "direct" and "reverse" synchronization between a folder on a computer and a folder in Google Drive, and along the way find out what difficulties can arise when working with Google Docs through the Drive API, and how to import and export them correctly so that (almost) nobody gets hurt.

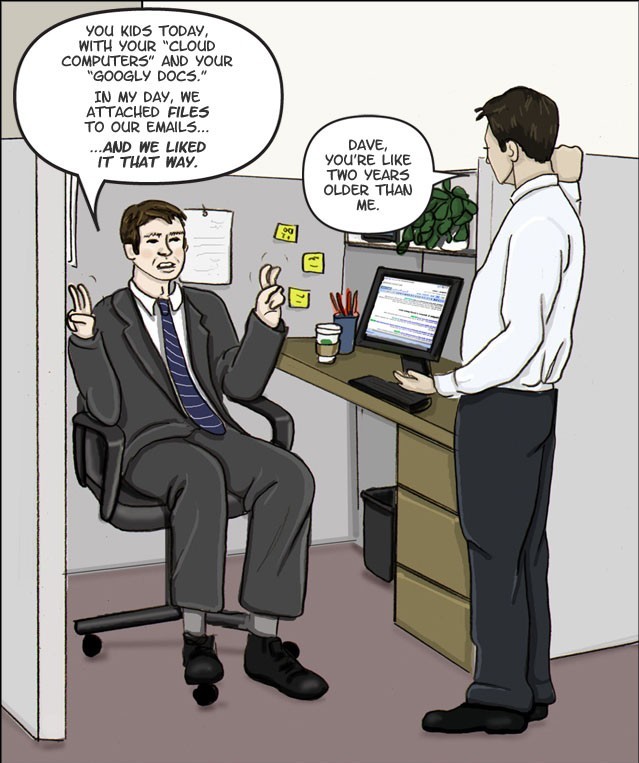

Lyrical introduction

One day, waking up early in the morning, I suddenly noticed a lack of productivity (and automation) in my everyday life. The first thing to go under the optimization knife was my working folder with useful books, projects, documents and funny pictures of cats. Most of my stormy activity happens at my home computer, but sometimes I have to work outside my cozy room, and I want everything familiar at hand, including current projects. Flash drives come to the rescue in such cases, but that's the last century and not stylish: I want everything to be reliably available from the cloud (given internet access, of course). In this case the cloud of choice is Google Drive.

But since things change every day (something gets added, something goes to the trash) and I have no desire to track it all by hand, synchronization has to be set up. Windows and macOS have dedicated software for roughly this purpose, but I spend most of my time on Ubuntu, for which there is no official client and none is expected. And in general it doesn't seem very convenient. I wanted a universal, preferably cross-platform way to solve this problem without moving all my files into a special folder. The search for ready-made solutions led nowhere: no option suited me completely. I decided it would be best to write a Python script myself and run it from cron (or manually). Besides, a quick search turned up no tutorial on this topic on Habr, so it may be useful to someone in the future.

Getting the necessary data ("turning on" the Drive API and getting the keys)

After a few simple steps, in about 5 minutes you will have a test application with access to the Drive API.

Before setting off on this exciting journey, be sure to bring:

- Python 2.6 or higher (I used Python 3, which I recommend).

- The pip package manager.

- Internet access and a web browser.

- A Google account with Google Drive.

Take this seriously: if even one of these conditions is not met, nothing will work.

The procedure is as follows:

1) Follow the link to create a project and automatically enable the Drive API. Click Continue, then Go to credentials.

2) On the Add credentials to your project page, click Cancel.

3) Select the OAuth consent screen tab. Choose your email address, enter a Product name and click Save.

4) On the Credentials tab, click Create credentials, then OAuth client ID.

5) Select the Other application type, enter a name of your choice and click Create.

6) Next to the newly created client ID, click the download button on the right to download the JSON file. Save it to the folder where the script will live and, for convenience, rename it to client_secret.json.

Next come the steps on the computer.

1 - Installing dependencies

Install the Google API Client Library:

```shell
sudo pip install --upgrade google-api-python-client
```

If you have any questions, see the library's installation instructions.

2 - Creating the initial script

Create a Python script next to the downloaded .json file and save the following test code in it:

```python
from __future__ import print_function
import httplib2
import os

from apiclient import discovery
from oauth2client import client
from oauth2client import tools
from oauth2client.file import Storage

try:
    import argparse
    flags = argparse.ArgumentParser(parents=[tools.argparser]).parse_args()
except ImportError:
    flags = None

# If modifying these scopes, delete your previously saved credentials
# at ~/.credentials/drive-python-quickstart.json
SCOPES = 'https://www.googleapis.com/auth/drive.metadata.readonly'
CLIENT_SECRET_FILE = 'client_secret.json'
APPLICATION_NAME = 'Drive API Python Quickstart'


def get_credentials():
    home_dir = os.path.expanduser('~')
    credential_dir = os.path.join(home_dir, '.credentials')
    if not os.path.exists(credential_dir):
        os.makedirs(credential_dir)
    credential_path = os.path.join(credential_dir,
                                   'drive-python-quickstart.json')

    store = Storage(credential_path)
    credentials = store.get()
    if not credentials or credentials.invalid:
        flow = client.flow_from_clientsecrets(CLIENT_SECRET_FILE, SCOPES)
        flow.user_agent = APPLICATION_NAME
        if flags:
            credentials = tools.run_flow(flow, store, flags)
        else:  # Needed only for compatibility with Python 2.6
            credentials = tools.run(flow, store)
        print('Storing credentials to ' + credential_path)
    return credentials


def main():
    credentials = get_credentials()
    http = credentials.authorize(httplib2.Http())
    service = discovery.build('drive', 'v3', http=http)

    results = service.files().list(
        pageSize=10, fields="nextPageToken, files(id, name)").execute()
    items = results.get('files', [])
    if not items:
        print('No files found.')
    else:
        print('Files:')
        for item in items:
            print('{0} ({1})'.format(item['name'], item['id']))


if __name__ == '__main__':
    main()
```

On the first run it will open a browser tab and ask you to log in to your Google account and grant the requested permissions.

Everything here is fairly straightforward, but briefly:

- get_credentials() loads the saved OAuth credentials from a .json file, or runs the authorization flow and creates that file if it doesn't exist yet.

- SCOPES are our access rights (later we will request the broadest one, https://www.googleapis.com/auth/drive, because we trust ourselves).

- service gives us access to the Google Drive account.

- Finally, we call the API, request a list of files (limited to 10) and print the data.

You can run it and see how it prints the names and ids of the first ten files and folders it finds (not necessarily in the root folder: without a q filter, list() searches the whole Drive).

This is almost a complete copy-paste of the official quickstart tutorial, so if you run into problems, look there.

A bit about the Google Drive API

The main documentation, just in case.

Now let's get to know the API more closely.

The Drive REST API is structured as a collection of "resources" (resource types), each with its own methods.

Of these, the most interesting resources are about(), files() and possibly comments(). The rest are described in the documentation, and working with them is (most likely) similar. We'll focus on the genuinely useful ones.

For starters, let's see what the about() resource can tell us about our Drive (which is logical). Fortunately, it's simple: there is only one method, get().

Request example

```python
about_example = drive_service.about().get(
    fields='user, storageQuota, exportFormats, importFormats').execute()
```

There are many fields here; I'll describe the ones I found important or interesting.

importFormats lists, for each source format, which Google Docs types a file can be converted to when it is uploaded; exportFormats lists, for each Google Docs type, the formats it can be exported to when it is downloaded. This matters when working with Google Docs files; more on this below.

storageQuota, logically enough, reports the storage limit and how much of it is used.

user contains several fields, such as displayName, photoLink and emailAddress (their meaning is clear from the names).

The output is a JSON structure with this data:

```json
{
  "user": {
    "kind": "drive#user",
    "displayName": "First Name Last Name",
    "photoLink": "http://i.imgur.com/hgXLjzr.png",
    "me": true,
    "permissionId": "id number",
    "emailAddress": "youremail@gmail.com"
  },
  "storageQuota": {
    "limit": "16106127360",
    "usage": "2920407530",
    "usageInDrive": "1590570002",
    "usageInDriveTrash": "54316729"
  },
  "importFormats": {
    "application/x-vnd.oasis.opendocument.presentation": ["application/vnd.google-apps.presentation"],
    "text/tab-separated-values": ["application/vnd.google-apps.spreadsheet"],
    "image/jpeg": ["application/vnd.google-apps.document"],
    "image/bmp": ["application/vnd.google-apps.document"],
    "image/gif": ["application/vnd.google-apps.document"],
    "application/vnd.ms-excel.sheet.macroenabled.12": ["application/vnd.google-apps.spreadsheet"],
    "application/vnd.openxmlformats-officedocument.wordprocessingml.template": ["application/vnd.google-apps.document"],
    "application/vnd.ms-powerpoint.presentation.macroenabled.12": ["application/vnd.google-apps.presentation"],
    "application/vnd.ms-word.template.macroenabled.12": ["application/vnd.google-apps.document"],
    "application/vnd.openxmlformats-officedocument.wordprocessingml.document": ["application/vnd.google-apps.document"],
    "image/pjpeg": ["application/vnd.google-apps.document"],
    "application/vnd.google-apps.script+text/plain": ["application/vnd.google-apps.script"],
    "application/vnd.ms-excel": ["application/vnd.google-apps.spreadsheet"],
    "application/vnd.sun.xml.writer": ["application/vnd.google-apps.document"],
    "application/vnd.ms-word.document.macroenabled.12": ["application/vnd.google-apps.document"],
    "application/vnd.ms-powerpoint.slideshow.macroenabled.12": ["application/vnd.google-apps.presentation"],
    "text/rtf": ["application/vnd.google-apps.document"],
    "text/plain": ["application/vnd.google-apps.document"],
    "application/vnd.oasis.opendocument.spreadsheet": ["application/vnd.google-apps.spreadsheet"],
    "application/x-vnd.oasis.opendocument.spreadsheet": ["application/vnd.google-apps.spreadsheet"],
    "image/png": ["application/vnd.google-apps.document"],
    "application/x-vnd.oasis.opendocument.text": ["application/vnd.google-apps.document"],
    "application/msword": ["application/vnd.google-apps.document"],
    "application/pdf": ["application/vnd.google-apps.document"],
    "application/json": ["application/vnd.google-apps.script"],
    "application/x-msmetafile": ["application/vnd.google-apps.drawing"],
    "application/vnd.openxmlformats-officedocument.spreadsheetml.template": ["application/vnd.google-apps.spreadsheet"],
    "application/vnd.ms-powerpoint": ["application/vnd.google-apps.presentation"],
    "application/vnd.ms-excel.template.macroenabled.12": ["application/vnd.google-apps.spreadsheet"],
    "image/x-bmp": ["application/vnd.google-apps.document"],
    "application/rtf": ["application/vnd.google-apps.document"],
    "application/vnd.openxmlformats-officedocument.presentationml.template": ["application/vnd.google-apps.presentation"],
    "image/x-png": ["application/vnd.google-apps.document"],
    "text/html": ["application/vnd.google-apps.document"],
    "application/vnd.oasis.opendocument.text": ["application/vnd.google-apps.document"],
    "application/vnd.openxmlformats-officedocument.presentationml.presentation": ["application/vnd.google-apps.presentation"],
    "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet": ["application/vnd.google-apps.spreadsheet"],
    "application/vnd.google-apps.script+json": ["application/vnd.google-apps.script"],
    "application/vnd.openxmlformats-officedocument.presentationml.slideshow": ["application/vnd.google-apps.presentation"],
    "application/vnd.ms-powerpoint.template.macroenabled.12": ["application/vnd.google-apps.presentation"],
    "text/csv": ["application/vnd.google-apps.spreadsheet"],
    "application/vnd.oasis.opendocument.presentation": ["application/vnd.google-apps.presentation"],
    "image/jpg": ["application/vnd.google-apps.document"],
    "text/richtext": ["application/vnd.google-apps.document"]
  },
  "exportFormats": {
    "application/vnd.google-apps.form": ["application/zip"],
    "application/vnd.google-apps.document": [
      "application/rtf",
      "application/vnd.oasis.opendocument.text",
      "text/html",
      "application/pdf",
      "application/epub+zip",
      "application/zip",
      "application/vnd.openxmlformats-officedocument.wordprocessingml.document",
      "text/plain"
    ],
    "application/vnd.google-apps.drawing": ["image/svg+xml", "image/png", "application/pdf", "image/jpeg"],
    "application/vnd.google-apps.spreadsheet": [
      "application/x-vnd.oasis.opendocument.spreadsheet",
      "text/tab-separated-values",
      "application/pdf",
      "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet",
      "text/csv",
      "application/zip",
      "application/vnd.oasis.opendocument.spreadsheet"
    ],
    "application/vnd.google-apps.script": ["application/vnd.google-apps.script+json"],
    "application/vnd.google-apps.presentation": [
      "application/vnd.oasis.opendocument.presentation",
      "application/pdf",
      "application/vnd.openxmlformats-officedocument.presentationml.presentation",
      "text/plain"
    ]
  }
}
```
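To make the response above more concrete, here is a small sketch of reading it from Python, assuming it has already been fetched with about().get(...).execute() and is therefore a plain dict. The helper names free_bytes, export_targets and import_targets are hypothetical, not part of the API. Quota values come back as strings, and, as far as I know from the API reference, limit is omitted for accounts with unlimited storage, so the sketch returns None in that case.

```python
def free_bytes(about):
    """Remaining Drive space in bytes, or None if the account has no limit."""
    quota = about.get('storageQuota', {})
    if 'limit' not in quota:
        return None  # unlimited storage: 'limit' is not reported
    # Quota values are strings in the JSON, so convert before subtracting.
    return int(quota['limit']) - int(quota.get('usage', 0))


def export_targets(about, google_mime):
    """Formats a Google Docs file of type google_mime can be exported to."""
    return about.get('exportFormats', {}).get(google_mime, [])


def import_targets(about, local_mime):
    """Google Docs types a local file of type local_mime can be converted to."""
    return about.get('importFormats', {}).get(local_mime, [])


# A trimmed-down copy of the response shown above.
about = {
    'storageQuota': {'limit': '16106127360', 'usage': '2920407530'},
    'exportFormats': {'application/vnd.google-apps.form': ['application/zip']},
    'importFormats': {'text/csv': ['application/vnd.google-apps.spreadsheet']},
}

print(free_bytes(about))  # 13185719830
print(export_targets(about, 'application/vnd.google-apps.form'))  # ['application/zip']
```

With the example numbers that is about 12.28 GiB still free.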

The files resource (which we will use most) also has many methods; let's look at the main ones.

We have already met files().list(), but let's take a closer look.

The list() method lets you find files and folders using search queries.

Request example

```python
list_example = drive_service.files().list(
    q='fullText contains "important" and trashed = true',
    fields='files(id, name)').execute()
```

Here we used an important parameter, q: a search query that selects only the items we need from the whole stream (in this case, files in the trash whose content contains the word "important").

Queries are written in a simple, almost natural-language syntax and can combine various conditions, for example:

```python
q = 'name = "Habrahabr"'
q = 'fullText contains "some text"'
q = '"idexample123" in parents'
```

You can read more about the query syntax in the documentation.
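Because q is just a string, a name containing a quote can break it. Below is a minimal sketch of a hypothetical helper that builds the "children of a folder" query used throughout this article and escapes string values with a backslash, which is how I understand the escaping rules in the search documentation (for single quotes and backslashes). The helper names quote and children_query are my own.

```python
FOLDER_MIME = 'application/vnd.google-apps.folder'


def quote(value):
    """Wrap a string in single quotes for a Drive query, escaping \\ and '."""
    escaped = value.replace('\\', '\\\\').replace("'", "\\'")
    return "'" + escaped + "'"


def children_query(parent_id, folders=None):
    """Query for non-trashed items directly inside parent_id.

    folders=True  -> only folders
    folders=False -> only non-folders
    folders=None  -> everything
    """
    parts = [quote(parent_id) + ' in parents', 'trashed = false']
    if folders is True:
        parts.append('mimeType = ' + quote(FOLDER_MIME))
    elif folders is False:
        parts.append('mimeType != ' + quote(FOLDER_MIME))
    return ' and '.join(parts)


print(children_query('idexample123', folders=True))
# 'idexample123' in parents and trashed = false and mimeType = 'application/vnd.google-apps.folder'
print('name = ' + quote("Habrahabr's notes"))
# name = 'Habrahabr\'s notes'
```

The result is passed as-is, e.g. service.files().list(q=children_query(folder_id, folders=True), fields='files(id, name)').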

The fields parameter controls which file properties the response includes (asking for fewer makes requests faster). It applies to any request.

The documentation also has basic examples of uploading and downloading files. I won't cover them separately, because we will use them "in combat conditions" below, which is more useful.

Initial file upload

But enough theory; let's move on to the fun part (the reason we are all here).

To synchronize a local folder with a folder in the cloud, both copies must first exist. You could upload the folder manually through the browser, but if you are going to do things by hand, you might as well update files by hand too, and then you don't need this article at all. So we will upload the folder with a couple of lines of code, some magic and a few crutches.

If you have already uploaded the folder manually, or already have one on Drive and don't want to change anything, skip to the next section. Just note that in our case the folder is created directly in the root of Google Drive, so keep this in mind; you may need a bit of tinkering.

Let's write a function that uploads our folder to Drive:

```python
import mimetypes
import os

from apiclient.http import MediaFileUpload

# FULL_PATH (a global) is the full path to the local folder to upload.


def folder_upload(service):
    """Recreate the FULL_PATH folder tree on Drive and upload every file.

    Returns a dict {folder name: Drive folder id}.
    """
    parents_id = {}

    for root, _, files in os.walk(FULL_PATH, topdown=True):
        last_dir = os.path.basename(root)
        pre_last_dir = os.path.basename(os.path.dirname(root))

        folder_metadata = {'name': last_dir,
                           'mimeType': 'application/vnd.google-apps.folder'}
        # The top folder has no known parent and goes to the Drive root;
        # every other folder goes into its already created parent.
        if pre_last_dir in parents_id:
            folder_metadata['parents'] = [parents_id[pre_last_dir]]

        create_folder = service.files().create(body=folder_metadata,
                                               fields='id').execute()
        folder_id = create_folder.get('id', [])

        for name in files:
            file_metadata = {'name': name, 'parents': [folder_id]}
            media = MediaFileUpload(
                os.path.join(root, name),
                mimetype=mimetypes.MimeTypes().guess_type(name)[0])
            service.files().create(body=file_metadata,
                                   media_body=media,
                                   fields='id').execute()

        parents_id[last_dir] = folder_id

    return parents_id
```

Let's go through it in order, although it's all fairly simple.

FULL_PATH is a global variable containing the full path to the desired folder.

We walk the folder from top to bottom. For each folder we create its twin on Google Drive, save its id in a dictionary (key: folder name, value: id) and upload all the files found into it. At the end we return this dictionary.
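One weak spot of keying parents_id by folder name: if two folders called, say, docs live at different levels, the second one overwrites the first, and files can land in the wrong parent. A minimal sketch, assuming the keys are paths relative to the synced folder's parent instead of bare names. upload_plan is a hypothetical helper that only computes what to create (no API calls), in an order where every parent comes before its children.

```python
import os


def upload_plan(full_path):
    """Yield (rel_dir, parent_rel_dir, file_names) for full_path and all subfolders.

    rel_dir starts with the synced folder's own name and uses '/', e.g.
    'Example/work/docs'; parent_rel_dir is None for the top folder, which
    goes to the Drive root. With topdown=True, os.walk yields every folder
    before its subfolders, so a parent's id is known before it is needed.
    """
    full_path = os.path.abspath(full_path)
    base = os.path.dirname(full_path)
    for root, dirs, files in os.walk(full_path, topdown=True):
        dirs.sort()  # deterministic traversal order
        rel_dir = os.path.relpath(root, base).replace(os.path.sep, '/')
        parent = rel_dir.rsplit('/', 1)[0] if '/' in rel_dir else None
        yield rel_dir, parent, sorted(files)
```

In folder_upload, the loop would then store folder_ids[rel_dir] = folder_id and set 'parents': [folder_ids[parent]] whenever parent is not None, so Example/docs and Example/work/docs no longer overwrite each other.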

True, as in the joke, there is one nuance. To upload a file, you have to specify its mimeType (the media type that describes the file's format). To simplify this, we use a ready-made solution, the standard mimetypes module, which (as far as I understood from the documentation) simply guesses the mimeType from the file extension.

So if you have unusual files or files without an extension, be careful and consult a specialist before use.
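A hedged sketch of making that guess explicit: mimetypes returns None for files without a known extension, so a hypothetical guess_mime helper can fall back to the generic binary type application/octet-stream instead of passing None along.

```python
import mimetypes


def guess_mime(path, default='application/octet-stream'):
    """Guess a file's MIME type from its name, falling back to `default`.

    mimetypes only looks at the extension, so files without one (or with an
    unknown one) would otherwise get None.
    """
    mime, _encoding = mimetypes.guess_type(path)
    return mime or default


print(guess_mime('book.pdf'))  # application/pdf
print(guess_mime('Makefile'))  # application/octet-stream
```

It can be dropped in wherever the script calls mimetypes.MimeTypes().guess_type(name)[0].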

Sync changes from computer to cloud

We have uploaded the folder and then made some changes: deleted something, added something, edited something. Now these changes need to reach our cloud copy.

Obviously, we don't want to delete and re-upload everything every time, so "we'll take a different path."

Let's create a separate Python script for this and import the necessary libraries. To find the changes, we need the tree of files and folders both on Drive and in local storage; then we compare them and transfer the changes in one direction or the other.

Despite the API's rich feature set, there is no built-in way to get the entire tree of folders and files (or I didn't find it, and reinvented the wheel for nothing).

And if there isn't one, we'll write it ourselves!

```python
import os


def get_tree(folder_name, tree_list, root, parents_id, service):
    """Recursively collect the paths of all subfolders of folder_name on Drive.

    Paths are appended to tree_list; parents_id maps folder name -> id.
    """
    folder_id = parents_id[folder_name]
    # Only non-trashed folders directly inside folder_id
    # (first page only, see Note2 below).
    results = service.files().list(
        pageSize=1000,
        q=("'%s' in parents and "
           "mimeType = 'application/vnd.google-apps.folder' and "
           "trashed = false" % folder_id),
        fields='files(id, name)').execute()
    items = results.get('files', [])

    root += folder_name + os.path.sep
    for item in items:
        parents_id[item['name']] = item['id']
        tree_list.append(root + item['name'])
        # Descend into the subfolder we just found.
        get_tree(item['name'], tree_list, root, parents_id, service)
```

Let's look at it in more detail.

It takes quite a few parameters:

- folder_name: the name of the folder to start from;

- tree_list: the list of collected paths, initially empty ([]);

- root: the path accumulated so far (initially ''), used to build the paths stored in tree_list;

- parents_id: a dictionary of {folder name: its id}; it must already contain folder_name, because that folder's id (folder_id) is looked up there;

- service: the Drive service object.

We request the contents of the folder with id = folder_id (only items that are not in the trash) and keep only the folders, since for now we are only interested in paths.

We save the names and ids of these folders in parents_id, append the current folder to root to mark that we are going one level down, and for each folder found we recursively call get_tree with that folder's parameters.

This way we recursively descend through all the folders and collect their paths.

// Note1: Google Drive has its own special file system (which is probably logical) with its own rules. For example, two folders or two files with the same name can sit side by side in the same folder, because files are identified by their unique id, not by their name. Also, the same file can be in two different places on Drive at once (if I understood everything correctly). This could probably be used for more efficient synchronization, e.g. by keeping a database next to the script, but that is overkill and completely unnecessary here. Just a note.

// Note2: all my examples, and even the final script, rely on one careless assumption: a folder is assumed to contain no more than 1000 files (good enough!). In my case this always holds, I trust myself, which is why the code is so crude. The limitation is easy to lift in a couple of lines using nextPageToken, which already appeared in the quickstart's fields parameter. I think you can easily do it yourself (or maybe I'll get around to it someday).

Sample code that uses nextPageToken is also available in a dedicated guide in the documentation.
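Because names are not unique, keying parents_id by folder name (as get_tree does) can mix up two folders that share a name. An alternative, sketched here under the assumption that all folders are fetched with a single paginated query such as mimeType = 'application/vnd.google-apps.folder' and trashed = false with fields='nextPageToken, files(id, name, parents)', is to rebuild the paths in memory and key them by path. folder_paths is a hypothetical helper and the sample records are made up.

```python
def folder_paths(root_id, root_name, folders):
    """Map 'Root/Sub/Subsub' paths to folder ids.

    folders: dicts with 'id', 'name' and 'parents', as returned by files().list().
    Only folders reachable from root_id are included; the root itself maps to
    root_name. Two same-named siblings would still collide, which is a
    limitation of any path-based mapping.
    """
    children = {}
    for f in folders:
        for parent in f.get('parents', []):
            children.setdefault(parent, []).append(f)

    paths = {root_name: root_id}
    stack = [(root_id, root_name)]
    while stack:  # iterative depth-first walk, no recursion limit to worry about
        folder_id, path = stack.pop()
        for child in children.get(folder_id, []):
            child_path = path + '/' + child['name']
            paths[child_path] = child['id']
            stack.append((child['id'], child_path))
    return paths


sample_folders = [
    {'id': 'b', 'name': 'Example1', 'parents': ['a']},
    {'id': 'c', 'name': 'docs', 'parents': ['b']},
    {'id': 'd', 'name': 'docs', 'parents': ['a']},      # same name, other level
    {'id': 'x', 'name': 'Other', 'parents': ['zzz']},   # outside the synced tree
]
print(folder_paths('a', 'Example', sample_folders))
```

Both docs folders keep their own ids, and one listing request (plus pagination) replaces one request per folder. Drop the root entry if you need exactly the shape of tree_list.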
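Lifting the 1000-item assumption mostly means looping until the response stops returning nextPageToken. A sketch of a hypothetical generator that hides that loop. The actual API call is passed in as a function, which keeps the helper easy to test. Note that the fields mask must include nextPageToken, otherwise the token is not returned at all. The commented usage assumes the service object from the quickstart.

```python
def iter_pages(fetch_page):
    """Yield every item of a paginated files().list() call.

    fetch_page(page_token) must return one response dict; page_token is None
    for the first page. Iteration stops when no nextPageToken is returned.
    """
    page_token = None
    while True:
        response = fetch_page(page_token)
        for item in response.get('files', []):
            yield item
        page_token = response.get('nextPageToken')
        if not page_token:
            break


# Usage with the real client (service and query come from the script):
# items = list(iter_pages(lambda token: service.files().list(
#     q=query, pageSize=1000, pageToken=token,
#     fields='nextPageToken, files(id, name)').execute()))
```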

OK, we have the folders that are on Drive; now let's do the same for the folder on the computer (this part is easier):

```python
os_tree_list = []
# Number of path components above the synced folder's parent.
root_len = len(full_path.split(os.path.sep)[0:-2])
for root, dirs, files in os.walk(full_path, topdown=True):
    for name in dirs:
        # Relative path that starts with the synced folder's own name.
        var_path = '/'.join(root.split('/')[root_len + 1:])
        os_tree_list.append(os.path.join(var_path, name))
```

The only trick here is turning full paths into relative ones; the rest is simple.
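The split-based trick assumes full_path has no trailing separator. A sketch of the same result with os.path.relpath, which I think is less fragile (local_tree is a hypothetical name). It produces paths like 'Example/Example1', always with '/', to match the Drive-side paths built on Linux.

```python
import os


def local_tree(full_path):
    """Paths of all subfolders of full_path, in the same shape as tree_list.

    Each path starts with the synced folder's own name and uses '/',
    e.g. 'Example/Example1/Example2'. The folder itself is not included.
    """
    full_path = os.path.abspath(full_path)  # also strips a trailing separator
    base = os.path.dirname(full_path)
    tree = []
    for root, dirs, _files in os.walk(full_path, topdown=True):
        for name in dirs:
            rel = os.path.relpath(os.path.join(root, name), base)
            tree.append(rel.replace(os.path.sep, '/'))
    return sorted(tree)
```

os_tree_list = local_tree(full_path) would then replace the loop, and a path like '/home/me/Example/' with a trailing slash gives the same result as '/home/me/Example'.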

Now we have tree_list and os_tree_list, which we intend to compare.

Here is what these lists might look like:

```python
os_tree_list = ['Example/Example1', 'Example/Example1/Example2',
                'Example/Example1/Example3']
tree_list = ['Example/Example1', 'Example/Example1/Example4',
             'Example/Example1/Example4/Example5']
```

Next, we split the folders into three groups:

```python
remove_folders = list(set(tree_list).difference(set(os_tree_list)))
upload_folders = list(set(os_tree_list).difference(set(tree_list)))
exact_folders = list(set(os_tree_list).intersection(set(tree_list)))
```

As the names suggest, remove_folders are folders that exist only on Drive (old or accidentally added) and must be deleted, upload_folders are new local folders that must be uploaded, and exact_folders exist both on the computer and in the cloud.
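Running the three set operations on the example lists makes the split concrete. diff_trees is just a hypothetical wrapper that also sorts the results, since set order is arbitrary. Sorting also puts every parent before its children, because a path always sorts before any longer path it is a prefix of.

```python
def diff_trees(local, remote):
    """Split folder paths into (to_remove, to_upload, in_both), each sorted."""
    local, remote = set(local), set(remote)
    return (sorted(remote - local),   # only on Drive: delete there
            sorted(local - remote),   # only on the computer: upload
            sorted(local & remote))   # on both sides: compare their files


os_tree_list = ['Example/Example1', 'Example/Example1/Example2',
                'Example/Example1/Example3']
tree_list = ['Example/Example1', 'Example/Example1/Example4',
             'Example/Example1/Example4/Example5']

remove_folders, upload_folders, exact_folders = diff_trees(os_tree_list, tree_list)
print(remove_folders)  # ['Example/Example1/Example4', 'Example/Example1/Example4/Example5']
print(upload_folders)  # ['Example/Example1/Example2', 'Example/Example1/Example3']
print(exact_folders)   # ['Example/Example1']
```

Note that neither list contains the top folder itself ('Example'), so files that sit directly in it are not covered by exact_folders.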

Let's start with the simple part, uploading new folders and the files in them, since we are already somewhat familiar with it.

```python
# Sort key: number of path separators, i.e. the folder's depth,
# so that parent folders are always created before their children.
def by_lines(path):
    return path.count(os.path.sep)


upload_folders = sorted(upload_folders, key=by_lines)

for folder_dir in upload_folders:
    # Absolute local path of the folder.
    var = os.path.sep.join(full_path.split(os.path.sep)[0:-1]) + os.path.sep
    variable = var + folder_dir
    last_dir = folder_dir.split(os.path.sep)[-1]
    pre_last_dir = folder_dir.split(os.path.sep)[-2]

    files = [f for f in os.listdir(variable)
             if os.path.isfile(os.path.join(variable, f))]

    # Create the folder inside its parent on Drive.
    folder_metadata = {'name': last_dir,
                       'parents': [parents_id[pre_last_dir]],
                       'mimeType': 'application/vnd.google-apps.folder'}
    create_folder = service.files().create(
        body=folder_metadata, fields='id').execute()
    folder_id = create_folder.get('id', [])
    parents_id[last_dir] = folder_id

    # Upload every file of the new folder.
    for os_file in files:
        some_metadata = {'name': os_file, 'parents': [folder_id]}
        os_file_mimetype = mimetypes.MimeTypes().guess_type(
            os.path.join(variable, os_file))[0]
        media = MediaFileUpload(os.path.join(variable, os_file),
                                mimetype=os_file_mimetype)
        upload_this = service.files().create(body=some_metadata,
                                             media_body=media,
                                             fields='id').execute()
        upload_this = upload_this.get('id', [])
```

Before starting, we sort the list of folder paths by depth (the number of path separators), so that folders and their contents are uploaded as we descend the hierarchy. This avoids situations like "we want to upload a folder, but its parent doesn't exist yet, and who knows where Drive will put it."

We create the folder on Drive in its proper place, specifying its parent (that's what pre_last_dir is for).

Then, as with the folders, we list the files in the local directory and upload them one by one in the familiar way.

Next comes the slightly more interesting part: going over the files and checking them for changes.

We need to compare the files present on both sides of the barricades and, if necessary, update their content on Drive.

Files have a special .update() method for this, but it's not that simple.

The first version didn't update files at all: it deleted them and uploaded new versions (update() didn't work on the first attempt, so I panicked, decided it was too tricky and replaced it with the familiar upload code).

Good thing I decided to test it again: it all worked, I just had to specify the mimeType correctly. Once again: be careful with it.

By the way, there was no noticeable speed gain by eye, if anything the opposite, but I didn't take any measurements, so this isn't reliable.

Next, I had to decide whether a file needs updating at all; re-uploading every file (in whole or in part) each time wouldn't be comme il faut.

The first idea that came to mind was to check the last modification date; fortunately, it's easy to get for a file (the modifiedTime field).

And everything seemed fine: in standard checks it worked like clockwork. But inquisitive minds have probably already spotted the fatal flaw. If we simply drop in a file with the same name but different content from another folder, its modification date will be old while the file itself is completely different (that's just one case; you can probably think of more). So a new check was needed, no less ingenious but more reliable. In the end, absolutely independently and without anyone's help, I came up with checking not only the modification date but also the file hashes (who would have thought that files on Drive have an md5Checksum field). The logic hasn't changed much, and the result is good.

```python
import calendar
import datetime
import hashlib

# full_path, parents_id, service and exact_folders come from the code above.
for folder_dir in exact_folders:
    var = os.path.sep.join(full_path.split(os.path.sep)[0:-1]) + os.path.sep
    variable = var + folder_dir
    last_dir = folder_dir.split(os.path.sep)[-1]

    os_files = [f for f in os.listdir(variable)
                if os.path.isfile(os.path.join(variable, f))]
    # Non-trashed files (not folders) in the same folder on Drive.
    results = service.files().list(
        pageSize=1000,
        q=("'%s' in parents and "
           "mimeType != 'application/vnd.google-apps.folder' and "
           "trashed = false" % parents_id[last_dir]),
        fields='files(id, name, mimeType, modifiedTime, md5Checksum)'
    ).execute()
    items = results.get('files', [])

    refresh_files = [f for f in items if f['name'] in os_files]
    remove_files = [f for f in items if f['name'] not in os_files]
    upload_files = [f for f in os_files
                    if f not in [j['name'] for j in items]]

    # Check files that exist both on Drive and on the PC.
    for drive_file in refresh_files:
        file_dir = os.path.join(variable, drive_file['name'])
        file_time = os.path.getmtime(file_dir)

        # modifiedTime looks like '2017-05-10T12:34:56.789Z' (UTC), so use
        # calendar.timegm(); time.mktime() would treat it as local time.
        mtime = datetime.datetime.strptime(drive_file['modifiedTime'],
                                           '%Y-%m-%dT%H:%M:%S.%fZ')
        drive_time = calendar.timegm(mtime.timetuple())

        with open(file_dir, 'rb') as fh:
            os_file_md5 = hashlib.md5(fh.read()).hexdigest()
        # Google Docs files have no md5Checksum, so they always differ here.
        drive_md5 = drive_file.get('md5Checksum')

        if file_time > drive_time or drive_md5 != os_file_md5:
            # Upload the new content, keeping the file's id and Drive mimeType.
            media_body = MediaFileUpload(file_dir,
                                         mimetype=drive_file['mimeType'])
            service.files().update(fileId=drive_file['id'],
                                   media_body=media_body,
                                   fields='id').execute()
```
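The same two checks can be pulled out into standalone helpers, sketched here with hypothetical names (file_md5, drive_time_to_epoch, needs_update). Two hedged choices: the local file is hashed in chunks instead of being read into memory at once, and modifiedTime is treated as UTC (note the trailing Z), so it is converted with calendar.timegm(); time.mktime() treats a time tuple as local time and would shift the comparison by the timezone offset.

```python
import calendar
import datetime
import hashlib
import os


def file_md5(path, chunk_size=1 << 20):
    """MD5 hex digest of a local file, read in 1 MiB chunks."""
    md5 = hashlib.md5()
    with open(path, 'rb') as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b''):
            md5.update(chunk)
    return md5.hexdigest()


def drive_time_to_epoch(rfc3339):
    """Convert a Drive time like '2017-05-10T12:34:56.789Z' to epoch seconds (UTC)."""
    dt = datetime.datetime.strptime(rfc3339, '%Y-%m-%dT%H:%M:%S.%fZ')
    return calendar.timegm(dt.timetuple()) + dt.microsecond / 1e6


def needs_update(local_path, drive_file):
    """True if the local file is newer than, or differs from, its Drive copy.

    drive_file is a files().list() item requested with
    fields='files(id, name, mimeType, modifiedTime, md5Checksum)'.
    Google Docs files have no md5Checksum, so for them this is always True.
    """
    if os.path.getmtime(local_path) > drive_time_to_epoch(drive_file['modifiedTime']):
        return True
    return drive_file.get('md5Checksum') != file_md5(local_path)
```

Inside the loop, the whole check then becomes if needs_update(file_dir, drive_file): ...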

Finally, the folders that exist only on Drive are deleted:

```python
for folder_dir in remove_folders:
    last_dir = folder_dir.split('/')[-1]
    folder_id = parents_id[last_dir]
    # Note: files().delete() removes the folder permanently, bypassing the trash.
    service.files().delete(fileId=folder_id).execute()
```
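One caveat with deleting every entry of remove_folders: according to the Drive API reference, files().delete() deletes permanently, and deleting a folder also deletes all its descendants owned by the user. So if remove_folders contains both Example4 and Example4/Example5 and the parent happens to go first, the second call can fail with a 404 (an HttpError). A sketch of a hypothetical helper that keeps only the top-most folder of each removed subtree. If you would rather be able to recover, service.files().update(fileId=folder_id, body={'trashed': True}).execute() moves a folder to the trash instead.

```python
def topmost_folders(paths):
    """Drop every path whose ancestor is also in `paths`.

    Deleting a Drive folder removes its contents too, so only the top-most
    folders of each removed subtree need an explicit delete() call.
    """
    result = []
    for path in sorted(set(paths)):  # parents sort before their children
        if not any(path.startswith(kept + '/') for kept in result):
            result.append(path)
    return result
```

The loop would then iterate over topmost_folders(remove_folders) instead of remove_folders.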

That's it. Well, nearly.


3 - Reverse synchronization: from the cloud to the computer

Now the other direction: Google Drive -> computer.


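Downloading an ordinary file looks like this:

```python
import io

from apiclient.http import MediaIoBaseDownload

file_id = 'idexample'
request = drive_service.files().get_media(fileId=file_id)
fh = io.BytesIO()
downloader = MediaIoBaseDownload(fh, request)
done = False
while done is False:
    status, done = downloader.next_chunk()
    print("Download %d%%." % int(status.progress() * 100))
```

This works for ordinary files, but not for Google Docs: they have no downloadable content of their own, so they have to be exported instead.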

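Google Docs files are exported with export_media:

```python
file_id = 'idexample'
request = drive_service.files().export_media(fileId=file_id,
                                             mimeType='application/pdf')
fh = io.BytesIO()
downloader = MediaIoBaseDownload(fh, request)
done = False
while done is False:
    status, done = downloader.next_chunk()
    print("Download %d%%." % int(status.progress() * 100))
```

The main difference is the mimeType parameter: it must be one of the export formats available for that type of Google Docs file (the list comes from about().get(fields='exportFormats'); for example, a Google document can be exported to .docx but not to .doc).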


Note: to write the file straight to disk, replace io.BytesIO() with io.FileIO(path_to_file, 'wb').

Google Docs


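The catch is that Google Docs files have no downloadable content of their own: they can only be exported to other formats (Microsoft Office, OpenOffice, PDF and so on).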


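There are a couple of options:

1) Export them to PDF.

2) Export them to Microsoft Office formats (.docx, .xlsx and so on), and when pushing changes back to Drive, use .update() so that the file keeps its id.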

Here is the corresponding fragment of the script:

```python
# f is a file resource from files().list() with at least id, name and mimeType.
# GoogleMimeTypes maps Google Docs MIME types to export MIME types (not shown here).
file_id = f['id']
if f['mimeType'] in GoogleMimeTypes.keys():
    # Google Docs file: get_media() won't work, it has to be exported.
    print('File APPLICATION!!! ', f['name'], f['mimeType'])
    request = service.files().export_media(
        fileId=file_id, mimeType=GoogleMimeTypes[f['mimeType']])
    fh = io.FileIO(os.path.join(variable, f['name']), 'wb')
    downloader = MediaIoBaseDownload(fh, request)
    done = False
    while done is False:
        status, done = downloader.next_chunk()
        print("Download %d%%." % int(status.progress() * 100),
              GoogleMimeTypes[f['mimeType']])
    fh.close()
else:
    # Ordinary file: download its content as is.
    print('Good File ', f['name'], f['mimeType'])
    request = service.files().get_media(fileId=file_id)
    fh = io.FileIO(os.path.join(variable, f['name']), 'wb')
    downloader = MediaIoBaseDownload(fh, request)
    done = False
    while done is False:
        status, done = downloader.next_chunk()
        print("Download %d%%." % int(status.progress() * 100))
    fh.close()
```
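The download fragment relies on a GoogleMimeTypes dictionary that isn't shown. A possible version, assuming Microsoft Office formats are the export targets (every target below appears in the exportFormats list returned by about()), plus a hypothetical export_plan helper that also picks a file extension for the local copy. Forms, drawings and scripts are left out because exportFormats offers no Office format for them.

```python
# Hypothetical mapping: Google Docs type -> export MIME type.
GoogleMimeTypes = {
    'application/vnd.google-apps.document':
        'application/vnd.openxmlformats-officedocument.wordprocessingml.document',
    'application/vnd.google-apps.spreadsheet':
        'application/vnd.openxmlformats-officedocument.spreadsheetml.sheet',
    'application/vnd.google-apps.presentation':
        'application/vnd.openxmlformats-officedocument.presentationml.presentation',
}

# Hypothetical: extension to give the exported local copy.
EXPORT_EXTENSIONS = {
    'application/vnd.openxmlformats-officedocument.wordprocessingml.document': '.docx',
    'application/vnd.openxmlformats-officedocument.spreadsheetml.sheet': '.xlsx',
    'application/vnd.openxmlformats-officedocument.presentationml.presentation': '.pptx',
}


def export_plan(name, google_mime):
    """Return (export MIME type, local file name) for a Google Docs file, or None."""
    export_mime = GoogleMimeTypes.get(google_mime)
    if export_mime is None:
        return None  # forms, drawings, scripts: no Office format to export to
    ext = EXPORT_EXTENSIONS[export_mime]
    if not name.lower().endswith(ext):
        name += ext
    return export_mime, name
```

Adding the extension makes the local copy openable by an office suite, but the local name then no longer matches the Drive name, so the name-based comparison in the sync code would have to account for it.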


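Not every Google Docs type survives the round trip. Judging by the exportFormats and importFormats lists above, a Google Form can only be exported as application/zip, which no import format turns back into a form, and a Google Drawing is exported as SVG, PNG, PDF or JPEG, none of which imports back as a drawing (the only import format that produces a drawing is application/x-msmetafile).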
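Whether a Google Docs type survives an export/import round trip can be checked programmatically from the exportFormats and importFormats lists. A sketch (round_trippable is a hypothetical helper) that takes the about() response as a dict and, for each Google Docs type, keeps only the export formats that importFormats maps back to the same type. With the full lists shown earlier in the article, forms and drawings get no entry at all.

```python
def round_trippable(about):
    """Google Docs types that can be exported and imported back as the same type.

    about: dict with 'exportFormats' and 'importFormats' from about().get().
    Returns {google_mime: [export formats that convert back to google_mime]}.
    """
    imports = about.get('importFormats', {})
    result = {}
    for google_mime, targets in about.get('exportFormats', {}).items():
        back = [t for t in targets if google_mime in imports.get(t, [])]
        if back:
            result[google_mime] = back
    return result
```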



Source: https://habr.com/ru/post/328248/
