Multi-agent smart home

I will begin my first article with a little background. By the time this story began, I had spent the previous 7 years on a research project whose goal was to develop a semantic technology for designing intelligent systems. It all started in the second half of 2015 with one remarkable article (thanks to vovochkin). That was when I realized that the technology we were developing is well suited to problems in the Internet of Things. This was the first factor that led me to the current project. The second was that I really liked the film “Iron Man” and really wanted my own “Jarvis” at home.

Several months of planning and reading led me to the following tasks that were to be solved:

')

I will give a little theory which will allow to understand the further description better.

The whole system is built around the knowledge base (I will use the abbreviation BR). Knowledge in it is presented in the form of a semantic network (in more detail about the language of knowledge representation can be read in the article ). I will give a couple of simple examples. Which will simply allow to understand further text and images. In my examples, I will use two languages: graphic - SCg and text - SCs

First you need to learn how to set the "is a" relationship. Everything is quite simple. The image on the left indicates the fact that an apple and pineapple are fruits. To do this, we simply created a node denoting the set of all apples - apple and added an incoming arc of belonging from the node denoting the set of all fruits - fruit . We did the same thing with pineapple.

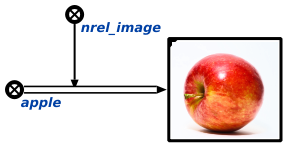

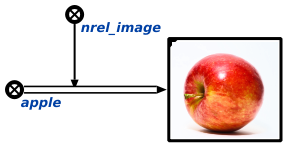

In principle, on the basis of the “is a” relationship, all storage in the BR is built, including any other relationship. For example, consider how an image of an object or a class of objects is indicated in a KB. In this picture it is clear that we have introduced a set denoting all instances of the relationship “to be an image of something” and add to it all the arcs denoting binary bundles.

In principle, on the basis of the “is a” relationship, all storage in the BR is built, including any other relationship. For example, consider how an image of an object or a class of objects is indicated in a KB. In this picture it is clear that we have introduced a set denoting all instances of the relationship “to be an image of something” and add to it all the arcs denoting binary bundles.

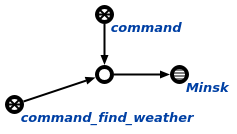

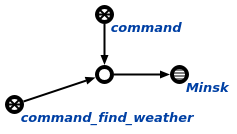

Now let's talk a little about how the processing of stored knowledge takes place. For this, an agent-based approach is used . The important principle is that agents can interact with each other only through a BR (changing its state). Immediately give a link to the documentation for the development of agents in C ++. The interaction between agents is implemented using a specialized command-query language (documentation on it in the development process). Here I will give one example of a request (read this image following the example of the two past, using the “is a” relation):

This is the simplest example of a query, the weather in Minsk. In this image, you can see the node that denotes the request instance and is included in the set command and command_find_weather , where the latter determines the type of request. In essence, a request is a set of elements of which its arguments are.

This is the simplest example of a query, the weather in Minsk. In this image, you can see the node that denotes the request instance and is included in the set command and command_find_weather , where the latter determines the type of request. In essence, a request is a set of elements of which its arguments are.

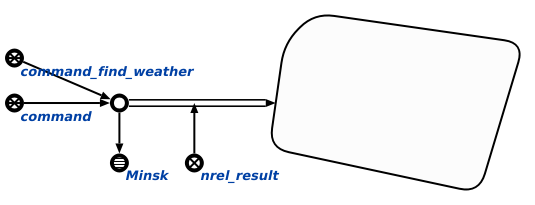

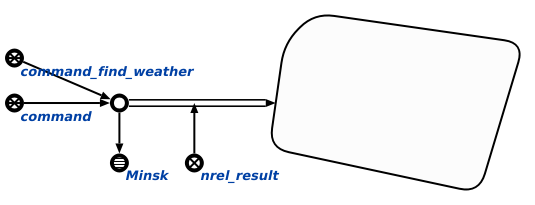

Each agent in the BR has its own specification, where the expected result, the condition of initiation and other properties of the agent are indicated. One of these properties is the event at which it is initiated. The following types of such events are currently available: deleting (creating) an incoming (outgoing) arc, deleting an element, changing the contents of a link. In other words, we sign an agent to an event in the knowledge base and when it happens the agent is initiated. For commands, such an event is to add an instance of the request to the set command_initiated . When this happens, all agents subscribed to this event are initiated. After that, only agents that can handle this type of request continue to work.

At the output, each agent generates a result that is associated with the query instance using the nrel_result relationship.

Thus, it turns out something like a forum where different agents can communicate with each other using a specialized language. In this case, none of them in the formation of the request, do not even know whether it will answer or not and who will give it. This allows you to use different implementations of agents and add them to the system on the go without stopping its work.

The description turned out quite large, although I tried to make it minimal and understandable. I am ready to answer any questions about it in detail in the comments or, if necessary, make a separate article with a detailed description and examples. But we move on.

By the time I started working on Jarvis, our core was in fairly stable and working condition, but the development of agents was possible only with the use of the C language, there are still examples of such agents in the repository.

The first thing that needed to be done was to simplify their development. Therefore, it was decided to write a C ++ library that would take on many routine tasks. At that time, I was working as a game developer on the Unreal Engine 4 and therefore I decided that it would be convenient to use a code generator and implemented this feature .

Now the agent description began to boil down to:

The implementation of this agent was to implement one function:

More details about the implementation of agents can be found in the documentation . Examples of the implementation of agents with the new library can be found here (they temporarily moved to my closed repository, but soon they will be open again).

The second thing that needed to be done was the multithreaded launch of agents. Previously, one thread was used to process events in the KB, which sequentially started the agents from the queue. Now all available kernels are used in the system. I must say at once that it was very difficult to provide asynchronous access to the BR from a variety of agents in different streams. The description of this mechanism is probably a separate article that I can write if such a need arises. Here I will focus only on a couple of nuances:

The third thing I came across was that most of the services of Amazon, Google, etc. have ready APIs in different languages, but not for C ++. Therefore, it was decided to make it possible to run Python code inside C ++. This is all implemented using Boost-Python (using Python 3, here I can already help a lot of people with this).

In addition to the things described, documentation was written (links were given already). The entire c ++ library is covered by unit tests. It all took about a year and all this was done in parallel with the rest of the things and is still being done.

The visual model was developed to refine the concept and debug basic things. Video of a small Proof of concept in this model:

This model helped to debug the system very well, since it could be assembled and let other people play. I will not go into details of its implementation, if anyone is interested - I will answer in the comments. At the moment, this thing does not develop, everything has moved to real devices.

In the early prototype, I implemented voice input using the Android API, and the resulting text was parsed using api.ai , which returned to me the request class for initiation and its parameters. In this or a slightly modified form, it exists now. But we will lead the conversation in a different way - how this mechanism is implemented with the help of agents.

If we recall the comparison of communication between agents with a forum, then this mechanism can be described by the following log (where A <agent_name> are agents that solve different tasks; user is a user):

That's what happens under the hood, when solving such a simple task. But that's not all, then we need to introduce two more concepts. In addition to agents that I have already described (they react to changes in the BR and change its state), there are two more types of agents:

When agents disassembled the user's request and formed an answer to it in the same language, this answer is added to the set indicating a dialogue with him (the user). At this moment, one of the "effector" agents displays this answer to the user as a string. Here is an example of working on video:

Separately, the user interface makes a request to generate speech by text. The agent generates it as an audio file (OGG) using the ivona service.

Last year was spent on debugging and new core functionality. A working prototype was obtained, which can already solve interesting problems. Over the past year, about 750 hours of free time was spent on development.

This approach has potential:

At this point, I’ll probably finish my first article, in which I tried to describe the basic principles and what was done in my implementation of the “smart home”, “smart butler” or “Jarvis” (to whom it is convenient). I have no illusions that the material turned out good, so I hope for feedback. Thanks to all who read.

Several months of planning and reading left me with the following tasks to solve:

- Modify the core for storing and processing the knowledge base

- Implement the environment for visual debugging of the house model

- Implement voice input and output

Some theory

I will give a little theory which will allow to understand the further description better.

The whole system is built around the knowledge base (I will use the abbreviation BR). Knowledge in it is presented in the form of a semantic network (in more detail about the language of knowledge representation can be read in the article ). I will give a couple of simple examples. Which will simply allow to understand further text and images. In my examples, I will use two languages: graphic - SCg and text - SCs

First you need to learn how to set the "is a" relationship. Everything is quite simple. The image on the left indicates the fact that an apple and pineapple are fruits. To do this, we simply created a node denoting the set of all apples - apple and added an incoming arc of belonging from the node denoting the set of all fruits - fruit . We did the same thing with pineapple.
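The membership idea above can be sketched in a few lines of C++. This is only an illustration under stated assumptions: `TinyKb`, `addMember`, `isMember`, and `makeFruitKb` are hypothetical names invented here, not part of the real knowledge base API, and nodes are plain strings.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <utility>

// Hypothetical in-memory stand-in for the knowledge base: it stores only
// membership arcs (set -> element), which is all the "is a" relation needs.
class TinyKb {
public:
    // Draw a membership arc from the node `set` to the node `element`.
    void addMember(const std::string& set, const std::string& element) {
        assert(!set.empty() && !element.empty());
        arcs_.emplace(set, element);
    }

    // True if a membership arc from `set` to `element` exists.
    bool isMember(const std::string& set, const std::string& element) const {
        return arcs_.count(std::make_pair(set, element)) > 0;
    }

private:
    std::set<std::pair<std::string, std::string>> arcs_;
};

// Build the example from the text: apple and pineapple are fruits.
inline TinyKb makeFruitKb() {
    TinyKb kb;
    kb.addMember("fruit", "apple");
    kb.addMember("fruit", "pineapple");
    return kb;
}
```

Note that the arc is directed: `fruit` contains `apple`, but `apple` does not contain `fruit`.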

In principle, on the basis of the “is a” relationship, all storage in the BR is built, including any other relationship. For example, consider how an image of an object or a class of objects is indicated in a KB. In this picture it is clear that we have introduced a set denoting all instances of the relationship “to be an image of something” and add to it all the arcs denoting binary bundles.

In principle, on the basis of the “is a” relationship, all storage in the BR is built, including any other relationship. For example, consider how an image of an object or a class of objects is indicated in a KB. In this picture it is clear that we have introduced a set denoting all instances of the relationship “to be an image of something” and add to it all the arcs denoting binary bundles.Now let's talk a little about how the processing of stored knowledge takes place. For this, an agent-based approach is used . The important principle is that agents can interact with each other only through a BR (changing its state). Immediately give a link to the documentation for the development of agents in C ++. The interaction between agents is implemented using a specialized command-query language (documentation on it in the development process). Here I will give one example of a request (read this image following the example of the two past, using the “is a” relation):

This is the simplest example of a query, the weather in Minsk. In this image, you can see the node that denotes the request instance and is included in the set command and command_find_weather , where the latter determines the type of request. In essence, a request is a set of elements of which its arguments are.

This is the simplest example of a query, the weather in Minsk. In this image, you can see the node that denotes the request instance and is included in the set command and command_find_weather , where the latter determines the type of request. In essence, a request is a set of elements of which its arguments are.Each agent in the BR has its own specification, where the expected result, the condition of initiation and other properties of the agent are indicated. One of these properties is the event at which it is initiated. The following types of such events are currently available: deleting (creating) an incoming (outgoing) arc, deleting an element, changing the contents of a link. In other words, we sign an agent to an event in the knowledge base and when it happens the agent is initiated. For commands, such an event is to add an instance of the request to the set command_initiated . When this happens, all agents subscribed to this event are initiated. After that, only agents that can handle this type of request continue to work.

Each agent produces a result that is linked to the request instance by the nrel_result relationship.

The result is something like a forum where different agents communicate in a specialized language. When forming a request, none of them knows whether it will be answered, or by whom. This makes it possible to use different agent implementations and to add them to the system on the fly, without stopping it.
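To make the subscription mechanism more concrete, here is a minimal single-threaded sketch. It is not the real library: `MiniKb`, `subscribe`, `addToSet`, and `weatherAgent` are hypothetical names, and the `results` map merely stands in for the nrel_result relationship. Only the set names command_initiated and command_find_weather come from the description above.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for the knowledge base.
struct MiniKb {
    using Handler = std::function<void(MiniKb&, const std::string&)>;

    std::map<std::string, std::set<std::string>> sets;        // set name -> members
    std::map<std::string, std::string> results;               // request -> result
    std::map<std::string, std::vector<Handler>> subscribers;  // set name -> agents

    // Subscribe an agent to the event "an element was added to `setName`".
    void subscribe(const std::string& setName, Handler agent) {
        assert(agent != nullptr);
        subscribers[setName].push_back(std::move(agent));
    }

    // Add an element to a set and initiate every agent subscribed to that set.
    void addToSet(const std::string& setName, const std::string& element) {
        sets[setName].insert(element);
        auto it = subscribers.find(setName);
        if (it == subscribers.end()) return;
        // Copy the list: an agent may subscribe further agents while running.
        const std::vector<Handler> agents = it->second;
        for (const Handler& agent : agents) agent(*this, element);
    }

    bool isMember(const std::string& setName, const std::string& element) const {
        auto it = sets.find(setName);
        return it != sets.end() && it->second.count(element) > 0;
    }
};

// Initiated for every request, but continues only for its own request type.
inline void weatherAgent(MiniKb& kb, const std::string& request) {
    if (!kb.isMember("command_find_weather", request)) return;
    kb.results[request] = "weather_result_placeholder";  // plays the role of nrel_result
}
```

The requester only adds its request to command_initiated. It never calls the agent directly, so it does not know which agent, if any, will attach a result.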

This description turned out rather long, although I tried to keep it minimal and clear. I am ready to answer questions in detail in the comments or, if needed, to write a separate article with a detailed description and examples. But let us move on.

Core improvements

By the time I started working on Jarvis, our core was fairly stable and working, but agents could be developed only in C; examples of such agents are still in the repository.

The first thing to do was to simplify agent development, so I decided to write a C++ library that takes over many routine tasks. At the time I was working as a game developer with Unreal Engine 4, so I decided a code generator would be convenient and implemented one.

With it, an agent declaration boils down to:

```cpp
class AMyAgent : public ScAgentAction
{
  SC_CLASS(Agent, CmdClass("command_my"))
  SC_GENERATED_BODY()
};
```

Implementing the agent then means implementing one function:

```cpp
SC_AGENT_ACTION_IMPLEMENTATION(AMyAgent)
{
  // implement agent logic here
  return SC_RESULT_OK;
}
```

More details about implementing agents can be found in the documentation. Examples of agents implemented with the new library are also available (they have temporarily moved to my private repository, but will soon be open again).

The second thing to do was to launch agents in multiple threads. Previously, a single thread processed KB events and started agents from a queue one after another. Now all available CPU cores are used. I must say right away that providing concurrent access to the KB for many agents running in different threads was very hard. That mechanism probably deserves a separate article, which I can write if there is interest. Here I will mention only a couple of nuances:

- Work with elements stored in memory is implemented with a lock-free approach. I am no expert at classifying such approaches, but I believe the implementation qualifies as lock-free: at any moment at least one agent (often several, or nearly all) makes progress;

- Currently, agents have no guarantee that the data they are about to work with will not be deleted by another agent. In the future I plan to introduce locks that keep an object alive for as long as an agent needs it and in the right context (the required lock types are still being discussed). For the current agent implementations this is not a problem: if an element has been deleted, the agent gets an error when it tries to do something with that element in memory.
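The two nuances above can be illustrated with a small compare-and-swap sketch. This is an assumption-laden illustration, not the actual memory implementation: the `Element` class, its bit layout, and the `Result` codes are invented here. It shows the general lock-free pattern (retry the CAS until it succeeds) and an operation that reports an error when another agent has already deleted the element.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

enum class Result { Ok, ErrorDeleted };

// Hypothetical element slot: the top bit marks "deleted",
// the remaining bits hold a value that agents update concurrently.
class Element {
public:
    // Lock-free update: retry the compare-and-swap until it succeeds
    // or the element turns out to be deleted. No thread ever blocks.
    Result add(std::uint32_t delta) {
        assert(delta < kDeleted);  // deltas must not touch the flag bit
        std::uint32_t seen = state_.load(std::memory_order_acquire);
        for (;;) {
            if (seen & kDeleted) return Result::ErrorDeleted;
            const std::uint32_t next = (seen + delta) & ~kDeleted;
            if (state_.compare_exchange_weak(seen, next,
                                             std::memory_order_acq_rel,
                                             std::memory_order_acquire)) {
                return Result::Ok;
            }
            // CAS failed: `seen` now holds the fresh state, so just retry.
        }
    }

    // Mark the element as deleted; later updates report an error.
    void erase() { state_.fetch_or(kDeleted, std::memory_order_acq_rel); }

    std::uint32_t value() const {
        return state_.load(std::memory_order_acquire) & ~kDeleted;
    }

private:
    static constexpr std::uint32_t kDeleted = 0x80000000u;
    std::atomic<std::uint32_t> state_{0};
};
```

A failed CAS means some other thread's update succeeded, which is exactly the "at least one agent makes progress" property.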

The third issue I ran into was that most services from Amazon, Google, and others provide ready-made APIs for various languages, but not for C++. So I made it possible to run Python code from within C++. This is implemented with Boost.Python (using Python 3; I can already help many people with that).

In addition to the above, documentation was written (the links are given earlier). The entire C++ library is covered by unit tests. All of this took about a year, was done in parallel with everything else, and is still ongoing.

Visual model

The visual model was developed to refine the concept and debug the basics. A video of a small proof of concept in this model:

This model helped a lot with debugging, since it could be built and handed to other people to play with. I will not go into its implementation details; if anyone is interested, I will answer in the comments. It is no longer being developed: everything has moved to real devices.

Voice interface

In the early prototype, I implemented voice input with the Android API, and the resulting text was parsed by api.ai, which returned the class of the request to initiate and its parameters. It still exists in this or a slightly modified form. But here I want to describe something else: how this mechanism is implemented with agents.

If we recall the comparison of agent communication with a forum, the mechanism can be described by the following log (where A<agent_name> are agents that solve different tasks, and user is the user):

```
user:
ADialogueProcessMessage: " "
AApiAiParseUserTextAgent: ""?
AResolveAddr: "" - 3452
AApiAiParseUserTextAgent: ""?
AResolveAddr: "" - 3443
AApiAiParseUserTextAgent: 3452 3443
AAddIntoSet:
ADialogueProcessMessageAgent:
AGenCmdTextResult: "..."
AGenText: - " "
```

That is what happens under the hood when solving even such a simple task. But that is not all: we need to introduce two more concepts. Besides the agents I have already described (they react to changes in the KB and change its state), there are two more types of agents:

- Effector agents respond to a change in the KB by making a change in the external environment. In other words, they are responsible for output: a screen, a manipulator, etc.

- Receptor agents respond to a change in the external environment by making a change in the KB. They are responsible for input: input devices, sensors, etc.

Video: effector agents at work

In the video you can see the lamp (a cube) turn on when the node denoting it is added to the set of switched-on devices, and turn off when the node is removed from it. The same happens with the tap.
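The lamp behaviour can be sketched as follows. Everything here is a hypothetical illustration: `DeviceSet`, `Lamp`, `attachLampEffector`, and `onSwitchPressed` are invented names, and a single C++ object stands in for the KB set of switched-on devices. The effector maps a KB change to the outside world, and the receptor maps an outside event to a KB change.

```cpp
#include <cassert>
#include <functional>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for one KB set that notifies on add and remove.
class DeviceSet {
public:
    using Listener = std::function<void(const std::string&, bool)>;

    void subscribe(Listener listener) {
        assert(listener != nullptr);
        listeners_.push_back(std::move(listener));
    }
    void add(const std::string& device) {
        if (members_.insert(device).second) notify(device, true);
    }
    void remove(const std::string& device) {
        if (members_.erase(device) > 0) notify(device, false);
    }
    bool contains(const std::string& device) const {
        return members_.count(device) > 0;
    }

private:
    void notify(const std::string& device, bool added) {
        for (const Listener& listener : listeners_) listener(device, added);
    }
    std::set<std::string> members_;
    std::vector<Listener> listeners_;
};

// The external world: a lamp that is physically on or off.
struct Lamp { bool on = false; };

// Effector: a change in the KB causes a change in the external environment.
// The lamp must outlive the set, because the listener keeps a reference to it.
inline void attachLampEffector(DeviceSet& enabled, const std::string& lampNode, Lamp& lamp) {
    enabled.subscribe([lampNode, &lamp](const std::string& device, bool added) {
        if (device == lampNode) lamp.on = added;
    });
}

// Receptor: a change in the external environment causes a change in the KB.
inline void onSwitchPressed(DeviceSet& enabled, const std::string& lampNode) {
    if (enabled.contains(lampNode)) enabled.remove(lampNode);
    else enabled.add(lampNode);
}
```

The receptor never touches the lamp itself. It only edits the set, and the effector reacts to that edit, so the two agents stay decoupled through the KB.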

Once the agents have parsed the user's request and formed an answer in the same language, the answer is added to the set that denotes the dialogue with that user. At that moment one of the effector agents shows the answer to the user as a string. Here is a video example:

Separately, the user interface issues a request to synthesize speech from the text. An agent generates it as an audio file (OGG) using the Ivona service.

What's next?

The past year was spent on debugging and new core functionality. The result is a working prototype that can already solve interesting problems. About 750 hours of free time went into development over that year.

This approach has potential:

- Adding new functionality does not affect what already works. For example, when I needed a task scheduler (to run tasks at a given time or periodically), I needed only one agent, which simply adds an already formed request to the set of initiated requests at the specified time. As a result, any request expressible in the request language can be scheduled. For example: "Remind me to call my wife"; "Turn off the light at 10"; "Turn on the TV at 11.05"; ...;

- Not covered in this article: there is pattern-based search, on top of which logical inference can be built (test problem solvers for geometry and physics have already been made);

- I plan to use the Cloud Natural Language API as the input text analyzer, which should improve the quality of the language interface;
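The scheduler idea from the first point can be sketched like this. It is an illustration under assumptions, not the actual agent: `Scheduler`, `schedule`, and `tick` are hypothetical names, time is simplified to minutes since midnight, and a `std::set` stands in for the set of initiated requests.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>

// Hypothetical scheduler agent: it holds already formed requests and adds
// each one to the "initiated" set once its time has come.
class Scheduler {
public:
    // Schedule `request` for the minute of the day `at` (0..1439).
    void schedule(int at, const std::string& request) {
        assert(at >= 0 && at < 24 * 60);
        pending_.emplace(at, request);
    }

    // Move every request due at or before `now` into `initiated`.
    // Returns the number of requests moved.
    int tick(int now, std::set<std::string>& initiated) {
        int moved = 0;
        const auto end = pending_.upper_bound(now);
        for (auto it = pending_.begin(); it != end; ++it) {
            initiated.insert(it->second);
            ++moved;
        }
        pending_.erase(pending_.begin(), end);
        return moved;
    }

private:
    std::multimap<int, std::string> pending_;  // due time -> request
};
```

The scheduler knows nothing about what a request means. Because it only moves requests into the initiated set, any request type that some agent can handle becomes schedulable without extra code.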

This is probably where I will end my first article, in which I tried to describe the basic principles and what has been done in my implementation of a “smart home”, “smart butler”, or “Jarvis” (whichever name you prefer). I have no illusions that the material turned out well, so I am hoping for feedback. Thanks to everyone who read it.

Source: https://habr.com/ru/post/328238/
