Neuro-coop, Part 0. Or a chicken coop without neural networks

Or how to smoke in the neural network?

The chicken laid an egg. The process itself looks terrible. The result is edible. Mass chicken genocide.

This article will cover:

- Where, how and why you can get some quality self-education in working with neural networks for FREE, NOW and NOT FAST;

- The logic of recursion, with book recommendations on the topic;

- A list of basic terms that you need to break down to 2-3 levels of abstraction;

- An .ipynb notebook with the necessary links and basic approaches;

- Some peculiar sarcastic humor;

- Some simple patterns you will run into when working with neural networks;


Articles in the neuro-coop series


- Intro to learning about neural networks

- Hardware, software and configuration for monitoring chickens

- A bot that posts events from the life of chickens, without a neural network

- Dataset labeling

- A working model for recognizing chickens in the hen house

- The result - a working bot that recognizes chickens in the hen house

The philosophical introduction begins here.

Where to begin?


You could start by saying that my girlfriend wrote an excellent article about her journey and about installing a chicken observation system in the hen house. Why? Because an applied task motivates far more than Kaggle tasks, where things are also far from ideal (data leaks in half of the competitions, winning architectures that are stacks of 15 models, overfitting in the search for patterns that do not scale, etc., etc.). My task is to write neural networks and Python code that will tell chickens apart and, perhaps, log events from the chickens' lives into our favorite DBMS. Along the way you can learn a lot of interesting things and perhaps even change your life by sharing your mini-experiences. Pleasant, useful and fun.

You could also start by saying that there is now a new "bubble" in the technology market: everyone has suddenly rushed "into AI". Before that, everyone rushed into IT, online, Big Data, Skolkovo, AR/VR. If you sit in the thematic Russian chats, people there usually either rewrite everything from scratch in {insert their exotic language} or build one-off chat bots, spending the budgets of emerging corporations. But when it comes to self-education, you should learn from ardent enthusiasts who do what they do not for profit, but for the beauty of it.

And then an unknown person came to my aid by adding a line to the file where I collected educational resources on working with data. Surprisingly, these people (fast.ai was the link someone added) have done a tremendous job of popularizing the field and teaching it even from scratch, following the principles of inclusive, holistic education rather than exclusivity and the ivory tower. But first things first.

Approach to education in a nutshell.

The authors explain it better than I can.

I once saw a team form in the ODS chat (if you know what that is, you will understand) that went on to take second place in a satellite image recognition competition. But the total number of man-hours such a result requires does not motivate me to take part in such contests, given how imperfect the world is. Also, the ratio of what the researchers receive to the total prize of the competition does not inspire enthusiasm.

Therefore, everything below is done for the neuro-coop and only for it.

Just in case, let me clarify that my goals do NOT include:


Very thick layer of sarcasm

- Write a course and then sell its young graduates as leads to Mail.ru xD;

- Write about whether I prefer Caffe, Theano or TensorFlow: none of this makes a difference at an industrial professional level unless you are an experienced researcher in the field who writes scientific papers;

- Write neural networks from scratch in {your exotic language};

- Sell you something for money (only ideas, and for free);

I want to share wonderful things and get as many people as possible into them, giving the simplest and broadest possible description of the path that worked for me.

The philosophical introduction ends here.

How to learn to train neural networks

TL;DR (in install/learn order, from easy to hard)

If you want to train neural networks efficiently, with modern tools and for an applied purpose (rather than rewriting everything in {X} or building a PC with 10 video cards), here is a short and very recursive guide:


- 1. Learn at least the basic concepts:

- Linear algebra: an introductory video series. Start at least with it;

- Calculus: an introductory video series;

- Read about gradient descent. Here is an overview of the methods and here is a visualization. You will find the rest yourself in the courses;

- 2. If you know nothing at all from this list, be prepared to invest 200-300 hours of your time. If only neural networks and/or Python are new to you, 50-100 hours;

- 3. Get Ubuntu or an equivalent (cue the "that's not real Linux" comments). Here is my brief overview guide (the Internet will help you find more detailed technical guides). Of course, you can also take the perverse route through virtual machines, Docker, a Mac and so on;

- 4. Install Python 3 (as of 2017 it usually comes out of the box). Python 3 specifically; better to say it twice. Do not try to replace the system Python in Linux: everything will break;

- 5. If you are not very familiar with Python (again, everything can be googled):

- The simplest free source for the "basic" level (no courses for 30-50k rubles after which you go to work as an instructor at those same courses for 30k rubles, while the average salary is supposedly 100k rubles);

- The ideal recursive entry point for using Python to work with data;

- 6. Install Jupyter Notebook and this extension (you need code folding). It cuts working time enormously. Really;

- 7. Buy yourself a video card (as of early 2017, buying is more cost-effective than renting);

- 8. The best educational (FREE) resources:

- www.fast.ai is the entry point for the recursion. There is a crazy amount of information in the blog, videos, forum, wiki and notes;

- A great book about neural networks, and the first code from it ported to Python 3 (google it, maybe someone has debugged all the code; I gave up after the first two chapters);

- Andrew Ng's magnificent course and its Python adaptation;

- 9. A Jupyter notebook (html, ipynb) which:

- Has a hierarchical structure of cells, each describing what is being done and why;

- Groups the main imports, utilities and libraries by type;

- Contains links to the main sources you need to understand what is going on;

- Provides syntactic sugar for working with Keras (more detail, without a sales pitch, here; the fast.ai people recommend it);

- Reaches ~80% class prediction accuracy on a dataset of cars passing by the window (random guessing gives 50%, but the pictures are lousy and small);

- Reaches ~50% accuracy on 10 classes on the dataset from the distracted driving competition, with an error that would definitely place it in the top 15-20% of solutions worldwide;
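Gradient descent, mentioned in step 1, boils down to repeatedly stepping the parameters against the gradient of the loss. Below is a minimal pure-Python sketch for a toy linear model y = w * x + b with mean squared error; the data, the function name and the learning rate are illustrative assumptions, not taken from the courses linked above.

```python
# Minimal batch gradient descent for a 1-D linear model y = w * x + b,
# minimizing mean squared error. Pure Python, no external libraries.

def gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Return (w, b) fitted to the points (xs, ys) by plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction errors for the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(err^2) with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient; the learning rate sets the step size.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated by y = 2x + 1
    w, b = gradient_descent(xs, ys)
    print(f"w = {w:.3f}, b = {b:.3f}")
```

With this toy data it should converge to approximately w = 2 and b = 1. Raising `learning_rate` above about 0.15 for this data makes the updates blow up instead, which is a quick way to feel why the learning rate is the first meta-parameter worth tuning.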

To-do list for better results (for distracted driving, for example, you can get ~60-75% accuracy):

List of improvements for the future

- Using visualizations to understand what the network is learning;

- Using a training subset of 300-500 images to quickly find out which image distortion parameters work best;

- Using the test dataset (or cross-validation) to increase accuracy: add no more than 25-30% of such pictures to the training data (semi-supervised learning);

- Fine-tuning an ImageNet-pretrained model as an option;
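The semi-supervised item is commonly called pseudo-labeling: the current model predicts classes for unlabeled (for example, test) images, and its most confident predictions are added to the training data as if they were true labels. Below is a minimal sketch of the mixing step, assuming predictions come as per-class probabilities. The function name is hypothetical, and capping the share over the whole training set (rather than per batch) is an assumption on my part.

```python
# Pseudo-labeling sketch: mix the model's most confident predictions on
# unlabeled (e.g. test) images into the labeled training data, capped so that
# pseudo-labeled samples make up at most `max_fraction` of the result.

def pseudo_label_mix(labeled, unlabeled_probs, max_fraction=0.25):
    """
    labeled: list of (sample_id, true_label) pairs.
    unlabeled_probs: dict mapping sample_id -> list of per-class probabilities
        predicted by the current model.
    Returns a new list of (sample_id, label) pairs.
    """
    candidates = []
    for sample_id, probs in unlabeled_probs.items():
        # The pseudo-label is the class the model currently finds most likely.
        confidence = max(probs)
        candidates.append((confidence, sample_id, probs.index(confidence)))
    # Most confident predictions first (they are more likely to be correct).
    candidates.sort(key=lambda item: item[0], reverse=True)

    # Largest k such that k / (len(labeled) + k) <= max_fraction.
    n = len(labeled)
    k = 0
    while k < len(candidates) and (k + 1) <= max_fraction * (n + k + 1):
        k += 1

    pseudo = [(sample_id, label) for _, sample_id, label in candidates[:k]]
    return list(labeled) + pseudo


if __name__ == "__main__":
    labeled = [("a", 0), ("b", 1), ("c", 0), ("d", 1), ("e", 0), ("f", 1)]
    test_probs = {
        "t1": [0.9, 0.1],
        "t2": [0.4, 0.6],
        "t3": [0.2, 0.8],
        "t4": [0.55, 0.45],
    }
    mixed = pseudo_label_mix(labeled, test_probs)
    print(mixed[len(labeled):])  # [('t1', 0), ('t3', 1)]
```

With 6 labeled samples and a 25% cap, only the 2 most confident pseudo-labels get in, so they make up exactly a quarter of the 8 resulting samples.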

If you have read this far, here is a hen that has been cleared of the suspicion that she is not laying. Thanks to that, she will end up in the soup much later.

Now I will describe what I found interesting in the process of actually training neural networks (not the ones in my head, but the ones in Python):


- The car dataset showed that if the pictures are small and the classes are imbalanced (one class has more samples than another), the model will recognize only one class well;

- Start with a small subset so that the model trains in a few tens of seconds, and use it to tune the meta-parameters of image distortion;

- If your application combines motion detection with a neural network, crop the pictures in OpenCV right away; do not leave it for later;

- Neural networks have a so-called "easy way out" effect: the cost function can stay in a local minimum for a relatively long time, because always predicting class 1 and being right 50% of the time is easier than learning;

- Start with a simple architecture and make it more complex gradually. If training is slow, take a small sample or check your model;

- Simple architecture + try different meta-parameters (e.g. the learning rate);

- If the cost function does not decrease at all, there is probably an annoying typo somewhere in the model. It is easiest to find by comparing your model with someone else's;

- Syntactic sugar libraries (Keras) already provide a huge number of state-of-the-art features, such as:

- Image normalization;

- Image distortion (augmentation);

- Dropout;

- Convolutions;

- Iterators that read files sequentially in batches;

- Among the interesting things in Kaggle competitions (beyond stacking 15 models), I would note combinations of:

- Image preprocessing and contour and shape detection with OpenCV;

- Convolutional neural networks for the main classification;

- Neural networks that produce metadata about images: for example, first find the whales' heads, and only then identify the whale;

- I collected interesting material on the latest Kaggle architectures here
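The class-imbalance and "easy way out" points above are easy to check with a little arithmetic. A model that always predicts the majority class already scores the majority share as accuracy, and, assuming binary cross-entropy as the cost function, a network that outputs 0.5 for every image on a balanced two-class problem gets a loss of ln 2 ≈ 0.693. A short pure-Python sketch (function names are illustrative):

```python
import math


def majority_baseline_accuracy(labels):
    """Accuracy of a 'model' that always predicts the most frequent class."""
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return max(counts.values()) / len(labels)


def constant_prediction_bce(labels, p):
    """Mean binary cross-entropy when the model outputs probability p
    (0 < p < 1) for class 1 on every sample, regardless of the input."""
    total = 0.0
    for y in labels:
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)


if __name__ == "__main__":
    imbalanced = [0] * 80 + [1] * 20
    balanced = [0] * 50 + [1] * 50
    print(majority_baseline_accuracy(imbalanced))  # 0.8 without learning anything
    print(constant_prediction_bce(balanced, 0.5))  # about 0.693, i.e. ln 2
```

If the training loss of a balanced binary classifier sits near 0.693, or accuracy on an imbalanced set stays at the majority share, the network has most likely found the easy way out rather than learned anything.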

And here is a set of pictures


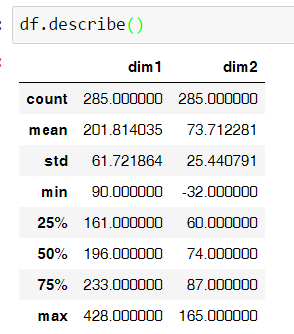

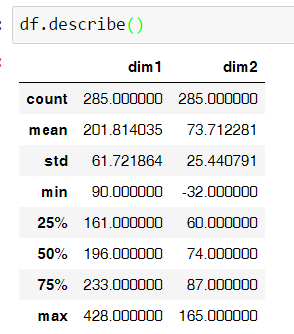

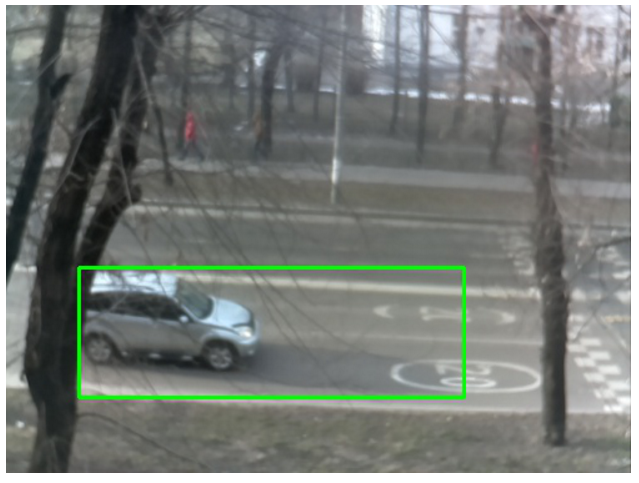

The first 285 cars are small in pixels, and everything is blurry...

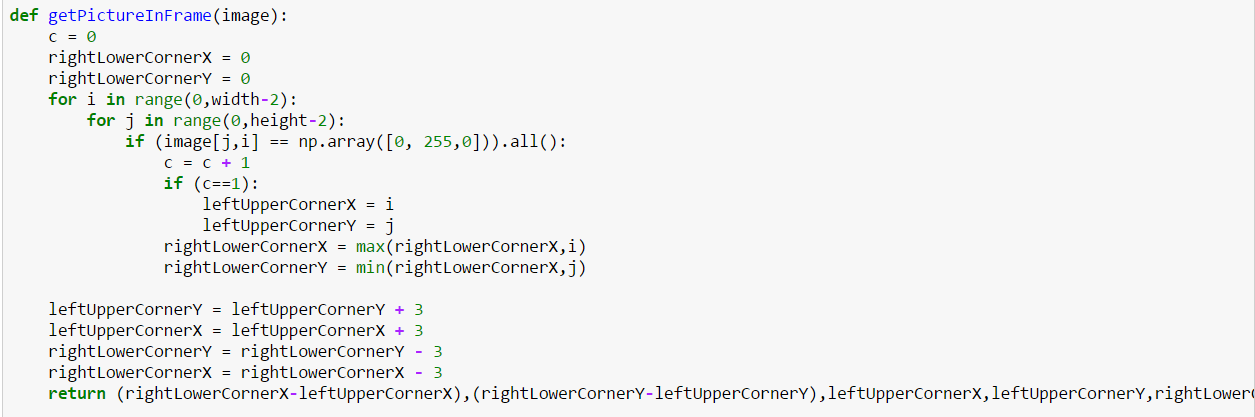

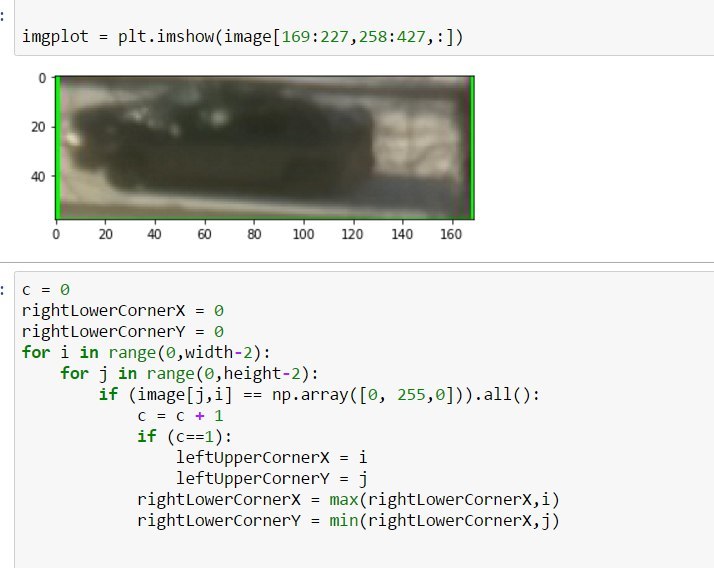

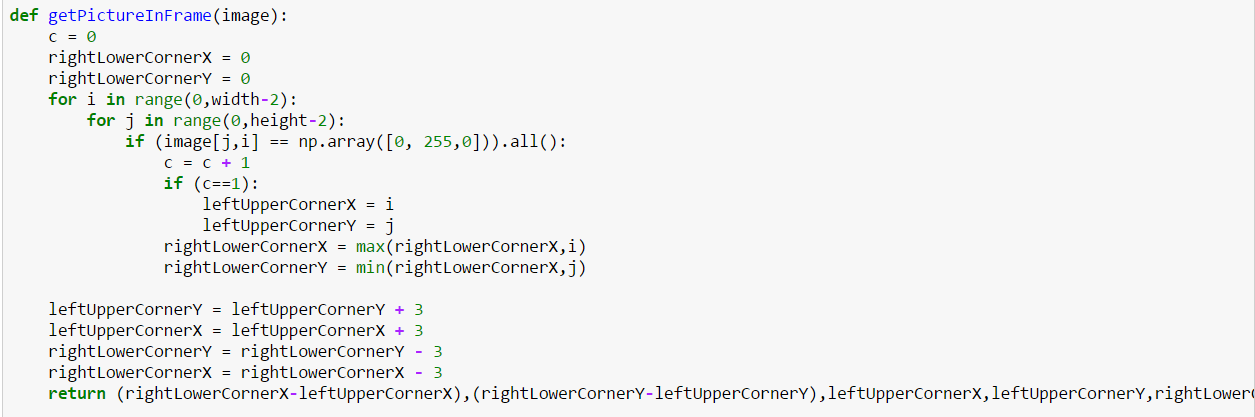

When you want to crop the picture quickly but do not want to think, ugly solutions like this are born

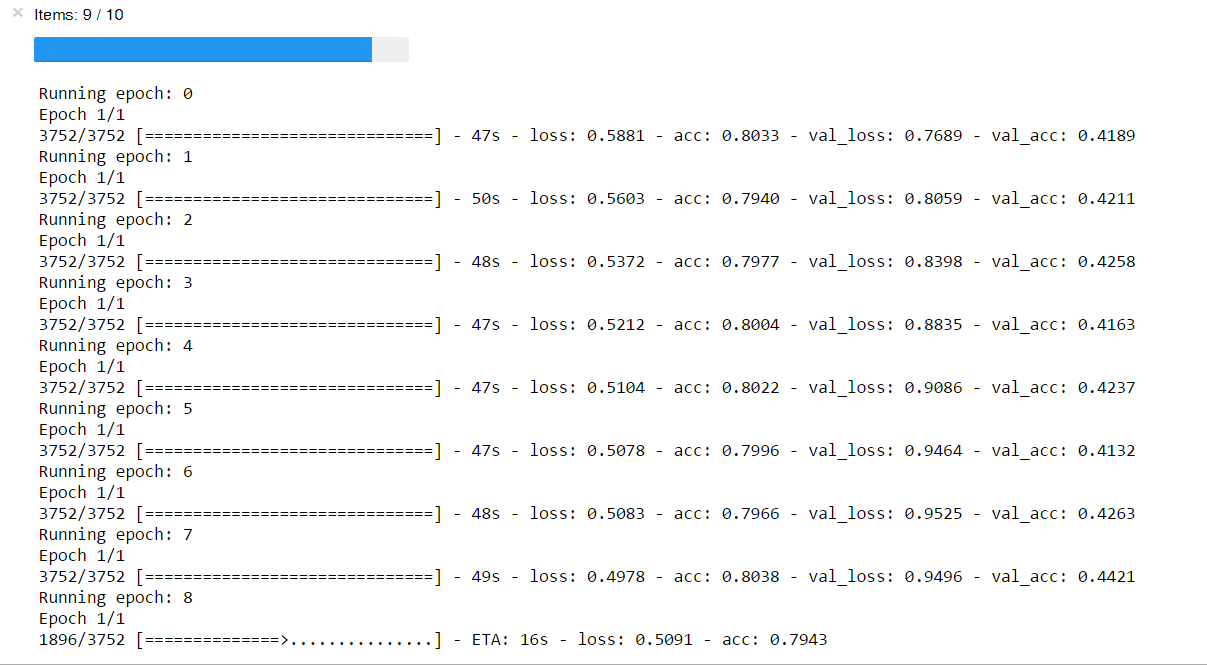

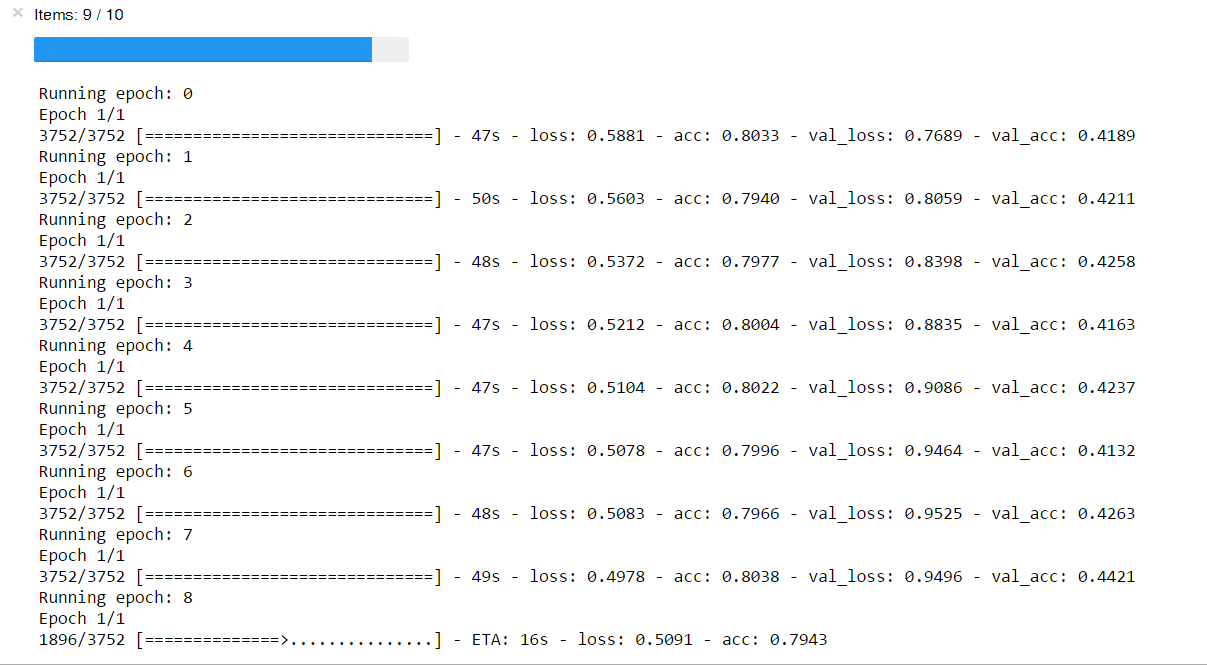

The gap between training and validation hints that the dataset is skewed, small and generally not great. But what a beautiful progress bar.

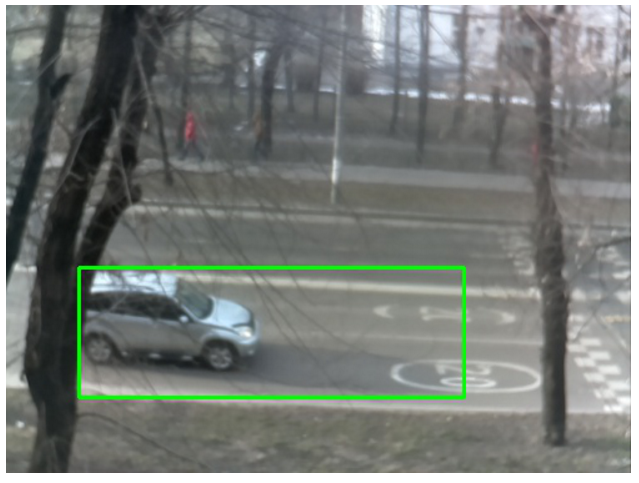

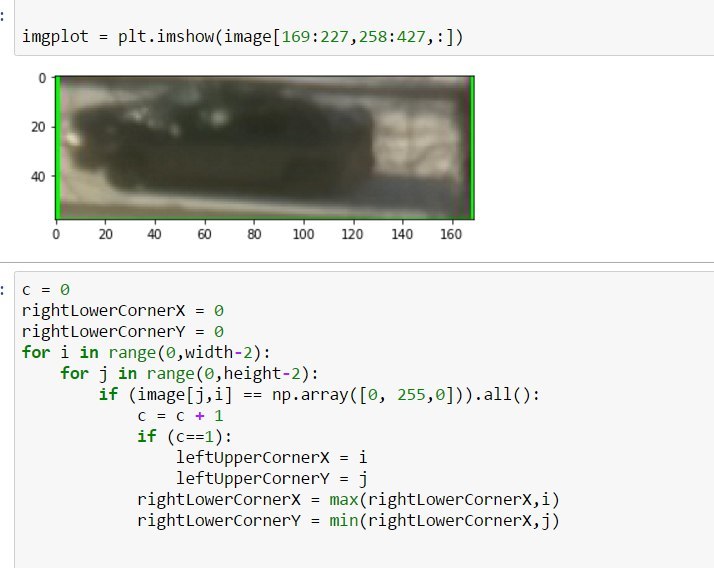

Not chickens, but the heroes of the occasion. Very blurry because they are in motion

Boundless joy when the quick-and-dirty code that took an hour to write finally started working and cropping the car.
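The article does this cropping in OpenCV, but the code itself is not shown. As a rough, hypothetical sketch of the same idea, the NumPy-only version below compares two grayscale frames, thresholds the per-pixel difference and crops the bounding box of everything that changed. In OpenCV the same steps are usually done with `cv2.absdiff`, `cv2.threshold` and `cv2.boundingRect`; the threshold and margin values here are arbitrary assumptions.

```python
import numpy as np


def motion_bbox(prev_frame, frame, threshold=25, margin=10):
    """Return (top, bottom, left, right) of the area that changed between two
    grayscale uint8 frames, padded by `margin` pixels, or None if no pixel
    changed by more than `threshold`."""
    # Cast to a signed type first so the subtraction cannot wrap around in uint8.
    diff = np.abs(frame.astype(np.int16) - prev_frame.astype(np.int16))
    mask = diff > threshold
    if not mask.any():
        return None
    # Indices of rows and columns that contain at least one changed pixel.
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    height, width = frame.shape
    top = max(int(rows[0]) - margin, 0)
    bottom = min(int(rows[-1]) + margin + 1, height)
    left = max(int(cols[0]) - margin, 0)
    right = min(int(cols[-1]) + margin + 1, width)
    return top, bottom, left, right


def crop_motion(prev_frame, frame, **kwargs):
    """Crop `frame` to the moving region, or return None if nothing moved."""
    box = motion_bbox(prev_frame, frame, **kwargs)
    if box is None:
        return None
    top, bottom, left, right = box
    return frame[top:bottom, left:right]


if __name__ == "__main__":
    # Synthetic example: a bright 20x30 "car" appears on a dark background.
    prev = np.zeros((120, 160), dtype=np.uint8)
    cur = prev.copy()
    cur[40:60, 70:100] = 200
    print(motion_bbox(prev, cur))        # (30, 70, 60, 110)
    print(crop_motion(prev, cur).shape)  # (40, 50)
```

Plain frame differencing reacts to any change, including lighting flicker and camera noise, so for a real hen house it would likely need smoothing or a background model on top.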

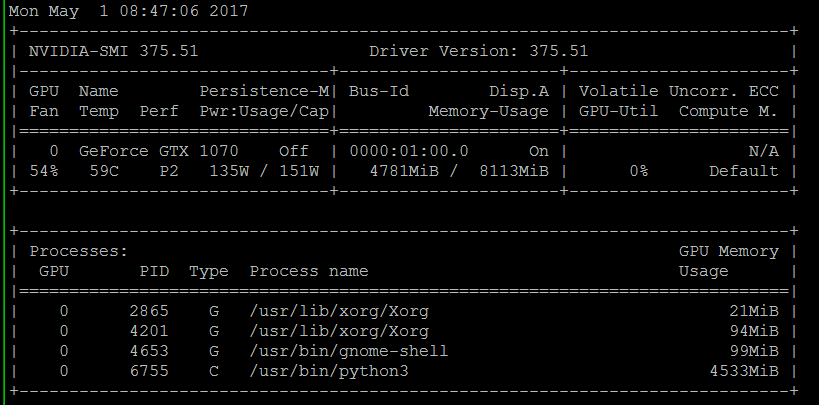

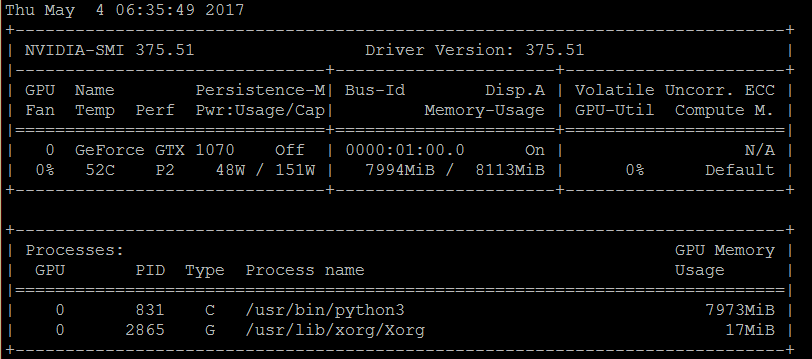

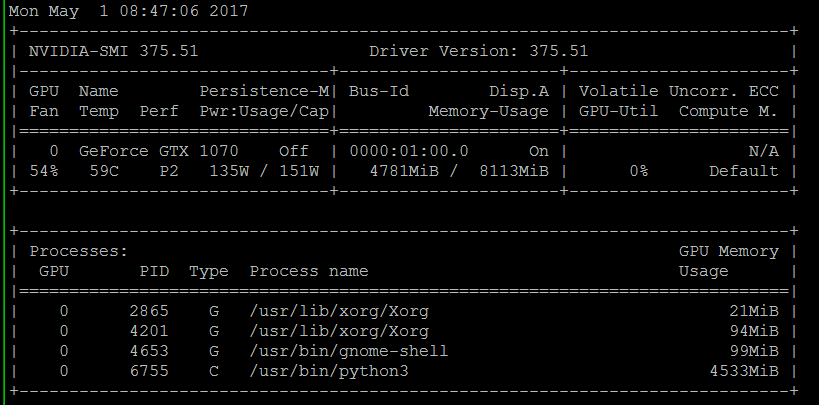

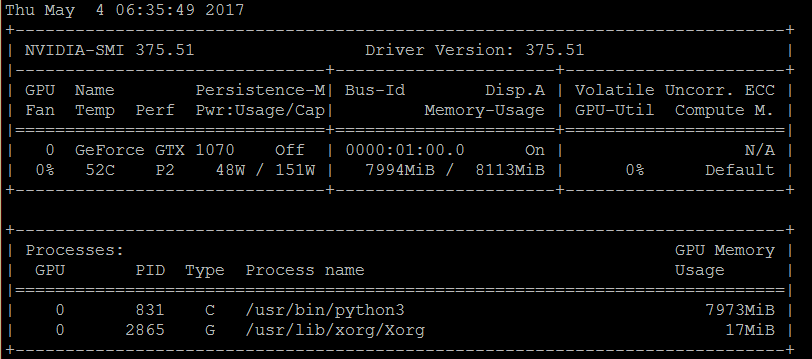

In practice, video card memory is either more than enough or immediately not enough. It is hard to pick an image size and a batch size that let you have your cake and eat it too...

Rule of thumb: convolutional layers need a lot of memory, and dense layers need a lot of time.

Compare the useful area in this picture...

And how many useful pixels there are =) Yes, the chicken's head is bigger than the car (in pixels), so my video card will suffer

Miscalculated: the memory ran out and everything crashed. I have not yet found a way to free it other than restarting
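A rough way to reason about the image-size versus batch-size trade-off is to count how much memory one convolutional layer's output takes: batch × height × width × filters × 4 bytes in float32. The sketch below assumes stride 1 and "same" padding, and the sizes (224×224 images, 64 filters, batch 32, a 2 GiB budget) are illustrative. It deliberately ignores weights, gradients, optimizer state and the outputs of all other layers, so real usage on the video card will be several times higher.

```python
# Rough activation-memory estimate for ONE convolutional layer's output,
# assuming stride 1, 'same' padding and float32 values (4 bytes each).

def conv_activation_bytes(batch_size, height, width, filters, bytes_per_value=4):
    """Bytes needed to store one conv layer's output feature maps for a batch."""
    return batch_size * height * width * filters * bytes_per_value


def max_batch_size(budget_bytes, height, width, filters, bytes_per_value=4):
    """Largest batch whose feature maps for this single layer fit in budget_bytes."""
    per_image = conv_activation_bytes(1, height, width, filters, bytes_per_value)
    return budget_bytes // per_image


if __name__ == "__main__":
    mib = 1024 ** 2
    for side in (224, 112):
        size = conv_activation_bytes(32, side, side, 64)
        print(f"{side}x{side}, batch 32, 64 filters: {size / mib:.0f} MiB")
    # 224x224 -> 392 MiB, 112x112 -> 98 MiB
    print(max_batch_size(2 * 1024 ** 3, 224, 224, 64))  # 167 with a 2 GiB budget
```

Memory grows linearly with the batch size and quadratically with the image side, which is why halving the side of the pictures frees as much memory as dividing the batch by four.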


Source: https://habr.com/ru/post/328138/
