The first decade of augmented reality

Every truly revolutionary technology goes through four stages of development: an idea, a demo, a successful product, and a mass-market product. Multitouch devices are a clear example. Let's look back over the past years, see where augmented reality stands today, and imagine what it could become as a truly mass-market product.

In February 2006, Jeff Han showed a demo of an experimental "multi-touch" interface in his TED talk (video below). Today much of that presentation seems trivial: any $50 Android smartphone can do the same things. Back then, the audience, mostly sophisticated techies, was amazed and applauded. What is commonplace today was astonishing then. A year later the first iPhone appeared, and the industry was "reset to zero" around multitouch.

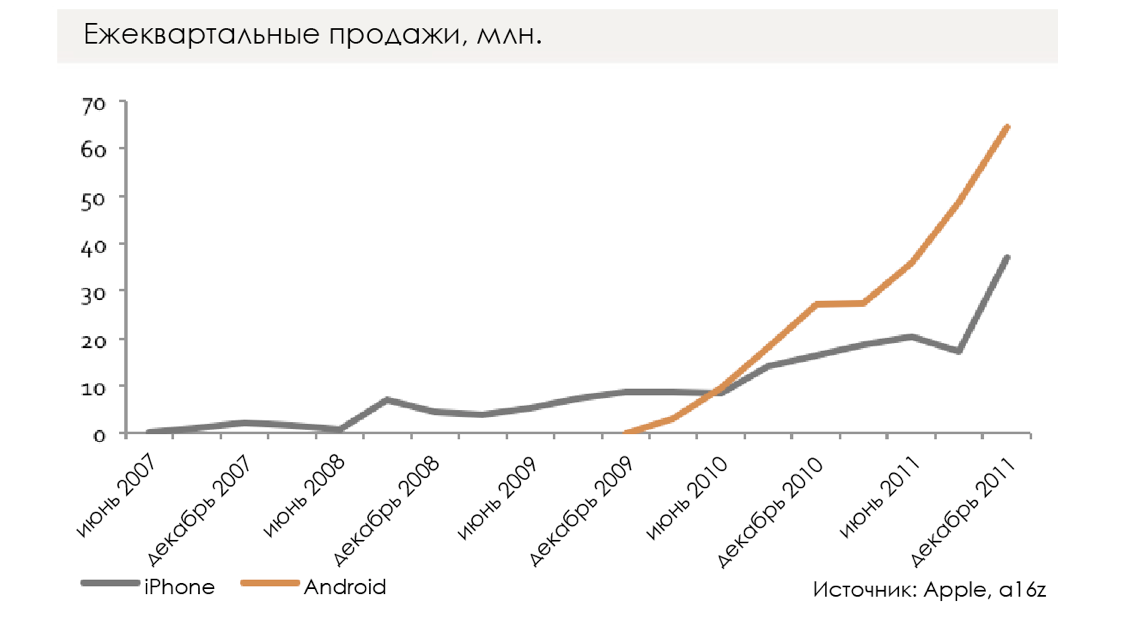

If you look at the events of the previous decade, multitouch went through four stages. At first it was an interesting idea in research laboratories. Then came the first public demos. The third stage was the first truly useful implementation of multitouch, in the iPhone. Finally, a few years later, as the iPhone and Android smartphones matured, multitouch devices began to sell in huge volumes. This delay is visible in the graph below: several years passed after 2007, even with the iPhone getting cheaper, before smartphones became a truly mass-market product. Many revolutionary technologies go through similar stages. It is rare for anything to arrive in finished form right away. Meanwhile, dead-end branches of the technology were developing too: Symbian in the West and iMode in Japan.

I think augmented reality today is somewhere between the second and third stages. We have already seen great demos and the first prototypes. We do not yet have mass-market commercial products, but we are getting close.

Microsoft is shipping HoloLens. The device tracks its position in space well and has its own onboard processor. However, it is rather bulky, has a very narrow field of view (much narrower than in the videos Microsoft distributes) and costs $3,000. A second version can probably be expected in 2019. It looks very much as though Apple is working on something similar, judging by its job postings and acquisitions and by its CEO's comments (I suspect that the work on miniaturization, power consumption, radios and so on for the Apple Watch and AirPods is related as well). Google will probably release something too, as may Facebook or Amazon. A number of smaller companies and startups are also building interesting projects.

Meanwhile, Magic Leap (in which a16z has invested) is working on its own wearable technology. It has released a number of videos demonstrating what the prototypes can do. But watching a video about a gadget is one thing, and using it is quite another. There is a huge difference between an AR video and actually wearing such a device, when objects that do not exist appear before your eyes. I have tried it, and it is very cool.

All this means that if we are at the "Jeff Han" stage today, we can soon expect augmented reality's equivalent of the first iPhone. And then, in ten years or so, we may get a truly mass-market product. What might it look like, augmented reality for billions of people?

The first step towards AR was Google Glass. Its screen "hangs" an image in the space in front of you, but the image does not interact with the real world. In essence, Google Glass was conceptually very close to a smartwatch; you just had to look up and to the right rather than down and to the left. The glasses provided a screen for displaying data, but they knew nothing about your surroundings. A more advanced technology will probably display information on a virtual spherical screen around the user, where you can place windows, 3D objects or anything else.

With the arrival of "true augmented reality", or "mixed reality", devices will be aware of the objects around you and will be able to place virtual objects among them so convincingly that we cannot be sure whether what we are seeing is real. Unlike Google Glass, future AR glasses will build three-dimensional maps of the surrounding space and constantly track the position of your head. That will let you put a "virtual TV" on the wall, where it will keep "hanging" for as long as you are in the room. Or turn the entire wall into a display. Or you could put Minecraft (or Populous) on the coffee table and build a world with your hands, creating mountains and rivers as if sculpting with clay. For that matter, anyone wearing the same glasses can see the same thing. You could turn a wall or a table in a meeting room into a display for the whole team, or for a family. Or you could take a little virtual robot, hide it behind the sofa, and let your children look for it. Of course, all this overlaps with virtual reality, especially once external cameras are added. Mixed reality will turn the whole world into a screen.

So far this has only been about SLAM, that is, building a three-dimensional map of the room, not about recognition. You can go further. Suppose I meet you at a meetup and see your VKontakte profile card above your head. Or a note from the CRM system saying that you are a key customer. Or a note from the Truecaller database saying that you are going to try to sell me insurance and that I should walk away. Or, as in the series Black Mirror, you could simply "block" specific people by replacing them with flat black silhouettes. In other words, the glasses do not just scan objects, they recognize them. This is where reality is truly augmented. You do not just superimpose images on real objects; you do it meaningfully, making them part of the real world. On the one hand, this can enrich the world, or litter it, depending on how you look at it.

On the other hand, you can create very unobtrusive prompts and changes in the world around you. When traveling, for example, you could not just translate every sign into your native language but also choose the style in which it is rendered. If today you can install a browser plugin that replaces "millennial" with "snake people", what will future mixed reality technology be capable of?

If the glasses are compact enough, will you wear them all day? If not, many applications are lost. You could, for example, combine a smartphone or a watch, acting as the "always on" device, with glasses that you put on when you want to "view" content properly. This would help with some of the social problems that Google Glass owners ran into: taking out a phone, looking at a watch or putting on ordinary glasses is a clear and understandable signal to others, whereas when you wear Google Glass in a bar, it is not at all obvious to people that you are not staring at the wall but reading something.

All this leads to a logical question: will augmented and virtual reality merge? It is certainly possible. The two technologies do related things and require solving related engineering problems. For VR, one of those problems is how to transport the user into another world. In theory you should see nothing but the displays, so the glasses must be closed at the edges. AR does not need that. The difficulty with AR lies instead in showing the surrounding world while cutting out the "unnecessary" parts (a separate question is how to deal with bright sunlight). AR glasses are transparent, so other people can see the wearer's eyes. In 10 to 20 years most of the problems of combining AR and VR will probably be solved, but today the two look like separately developing fields. In the late 1990s we argued about whether mobile Internet devices would have a separate radio module and display, plus headphones, plus a keyboard, or whether they would be a clamshell with a screen and a keyboard. The industry was searching for the right form factor, and it had to wait until 2007 (and even longer) before we arrived at a device whose display shows the keyboard. Perhaps the ideal form factor for combining VR and AR has not been found yet either.

Another question is how you will control and interact with virtual objects. Are physical controllers enough for VR? Is hand tracking sufficient (without modeling the movement of the fingers)? Multitouch on smartphones implies physical interaction: we touch the objects that interest us. But can we simulate "touching" AR objects? Is that really a good interaction model for an everyday interface? Magic Leap creates a sense of depth, so you believe that you can touch virtual objects. But do you want to use an interface in which your hands pass through things that should be solid? Should we use voice control, and what limits would that impose (imagine controlling your phone or computer entirely by voice, even with perfect speech recognition)? Or is eye tracking the answer? For example, look at an object and blink twice to select it? Questions of this kind were worked out during the development of personal computers and smartphones, alongside the search for suitable form factors. The answers are not obvious, and many questions remain.

The more you think about embedding AR objects and data into the world around you, the more it seems that this calls for a combination of AI and a physical interface. When I walk up to you, for example, what should I see: your card from VKontakte or from Facebook? When should I see a new incoming message: immediately or later? Standing in front of a restaurant, should I say "Hey, Foursquare, is this a good place?", or should the operating system do it for me automatically? And who will control this behavior: the OS, services that I have added, or a single "Google Brain" in the cloud? Google, Apple, Microsoft and Magic Leap may have different views on this, but I believe that a great deal needs to be automated (using AI). If you recall Eric Raymond's words that a computer should never ask you for something that it can work out for itself, then a computer that sees everything you see should understand what you are looking at. And after ten more years of AI development, we may well be able to solve many of the problems that today still seem to require manual work. When we moved from the windows, keyboard and mouse model of the desktop interface to touch and direct interaction with objects on smartphones, a number of questions disappeared. The level of abstraction simply changed. Smartphones do not ask us where to save photos, or where we are when we order a taxi, or which application to use to send an email, or what our password is (if the smartphone has a fingerprint scanner). The questions are gone (and so is the choice). AR may well take a step in the same direction, because it will be something more than applications appearing in small windows in the air in front of you. Just as Snapchat works nothing like the desktop Facebook site, an intangible AR interface driven by AI could change our ideas again.

The more AR glasses try to understand the world around you (and you yourself), the more they will observe, and the more information about what they see they will send to a myriad of cloud services, depending on the context, the use case and the application model. Is that a person's face, and are you talking to them? Send it (or a compressed abstraction of it; yes, network bandwidth will matter a great deal here) to Salesforce, LinkedIn, TrueCaller, Facebook and Tinder. Is that a pair of shoes? Pinterest, Ozone and Net a Porter. Or just send everything to Google. These and many other situations raise privacy and security issues. It is like the self-driving cars that will one day fill our streets: they constantly record the space around them, which makes them a paradise for surveillance. And what happens when everyone starts wearing AR glasses? Will it be possible to escape it at all? And what if your glasses are hacked? If your smart home is hacked, you get poltergeists; if your glasses are hacked, you get visual hallucinations.

Finally, another important question: how many people will wear AR glasses? Will they be an accessory for mobile phones (like a smartwatch, for example)? Or will you be able to buy a dozen models of $50 Chinese AR glasses in every Brazilian or Indonesian backwater, as you can with Android smartphones today? (And what will happen to the mobile Internet?) It is still too early to predict. In the late 1990s and early 2000s we wondered whether everyone would have the same kind of mobile device, or whether some people would have what we now call smartphones while most would have traditional push-button cell phones, even primitive devices without a camera or a color screen. Looking back, you realize that these arguments were like asking "will everyone have a personal computer, or will some people use typewriters (word processors)?" The logic of scale and of general-purpose computing meant that first the PC and then the smartphone became the single universal device. Today there are about 5 billion mobile phone owners on earth, of whom 2.5 to 3 billion own smartphones, and it is obvious that the rest will follow. So the question is: will most people use smartphones while some (100 million? 500 million? 1 billion?) add AR glasses as an accessory, or will a new universal product appear? Any answer to this question is a flight of fancy, not a product of analysis. But then, in 1995, so was saying that everyone would have a phone.

Source: https://habr.com/ru/post/327804/