You cannot just take a communication channel and make it wider.

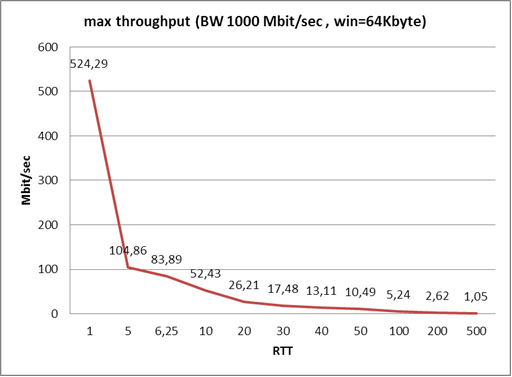

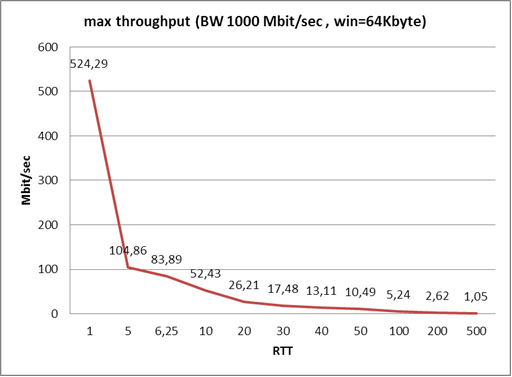

This graph shows how network latency limits the maximum speed of TCP. Simply put, with a ping of 500 milliseconds, traffic between two hosts will not go faster than about one megabit per second, whether the available bandwidth is 6, 10, 100 or 500 megabits per second.
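The arithmetic behind the graph is easy to check. Below is a minimal sketch that assumes the classic 65,535-byte window discussed below. A window-based sender can have at most one window of unacknowledged data in flight per round trip, so its rate is capped at window / RTT no matter how fast the link is. The function name is illustrative.

```python
def max_tcp_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound for a single window-limited TCP flow: one window per round trip."""
    rtt_s = rtt_ms / 1000.0
    return window_bytes * 8 / rtt_s / 1_000_000


if __name__ == "__main__":
    ceiling = max_tcp_throughput_mbps(65_535, 500)  # 64 KB window, 500 ms ping
    for link_mbps in (6, 10, 100, 500):
        # The link speed only matters if it is below the window/RTT ceiling.
        achievable = min(link_mbps, ceiling)
        print(f"{link_mbps:>3} Mbit/s link -> {achievable:.2f} Mbit/s")
```

Every line prints 1.05 Mbit/s. The extra bandwidth simply goes unused.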

My team is engaged in the optimization of communication channels. Sometimes it is possible to fix everything literally with a couple of clicks manually, but more often you need to install special devices that significantly squeeze the exchange and turn the protocols into more “optimistic” or “predictive” ones.

What is the “optimistic” protocol? Very roughly - this is when the remote server has not yet responded that the next frame can be sent, and the piece of iron already says “send”, because it knows that the success rate is 97%. If suddenly something goes wrong, she herself will send the desired package, without disturbing the sending server.

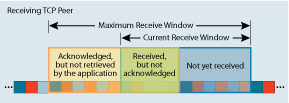

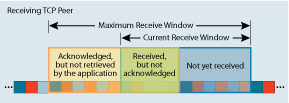

There is such a thing as RWIN (https://en.wikipedia.org/wiki/TCP_tuning). This is the size of the window.

Previously, when the transistors were large (this year 2004–2005), the limit was 64K. Accordingly, the speed, and delays, and applications were different. And there were no problems at this stage. They began to emerge when networks became faster and applications are more voracious (in the sense of becoming more traffic). Companies needed to expand the band so that everything would function quickly. However, everything worked well only up to certain points that can be caught on the chart below. Moreover, modern operating systems in corporate networks have learned how to deal with the so-called “ceiling” problem automatically and transparently for users. But after all, there are old OSs, as well as whole zoos of various OSs and stacks ...

')

Initially, TCP Receive Window is limited to 64K (WinXP times). In modern versions of the protocol, large frame sizes are supported (and this is often necessary), but the exchange begins with small ones.

There is such a thing as "TCP saw." So this is the following: when data is transmitted in TCP every time several frames are sent, the sending side increases the number of frames transmitted at one time. The diagram shows that up to a certain point we can increase this number, but once we still rest on the ceiling and losses begin.

When losses occur, RWIN will be automatically reduced by half. So, if a user has downloaded a file, you can see that he seems to be accelerating, but then falls. This behavior should be familiar to many.

A very good effect for the network with old routers and a large number of wireless hops can be achieved by changing the window size: you should try to get the maximum unscaled RWIN (up to 65535 bytes) and use the minimum scale factor (RFC 1323). New Windows choose the frame size themselves, and do it well.

So far everything is simple: very simplifying, we transmit 64K, then we wait for the answer, then we transmit 64K. If the answer comes from an oil-producing complex through half-planets and a geostationary orbit, guess what the effective speed will be. In fact, everything is a little more complicated, but the problem is the same. Therefore, we need more packages.

Working with RWIN is the simplest thing that can be done right now on the satellite channel (one setting solves a lot of problems). Example: if your packages are flying to the satellite, then back to earth, waiting for the server to respond, then flying to the satellite and back to you. For a geostationary satellite and receiving stations normal to it, this means a delay of 500 ms. If the jump is longer (the stations form a triangle), you can get a couple of seconds. In my practice, there were cases with repeaters sending information again via satellite (another one, which does not see the first teleport behind the planet at the point of departure) - there you can catch 3-4 seconds, but this is a separate kind of communication hell.

Now the most fun. For protocols working with frame windows, the maximum bandwidth is determined by the channel width and delay. Accordingly, the optimal packet size (or package assemblies in one frame, if intermediate optimization is used) should be the larger, the wider the channel is to maximize its capabilities. The application value is simpler: you need to know the RWIN / BDP ratio (Bandwidth Delay Product) - an integral indicator of the transmission delay. Here it can be calculated for the package unscaled 64k.

The next logical step is to determine the optimal TCP Receive Window - here’s a test, look .

Determining the maximum segment size (MSS) and setting it later in the exchange settings greatly speeds up the process. More precisely, it brings it to the theoretical maximum for your delay. Win7 and 2008 Server can do the following: start with a very small window (about 17520 bytes), then increase it automatically if the infrastructure between the nodes allows. Then the auto-tuning of RWIN is turned on, but it is rather limited. In Win10, the auto-tuning border is slightly extended.

You can manually control the RWIN, and this can change the situation on slow networks. At least a couple of times we saved the customer from buying expensive equipment. If the replication window is 24 hours, and it turns out only for 26, then it is quite possible that the channel setting and tuning of own compression of different nodes will allow dropping to 18-19, which is enough. With the optimizer, you can up to 6–7 with a chatty exchange, but sometimes the problem is already solved. Or is postponed for a couple of years.

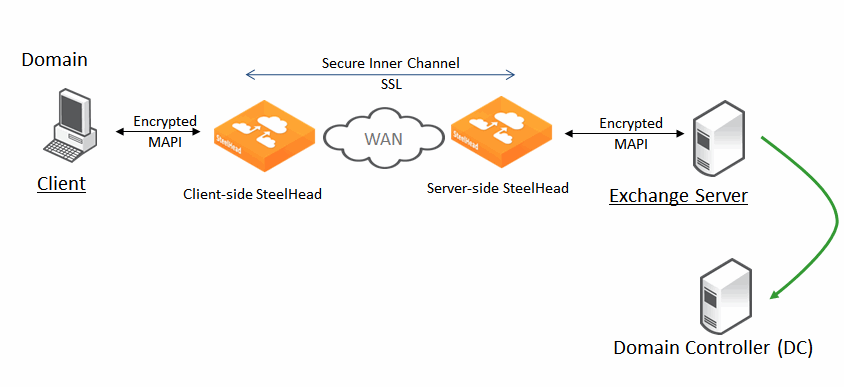

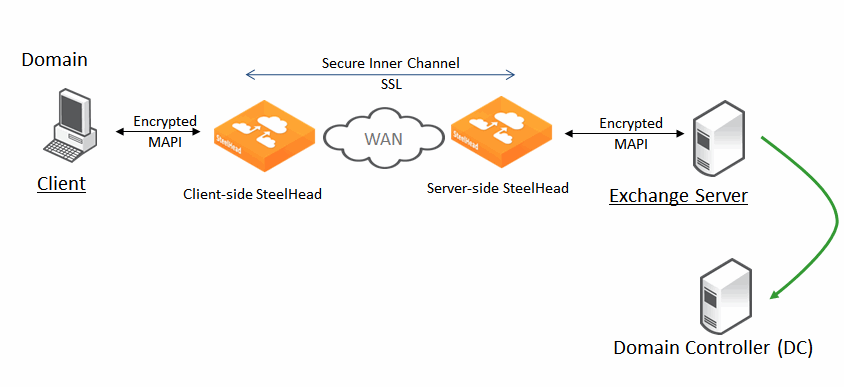

Optimizers (we’re talking about the Riverbed Steelhead family - I’ve already installed hundreds of them at various mineral deposits, and therefore I’ll rely on practice) - well, they can “save” TCP from its inherent flaws in the frame protocol by broadcasting it into its transport protocol. If the exchange is between two Riverbad nodes, the translation looks like this:

Now let's take a look at how these cute devices work with optimization levels higher. Steelhead generally has 3 optimization techniques: data streamlining (deduplication), transport streamlining (transport optimization, discussed above) and application streamlining (this below).

HTTP is a good old protocol for working with sites. Old is not the right word, and there is something to optimize. For example, to open additional connections at the moment when it becomes clear what other objects to load. Modern browsers can do this out of the box:

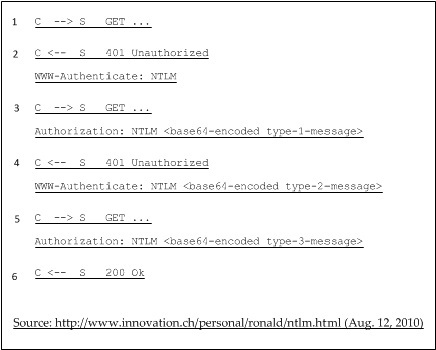

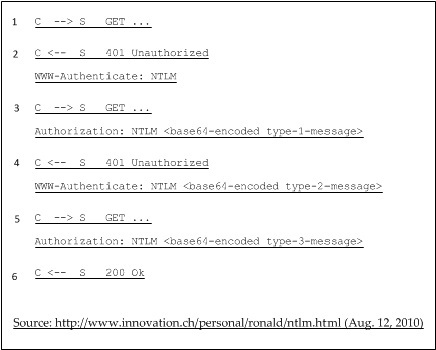

It has very dreary authentication:

Which in an optimistic case, you can squeeze up to two packages - you just need to ask the sending node all at once, then give the Riverbird at the receiving node, and he will already "talk" with whom. Very cool for corporate portals.

With several open connections, there are re-authorization problems with the so-called connection jumping, when one of the connections gives 401 and some of the handshake requests fall into the first, already authenticated.

The main types of optimization are:

It is possible to optimize HTTPS and SSL applications, including non-standard ports. In our practice, this happened more than once. In modern versions of the software for Steelhead, you can optimize Internet traffic if users constantly go to some secure portals (it’s not practical to drive all Internet traffic into optimization), including if a proxy server is used to access the Internet.

There is an Office365 optimization. You can also work with a corporate proxy.

CIFS / SMB is a 1990 protocol for sharing files between network nodes. Used also for network printing. Riverbad listens on both of its standard TCP ports (445 and 139). Exact optimization methods are not disclosed, but we are talking about assembling packages into optimal for the channel size, unnecessary blocking on a fast node, maintaining frequently occurring sessions (keeping them open until actual need), shifting part of the protection onto a pair of Steelhead devices instead of their own load on the nodes . In Win10 and Server16 for SMB 3.1.1, there is a pre-authentication integrity mechanism - some of its counterpart is implemented on older versions of the Steelhead protocol. Similar methods are used for other file protocols. Devices support all SMB versions up to 3.1.1, including Signed-SMB.

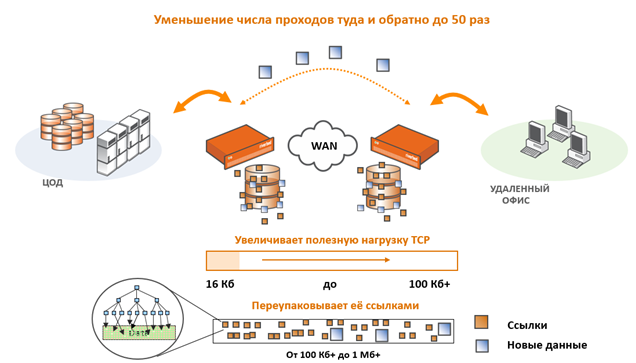

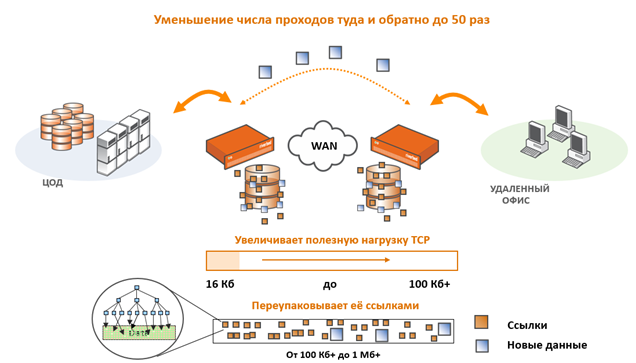

Here is described how compression occurs and the TCP payload increases. Very relevant for file transfer.

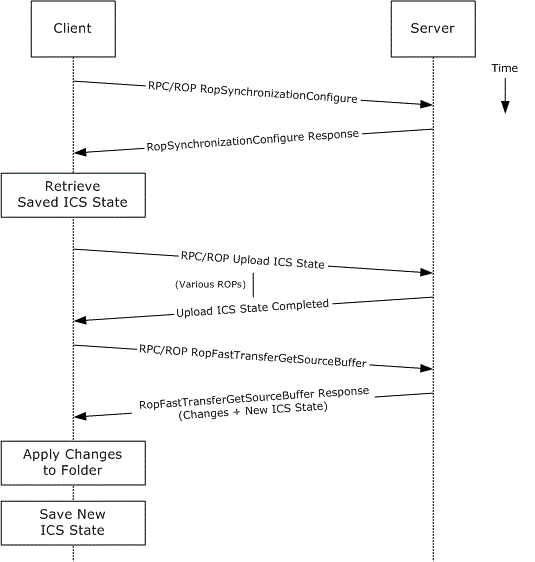

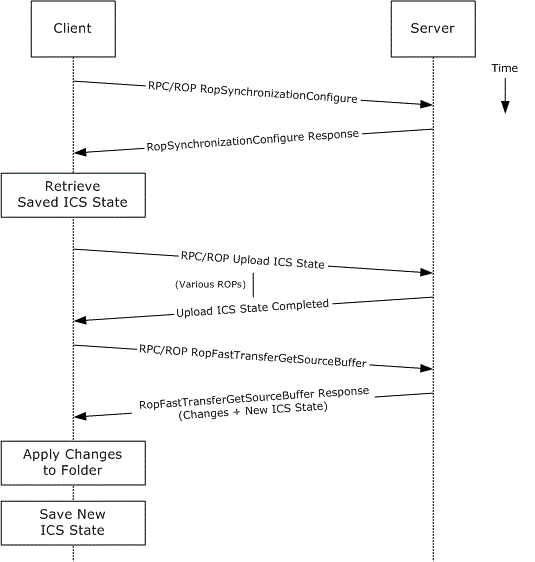

MAPI (over HTTP, Signed-MAPI and Office365) - mail exchange between Outlook clients and Exchange servers. He has quite a long handshake procedure:

Optimization is achieved by keeping this session open (Riverbad intercepts it and holds 96 hours), efficiently exchanging confirmations, streaming mail and attachments (actually caching what the client has not yet begun to receive in fact).

Traffic itself is well compressed / deduplicated and gets rid of redundancy:

In cluster installations, the port predetermination method also helps a lot.

I had a case where an HR specialist on holidays dropped the entire network of a very distant bank office. The investigation revealed that his elderly colleague had sent a BMP card under 10 megabytes of mail. Steelhead "understood" that at 10 am the staff opened the mail, and began collecting mail at night for himself. And at the same time compress these damn postcards, actually providing deduplication from 10 letters to one and compression of this reference sample. Previously, people came at 10, and they began to work at 10:30, as everything “crawls through”. Now there is no reason for sadness, the start of work is strictly on schedule.

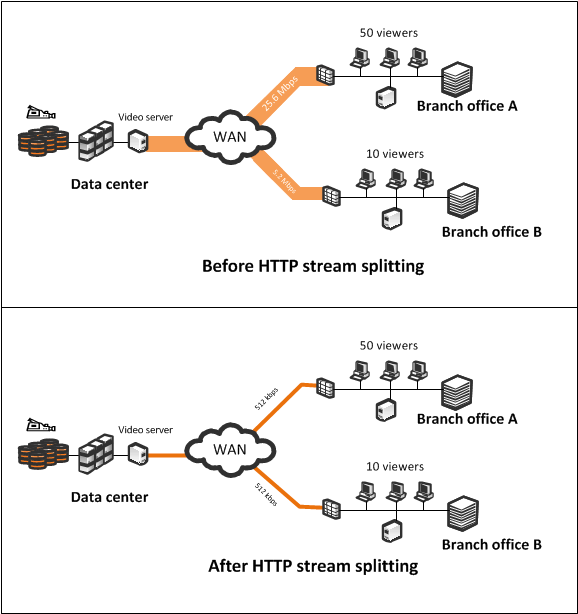

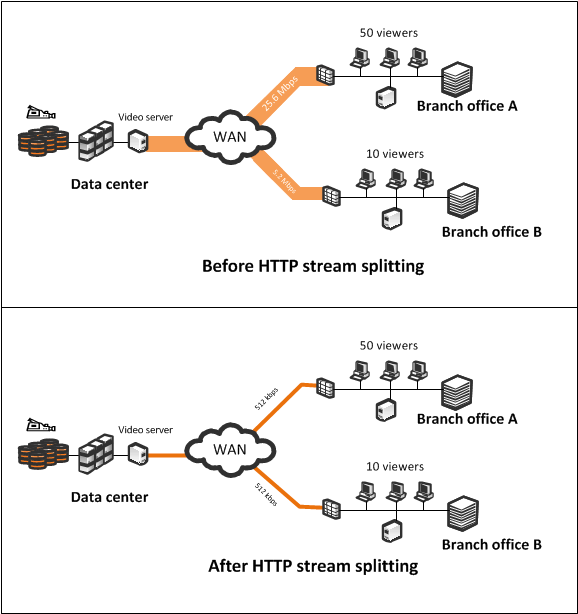

Banks use many distance learning systems, it is very convenient for employees. At remote branches, almost the entire band is clogged with training videos. Riverbed with http-stream can do just such a thing, as in the picture. As a result, channels do not “suffer” from such traffic. But this is a predictive mechanics, real-time video (video conferencing, for example) can only be prioritized.

Speech about HTTP-stream, but not RTP / RSTP

The next mechanic is Prepopulation. If we know in advance, there is an office, and it has network drives, and in doing so, marketing works with its one folder, and optimization and control of the network - with its own. If we also know that in some branch there are certain departments that use specific network folders, then we can teach Riverbed to walk on them independently (tell them these ways). He will understand what files lie there, how quickly they are requested. Thus, many sessions will not open, Riverbed itself “pretend” to the client and descends on network balls, collects everything that is there, and the most frequently used content will take over. Total: the next day, the employee comes to the office, and he can already use without delay access to the far side. Accordingly, will receive updated information.

Other protocols are sorted out using a low-level protocol optimization scheme (most often talking about frame size and setting the optimal packet lifetime for the channel) - even if the channel needs large frames, and the protocol wants to send small ones, Steelheads can sort through all this into their format, deduplicate and send as it should. Then the stream is deduplicated, compressed, cached, predicted. The "chatty" sequences are identified and translated into optimistic responses.

There is also a connection pooling - when Steelhead keeps 20 unnecessary sessions already open for current needs. This is very relevant on the satellite.

There is still a lot of people working on the standard 64K window - and this is a big hit on the exchange. For example, between data centers in Khabarovsk and Moscow on a 100 Mbit / s channel with a delay of 80 ms, the replication speed on standard media is 5 megabits / sec. Surprise! It is necessary to change the size of the window and then do the rest. Again, the same Office365 has the nearest Azure-Datacenter in Amsterdam and Dublin. With the help of a ruler and a calculator on the link above, you can estimate the delay. More traffic from the same Khabarovsk goes through Moscow (although closer through Asian Azure-DPC).

And then there is a loss. On the satellite - it's 5% of the weather easily. Some packages are stuck in the fog, some do not pass through the snowflakes, and a large and thick package can enrich the information of a pigeon. Or a crow that has flown in to peck film from a transponder (although, fortunately, on large teleports they quickly boil and slide off from the mirror). By the way, SCPS support helps.

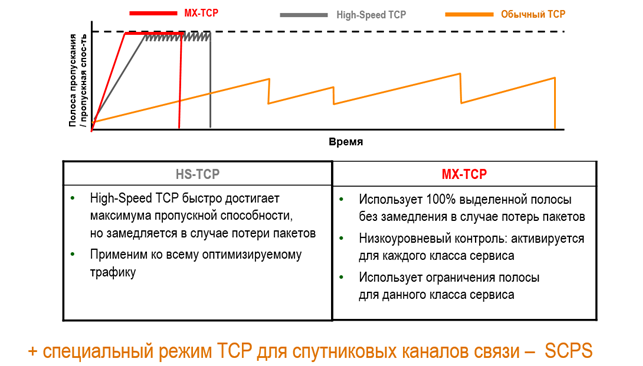

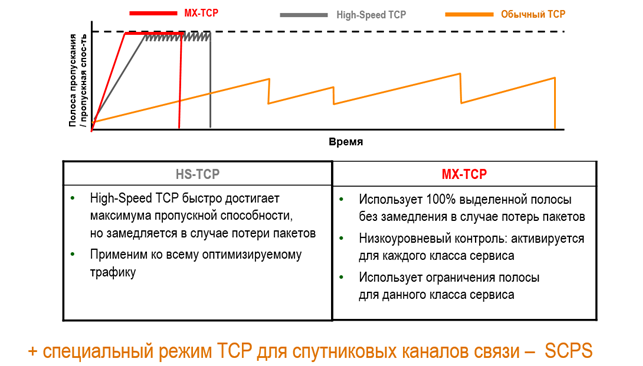

In addition to manipulating frame sizes, Riverbed SteelHead is able to use optimal congestion avoidance control mechanisms. From NewReno to specific MX-TCP and HSTCP. The latter help to avoid problems with high BDP and TCP slow start problem, that is, knowing the width of the channel, we can give a certain traffic all the way at once.

With the release of new software, new features appear. Starting from version 9.0, a full-fledged DPI appeared and you can classify traffic by application. The built-in capabilities of Steelheads for prioritizing and parsing traffic are still good to use for defining network policies. For example, in oil reservoirs, the following mechanics are often used: first, technical traffic, then video packages of video conferencing manuals, then office, then personal traffic of employees. Now, driller Vasya, who is pumping a new 20-megabyte gif with a cat, will not interrupt the traffic to the management.

Or there was an object near Lensk. There are very rugged admins, they squeezed everything they could from the channel. We read our past posts, asked for a piece of iron for a test. The main problem is not the fastest RDP-like compounds, people are furious. And this is their main way to access business applications. During the high channel load (and it is also unbalanced), business application users began to experience problems accessing RDP. Optimization they already had built-in satellite modems, but only at the transport level, so they were looking for another solution. They put the river - the hair became soft and silky, the load subsided 3 times. Then they came across a crutch of a satellite modem, - when the integrated optimization was turned off by default, the TCP queue length was set to be extremely small. This greatly harmed Riverbeds. Also picked up the optimal parameters. As a result, RPD-like things work stably, files swing faster - people sit and do something around the clock with watches. The situation is the same as that of the driller Vasya: he pumps 20 MB of hyphae with a cat faster, does not harm anyone, and everyone is happy.

Here is another example. In the open waters there are large vessels - factories on trawlers. And there

freshly caught fish immediately rolled into canned food. Production there is controlled through a network, and data is exchanged as part of the process.

But just double satellite jumps or unpleasant satellite-2G-optics transitions. They put optimizers - there came all the happiness. And companies in terms of controlling business processes, and employees in terms of improving working conditions, since they have the opportunity to communicate with families, share photos / videos.

A set of Steelheads mainly take in the following cases:

In fact, there are many more scenarios, but most often they are all born as a result of one problem - the slow work of users with business applications. I listed the most common.

Magic Riverbed starts working on 3G channels, satellite channels, 3G radio relay hops, double satellite hops, 2G-4G-hops, as well as on wide 10G channels and with a delay of 2–5 ms. Best of all, it pays off in Russia when using satellite channels, which are not physically more widely done, but necessary.

Previously, the channels cost unreal money, but now they are getting cheaper in most parts of the country. Most often, banks and the mining sector appeal to us.

For many, it is still a revelation that the expansion of communication channels does not lead to an increase in the efficiency of their work, that the delay affects the capacity of the channel and that it cannot endlessly expand the channel. Often, when faced with such a problem, for example, slow replication, slow applications, they begin to expand channels. And most often it does not help. Here, actually, I also wanted to tell about it. I hope it worked out.

My team optimizes communication channels. Sometimes a couple of manual clicks are enough to fix everything. More often, though, you need to install special appliances that compress the traffic considerably and turn the protocols into more “optimistic” or “predictive” ones.

What is an “optimistic” protocol? Very roughly, the remote server has not yet confirmed that the next frame can be sent, but the appliance already says “send”, because it knows the success rate is 97%. If something does go wrong, the appliance retransmits the required packet itself, without bothering the sending server.
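Here is a toy model in Python that makes the idea concrete. Everything in it, including the `OptimisticProxy` class and its methods, is hypothetical. It only illustrates the principle described above and is not Riverbed's implementation:

- the appliance acknowledges a frame to the sender at once;
- it keeps a copy of the frame;
- it retransmits the copy on its own if the far side reports a loss.

The 97% success estimate is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class OptimisticProxy:
    """Toy model of an optimizer that acknowledges frames on the far side's behalf."""

    # Frames acknowledged to the sender but not yet confirmed by the far side.
    unacked: dict[int, bytes] = field(default_factory=dict)

    def accept_from_sender(self, seq: int, payload: bytes) -> str:
        # Keep a copy for possible retransmission, then ACK immediately
        # instead of waiting a full round trip for the real receiver.
        self.unacked[seq] = payload
        return f"ACK {seq}"

    def on_remote_ack(self, seq: int) -> None:
        # The far side confirmed delivery, so the local copy can be dropped.
        self.unacked.pop(seq, None)

    def on_remote_loss(self, seq: int) -> bytes:
        # Resend from the local buffer; the original sender never finds out.
        return self.unacked[seq]


if __name__ == "__main__":
    proxy = OptimisticProxy()
    for seq, frame in enumerate([b"frame-0", b"frame-1", b"frame-2"]):
        print(proxy.accept_from_sender(seq, frame))  # the sender keeps going at once
    proxy.on_remote_ack(0)
    print(proxy.on_remote_loss(1))  # frame 1 was lost: resend it locally
```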

Windows and delays

There is a parameter called RWIN (https://en.wikipedia.org/wiki/TCP_tuning), the TCP receive window size. It is how much data the sender may have in flight before it has to wait for an acknowledgement.

Back when transistors were large (around 2004–2005), the limit was 64 KB. Speeds, delays and applications were different then, and at that stage the limit caused no problems.

Problems appeared when networks became faster and applications hungrier for traffic. Companies had to widen their channels to keep everything running quickly. However, widening helped only up to a certain point, which can be seen on the chart below. Modern operating systems in corporate networks have learned to deal with this so-called “ceiling” problem automatically and transparently to users. But there are still old OSes, as well as whole zoos of different OSes and network stacks...

Originally, the TCP receive window was limited to 64 KB (the Windows XP era). Modern implementations support much larger windows, and these are often necessary, but an exchange still starts with small ones.

There is also the so-called “TCP sawtooth”. It works like this: each time a batch of frames gets through, the sending side increases the number of frames it transmits at once. The diagram shows that this number can grow up to a certain point, but sooner or later it hits the ceiling and losses begin.

When losses occur, the sender automatically halves the amount of data it keeps in flight (its congestion window). So when a user downloads a file, the transfer seems to speed up and then suddenly drops. Many people will recognize this behavior.
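The sawtooth can be reproduced with a minimal simulation. It assumes the simplest textbook additive-increase/multiplicative-decrease rule: add one segment per round trip, and halve on loss. It also assumes a fixed ceiling above which the path drops packets. Real stacks such as NewReno or CUBIC differ in detail, and the function name is illustrative.

```python
def aimd_sawtooth(rounds: int, ceiling: int, start: int = 1) -> list[int]:
    """Congestion window (in segments) at each round trip under simple AIMD.

    Additive increase: +1 segment per round trip while there is no loss.
    Multiplicative decrease: halve the window once it exceeds the ceiling,
    i.e. the point where the path starts dropping packets.
    """
    window = start
    history: list[int] = []
    for _ in range(rounds):
        history.append(window)
        if window > ceiling:
            window = max(1, window // 2)  # loss detected: cut the window in half
        else:
            window += 1                   # no loss: probe for more bandwidth
    return history


if __name__ == "__main__":
    print(aimd_sawtooth(12, ceiling=8, start=5))
    # [5, 6, 7, 8, 9, 4, 5, 6, 7, 8, 9, 4]
```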

On networks with old routers and many wireless hops, changing the window size can have a very good effect. Aim for the largest unscaled RWIN (up to 65,535 bytes) and the smallest window scale factor (RFC 1323). Newer versions of Windows choose the window size themselves, and do it well.
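A small helper shows what "the smallest scale factor" means in practice (the names are illustrative). The window field itself stays 16 bits, and the window scale option shifts it left. So you want the smallest shift that still covers the window you need. With a shift of s, the window is advertised in units of 2^s bytes, so a smaller shift also keeps the advertised value more precise.

```python
MAX_UNSCALED_WINDOW = 65_535  # largest value of the 16-bit TCP window field
MAX_WINDOW_SHIFT = 14         # largest window-scale shift allowed (RFC 1323 / RFC 7323)


def min_window_scale(target_window_bytes: int) -> int:
    """Smallest window-scale shift whose largest window still covers the target."""
    for shift in range(MAX_WINDOW_SHIFT + 1):
        if (MAX_UNSCALED_WINDOW << shift) >= target_window_bytes:
            return shift
    raise ValueError("target exceeds the largest scalable window (about 1 GiB)")


if __name__ == "__main__":
    for target in (65_535, 256_000, 1_000_000):
        print(target, "->", min_window_scale(target))
```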

So far it is simple. Very roughly, we send 64 KB, wait for the acknowledgement, then send the next 64 KB. If the acknowledgement comes from an oil production site halfway around the planet via geostationary orbit, guess what the effective speed will be. In reality it is a bit more complicated, but the problem is the same. So we need more data in flight.

Tuning RWIN is the simplest thing you can do on a satellite channel right away; one setting solves a lot of problems. Here is why. Your packets fly up to the satellite and back down to earth, wait for the server to respond, then fly up to the satellite and back down to you. For a geostationary satellite with stations directly beneath it, this means a delay of about 500 ms. If the hop is longer (the stations form a triangle), you can get a couple of seconds. In my practice there were cases where repeaters relayed the data over a satellite a second time, because one satellite could not see both ends of the path around the curve of the planet. There you can get 3-4 seconds, but that is a special kind of communication hell.
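A back-of-the-envelope check of the 500 ms figure rests on two assumptions. It counts propagation delay only, with no modem, queuing or server time. It also puts the earth stations directly beneath the satellite, which is the shortest possible path.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
GEO_ALTITUDE_KM = 35_786  # geostationary orbit altitude above the equator


def geo_rtt_ms(satellite_hops: int = 1) -> float:
    """Propagation-only round-trip time through geostationary satellite hops.

    Best case: every earth station sits directly under its satellite. Each hop
    is one up-leg and one down-leg, and the reply comes back the same way.
    """
    legs_one_way = 2 * satellite_hops
    distance_km = 2 * legs_one_way * GEO_ALTITUDE_KM
    return distance_km / SPEED_OF_LIGHT_KM_S * 1000


if __name__ == "__main__":
    print(f"single hop: {geo_rtt_ms(1):.0f} ms")  # before any modem or server delay
    print(f"double hop: {geo_rtt_ms(2):.0f} ms")
```

A single hop comes out at about 477 ms of pure physics, so 500 ms in practice is no surprise. Slanted paths and double hops only add to it.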

Now for the fun part. For window-based protocols, the achievable throughput depends on both the channel width and the delay. To use the full capacity of a channel, the amount of data sent per window has to grow as the channel gets wider. If an intermediate optimizer is used, the same applies to the number of packets bundled into one frame.

In practical terms it comes down to one number: the RWIN / BDP ratio. BDP (Bandwidth-Delay Product) is the channel bandwidth multiplied by the round-trip delay, i.e. how much data must be in flight to keep the channel full. Here it can be calculated for an unscaled 64 KB window.
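Here is a minimal sketch of the RWIN/BDP calculation. The function names are illustrative, and 10 Mbit/s and 500 ms are example values rather than figures from a specific deployment.

```python
def bdp_bytes(bandwidth_mbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to keep the link full."""
    return bandwidth_mbps * 1_000_000 / 8 * (rtt_ms / 1000)


def rwin_to_bdp(rwin_bytes: int, bandwidth_mbps: float, rtt_ms: float) -> float:
    """RWIN/BDP ratio: below 1.0 the window, not the link, limits throughput."""
    return rwin_bytes / bdp_bytes(bandwidth_mbps, rtt_ms)


if __name__ == "__main__":
    # Unscaled 64 KB window on a 10 Mbit/s satellite link with a 500 ms round trip.
    ratio = rwin_to_bdp(65_535, 10, 500)
    print(f"BDP = {bdp_bytes(10, 500):,.0f} bytes, RWIN/BDP = {ratio:.2f}")
```

This prints a BDP of 625,000 bytes and a ratio of 0.10. A single flow can use only about a tenth of that link.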

The next logical step is to determine the optimal TCP receive window; here is a test for that.

Determining the maximum segment size (MSS) and then setting it in the connection settings speeds up the exchange considerably. More precisely, it brings the exchange up to the theoretical maximum for your delay.

Windows 7 and Server 2008 start with a very small window (about 17,520 bytes) and increase it automatically if the infrastructure between the nodes allows. RWIN auto-tuning then kicks in, but it is rather limited. In Windows 10 the auto-tuning limit is somewhat higher.
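The sketch below works through two bits of arithmetic behind this paragraph.

1. For a standard 1500-byte Ethernet MTU, the MSS is 1460 bytes once the 20-byte IPv4 and TCP headers (without options) are subtracted. So 17,520 bytes is exactly 12 full segments.
2. Starting small costs round trips. The growth model below assumes the window doubles every round trip, as in classic TCP slow start. Windows' actual receive-window auto-tuning follows its own heuristics.

```python
IPV4_HEADER_BYTES = 20  # without IP options
TCP_HEADER_BYTES = 20   # without TCP options


def mss_for_mtu(mtu: int) -> int:
    """Largest TCP payload per segment for a given IPv4 MTU."""
    return mtu - IPV4_HEADER_BYTES - TCP_HEADER_BYTES


def doubling_rounds(initial_window: int, target_window: int) -> int:
    """Round trips needed to grow a window to the target if it doubles each RTT."""
    if initial_window <= 0:
        raise ValueError("initial_window must be positive")
    rounds, window = 0, initial_window
    while window < target_window:
        window *= 2
        rounds += 1
    return rounds


if __name__ == "__main__":
    mss = mss_for_mtu(1500)
    print(mss, 17_520 // mss)                  # 1460-byte segments, 12 of them
    print(doubling_rounds(17_520, 1_000_000))  # round trips to reach about 1 MB
```

Growing from 17,520 bytes to a 1 MB window takes six doublings. On a 500 ms satellite path that is about three seconds before the window covers the BDP.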

You can control RWIN manually, and on slow networks this can change the situation. At least a couple of times this saved a customer from buying expensive equipment.

Suppose the replication window is 24 hours and replication actually takes 26. It is quite possible that channel tuning plus adjusting the nodes' own compression will bring it down to 18-19 hours, which is enough. With an optimizer you can get down to 6-7 hours for a chatty exchange, but sometimes tuning alone has already solved the problem. Or postponed it for a couple of years.

Now to optimizers. I mean the Riverbed Steelhead family: I have installed hundreds of them at various mineral deposits, so I will rely on practice. They can “rescue” TCP from the flaws inherent in a window-based protocol by translating it into their own transport protocol. If the exchange is between two Riverbed nodes, the translation looks like this:

Now let's look at how these cute devices work at higher optimization levels. Steelhead has three optimization techniques in total:

- data streamlining (deduplication);
- transport streamlining (transport optimization, discussed above);
- application streamlining (discussed below).

HTTP is the good old protocol for websites. “Old” is an understatement, and there is plenty to optimize. One example is opening additional connections as soon as it becomes clear which other objects need to be loaded. Modern browsers can do this out of the box:

Its authentication is very tedious:

In the optimistic case, this can be squeezed down to two packets. The sending node just needs to be asked for everything at once. The whole bundle is then handed to the Riverbed at the receiving node, which carries on the “conversation” with the right party locally. Very handy for corporate portals.

With several open connections, re-authentication problems arise from so-called connection jumping. One of the connections returns 401, and some of the handshake requests land on the first connection, which is already authenticated.

The main types of optimization are:

- Disabling compression on the sending and receiving nodes and bundling packets together. With per-packet compression turned off, repeated packets deduplicate perfectly. In effect, when a packet is similar to one already transmitted, only the number of the closest match and the difference need to be sent. This is much less than two compressed packets.

- Cookie insertion. Some servers need to store technical data that is roughly the same for all users and rarely changes, and this data can be cached. In addition, if your site does not support cookies, the traffic optimizer can add that support.

- Connection keep-alive. Simple: if you don't let a session go, you don't have to log in again.

- URL learning. Users follow the same paths, so the optimizer quickly learns the office's habits. It caches some content, prefetches some of it on request, and so on.

- Request prediction. Suppose a page with an img tag passes through Steelhead. It is logical to assume that a few milliseconds later the receiving side will request the image itself. Riverbed takes the optimistic approach: instead of waiting, it requests the image right away. When your side then sends the request, Riverbed intercepts it and returns what it has already fetched.

- Prefetching, which also works well over sessions that are kept open.

- Forced NTLM, if possible (this is faster).

- Standard methods of getting rid of unnecessary headers.

- “Gratuitous 401”: browsers often make an extra GET that can be avoided.
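The first item in this list, sending a reference instead of repeated data, can be illustrated with a minimal sketch. It assumes fixed-size chunks identified by SHA-256 digests and a chunk store that both sides keep in sync. This is a generic technique swapped in for illustration. The post says Steelhead sends the number of the closest similar packet plus the difference, and its real algorithm is not shown here.

The sketch also hints at why per-packet compression gets in the way. Similar payloads that are compressed separately tend not to share identical byte runs, so there is little left to match.

```python
import hashlib

CHUNK_SIZE = 8  # tiny for the demo; real systems use far larger chunks


def dedup_encode(data: bytes, seen: dict[str, bytes]) -> list[tuple[str, object]]:
    """Replace chunks the peer already has with short references.

    Returns ("ref", digest) for known chunks and ("raw", chunk) for new ones.
    `seen` is the sender's copy of the shared chunk store.
    """
    tokens: list[tuple[str, object]] = []
    for i in range(0, len(data), CHUNK_SIZE):
        chunk = data[i:i + CHUNK_SIZE]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest in seen:
            tokens.append(("ref", digest))
        else:
            seen[digest] = chunk
            tokens.append(("raw", chunk))
    return tokens


def dedup_decode(tokens: list[tuple[str, object]], store: dict[str, bytes]) -> bytes:
    """Rebuild the data on the receiving side, learning new chunks as they arrive."""
    out = bytearray()
    for kind, value in tokens:
        if kind == "raw":
            store[hashlib.sha256(value).hexdigest()] = value
            out += value
        else:
            out += store[value]
    return bytes(out)


if __name__ == "__main__":
    sender_store, receiver_store = {}, {}
    attachment = b"ABCDEFGH" * 4 + b"tail"
    first = dedup_encode(attachment, sender_store)
    second = dedup_encode(attachment, sender_store)  # same attachment sent again
    print([kind for kind, _ in first])   # repeats inside the data become references
    print([kind for kind, _ in second])  # nothing new: references only
    assert dedup_decode(first, receiver_store) == attachment
    assert dedup_decode(second, receiver_store) == attachment
```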

HTTPS and other SSL applications can be optimized too, including on non-standard ports; in our practice this has come up more than once. Recent Steelhead software versions can also optimize Internet traffic when users constantly visit particular secure portals. It makes no sense to push all Internet traffic through optimization. This also works when a proxy server is used to reach the Internet.

There is also an Office365 optimization, and the devices can work with a corporate proxy.

CIFS/SMB is an old protocol, dating back to the 1980s, for sharing files between network nodes; it is also used for network printing. Riverbed handles both of its standard TCP ports (445 and 139).

The exact optimization methods are not disclosed, but they include:

- assembling packets into blocks of the optimal size for the channel;
- handling unnecessary locking on the fast node;
- keeping frequently used sessions open until they are actually needed;
- moving part of the security work onto the pair of Steelhead devices instead of loading the nodes themselves.

Windows 10 and Server 2016 have a pre-authentication integrity mechanism for SMB 3.1.1, and Steelhead implements a counterpart of it for older protocol versions. Similar methods are used for other file protocols. The devices support all SMB versions up to 3.1.1, including Signed-SMB.

This shows how compression works and how the TCP payload grows. Very relevant for file transfers.

MAPI (including MAPI over HTTP, Signed-MAPI and Office365) carries mail between Outlook clients and Exchange servers. It has a rather long handshake procedure:

The optimization comes from three things:

- keeping this session open (Riverbed intercepts it and holds it for 96 hours);
- exchanging acknowledgements efficiently;
- streaming mail and attachments, in effect caching what the client has not yet started to download.

The traffic itself compresses and deduplicates well, shedding its redundancy:

In cluster installations, the port predetermination method also helps a lot.

I had a case where an HR specialist brought down the entire network of a very remote bank office during the holidays. The investigation showed that an elderly colleague had sent out a BMP postcard of almost 10 megabytes by email.

Steelhead “figured out” that staff open their mail at 10 am, so it started pulling the mail in overnight. At the same time it compressed those damn postcards: in effect, it deduplicated ten copies of the letter down to one and compressed that single reference copy. Previously, people arrived at 10 and started working at 10:30, once everything had “crawled through”. Now there is no reason for sadness: work starts strictly on schedule.

Banks use many distance-learning systems, which are very convenient for employees. At remote branches, though, almost the entire bandwidth gets clogged with training videos. With HTTP streaming, Riverbed can do what the picture shows, so the channels do not “suffer” from this traffic. This is a predictive mechanism, though. Real-time video, such as video conferencing, can only be prioritized.

This applies to HTTP streaming, not to RTP/RTSP.

Similar methods are used in other protocols.

The next mechanism is Prepopulation. Suppose we know in advance that an office has network drives, with marketing working in one folder and the network optimization and control team in another. Suppose we also know that certain departments in a branch use specific network folders. Then we can teach Riverbed to crawl those folders on its own by giving it the paths. It will learn which files are there and how often they are requested.

So instead of many sessions being opened, Riverbed itself “pretends” to be the client. It goes to the network shares, collects what is there and keeps the most frequently used content. The result: the next morning an employee comes to the office and can access files on the far side without delay, already in their updated versions.
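Here is a hypothetical sketch of the planning step. It assumes the optimizer has per-file request statistics for a branch's shares. The plan takes the most frequently requested files that fit into the local cache, to be fetched overnight. `FileStat` and the greedy policy are illustrative only; the post does not describe how Riverbed actually ranks content.

```python
from dataclasses import dataclass


@dataclass
class FileStat:
    path: str
    size_bytes: int
    requests_per_day: int


def plan_prepopulation(files: list[FileStat], cache_budget_bytes: int) -> list[str]:
    """Greedy plan: most requested files first, as long as they fit in the cache."""
    plan: list[str] = []
    used = 0
    for stat in sorted(files, key=lambda s: s.requests_per_day, reverse=True):
        if used + stat.size_bytes <= cache_budget_bytes:
            plan.append(stat.path)
            used += stat.size_bytes
    return plan


if __name__ == "__main__":
    share = [  # toy sizes to keep the example readable
        FileStat("/marketing/price_list.xlsx", 40, 50),
        FileStat("/marketing/brochure.pdf", 50, 30),
        FileStat("/netops/video_archive.mp4", 100, 10),
    ]
    print(plan_prepopulation(share, cache_budget_bytes=100))
```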

Other protocols are handled with low-level protocol optimization, mostly by tuning frame size and packet lifetime to the channel. Even if the channel needs large frames and the protocol wants to send small ones, Steelheads repackage everything into their own format and send it the way it should be sent. The stream is then deduplicated, compressed, cached and predicted. “Chatty” sequences are identified and turned into optimistic responses.

There is also connection pooling: Steelhead keeps 20 spare sessions open in reserve for upcoming needs. This matters a lot on satellite links.
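Here is a minimal sketch of connection pooling using only the standard library. The `ConnectionPool` class and the `open_connection` callback are hypothetical stand-ins, and the default size of 20 simply mirrors the number above. On a 500 ms satellite path, every avoided TCP handshake saves at least one full round trip before data can flow.

```python
import queue
from typing import Callable, Generic, TypeVar

T = TypeVar("T")


class ConnectionPool(Generic[T]):
    """Keeps sessions open in advance so a new request skips the handshake."""

    def __init__(self, open_connection: Callable[[], T], size: int = 20) -> None:
        self._open = open_connection
        self._idle: "queue.Queue[T]" = queue.Queue()
        for _ in range(size):
            self._idle.put(open_connection())  # pay the handshake cost up front

    def acquire(self) -> T:
        try:
            return self._idle.get_nowait()     # ready session: no extra round trips
        except queue.Empty:
            return self._open()                # pool exhausted: open a new one

    def release(self, conn: T) -> None:
        self._idle.put(conn)                   # keep it open for the next request

    def idle_count(self) -> int:
        return self._idle.qsize()


if __name__ == "__main__":
    opened: list[int] = []

    def open_session() -> int:
        # Stand-in for a real connect + handshake over the satellite link.
        opened.append(len(opened))
        return opened[-1]

    pool = ConnectionPool(open_session, size=3)
    session = pool.acquire()
    print(len(opened), pool.idle_count())  # 3 opened up front, 2 still idle
    pool.release(session)
```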

More practice

Many people still work with the standard 64 KB window, and it takes a big toll on the exchange. For example, take data centers in Khabarovsk and Moscow linked by a 100 Mbit/s channel with 80 ms delay. Replication with standard tools runs at 5 Mbit/s. Surprise! You need to change the window size first, and then do everything else.

Likewise, the nearest Azure data centers for Office365 are in Amsterdam and Dublin. With a ruler and the calculator linked above, you can estimate the delay. On top of that, traffic from Khabarovsk goes through Moscow, even though the Asian Azure data centers are closer.
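The figures in this example are easy to check by hand. With an unscaled 65,535-byte window and an 80 ms round trip, a single flow tops out at roughly 6.5 Mbit/s before protocol overhead, which is consistent with the observed 5 Mbit/s. Keeping the 100 Mbit/s link full requires about 1 MB in flight:

$$
\frac{\text{RWIN}}{\text{RTT}} = \frac{65\,535 \times 8\ \text{bit}}{0.08\ \text{s}} \approx 6.55\ \text{Mbit/s},
\qquad
\text{BDP} = \frac{100 \times 10^{6}\ \text{bit/s} \times 0.08\ \text{s}}{8\ \text{bit/byte}} = 10^{6}\ \text{bytes}
$$

A 1 MB window needs a window scale shift of at least 4, since 65,535 × 2^4 = 1,048,560 bytes.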

And then there is loss. On a satellite link, weather alone can easily cause 5% loss. Some packets get stuck in fog, and some don't make it through the snowflakes. A large, thick packet can end up enriching a pigeon with information. Or a crow that has flown in to peck at the film on the transponder (although on large teleports, fortunately, the birds quickly get too hot and slide off the dish). By the way, SCPS support helps.
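A widely used approximation shows why a few percent of loss hurts so much on a long link. It is the Mathis et al. model for Reno-style TCP, which relates throughput to MSS, RTT and loss rate p. It does not come from the original post, and it is not how Steelhead computes anything. The model is rough at loss rates this high, so treat the output as an order of magnitude.

```python
import math


def mathis_throughput_mbps(mss_bytes: int, rtt_ms: float, loss_rate: float) -> float:
    """Approximate throughput of a Reno-style TCP flow under random packet loss.

    Mathis et al. (1997): rate ~ (MSS / RTT) * sqrt(3/2) / sqrt(p).
    """
    if not 0 < loss_rate < 1:
        raise ValueError("loss_rate must be between 0 and 1")
    rtt_s = rtt_ms / 1000
    bytes_per_second = (mss_bytes / rtt_s) * math.sqrt(1.5) / math.sqrt(loss_rate)
    return bytes_per_second * 8 / 1_000_000


if __name__ == "__main__":
    for loss in (0.001, 0.01, 0.05):
        rate = mathis_throughput_mbps(1460, 500, loss)
        print(f"loss {loss:.1%}: ~{rate:.2f} Mbit/s")
```

In this model, even 1% loss pulls a 500 ms flow down to roughly 0.3 Mbit/s. At 5% it drops to about 0.13 Mbit/s.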

Besides manipulating frame sizes, Riverbed SteelHead can use the most suitable congestion control algorithm for the link. These range from NewReno to more specialized ones such as MX-TCP and HSTCP. The latter two help with high-BDP paths and with TCP slow start: knowing the channel width, the appliance can send a given class of traffic at full rate right away.
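Why HSTCP helps on high-BDP paths can be seen from its response function: the average window a flow can sustain at a given packet-loss rate p. The sketch below uses the formulas RFC 3649 gives for standard TCP and HighSpeed TCP. It is not SteelHead code, and MX-TCP (Riverbed's own mode) is not modeled.

```python
import math


def standard_tcp_window(loss_rate: float) -> float:
    """Average window (segments) of standard TCP at loss rate p: w = 1.2 / sqrt(p)."""
    return 1.2 / math.sqrt(loss_rate)


def hstcp_window(loss_rate: float) -> float:
    """HighSpeed TCP response function from RFC 3649: w = 0.12 / p**0.835."""
    return 0.12 / loss_rate ** 0.835


if __name__ == "__main__":
    for p in (1e-3, 1e-5, 1e-7):
        print(f"p={p:g}: standard {standard_tcp_window(p):>8.0f}, "
              f"HSTCP {hstcp_window(p):>8.0f} segments")
```

At p = 10^-3 both give roughly 38 segments, because RFC 3649 makes the two curves meet there. At p = 10^-7, HSTCP sustains about 84,000 segments against about 3,800 for standard TCP, which is what a high-BDP path needs.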

New software releases bring new features. Since version 9.0 there is full-fledged DPI, so traffic can be classified by application. The built-in prioritization and traffic classification capabilities of Steelheads are also handy for defining network policies. At oil fields, for example, the following order is common:

1. technical traffic;
2. management's video-conferencing packets;
3. office traffic;
4. employees' personal traffic.

Now driller Vasya, downloading a new 20-megabyte cat GIF, will not interrupt management's traffic.
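Here is a minimal sketch of the strict-priority order described here, using four hypothetical class names. Real QoS on Steelhead is configured through its own policy engine. In practice, strict priority is often paired with minimum-bandwidth guarantees so that lower classes are not starved.

```python
import heapq
import itertools

# Lower number = higher priority; the order follows the oil-field example above.
PRIORITY = {"technical": 0, "video_conference": 1, "office": 2, "personal": 3}


class StrictPriorityScheduler:
    """Always sends the queued packet from the most important class first."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._order = itertools.count()  # keeps FIFO order within a class

    def enqueue(self, traffic_class: str, packet: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[traffic_class], next(self._order), packet))

    def dequeue(self) -> str:
        _, _, packet = heapq.heappop(self._heap)
        return packet


if __name__ == "__main__":
    scheduler = StrictPriorityScheduler()
    scheduler.enqueue("personal", "Vasya's cat GIF, part 1")
    scheduler.enqueue("technical", "drilling telemetry")
    scheduler.enqueue("video_conference", "management video frame")
    print([scheduler.dequeue() for _ in range(3)])
```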

Or take a site near Lensk. The admins there are very tough and had squeezed everything they could out of the channel. They read our earlier posts and asked for an appliance to test.

Their main problem was slow RDP-like connections, which drove people mad, and these were their main way of accessing business applications. Under high channel load (and the channel is unbalanced, too), business application users began to have trouble with RDP. Their satellite modems already had built-in optimization, but only at the transport level, so they were looking for another solution. They installed a Riverbed, and their hair became soft and silky: the load dropped threefold.

Then they ran into a quirk of the satellite modem. When its built-in optimization was disabled, the TCP queue length defaulted to an extremely small value. This hurt the Riverbeds badly, so we tuned those parameters as well. As a result, RDP-like sessions are stable, files download faster, and people work around the clock in shifts. The situation is the same as with driller Vasya: he downloads his 20 MB cat GIFs faster, harms no one, and everyone is happy.

Here is another example. On the open sea there are large vessels, factory trawlers, where freshly caught fish is canned immediately. Production there is controlled over the network, and data is exchanged as part of the process.

But the links involve double satellite hops or awkward satellite-2G-fiber transitions. They installed optimizers, and everyone was happy. The companies gained control over their business processes, and the employees got better working conditions: they can talk to their families and share photos and videos.

Where it is used in practice

Steelheads are mainly bought in the following cases:

- Where the bandwidth technically cannot be increased.

- Where a wide channel has very high delay (for example, satellite links).

- LFN (long fat network): for example, a link between two data centers or between geographically distant offices, such as Moscow and Cyprus.

There are actually many more scenarios, but most of them stem from one problem: users' business applications are slow. I have listed the most common ones.

Riverbed's magic works on many kinds of links:

- 3G channels;
- satellite channels;
- 3G radio relay hops;
- double satellite hops;
- 2G-4G hops;
- wide 10G channels, even with a delay of just 2–5 ms.

In Russia it pays off best on satellite channels, which physically cannot be made wider but have to be used anyway.

Channels used to cost a fortune, but now they are getting cheaper in most parts of the country. Banks and the mining sector come to us most often.

Many people are still surprised to learn three things:

- widening communication channels does not make their work more efficient;
- delay limits a channel's usable capacity;
- a channel cannot be widened endlessly.

Often, when companies face slow replication or slow applications, they start widening their channels, and most often it does not help. This is what I wanted to explain. I hope it worked.

Source: https://habr.com/ru/post/327720/
