UE4 for Unity developers

Hi, Habr! My name is Alexander, and today we will compare Unity and Unreal Engine 4.

Many developers have probably tried the Unity engine and seen games, projects and demos made with it. Its main competitor is Unreal Engine, which originated in Epic Games projects such as the Unreal Tournament shooter. Let's look at how to get started with Unreal after Unity and what obstacles might lie in our way.

3D engines are often compared superficially, or with a focus on a single feature, such as graphics. We will not start a holy war and will treat both engines as equally valid tools. Our goal is to compare the two technologies and help you understand Unreal Engine 4. We will compare the engines' basic systems using specific code examples from UShooter (Unreal + Unity Shooter), a demo project made specially for this purpose. The project uses Unity 5.5.0 and Unreal Engine 4.14.3.

Component System (Unity)

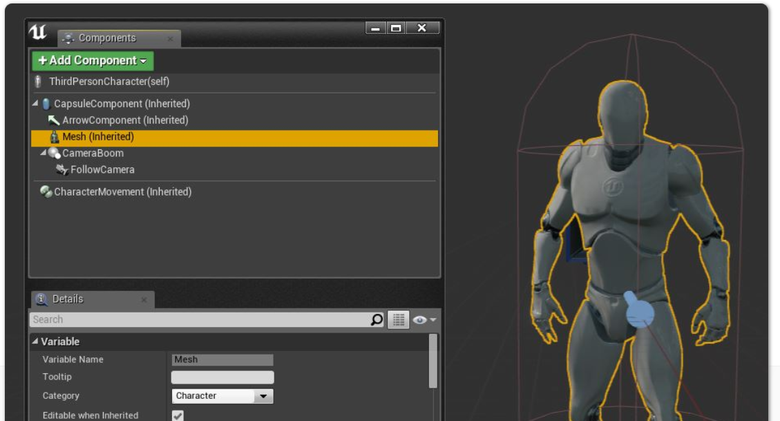

When we run the project in Unreal, we see that the character in the scene is a single object. The World Outliner window shows none of the familiar model nodes (embedded objects, meshes), skeleton bones, etc. This follows from the difference between the Unity and Unreal component systems.

In Unity, a scene consists of GameObjects. A GameObject is an empty, general-purpose object to which we add components: behavior scripts (MonoBehaviour) and the engine's built-in components. Sometimes a GameObject is left empty and used as a marker, for example, for the spot where a game character or an effect will be created.

We see all these objects in the Hierarchy window of the editor. They have a built-in Transform component, through which we control the object's position in the 3D scene. For example, a movement script changes the coordinates in its Update function, and the object moves. Adding such a script to a GameObject takes two clicks. Having created an object, such as a character or an item, we set it up, add scripts and save it as a prefab (a file that stores the GameObject and its child objects). Later we can change the prefab itself, and the changes will apply to all its instances.

Here is the RocketProjectile class, which is a rocket in the UShooter project.

```csharp
using UnityEngine;

public class RocketProjectile : MonoBehaviour
{
    public float Damage = 10.0f;
    public float FlySpeed = 10.0f;

    void Update()
    {
        // Move along the local forward axis at FlySpeed units per second
        gameObject.transform.position += gameObject.transform.forward * FlySpeed * Time.deltaTime;
    }

    void OnCollisionEnter(Collision collision)
    {
        // Collision handling goes here (omitted in the original listing)
    }
}
```

We set the projectile's parameters in the editor and, if desired, change its movement speed (the FlySpeed property) and damage (Damage). Collisions are handled in the OnCollisionEnter function. Unity calls it automatically, since the object has a Rigidbody component.

Component System (UE4)

In Unreal Engine 4, game objects are represented by Actors and their components. AActor is the base class for objects that can be placed in a scene. We can create an Actor in the game scene (both from the editor and from code), change its properties, etc. There is also UObject, the base class of the engine's object system, from which AActor and most other engine classes inherit.

Components are added to an Actor, the game object, which can be a weapon, a character or anything else. However, these components are usually hidden from us inside the Unreal analogue of a prefab: the Blueprint Class.

Unlike in Unity, an Actor has the concept of a Root Component: the root component to which the other components are attached. In Unity, we simply drag and drop an object to change its place in the hierarchy. In Unreal, this is done by attaching components to each other ("attachment").
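To make the idea of a root component and attachment concrete, here is a minimal sketch in plain C++. It is not Unreal code: SceneComponent, Actor and AttachTo below are simplified, hypothetical stand-ins for USceneComponent, AActor and the engine's attachment functions, and only translation is modelled (real transforms also carry rotation and scale).

```cpp
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified model of a UE4-style component tree (not engine code).
// Only translation is modelled; real transforms also carry rotation and scale.
struct Vec3 {
    float X = 0.0f, Y = 0.0f, Z = 0.0f;
};

Vec3 operator+(const Vec3& A, const Vec3& B) { return {A.X + B.X, A.Y + B.Y, A.Z + B.Z}; }

class SceneComponent {
public:
    explicit SceneComponent(std::string InName) : Name(std::move(InName)) {}

    // Attach to a parent component, loosely mirroring Unreal's attachment functions.
    void AttachTo(SceneComponent* NewParent) { Parent = NewParent; }

    // World location = parent's world location + our offset relative to it.
    Vec3 GetWorldLocation() const {
        return Parent ? Parent->GetWorldLocation() + RelativeLocation : RelativeLocation;
    }

    std::string Name;
    Vec3 RelativeLocation;
    SceneComponent* Parent = nullptr;
};

class Actor {
public:
    // The first component becomes the root; later ones are attached to it.
    SceneComponent* AddComponent(const std::string& Name) {
        Components.push_back(std::make_unique<SceneComponent>(Name));
        SceneComponent* Created = Components.back().get();
        if (RootComponent == nullptr) {
            RootComponent = Created;
        } else {
            Created->AttachTo(RootComponent);
        }
        return Created;
    }

    // Moving the actor means moving its root; attached components follow.
    void SetActorLocation(const Vec3& NewLocation) {
        if (RootComponent != nullptr) RootComponent->RelativeLocation = NewLocation;
    }

    SceneComponent* RootComponent = nullptr;

private:
    std::vector<std::unique_ptr<SceneComponent>> Components;
};
```

In this model a mesh attached one unit in front of the root ends up one unit in front of wherever the actor is moved, which is exactly the behavior Unity gives us through the object hierarchy.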

In Unity, MonoBehaviour scripts use Start, Update and LateUpdate for initialization and per-frame updates. Their counterparts in Unreal are the BeginPlay and Tick functions of Actor. Actor components (UActorComponent) have their own functions for this, such as InitializeComponent and TickComponent, so you cannot turn an Actor into a component (or vice versa) in one click. Also, unlike in Unity, not every component has a Transform: only USceneComponent and its subclasses do.

In Unity, we can call GameObject.Instantiate almost anywhere in the code and get an object created from a prefab. In Unreal, we "ask" the world object (UWorld) to create an instance of the object. Creating an object in Unreal is called spawning, and it is done with the World->SpawnActor function.
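The ownership model behind SpawnActor can be sketched the same way. In this hypothetical plain-C++ model, World owns every actor it creates, calls BeginPlay right after spawning and calls Tick on all actors each frame. The real UWorld::SpawnActor has overloads that also take a spawn location, rotation and spawn parameters, and does far more than this.

```cpp
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical, stripped-down model of a world that owns and drives actors.
// Names echo UWorld::SpawnActor, BeginPlay and Tick, but this is not engine code.
class Actor {
public:
    virtual ~Actor() = default;
    virtual void BeginPlay() { bHasBegunPlay = true; }              // counterpart of Unity's Start
    virtual void Tick(float DeltaSeconds) { Age += DeltaSeconds; }  // counterpart of Unity's Update

    bool bHasBegunPlay = false;
    float Age = 0.0f;
};

class World {
public:
    // The world constructs the actor, keeps ownership of it and starts its lifecycle.
    template <typename T>
    T* SpawnActor() {
        auto Spawned = std::make_unique<T>();
        T* Raw = Spawned.get();
        Actors.push_back(std::move(Spawned));
        Raw->BeginPlay();
        return Raw;
    }

    // Called once per frame: every spawned actor receives Tick.
    void Tick(float DeltaSeconds) {
        for (auto& SpawnedActor : Actors) SpawnedActor->Tick(DeltaSeconds);
    }

    std::size_t NumActors() const { return Actors.size(); }

private:
    std::vector<std::unique_ptr<Actor>> Actors;
};

// Gameplay classes derive from Actor, as ARocketProjectile derives from AActor.
class RocketProjectile : public Actor {};
```

The design point is that gameplay code never owns the spawned object; the world does, which is why Unreal routes creation through UWorld instead of a free-standing Instantiate call.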

Characters and their Controllers

In Unreal, characters have special classes, APawn and ACharacter, both derived from AActor (ACharacter via APawn).

APawn is a character class that can be controlled by a player or by AI. Unreal uses a system of controllers to drive characters. We create a Player Controller or an AI Controller. It receives commands from the player (or from its internal logic, in the case of AI) and passes movement commands to the character class itself, APawn or ACharacter.
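The division of labour between a controller and a pawn can be illustrated with another hypothetical plain-C++ sketch. Possess, UnPossess and AddMovementInput are named after the real AController and APawn functions, but here they are simplified to a single horizontal axis: the pawn only knows how to move, while a PlayerController or AIController decides where.

```cpp
// Hypothetical model of UE4's controller/pawn split (not engine code).
// One horizontal axis only; the real AddMovementInput takes a world direction and a scale.
class Pawn {
public:
    // The pawn only knows how to move; it does not care who is driving it.
    void AddMovementInput(float Direction) { PendingInput += Direction; }

    // Consume the accumulated input for this frame.
    void ApplyMovement(float DeltaSeconds) {
        PositionX += PendingInput * Speed * DeltaSeconds;
        PendingInput = 0.0f;
    }

    float PositionX = 0.0f;
    float Speed = 4.0f;  // units per second

private:
    float PendingInput = 0.0f;
};

class Controller {
public:
    virtual ~Controller() = default;
    void Possess(Pawn* InPawn) { Controlled = InPawn; }
    void UnPossess() { Controlled = nullptr; }

    // Each controller turns its own source of decisions into movement commands.
    virtual void Update() = 0;

protected:
    Pawn* Controlled = nullptr;
};

class PlayerController : public Controller {
public:
    float InputAxis = 0.0f;  // would come from the input system
    void Update() override {
        if (Controlled != nullptr) Controlled->AddMovementInput(InputAxis);
    }
};

class AIController : public Controller {
public:
    float TargetX = 0.0f;  // hypothetical goal picked by AI logic
    void Update() override {
        if (Controlled == nullptr) return;
        if (TargetX > Controlled->PositionX) Controlled->AddMovementInput(1.0f);
        else if (TargetX < Controlled->PositionX) Controlled->AddMovementInput(-1.0f);
    }
};
```

Because the pawn does not know who is driving it, the same character can be handed from the player to AI (or back) simply by changing which controller possesses it.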

ACharacter extends APawn with an advanced movement component, a built-in skeletal mesh component, basic character movement logic and its replication for multiplayer games. To save resources, you can base a character on APawn instead and implement only the functionality your project needs.

Describing the game class (Actor)

Now that we have learned a bit about Unreal components, let's look at the rocket class in the Unreal version of UShooter.

```cpp
#pragma once

#include "GameFramework/Actor.h"
#include "RocketProjectile.generated.h"

class USphereComponent;

UCLASS()
class USHOOTER_API ARocketProjectile : public AActor
{
    GENERATED_BODY()

public:
    // Sets default values for this actor's properties
    ARocketProjectile();

    // Called when the game starts or when spawned
    virtual void BeginPlay() override;

    // Called every frame
    virtual void Tick(float DeltaSeconds) override;

    // Rocket fly speed
    UPROPERTY(EditDefaultsOnly, BlueprintReadOnly, Category = "Rocket")
    float FlySpeed;

    // Rocket damage
    UPROPERTY(EditDefaultsOnly, BlueprintReadOnly, Category = "Rocket")
    float Damage;

    // Impact (collision) handling
    UFUNCTION()
    void OnImpact(UPrimitiveComponent* HitComponent, AActor* OtherActor, UPrimitiveComponent* OtherComp,
                  FVector NormalImpulse, const FHitResult& Hit);

private:
    /** Collision sphere */
    UPROPERTY(VisibleDefaultsOnly, Category = "Projectile")
    USphereComponent* CollisionComp;
};
```

The interaction between the editor and scripts, which in Unity requires no special code, works in Unreal through code generation: this extra code is generated at build time. To let the editor show the properties of our object, we use special macros: UCLASS, GENERATED_BODY and UPROPERTY. Their specifiers also describe how the editor should treat each property. For example, EditDefaultsOnly means that the property can be edited only on the default object, that is, in the Blueprint class (the prefab, if we draw an analogy with Unity), and not on individual instances. Properties can be grouped into categories, which lets us quickly find the properties of the object we are interested in.
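The header declares Tick, but the article does not show its body. Assuming the Unreal rocket moves the same way as the Unity Update method (a movement component would be an equally valid choice), its per-frame logic would be something like SetActorLocation(GetActorLocation() + GetActorForwardVector() * FlySpeed * DeltaSeconds). The plain-C++ sketch below models only that arithmetic, with a hypothetical Vec3 type standing in for FVector.

```cpp
// Hypothetical plain-C++ stand-in for ARocketProjectile::Tick; Vec3 plays the role of FVector.
struct Vec3 {
    float X = 0.0f, Y = 0.0f, Z = 0.0f;
};

Vec3 operator+(const Vec3& A, const Vec3& B) { return {A.X + B.X, A.Y + B.Y, A.Z + B.Z}; }
Vec3 operator*(const Vec3& V, float S) { return {V.X * S, V.Y * S, V.Z * S}; }

struct RocketProjectile {
    Vec3 Location;
    Vec3 Forward{1.0f, 0.0f, 0.0f};  // unit forward vector
    float FlySpeed = 10.0f;          // same default as the Unity version

    // Distance per frame = speed * frame time, so the flight does not depend on frame rate.
    void Tick(float DeltaSeconds) { Location = Location + Forward * (FlySpeed * DeltaSeconds); }
};
```

Multiplying by DeltaSeconds plays the same role as Time.deltaTime in Unity: four frames of 0.25 s move the rocket as far as one frame of 1 s.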

The OnImpact function is the analogue of OnCollisionEnter in Unity. However, to make it work, we must subscribe it to an event of the USphereComponent component, either in the constructor or later during the game. It is not called automatically as in Unity, but this leaves room for optimization: if we no longer need to react to collisions, we can unsubscribe from the event.
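In UE4, the subscription is usually made with the AddDynamic macro on the component's event (for example, CollisionComp->OnComponentHit.AddDynamic(this, &ARocketProjectile::OnImpact)), and RemoveDynamic unsubscribes. The sketch below does not use the engine: it is a hypothetical plain-C++ multicast delegate that shows why unsubscribing helps, since a removed handler is no longer called when the event fires.

```cpp
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical plain-C++ multicast delegate standing in for an event such as OnComponentHit.
template <typename... Args>
class MulticastDelegate {
public:
    using Handle = std::size_t;

    // Subscribe: returns a handle that can later be used to unsubscribe.
    Handle Add(std::function<void(Args...)> Callback) {
        Bindings.emplace_back(NextHandle, std::move(Callback));
        return NextHandle++;
    }

    // Unsubscribe: the callback will not be called by later broadcasts.
    void Remove(Handle ToRemove) {
        for (auto It = Bindings.begin(); It != Bindings.end(); ++It) {
            if (It->first == ToRemove) {
                Bindings.erase(It);
                return;
            }
        }
    }

    // Fire the event: every current subscriber is called.
    void Broadcast(Args... Values) const {
        for (const auto& Binding : Bindings) Binding.second(Values...);
    }

    std::size_t NumBindings() const { return Bindings.size(); }

private:
    std::vector<std::pair<Handle, std::function<void(Args...)>>> Bindings;
    Handle NextHandle = 0;
};

struct RocketProjectile {
    MulticastDelegate<float> OnComponentHit;  // a float hit strength stands in for FHitResult
    MulticastDelegate<float>::Handle ImpactHandle = 0;
    int ImpactCount = 0;

    // Like AddDynamic in the constructor: OnImpact runs only because we subscribe.
    RocketProjectile() {
        ImpactHandle = OnComponentHit.Add([this](float) { OnImpact(); });
    }
    // The lambda captures this, so a copy's event would still call the original object.
    RocketProjectile(const RocketProjectile&) = delete;
    RocketProjectile& operator=(const RocketProjectile&) = delete;

    void OnImpact() { ++ImpactCount; }

    // Like RemoveDynamic: once collisions no longer matter, stop paying for them.
    void StopListening() { OnComponentHit.Remove(ImpactHandle); }
};
```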

Blueprint

A typical step after creating a C++ class in Unreal is to create a Blueprint Class based on it. This is the way Unreal lets us extend the object. Unreal's Blueprint system is used for visual programming: we can build visual graphs that connect events with reactions to them. Blueprints make it easier for programmers and designers to work together: we write part of the game logic in C++ and give designers access to it.

At the same time, Unreal lets you separate, if required, the project's C++ source code from its binaries and content. Designers or outsourcers can work with the compiled DLLs without ever knowing what happens inside the C++ part of the project. This is an additional degree of freedom provided by the engine.

Unreal's strength is that almost everything in it works with Blueprints. We can extend C++ classes with them, create Blueprint subclasses, etc. The system is tightly integrated with every part of the engine, from its internal logic to visual components, collision, animation, etc.

Unity has similar visual programming systems, such as Antares Universe. They are not part of the engine but are built on top of it, so they may break at any moment (for example, when the engine is updated). Unity 5.5 itself does not ship a visual scripting system. In my opinion, this is a serious drawback compared to Unreal: thanks to such systems, even people far from programming can draw a diagram of how objects interact or lay out a sequence of actions. By the way, in Unreal all project templates come in two versions: one based on C++ code and one built entirely with Blueprints. So creating a simple project without code, entirely in Blueprints, is quite realistic.

Demo shooter (UShooter)

In Unity, we wrote the demo from scratch, while in Unreal we relied on templates. In a template, we choose the control scheme and camera view, and Unreal generates a project with those settings. This is a good base that speeds up development and prototyping.

On top of the Side Scroller template, we add our own HUD, barrels, several weapons and sounds. Let's give the player a rocket launcher and a railgun and let him heroically shoot at exploding barrels.

Input system (Unity)

We will control the character through the input system. In Unity, input is usually configured in the Input Manager, where we create named virtual axes, for example, "move forward" or "fire". We give them names and then read the value of an axis or the state of a virtual button. Scripts that control objects usually read the axes in the Update function: the state of the axes and of several control buttons is polled every frame.

Input system (UE4)

Unreal also has virtual axes, but it separates the axes proper (values from a joystick, etc.) from action buttons. Unlike in Unity, we bind the axes and buttons to functions of the class that implements character control. The binding is made through the UInputComponent component, which the character class ACharacter has.

With BindAxis("MoveRight", this, &AUShooterCharacter::MoveRight) on the Input Component, we route the MoveRight axis to the movement function of the same name. We do not have to poll the button every frame ourselves; the engine calls the bound function.
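A hypothetical plain-C++ model of this binding: InputComponent stores one callback per axis name, and the engine side (DispatchAxis here, an invented name) pushes the current value to the bound member function. SetupPlayerInputComponent mirrors the name of the Unreal override where such bindings are usually made, but the types are simplified and this is not the engine's implementation.

```cpp
#include <functional>
#include <map>
#include <string>

// Hypothetical model of binding named axes to member functions, in the spirit of
// UInputComponent::BindAxis; the engine's real delegate types are different.
class InputComponent {
public:
    template <typename T>
    void BindAxis(const std::string& AxisName, T* Object, void (T::*Func)(float)) {
        AxisBindings[AxisName] = [Object, Func](float Value) { (Object->*Func)(Value); };
    }

    // Engine side (invented name): called every frame with the current axis value,
    // so gameplay code never has to poll the input itself.
    void DispatchAxis(const std::string& AxisName, float Value) const {
        auto It = AxisBindings.find(AxisName);
        if (It != AxisBindings.end()) It->second(Value);
    }

private:
    std::map<std::string, std::function<void(float)>> AxisBindings;
};

class ShooterCharacter {
public:
    // Bindings are made once, not checked every frame.
    void SetupPlayerInputComponent(InputComponent& Input) {
        Input.BindAxis("MoveRight", this, &ShooterCharacter::MoveRight);
    }

    void MoveRight(float Value) { LastMoveRight = Value; }

    float LastMoveRight = 0.0f;
};
```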

In addition, Unreal does not limit the number of alternative buttons. In Unity, the Input Manager offers only a primary and an alternative button. The more input devices your game supports, the more acute this limitation becomes.
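The difference can be modelled with a list of mappings in which any number of keys feed one named axis, each with its own scale. This is a hypothetical sketch, not the engine's input code: the key names are illustrative, and in this model the axis value is simply the sum of the scales of all pressed keys mapped to it.

```cpp
#include <set>
#include <string>
#include <vector>

// Hypothetical model of axis mappings: any number of keys can feed one named axis.
struct AxisKeyMapping {
    std::string AxisName;
    std::string Key;
    float Scale;  // +1 for "right", -1 for "left", etc.
};

class InputSettings {
public:
    void AddAxisMapping(const std::string& Axis, const std::string& Key, float Scale) {
        Mappings.push_back({Axis, Key, Scale});
    }

    // Sum the scales of every pressed key mapped to the axis.
    float GetAxisValue(const std::string& Axis, const std::set<std::string>& PressedKeys) const {
        float Value = 0.0f;
        for (const auto& Mapping : Mappings) {
            if (Mapping.AxisName == Axis && PressedKeys.count(Mapping.Key) > 0) Value += Mapping.Scale;
        }
        return Value;
    }

private:
    std::vector<AxisKeyMapping> Mappings;
};
```

Adding a third or fourth device is just another AddAxisMapping call; nothing in the gameplay code that reads MoveRight has to change.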

Working with 3D models

As already mentioned, in Unreal we do not see the structure of the character's skeleton in the scene. The point is that skeleton bones are not Actors or anything similar; they are internal data of the skeleton and animation. So how do we attach a weapon to a character or hide one of its parts? We may want to put a fashionable cap on him or attach a weapon to his hand.

In Unity, we select the weapon model in the editor, drag it onto the desired bone and can even add a separate control script to it. In Unreal, we use sockets: attachment points on game objects. Sockets are part of the skeleton in models with skeletal animation (Unity calls such models Skinned Mesh, Unreal calls them Skeletal Mesh). Sockets can also be added to static meshes (Static Mesh).

We select the bone to which the socket is attached and give the socket a name, for example S_Weapon if a weapon will be attached there. Once the socket exists, we can spawn an object at its position or attach an object to it through the attachment mechanism (the AttachTo function). The system is a bit more involved than Unity's, but more universal: we set up the point names once, separating the game logic from the model setup. And if several models share one skeleton, we only need to add the sockets to the skeleton. In the shooter demo, sockets are used to spawn projectiles and shot effects.
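A socket is essentially a named offset relative to a bone. In the hypothetical plain-C++ sketch below, gameplay code asks only for the socket name S_Weapon and never for a bone. The bone name hand_r and the offsets are invented, rotation is ignored to keep the arithmetic obvious, and GetSocketLocation only borrows its name from the Unreal function with the same purpose.

```cpp
#include <map>
#include <string>

// Hypothetical model of skeleton sockets (not engine code); rotation is ignored.
struct Vec3 {
    float X = 0.0f, Y = 0.0f, Z = 0.0f;
};

Vec3 operator+(const Vec3& A, const Vec3& B) { return {A.X + B.X, A.Y + B.Y, A.Z + B.Z}; }

// A socket is a name plus an offset relative to one bone.
struct Socket {
    std::string BoneName;
    Vec3 RelativeLocation;
};

class Skeleton {
public:
    // In a real engine bone transforms come from the current animation pose.
    void SetBoneLocation(const std::string& Bone, const Vec3& WorldLocation) { Bones[Bone] = WorldLocation; }

    void AddSocket(const std::string& Name, const std::string& Bone, const Vec3& Offset) {
        Sockets[Name] = Socket{Bone, Offset};
    }

    // Gameplay code asks only for the socket name; the model setup decides where it is.
    // std::map::at throws std::out_of_range if the socket or its bone is unknown.
    Vec3 GetSocketLocation(const std::string& SocketName) const {
        const Socket& Found = Sockets.at(SocketName);
        return Bones.at(Found.BoneName) + Found.RelativeLocation;
    }

private:
    std::map<std::string, Vec3> Bones;
    std::map<std::string, Socket> Sockets;
};
```

When the animated hand moves, the socket moves with it, so a projectile spawned at S_Weapon always appears at the muzzle without the weapon code knowing anything about bones.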

Animation system (Unity)

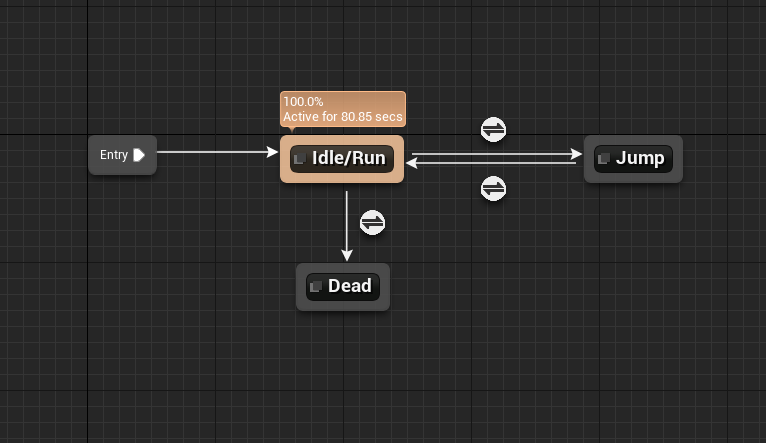

We have a character and we know how to handle input; now we need to play animations. In Unity, this is what the Animator Controller is for: in it, we describe the character's states, for example, run, jump or die. Each state has its own animation clip, and we set up a transition graph like this:

Although this graph is called an Animator Controller, it has no internal logic. It is just a scheme for switching animations depending on state. For it to work, we declare in advance, in the controller, the variables (parameters) that correspond to the character's state. The script that controls the animation often sends these values to the controller every frame.

In the transitions between states (shown as arrows in the diagram), we set up transition conditions. We can also configure blending (crossfade), i.e. the time during which one animation fades out while the other fades in, so that they join smoothly.
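A crossfade can be modelled as two weights that always sum to one. The sketch below assumes the simplest, linear blend curve; Crossfade and BlendPose are hypothetical names, and a real animation system blends whole poses (every bone) rather than a single value.

```cpp
#include <algorithm>

// Minimal sketch of a linear crossfade: over BlendTime seconds the outgoing clip's
// weight falls from 1 to 0 while the incoming clip's weight rises from 0 to 1.
struct Crossfade {
    float BlendTime;       // seconds, configured on the transition
    float Elapsed = 0.0f;  // seconds since the transition started

    void Advance(float DeltaSeconds) { Elapsed += DeltaSeconds; }

    float IncomingWeight() const {
        if (BlendTime <= 0.0f) return 1.0f;  // zero-length transition: instant switch
        return std::min(std::max(Elapsed / BlendTime, 0.0f), 1.0f);
    }

    float OutgoingWeight() const { return 1.0f - IncomingWeight(); }
};

// Blend one animated value (for example a single joint angle) from both clips.
float BlendPose(float OutgoingValue, float IncomingValue, const Crossfade& Fade) {
    return OutgoingValue * Fade.OutgoingWeight() + IncomingValue * Fade.IncomingWeight();
}
```

Halfway through a 0.5 s transition both clips contribute equally; once BlendTime has passed, only the new clip is visible.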

Animation System (UE4)

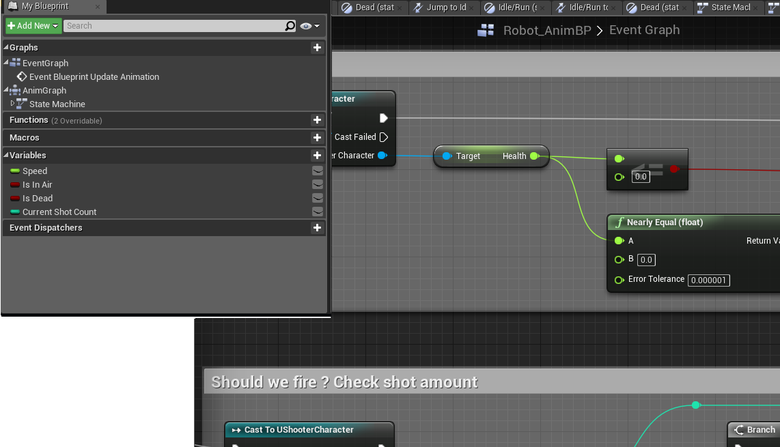

In Unreal, everything is done with Blueprints, and animation is no exception. We create an Animation Blueprint that controls the animation. It also contains a state graph: a state machine that determines the character's final animation depending on movement or death.

Here we see the familiar states Idle/Run, Jump and Dead. But one node combines Idle and Run. Inside it is a so-called Blend Space 1D, which provides a smooth transition between animations depending on the value of one variable (a regular Blend Space uses two). With a Blend Space, we can tie the transition between the Idle and Run animations to the character's speed. We can also set up several transition points. For example, from zero to one meter per second the character walks slowly, with motion interpolated between the Idle and Walk animations; above a certain threshold, Run kicks in. All of this lives in a single Animation Blueprint node that references the Blend Space.
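The interpolation inside a Blend Space 1D can be sketched as follows: samples sit at positions along one parameter, and for a given value we find the two neighbouring samples and weight them linearly. This is a hypothetical plain-C++ model, not the engine's implementation; the Idle and Walk positions follow the example in the text, while the Run sample at 3 m/s is an invented threshold.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical 1D blend space: animation samples placed along one parameter (speed).
struct BlendSample {
    std::string Clip;
    float Position;  // parameter value, e.g. speed in m/s
};

// Returns (clip, weight) pairs. Samples must be sorted by Position.
std::vector<std::pair<std::string, float>> EvaluateBlendSpace1D(
        const std::vector<BlendSample>& Samples, float Value) {
    if (Samples.empty()) return {};
    // Outside the covered range, clamp to the nearest end sample.
    if (Value <= Samples.front().Position) return {{Samples.front().Clip, 1.0f}};
    if (Value >= Samples.back().Position) return {{Samples.back().Clip, 1.0f}};
    for (std::size_t i = 0; i + 1 < Samples.size(); ++i) {
        const BlendSample& Lo = Samples[i];
        const BlendSample& Hi = Samples[i + 1];
        if (Value <= Hi.Position) {
            // Linear interpolation between the two neighbouring samples.
            float Alpha = (Value - Lo.Position) / (Hi.Position - Lo.Position);
            return {{Lo.Clip, 1.0f - Alpha}, {Hi.Clip, Alpha}};
        }
    }
    return {};  // not reached for sorted samples
}
```

At 0.5 m/s this returns Idle and Walk at half weight each; at 2 m/s it returns Walk and Run at half weight each.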

The arrows show transitions between states, but, unlike in Unity, we can create a Blueprint that implements the internal logic of each transition. An Animation Blueprint has access to the character it is used on, so it can read the character's parameters itself (movement speed, etc.). This can also be seen as an optimization, since parameters that are not needed for the character's current state are not computed.
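Here is a hypothetical plain-C++ sketch of transition rules that read the character directly instead of receiving pushed variables. Only the rules leaving the current state are evaluated, which is the saving the paragraph above describes; Character, AnimState and the rules themselves are illustrative.

```cpp
#include <functional>
#include <utility>
#include <vector>

// Hypothetical character data that transition rules may read.
struct Character {
    float Speed = 0.0f;
    bool bIsInAir = false;
    float Health = 100.0f;
};

enum class AnimState { Locomotion, Jump, Dead };

struct Transition {
    AnimState From;
    AnimState To;
    std::function<bool(const Character&)> Rule;  // evaluated only while in From
};

class AnimStateMachine {
public:
    explicit AnimStateMachine(std::vector<Transition> InTransitions)
        : Transitions(std::move(InTransitions)) {}

    // Check only the rules that leave the current state; take the first one that passes.
    void Update(const Character& Owner) {
        for (const Transition& Candidate : Transitions) {
            if (Candidate.From == Current && Candidate.Rule(Owner)) {
                Current = Candidate.To;
                return;
            }
        }
    }

    AnimState Current = AnimState::Locomotion;

private:
    std::vector<Transition> Transitions;
};
```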

Unreal offers many animation tools. Montage is a subsystem and editor that lets you combine animation clips and their fragments.

Here the movement state machine is combined with the attack animation, which we play through the Montage tool.

The bottom of the picture shows a fragment of the Animation Blueprint graph responsible for reacting to a weapon shot. The Montage Play node starts the shot animation, then Delay waits until it ends, and the Montage Stop node turns the animation off. This is needed because in the animation state machine we cannot make a clip play just once. If an animation loops and corresponds to some state of the character, we control it through the state machine. If we want to play a single clip in response to an event, we do it through Montage.
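The Montage Play, Delay, Montage Stop chain amounts to a one-shot overlay with a timer. In this hypothetical plain-C++ sketch, while a montage is active its clip overrides the pose coming from the state machine, and when its length has elapsed it stops and the looping state machine pose shows again. MontageSlot, the clip names and the lengths are invented for illustration.

```cpp
#include <string>

// Hypothetical one-shot overlay on top of a looping state machine pose (not engine code).
class MontageSlot {
public:
    // Equivalent of Montage Play: start the clip and remember how long it lasts.
    void Play(const std::string& Clip, float Length) {
        Active = Clip;
        Remaining = Length;
    }

    // Equivalent of Montage Stop.
    void Stop() {
        Active.clear();
        Remaining = 0.0f;
    }

    // Equivalent of the Delay node: stop once the clip's length has elapsed.
    void Advance(float DeltaSeconds) {
        if (Active.empty()) return;
        Remaining -= DeltaSeconds;
        if (Remaining <= 0.0f) Stop();
    }

    // The montage wins while it plays; otherwise the state machine's pose is used.
    std::string CurrentPose(const std::string& StateMachinePose) const {
        return Active.empty() ? StateMachinePose : Active;
    }

private:
    std::string Active;
    float Remaining = 0.0f;
};
```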

Nested Prefabs Problem

A big problem in Unity 5.5, the version used here, is nested prefabs. In case the problem is not familiar to you, consider an example.

Suppose a "table with laptop" object was saved as prefab table1, and then we needed a second, similar object, but with a green laptop screen. We create a new prefab, table2, drag the old laptop into it, change the screen color to green and save. As a result, table2 becomes a completely new object with no reference to the original. If we change the source prefab, the second prefab is not affected at all. This is the simplest case, yet even it is not supported by the engine.

In Unreal, thanks to Blueprint inheritance, this problem does not exist: changes to the parent Blueprint propagate to all Blueprints derived from it. This is useful not only for game objects, characters, logic or even static objects in the scene, but also for the UI system.
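The difference between the two workflows can be modelled in a few lines of plain C++. In this hypothetical sketch, a Blueprint-style class stores only the properties it overrides and asks its parent for everything else, while the Unity-style prefab is a flat copy taken at one moment. ClassDefaults and the property names are invented and follow the table-and-laptop example; this is not how either engine stores its data.

```cpp
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical class defaults: local overrides plus an optional parent to fall back on.
class ClassDefaults {
public:
    explicit ClassDefaults(std::shared_ptr<const ClassDefaults> InParent = nullptr)
        : Parent(std::move(InParent)) {}

    void Set(const std::string& Key, const std::string& Value) { Overrides[Key] = Value; }

    // Look up locally first, then walk up the inheritance chain.
    std::string Get(const std::string& Key) const {
        auto It = Overrides.find(Key);
        if (It != Overrides.end()) return It->second;
        return Parent ? Parent->Get(Key) : std::string();
    }

private:
    std::shared_ptr<const ClassDefaults> Parent;
    std::map<std::string, std::string> Overrides;
};
```

A child created with the parent pointer picks up later edits to table1, while a plain copy of table1 keeps the values it had at the moment it was made.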

On the other hand, you can try to work around this problem in Unity with assets from the Asset Store. There are plugins (engine extensions) called Nested Prefabs. There are several such systems, but they are somewhat hacky: they are built on top of the engine and have no official support. They try to preserve the internal state of objects: when the game scene starts, they try to restore internal structures, fields, properties, etc., delete outdated objects in the scene and replace them with instances from the prefabs. As a result, we get not only the convenience of nested prefabs, but also extra slowdowns, unnecessary data copying and object creation. And if something changes in the engine, these systems may stop working for unknown reasons.

UI systems

In Unity, you cannot usefully save window elements or widgets as prefabs. We can try, but the same prefab problem arises: the engine forgets about the old objects. So in Unity we often create controls, add scripts to them and then copy them without creating a prefab. If we need to add something new to such "widgets", the changes have to be repeated by hand.

In Unreal, we can save interface elements as widgets (Widget Blueprint) and quickly build new ones from existing controls. For example, we make a button and a label and call it our status bar widget. From standard and custom widgets, we can quickly and conveniently assemble interface windows. Widgets, too, are extended with Blueprints, so their behavior can be described in visual graphs.

In Unreal, interfaces and widgets are edited in a separate editor tab. In Unity, the interface is edited through a special Canvas object located directly in the 3D scene, which often gets in the way of editing the scene itself.

Advantages and disadvantages

For a beginner, Unity is much simpler: it has an established community and many ready-made solutions. You can extend the editor with scripts, add new menus, extend the Inspector, etc.

In Unreal, you can also write your own editor windows and tools, but this is harder: you need to create a plugin, which is a topic for a separate article. Unlike in Unity, you cannot just write a small script to add a useful button that extends the editor.

Among Unreal's advantages are visual programming, Blueprint inheritance, UI widgets, an animation system with many features and much more. In addition, Unreal Engine 4 has a whole set of classes and components designed for making games: the Gameplay Framework. It is part of the engine, and all project templates are built on it. The Gameplay Framework classes cover many needs, from describing game modes (Game Mode) and player state (Player State) to saving the game (Save Game) and controlling characters (Player Controller). Another distinctive feature of the engine is its advanced networking subsystem, with a dedicated server and the ability to run a networked game right in the editor.

Conclusion

We have compared Unity 5 and Unreal Engine 4 using specific examples and problems you may run into when you start working with Unreal. Some of the difficulties inherent in Unity are solved in Unreal Engine 4. Of course, a comprehensive review of these technologies is impossible in a single talk. Still, we hope this material helps you learn the engine.


Source: https://habr.com/ru/post/327520/
