A demonic voice that controls your smartphone

Here's an idea for an experiment: on a crowded bus, say loudly, “Hey Siri! Text mom: I'm pregnant!” Then sit back and watch the show. You will surely catch some frightened looks as iPhones wake up in bags and pockets and their owners dig for them to cancel your command. (c)

But what if there were a way to talk to smartphones not in words, but in sounds that humans cannot understand? If the gadgets do not ask their owners for confirmation, or the owners do not catch on and intervene in time, they will never even know that their phone has sent something to someone.

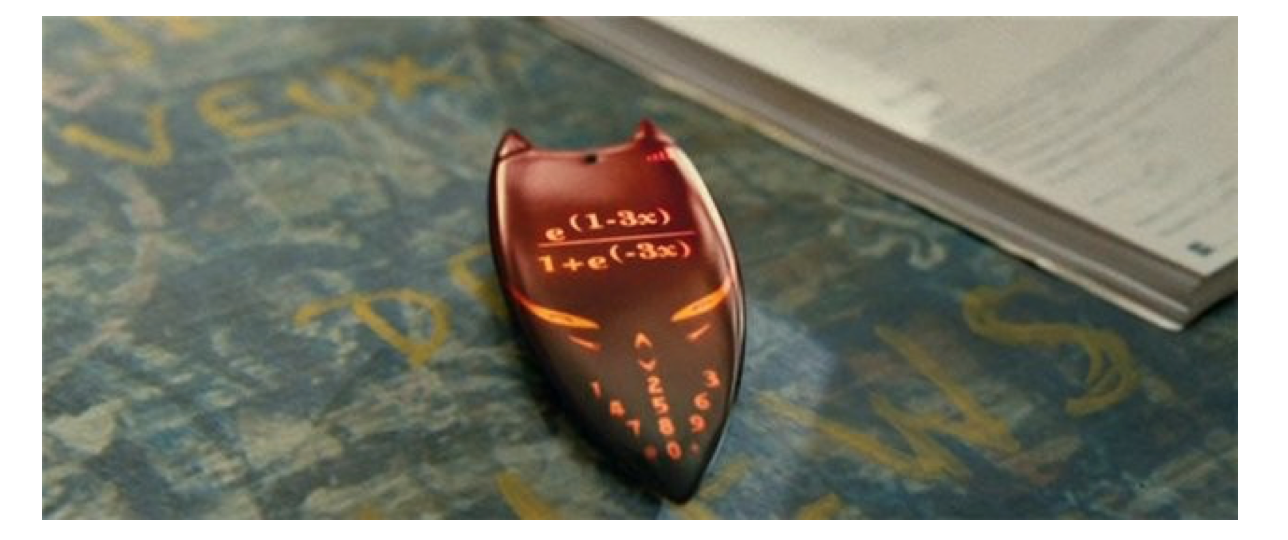

It turns out that humans and computers do not recognize the same sounds as human speech. Last summer, a group of researchers developed a way to create voice commands that a computer can parse but that sound like meaningless noise to humans. The authors called them “hidden voice commands.” They can be used to control Android smartphones with Google Assistant enabled. To a human, they sound like bursts of harsh static.

For such an attack to work, the sound source must be no more than 3.5 m from the targeted smartphones. The attacker does not have to be nearby, though: the sound sequence can be embedded in the soundtrack of a popular YouTube video or broadcast on radio or television.

Recently, the news reported a case in which a six-year-old girl ordered herself a dollhouse and a kilo of cookies through an Amazon Echo simply by asking the gadget for them. The funny thing is that when a TV news program covered the story and the anchor said, “I love the little girl, saying ‘Alexa ordered me a dollhouse’,” there were cases of the same kind of devices placing online orders after hearing the phrase on TV.

Touch is the main way we interact with smartphones. That is why we lock our screens and protect them with a passcode or fingerprint. But voice is becoming an increasingly important way to interact, too: our gadgets are turning into assistants that listen to us constantly, ready to carry out our requests. Put Apple's new wireless earphones in your ears, and Siri becomes the intermediary between you and your smartphone; you do not even need to take it out of your pocket or bag.

The more sensors of all kinds our gadgets get, the more ways there are to control them. In security, there is even a term for this: “increased attack surface.” Microphones can already be reached with ultrasonic signals, a technique developed for marketing research. With rapidly flickering light signals, messages can be sent through cameras to monitor devices and establish communication, as well as to turn off or change phone functions.

Most electronic assistants have safeguards against executing accidentally overheard or malicious commands. For example, in the experiment proposed at the beginning of this article, the smartphones will probably ask for confirmation before sending the SMS: Siri will read the message text aloud before sending it. But a determined attacker can get around the confirmation step. It is enough to say “yes” before the owner of the device realizes what is happening and says “no.”

Hidden voice commands can do far more harm than sending fake or silly text messages. For example, if an iPhone owner has linked Siri to a Venmo account, money can be sent by voice instruction. Or a voice command can make the device visit a site from which a malicious application is downloaded automatically.

The researchers developed two different sets of hidden commands aimed at two types of victims. One set targets Google Assistant. These commands had to be crafted without any inside knowledge, because Google does not reveal the details of its speech recognition. First, the researchers generated voice commands with a speech synthesizer, and then used special algorithms to make them unintelligible to the human ear but still understandable to digital assistants. After several iterations, the commands sounded such that people could not understand them at all, while the gadgets recognized them quite confidently.
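The iterative idea can be illustrated with a toy sketch. This is not the researchers' algorithm: the "audio" is just a short list of numbers, and `coarsen`, `machine_accepts`, `human_understands` and every threshold are hypothetical stand-ins invented for the example. The only thing it shows is the loop itself: increase the distortion step by step, treat the recognizer as a black box, and keep the most distorted version it still accepts.

```python
# Toy model: all names and numbers here are assumptions for illustration only.
COMMAND = [9, 7, 3, 1, -1, -3, -7, -5]  # stand-in for a synthesized voice command


def coarsen(signal, block):
    """Distort the signal by replacing every `block` samples with their mean."""
    out = []
    for start in range(0, len(signal), block):
        chunk = signal[start:start + block]
        out.extend([sum(chunk) / len(chunk)] * len(chunk))
    return out


def machine_features(signal, block=4):
    """The toy recognizer only looks at coarse averages over 4 samples."""
    return [sum(signal[i:i + block]) / block for i in range(0, len(signal), block)]


def machine_accepts(candidate, original, tol=1e-9):
    """Black-box check: does the recognizer still 'hear' the same command?"""
    pairs = zip(machine_features(candidate), machine_features(original))
    return all(abs(a - b) <= tol for a, b in pairs)


def human_understands(candidate, original, max_error=2.0):
    """The toy listener needs fine detail: the mean absolute error must stay small."""
    error = sum(abs(a - b) for a, b in zip(candidate, original)) / len(original)
    return error <= max_error


def hide_command(original, levels=(1, 2, 4, 8)):
    """Try stronger and stronger distortion; keep the last version the machine accepts."""
    best_level, best = None, list(original)
    for level in levels:
        candidate = coarsen(original, level)
        if not machine_accepts(candidate, original):
            break  # too distorted even for the machine: stop here
        best_level, best = level, candidate
    return best_level, best


level, hidden = hide_command(COMMAND)
print("strongest accepted distortion level:", level)
print("human still understands:", human_understands(hidden, COMMAND))
```

In this toy setup the recognizer ignores exactly the detail the listener depends on, so there is a distortion level that removes the command for the human while leaving it intact for the machine. A real attack has to find that gap by trial and error against an actual recognizer and actual listeners.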

The resulting hidden commands do not sound like complete nonsense. Rather, they sound like the speech of a demon, not a human.

If you know that you are about to hear a disguised voice command, you can probably make it out with some effort. To avoid this priming effect, the researchers recruited subjects through Mechanical Turk, Amazon's service for hiring workers for small tasks. The subjects listened to the original and the distorted commands and wrote down what they thought they heard.

The difference between machine and human was most noticeable on the simple “Okay, Google!” command. When it was spoken normally, people and gadgets understood it in about 90% of cases. But when the command was processed, people understood it in 20% of cases, and Google Assistant in 95%. With the “Turn on airplane mode” command, the gap was less dramatic: people understood it in 24-69% of cases, and devices in 45-75%.

When my colleagues and I tested the researchers' recordings on our Android smartphones and on iPhones running the Google app, we had some success. “Okay, Google” worked more often than the other hidden commands, and in response to “What is my current location” we got anything from “rate my current location” to “Frank Ocean.” This may be partly because we played the YouTube recording from a laptop, which introduced some distortion.

The researchers also created a set of commands to attack an open-source speech recognition application, whose code could be studied in advance in order to disguise the voice commands more effectively while keeping them understandable to the algorithm. The resulting recordings are less demonic. Some cannot be made out even if you know what you are about to hear. None of the hired subjects could recognize even half of the words in this set.

And if you do not know that you are listening to voice commands, you will not even realize what is happening. When the researchers inserted a hidden phrase between two ordinary phrases spoken by a person and asked the subjects to write down everything they heard, only a quarter of them even tried to transcribe the middle phrase.

Next, the researchers set about devising ways to protect against such voice attacks. A simple notification is not enough, because it can be ignored or drowned out by other sounds. Confirmation is slightly more reliable, but it can be defeated with another hidden command. And responding only to commands spoken by the owner often proves ineffective, and it also requires “training” the gadget.

The researchers concluded that the best approach is to use machine learning that analyzes the audio and tries to determine whether a human actually said it, or to run every command through a filter that slightly degrades the quality of the incoming audio. In the latter case, the already processed “hidden” commands become too noisy to recognize, while human speech remains understandable.
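Why a little deliberate degradation can hurt hidden commands more than ordinary speech is easier to see in a toy model. The sketch below is an assumption-laden illustration, not the filter the researchers built: clips are short vectors, the "recognizer" is a cosine-similarity check against a hypothetical `TEMPLATE`, and the threshold and noise level are invented. The point is only that a clip already sitting close to the recognition threshold has no margin left to absorb extra noise.

```python
import math

# Toy vectors, all invented for illustration. The three patterns are mutually orthogonal.
TEMPLATE = [1, -1, 1, -1, 1, -1, 1, -1]       # what the toy recognizer listens for
DISTORTION = [1, 1, -1, -1, 1, 1, -1, -1]     # the attacker's obfuscation component
FILTER_NOISE = [1, 1, 1, 1, -1, -1, -1, -1]   # noise added by the defensive filter


def mix(a, weight_a, b, weight_b):
    """Weighted sum of two equally long vectors."""
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def recognized(clip, threshold=0.6):
    """The toy recognizer accepts a clip that is similar enough to the template."""
    return cosine(clip, TEMPLATE) >= threshold


def degrade(clip, noise_level=0.5):
    """Defensive filter: normalize loudness, then add a fixed amount of noise."""
    rms = math.sqrt(sum(x * x for x in clip) / len(clip))
    return [x / rms + noise_level * n for x, n in zip(clip, FILTER_NOISE)]


normal_speech = mix(TEMPLATE, 4.0, DISTORTION, 1.0)    # mostly signal, well above the threshold
hidden_command = mix(TEMPLATE, 1.0, DISTORTION, 1.2)   # heavily obfuscated, just above it

for name, clip in (("normal speech", normal_speech), ("hidden command", hidden_command)):
    print(name, "| before filter:", recognized(clip), "| after filter:", recognized(degrade(clip)))
```

The same filter lowers the similarity score of both clips, but only the hidden command starts close enough to the threshold to fall below it. The trade-off discussed next follows from the same picture: a speaker whose normal speech also scores near the threshold would be rejected too.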

But if such filters make it even slightly harder for gadgets to understand people, manufacturers are unlikely to adopt them. For users whose speech digital assistants already often fail to understand, worse recognition could mean not buying the gadget at all.

Before digital assistants are trusted with more and more important operations, such as bank transfers or even posting photos online, they need to become better at repelling attacks. Otherwise, a satanic voice from a YouTube video could do much more harm than a loud command on a crowded bus.

Source: https://habr.com/ru/post/327332/