Complexity at the edge of chaos, or what sex, neural networks, microservices, and company organization have in common

We use the concept of complexity all the time and struggle with it, yet we keep building ever more ordered structures, reducing entropy and asserting ourselves in the process. At the same time we must be ready for change; we must be adaptive. Where is the balance point? What lies behind all these concepts? Perhaps something unites them all, hidden from our eyes while being constantly in plain sight.

Let us start with small steps and try to uncover the deep structures that underlie complex systems. To begin, we turn to the strikingly beautiful, and to some extent revolutionary, theory of NK automata by S. Kauffman.

NK automata, or "life at the edge of chaos"

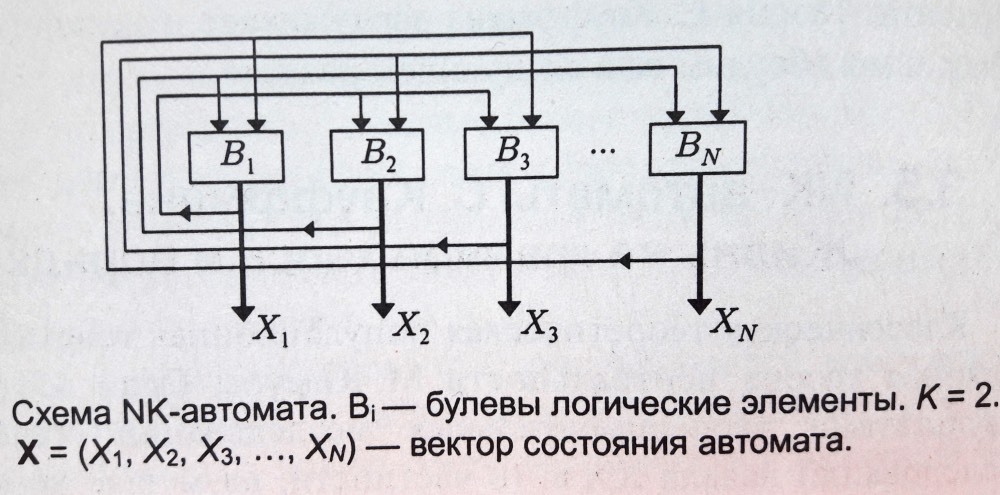

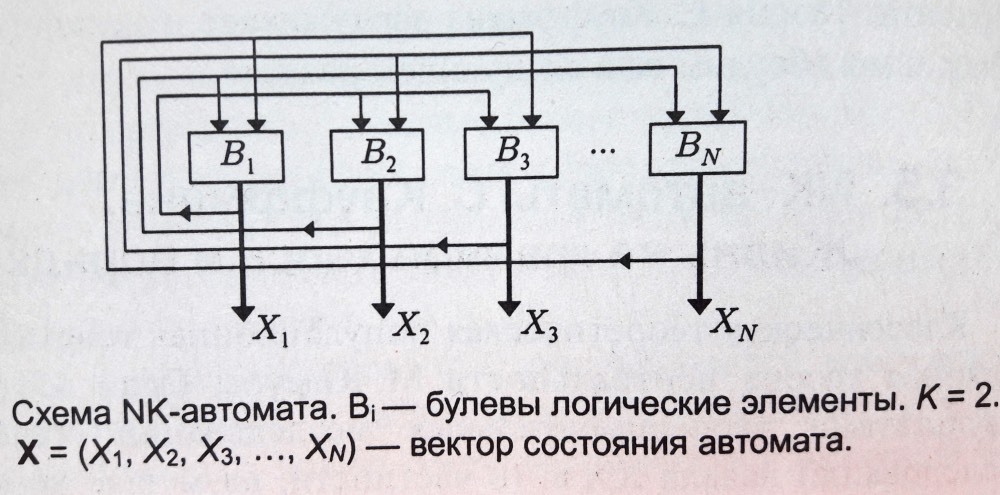

An NK automaton (see figure) is a network of N Boolean logic elements. Each element has K inputs and one output. The signals at the inputs and outputs are binary, that is, they take the value 0 or 1. The outputs of some elements feed the inputs of others; these connections are random, but the number of inputs K per element is fixed. The logic elements themselves are also chosen at random. The system is completely autonomous: it has no external inputs. The number of elements in the automaton is assumed to be large, N >> 1.

The automaton operates in discrete time t = 1, 2, 3, ... Its state at each instant is given by the vector X(t), the set of output signals of all logic elements. As it runs, the sequence of states converges to an attractor, a limit cycle. The sequence of states X(t) in this attractor can be regarded as the program by which the automaton functions. The number of attractors M and the typical attractor length L are important characteristics of NK automata.
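The definition above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the function names (`make_network`, `step`, `find_attractor`) are invented here, N is kept tiny so that the search for a repeated state finishes quickly, all elements are updated synchronously, and an element may draw the same input twice or be wired to itself.

```python
import random

def make_network(n, k, rng):
    """Build a random NK automaton: wiring and truth tables are chosen at random."""
    # inputs[i] lists the K elements whose outputs feed element i.
    inputs = [[rng.randrange(n) for _ in range(k)] for _ in range(n)]
    # tables[i] is a random Boolean function of K inputs, stored as 2**K output bits.
    tables = [[rng.randrange(2) for _ in range(2 ** k)] for _ in range(n)]
    return inputs, tables

def step(state, inputs, tables):
    """Compute X(t+1) from X(t) by updating all elements synchronously."""
    new_state = []
    for wires, table in zip(inputs, tables):
        index = 0
        for src in wires:
            index = (index << 1) | state[src]  # pack the K input bits into a table index
        new_state.append(table[index])
    return tuple(new_state)

def find_attractor(state, inputs, tables):
    """Iterate until a state repeats; return (transient length, cycle length L)."""
    seen = {}
    t = 0
    while state not in seen:
        seen[state] = t
        state = step(state, inputs, tables)
        t += 1
    return seen[state], t - seen[state]

rng = random.Random(0)
n, k = 12, 2
inputs, tables = make_network(n, k, rng)
start = tuple(rng.randrange(2) for _ in range(n))
transient, cycle_length = find_attractor(start, inputs, tables)
print(transient, cycle_length)
```

Because the state space is finite (2^N states) and the update rule is deterministic, every trajectory must eventually revisit a state, which is why the loop always terminates on a limit cycle.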

The behavior of the automata depends substantially on the connectivity K.

For large K (K = N), that is, when the number of inputs per element equals or nearly equals the number of elements, the "life" of the automata is stochastic: successive states in an attractor differ radically from one another. The programs are very sensitive (they change significantly) both to minimal disturbances (a random change of one component of the output vector X(t) while the automaton is running) and to minimal mutations (a change in the type of an element or in the connections between elements). The attractors are very long: L ~ 2^(N/2).

The number of attractors M is of order N. If the connectivity K decreases, that is, if the number of inputs per Boolean element goes down, the stochastic behavior persists until the connectivity reaches K ~ 2.

At K ~ 2 the behavior of the automata changes radically. Sensitivity to minimal disturbances is weak. Mutations, as a rule, cause only small variations in the dynamics of the automata. Only a few rare mutations cause radical, cascading shifts in the automata's “programs”. The attractor length L and the number of attractors M are both of the order of the square root of N.

This is behavior at the edge of chaos, on the dividing line between order and chaos.
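One way to probe the sensitivity to minimal disturbances described above is a small "damage spreading" experiment: run two copies of the same random network from states that differ in a single bit and measure how far apart they end up. The sketch below is a rough illustration, not Kauffman's procedure; `random_network`, `step`, `mean_damage`, and all parameter values are assumptions chosen for the example.

```python
import random

def random_network(n, k, rng):
    """Random wiring and random Boolean functions for N elements with K inputs each."""
    inputs = [[rng.randrange(n) for _ in range(k)] for _ in range(n)]
    tables = [[rng.randrange(2) for _ in range(2 ** k)] for _ in range(n)]
    return inputs, tables

def step(state, inputs, tables):
    """Synchronous update of all elements."""
    out = []
    for wires, table in zip(inputs, tables):
        index = 0
        for src in wires:
            index = (index << 1) | state[src]
        out.append(table[index])
    return out

def mean_damage(n, k, steps, trials, seed=0):
    """Average fraction of elements that differ after flipping one bit of the start state."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        inputs, tables = random_network(n, k, rng)
        a = [rng.randrange(2) for _ in range(n)]
        b = list(a)
        b[rng.randrange(n)] ^= 1  # minimal disturbance: flip a single component
        for _ in range(steps):
            a = step(a, inputs, tables)
            b = step(b, inputs, tables)
        total += sum(x != y for x, y in zip(a, b)) / n
    return total / trials

for k in (1, 2, 5):
    print(k, mean_damage(n=200, k=k, steps=30, trials=20))
```

The returned value is a fraction between 0 and 1. Comparing it across several values of K gives a crude picture of how connectivity affects the spread of a single-bit disturbance.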

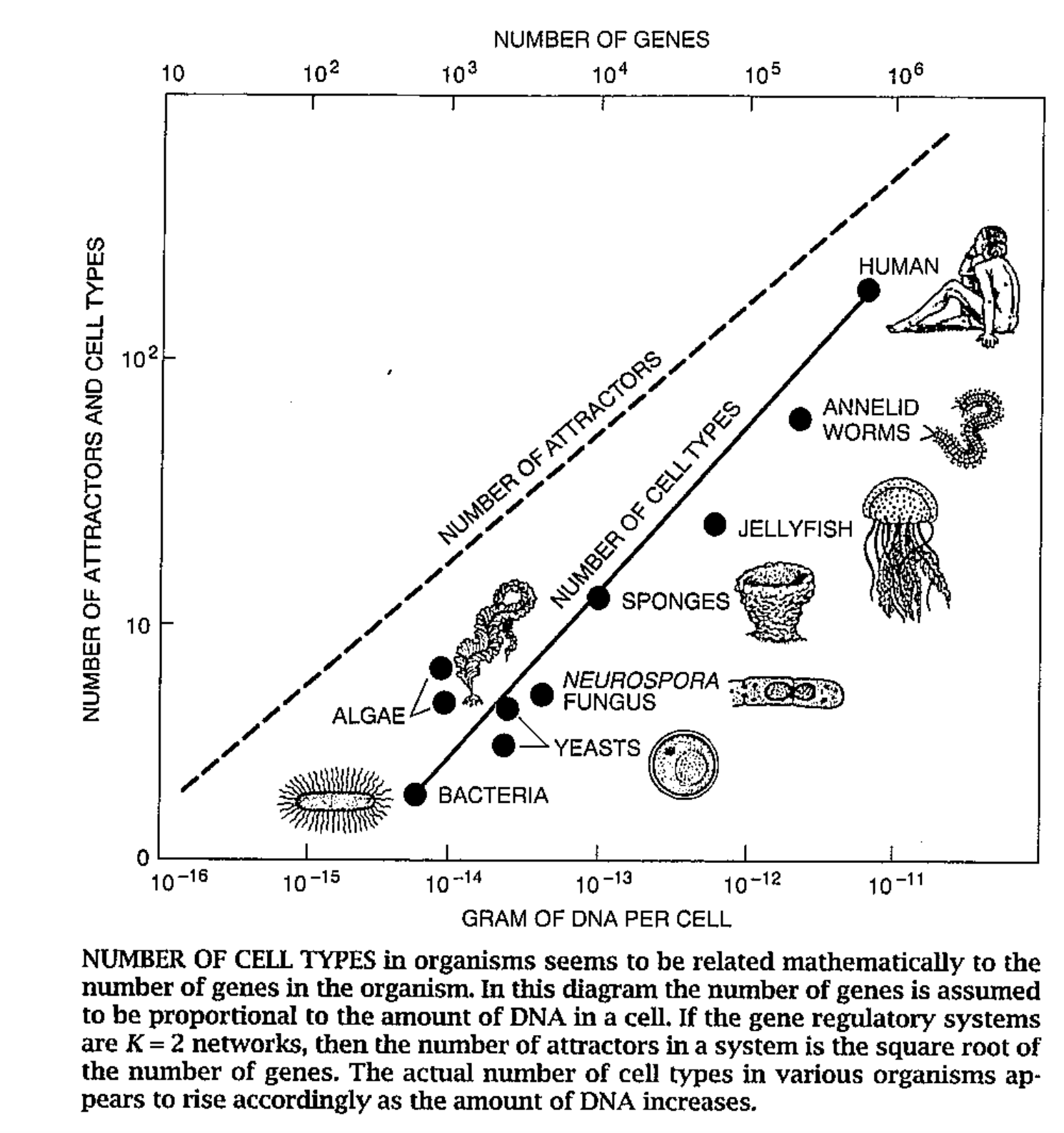

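An NK automaton can be regarded as a model of the regulatory and control genetic systems of living cells. For example, if we treat protein synthesis (gene expression) as regulated by other proteins, we can approximate the regulation of a single gene's expression by a Boolean element, so that the cell's complete network of molecular-genetic regulation and control can be represented by an NK automaton.

S. A. Kauffman argues that the case K ~ 2 is suitable for modeling the regulatory systems of biological cells, especially in an evolutionary context. The main points of this argument are as follows:

- Genetic control systems that exist at the edge of chaos provide both the necessary stability and the potential for progressive evolutionary improvement. That is, if you are too stable, you go extinct when external conditions change; if, on the contrary, you change too often, you also go extinct, because you cannot retain an optimal set of genes;

- A typical scheme of cellular gene regulation involves a small number of inputs (influences) from other genes, consistent with K ~ 2;

- If we compare the number of attractors M of an automaton at K = 2 (calculated for a given number of genes N) with the number of different cell types (nerve, muscle, and so on) in biological organisms at different levels of evolution, we find similar values. For example, for a human (N ~ 100 000): M = 370, number of cell types = 254.

Since regulatory structures “at the edge of chaos” (K ~ 2) provide both stability and the capacity for evolutionary improvement, they may supply the necessary conditions for the evolution of genetic cybernetic systems; in other words, these structures have the “ability to evolve”. It seems plausible that regulatory and control systems of this type were selected in the early stages of life, and that this, in turn, made further progressive evolution possible.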

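→ At Home in the Universe: Self-Organization and Complexity

Sex as an evolutionary algorithm

If we move a little further, we are bound to ask why there are two sexes in nature. After all, in theory, a simple evolutionary algorithm that performs random mutations and selects the best according to some criterion will sooner or later reach the optimum fitness. Quite natural questions arise here:

- What is the role of sex in evolution? Reproduction with recombination is almost ubiquitous in life (even bacteria exchange genetic material), while asexual species appear to be rare evolutionary dead ends. What gives sex such an advantage? (What advantage sex as such gives, we all know :)

- What exactly does evolution optimize?

- The paradox of variation. Genetic diversity in humans and in other species is much higher than the theory of evolution (natural selection) predicts. And even if we take into account that some of this variation is neutral, a problem still arises.

How do we find the right balance between adaptability and stability?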

Sex is almost universal in life: it is found in animals and plants, in fungi and bacteria. True, many species reproduce asexually, for example by budding, at some stage of their lives, but they also reproduce sexually at other times, which ensures that their genes get shuffled.

Not only is sex present everywhere, it also appears to be central to life as such. It is enough to look at the whole diversity of behaviors and structures: from bacterial conjugation to the intricate molecular machinery of meiosis (the process by which the genetic apparatus recombines), from countless shades of color to dances and captivating birdsong, from fights to the drama of human relationships, a great deal in life revolves around sex. So why? What role can sex play in evolution?

One fairly common answer is that sex generates a great deal of diversity and thereby helps evolution. But just as sexual reproduction assembles genetic combinations, it also breaks them apart. A very successful genotype can be broken up and lost in the next generation, since each descendant receives only half of its genes. So to assert that the role of sex is to create highly adapted genetic combinations is like watching a fisherman catch a fish only to throw it back into the sea, and concluding that he wants to put food on his family's table.

Sexual reproduction means taking half of the genes from one parent and half from the other, adding a very small amount of mutation, and combining all of this to produce offspring. The asexual alternative is to create offspring as slightly mutated copies of the parental genotype. It seems plausible that asexual reproduction is the better way to optimize individual fitness, because a good set of genes that has proven itself can be passed directly to the offspring. Sexual reproduction, on the other hand, will probably break up such a well co-adapted set of genes, especially if the set is large. Intuitively, this should reduce the fitness of organisms that have already evolved complex co-adaptations.
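The two modes of reproduction can be written down as operators of a toy evolutionary algorithm. The sketch below makes several assumptions for the sake of illustration: a genotype is a list of bits, mutation flips each bit with a small probability, and "half from each parent" is modelled as picking every gene from either parent with equal probability (so the split is half and half only on average). The function names are hypothetical.

```python
import random

def mutate(genotype, rate, rng):
    """Flip each gene independently with a small probability."""
    return [g ^ 1 if rng.random() < rate else g for g in genotype]

def asexual_offspring(parent, rate, rng):
    """A slightly mutated copy of a single parent."""
    return mutate(parent, rate, rng)

def sexual_offspring(mother, father, rate, rng):
    """Take each gene from one parent or the other with equal probability, then mutate."""
    child = [m if rng.random() < 0.5 else f for m, f in zip(mother, father)]
    return mutate(child, rate, rng)

rng = random.Random(0)
mother = [1] * 10
father = [0] * 10
print(asexual_offspring(mother, 0.01, rng))
print(sexual_offspring(mother, father, 0.01, rng))
```

With these operators the asymmetry in the text is easy to see: the asexual child keeps the parent's gene combination almost intact, while the sexual child is a fresh mixture in which any combination spanning many genes is unlikely to survive as a whole.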

One possible explanation for the superiority of sexual reproduction, and in the overall context the most likely one, is that in the long run the criterion of natural selection may be not individual fitness but rather the ability of genes to cooperate with one another (their mixability). The ability of a set of genes to work with another, random set of genes makes them more robust. Since a gene cannot rely on a large set of partners always being present, it must learn to do something useful on its own or in collaboration with a small number of other genes. According to this theory, the role of sexual reproduction is not only to let a successful combination of genes spread through the population, but also to facilitate this process by reducing complex co-adaptations, which could lower a new gene's chances of improving an individual's fitness.

Richard Dawkins, in his controversial book The Selfish Gene, also argues, and argues convincingly, that the unit of evolution is neither the species nor the population but the gene, and that the lifespan of these units of evolution is measured not in hundreds or thousands of years but in tens of millions. We are nothing more than machines built by genes to achieve their goals, shocking as that may sound.

This theory of the role of sex in evolution appeared in 2010 in the work of A. Livnat and C. Papadimitriou, Sex, mixability and modularity.

An excellent overview can also be found in the article Sex as an Algorithm: The Theory of Evolution Under the Lens of Computation.

Neural Networks and DropOut

The theory described above influenced the training methods of neural networks. Neural networks are universal approximators: they can represent any continuous function to any required degree of accuracy. But since the data we collect is noisy, a perfect approximation leads to overfitting, that is, the network learns not only the data but also the noise with which the data was measured. Moreover, in high-dimensional spaces, on manifolds of high curvature, we cannot use Gaussian kernels to approximate neighborhoods of the manifold, and we need to find the sweet spot between overfitting and a correct approximation of the manifold on which the data lie. In other words, here we meet good old Occam's razor, in the form of the rule that the data should be explained with the minimum Vapnik-Chervonenkis dimension.

In machine learning theory, approaches that help us find general rules explaining the structure of our data are called regularizers. In neural networks these include, for example, L2 weight decay, L1 sparsity regularization, early stopping, and so on.
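For concreteness, the L2 and L1 regularizers mentioned above are usually written as penalty terms added to the training error. The notation here is an assumption made for illustration: E(w) stands for the error on the training data, w_i for the individual weights, and \lambda_1, \lambda_2 for the penalty strengths.

```latex
J(w) = E(w) + \frac{\lambda_2}{2} \sum_i w_i^2 + \lambda_1 \sum_i |w_i|
```

The gradient of the L2 term with respect to w_i is \lambda_2 w_i, so each update shrinks every weight in proportion to its size, hence the name weight decay. The L1 term pushes small weights all the way to zero, which is what produces sparsity.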

DropOut was motivated by the breaking of co-adaptations in genotypes. What is it?

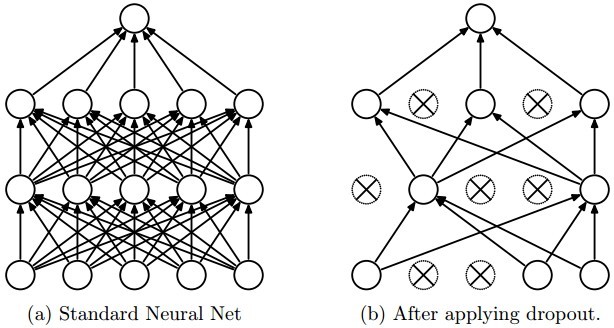

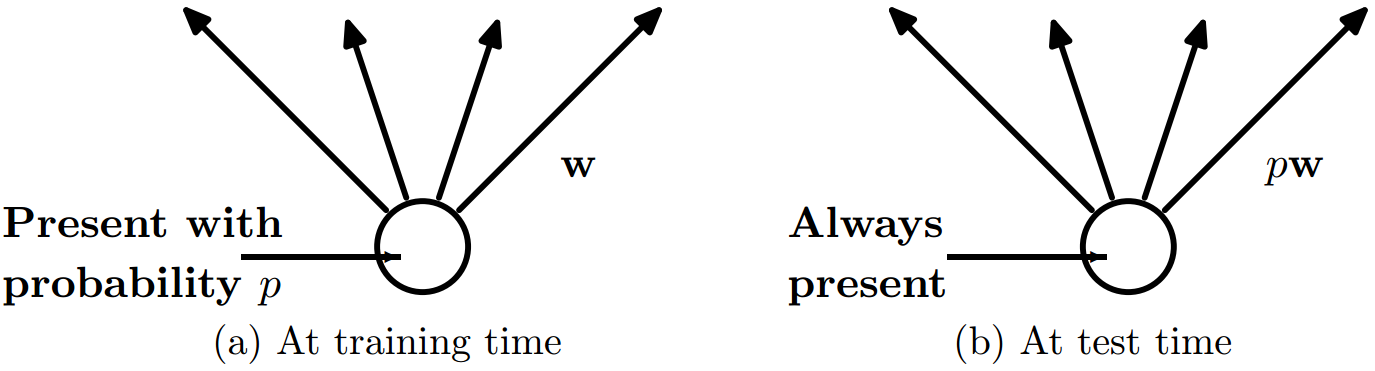

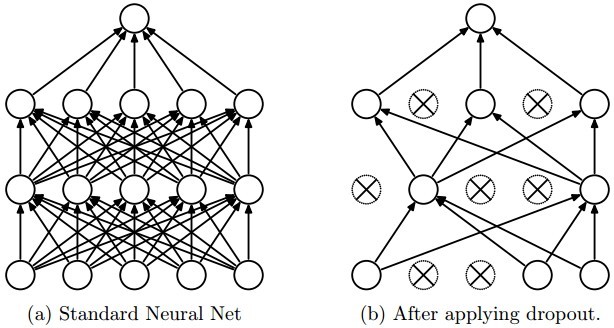

The term DropOut refers to dropping units (neurons), both visible and hidden. Dropping a unit means temporarily removing it from the network together with its incoming and outgoing connections (see the figure below).

On the left is a standard neural network. On the right is an example of the network after some of the neurons have been dropped; they are shown crossed out.

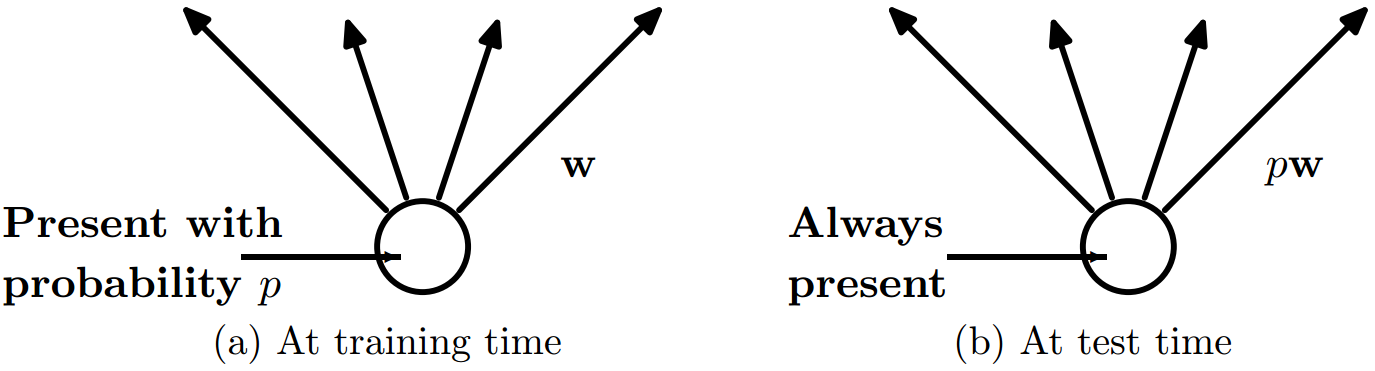

The choice of which unit to drop is random. In the simplest case, each unit is kept in the network with a fixed probability, independently of the others. Roughly speaking, before each pass through the network during training we flip a coin for every neuron to decide whether it stays in the network for that pass. After each pass, the process is repeated.

After training, the outgoing weights of every neuron are multiplied by the probability with which the neuron was kept during training, and as a result we obtain a weighted ensemble of models.
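The coin flip during training and the rescaling after training can be sketched for a single layer of activations. This is a minimal illustration in plain Python, not the reference implementation; `dropout_train`, `dropout_test`, and the sample values are invented for the example. Scaling a unit's activation by the keep probability at test time has the same effect on the next layer as multiplying its outgoing weights by that probability.

```python
import random

def dropout_train(activations, keep_prob, rng):
    """Training pass: keep each unit with probability keep_prob, zero it otherwise."""
    mask = [1 if rng.random() < keep_prob else 0 for _ in activations]
    return [a * m for a, m in zip(activations, mask)], mask

def dropout_test(activations, keep_prob):
    """Test pass: keep every unit, scaled by keep_prob to match the expected training output."""
    return [a * keep_prob for a in activations]

rng = random.Random(0)
hidden = [0.5, 1.0, 1.5, 2.0]
dropped, mask = dropout_train(hidden, keep_prob=0.5, rng=rng)
print(mask, dropped)
print(dropout_test(hidden, keep_prob=0.5))
```

A new mask is drawn on every training pass, so each pass trains a different thinned network that shares weights with all the others. The single scaled network used at test time approximates averaging over that ensemble.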

Thus, like a gene in a set of genes, every unit of a neural network trained with dropout must learn to work with a randomly chosen set of other units. This should make each neuron more robust and push its weights toward producing useful features that work on their own, without counting on other neurons to adjust to it and correct its errors. In this way potential co-adaptation between units is broken, which on the whole leads to stability and better generalization.

Complex co-adaptations can be learned and can perform well on the training data, but on new data they are much more likely to fail than small, simple co-adaptations that achieve the same goal.

→ Dropout: Prevent Neural Networks from Overfitting

Conspiracy Theory and Terrorism

The same idea of breaking co-adaptation, seen both in the role of sex and in dropout, also emerges when we think about successful conspiracies. Ten groups of five people each are a better way to sow confusion and panic than one big conspiracy that requires fifty people to play their roles exactly. If conditions do not change and there is plenty of time to prepare, large conspiracies can work well, but in unstable, rapidly changing conditions, the smaller the group of conspirators, the higher its chances of success. This is why a regular army has no chance of defeating partisans, short of putting everyone into concentration camps, and why a fight against terrorism waged with troops will never succeed. And if we now ask where else we find ourselves in non-stationary conditions, where the situation changes rapidly, we discover that this is the world of business in general, and of software development in particular.

Information System Development and Business

The history of business is full of examples of a giant, resting on its laurels, that failed to respond adequately to a changing environment and was crushed by a competitor that reacted quickly. The most vivid example is the story of Nokia. But I want to focus on the paradigm shift in the software industry and, as a consequence, the paradigm shift in organizations. Where business used to drive IT, IT now drives business.

Already at the dawn of programming it became clear that as code grows, it becomes harder and harder to manage. Changes become more and more difficult to make, and fixing some bugs sets off a cascade of others. Quite naturally we arrived at the concept of loosely coupled code (loose coupling), began to divide systems into layers of abstraction, and the object-oriented approach rose in our skies. Encapsulation and interfaces made it possible to break down the complexity of code, to remove co-adaptation, and to separate levels of responsibility. That was a big step. But this approach worked at the level of a single machine. The systems that were built were organized internally according to these principles, but from the outside they were monolithic applications: the very monoliths that everyone is now trying to abandon before it is too late.
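As a small illustration of how an interface removes co-adaptation between two pieces of code, consider the sketch below. All names in it (`PaymentGateway`, `FakeGateway`, `OrderService`) are hypothetical and chosen only for the example; the point is that `OrderService` knows nothing about its collaborator beyond the one method declared in the interface.

```python
from typing import Protocol

class PaymentGateway(Protocol):
    """The only thing OrderService is allowed to know about its collaborator."""
    def charge(self, amount_cents: int) -> bool: ...

class FakeGateway:
    """A stand-in implementation; a real one would call an external system."""
    def __init__(self) -> None:
        self.charged = []

    def charge(self, amount_cents: int) -> bool:
        self.charged.append(amount_cents)
        return True

class OrderService:
    """Depends only on the PaymentGateway interface, not on a concrete class."""
    def __init__(self, gateway: PaymentGateway) -> None:
        self._gateway = gateway

    def place_order(self, amount_cents: int) -> str:
        return "confirmed" if self._gateway.charge(amount_cents) else "rejected"

gateway = FakeGateway()
service = OrderService(gateway)
print(service.place_order(1999))
```

Any object with a matching `charge` method can be swapped in without touching `OrderService`, much as a gene or a neuron that does not depend on specific partners keeps working when its surroundings change.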

The monolithic approach to creating applications introduced its own requirements into the organizational process. Although many development teams were fairly agile , the overall process in the organization was linear. There was planning, there were analysts covering the general processes in the system, there were stages of reception. It was here and there, what is called waterscrumfall . Model of a waterfall around the edges and scrum in the middle.

Along with the growth of network speeds and information flows, it came to the realization that monolithic systems are unable to adequately respond to these challenges. We can say that the approaches of weak connectivity, design through interfaces and so on, we pulled the monolith from the inside out. Microservices are the most obvious example of this approach. We break a complex system into a large number of loosely coupled elements, each of which does a minimum of things maximum and tries to be as independent and isolated as possible. The same thing that sex brings genes, dropout - neural network, microservice architecture brings in the development of IT systems. Yes, microservices for IT organizations, it's like sex, sex for genes. Changes in business requirements can be very quickly adapted by small groups and units of functionality and design, without affecting other parts of the system.

Moreover, this approach inevitably leads to a change at the level of the organization, instead of a rigidly structured hierarchy, instead of a management tree and a chain of decision-making, a community of small groups appears, each of which stores deep knowledge in its subject area (and we don’t need so many analysts ). These small groups of developers can very quickly implement a huge amount of functionality in a very short time (API first approach). Thus, if a business wants to very quickly adapt to changes, or roll out new services, then the only approach to this is to divide co-adaptations at the enterprise level.

All this is already known.

But I wanted to show that this breaking up of complexity and co-adaptation is something that underlies our world, much like the concept of computability.

Tom Stuart aptly remarked that the concept of computability, which Turing originally described simply as “what a computer does”, has suddenly turned into something like a force of nature. It is tempting to think of computation as a sophisticated human invention that can only be carried out by specially designed systems with many complicated parts, but it turns out that quite simple systems can compute as well: Church's lambda calculus, Conway's cellular automata, the SKI and iota calculi, cyclic tag systems, Gödel's formal systems, and so on. That is, computation is not a sterile, artificial process that happens only inside a microprocessor, but rather a universal phenomenon that crops up unexpectedly in different places and in different forms. This is a purely Platonic idea: something that exists apart from our perceptual and mental apparatus, something that is true in the highest sense of the word.

Moreover, all of mathematics is not merely a notion of ours that we made up. On the contrary, mathematics is something more: we discover in it things that we never put there. Fractals are a vivid example. This is something that exists outside of us and apart from us; we are like captains in forgotten lands, discovering new shores.

I have tried to show that life “at the edge of chaos”, the mathematical ideas behind training neural networks, and the methods of software design and of managing organizations share something that runs through all of them and underlies them, as a necessary precondition for being adaptive and stable at the same time.

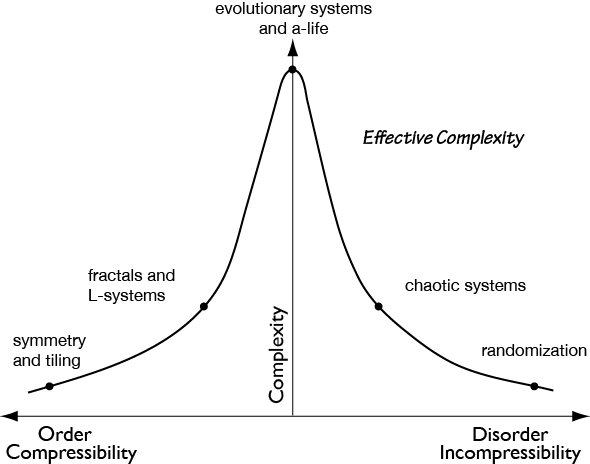

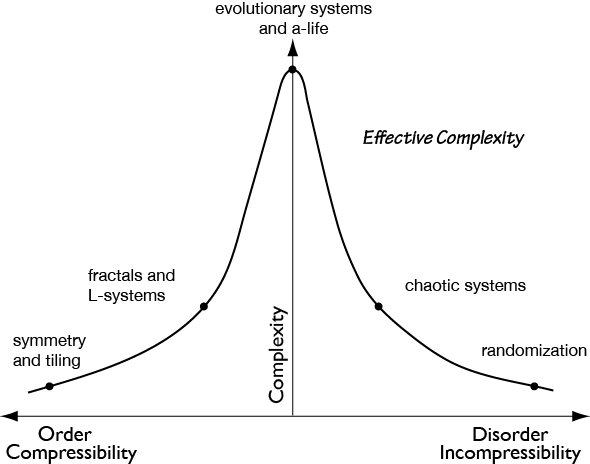

It turns out that in various areas of our lives we, like life itself, are trying to climb to the top of the mountain shown in the picture.

And finally, I want to recall Stanisław Lem's wonderful novel The Invincible. The writer, gifted with outstanding intellect and intuition, also grasped the concept I have tried to describe and brought it to a very convincing conclusion. Let me remind you.

Quotes from the book:

We start with small steps and try to uncover the deep structures that underlie complex systems, and, to begin with, turn to the amazingly beautiful, and to some extent revolutionary, theory of NK automata S. Kaufman.

NK machines or "life at the edge of chaos"

NK automaton (see figure) is a network of N Boolean logic elements. Each element has K inputs and one output. The signals at the inputs and outputs of binary elements, i.e. take the values either 0 or 1. The outputs of some elements arrive at the inputs of others, these connections are random, but the number of inputs K of each element is fixed. The logical elements themselves are also randomly selected. The system is completely autonomous, i.e. no external inputs. The number of elements included in the machine is assumed to be large, N >> 1.

')

The automaton functions in discrete time t = 1, 2, 3, ... The state of the automaton at each time instant is determined by the vector X (t) - the set of all output signals of all logic elements. In the course of its operation, the sequence of states converges to some kind of attractor , the limit cycle. The sequence of X (t) states in this attractor can be considered as a program for the functioning of an automaton. The number of attractors M and the typical length of the attractor L are important characteristics of NK automata.

The behavior of automata substantially depends on the connectivity of K.

For large K (K = N), i.e. the number of links is equal to or almost equal to the number of elements << life >> of automata is stochastic: the successive states of attractors are radically different from each other. Programs are very sensitive (change significantly) both with respect to minimal disturbances (random variation of one of the components of the output vector X (t) during the operation of the automaton) and minimal mutations (changing the type of element or the relationship between elements). The length of the attractors is very large L ~ 2 to the power of N / 2.

The number of attractors M is of order N. If the number of connections K decreases, that is, the number of inputs per Boolean element decreases, then the stochastic behavior is still observed until the connectivity measure becomes K ~ 2

When K ~ 2, the behavior of automata changes radically. Sensitivity to minimal disturbances is weak. Mutations, changes in the inputs, as a rule, cause only small variations in the dynamics of automata. Only some rare mutations cause radical, cascading shifts of “programs” of automata. The length of the attractor L and the number of attractors M of the order of the square root of N.

This behavior is on the edge of chaos . On the dividing line between order and chaos.

NK automaton can be considered as a model of regulatory and controlling genetic systems in living cells. For example, if we consider protein synthesis (gene expression) as regulated by other proteins, then we can approximate this regulatory gene expression scheme with a Boolean element, thus the complete network of molecular genetic control and management of a cell can be represented by a network of NK automata.

S.A. Kaufman argues that the case of K ~ 2 is suitable for modeling regulatory systems of biological cellular mechanisms, especially in an evolutionary context. The main points of this argument are as follows:

- Genetic control systems that exist on the edge of chaos provide both the necessary stability and the potential for progressive evolutionary improvements. Those. if you are stable, then when you change the external conditions, you will become extinct. If you are on the contrary, you often change, then you will also become extinct, because you will not save the optimal set of genes;

- A typical scheme for cellular regulation of genes includes a small number of inputs (effects) from other genes, in accordance with the value of K ~ 2;

- If we compare the number of attractors of the automaton M at K = 2 (calculated for a given number of N genes) with the number of different cell types (nerve, muscle, etc.) in biological organisms at different levels of evolution, we will find similar values; For example, for a person (N ~ 100 000): M = 370, the number of cell types = 254

Since regulatory structures “on the edge of chaos” (K ~ 2) provide both stability and evolutionary improvements, they can provide the necessary conditions for the evolution of genetic cyber systems, since these structures have an “ability to evolve”. It seems plausible that this type of regulatory and control systems were chosen in the early stages of life, and this, in turn, made further progressive evolution possible.

→ At-home in the Universe: Self-Organization and Complexity

Sex as an evolutionary algorithm

If we start moving a little further, then we will be asked why there are two sexes in nature? After all, in theory, if we take a simple evolutionary algorithm that performs random mutations and selects the best, according to some criterion, then sooner or later the optimum fitness will be achieved. Here are quite natural questions that arise before us:

- What is the role of sex in evolution? Reproduction with recombination is almost ubiquitous in life (even bacteria exchange genetic material), while asexual species seem to be rare evolutionary dead ends. What gives the floor such an advantage? What gives the advantage of sex as such, we all know :)

- What specifically optimizes evolution?

- Paradox of variation. Genetic diversity in humans, and other species, is much higher than predicted by the theory of evolution (natural selection). And if we take into account the fact that to some extent this variation is neutral, then again a problem arises.

How to find the right balance between adaptability and stability?

Sex is almost universal in life: it is everywhere - in animals and plants, fungi, bacteria. Yes, many species are involved in asexual reproduction, or budding, at some time in their lives, but they also have sexual reproduction at other points in time, thereby ensuring that their genes will be shuffled.

Not only sex is present everywhere, but apparently, it is a very central point of life, as such. It is enough to look at all the diversity of behaviors and structures, from the connection of bacteria to the intensive molecular engineering of meiosis (the recombination process of the genetic apparatus), from countless shades of colors, to dance and fascinating birds singing, from battles to the drama of human relationships, very much revolves around sex in life . So why? What role can sex play in evolution?

One fairly general answer is that sex generates enough diversity, and thus, this should help evolution. But just as sexual reproduction collects genetic combinations, it also breaks them. A very successful genotype can be broken down and lost in the next generation, since the descendant has acquired only half of the genes. Thus, to assert that the role of sex is to create very adapted genetic combinations, it’s like watching a fisherman fishing only to throw her back into the sea, and conclude from this that he wants to bring food to the table my family.

Sexual reproduction is the incorporation of half of the genes of one parent, and half of the other, the addition of very small mutation values and a combination of all of this, to get descendants. The asexual alternative is the creation of descendants from small mutated copies of the parental genotype. It seems plausible that asexual reproduction is the best way to optimize individual fitness, because a good set of genes that have shown their excellent work can be transferred directly to offspring. On the other hand, sexual reproduction will probably break this set of genes with good co-adaptation. Especially if this set of genes is big! Intuitively, it is clear to us that this should reduce the fitness of organisms that have already developed complex co-adaptation in the process of evolution.

One of the possible, and taken in the general context, the most likely explanation for the superiority of sexual reproduction is that in the long run, the criterion for natural selection may not be individual fitness, but rather the possibility of independent collaboration of genes. The ability of a set of genes to work with another random set of genes makes them more resilient. Since the gene cannot rely on a large set of partners that will always be present nearby, it must learn something useful on its own, or in collaboration with a small number of other genes. According to this theory, the role of sexual reproduction is not only to allow a successful combination of genes to spread throughout the population, but also to facilitate this process by reducing complex co-adaptations that can reduce the chance of a new gene in improving the individual's fitness.

Richard Dawkins, in his scandalous book, The Selfish Gene , also argues, and convincingly argues that the unit of evolution is not species and not populations, but gene paths, and the lifespan of these evolution units not hundreds and thousands of years, but tens of millions. We are nothing more than machines grown by genes to achieve our goals, even though it sounds shocking.

This theory of the role of sex in evolution appeared in the works of A. Livnat and K. Papadimitrou in 2010. Sex, mixability and modularity .

An excellent overview is also found in the Sex as an Algorithm: The Theory of Evolution Under the Lens of Computation article.

Neural Networks and DropOut

The aforementioned theory influenced the learning methods of neural networks. Neural networks are a universal approximator, and can accurately represent any function, with the necessary level of approximation. But since our collected data is noisy, the ideal approximation leads to overfitting, that is, the neural network learns not only the data, but also the noise with which this data was measured. Moreover, in spaces of high dimensionality on manifolds of high curvature, we cannot use Gaussian kernels to approximate the neighborhoods of our manifold, and we need to find a sweet-spot between overfitting and the correct approximation of the manifold on which the data lie. That is, here we encounter the good old Occam's razor in the form of a rule for explaining data with the minimum dimension of Vapnik-Chervoneskis.

In machine learning theory, approaches that help us find common rules explaining the structure of our data are called regularizers. In neural networks, for example, this may be the attenuation of the L2 scales, the regularizer of the L1 sparseness, early stop, etc.

DropOut was motivated by splitting co-adaptations in genotypes. What it is?

The term DropOut itself refers to the discarding of units (neurons), both visible and hidden. By discarding an item, it means its temporary exclusion from the network, both with input and output connections (see the figure below).

On the left is the standard neural network. On the right is an example of a network, after dropping part of the neurons, they are marked as crossed out.

The choice of which element to drop is random. In the simplest case, each element remains in the network with a certain fixed probability, independent of other elements. Roughly speaking, before each pass through the network, in the process of learning, we throw a coin and determine whether this neuron remains in the network for this passage, or not. After each pass, the process is repeated.

After training, all neuron weights are multiplied by the probability of their presence in the learning process, and as a result we get a weighted ensemble of models.

Thus, like a set of genes, every element in a neural network trained with the use of a dropout must learn to work with a randomly selected set of other elements - neurons. This should make each neuron more resilient, and move its weights towards creating useful traits that should work on their own, with no hope of adjustment and support from other neurons that would correct its errors. Thus, the possible co-adaptation of elements is broken, which leads in general to stability and generalization.

Complex co-adaptations can be trained and show good results on training data, but on new data they are much more likely to fail than small simple co-adaptations that tend to the same goal.

→ Dropout: Prevent Neural Networks from Overfitting

Conspiracy Theory and Terrorism

The same concept, breaking co-adaptation, both in the role of sex and in the application of dropout , can be inferred when thinking about successful conspiracies. Ten groups, each with 5 people, are a better way to create confusion and panic than one big conspiracy, which requires 50 people who must fulfill their roles exactly. If conditions do not change, and there is a lot of preparation time available, then large conspiracies can work well, but in unstable conditions and rapidly changing conditions, the smaller the group of conspirators, the higher their chances of success. That is why the regular army has no chance of defeating the partisans, unless we put everyone in a concentration camp, or the fight against terrorism, using troops, will never be successful. And then if we think about where else we find ourselves in non-stationary conditions, where the situation is changing rapidly, we will discover that this is the world of business in general, and of software development in particular.

Information System Development and Business

The history of business is full of examples when the giant, resting on its laurels, was not able to adequately respond to the changing environment, and was crushed by a competitor who quickly responded to changes. The most vivid example is the story of Nokia. But I want to focus on the paradigm shift in the software development industry, and, as a result, the paradigm shift in organizations. If before the business moved IT, now IT is moving the business.

Even at the dawn of programming, it became clear that as code grows, it becomes harder and harder to manage. Changes become more and more difficult, and fixing one error leads to a cascade of others. Quite naturally, we arrived at the concept of loosely coupled code (loose coupling), began to divide systems into layers of abstraction, and the object-oriented approach rose in our skies. Encapsulation and interfaces made it possible to break down the complexity of code, to remove co-adaptation and to separate levels of responsibility. That was a big step. But this approach worked at the level of a single machine. Systems were built internally according to the principles listed above, but from the outside they were monolithic applications. Those same monoliths that everyone is now trying to abandon before it is too late.
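
As a small illustration of how an interface removes co-adaptation between two pieces of code, consider the sketch below. All names in it (`Notifier`, `InMemoryNotifier`, `OrderService`) are hypothetical and chosen only for this example; the point is that the service depends on a contract rather than on a concrete partner.

```python
from typing import Protocol


class Notifier(Protocol):
    """The contract: anything with a matching send() method qualifies."""

    def send(self, recipient: str, message: str) -> None: ...


class InMemoryNotifier:
    """One interchangeable implementation; it just records messages."""

    def __init__(self) -> None:
        self.sent = []

    def send(self, recipient: str, message: str) -> None:
        self.sent.append((recipient, message))


class OrderService:
    """Knows only the Notifier contract, not any concrete class."""

    def __init__(self, notifier: Notifier) -> None:
        self._notifier = notifier

    def place_order(self, customer: str, item: str) -> str:
        confirmation = f"order accepted: {item}"
        self._notifier.send(customer, confirmation)
        return confirmation


notifier = InMemoryNotifier()
service = OrderService(notifier)
service.place_order("alice", "book")
print(notifier.sent)
```

Swapping `InMemoryNotifier` for an e-mail or queue-based implementation would not require touching `OrderService`, which is the code-level analogue of a neuron that cannot count on a particular neighbour.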

The monolithic approach to building applications imposed its own requirements on the organizational process. Although many development teams were fairly agile, the overall process in the organization was linear. There was planning, there were analysts covering the general processes in the system, there were acceptance stages. Almost everywhere it was what is called water-scrum-fall: a waterfall model around the edges and Scrum in the middle.

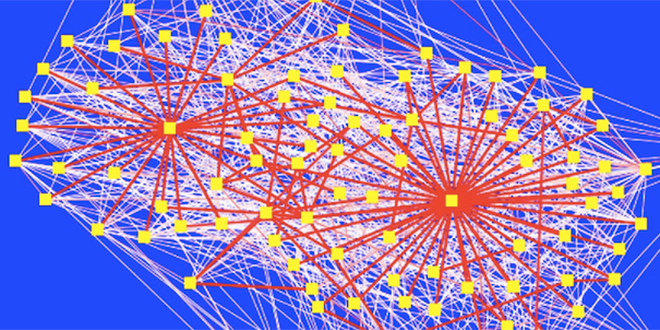

As network speeds and information flows grew, it became clear that monolithic systems cannot respond adequately to these challenges. We can say that, with loose coupling, design through interfaces and so on, we turned the monolith inside out. Microservices are the most obvious example of this approach. We break a complex system into a large number of loosely coupled elements, each of which does as few things as possible, does them as well as possible, and tries to be as independent and isolated as possible. What sex brings to genes and dropout brings to neural networks, microservice architecture brings to the development of IT systems. Yes, microservices are to IT organizations what sex is to genes. Small groups, working on small units of functionality and design, can adapt to changes in business requirements very quickly without affecting other parts of the system.

Moreover, this approach inevitably leads to change at the level of the organization. Instead of a rigidly structured hierarchy, a management tree and a chain of decision-making, a community of small groups appears, each of which holds deep knowledge of its own subject area (and we no longer need so many analysts). These small groups of developers can implement a huge amount of functionality in a very short time (the API-first approach). Thus, if a business wants to adapt to change very quickly or roll out new services, the only way to do so is to break up co-adaptations at the enterprise level.

All this is already known.

But I wanted to show that this division of complexity and of co-adaptations is something that underlies our world, much like the concept of computability.

Tom Stuart remarked beautifully that the concept of computability, which Turing initially described simply as “what a computer does”, suddenly turned into something like a force of nature. It is tempting to think that computation is a sophisticated human invention that can be performed only by specially designed systems with many complex parts, yet quite simple systems can compute as well: Church's lambda calculus, Conway's cellular automata, the SKI and iota calculi, cyclic tag systems, Gödel's formal systems and so on. That is, computation is not a sterile, artificial process that occurs only inside a microprocessor, but rather a universal phenomenon that turns up unexpectedly in different places and in different ways. This is a purely Platonic idea: something that exists apart from our perceptual and mental apparatus, something that is true in the highest sense of the word.

Moreover, mathematics as a whole is not purely a notion of our own invention. On the contrary, mathematics is something more: we discover in it things that we never put there. Fractals are a vivid example. This is something that exists outside us and apart from us; we are like captains in forgotten lands, discovering new shores.

I have tried to show that life “on the border of chaos”, the mathematical concepts behind training neural networks, the methods of software design and the management of organizations share something in common, something that permeates and underlies them all as a necessary prerequisite for being adaptive and stable at the same time.

It turns out that in various areas of our life we, like life itself, are trying to climb to the top of the mountain that we see in the picture below.

Epilogue

And finally, I want to recall Stanisław Lem's wonderful novel The Invincible. The writer, who possessed outstanding intelligence and intuition, also grasped the concept I have tried to describe, and conveyed it very convincingly. Let me remind you of the plot.

The crew of the Invincible was tasked with finding traces of the missing Condor expedition and establishing the cause of its demise. The biologist Lauda comes up with the idea that the planet is home to an evolution of mechanisms left behind by an alien civilization that arrived from the Lyra constellation (the Lyrans).

Judging by the few traces that remained of the first robot colonies, there were several types of robots. The “mobile” ones were sophisticated, intelligent, armed robots powered by nuclear energy. The “simplest” ones were simple in construction, yet capable of limited adaptation. They were not as intelligent or as specialized as the “mobile” ones, but they did not depend on the availability of spare parts and radioactive fuel.

Deprived of the Lyrans' attention, the robots began to develop and change uncontrollably. Necroevolution ensured that the fittest survived, and the fittest turned out to be the simplest robots. The expeditions from Earth encountered their distant descendants, which look like flies.

Under the conditions of Regis III, the best adapted were not the most complex and intelligent, nor the most powerful, but the most numerous and flexible. Over thousands of years of necroevolution, these automata learned how to deal effectively with competitors that surpassed them both in intelligence and in available power. They had to fight not only other robots, but also the living world of the planet.

Quotes from the book:

“... here we are talking about a dead evolution of a very peculiar type, which arose under completely unusual conditions created by a coincidence of circumstances, <...> in this evolution the winners were, first, the devices that miniaturized most effectively and, second, the sessile ones that did not move. The first gave rise to these black clouds. I personally think that they are very small pseudo-insects which, when necessary, are able to unite, so to speak, into large systems, namely into these clouds. This was the evolution of the mobile mechanisms. The sessile ones gave rise to that strange kind of metallic vegetation we saw: the ruins of the so-called cities ...”

“It is still incredible that the creatures with the more advanced intelligence, these macro-automata, did not win out,” said one of the cyberneticists. “It would be an exception to the rule that evolution follows the path of complication, of improving homeostasis ... Questions of information, of its use ...”

“These machines had no chance precisely because they were so highly developed and complex from the very beginning,” said Zaurakhan. “You must understand: they were highly specialized for cooperation with their designers, the Lyrans, and when the Lyrans disappeared, they were as good as crippled, because they had lost their leadership. And the mechanisms from which the present flies evolved (I do not claim at all that they already existed then; I even think that is out of the question, they could only have formed much later) were relatively primitive, and therefore many paths of development lay open to them.”

“Perhaps there was an even more important factor,” Dr. Saks added, approaching the cyberneticists. “We are talking about mechanisms, and mechanisms never show the regenerative ability of a living creature, of living tissue, which restores itself when damaged. A macro-automaton, even if it is able to repair another automaton, needs tools for this, indeed a whole machine park. Therefore it was enough to cut them off from such tools, and they were disarmed.”

Source: https://habr.com/ru/post/327138/
