Looking for fast local storage

The text of this article is also available in English .

Not so long ago, we had to develop functionality for which you need to quickly and often dump large amounts of data onto a disk, and from time to time to read them from there. It was necessary to find where, how and with what help to store this data. In the article a brief analysis of the problem, research and comparison of solutions.

I work in a team that develops development tools for relational database developers (SqlServer, MySql, Oracle), among them both individual applications and those that are embedded in such a 32-bit dreadnought as Microsoft Management Studio.

')

Recover documents opened in the IDE at the time of closure at the next launch.

Quickly close the IDE before leaving home, not thinking about which documents are saved and which are not. The next time you start the environment, get the same environment, which was at the time of closing and continue working.

Save all the results of the developer at the time of the emergency shutdown: the fall of the program or operating system, power failure.

A similar feature is in browsers. There, from a technical point of view, they live a lot easier: you just need to save a pack of URL addresses, and even I rarely have more than a hundred tabs, the URL itself is on average only two hundred characters. Thus, we want to get a behavior similar to what is in the browser, but we need to store the entire contents of the document. It turns out that we need somewhere often and quickly save all user documents. Complicated the task and the fact that people sometimes work with SQL not like with other languages. If I, as a C # developer, write a class with more than a thousand lines of code, thenI will go to the forest in the trunk and in parts, this will cause many questions and discontent, in the SQL world, along with tiny queries for 10-20 lines, there are monstrous database dumps, which are very laborious to edit, which means users will want their edits to be safe.

After analyzing the problem, we formulated the following storage requirements:

The first frontal and clumsy option: keep everything in a folder, somewhere in AppData.

The obvious option is SQLite . Standard in the field of embedded databases. Very detailed and popular project.

The third was the base LiteDB . Google’s first answer to the question: “embedded database for .net”

File system - files are files. They will have to be followed, they will have to invent names. In addition to the contents of the file, you need to store a small set of properties (the original path on the disk, the connection string, the IDE version in which it was opened), which means you have to either create two files per document or come up with a format to separate the properties from the contents.

SQLite is a classic relational database. The base is represented by one file on the disk. The data scheme is rolled onto this file, after which it is necessary to interact with it using SQL tools. It will be possible to create two tables: one for properties, another for content, in case there are tasks where you need one without the other.

LiteDB is a non-relational database. As in SQLite, the database is represented by a single file. Fully written in C #. The appealing simplicity of use: you just need to give the object to the library, and it already takes on serialization.

Before giving the code, I’d better explain the general concept and give the comparison results.

The general idea was as follows: compare how many small files will be recorded in the database, the average number of medium-sized files and some very large files. The variant with average files is closest to the real one, and small and large files are borderline cases that also need to be taken into account.

I wrote to files via FileStream with standard buffer size.

In SQLite there was one nuance on which I consider it necessary to focus attention. We could not put all the contents of the document (I wrote above that they can be really large) in one cell of the database. The fact is that in order to optimize, we store the text of the document line by line, which means that in order to put the text in one cell, we would need to merge all the text into one line, rather than double, the amount of RAM used. The other side of the same problem would be obtained by reading the data from the database. Therefore, in SQLite there was a separate table where the data was stored line by line and were linked by a foreign key with a table where only the properties of the documents lay. In addition, it turned out to speed up the base a bit by inserting data in batches of several thousand lines in the synchronization mode OFF, without logging and within the framework of a single transaction (I caught this trick here and here ).

In LiteDB, an object was simply given, in which List <string> was one of the properties and the library itself saved it to disk.

Even during the development of the test application, I realized that I like LiteDB more, the fact is that the test code for SQLite took more than 120 lines, and the code solving the same problem for LiteDB is less than 20.

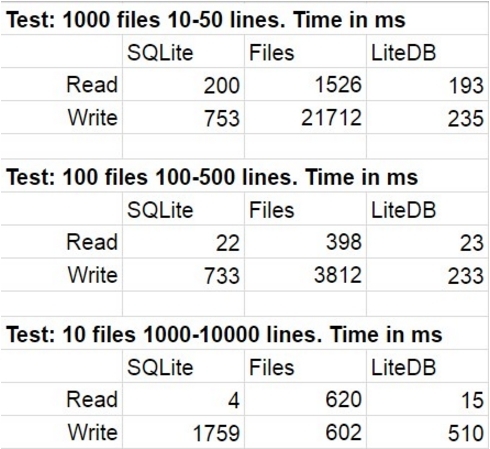

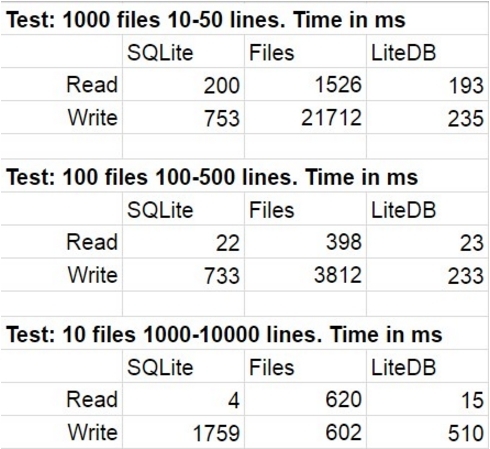

The table shows the average results of several test code runs. When measured, the statistical deviation was insignificant.

We were not surprised by the victory of LiteDB over SQLite, although we were surprised at the order of this victory. I was shocked by the LiteDB win over files. After a little research of the library repository, for example, I found a very well implemented page-by-page writing to disk, and I calmed down on it, although I am sure it is just one of many performance-tricks that are used there. I also want to draw attention to how quickly the speed of access to the file system degrades when there are really a lot of files in the folder.

LiteDB was chosen to develop this feature, which we rarely regretted later on. It saved that the library was written in native c # for all, and if something was not completely clear, it could always be read in the source code.

In addition to the above advantages LiteDB over competitors as development has begun to emerge and disadvantages, most of which can be attributed to the youth of the library. Having started using the library slightly beyond the framework of the “normal” script, we found several problems ( # 419 , # 420 , # 483 , # 496 ) The author of the library always answered questions very quickly, most problems were fixed very quickly. Now there is only one (and let the status of closed does not bother you). This is a problem of competitive access. Apparently somewhere in the depths of the library, a very nasty race-condition was hidden. For ourselves, we have bypassed this bug in a rather interesting way, which I plan to write separately.

Another worth mentioning is the lack of a convenient editor and viewer. There is LiteDBShell, but this is for console fans.

UPD: recently found a tool

We have built a large and important functionality on top of LiteDB, and now we are developing another major feature where we will also use this library. For those who are now looking for an in-process base for their needs, I suggest looking towards LiteDB and how it will fall on your tasks, because there is no guarantee that what worked for one will work just as well for something completely different. .

Not so long ago, we had to develop functionality for which you need to quickly and often dump large amounts of data onto a disk, and from time to time to read them from there. It was necessary to find where, how and with what help to store this data. In the article a brief analysis of the problem, research and comparison of solutions.

Task context

I work in a team that develops development tools for relational database developers (SqlServer, MySql, Oracle), among them both individual applications and those that are embedded in such a 32-bit dreadnought as Microsoft Management Studio.

')

Task

Recover documents opened in the IDE at the time of closure at the next launch.

Usecase

Quickly close the IDE before leaving home, not thinking about which documents are saved and which are not. The next time you start the environment, get the same environment, which was at the time of closing and continue working.

Save all the results of the developer at the time of the emergency shutdown: the fall of the program or operating system, power failure.

Task analysis

A similar feature is in browsers. There, from a technical point of view, they live a lot easier: you just need to save a pack of URL addresses, and even I rarely have more than a hundred tabs, the URL itself is on average only two hundred characters. Thus, we want to get a behavior similar to what is in the browser, but we need to store the entire contents of the document. It turns out that we need somewhere often and quickly save all user documents. Complicated the task and the fact that people sometimes work with SQL not like with other languages. If I, as a C # developer, write a class with more than a thousand lines of code, then

Storage Requirements

After analyzing the problem, we formulated the following storage requirements:

- It should be an embedded lightweight solution.

- Write speed

- The ability to multiprocessor access. The requirement is not critical, as we could provide it ourselves with the help of synchronization objects, but it would be damn nice to have it out of the box.

Applicants for the role

The first frontal and clumsy option: keep everything in a folder, somewhere in AppData.

The obvious option is SQLite . Standard in the field of embedded databases. Very detailed and popular project.

The third was the base LiteDB . Google’s first answer to the question: “embedded database for .net”

First look

File system - files are files. They will have to be followed, they will have to invent names. In addition to the contents of the file, you need to store a small set of properties (the original path on the disk, the connection string, the IDE version in which it was opened), which means you have to either create two files per document or come up with a format to separate the properties from the contents.

SQLite is a classic relational database. The base is represented by one file on the disk. The data scheme is rolled onto this file, after which it is necessary to interact with it using SQL tools. It will be possible to create two tables: one for properties, another for content, in case there are tasks where you need one without the other.

LiteDB is a non-relational database. As in SQLite, the database is represented by a single file. Fully written in C #. The appealing simplicity of use: you just need to give the object to the library, and it already takes on serialization.

Performance test

Before giving the code, I’d better explain the general concept and give the comparison results.

The general idea was as follows: compare how many small files will be recorded in the database, the average number of medium-sized files and some very large files. The variant with average files is closest to the real one, and small and large files are borderline cases that also need to be taken into account.

I wrote to files via FileStream with standard buffer size.

In SQLite there was one nuance on which I consider it necessary to focus attention. We could not put all the contents of the document (I wrote above that they can be really large) in one cell of the database. The fact is that in order to optimize, we store the text of the document line by line, which means that in order to put the text in one cell, we would need to merge all the text into one line, rather than double, the amount of RAM used. The other side of the same problem would be obtained by reading the data from the database. Therefore, in SQLite there was a separate table where the data was stored line by line and were linked by a foreign key with a table where only the properties of the documents lay. In addition, it turned out to speed up the base a bit by inserting data in batches of several thousand lines in the synchronization mode OFF, without logging and within the framework of a single transaction (I caught this trick here and here ).

In LiteDB, an object was simply given, in which List <string> was one of the properties and the library itself saved it to disk.

Even during the development of the test application, I realized that I like LiteDB more, the fact is that the test code for SQLite took more than 120 lines, and the code solving the same problem for LiteDB is less than 20.

Test data generation

FileStrings.cs

Program.cs

internal class FileStrings { private static readonly Random random = new Random(); public List<string> Strings { get; set; } = new List<string>(); public int SomeInfo { get; set; } public FileStrings() { } public FileStrings(int id, int minLines, decimal lineIncrement) { SomeInfo = id; int lines = minLines + (int)(id * lineIncrement); for (int i = 0; i < lines; i++) { Strings.Add(GetString()); } } private string GetString() { int length = 250; StringBuilder builder = new StringBuilder(length); for (int i = 0; i < length; i++) { builder.Append(random.Next((int)'a', (int)'z')); } return builder.ToString(); } } Program.cs

List<FileStrings> files = Enumerable.Range(1, NUM_FILES + 1) .Select(f => new FileStrings(f, MIN_NUM_LINES, (MAX_NUM_LINES - MIN_NUM_LINES) / (decimal)NUM_FILES)) .ToList(); Sqlite

private static void SaveToDb(List<FileStrings> files) { using (var connection = new SQLiteConnection()) { connection.ConnectionString = @"Data Source=data\database.db;FailIfMissing=False;"; connection.Open(); var command = connection.CreateCommand(); command.CommandText = @"CREATE TABLE files ( id INTEGER PRIMARY KEY, file_name TEXT ); CREATE TABLE strings ( id INTEGER PRIMARY KEY, string TEXT, file_id INTEGER, line_number INTEGER ); CREATE UNIQUE INDEX strings_file_id_line_number_uindex ON strings(file_id,line_number); PRAGMA synchronous = OFF; PRAGMA journal_mode = OFF"; command.ExecuteNonQuery(); var insertFilecommand = connection.CreateCommand(); insertFilecommand.CommandText = "INSERT INTO files(file_name) VALUES(?); SELECT last_insert_rowid();"; insertFilecommand.Parameters.Add(insertFilecommand.CreateParameter()); insertFilecommand.Prepare(); var insertLineCommand = connection.CreateCommand(); insertLineCommand.CommandText = "INSERT INTO strings(string, file_id, line_number) VALUES(?, ?, ?);"; insertLineCommand.Parameters.Add(insertLineCommand.CreateParameter()); insertLineCommand.Parameters.Add(insertLineCommand.CreateParameter()); insertLineCommand.Parameters.Add(insertLineCommand.CreateParameter()); insertLineCommand.Prepare(); foreach (var item in files) { using (var tr = connection.BeginTransaction()) { SaveToDb(item, insertFilecommand, insertLineCommand); tr.Commit(); } } } } private static void SaveToDb(FileStrings item, SQLiteCommand insertFileCommand, SQLiteCommand insertLinesCommand) { string fileName = Path.Combine("data", item.SomeInfo + ".sql"); insertFileCommand.Parameters[0].Value = fileName; var fileId = insertFileCommand.ExecuteScalar(); int lineIndex = 0; foreach (var line in item.Strings) { insertLinesCommand.Parameters[0].Value = line; insertLinesCommand.Parameters[1].Value = fileId; insertLinesCommand.Parameters[2].Value = lineIndex++; insertLinesCommand.ExecuteNonQuery(); } } LiteDB

private static void SaveToNoSql(List<FileStrings> item) { using (var db = new LiteDatabase("data\\litedb.db")) { var data = db.GetCollection<FileStrings>("files"); data.EnsureIndex(f => f.SomeInfo); data.Insert(item); } } The table shows the average results of several test code runs. When measured, the statistical deviation was insignificant.

We were not surprised by the victory of LiteDB over SQLite, although we were surprised at the order of this victory. I was shocked by the LiteDB win over files. After a little research of the library repository, for example, I found a very well implemented page-by-page writing to disk, and I calmed down on it, although I am sure it is just one of many performance-tricks that are used there. I also want to draw attention to how quickly the speed of access to the file system degrades when there are really a lot of files in the folder.

LiteDB was chosen to develop this feature, which we rarely regretted later on. It saved that the library was written in native c # for all, and if something was not completely clear, it could always be read in the source code.

disadvantages

In addition to the above advantages LiteDB over competitors as development has begun to emerge and disadvantages, most of which can be attributed to the youth of the library. Having started using the library slightly beyond the framework of the “normal” script, we found several problems ( # 419 , # 420 , # 483 , # 496 ) The author of the library always answered questions very quickly, most problems were fixed very quickly. Now there is only one (and let the status of closed does not bother you). This is a problem of competitive access. Apparently somewhere in the depths of the library, a very nasty race-condition was hidden. For ourselves, we have bypassed this bug in a rather interesting way, which I plan to write separately.

Another worth mentioning is the lack of a convenient editor and viewer. There is LiteDBShell, but this is for console fans.

UPD: recently found a tool

Summary

We have built a large and important functionality on top of LiteDB, and now we are developing another major feature where we will also use this library. For those who are now looking for an in-process base for their needs, I suggest looking towards LiteDB and how it will fall on your tasks, because there is no guarantee that what worked for one will work just as well for something completely different. .

Source: https://habr.com/ru/post/326990/

All Articles