Jonker-Volgenta + t-SNE algorithm = superpower

Before:

After:

')

Intrigued? But first things first.

t-SNE is a very popular algorithm that allows you to reduce the dimension of your data so that it is easier to visualize them. This algorithm can roll hundreds of dimensions to only two, while maintaining important relationships between the data: the closer the objects are located in the original space, the smaller the distance between these objects in the space of the reduced dimension. t-SNE works well on small and medium real data sets and does not require a large number of hyper-parameter settings. In other words, if you take 100,000 points and pass them through this magic black box, we will get a beautiful scatter plot at the output.

Here is a classic example from the field of computer vision. There is a well-known dataset called MNIST , created by Jan Lekun (one of the creators of the neural network learning algorithm using the back propagation method of error — the basis of modern deep learning) and others. It is often used as a universal data set for evaluating ideas related to neural networks, as well as in scientific research. MNIST is 70,000 black and white images of size 28x28. Each of them is a scan of a handwritten digit from the segment [0, 9]. There is a way to get the “ infinite ” set of MNIST, but I will not deviate from the topic.

Thus, the MNIST sample contains sign and can be represented as a 784-dimensional vector. The vectors are linear, and in this interpretation we lose the position relationship between the individual pixels, but still there is a benefit. If you try to imagine what our data set looks like in 784D, you can go crazy if you are not a specially trained mathematician. Ordinary people can consume information only dimensions of 3D, 2D or 1D. Implicitly, you can add another dimension - time, but hardly anyone would say that the display of the 3D computer is only because it updates the image at a frequency of 100 Hz. Therefore, it would be great to know how to display 784 measurements in two. Sounds impossible? In general, it is. The Dirichlet principle comes into play: what mapping we do not choose, conflicts cannot be avoided.

Illusion 3D -> 2D projection in Shadowmatic

Fortunately, the following assumptions can be made:

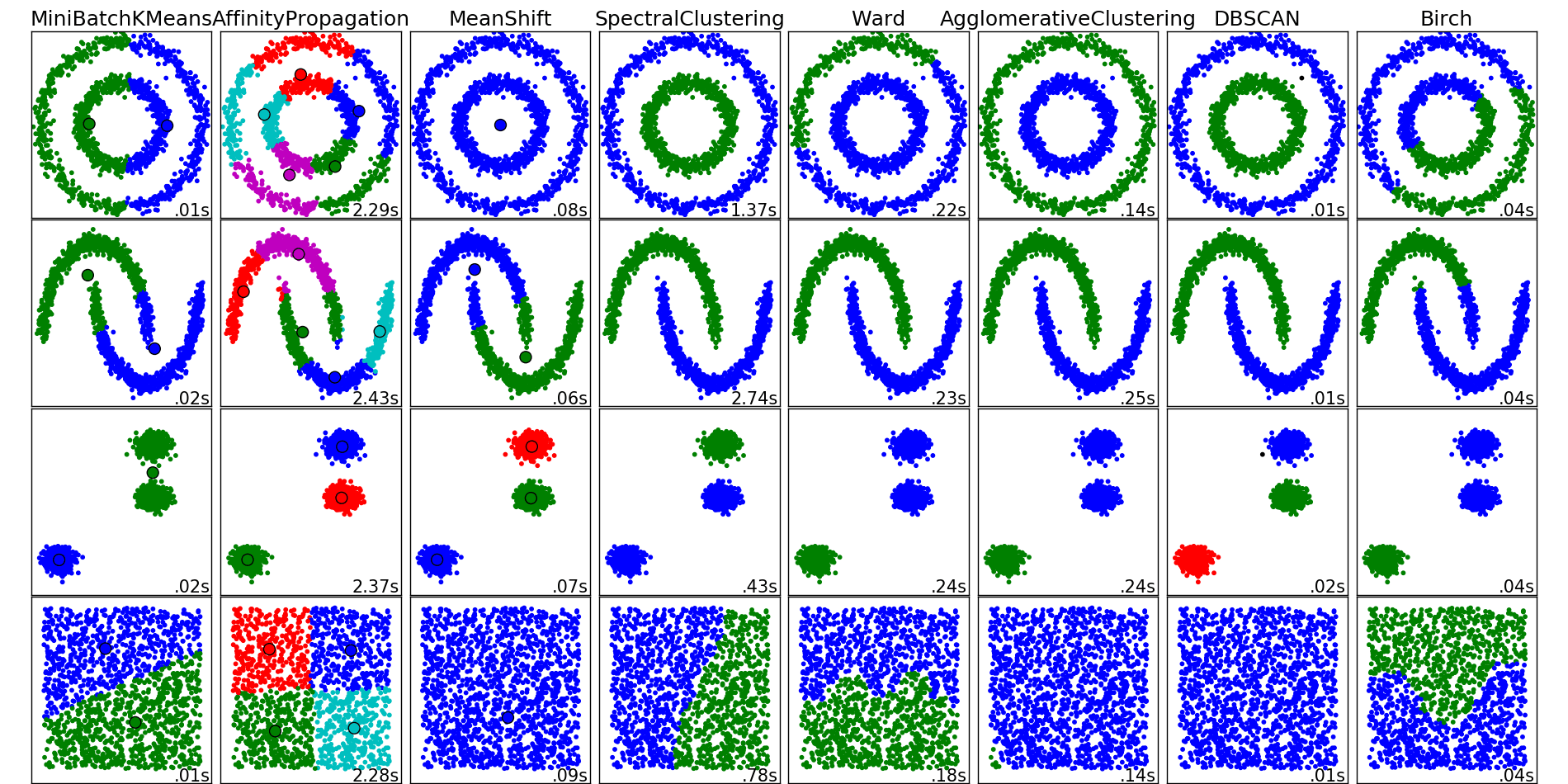

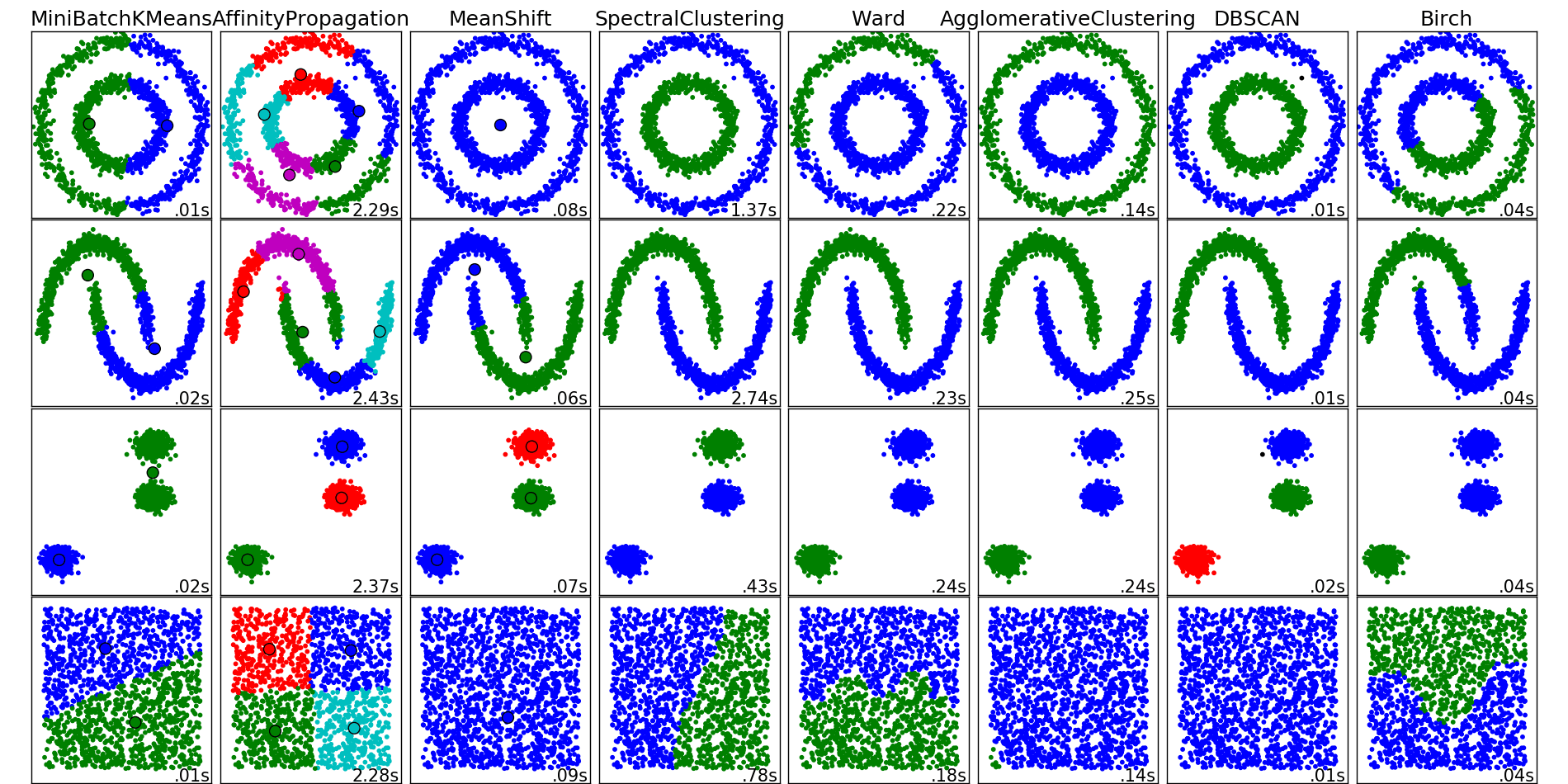

The question arises: what is the best approximating problem in paragraph 2? Unfortunately, the “best” is not. The quality of the reduction of dimensions is subjective and depends on your final goal, as well as when choosing a clustering method.

Different clustering algorithms from sklearn

t-SNE is one of the possible dimension reduction algorithms, which are called algorithms for graph laying on a plane (embedded algorithms). The key idea is to maintain a relationship of “similarity”. Try it yourself .

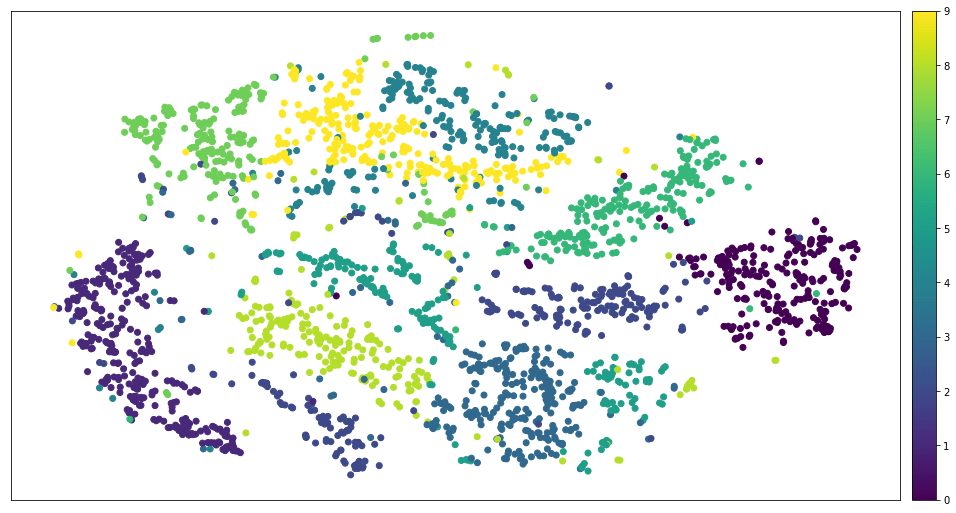

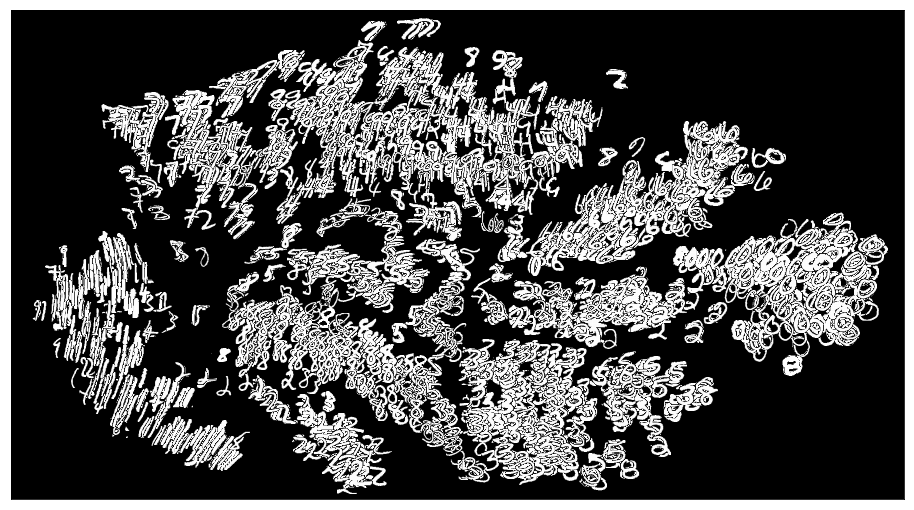

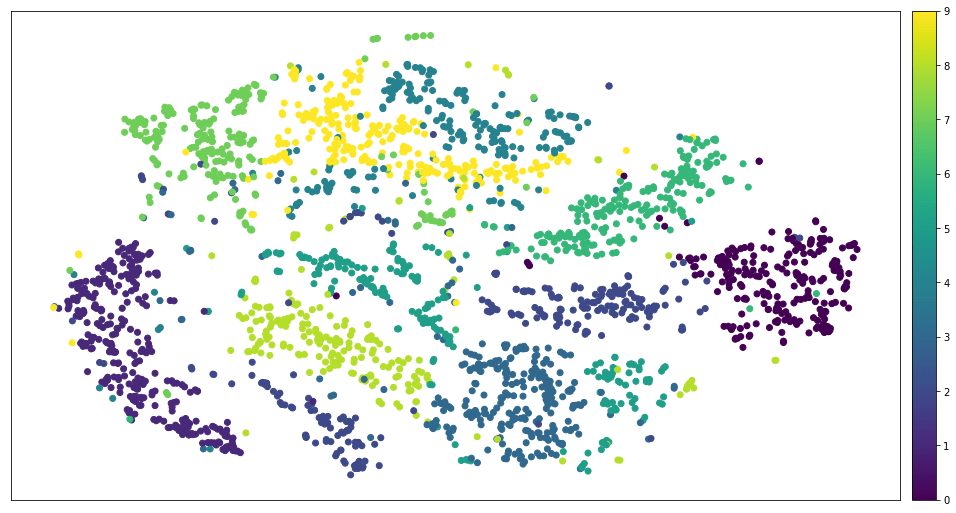

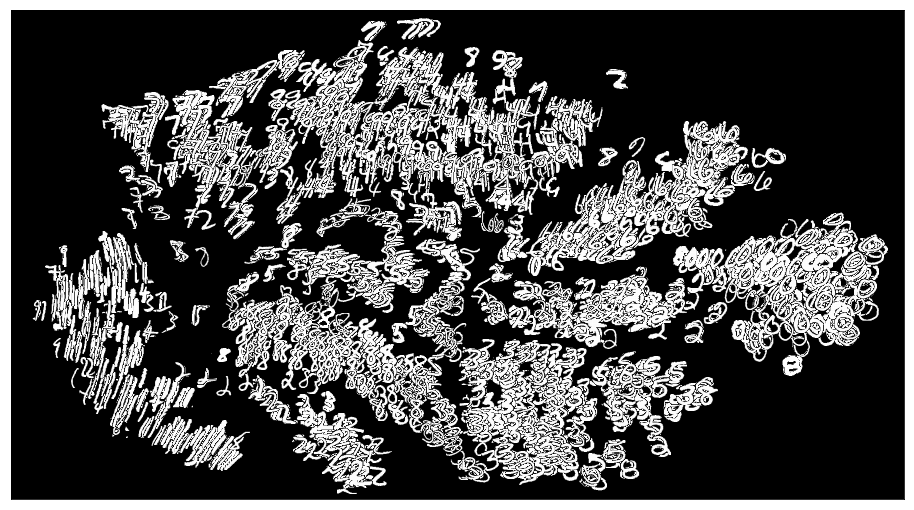

These are all artificial examples, they are good, but not enough. Most real data sets resemble a cloud with equestrian clusters. For example, MNIST looks like this.

MNIST after applying t-SNE

Now let's turn sharply away and get acquainted with the concept of linear programming (LP). Sorry, but this is not a new design pattern, a Javascript framework or a startup. This is math:

We minimize scalar product and taking into account the system of linear inequalities depending on , and the requirements that all its coordinates are non-negative. LP is a well-studied topic in convex optimization theory; as is known, this problem is solved in weakly polynomial time — usually for where n is the number of variables (the dimension of the problem). But there are also approximating algorithms that run in linear time. These algorithms use matrix multiplication and can be effectively parallelized. Paradise for the programmer!

To LP can be reduced to surprisingly many tasks. Take, for example, the transportation problem .

Transport challenge: points of departure (S) and points of consumption (D)

There are a number of points of departure and points of consumption, which may be unequal. Each item of consumption needs a certain amount of goods. Each point of departure has limited resources and is associated with some points of consumption. The main task is as follows: each edge has its value Therefore, it is necessary to find the distribution of supplies with minimal costs.

Strictly speaking,

The latter condition means that either the goods will run out at the points of departure, or the demand for the goods will run low. If a expression 8 can be normalized and simplified as follows:

If we replace “shipment” and “consumption” with “land”, then leads us to the task of the excavator ( Earth Mover's Distance , EMD): to minimize the work necessary for dragging the earth from one set of heaps to others. Now you know what to do if you have to dig holes in the ground.

Earth Mover's Distance

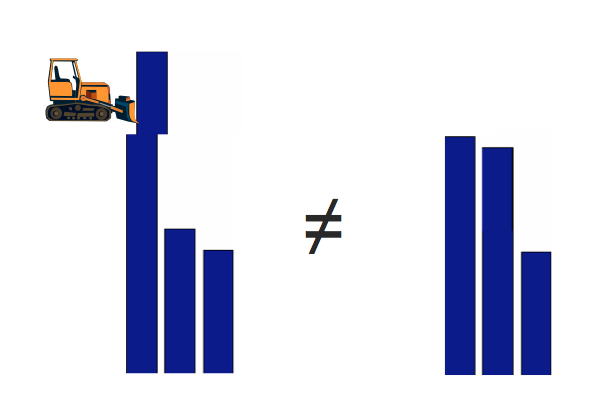

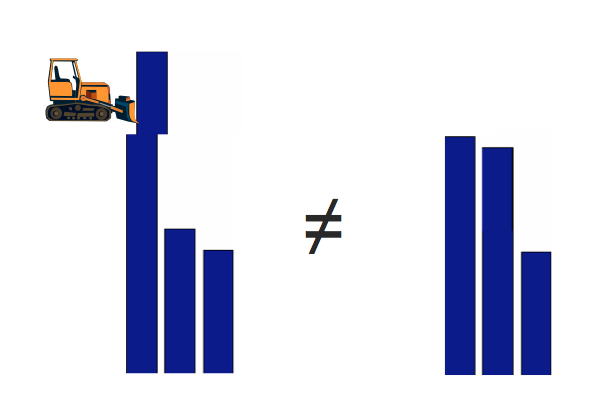

If we replace “sending” and “consuming” with “histograms”, we will get the most common way of comparing images of the “before deep learning” era ( example of an article ). This method is better than the naive L2, since it takes into account not only the differences in absolute value, but also the difference in location.

EMD is better in comparing histograms than Euclidean distance

If we replace “consignment” and “consumption” with the words that we will come to Word Mover's Distance , an effective way to compare the meanings of the two sentences, using word2vec to get vector representations of the words.

Word Mover's Distance.

If we relax conditions 5-8 , discarding 8 , taking and turning inequalities 6 and 7 into equations, adding symmetric inequalities to them, we get the assignment problem (Linear Assignment Problem, LAP):

Unlike the transportation problem, one can prove that - the solution is always binary. In other words, either all the goods from the points of departure go to the points of consumption, or nothing goes anywhere.

As we understood from the first section, t-SNE, like all laying algorithms on a plane, produces a scatterplot at the output. While this is ideal for researching datasets, sometimes there is a need to lay out the original diagram on the correct grid. For example, this installation is necessary source {d} ... soon find out why.

Correct mesh

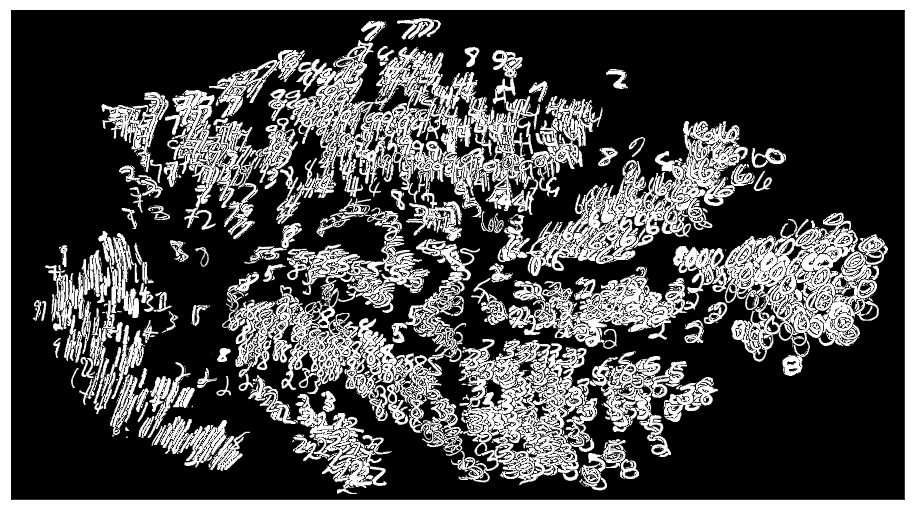

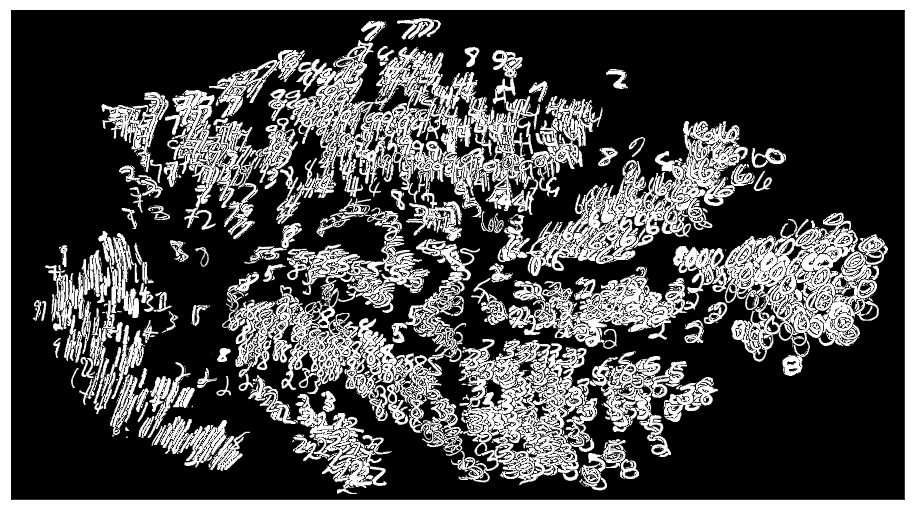

After applying t-SNE, instead of the dots, we represent the figures MNIST; here's what it looks like:

MNIST numbers after t-SNE

Not too clear. This is where LAP occurs: you can define the cost matrix as the pairwise Euclidean distance between the t-SNE samples and the grid nodes, set the grid area equal to the size of the data set , and as a result solve the problem. But how? We have not yet described any of the algorithms.

It turns out that there are a huge number of linear optimization algorithms for general use, starting with the simplex method and ending with extremely complex tools. Algorithms designed specifically for a particular task usually converge much faster, although they have some limitations.

The Hungarian algorithm is one of the specialized methods developed in the 1950s. Its complexity is . It is quite simple to understand and implement, and therefore popular in many projects. For example, he recently became part of ssipy . Unfortunately, it does not work fast on large amounts of data, and the scipy version is especially slow. I waited an hour to process 2500 samples of MNIST, and Python was still digesting the victim.

The Jonker-Volgenant (JV) algorithm is an improved approach developed in 1987. It is also based on passing the shortest augmental chain, and its complexity is also cubic, but it drastically reduces the computational load with some cunning techniques. The performance of many graph styling algorithms, including JV, was thoroughly investigated in a 2000 article, Discrete Applied Mathematics . The conclusion was as follows:

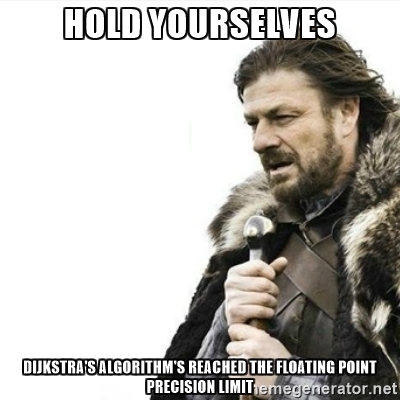

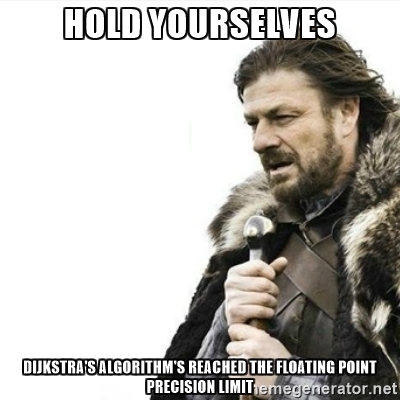

However, the JV has a flaw. It is very tolerant of differences between pairs of elements in the matrix of values. For example, if we are looking for the shortest path in a graph using the Dijkstra algorithm, and two very close values appear on this graph, the algorithm risks going into an infinite loop. If we look closely at Dijkstra’s algorithm, we find that when the precision limit of floating-point values is reached, terrible things can happen. The usual way out is to multiply the cost matrix by some large number.

Still, the most tempting in a JV for a lazy engineer like me is the Python 2 package, which is a wrapper around the ancient implementation of the C algorithm: pyLAPJV . This C code was written by Roy Jonker in 1996 for MagicLogic Optimization, of which he is president. Besides the fact that pyLAPJV is no longer supported, there is a minor issue with the data output, which I fixed in pull-request # 2 . The C code is reliable, but it does not use multithreading or SIMD instructions. Of course, we understand that JV is single-threaded by its nature, and it is not so easy to parallelize it, nevertheless, I managed to speed it up almost twice, optimizing the most costly stage - reducing the augmental chain - using AVX2 . The result is a Python 3 src-d / lapjv package, available under the MIT license.

Reducing the augmental chain basically comes down to finding the minimum and second minimum elements in the array. It sounds simple, as it really is, a non-optimized C code takes 20 lines. The AVX version is 4 times larger: we send the minimum values to each SIMD processor element, perform blending , and then use some other black magic spells SIMD, which I learned while I was working on libSoundFeatureExtraction at Samsung.

Finding two consecutive minimum values, C ++

Finding two consecutive minimum values, optimized code with AVX2 instructions

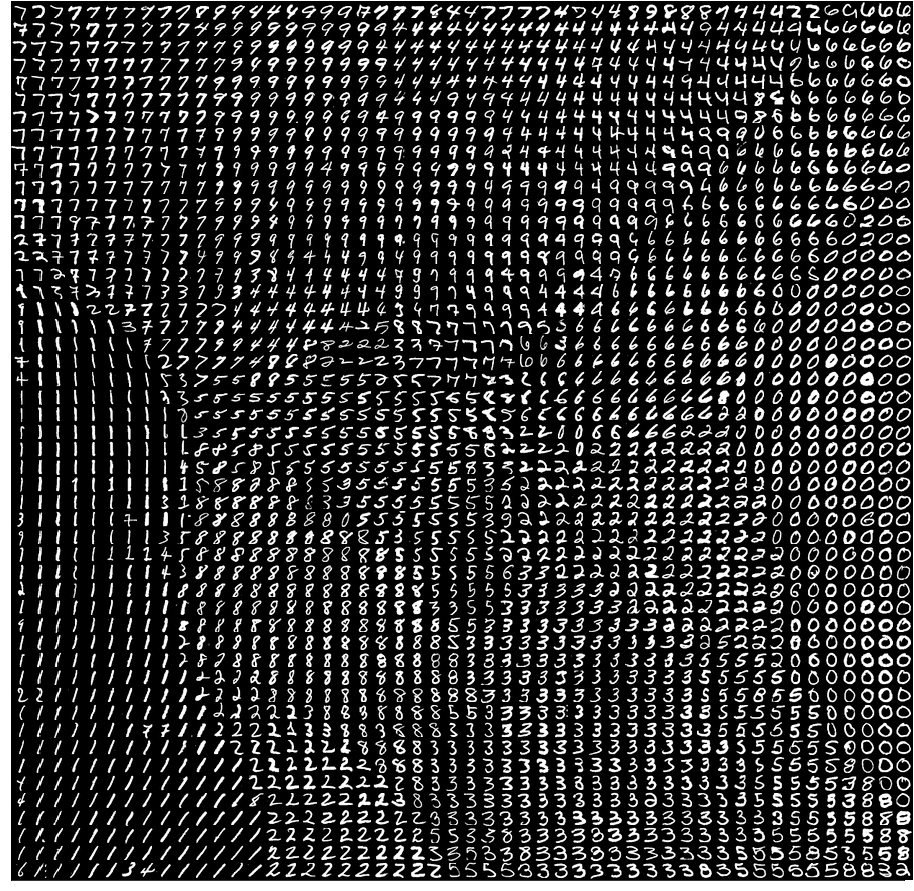

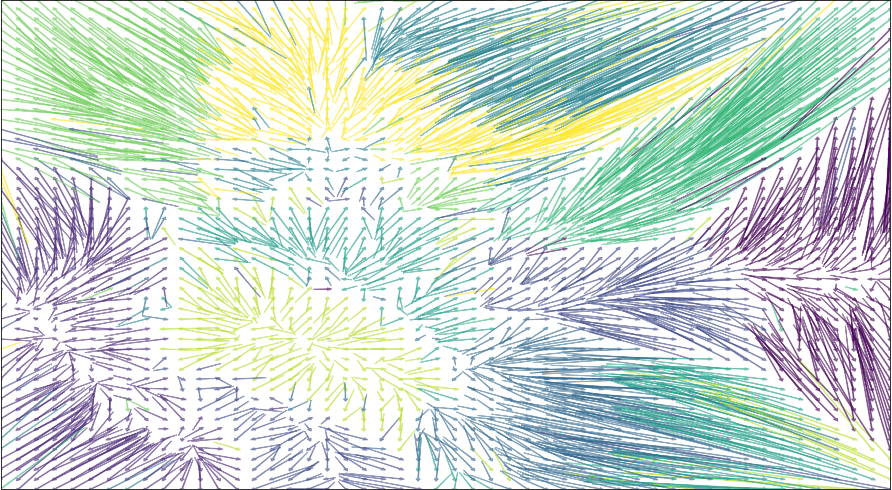

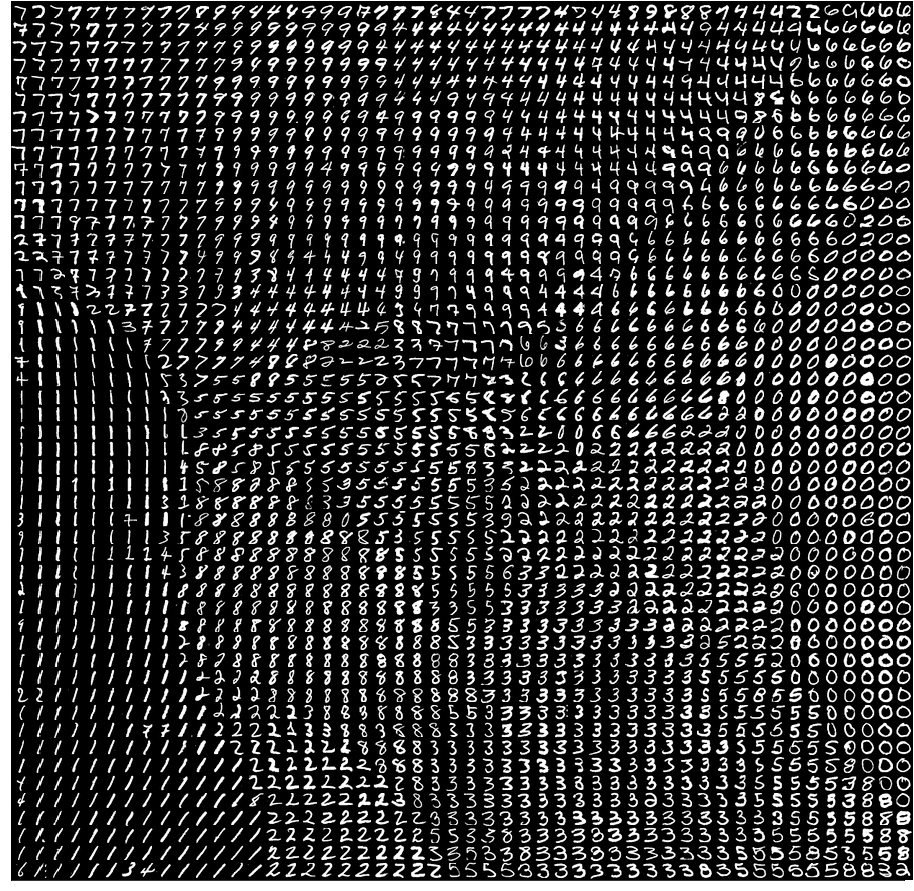

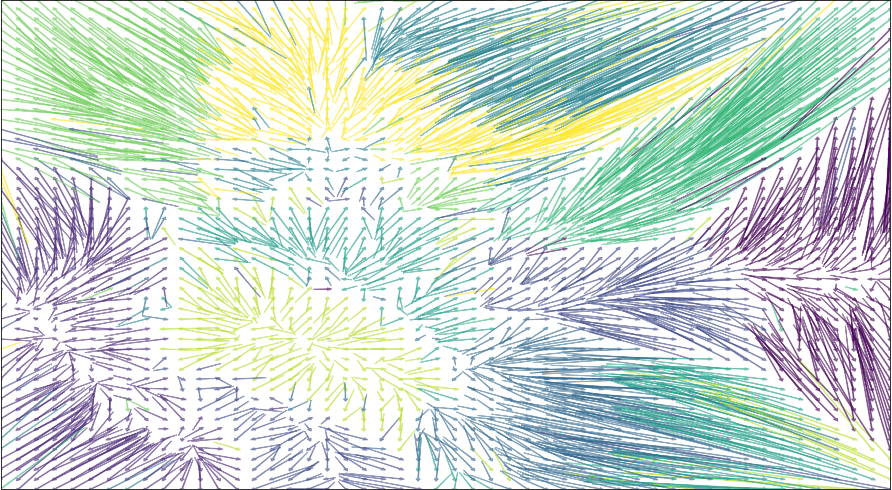

On my lapjv laptop, it packs 2500 MNIST samples in 5 seconds, and here it is - a precious result:

Assignment task assignment for MNIST after t-SNE

For the preparation of this post I used Jupiter Notebook (source here ).

There is an efficient way to place t-SNE-treated samples on the correct mesh. It is based on solving an assignment problem (LAP) using the Jonker-Volgenant algorithm implemented in src-d / lapjv . This algorithm can be scaled to 10,000 samples.

After:

')

Intrigued? But first things first.

t-SNE

t-SNE is a very popular algorithm that allows you to reduce the dimension of your data so that it is easier to visualize them. This algorithm can roll hundreds of dimensions to only two, while maintaining important relationships between the data: the closer the objects are located in the original space, the smaller the distance between these objects in the space of the reduced dimension. t-SNE works well on small and medium real data sets and does not require a large number of hyper-parameter settings. In other words, if you take 100,000 points and pass them through this magic black box, we will get a beautiful scatter plot at the output.

Here is a classic example from the field of computer vision. There is a well-known dataset called MNIST , created by Jan Lekun (one of the creators of the neural network learning algorithm using the back propagation method of error — the basis of modern deep learning) and others. It is often used as a universal data set for evaluating ideas related to neural networks, as well as in scientific research. MNIST is 70,000 black and white images of size 28x28. Each of them is a scan of a handwritten digit from the segment [0, 9]. There is a way to get the “ infinite ” set of MNIST, but I will not deviate from the topic.

Thus, the MNIST sample contains sign and can be represented as a 784-dimensional vector. The vectors are linear, and in this interpretation we lose the position relationship between the individual pixels, but still there is a benefit. If you try to imagine what our data set looks like in 784D, you can go crazy if you are not a specially trained mathematician. Ordinary people can consume information only dimensions of 3D, 2D or 1D. Implicitly, you can add another dimension - time, but hardly anyone would say that the display of the 3D computer is only because it updates the image at a frequency of 100 Hz. Therefore, it would be great to know how to display 784 measurements in two. Sounds impossible? In general, it is. The Dirichlet principle comes into play: what mapping we do not choose, conflicts cannot be avoided.

Illusion 3D -> 2D projection in Shadowmatic

Fortunately, the following assumptions can be made:

- The original multidimensional space is sparse , that is, it is unlikely to be uniformly filled with samples.

- We do not need to find the exact mapping, especially knowing that it does not exist. Instead, you can solve another problem that has a guaranteed exact solution and approximates the expected result. This is reminiscent of the principle of JPEG compression: the result is never exactly identical, but the image looks very similar to the original.

The question arises: what is the best approximating problem in paragraph 2? Unfortunately, the “best” is not. The quality of the reduction of dimensions is subjective and depends on your final goal, as well as when choosing a clustering method.

Different clustering algorithms from sklearn

t-SNE is one of the possible dimension reduction algorithms, which are called algorithms for graph laying on a plane (embedded algorithms). The key idea is to maintain a relationship of “similarity”. Try it yourself .

These are all artificial examples, they are good, but not enough. Most real data sets resemble a cloud with equestrian clusters. For example, MNIST looks like this.

MNIST after applying t-SNE

Linear programming

Now let's turn sharply away and get acquainted with the concept of linear programming (LP). Sorry, but this is not a new design pattern, a Javascript framework or a startup. This is math:

We minimize scalar product and taking into account the system of linear inequalities depending on , and the requirements that all its coordinates are non-negative. LP is a well-studied topic in convex optimization theory; as is known, this problem is solved in weakly polynomial time — usually for where n is the number of variables (the dimension of the problem). But there are also approximating algorithms that run in linear time. These algorithms use matrix multiplication and can be effectively parallelized. Paradise for the programmer!

To LP can be reduced to surprisingly many tasks. Take, for example, the transportation problem .

Transport challenge: points of departure (S) and points of consumption (D)

There are a number of points of departure and points of consumption, which may be unequal. Each item of consumption needs a certain amount of goods. Each point of departure has limited resources and is associated with some points of consumption. The main task is as follows: each edge has its value Therefore, it is necessary to find the distribution of supplies with minimal costs.

Strictly speaking,

The latter condition means that either the goods will run out at the points of departure, or the demand for the goods will run low. If a expression 8 can be normalized and simplified as follows:

If we replace “shipment” and “consumption” with “land”, then leads us to the task of the excavator ( Earth Mover's Distance , EMD): to minimize the work necessary for dragging the earth from one set of heaps to others. Now you know what to do if you have to dig holes in the ground.

Earth Mover's Distance

If we replace “sending” and “consuming” with “histograms”, we will get the most common way of comparing images of the “before deep learning” era ( example of an article ). This method is better than the naive L2, since it takes into account not only the differences in absolute value, but also the difference in location.

EMD is better in comparing histograms than Euclidean distance

If we replace “consignment” and “consumption” with the words that we will come to Word Mover's Distance , an effective way to compare the meanings of the two sentences, using word2vec to get vector representations of the words.

Word Mover's Distance.

If we relax conditions 5-8 , discarding 8 , taking and turning inequalities 6 and 7 into equations, adding symmetric inequalities to them, we get the assignment problem (Linear Assignment Problem, LAP):

Unlike the transportation problem, one can prove that - the solution is always binary. In other words, either all the goods from the points of departure go to the points of consumption, or nothing goes anywhere.

t-SNE LAP

As we understood from the first section, t-SNE, like all laying algorithms on a plane, produces a scatterplot at the output. While this is ideal for researching datasets, sometimes there is a need to lay out the original diagram on the correct grid. For example, this installation is necessary source {d} ... soon find out why.

Correct mesh

After applying t-SNE, instead of the dots, we represent the figures MNIST; here's what it looks like:

MNIST numbers after t-SNE

Not too clear. This is where LAP occurs: you can define the cost matrix as the pairwise Euclidean distance between the t-SNE samples and the grid nodes, set the grid area equal to the size of the data set , and as a result solve the problem. But how? We have not yet described any of the algorithms.

Jonker-Volgenta algorithm

It turns out that there are a huge number of linear optimization algorithms for general use, starting with the simplex method and ending with extremely complex tools. Algorithms designed specifically for a particular task usually converge much faster, although they have some limitations.

The Hungarian algorithm is one of the specialized methods developed in the 1950s. Its complexity is . It is quite simple to understand and implement, and therefore popular in many projects. For example, he recently became part of ssipy . Unfortunately, it does not work fast on large amounts of data, and the scipy version is especially slow. I waited an hour to process 2500 samples of MNIST, and Python was still digesting the victim.

The Jonker-Volgenant (JV) algorithm is an improved approach developed in 1987. It is also based on passing the shortest augmental chain, and its complexity is also cubic, but it drastically reduces the computational load with some cunning techniques. The performance of many graph styling algorithms, including JV, was thoroughly investigated in a 2000 article, Discrete Applied Mathematics . The conclusion was as follows:

“The JV has a good and stable average performance on all classes (tasks - author's note), and this is the best algorithm for both random and specific tasks.”

However, the JV has a flaw. It is very tolerant of differences between pairs of elements in the matrix of values. For example, if we are looking for the shortest path in a graph using the Dijkstra algorithm, and two very close values appear on this graph, the algorithm risks going into an infinite loop. If we look closely at Dijkstra’s algorithm, we find that when the precision limit of floating-point values is reached, terrible things can happen. The usual way out is to multiply the cost matrix by some large number.

Still, the most tempting in a JV for a lazy engineer like me is the Python 2 package, which is a wrapper around the ancient implementation of the C algorithm: pyLAPJV . This C code was written by Roy Jonker in 1996 for MagicLogic Optimization, of which he is president. Besides the fact that pyLAPJV is no longer supported, there is a minor issue with the data output, which I fixed in pull-request # 2 . The C code is reliable, but it does not use multithreading or SIMD instructions. Of course, we understand that JV is single-threaded by its nature, and it is not so easy to parallelize it, nevertheless, I managed to speed it up almost twice, optimizing the most costly stage - reducing the augmental chain - using AVX2 . The result is a Python 3 src-d / lapjv package, available under the MIT license.

Reducing the augmental chain basically comes down to finding the minimum and second minimum elements in the array. It sounds simple, as it really is, a non-optimized C code takes 20 lines. The AVX version is 4 times larger: we send the minimum values to each SIMD processor element, perform blending , and then use some other black magic spells SIMD, which I learned while I was working on libSoundFeatureExtraction at Samsung.

template <typename idx, typename cost> __attribute__((always_inline)) inline std::tuple<cost, cost, idx, idx> find_umins( idx dim, idx i, const cost *assigncost, const cost *v) { cost umin = assigncost[i * dim] - v[0]; idx j1 = 0; idx j2 = -1; cost usubmin = std::numeric_limits<cost>::max(); for (idx j = 1; j < dim; j++) { cost h = assigncost[i * dim + j] - v[j]; if (h < usubmin) { if (h >= umin) { usubmin = h; j2 = j; } else { usubmin = umin; umin = h; j2 = j1; j1 = j; } } } return std::make_tuple(umin, usubmin, j1, j2); } Finding two consecutive minimum values, C ++

Show code

template <typename idx> __attribute__((always_inline)) inline std::tuple<float, float, idx, idx> find_umins( idx dim, idx i, const float *assigncost, const float *v) { __m256i idxvec = _mm256_setr_epi32(0, 1, 2, 3, 4, 5, 6, 7); __m256i j1vec = _mm256_set1_epi32(-1), j2vec = _mm256_set1_epi32(-1); __m256 uminvec = _mm256_set1_ps(std::numeric_limits<float>::max()), usubminvec = _mm256_set1_ps(std::numeric_limits<float>::max()); for (idx j = 0; j < dim - 7; j += 8) { __m256 acvec = _mm256_loadu_ps(assigncost + i * dim + j); __m256 vvec = _mm256_loadu_ps(v + j); __m256 h = _mm256_sub_ps(acvec, vvec); __m256 cmp = _mm256_cmp_ps(h, uminvec, _CMP_LE_OQ); usubminvec = _mm256_blendv_ps(usubminvec, uminvec, cmp); j2vec = _mm256_blendv_epi8( j2vec, j1vec, reinterpret_cast<__m256i>(cmp)); uminvec = _mm256_blendv_ps(uminvec, h, cmp); j1vec = _mm256_blendv_epi8( j1vec, idxvec, reinterpret_cast<__m256i>(cmp)); cmp = _mm256_andnot_ps(cmp, _mm256_cmp_ps(h, usubminvec, _CMP_LT_OQ)); usubminvec = _mm256_blendv_ps(usubminvec, h, cmp); j2vec = _mm256_blendv_epi8( j2vec, idxvec, reinterpret_cast<__m256i>(cmp)); idxvec = _mm256_add_epi32(idxvec, _mm256_set1_epi32(8)); } float uminmem[8], usubminmem[8]; int32_t j1mem[8], j2mem[8]; _mm256_storeu_ps(uminmem, uminvec); _mm256_storeu_ps(usubminmem, usubminvec); _mm256_storeu_si256(reinterpret_cast<__m256i*>(j1mem), j1vec); _mm256_storeu_si256(reinterpret_cast<__m256i*>(j2mem), j2vec); idx j1 = -1, j2 = -1; float umin = std::numeric_limits<float>::max(), usubmin = std::numeric_limits<float>::max(); for (int vi = 0; vi < 8; vi++) { float h = uminmem[vi]; if (h < usubmin) { idx jnew = j1mem[vi]; if (h >= umin) { usubmin = h; j2 = jnew; } else { usubmin = umin; umin = h; j2 = j1; j1 = jnew; } } } for (int vi = 0; vi < 8; vi++) { float h = usubminmem[vi]; if (h < usubmin) { usubmin = h; j2 = j2mem[vi]; } } for (idx j = dim & 0xFFFFFFF8u; j < dim; j++) { float h = assigncost[i * dim + j] - v[j]; if (h < usubmin) { if (h >= umin) { usubmin = h; j2 = j; } else { usubmin = umin; umin = h; j2 = j1; j1 = j; } } } return std::make_tuple(umin, usubmin, j1, j2); } Finding two consecutive minimum values, optimized code with AVX2 instructions

On my lapjv laptop, it packs 2500 MNIST samples in 5 seconds, and here it is - a precious result:

Assignment task assignment for MNIST after t-SNE

Notebook

For the preparation of this post I used Jupiter Notebook (source here ).

Conclusion

There is an efficient way to place t-SNE-treated samples on the correct mesh. It is based on solving an assignment problem (LAP) using the Jonker-Volgenant algorithm implemented in src-d / lapjv . This algorithm can be scaled to 10,000 samples.

Oh, and come to work with us? :)wunderfund.io is a young foundation that deals with high-frequency algorithmic trading . High-frequency trading is a continuous competition of the best programmers and mathematicians of the whole world. By joining us, you will become part of this fascinating fight.

We offer interesting and challenging data analysis and low latency tasks for enthusiastic researchers and programmers. Flexible schedule and no bureaucracy, decisions are quickly made and implemented.

Join our team: wunderfund.io

Source: https://habr.com/ru/post/326750/

All Articles