How should we talk to artificial intelligence?

Translation of Stephen Wolfram's post ("How should we talk to AIs?").

I express my deep gratitude to Polina Sologub for assistance in translating and preparing the publication.

Content

- Computation is power

- The language of computational thinking

- Understanding AI

- What will AI do?

- Setting goals for AI

- When one AI talks to another

- Information gathering: a review of a billion years

- And what if everyone could write code?

- Will it really work?

- I will say more

Not long ago, the idea of a computer that could answer questions posed in English seemed like science fiction. But when we released Wolfram|Alpha in 2009, one of the biggest surprises (at least for me!) was that we managed to make it really work. And now people ask personal assistants all sorts of questions every day, in ordinary spoken language.


All this works quite well (although we are always trying to do better!). But what about more complex things? How should we communicate with artificial intelligence?

I thought about this for a long time, trying to combine philosophy, linguistics, neuroscience, computer science and other areas of knowledge. And I realized that the answer had been right in front of my nose all along, in the thing I have been working on for the past 30 years: the Wolfram Language.

Maybe this is a case of having a hammer and seeing only nails. But I am sure there is more to it than that. At the very least, thinking the question through is a good way to understand more about artificial intelligence and its relationship with people.

Computation is power

The first key point (which I came to understand clearly only after a series of discoveries I made in basic science) is that computation is a very powerful thing, which allows even tiny programs (for example, cellular automata or neural networks) to behave in incredibly complicated ways. And AI can make use of this.
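
To make the claim about tiny programs concrete, here is a minimal sketch of an elementary cellular automaton. It is written in Python purely for illustration and is not taken from the original post. The choice of rule 30, the wraparound edges, and the function names `step` and `run` are all assumptions made for this example.

```python
def step(cells, rule=30):
    """Apply one update of an elementary cellular automaton.

    Edges wrap around (an assumption made to keep the sketch short).
    """
    n = len(cells)
    new_cells = []
    for i in range(n):
        left = cells[(i - 1) % n]
        centre = cells[i]
        right = cells[(i + 1) % n]
        # The three neighbours form a number 0..7 that selects one bit of the rule.
        index = (left << 2) | (centre << 1) | right
        new_cells.append((rule >> index) & 1)
    return new_cells


def run(width=31, steps=15, rule=30):
    """Start from a single black cell and return every row that is produced."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        rows.append(cells)
    return rows


if __name__ == "__main__":
    for row in run():
        print("".join("#" if cell else "." for cell in row))
```

The whole rule fits in a single number between 0 and 255, yet running the script prints a triangle whose interior quickly stops looking regular.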

Looking at the patterns such programs produce, it is easy to become a pessimist: everything looks too complicated. Ultimately, we have to hope that we can build a bridge between what our brains can handle and what computation can do. And although I did not originally see it this way, it turns out that this is essentially what I have been trying to do all these years in developing the Wolfram Language.

The language of computational thinking

I saw my role as identifying the “chunks” of computation that people would want to use, such as FindShortestTour, ImageIdentify, or Predict. Traditional computer languages have focused on low-level constructs. But in the Wolfram Language, I started from what we humans understand, and then tried to capture as much of it as possible in the language.

In the early years, we mostly dealt with fairly abstract concepts, from mathematics, logic, or abstract networks. But one of the great achievements of recent years, closely related to Wolfram|Alpha, is that we were able to extend the structure we had built to cover countless kinds of real things that exist in our world: cities, movies, animals, and so on.

One may ask: why invent a language for all this? Why not just use, say, English? Well, for specific concepts like “bright pink,” “New York,” or “Pluto's moons,” English is a really good fit (and in such cases the Wolfram Language lets people simply use English). But if you try to describe more complex things, plain English pretty quickly becomes too cumbersome.

Imagine, for example, trying to describe even a fairly simple algorithmic program in words. A back-and-forth dialogue in the style of the Turing test would quickly become frustrating.

But the Wolfram Language is built specifically to solve such problems. It is designed to be easily understood by people and to fit the way humans think. It also has a structure that allows complex things to be assembled from simpler elements. And, of course, it can be readily understood not only by people but also by machines.

For many years I have thought and communicated in a mixture of English and the Wolfram Language. For example, if I say something in English, I can just continue my thought by typing Wolfram Language code.

Understanding AI

But let's get back to AI. For most of the history of computing, we have built programs in which programmers wrote every line of code, understanding (bugs aside) what each line was responsible for. However, achieving AI-level capability requires more powerful computation. Going down this path means going beyond the programs that people can write and widening the range of possible programs.

We can do this through the kind of algorithm automation we have long used in Mathematica and the Wolfram Language, through machine learning, or through searching the computational universe for possible programs. However, these programs have one feature: there is no reason for them to be understandable to people.

At some level, this is unsettling. We do not know how the programs work inside, or what they might do. But we do know that they perform elaborate computations that are in some sense too complicated to analyze.

However, the same thing happens somewhere very familiar: the natural world. If we look closely at fluid dynamics, or turn to biology, we see all levels of complexity represented in the natural world. Indeed, the Principle of Computational Equivalence, which comes out of basic science, implies that this complexity is in some sense exactly the same as the complexity that can arise in computational systems.

Over the centuries, we have identified those aspects of the natural world that we can understand, and then used them to create useful technologies. Our traditional engineering approach to programming works in more or less the same way.

But in the case of AI, we have to leave the beaten track within the computational universe and (just as in the natural world) deal with phenomena that we cannot understand.

What will AI do?

Let's imagine that we have a perfect AI that can do everything we associate with intelligence. Maybe it will receive input from a variety of IoT sensors. Inside it, all kinds of computation will be going on. But what will it ultimately try to do? What will its goal be?

Answering this means delving into some fairly deep philosophy, involving questions that have been discussed for thousands of years but that acquire real importance only in dealing with AI.

You might think that as an AI becomes more sophisticated, it will end up pursuing some abstract goal. But that does not make sense, because there is really no such thing as an abstract, absolute goal that can be derived purely formally, by mathematics or computation. A goal is defined only in relation to people, and is tied to their particular history and culture.

“Abstract AIs” that are not connected to human goals will simply go on computing. And, as with most cellular automata and most systems in nature, we will not be able to identify any particular goal of that computation.

Setting goals for AI

Technology has always been about automating things, so that people can set goals and those goals can then be achieved automatically by the technology.

For most kinds of technology, those goals have been tightly constrained, so describing them was not difficult. But for a general computational system, the goals can be completely arbitrary. The challenge, then, is how to describe them.

How do you tell an AI what you want it to do for you? You cannot spell out exactly what the AI should do in every case. You could do that only if the computations the AI performs were tightly constrained, as in traditional software. To work more effectively, an AI needs to use broader parts of the computational universe. As a result, we run into the phenomenon of computational irreducibility: we will never be able to determine everything it will do.

So what is the best way to set goals for an AI? It's a difficult question. If an AI could experience your life alongside you, seeing what you see, reading your email, and so on, then, just as with a person you know well, you could give the AI simple goals just by stating them in natural language.

But what if you want to set more complex goals, or ones that have little to do with what the AI is already familiar with? In that case, natural language will not be enough. Perhaps the AI could be given a broad education. However, a better idea would be to use the Wolfram Language, which has a great deal of knowledge built in that both people and AIs can use.

When one AI talks to another

Thinking about how people communicate with AIs is one thing. But how will AIs communicate with each other? One might suppose they could simply transfer their knowledge literally. But that will not work, because two AIs have different “experiences,” and the representations they use will inevitably differ.

So in the end, AIs (just like people) will have to arrive at some form of symbolic language that represents concepts abstractly (without specific reference to the underlying representations of those concepts).

You might think that AIs should simply communicate in English; at least that way we could understand them! But it will not work. AIs will inevitably extend their language progressively, so even if they started with English, they would soon go beyond it.

In natural languages, new words are added when new concepts appear and become sufficiently widespread. Sometimes a new concept is connected with something new in the world (“blog”, “smiley”, “smartphone”, etc.); sometimes it arises from a new distinction between existing concepts (“road” and “highway”).

Often it is science that reveals new distinctions between things, by identifying distinct clusters of behavior or structure. But AIs can do this on a much larger scale than humans. For example, our image identification project is set up to recognize 10,000 kinds of objects that have familiar names. It was trained on real images, and it discovered many distinctions for which we have no names but which let it successfully tell things apart.

I have called these “post-linguistic emergent concepts” (or PLECs). And I think that the hierarchy of these concepts will keep expanding, causing the language of AIs to grow gradually as well.

But how could this be supported within the framework of English? I suppose each new concept could be assigned a word formed from some hash code (such as a string of letters). But a structured symbolic language (like the Wolfram Language) provides a more suitable framework, because it does not require the units of the language to be simple “words”: arbitrary lumps of symbolic information, such as collections of examples, can serve as units.
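
As a rough illustration of the hash-code idea, the sketch below derives a made-up “word” from a collection of examples that define a concept. It is written in Python and is an assumption from start to finish: the function name `concept_word`, the use of SHA-256, and the eight-letter length are choices made for this example, not anything specified in the post.

```python
import hashlib
import string


def concept_word(examples, length=8):
    """Derive a pronounceable-looking label from a set of example strings.

    Hypothetical helper: the examples are sorted so that the same set always
    gives the same word, regardless of the order in which it was collected.
    """
    canonical = "\n".join(sorted(examples)).encode("utf-8")
    digest = hashlib.sha256(canonical).digest()
    # Map the first `length` bytes of the digest onto the letters a-z.
    return "".join(string.ascii_lowercase[byte % 26] for byte in digest[:length])


if __name__ == "__main__":
    # The example identifiers below are invented placeholders.
    print(concept_word(["image-0017", "image-0452", "image-1290"]))
```

A word produced this way names the concept but says nothing about it, whereas a symbolic language can carry the collection of examples itself as the unit.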

So should AIs talk to each other in the Wolfram Language? I think it makes sense. It does not matter how the syntax is encoded (input form, XML, JSON, binary, whatever). What matters is the structure and content built into the language.
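
The point that the encoding is secondary can be sketched in a few lines of Python. Here one symbolic expression is held as a nested structure and written out in two different syntaxes. The bracketed text form only imitates the look of Wolfram Language input, and the JSON layout with `head` and `args` keys is invented for this example; neither is claimed to be the format the Wolfram Language actually uses.

```python
import json

# A symbolic expression as a nested tuple: (head, argument, argument, ...).
EXPR = ("Plus", 1, ("Times", 2, "x"))


def to_input_form(expr):
    """Render the expression in a bracketed, input-form-like text syntax."""
    if isinstance(expr, tuple):
        head, *args = expr
        return f"{head}[{', '.join(to_input_form(arg) for arg in args)}]"
    return str(expr)


def to_json(expr):
    """Render the same expression as JSON (hypothetical layout)."""
    def encode(node):
        if isinstance(node, tuple):
            head, *args = node
            return {"head": head, "args": [encode(arg) for arg in args]}
        return node
    return json.dumps(encode(expr))


def from_json(text):
    """Rebuild the nested-tuple expression from the JSON layout above."""
    def decode(node):
        if isinstance(node, dict):
            return (node["head"], *[decode(arg) for arg in node["args"]])
        return node
    return decode(json.loads(text))


if __name__ == "__main__":
    print(to_input_form(EXPR))
    print(to_json(EXPR))
```

Reading the JSON back with `from_json` returns the same nested structure, which is the sense in which the structure, rather than the surface syntax, carries the content.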

Information gathering: a review of a billion years

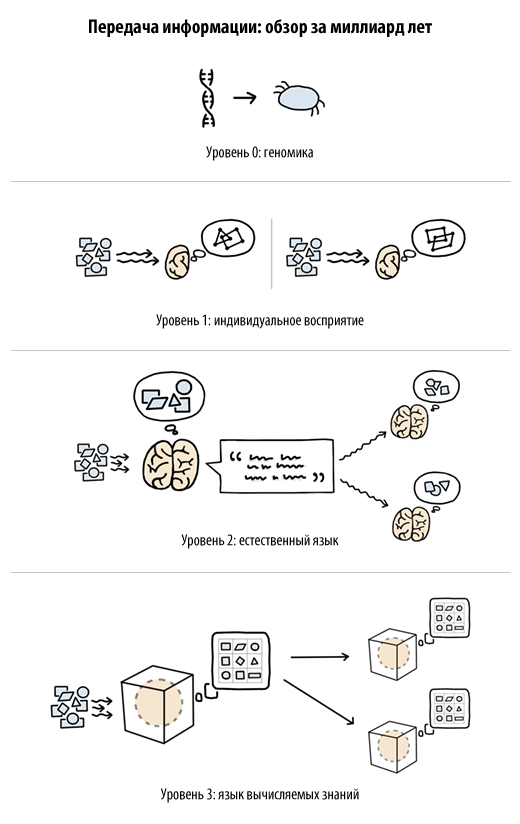

During the billions of years that life has existed on Earth, there have been several different ways of transmitting information. The most basic is the one genomics deals with: passing on information at the “hardware” level. Beyond that, we have a nervous system and, in particular, a brain, which acquires information by accumulating it from experience (much as our image identification project does). This is the mechanism our bodies use to see, and to do many other AI-like things.

But in a sense this mechanism is fundamentally limited, because every organism is different and every individual has to go through the whole learning process on its own: none of the information acquired in one generation can readily be passed on to the next.

However, our species made a great discovery: natural language. With natural language, it becomes possible to take information that has been learned and pass it on in abstract form, say from one generation to the next. There is still a problem, though: natural language, once received, must still be interpreted, by each person separately.

And this is where the significance of a computational-knowledge language (like the Wolfram Language) becomes clear: it makes it possible to communicate concepts and facts about the world without requiring separate interpretation.

It is probably not an exaggeration to say that the invention of human language led to the emergence of civilization and our modern world. So what will be the consequences of having a language that is based on knowledge, and that contains not only abstract concepts but also a way of actually implementing them?

One possibility is that it may define a civilization of AIs, whatever that may turn out to be. And perhaps it will be far from anything we humans (at least in our present state) can understand. The good news is that, at least in the case of the Wolfram Language, a precise computational-knowledge language is not incomprehensible to humans; it can serve as a bridge between people and machines.

And what if everyone could write code?

So let's imagine a world in which (in addition to natural language) a language like the Wolfram Language is used everywhere. Of course, such a language will most often be used for communication between machines. However, it may well also become the dominant form of communication between people and machines.

In today's world, only a small fraction of people can write computer code, just as 500 years ago only a small fraction of people could write natural language. But what if computer literacy rose dramatically, so that most people could write knowledge-based code?

Natural language made possible many features of modern society. What possibilities does knowledge-based code open up? All sorts. Today you might be handed a menu in a restaurant to choose a dish. But if people could read code, code could be written for each option, so that you could easily modify it to your taste. (In fact, something like this will soon be possible with Wolfram Language code for laboratory experiments in biology and chemistry, at the Emerald Cloud Lab.) Another consequence of people being able to read code: instead of writing plain text, one can write code that both people and machines can use.

I suspect that the consequences of widespread literacy in knowledge-based programming will run much deeper, because it will not only give many people a new way to express things, but also a new way to think about them.

Will it really work?

OK: let's say we want to use the Wolfram Language to communicate with AIs. Will it actually work? To some extent it already does, because within Wolfram|Alpha and the systems based on it, questions posed in natural language are converted into Wolfram Language code.

But what about more complex applications of artificial intelligence? In many cases where the Wolfram Language is used, we are dealing with examples of AI, whether the computations involve images, data, or symbolic structures. Sometimes the computations involve algorithms whose goals we can define precisely (FindShortestTour); sometimes the goals are less precise (ImageIdentify). Sometimes the computations are stated as “what needs to be done,” sometimes as “what needs to be found,” or “what to aim for.”

We have come a long way in representing the world in the Wolfram Language. However, there is more to do. Back in the 17th century, there were attempts to create a “philosophical language” that would somehow symbolically capture the essence of everything one could think about. Now we need to actually create such a language. It will need to cover all the kinds of actions and processes that can occur, as well as things like people's beliefs and various mental states. As our AIs become more sophisticated and more integrated into our lives, representing such things will be an important step.


Source: https://habr.com/ru/post/326570/
