Is Automation QA a separate team?

“Separate, of course!” most readers will answer. That answer fits their picture of the world, because “we have always worked this way.”

We have always worked this way

This phrase usually describes a product that is already running in production or about to be released, but was written without unit and integration tests. Without the safety net of tests, every change is slow and expensive and introduces many new bugs. In the development world, such a project is called “legacy”.

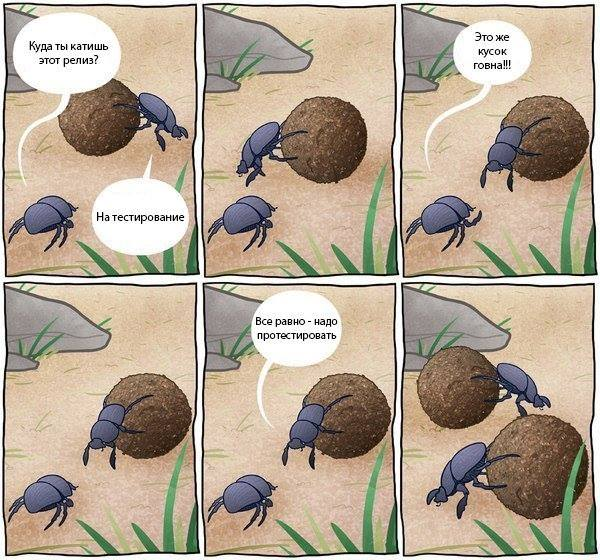

The company understands that it cannot do without a safety net, so it creates a QA department, which usually does not assure the quality of the product but merely controls it. With a QA department in place, a developer can calmly do what he likes, namely write code, because the dedicated department is now responsible for quality! The classic “throwing code over the wall” to the testing department begins:

The QA department writes hundreds of black-box test cases for existing functionality, all of which must be run during regression. And if the product keeps evolving, it also writes hundreds of test cases for the new functionality, which are later added to the regression suite.

Every test case is executed manually, so testing takes a lot of time. Naturally, the number of test cases in the regression suite keeps growing, and eventually the decision is made to create an automation team within the QA department.

Since the new team was hired to speed up a regression cycle made of black-box tests, automation also happens at the black-box level: through the GUI or the API. Automating through the GUI is the most painful and expensive option because such tests are fragile and slow, yet teams often start with it.

Meanwhile, creating the new team does not affect the development team at all: it keeps handing a poor-quality product over to testing and still neglects unit and integration tests. Given the huge number of black-box scenarios queued for automation, we end up with the Ice-Cream Cone testing anti-pattern, in which the slowest and most expensive GUI autotests far outnumber the cheap and fast unit and integration tests.

With each release there are more GUI autotests, which are unstable and slow by nature, so more and more resources go into maintaining them, and the automation team has to grow. The quality assurance department keeps growing, but the quality of the product does not improve accordingly. Do you really want to keep working like this forever?

What is the problem?

One of the main causes of the situation described above is the lack of a development culture in which every developer is responsible for the code he writes. Even minimal responsibility means verifying that the code works before joyfully exclaiming: “My work is done!”

Eye Driven Development is the easiest way to check that code works, but not the best one. It is easy because it involves virtually no intellectual effort: we manually click through the application, service, class, etc. from the end user's point of view, without considering boundary values, equivalence classes, negative scenarios, scenarios with different permission levels, and so on. This method gives no fast feedback during development, does not let you check the code under test against a diverse sample of data, takes a lot of time, and does not improve the quality of the product.

The best way is to write autotests for the code being developed. As far as responsibility for the code is concerned, it does not matter whether the autotests are written before or after the code itself. The main thing this approach gives you is confidence that the work is really done and you can move on to the next task. Add the other advantages (fast feedback, the ability to check the code against a large sample of data, and high execution speed), and autotests written by developers become an excellent tool for improving product quality.
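To make the contrast with Eye Driven Development concrete, here is a minimal sketch of a developer-written unit test in Python that checks equivalence classes, boundary values, and a negative scenario in milliseconds. The function `shipping_cost_cents` and its rule (free shipping from 50.00) are hypothetical, invented purely for illustration.

```python
# Hypothetical rule for illustration: orders of 5000 cents (50.00) or more ship free,
# cheaper orders pay a flat 499 cents; negative totals are invalid input.
FREE_SHIPPING_THRESHOLD_CENTS = 5000
FLAT_SHIPPING_CENTS = 499


def shipping_cost_cents(order_total_cents: int) -> int:
    if order_total_cents < 0:
        raise ValueError("order total cannot be negative")
    if order_total_cents >= FREE_SHIPPING_THRESHOLD_CENTS:
        return 0
    return FLAT_SHIPPING_CENTS


def test_equivalence_classes() -> None:
    # One representative value per class is enough.
    assert shipping_cost_cents(1000) == FLAT_SHIPPING_CENTS  # "paid shipping" class
    assert shipping_cost_cents(12000) == 0                   # "free shipping" class


def test_boundary_values() -> None:
    # Bugs cluster at the edges where behavior changes.
    assert shipping_cost_cents(0) == FLAT_SHIPPING_CENTS
    assert shipping_cost_cents(FREE_SHIPPING_THRESHOLD_CENTS - 1) == FLAT_SHIPPING_CENTS
    assert shipping_cost_cents(FREE_SHIPPING_THRESHOLD_CENTS) == 0


def test_negative_scenario() -> None:
    # Invalid input must be rejected, not silently priced.
    try:
        shipping_cost_cents(-1)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a negative total")


if __name__ == "__main__":
    test_equivalence_classes()
    test_boundary_values()
    test_negative_scenario()
    print("all checks passed")
```

If the file is saved under a name such as `test_shipping.py`, pytest would discover the three `test_` functions automatically; the `__main__` block lets the same checks run with plain `python` as well.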

We do not work the way we always have

Let us mentally model how events might unfold in a team whose members are responsible for the code they write.

We have a product that is already running in production or about to be released, and it is covered by unit and integration tests. The code is constantly being improved, and new functionality is added without fear of breaking what already exists. To make sure the new functionality works, you no longer need to “throw the code over the wall” to the testing department, waste time waiting for a verdict, and then repeat the iteration over and over again.

The QA department has already been created and is actively involved in the development process, genuinely working on assuring product quality. When it comes to quality assurance, I find it convenient to be guided by the Testing Quadrants:

The Testing Quadrants divide testing into four categories.

The first category is testing the product implementation, which creates a safety net for the development team. It covers low-level testing with unit and integration tests and lets developers see that, from a technical point of view, they are doing things right (Do Things Right). Naturally, low-level tests are fully automated and written by the development team, since they fall within its area of interest.

The second category is testing the business functions of the product, which also creates a safety net for the development team. This covers types of testing such as Examples, Story Tests, and others, aimed primarily at establishing proper communication between the business and the development team and letting the team see that it is doing the right things (Do Right Things), the ones the business really needs.

Automated Examples or Story Tests are end-to-end tests that check not complex interface usage scenarios but only business logic, albeit through an interface available to the end user. Since this category of testing is still within the development team's area of interest, its automation falls to the development team.

The third category is testing the business functions of the product that are critical to how the end user perceives product quality. It includes exploratory testing, testing of complex usage scenarios, usability testing, and alpha and beta testing. Tests in this category are entirely the QA team's responsibility, and automating them is impossible or too complicated.

Speaking specifically of exploratory testing and testing of complex scenarios that can find functional bugs, it is worth noting that every bug found is a bug in the code and can be covered by a corresponding unit or integration test. Martin Fowler put this well. Here is his original wording, followed by my rather free paraphrase:

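“I always argue that high-level tests are there as a second line of test defense. If you get a failure in a high level test, not just do you have a bug in your functional code, you also have a missing or incorrect unit test. Thus I advise that before fixing a bug exposed by a high level test, you should replicate the bug with a unit test. Then the unit test ensures the bug stays dead.”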

I argue that high-level tests are only a second line of defense. A failed high-level test means not only a bug in the product code but also a missing unit test or an error in an existing one. My advice to you: before fixing a bug found by a high-level test, reproduce it with a unit test, and you will say goodbye to that bug forever.
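This advice can be sketched as a regression unit test. In the hypothetical Python example below, a high-level test has reported that a cart with exactly three items does not receive a “buy 3, get 10% off” discount; the function `discount_percent` and the discount rule are invented for illustration. The unit test is written first, fails against the buggy comparison (`> 3`), and passes once the comparison is fixed to `>= 3`.

```python
# Hypothetical business rule: carts with 3 or more items get a 10% discount.
MIN_ITEMS_FOR_DISCOUNT = 3
DISCOUNT_PERCENT = 10


def discount_percent(item_count: int) -> int:
    # Fixed version. The hypothetical buggy version used `>` instead of `>=`,
    # so a cart with exactly 3 items got no discount.
    if item_count >= MIN_ITEMS_FOR_DISCOUNT:
        return DISCOUNT_PERCENT
    return 0


def test_cart_with_exactly_three_items_gets_discount() -> None:
    # Reproduces the bug reported by the high-level test. Written before the fix,
    # it fails against the `>` version and keeps the bug from coming back.
    assert discount_percent(3) == DISCOUNT_PERCENT


def test_neighbours_of_the_boundary() -> None:
    # Cheap extra checks around the same edge.
    assert discount_percent(2) == 0
    assert discount_percent(4) == DISCOUNT_PERCENT


if __name__ == "__main__":
    test_cart_with_exactly_three_items_gets_discount()
    test_neighbours_of_the_boundary()
    print("regression tests passed")
```

Unlike the high-level test that discovered the problem, this unit test runs in milliseconds on every build, which is what keeps the bug “dead”.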

The fourth category is testing the product properties that are critical to how the end user perceives product quality. Typically this includes load testing, performance testing, and security and reliability testing. Such testing is carried out with special tools, often written for the needs of a particular project. Ideally, the DevOps department handles the infrastructure for these tests, and … a separate team. Moreover, the tools should make it possible to run tests on demand (Testing as a Service).

Since the QA department is now responsible not only for controlling product quality but also for assuring it, its responsibilities include:

- Helping developers write tests in the first category

- Taking part in shaping Examples and Story Tests when the development team communicates with business representatives

- Conducting exploratory testing and testing of complex scenarios

- Conducting usability testing and working with user feedback

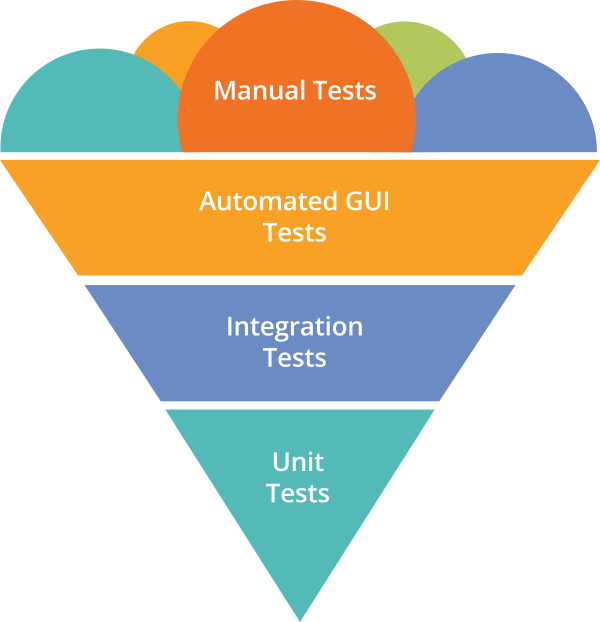

Manual black-box regression test cases do not grow endlessly, because the development team covers most of them in the first and second categories. This way we get the right ratio of tests or, as it is commonly called, the “testing pyramid”:

The number of manual regression tests grows only minimally, and automation at the unit, integration, and GUI levels is done by the development team. Moreover, there are few GUI tests, and they do not describe complex GUI usage scenarios but rather exercise business logic through the user interface, which makes them less fragile and cheaper to maintain. The QA department genuinely works on quality assurance: it gathers and processes user feedback, performs exploratory testing, tests new functionality with complex scenarios, and helps developers communicate with the business. Testing on the project is automated more efficiently than in the first case, yet no automation team has appeared inside the QA department.

So I repeat the question: “Is Automation QA a separate team?”


Source: https://habr.com/ru/post/326020/
