WebRTC, Safari

Last April, a press release made the rounds saying that Apple was rolling out WebRTC support in Safari for Mac OS and iOS. Almost exactly a year has passed since then, and Apple is still rolling it out. We are waiting.

However, not everyone is waiting. Some people need real-time video in Safari right now. In this article we explain how to get by without WebRTC in iOS Safari and Mac OS Safari, and what you can replace it with.

We currently know of the following options:


- HLS

- Flash

- Websockets

- WebRTC Plugin

- iOS native app

Since we are looking for an alternative to RTC (Real-Time Communication), we compare these options not only by platform support (iOS / Mac OS) but also by average latency in seconds.

| Technology | iOS | Mac OS | Latency, s |
|---|---|---|---|
| HLS, DASH | Yes | Yes | 15 |
| Flash RTMP | No | Yes | 3 |
| Flash RTMFP | No | Yes | 1 |
| Websockets | Yes | Yes | 3 |
| WebRTC Plugin | No | Yes | 0.5 |
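To make the comparison concrete, here is a small sketch that encodes the table as data and ranks the options available on a given platform by average latency. The figures are the article's rough averages; the names `OPTIONS` and `rankOptions` are ours, purely for illustration.

```javascript
// Platform support and average latency (seconds), copied from the table above.
const OPTIONS = [
  { name: "HLS, DASH", ios: true, macos: true, latency: 15 },
  { name: "Flash RTMP", ios: false, macos: true, latency: 3 },
  { name: "Flash RTMFP", ios: false, macos: true, latency: 1 },
  { name: "Websockets", ios: true, macos: true, latency: 3 },
  { name: "WebRTC Plugin", ios: false, macos: true, latency: 0.5 },
];

// Return the options available on a platform ("ios" or "macos"),
// ordered from lowest to highest average latency.
function rankOptions(platform) {
  if (platform !== "ios" && platform !== "macos") {
    throw new Error("Unknown platform: " + platform);
  }
  return OPTIONS
    .filter((option) => option[platform])
    .sort((a, b) => a.latency - b.latency) // stable sort keeps table order for ties
    .map((option) => option.name);
}

console.log(rankOptions("ios").join(" -> "));
// Websockets -> HLS, DASH
console.log(rankOptions("macos").join(" -> "));
// WebRTC Plugin -> Flash RTMFP -> Flash RTMP -> Websockets -> HLS, DASH
```

On iOS only two entries survive the filter, which is the point the rest of the article builds on.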

HLS

As the table shows, HLS drops out of real time entirely with its delay of 15 seconds or more, although it works fine on both platforms.

Flash RTMP

Although Flash is dead, it still works on Mac OS and delivers real-time latency. However, Flash support in Safari is unreliable: Flash is sometimes simply disabled.

Flash RTMFP

Same as Flash RTMP, the only difference being that it works over UDP and can drop packets, which is clearly better for real time. Good latency. Does not work on iOS.

Websockets

An alternative to HLS if you need relatively low latency. Works on iOS and Mac OS.

Here the video stream is delivered over Websockets (RFC 6455), decoded in JavaScript, and drawn to an HTML5 canvas using WebGL. This method is much faster than HLS, but it has drawbacks:

- One-way delivery. You can only play a stream in real time; you cannot capture video from the device's camera and send it to the server.

- Resolution limits. At 800x400 and above, smoothly decoding the stream in JavaScript already requires the powerful CPU of a recent iPhone or iPad. On iPhone 5 and iPhone 6 you most likely cannot go above 640x480 and keep playback smooth.
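One way to respect such a ceiling is to scale the requested playback size down to a pixel budget while keeping the aspect ratio; the player script later in this article does request a size through `options.playWidth` and `options.playHeight`. The sketch below is our own heuristic, not something the player or server is known to do; the default budget of 640x480 pixels is an assumption taken from the iPhone 5/6 figure above, and `capResolution` is a hypothetical name.

```javascript
// Hypothetical helper: scale a resolution down so that width * height fits
// a pixel budget (default 640x480), preserving the aspect ratio.
function capResolution(width, height, maxPixels = 640 * 480) {
  if (width <= 0 || height <= 0) {
    throw new RangeError("width and height must be positive");
  }
  if (width * height <= maxPixels) {
    return { width, height }; // already within budget
  }
  const scale = Math.sqrt(maxPixels / (width * height));
  // Round down to even numbers: 4:2:0 video generally needs even dimensions.
  const even = (n) => Math.max(2, Math.floor(n / 2) * 2);
  return { width: even(width * scale), height: even(height * scale) };
}

console.log(capResolution(1280, 720)); // { width: 738, height: 414 }
console.log(capResolution(640, 480));  // { width: 640, height: 480 }
```

Rounding down (rather than to the nearest value) guarantees the result never exceeds the budget.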

WebRTC Plugin

On Mac OS, you can install a plugin that implements WebRTC. This certainly gives the best latency, but it requires the user to download and install third-party software. In fairness, Adobe Flash Player is also a plugin and may also require manual installation, but Adobe's "dead" Flash plugin is far more widespread than no-name WebRTC plugins. In addition, WebRTC plugins do not work in iOS Safari.

Other WebRTC Alternatives for iOS

If you are not limited to the Safari browser, you can consider the following options:

iOS native app

Build an iOS application with WebRTC support and get low latency and the full power of WebRTC. The downsides: it is not a browser, and it requires installation from the App Store.

Bowser

A browser for iOS with WebRTC support. It is said to support WebRTC, but we have not tested it. It is not a very popular browser, but if you can get your users to install it, it may be worth a try.

Ericsson

Same as Bowser. It is unclear how well it handles WebRTC. Not popular on iOS.

Waiting

You can wait for Apple to ship WebRTC. A year has already passed, so perhaps the wait will not be much longer. Maybe someone has inside information?

Websockets as a replacement for WebRTC on iOS

The table above shows that iOS Safari has only two options: HLS and Websockets. The first has a delay of more than 15 seconds; the second has its limitations and a delay of about 3 seconds. There is also MPEG DASH, but in terms of real time it is no different from HLS / HTTP.

Because of the limitations described above:

- decoding in JavaScript, with only low resolutions supported

- one-way streaming (playback only)

Websockets cannot, of course, claim to be a full replacement for WebRTC in the iOS Safari browser, but they do let you play real-time streams right now.

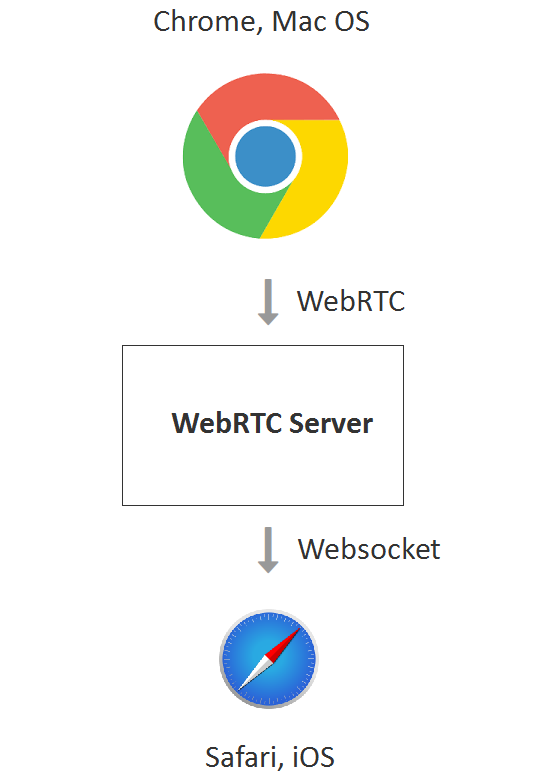

With this approach, the real-time streaming pipeline looks like this:

- We send a WebRTC video stream, for example from the Chrome browser on Mac OS or Windows, to a WebRTC server that supports conversion to Websockets.

- The WebRTC server converts the stream to MPEG + G.711 and wraps it in the Websockets transport protocol.

- iOS Safari connects to the server over Websockets and receives the video stream. It then unpacks the stream and decodes the audio and video. Audio plays through the Web Audio API, and video is rendered to a canvas using WebGL.
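To illustrate the audio half of the last step: G.711 decoding is simple enough to do in JavaScript. The text only says "G.711", so the sketch assumes the mu-law variant (PCMU); A-law uses a different expansion. It converts mu-law bytes to the floating-point samples Web Audio works with. This is a generic textbook decoder, not the one shipped with Web Call Server's player.

```javascript
// Decode one G.711 mu-law byte to a 16-bit linear PCM value (range -32124..32124).
function muLawToLinear(byte) {
  const u = ~byte & 0xff;           // mu-law bytes are stored bit-inverted
  const sign = u & 0x80;
  const exponent = (u >> 4) & 0x07;
  const mantissa = u & 0x0f;
  // Rebuild the magnitude, then remove the 0x84 (132) encoding bias.
  const magnitude = (((mantissa << 3) + 0x84) << exponent) - 0x84;
  return sign ? -magnitude : magnitude;
}

// Convert a payload of mu-law bytes to floats in roughly [-1, 1] for Web Audio.
function decodeMuLaw(bytes) {
  const pcm = new Float32Array(bytes.length);
  for (let i = 0; i < bytes.length; i++) {
    pcm[i] = muLawToLinear(bytes[i]) / 32768;
  }
  return pcm;
}

console.log(muLawToLinear(0xff), muLawToLinear(0x80), muLawToLinear(0x00));
// 0 32124 -32124
```

How the decoded samples are then scheduled for playback (buffering, resampling from 8 kHz) is left out here.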

Testing Websockets playback in iOS Safari

We use Web Call Server 5, which supports this conversion and delivers the stream to iOS Safari over Websockets. The source of the real-time video stream can be a webcam sending video to the server or an IP camera streaming over RTSP.

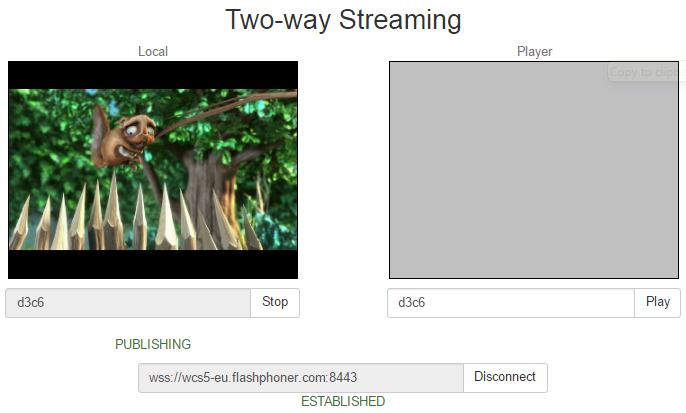

This is how a real-time WebRTC video stream is sent to the server from Google Chrome on the desktop:

And this is playback of the same video stream in real time in the iOS Safari browser:

Here we specified d3c6 as the stream name, i.e. the video stream that was sent from the Chrome browser via WebRTC.

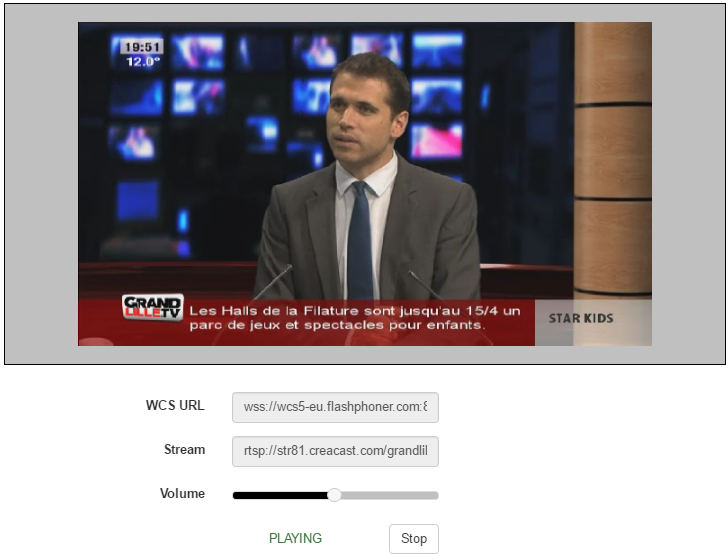

If the video stream comes from an IP camera, it looks like this in iOS Safari:

As you can see from the screenshot, we used the RTSP address as the video stream name. The server took the RTSP stream and converted it to Websockets for iOS Safari.
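The player passes both kinds of names the same way; it is the server that pulls the RTSP stream. If a page still wants to tell the two apart on the client (for example, to show the camera host in the UI), a hypothetical helper could look like this. The name `describeStreamName` and the returned shape are our own; port 554 is the standard RTSP default.

```javascript
// Hypothetical helper: distinguish a published stream name (such as "d3c6")
// from an RTSP camera URL (such as "rtsp://host:554/stream.sdp").
function describeStreamName(name) {
  if (/^rtsp:\/\//i.test(name)) {
    const url = new URL(name); // throws on a malformed URL
    // Non-special schemes keep an explicit port; fall back to RTSP's default.
    return { kind: "rtsp", host: url.hostname, port: url.port || "554" };
  }
  return { kind: "published", name };
}

console.log(describeStreamName("rtsp://host:554/stream.sdp").kind); // rtsp
console.log(describeStreamName("d3c6").kind);                       // published
```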

Integrating the iOS Safari player into a web page

The source code of the player is available here. However, the linked player is not limited to iOS Safari: it switches between three technologies, WebRTC, Flash and Websockets, in order of priority, and therefore contains somewhat more code than is needed for playback in iOS Safari.
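The linked player's own selection logic is not reproduced in this article. As a rough illustration of "in order of priority", here is a hypothetical sketch that picks the first technology the browser appears to support. The capability checks are simplified assumptions (Flash is detected only by its MIME type, and Websockets playback really also needs WebGL), and the function names are ours.

```javascript
// Hypothetical sketch, NOT the Flashphoner player's actual code.
const PRIORITY = ["WebRTC", "Flash", "Websockets"];

// Rough capability detection against a browser-like global object.
function detectCapabilities(env = globalThis) {
  const nav = env.navigator || {};
  const mimeTypes = nav.mimeTypes || {};
  return {
    WebRTC: typeof env.RTCPeerConnection === "function",
    Flash: Boolean(mimeTypes["application/x-shockwave-flash"]),
    // Only the socket is checked; canvas/WebGL support is assumed.
    Websockets: typeof env.WebSocket === "function",
  };
}

// Return the first supported technology in priority order.
function chooseTechnology(capabilities, priority = PRIORITY) {
  const choice = priority.find((tech) => capabilities[tech]);
  if (choice === undefined) {
    throw new Error("No supported playback technology");
  }
  return choice;
}

// In a browser: chooseTechnology(detectCapabilities())
// iOS Safari at the time had WebSocket but neither WebRTC nor Flash:
console.log(chooseTechnology({ WebRTC: false, Flash: false, Websockets: true })); // Websockets
```

Passing the environment in explicitly keeps detection testable outside a browser.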

Let's strip the player code down to the minimal configuration that plays in iOS Safari.

The minimal HTML page, player-ios-safari.html, looks like this:

```html
<!DOCTYPE html>
<html>
<head>
    <meta charset="utf-8">
    <!-- Web SDK API first, then the player script that uses it -->
    <script src="flashphoner.js"></script>
    <script src="player-ios-safari.js"></script>
</head>
<body onLoad="init_page()">
    <h1>The player</h1>
    <div id="remoteVideo" style="width:320px;height:240px;border: 1px solid"></div>
    <input type="button" value="start" onClick="start()"/>
    <p id="status"></p>
</body>
</html>
```

The main element on the page is a div block:

```html
<div id="remoteVideo" style="width:320px;height:240px;border: 1px solid"></div>
```

This block is where the video plays: the API scripts insert an HTML5 Canvas into it.

Next comes the player script, player-ios-safari.js, about 80 lines long:

```javascript
var SESSION_STATUS = Flashphoner.constants.SESSION_STATUS;
var STREAM_STATUS = Flashphoner.constants.STREAM_STATUS;
var remoteVideo;
var stream;

function init_page() {
    //init api
    try {
        Flashphoner.init({
            receiverLocation: '../../dependencies/websocket-player/WSReceiver2.js',
            decoderLocation: '../../dependencies/websocket-player/video-worker2.js'
        });
    } catch(e) {
        console.error("Failed to initialize Flashphoner API", e);
        return;
    }
    //video display
    remoteVideo = document.getElementById("remoteVideo");
    onStopped();
}

function onStarted(stream) {
    //on playback start
}

function onStopped() {
    //on playback stop
}

function start() {
    Flashphoner.playFirstSound();
    var url = "wss://wcs5-eu.flashphoner.com:8443";
    //create session
    console.log("Create new session with url " + url);
    Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function(session){
        setStatus(session.status());
        //session connected, start playback
        playStream(session);
    }).on(SESSION_STATUS.DISCONNECTED, function(){
        setStatus(SESSION_STATUS.DISCONNECTED);
        onStopped();
    }).on(SESSION_STATUS.FAILED, function(){
        setStatus(SESSION_STATUS.FAILED);
        onStopped();
    });
}

function playStream(session) {
    var streamName = "12345";
    var options = {
        name: streamName,
        display: remoteVideo
    };
    options.playWidth = 640;
    options.playHeight = 480;
    stream = session.createStream(options).on(STREAM_STATUS.PLAYING, function(stream) {
        setStatus(stream.status());
        onStarted(stream);
    }).on(STREAM_STATUS.STOPPED, function() {
        setStatus(STREAM_STATUS.STOPPED);
        onStopped();
    }).on(STREAM_STATUS.FAILED, function() {
        setStatus(STREAM_STATUS.FAILED);
        onStopped();
    });
    stream.play();
}

//show connection or remote stream status in the <p id="status"> element
function setStatus(status) {
    document.getElementById("status").textContent = status;
}
```

The most important part of this script is API initialization:

```javascript
Flashphoner.init({
    receiverLocation: '../../dependencies/websocket-player/WSReceiver2.js',
    decoderLocation: '../../dependencies/websocket-player/video-worker2.js'
});
```

During initialization, two more scripts are loaded:

- WSReceiver2.js

- video-worker2.js

These scripts are the core of the Websockets player: the first delivers the video stream and the second processes it. The flashphoner.js, WSReceiver2.js and video-worker2.js scripts are available for download in the Web SDK build for Web Call Server and must be included in order to play the stream in iOS Safari.
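The split between a delivery script and a processing script typically means the main thread only receives data while a Web Worker does the heavy decoding. Below is a hypothetical sketch of that receiving side; it is not the code of WSReceiver2.js or video-worker2.js, whose wire format is not described here. The `decoder` argument is anything with a `postMessage(data, transfer)` method, such as a `Worker`.

```javascript
// Hypothetical sketch of the receiver side of a receiver/decoder split.
function createReceiver(decoder) {
  let forwarded = 0;

  function onMessage(event) {
    const data = event.data;
    // With ws.binaryType = "arraybuffer", media arrives as an ArrayBuffer;
    // anything else (for example a text control message) is ignored here.
    if (!(data instanceof ArrayBuffer)) {
      return;
    }
    // Transfer the buffer instead of copying it; it is unusable here afterwards.
    decoder.postMessage(data, [data]);
    forwarded += 1;
  }

  return { onMessage, forwardedCount: () => forwarded };
}

// Browser wiring (placeholders: the worker script name and server URL):
// const worker = new Worker("decoder-worker.js");
// const ws = new WebSocket("wss://example.com:8443");
// ws.binaryType = "arraybuffer";
// ws.onmessage = createReceiver(worker).onMessage;
```

Transferring rather than copying matters at video bitrates, since each frame would otherwise be duplicated between threads.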

Thus, we have the following required scripts:

- flashphoner.js

- WSReceiver2.js

- video-worker2.js

- player-ios-safari.js

The connection to the server is established using the following code:

```javascript
Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function(session){
    setStatus(session.status());
    //session connected, start playback
    playStream(session);
}).on(SESSION_STATUS.DISCONNECTED, function(){
    setStatus(SESSION_STATUS.DISCONNECTED);
    onStopped();
}).on(SESSION_STATUS.FAILED, function(){
    setStatus(SESSION_STATUS.FAILED);
    onStopped();
});
```

The video stream itself is played with the API call createStream().play(). During playback, an HTML Canvas element is embedded in the remoteVideo div, and the video stream is rendered onto it.

```javascript
function playStream(session) {
    var streamName = "12345";
    var options = {
        name: streamName,
        display: remoteVideo
    };
    options.playWidth = 640;
    options.playHeight = 480;
    stream = session.createStream(options).on(STREAM_STATUS.PLAYING, function(stream) {
        setStatus(stream.status());
        onStarted(stream);
    }).on(STREAM_STATUS.STOPPED, function() {
        setStatus(STREAM_STATUS.STOPPED);
        onStopped();
    }).on(STREAM_STATUS.FAILED, function() {
        setStatus(STREAM_STATUS.FAILED);
        onStopped();
    });
    stream.play();
}
```

We have hardcoded two things in the code:

1) The server URL

```javascript
var url = "wss://wcs5-eu.flashphoner.com:8443";
```

This is the public Web Call Server 5 demo server. If something is wrong with it, install your own server for testing.
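Rather than editing the script to change the server (or the stream name discussed next), a page could read both from its query string, e.g. `player-ios-safari.html?server=wss://host:8443&stream=12345`. This is a sketch under our own assumptions: the parameter names `server` and `stream` and the function `readPlayerConfig` are hypothetical, and the defaults are the values hardcoded in player-ios-safari.js.

```javascript
// Defaults: the values hardcoded in player-ios-safari.js.
const DEFAULT_SERVER = "wss://wcs5-eu.flashphoner.com:8443";
const DEFAULT_STREAM = "12345";

// Read the server URL and stream name from a query string such as
// "?server=wss://host:8443&stream=d3c6", falling back to the defaults.
function readPlayerConfig(search) {
  const params = new URLSearchParams(search);
  const server = params.get("server") || DEFAULT_SERVER;
  if (!/^wss?:\/\//.test(server)) {
    throw new Error("Expected a ws:// or wss:// URL, got " + server);
  }
  return { server, stream: params.get("stream") || DEFAULT_STREAM };
}

// In the page: var config = readPlayerConfig(window.location.search);
console.log(readPlayerConfig("?stream=d3c6").stream); // d3c6
```

An RTSP URL used as a stream name should be passed through `encodeURIComponent` when building the link, in case it contains `?` or `&`.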

2) The name of the stream to play

```javascript
var streamName = "12345";
```

This is the name of the video stream we play. If the stream comes from an RTSP IP camera, the name can be set like this:

```javascript
var streamName = "rtsp://host:554/stream.sdp";
```

The least conspicuous, but very important, function is:

```javascript
Flashphoner.playFirstSound();
```

On mobile platforms, and on iOS Safari in particular, the Web Audio API is restricted: sound cannot be played through the speakers until the user taps some element on the page. Therefore, when the Start button is clicked, we call playFirstSound(), which plays a short piece of generated audio so that the video can then play with sound.
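The idea behind this trick can be sketched with standard Web Audio API calls: inside the click handler, resume the context if it is suspended and play a one-frame silent buffer. This is NOT the implementation of Flashphoner.playFirstSound(); `unlockAudio` is a hypothetical name, and the context is passed in so the logic can be exercised without a browser.

```javascript
// Play a tiny generated (silent) buffer from a user gesture so that later
// audio on this AudioContext is allowed to play.
function unlockAudio(context) {
  // A context created before any user gesture may start out "suspended".
  if (context.state === "suspended" && typeof context.resume === "function") {
    context.resume();
  }
  // One frame of silence: createBuffer returns a zero-filled buffer.
  const buffer = context.createBuffer(1, 1, context.sampleRate);
  const source = context.createBufferSource();
  source.buffer = buffer;
  source.connect(context.destination);
  source.start(0);
  return source;
}

// Browser usage (older Safari only exposes the prefixed webkitAudioContext):
// const AudioCtx = window.AudioContext || window.webkitAudioContext;
// const ctx = new AudioCtx();
// ...then call unlockAudio(ctx) at the top of the Start button's click handler.
```

The key design point is where it is called, not what it plays: the call must happen synchronously inside the user's tap or click handler.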

In the end, our minimal custom player consists of four scripts and one HTML file, player-ios-safari.html:

- flashphoner.js

- WSReceiver2.js

- video-worker2.js

- player-ios-safari.js

- player-ios-safari.html

Download the source code of the player here.

We have covered the current WebRTC alternatives for iOS Safari and walked through an example of a real-time player that delivers video over Websockets. Perhaps it will help someone wait for WebRTC to arrive in Safari.

Links

Press release - Apple rolls out WebRTC for Safari

Websockets - RFC6455

WebGL Specification

Web Call Server - a WebRTC server that can convert a stream to Websockets for playback on iOS Safari

WCS installation - download and install

Launch on Amazon EC2 - launch a ready-made server image on Amazon AWS

Source code of the example player: player-ios-safari.js and player-ios-safari.html

Web SDK - web API for WCS server, containing scripts flashphoner.js, WSReceiver2.js, video-worker2.js

Source: https://habr.com/ru/post/325978/
