Archaic video compression algorithms of the era of FMV games

In the 90s an interesting situation arose: computers were not powerful enough to render anything resembling realistic graphics, yet compact discs could store what was, for the time, a huge amount of data. The idea was obvious: improve the look of games with video content, since there was now somewhere to put it.

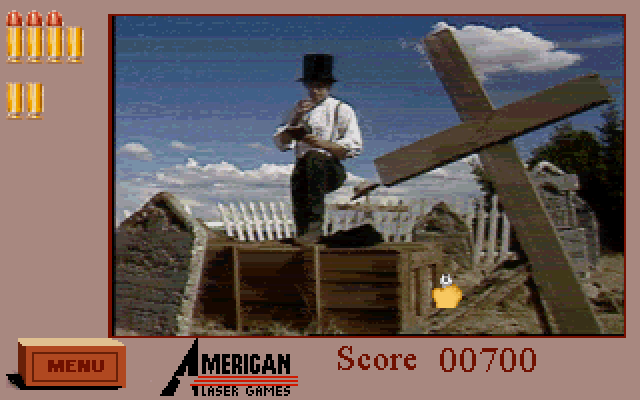

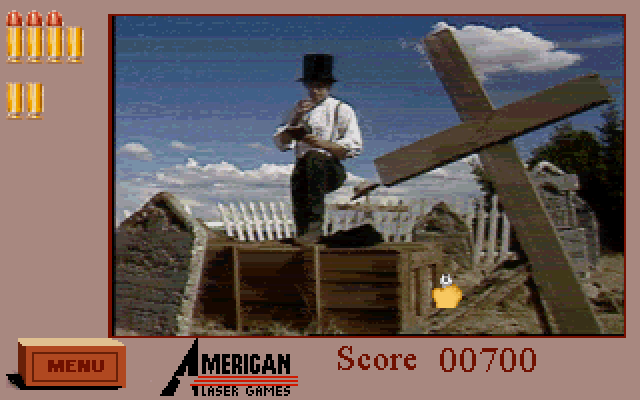

But there was a problem: the typical game resolution of the time was 320 by 200 pixels with a 256-color palette, which gives 64 kilobytes per frame, or about one and a half megabytes per second at 25 frames per second, while a CD drive read only 150 kilobytes per second. In other words, the video had to be compressed, and compressed hard, and then it still had to be decoded on the weak computers of the day, which could not handle MPEG, for example, at all. Nevertheless, game developers worked around the lack of performance, creating along the way a multitude of video codecs and game video formats, some of which could be played even on a 286 processor.

Thus began the era of FMV (Full Motion Video) games. I think many people remember its outstanding representatives: Crime Patrol from American Laser Games, Lost Eden, Cyberia, Novastorm, and even Command & Conquer, which many played just for the video sequences between missions. At the time it looked very cool. I always wondered how that video was encoded. Multimedia books gave the same description of the problem as I did above but wrote nothing intelligible about the compression methods; apparently the authors understood little of it and were retelling dubious rumors.

In fact, everything turned out to be very simple: there were only three methods, and none of them is complicated.

But before we move on to the methods themselves, there were a few widely used tricks. First, 25 frames per second was of course out of the question. Everyone cut the frame rate, down to as low as 10 frames per second (the interactive shooting galleries from American Laser Games); 12, 14, and 15 fps were typical. Second, the resolution was almost always reduced: trimming 10 pixels (about 3% of the width) from each side of the frame saves roughly 15%. And if the resolution is reduced to 256 by 144 pixels, as the Novastorm developers did, the saving is almost half.

So, if you take 256 by 144 pixels at 14 frames per second, you get about half a megabyte of uncompressed video per second instead of one and a half megabytes, which is encouraging.
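The arithmetic above can be checked with a few lines of Python. This is only a sketch: it assumes one byte per pixel (a 256-color palette) and takes the 150 kilobytes per second figure as 150 * 1024 bytes; the function and constant names are made up for illustration.

```python
# Uncompressed data rate for 8-bit palettized video (one byte per pixel).
CD_1X_BYTES_PER_SEC = 150 * 1024  # single-speed CD drive, figure from the text


def raw_rate(width, height, fps, bytes_per_pixel=1):
    """Bytes per second of uncompressed video."""
    return width * height * bytes_per_pixel * fps


full = raw_rate(320, 200, 25)     # full-screen video at 25 fps
reduced = raw_rate(256, 144, 14)  # Novastorm-like frame size at 14 fps

for name, rate in (("320x200 @ 25 fps", full), ("256x144 @ 14 fps", reduced)):
    ratio = rate / CD_1X_BYTES_PER_SEC
    print(f"{name}: {rate} bytes/s, needs about {ratio:.1f}x compression")
```

Under these assumptions the required compression ratio drops from roughly 10x to a little over 3x, which is within reach of very simple codecs.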

1. Brute force and ignorance (RLE, LZ)

There was a format called BFI, whose name its developers expanded as "Brute Force & Ignorance". This group includes various lightweight variations on the RLE and LZ algorithms. It may seem surprising, but this was often enough. If, for example, compression fell short in a dynamic scene, the encoder could always start skipping frames or lower the resolution further, by a factor of two or even four (MM). The videos in the American Laser Games shooting galleries, the Wing Commander series, Lost Eden, and other Cryo games are encoded this way.

Note the horizontal streaks characteristic of lossy RLE.
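To illustrate the idea, here is a minimal sketch of run-length encoding with an optional lossy mode. It is not the BFI format or any other real game format. It assumes pixel values can be compared numerically (grayscale, or a palette sorted by brightness), and the 255 cap on run length is an arbitrary choice so a run would fit in one byte.

```python
def rle_encode(pixels, tolerance=0):
    """Encode a row of pixel values as (run_length, value) pairs.

    With tolerance=0 this is plain lossless RLE. With tolerance > 0 a pixel
    joins the current run if it differs from the run's first value by at
    most `tolerance`, which makes the scheme lossy.
    """
    runs = []
    for p in pixels:
        if runs and abs(p - runs[-1][1]) <= tolerance and runs[-1][0] < 255:
            runs[-1][0] += 1          # extend the current run
        else:
            runs.append([1, p])       # start a new run
    return [tuple(r) for r in runs]


def rle_decode(runs):
    """Expand (run_length, value) pairs back into a row of pixels."""
    out = []
    for length, value in runs:
        out.extend([value] * length)
    return out


row = [10, 10, 11, 10, 12, 40, 41, 40, 40, 90]
lossless = rle_encode(row)             # 8 runs, decodes to the exact row
lossy = rle_encode(row, tolerance=2)   # 3 runs, small differences flattened
```

Because runs follow scan lines, the flattening in lossy mode spreads along a row, which is a plausible explanation for the horizontal streaks.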

2. Vector quantization (VQ)

The idea is simple. Take, say, eight consecutive frames and cut them all into blocks of 4x2 pixels. At a resolution of 320 by 156 pixels this gives about 50,000 24-dimensional vectors (8 pixels per block, three color components per pixel). Consecutive frames are very likely to have a lot in common, so many of these vectors will be close to each other. Next, divide the vectors into 4096 groups and compute the centroid of each group. The centroids form a codebook, and the frames are written to the file as sequences of 12-bit indices into the codebook. Altogether this comes to about 13 kilobytes per frame, which is in principle workable for a double-speed CD drive.
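The numbers in this example can be reproduced roughly as follows. The text does not say how codebook entries are stored; the figure of about 13 kilobytes comes out if each entry is assumed to take 8 bytes (one palette index per pixel), which is my assumption. The function name and parameters are hypothetical.

```python
def vq_bytes_per_frame(width, height, block_w, block_h, frames_per_codebook,
                       codebook_size, index_bits, bytes_per_entry):
    """Return (index_bytes, codebook_bytes) per frame for a VQ scheme.

    The codebook cost is spread evenly over the frames that share it.
    """
    blocks = (width // block_w) * (height // block_h)
    index_bytes = blocks * index_bits / 8
    codebook_bytes = codebook_size * bytes_per_entry / frames_per_codebook
    return index_bytes, codebook_bytes


# Number of vectors in a group of 8 frames of 320x156 cut into 4x2 blocks.
vectors = (320 // 4) * (156 // 2) * 8

# Assumption: 8 bytes per codebook entry (palette indices, not RGB triples).
idx, cb = vq_bytes_per_frame(320, 156, 4, 2, 8, 4096, 12, bytes_per_entry=8)
print(vectors, idx, cb, (idx + cb) / 1024)
```

If the entries were instead stored as 24-byte RGB blocks, the codebook share per frame would triple, so the storage format of the codebook matters about as much as the index width.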

Roughly this approach was used in the early games of the Command & Conquer series.

Decoding is obviously very fast and cheap, but encoding video well with this method is very difficult. Not everyone who used VQ did it well; look at the screenshots from Creature Shock.
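The asymmetry between encoding and decoding shows up even in a toy sketch. The code below uses Lloyd's algorithm (k-means) to build the codebook, which is one common way to do the grouping; the text does not say which algorithm the games' encoders used. The evenly spaced seeding and the 3-component toy vectors are simplifications chosen for illustration.

```python
def dist2(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(codebook, v):
    """Index of the codebook entry closest to v."""
    return min(range(len(codebook)), key=lambda i: dist2(codebook[i], v))


def train_codebook(vectors, size, iterations=10):
    """Lloyd's algorithm: group vectors, replace each entry by its centroid."""
    # Simple deterministic seeding: evenly spaced input vectors.
    codebook = [list(vectors[i * len(vectors) // size]) for i in range(size)]
    for _ in range(iterations):
        groups = [[] for _ in codebook]
        for v in vectors:
            groups[nearest(codebook, v)].append(v)
        for i, g in enumerate(groups):
            if g:  # keep the old entry if a group ends up empty
                codebook[i] = [sum(c) / len(g) for c in zip(*g)]
    return codebook


def encode(vectors, codebook):
    return [nearest(codebook, v) for v in vectors]  # a search per block


def decode(indices, codebook):
    return [codebook[i] for i in indices]           # a table lookup per block


vectors = [(0, 0, 0), (1, 0, 2), (2, 1, 0),
           (200, 210, 190), (205, 200, 195), (198, 205, 200)]
codebook = train_codebook(vectors, size=2)
indices = encode(vectors, codebook)
restored = decode(indices, codebook)
```

Decoding is a single lookup per block, while training and encoding search the whole codebook for every vector, which with 4096 entries and tens of thousands of 24-dimensional vectors was a heavy job for the hardware of the time.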

3. Block Truncation Coding (BTC)

Here again everything is simple; nothing complicated was used in the 90s. Storing a 256-color image takes one byte per pixel. Now reduce the number of colors to, say, two: each pixel needs only one bit, which gives eightfold compression and a nightmarish image. What can be done about it? Apply the same approach not to the image as a whole but to small blocks, because the colors within a sufficiently small block are very likely to be close to each other. Cut the image into blocks of, say, 4 by 4 pixels, and for each block store two colors (its own private palette) plus 16 bits that select one of the two colors for each pixel. That is only 4 bytes per block of 16 pixels, i.e. 4x compression. If two colors turn out not to be enough for a block, store four colors instead, which gives 2x compression. Alternatively, split the block into four smaller blocks and compute two colors for each of them.
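A two-color block can be sketched as follows. This is an illustration rather than the layout of any real game format: it treats pixels as brightness values instead of palette colors, splits the block at its mean, and uses the mean of each group as its representative value. All names are hypothetical.

```python
def encode_block(block):
    """Encode 16 brightness values (a 4x4 block in row-major order).

    Returns (low, high, mask): two representative values and a 16-bit mask
    in which bit i is set when pixel i uses `high`.
    """
    mean = sum(block) / len(block)
    lo_px = [p for p in block if p < mean]
    hi_px = [p for p in block if p >= mean]   # never empty: max >= mean
    low = round(sum(lo_px) / len(lo_px)) if lo_px else round(mean)
    high = round(sum(hi_px) / len(hi_px))
    mask = 0
    for i, p in enumerate(block):
        if p >= mean:
            mask |= 1 << i
    return low, high, mask


def decode_block(low, high, mask, n=16):
    """Rebuild the block from two values and the selection mask."""
    return [high if (mask >> i) & 1 else low for i in range(n)]


block = [20, 22, 21, 200,
         19, 23, 198, 202,
         20, 199, 201, 200,
         197, 200, 203, 199]
low, high, mask = encode_block(block)
decoded = decode_block(low, high, mask)
packed_size = 2 + 2   # two one-byte values plus a 16-bit mask = 4 bytes
```

For this block, which contains an edge between a dark and a bright area, the decoded pixels differ from the originals by at most a few brightness levels while the block shrinks from 16 bytes to 4.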

This method was very popular: it is simple and fast to encode and decode, gives reasonable quality, and is easy to implement. Novastorm uses 8 by 8 blocks that can have a palette of 2, 4, or 8 colors. The well-known game Z also cuts the frame into 8 by 8 blocks and splits them into smaller pieces when necessary (JV). Cyberia uses a combined approach, with both subdivision into smaller blocks and palettes of different sizes (C93, M95).

The original BTC, which you can read about on Wikipedia, was designed for compressing grayscale images: the two levels are chosen so as to preserve the mean and the standard deviation of brightness within the block. I must say that this approach gives results of terrifying quality. A proper palette should minimize the sum of squared deviations; that is when the result looks good.
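The difference between the two ways of choosing levels can be seen on a single block. The sketch below implements the classic BTC levels (mean minus or plus the standard deviation scaled by the group sizes) and compares them with the group means, which minimize squared error for the same split. It works on brightness values only and keeps the split at the block mean; finding the best split as well would be a further step not shown here.

```python
import math


def split_by_mean(block):
    """Return the mean and the pixels below / at-or-above it."""
    mean = sum(block) / len(block)
    return mean, [p for p in block if p < mean], [p for p in block if p >= mean]


def classic_btc_levels(block):
    """Two levels that preserve the block's mean and standard deviation."""
    m = len(block)
    mean, lo, hi = split_by_mean(block)
    q = len(hi)
    if q == m:                      # flat block: one level is enough
        return mean, mean
    sigma = math.sqrt(sum((p - mean) ** 2 for p in block) / m)
    a = mean - sigma * math.sqrt(q / (m - q))
    b = mean + sigma * math.sqrt((m - q) / q)
    return a, b


def group_mean_levels(block):
    """Two levels that minimize squared error for the same split."""
    mean, lo, hi = split_by_mean(block)
    if not lo:
        return mean, mean
    return sum(lo) / len(lo), sum(hi) / len(hi)


def sse(block, a, b):
    """Sum of squared errors when pixels are replaced by level a or b."""
    mean = sum(block) / len(block)
    return sum((p - (b if p >= mean else a)) ** 2 for p in block)


block = [10, 12, 14, 40, 100, 160, 200, 250]
btc_a, btc_b = classic_btc_levels(block)
opt_a, opt_b = group_mean_levels(block)
btc_error = sse(block, btc_a, btc_b)
opt_error = sse(block, opt_a, opt_b)
```

For any block the group means give an error no larger than the classic levels, because within a fixed group the mean is the value that minimizes the sum of squared deviations.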

Of course, everyone used interframe compression in its most primitive form, often without any motion prediction. Brightness correction was out of the question in palettized video, so the frame rate visibly dropped in scenes that fade to dark. This was very noticeable in the videos of the very first Need for Speed; at the time I thought something was wrong with my 4x CD drive and even tried to return it to the store.
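The most primitive form of interframe compression can be sketched as "re-send only the blocks that changed". The code below is an illustration under that assumption and does not reproduce any particular game format; frames are flat row-major lists of palette indices, and the function name is hypothetical.

```python
def changed_blocks(prev, cur, width, height, block=4):
    """Return (bx, by) coordinates of blocks that differ between two frames.

    Only the returned blocks would need to be encoded again; all other
    blocks are simply kept from the previous frame.
    """
    changed = []
    for by in range(0, height, block):
        for bx in range(0, width, block):
            x_end = min(bx + block, width)
            for y in range(by, min(by + block, height)):
                start = y * width + bx
                end = y * width + x_end
                if prev[start:end] != cur[start:end]:
                    changed.append((bx // block, by // block))
                    break
    return changed


W, H = 8, 8
prev = [5] * (W * H)
cur = list(prev)
cur[3 * W + 6] = 9          # a single pixel changes at x=6, y=3
dimmed = [4] * (W * H)      # a fade changes the index of every pixel

print(changed_blocks(prev, cur, W, H))     # one block to update
print(changed_blocks(prev, dimmed, W, H))  # every block to update
```

With no way to express "the same block, but darker", a fade marks every block as changed, so each frame costs as much as a full key frame; that is consistent with the frame rate drops described above.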

A shot from the game Rebel Assault. Note the blockiness and the repeated blocks characteristic of VQ. The blocks are 4 by 4 and mostly two-color; most likely the codebook is additionally encoded with BTC.

As soon as computing power allowed, everyone switched to codecs based on the discrete cosine transform, and later to cutscenes rendered by the game engine. The era of FMV games was over.

There are people who research old video formats, reverse engineer codecs, and carefully collect and preserve this knowledge. I highly recommend visiting their website, wiki.multimedia.cx. It has a lot of information for anyone interested in audio and video compression, including modern codecs.

This article uses screenshots from mobygames.com.

Source: https://habr.com/ru/post/325968/
