Do we need neural networks?

Or the story of how I built image recognition with a convolutional neural network, minus the neural network. Interested? Then read on.

One summer evening playing Dota 2, I thought it would not be a bad thing to recognize the characters in the game, and to give out statistics on the most successful counter-character selection. First thought, you need to somehow pull the data from the match and immediately process them. But I rejected this idea, since I have no experience in hacking games. Then I decided that it was possible to make screenshots during the game, process them quickly, and thus obtain data about the selected characters.

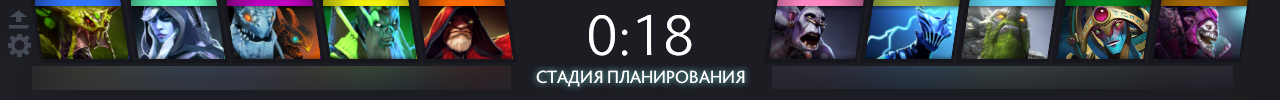

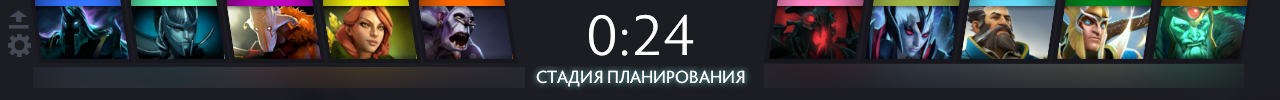

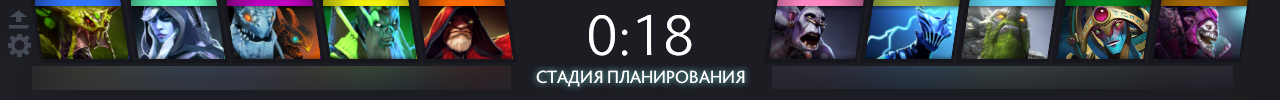

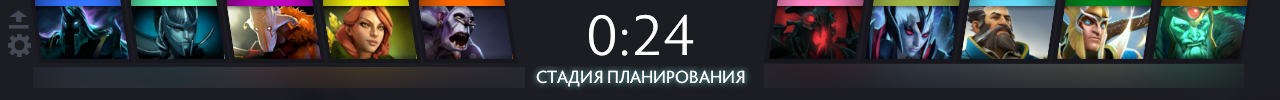

And so we begin. First we take a screenshot of the screen.

Everything looks like the image; it may just be to take hash from the image and search for it.

“Oh Gabe! Well, for that kind of torment me. "No, it will not work. One Gabe knows why the characters' images are so wriggled (although not only to him, I have an assumption that the images go resize (tsya), and the dimensions are given by a fractional number). So it is necessary to recognize the image in another way. Now neural networks are in fashion. So try to screw them. We will use convolutional neural networks. So we need:

Wait, what about oh oh oh oh

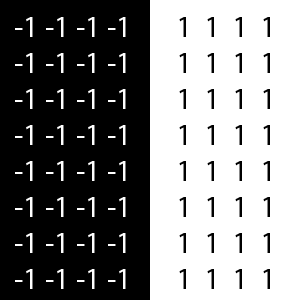

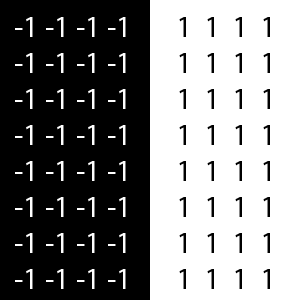

I don’t have enough life to accumulate such a base. I thought we should somehow do without a huge training sample, while not at the expense of recognition speed. There is an exhaustive article about the SNS (Convolutional Neural Network) on Wikipedia . Briefly explain the principle of the SNA can be so. The input matrix is the brightness of the pixels in grayscale. And multiplied by the convolutional core, then the values are added together and normalized. A convolution core is usually -1 and 1, denoting black and white colors, respectively, or vice versa.

There are popular kernel patterns, for example, Haar signs. Further in the article we will use precisely some signs of Haar. Then the convolution values pass through the input connections of the neuron, are multiplied by the weights of the connections, summed, and ultimately the value obtained is fed to the neuron's activation function. But this is pure comparison, or the difference of bundles, here it is. We just need to take the difference of the original convolution with the convolutions of the characters, the smaller the difference, the more similar the images. This is the simplest architecture. There are no hidden layers in it, since we do not need any abstract parameters. Let's try to do all of the above.

The original character image will be 78x53 px. In grayscale. That is, a matrix of size 78x53 with values of 0 ... 255. We will take the core of 10x10 in size. The kernel pitch will be the size of the core - 10. Both in x and y. (We don’t need a lot of parameters. After all, the images are not very different from each other). Total 48 values per character. Now let's get down to the code. We need to initialize the kernel with numbers according to the signs of Haar. Take the following signs.

Let's create the class ConvolutionCore where we will initialize the convolutional kernel.

I did dynamic stuffing, and initialization, regardless of kernel size. Now we will create a class of Convolution, in it we will do convolution

I will explain that in the class, in the static block, we load convolution kernels, since they are constant and do not change, and we will do a lot of bundles, initialize the kernels once and we will not do this anymore. In the constructor we take a matrix of values from the existing image, it is necessary to speed up the algorithm, so that we don’t take 1 pixel from the image each time, we will create a gray gradation matrix. In the ColapseMatrix method at the entrance, we have an image matrix and a convolution kernel. First, zeros are added to the end of the matrix in case the core does not align with the matrix. Then we go through the kernel along the matrix and consider the convolution. We save everything in an array. We also need to store the convolutions of characters. Therefore, we will create the hero class and add the following fields:

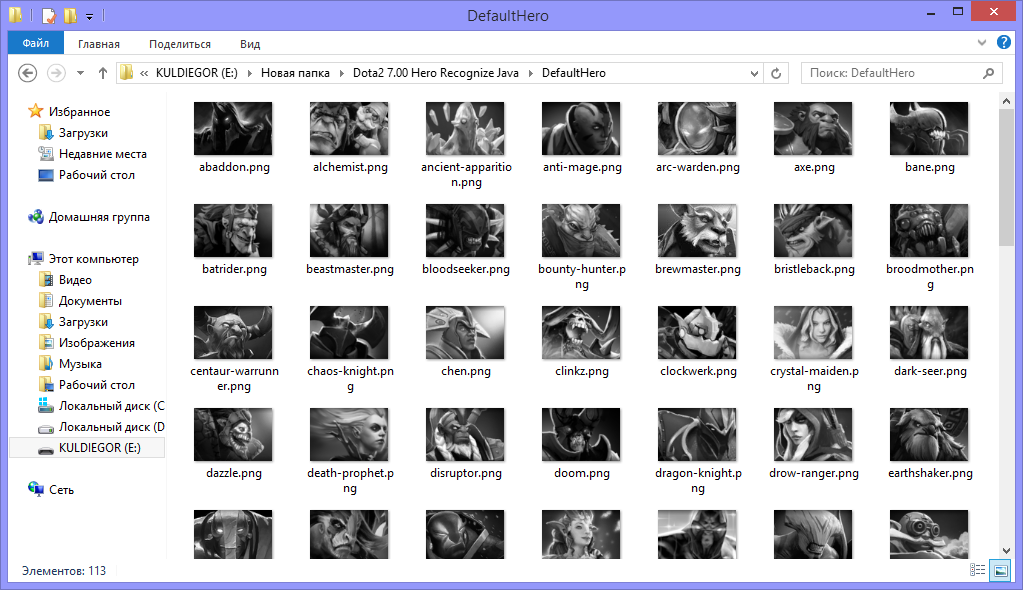

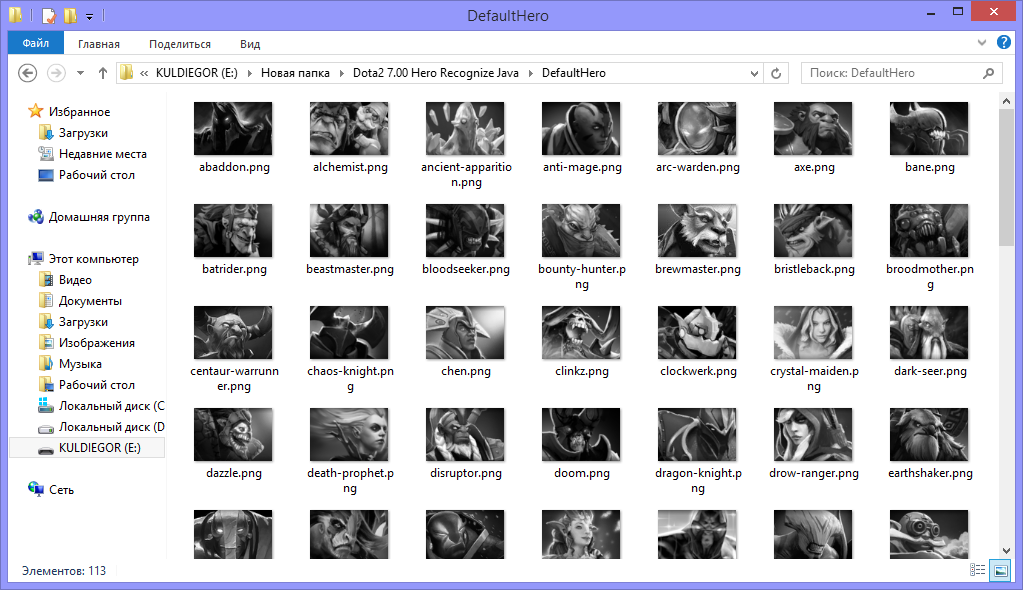

It is also necessary to load the convolutions samples, to compare the characters, create the class DefaultHero.

It's all very simple, run through all the convolutions and save them to a file. Similarly, loading from a file. Downloading from the catalog is different in that we consider the convolutions from the images that we will need when we accumulate a database of character images.

Well, the comparison itself. We consider the arithmetic mean of the difference between convolutions per core, if less than 0.02, then we assume that the images are similar, roughly speaking: “if the images are similar to 98%, then we consider them the same”. Then we assume that if at least half, or even more signs showed a positive result, then we indicate that the characters are equal.

Now we will make a screenshot of the screen.

In order not to look at the image of characters each time, we will immediately determine the boundaries of ten images. Then copy ten images from the screenshot and convert them to grayscale. In conclusion, we obtain a convolution of the image. We do this with ten images. And compare with the existing characters.

Now you need to accumulate a database of images. I tried to take pictures with dotabuff, but there were images that were absolutely different from dota (vskih). Therefore, it was decided to assemble them in semi-automatic mode. Slightly rewriting the code for the convolution wizard, added the “Make Screenshot” button. Comparison of convolutions occurs, each time, with loading of samples from the catalog, and if there are no samples, then save them to the catalog.

Wizard convolutions on github

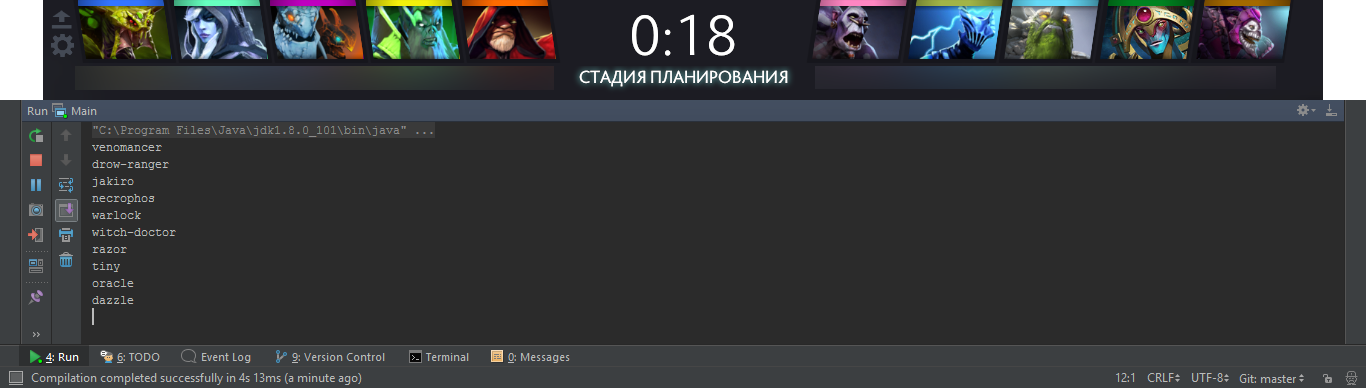

Go! We start the pillbox in the lobby and in turn select all 113 characters.

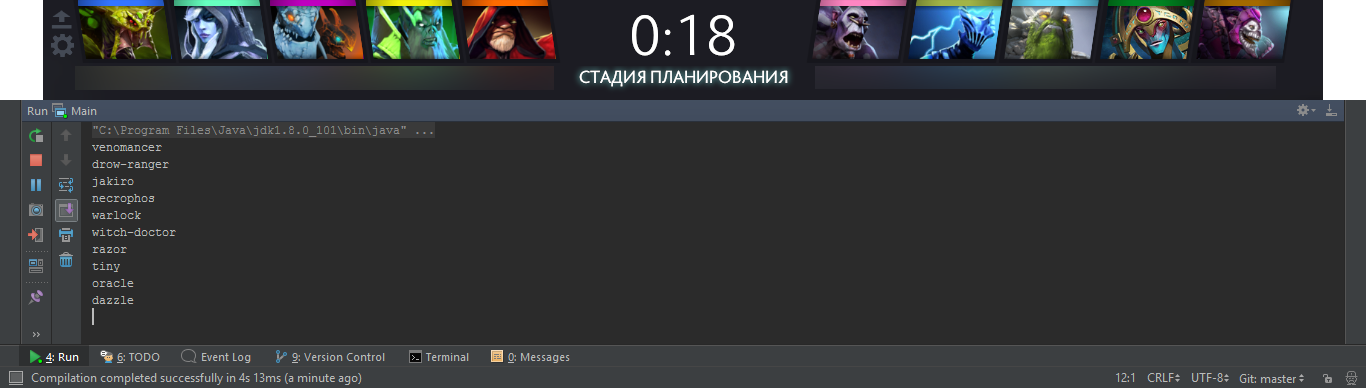

Base accumulated. Now you need to give a name to each character.

And save all the convolutions to a file. Now you can try to test the application.

Recognition errors are not practical. There is only one, when the game starts and around the black background the application reacts to this and gives out the character to Shadow Fiend, and when the choice of character comes, there are no errors. It remains to send data about the recognized characters on the network to the Android application so as not to minimize the game every time.

It's simple.

Accept the broadcast request. We answer it, plus we additionally send your ip in the request, although it is not necessary, but let it be. Then they open a tcp connection with us, and we start sending data every 300 milliseconds. As soon as the connection fails, we stop recognizing characters. I did this to reduce the CPU load. When the game has already started, I just rip the connection on the client and the processor is no longer loaded.

Android application. I will not give the code here since this very simple application will tell you briefly how it works. And I will give a link to GitHub.

The application sends a broadcast request over the network (you can enter a specific ip address of the server) to determine the server part of the application. As soon as a response comes from one of the servers, we connect to it and begin to receive data from it. To define a counter-counterpart, I chose the site dotabuff.com. I go through the list for each character and pull out the data "Strong against" and "Weak against" I am building a linked list. Then I take the sent characters and display a list of all the characters who are weaker than the chosen five. Along the way, checking that there were no characters in the list that “control” one of the five, that is, the characters in the list are absolutely weak against the five selected characters.

It works only at a resolution of 1280x1024, I have not tried it on other resolutions.

Recognition happens very quickly, the processor practically does not strain on my Lenovo B570e laptop with an Intel Celeron 1.5 GHz processor. Load 6%, with the recognition cycle 3 times per second. If the topic is interesting, I will tell you how I did the recognition of numbers on HealthBar (e), what difficulties I encountered, and how I solved them.

Thank you all who read to the end.

PS All references to the githab with the convolution wizard and the recognition program itself and the archive of images with convolution:

→ GitHub link to wizard convolutions

→ GitHub link to the application itself

→ GitHub link for Android app

→ Link to archive with images

→ Link to convolution file

Prehistory

One summer evening, while playing Dota 2, I thought it would be nice to recognize the characters in the game and show statistics on the most successful counter picks. My first thought was to pull the data out of the match somehow and process it on the fly. But I rejected that idea, since I have no experience in hacking games. Then I decided that I could take screenshots during the game, process them quickly, and obtain data about the selected characters that way.

Preparation

So, let's begin. First we take a screenshot.

Screenshot


Every portrait looks like a plain image, so maybe we can simply take a hash of each image and search by it.

Hm

“Oh Gabe, why do you torment me like this?” No, that won't work. Only Gabe knows why the character images are so distorted (though perhaps not only he: my guess is that the images get resized and the dimensions are specified as fractional numbers). So the images must be recognized some other way. Neural networks are in fashion now, so let's try to bolt one on. We will use a convolutional neural network. For that we need:

- A convolution kernel.

- Haar-like features.

- A test set of images, a thousand or so per character...

- ...

Wait, what? Oh-oh-oh-oh...

I don't have a lifetime to spare for collecting such a dataset. I figured we should somehow manage without a huge training set, and without sacrificing recognition speed. Wikipedia has an exhaustive article on CNNs (convolutional neural networks). The principle can be summarized briefly as follows. The input is a matrix of pixel brightness values in grayscale. It is multiplied element by element by the convolution kernel, then the products are summed and normalized. A kernel of the kind used here consists of -1 and 1 values, denoting black and white respectively, or vice versa.

There are popular kernel patterns, for example Haar-like features. This article uses a few of them. In a network, the convolution values then pass through the neuron's input connections, are multiplied by the connection weights and summed, and the result is fed to the neuron's activation function. But that amounts to a plain comparison, that is, a difference of convolutions. We just need to take the difference between the convolution of the input image and the stored convolutions of each character: the smaller the difference, the more similar the images. This is the simplest possible architecture. It has no hidden layers, since we don't need any abstract features. Let's try to implement all of the above.
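Before the full implementation, the idea can be sketched on a toy 2x2 block. This is only an illustration under assumptions: the class and method names are made up here, and the rescaling to the range 0..1 follows the normalization described in the text rather than any particular library.

```java
class HaarResponseSketch {
    /** Sum of pixel * kernel over one block, rescaled to the range 0..1. */
    static double response(int[][] block, int[][] kernel) {
        int sum = 0;
        int negatives = 0; // number of -1 cells in the kernel
        int positives = 0; // number of +1 cells in the kernel
        for (int y = 0; y < kernel.length; y++) {
            for (int x = 0; x < kernel[y].length; x++) {
                sum += block[y][x] * kernel[y][x];
                if (kernel[y][x] < 0) {
                    negatives++;
                } else {
                    positives++;
                }
            }
        }
        double min = -255.0 * negatives; // lowest possible sum
        double max = 255.0 * positives;  // highest possible sum
        return (sum - min) / (max - min);
    }

    /** Mean absolute difference between two vectors of responses. */
    static double distance(double[] a, double[] b) {
        double total = 0;
        for (int i = 0; i < a.length; i++) {
            total += Math.abs(a[i] - b[i]);
        }
        return total / a.length;
    }

    public static void main(String[] args) {
        int[][] kernel = {{-1, 1}, {-1, 1}};       // left half dark, right half light
        int[][] edge = {{0, 255}, {0, 255}};       // matches the pattern exactly
        int[][] flat = {{128, 128}, {128, 128}};   // uniform gray
        System.out.println(response(edge, kernel)); // 1.0
        System.out.println(response(flat, kernel)); // 0.5
        // Two stored response vectors: the smaller the distance, the more alike.
        System.out.println(distance(new double[]{0.5, 1.0}, new double[]{0.5, 0.5})); // 0.25
    }
}
```

A block that matches the pattern perfectly scores 1.0 and a featureless block scores 0.5, so comparing two images reduces to comparing two short vectors of such numbers.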

Write the code

The source character image is 78x53 px, in grayscale, that is, a 78x53 matrix with values 0...255. We take a 10x10 kernel. The kernel stride equals the kernel size, 10, in both x and y. (We don't need many parameters; after all, the images do not differ much from one another.) That gives 48 values per kernel for each character: padded with zeros to 80x60, the image splits into an 8x6 grid of blocks. Now let's get down to the code. We need to fill the kernel with numbers according to Haar-like features. We use the following features.

Let's create a ConvolutionCore class in which the convolution kernel is initialized.

ConvolutionCore class

```java
package com.kuldiegor.recognize;

/**
 * Created by aeterneus on 17.03.2017.
 */
public class ConvolutionCore {
    public int unitMin; // number of -1 cells
    public int unitMax; // number of +1 cells
    public int matrix[][];

    public ConvolutionCore(int width, int height, int haar) {
        matrix = new int[height][width];
        unitMax = 0;
        unitMin = 0;
        switch (haar) {
            case 0: {
                /*
                 -1 -1 -1  1  1  1
                 -1 -1 -1  1  1  1
                 -1 -1 -1  1  1  1
                 */
                for (int y = 0; y < height; y++) {
                    for (int x = 0; x < (width / 2); x++) {
                        matrix[y][x] = -1;
                        unitMin++;
                    }
                    for (int x = width / 2; x < width; x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                }
                break;
            }
            case 1: {
                /*
                  1  1  1  1  1  1
                  1  1  1  1  1  1
                  1  1  1  1  1  1
                 -1 -1 -1 -1 -1 -1
                 -1 -1 -1 -1 -1 -1
                 -1 -1 -1 -1 -1 -1
                 */
                for (int y = 0; y < (height / 2); y++) {
                    for (int x = 0; x < width; x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                }
                for (int y = (height / 2); y < height; y++) {
                    for (int x = 0; x < width; x++) {
                        matrix[y][x] = -1;
                        unitMin++;
                    }
                }
                break;
            }
            case 2: {
                /*
                  1  1 -1 -1 -1  1  1
                  1  1 -1 -1 -1  1  1
                  1  1 -1 -1 -1  1  1
                 */
                for (int y = 0; y < height; y++) {
                    for (int x = 0; x < (width / 3); x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                    for (int x = (width / 3); x < (width * 2 / 3); x++) {
                        matrix[y][x] = -1;
                        unitMin++;
                    }
                    for (int x = (width * 2 / 3); x < width; x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                }
                break;
            }
            case 3: {
                /*
                  1  1  1  1  1  1
                  1  1  1  1  1  1
                 -1 -1 -1 -1 -1 -1
                 -1 -1 -1 -1 -1 -1
                  1  1  1  1  1  1
                  1  1  1  1  1  1
                 */
                for (int y = 0; y < (height / 3); y++) {
                    for (int x = 0; x < width; x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                }
                for (int y = (height / 3); y < (height * 2 / 3); y++) {
                    for (int x = 0; x < width; x++) {
                        matrix[y][x] = -1;
                        unitMin++;
                    }
                }
                for (int y = (height * 2 / 3); y < height; y++) {
                    for (int x = 0; x < width; x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                }
                break;
            }
            case 4: {
                /*
                  1  1  1  1  1
                  1  1  1  1  1
                  1  1  1  1  1
                  1 -1 -1 -1  1
                  1 -1 -1 -1  1
                  1 -1 -1 -1  1
                  1  1  1  1  1
                  1  1  1  1  1
                  1  1  1  1  1
                 */
                for (int y = 0; y < (height / 3); y++) {
                    for (int x = 0; x < width; x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                }
                for (int y = (height / 3); y < (height * 2 / 3); y++) {
                    for (int x = 0; x < (width / 3); x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                    for (int x = (width / 3); x < (width * 2 / 3); x++) {
                        matrix[y][x] = -1;
                        unitMin++;
                    }
                    for (int x = (width * 2 / 3); x < width; x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                }
                for (int y = (height * 2 / 3); y < height; y++) {
                    for (int x = 0; x < width; x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                }
                break;
            }
            case 5: {
                /*
                  1  1  1 -1 -1 -1
                  1  1  1 -1 -1 -1
                 -1 -1 -1  1  1  1
                 -1 -1 -1  1  1  1
                 */
                for (int y = 0; y < (height / 2); y++) {
                    for (int x = 0; x < (width / 2); x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                    for (int x = width / 2; x < width; x++) {
                        matrix[y][x] = -1;
                        unitMin++;
                    }
                }
                for (int y = (height / 2); y < height; y++) {
                    for (int x = 0; x < (width / 2); x++) {
                        matrix[y][x] = -1;
                        unitMin++;
                    }
                    for (int x = width / 2; x < width; x++) {
                        matrix[y][x] = 1;
                        unitMax++;
                    }
                }
                break;
            }
        }
    }
}
```

The kernel is filled and initialized dynamically, whatever its size. Next we create a Convolution class that performs the convolution.

Convolution class

```java
package com.kuldiegor.recognize;

import java.awt.image.BufferedImage;
import java.util.ArrayList;

/**
 * Created by aeterneus on 17.03.2017.
 */
public class Convolution {
    static ConvolutionCore convolutionCores[]; // the six Haar kernels, shared by all instances

    static {
        // The kernels never change, so build them once
        convolutionCores = new ConvolutionCore[6];
        for (int i = 0; i < 6; i++) {
            convolutionCores[i] = new ConvolutionCore(10, 10, i);
        }
    }

    private int matrixx[][]; // grayscale values 0 .. 255

    public Convolution(BufferedImage image) {
        matrixx = getReadyMatrix(image);
    }

    private int[][] getReadyMatrix(BufferedImage bufferedImage) {
        // Copy the pixels into a 2D array once (expects a single-band grayscale image)
        int width = bufferedImage.getWidth();
        int heigth = bufferedImage.getHeight();
        int[] lineData = new int[width * heigth];
        bufferedImage.getRaster().getPixels(0, 0, width, heigth, lineData);
        int[][] res = new int[heigth][width];
        int shift = 0;
        for (int row = 0; row < heigth; ++row) {
            System.arraycopy(lineData, shift, res[row], 0, width);
            shift += width;
        }
        return res;
    }

    private double[] ColapseMatrix(int[][] matrix, ConvolutionCore convolutionCore) {
        int cmh = convolutionCore.matrix.length;    // kernel height
        int cmw = convolutionCore.matrix[0].length; // kernel width
        int mh = matrix.length;                     // image height
        int mw = matrix[0].length;                  // image width
        // Zero padding so that the image divides evenly into kernel-sized blocks;
        // the final % avoids adding a whole empty block when the size already fits
        int addWidth = (cmw - (mw % cmw)) % cmw;
        int addHeight = (cmh - (mh % cmh)) % cmh;
        int nmatrix[][] = new int[mh + addHeight][mw + addWidth];
        for (int row = 0; row < mh; row++) {
            System.arraycopy(matrix[row], 0, nmatrix[row], 0, mw);
        }
        int nw = nmatrix[0].length / cmw;
        int nh = nmatrix.length / cmh;
        double result[] = new double[nh * nw];
        int dmin = -convolutionCore.unitMin * 255;     // lowest possible sum
        int dm = convolutionCore.unitMax * 255 - dmin; // span between lowest and highest sum
        int q = 0;
        for (int ny = 0; ny < nh; ny++) {
            for (int nx = 0; nx < nw; nx++) {
                int sum = 0;
                for (int y = 0; y < cmh; y++) {
                    for (int x = 0; x < cmw; x++) {
                        sum += nmatrix[ny * cmh + y][nx * cmw + x] * convolutionCore.matrix[y][x];
                    }
                }
                result[q++] = ((double) sum - dmin) / dm; // normalized to 0 .. 1
            }
        }
        return result;
    }

    public ArrayList<double[]> getConvolutionMatrix() {
        // One array of values per kernel
        ArrayList<double[]> result = new ArrayList<>();
        for (int i = 0; i < convolutionCores.length; i++) {
            result.add(ColapseMatrix(matrixx, convolutionCores[i]));
        }
        return result;
    }
}
```

To explain: the static block loads the convolution kernels. They are constant, and we will compute many convolutions, so we initialize the kernels once and never again. The constructor extracts a matrix of grayscale values from the image; this speeds up the algorithm, because we avoid reading the image one pixel at a time. The ColapseMatrix method receives the image matrix and a convolution kernel. First, zeros are appended to the matrix in case the kernel size does not divide the matrix size evenly. Then we slide the kernel over the matrix and compute the convolution, saving everything in an array. We also need to store the convolutions of the characters, so we create a Hero class with the following fields:

- Array of convolutions;

- Character's name;
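As an aside on the zero-padding step in ColapseMatrix described above, the block count can be checked with a few lines of arithmetic. This is a sketch, not the author's code: the class and method names are hypothetical, and it assumes the intent is to pad only when the size is not already a multiple of the kernel size.

```java
class PaddingSketch {
    /** Number of kernel positions along one axis when the stride equals the kernel size. */
    static int steps(int imageSize, int kernelSize) {
        // Round up: a partially covered last block is completed with zero padding.
        return (imageSize + kernelSize - 1) / kernelSize;
    }

    public static void main(String[] args) {
        int across = steps(78, 10); // 78 px wide, padded to 80: 8 blocks
        int down = steps(53, 10);   // 53 px high, padded to 60: 6 blocks
        int perKernel = across * down;
        System.out.println(across + " x " + down + " = " + perKernel); // 8 x 6 = 48
        // Six kernels are built in the static block of Convolution.
        System.out.println(6 * perKernel + " values per character");   // 288 values per character
    }
}
```

So each character is described by six arrays of 48 numbers, which is why the value 48 appears as a constant when convolutions are loaded from a file.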

Hero class

```java
package com.kuldiegor.recognize;

import java.util.ArrayList;

/**
 * Created by aeterneus on 17.03.2017.
 */
public class Hero {
    public String name;
    public ArrayList<double[]> convolutions; // one array of values per kernel

    public Hero(String Name) {
        name = Name;
    }
}
```

We also need to load the sample convolutions to compare characters against, so let's create the DefaultHero class.

Class DefaultHero

```java
package com.kuldiegor.recognize;

import javax.imageio.ImageIO;
import java.awt.image.BufferedImage;
import java.io.*;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Comparator;

/**
 * Created by aeterneus on 17.03.2017.
 */
public class DefaultHero {
    public ArrayList<Hero> heroes;
    public String path;

    public DefaultHero(String path, int tload) {
        heroes = new ArrayList<>();
        this.path = path;
        switch (tload) {
            case 0: {
                LoadFromFolder(path);
                break;
            }
            case 1: {
                LoadFromFile(path);
                break;
            }
        }
    }

    private void LoadFromFolder(String path) {
        // Compute the convolutions from the images in a directory
        File folder = new File(path);
        File[] folderEntries = folder.listFiles();
        if (folderEntries == null) {
            return; // not a directory, or it could not be read
        }
        for (File entry : folderEntries) {
            if (!entry.isDirectory()) {
                BufferedImage image = null;
                try {
                    image = ImageIO.read(entry);
                } catch (IOException e) {
                    e.printStackTrace();
                }
                if (image == null) {
                    continue; // unreadable file or not an image
                }
                // StringTool is the author's helper class; it is not shown in the article
                Hero hero = new Hero(StringTool.parse(entry.getName(), "", ".png"));
                hero.convolutions = new Convolution(image).getConvolutionMatrix();
                heroes.add(hero);
            }
        }
    }

    private void LoadFromFile(String name) {
        // Each line has the form name:v1;v2;...; with 48 values per kernel
        try (BufferedReader bufferedReader = new BufferedReader(new FileReader(name))) {
            String str;
            while ((str = bufferedReader.readLine()) != null) {
                Hero hero = new Hero(StringTool.parse(str, "", ":"));
                String s = StringTool.parse(str, ":", "");
                String mas[] = s.split(";");
                int n = mas.length / 48;
                hero.convolutions = new ArrayList<>(n);
                for (int c = 0; c < n; c++) {
                    double dmas[] = new double[48];
                    for (int i = 0; i < 48; i++) {
                        dmas[i] = Double.parseDouble(mas[i + c * 48]);
                    }
                    hero.convolutions.add(dmas);
                }
                heroes.add(hero);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public void SaveToFile(String name) {
        // Write one line per character, sorted by name
        Collections.sort(heroes, Comparator.comparing(o -> o.name));
        try (FileWriter fileWriter = new FileWriter(name)) {
            for (int i = 0; i < heroes.size(); i++) {
                Hero hero = heroes.get(i);
                StringBuilder stringBuilder = new StringBuilder();
                stringBuilder.append(hero.name).append(":");
                for (int i2 = 0; i2 < hero.convolutions.size(); i2++) {
                    double matrix[] = hero.convolutions.get(i2);
                    for (int i3 = 0; i3 < matrix.length; i3++) {
                        stringBuilder.append(matrix[i3]).append(";");
                    }
                }
                fileWriter.write(stringBuilder.append("\r\n").toString());
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public String getSearhHeroName(Hero hero) {
        // Return the name of the first stored character that matches, or "0" if none does
        for (int i = 0; i < heroes.size(); i++) {
            if (equalsHero(hero, heroes.get(i))) {
                return heroes.get(i).name;
            }
        }
        return "0";
    }

    public boolean equalsHero(Hero hero1, Hero hero2) {
        // Compare two characters kernel by kernel
        int min = 0; // kernels that agree
        int max = 0; // kernels that disagree
        for (int i = 0; i < hero1.convolutions.size(); i++) {
            double average = 0;
            for (int i1 = 0; i1 < hero1.convolutions.get(i).length; i1++) {
                // Absolute difference between the two convolution values
                average += Math.abs(hero1.convolutions.get(i)[i1] - hero2.convolutions.get(i)[i1]);
            }
            average /= hero1.convolutions.get(0).length;
            if (average < 0.02) {
                min++;
            } else {
                max++;
            }
        }
        return (min >= max);
    }
}
```

It's all very simple: we run through all the convolutions and save them to a file. Loading from a file works the same way in reverse. Loading from a directory differs in that the convolutions are computed from the images, which we will need when building up the database of character images.
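To make the file format concrete, here is a minimal round-trip sketch of one line. It is an illustration under assumptions: the class and method names are invented, plain `String` methods stand in for the author's `StringTool` helper (which the article does not show), and the sample values are arbitrary.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class ConvolutionLineSketch {
    static final int VALUES_PER_KERNEL = 48; // 8 x 6 blocks, as in the article

    /** Builds "name:v1;v2;...;" with the values of all kernels written one after another. */
    static String format(String name, List<double[]> convolutions) {
        StringBuilder sb = new StringBuilder(name).append(':');
        for (double[] kernelValues : convolutions) {
            for (double v : kernelValues) {
                sb.append(v).append(';');
            }
        }
        return sb.toString();
    }

    /** Everything before the first colon is the character name. */
    static String parseName(String line) {
        return line.substring(0, line.indexOf(':'));
    }

    /** Cuts the values after the colon back into arrays of 48, one per kernel. */
    static List<double[]> parseValues(String line) {
        String[] parts = line.substring(line.indexOf(':') + 1).split(";");
        List<double[]> result = new ArrayList<>();
        for (int start = 0; start + VALUES_PER_KERNEL <= parts.length; start += VALUES_PER_KERNEL) {
            double[] values = new double[VALUES_PER_KERNEL];
            for (int i = 0; i < VALUES_PER_KERNEL; i++) {
                values[i] = Double.parseDouble(parts[start + i]);
            }
            result.add(values);
        }
        return result;
    }

    public static void main(String[] args) {
        double[] first = new double[VALUES_PER_KERNEL];
        double[] second = new double[VALUES_PER_KERNEL];
        Arrays.fill(first, 0.25);  // arbitrary sample values
        Arrays.fill(second, 0.75);
        String line = format("Shadow Fiend", Arrays.asList(first, second));
        List<double[]> back = parseValues(line);
        System.out.println(parseName(line) + " -> " + back.size() + " kernels"); // Shadow Fiend -> 2 kernels
    }
}
```

Because the number of values per kernel is fixed, the file needs no separator between kernels: the reader simply cuts the flat list into chunks of 48.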

Now the comparison itself. For each kernel we compute the arithmetic mean of the absolute differences between the two convolutions; if it is below 0.02, we consider the images similar for that kernel. Roughly speaking: “if the images are 98% alike, we treat them as the same”. Then, if at least half of the kernels give a positive result, we declare the characters equal.
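The voting rule can be tried on toy data. The sketch below restates the rule from the paragraph above in a self-contained form; the class name, method name and sample numbers are made up for illustration, and only two short "kernels" are used to keep it readable.

```java
import java.util.Arrays;
import java.util.List;

class VoteSketch {
    static final double THRESHOLD = 0.02; // threshold quoted in the article

    /** True when at least half of the kernels have a mean absolute difference below the threshold. */
    static boolean sameCharacter(List<double[]> a, List<double[]> b) {
        int similar = 0;
        for (int k = 0; k < a.size(); k++) {
            double mean = 0;
            for (int i = 0; i < a.get(k).length; i++) {
                mean += Math.abs(a.get(k)[i] - b.get(k)[i]);
            }
            mean /= a.get(k).length;
            if (mean < THRESHOLD) {
                similar++;
            }
        }
        return similar * 2 >= a.size();
    }

    public static void main(String[] args) {
        List<double[]> stored = Arrays.asList(new double[]{0.50, 0.50}, new double[]{0.10, 0.10});
        List<double[]> near = Arrays.asList(new double[]{0.51, 0.50}, new double[]{0.10, 0.11});
        List<double[]> half = Arrays.asList(new double[]{0.50, 0.50}, new double[]{0.60, 0.60});
        List<double[]> far = Arrays.asList(new double[]{0.90, 0.90}, new double[]{0.60, 0.60});
        System.out.println(sameCharacter(stored, near)); // true: both kernels agree
        System.out.println(sameCharacter(stored, half)); // true: one of two kernels agrees, a tie counts
        System.out.println(sameCharacter(stored, far));  // false: no kernel agrees
    }
}
```

Note that a tie counts as a match, which mirrors the `min >= max` check in `equalsHero`.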

Now let's take a screenshot.

Screenshot code

```java
// 'image' is a BufferedImage declared elsewhere
try {
    // Capture the whole screen
    image = new Robot().createScreenCapture(new Rectangle(Toolkit.getDefaultToolkit().getScreenSize()));
} catch (AWTException e) {
    e.printStackTrace();
}
```

So that we don't have to search for the character portraits every time, we fix the boundaries of the ten portraits in advance. Then we copy the ten portraits out of the screenshot and convert them to grayscale. Finally, we compute the convolutions of each portrait and compare them with the stored characters.

Class HRecognize

```java
package com.kuldiegor.recognize;

import java.awt.image.BufferedImage;
import java.util.ArrayList;

/**
 * Created by aeterneus on 17.03.2017.
 */
public class HRecognize {
    private DefaultHero defaultHero;
    public String heroes[]; // names of the recognized characters

    public HRecognize(BufferedImage screen, DefaultHero defaultHero) {
        heroes = new String[10];
        ArrayList<Hero> heroArrayList = new ArrayList<>();
        this.defaultHero = defaultHero;
        for (int i = 0; i < 5; i++) {
            // Five portraits on the left: crop each one and convert it to grayscale
            BufferedImage bufferedImage = new BufferedImage(78, 53, BufferedImage.TYPE_BYTE_GRAY);
            bufferedImage.getGraphics().drawImage(screen.getSubimage(43 + i * 96, 6, 78, 53), 0, 0, null);
            Hero hero = new Hero("");
            // Compute the convolutions of the portrait
            hero.convolutions = new Convolution(bufferedImage).getConvolutionMatrix();
            heroArrayList.add(hero);
        }
        for (int i = 0; i < 5; i++) {
            // Five portraits on the right: same steps
            BufferedImage bufferedImage = new BufferedImage(78, 53, BufferedImage.TYPE_BYTE_GRAY);
            bufferedImage.getGraphics().drawImage(screen.getSubimage(777 + i * 96, 6, 78, 53), 0, 0, null);
            Hero hero = new Hero("");
            hero.convolutions = new Convolution(bufferedImage).getConvolutionMatrix();
            heroArrayList.add(hero);
        }
        for (int i = 0; i < 10; i++) {
            // Look up each portrait among the stored characters
            heroes[i] = defaultHero.getSearhHeroName(heroArrayList.get(i));
        }
    }
}
```

Now we need to build up a database of images. I tried taking the images from dotabuff, but some of them were completely different from the in-game ones. So I decided to collect them in semi-automatic mode. I slightly rewrote the code of the convolution wizard and added a “Make Screenshot” button. On each press, the convolutions are compared against the samples loaded from the directory, and portraits that have no matching sample are saved to the directory.

Convolution wizard on GitHub

Go! We launch Dota in a lobby and select all 113 characters one by one.

Screenshot Lobby

The database is collected. Now each character needs to be given a name.

Screenshot of the directory with characters

Then we save all the convolutions to a file. Now we can try testing the application.

Recognition Screenshot

There are practically no recognition errors. There is only one: when the game is starting and the background is black, the application reacts to it and reports Shadow Fiend; once character selection begins, there are no errors. What remains is to send the recognized characters over the network to an Android application, so that the game doesn't have to be minimized every time.

It's simple.

Broadcast Acceptance Code

```java
try {
    DatagramSocket socket = new DatagramSocket(6001);
    byte buffer[] = new byte[1024];
    DatagramPacket packet = new DatagramPacket(buffer, 1024);
    InetAddress localIP = InetAddress.getLocalHost();
    while (!Thread.currentThread().isInterrupted()) {
        // Wait for a broadcast request
        packet.setLength(buffer.length); // receive() shrinks the length to that of the last message
        socket.receive(packet);
        String s = new String(packet.getData(), 0, packet.getLength());
        // StringTool is the author's helper class; it is not shown in the article
        if (StringTool.parse(s, "", ":").equals("BroadCastFastDefinition")) {
            String str = "OK:" + localIP.getHostAddress();
            byte buf[] = str.getBytes();
            DatagramPacket p = new DatagramPacket(buf, buf.length, packet.getAddress(), 6001);
            // Reply to the sender
            socket.send(p);
        }
    }
} catch (Exception e) {
    e.printStackTrace();
}
```

Data sending code

```java
try {
    // Wait for the client to connect ('socket' here is a ServerSocket created elsewhere)
    Socket client = socket.accept();
    final String ipclient = client.getInetAddress().getHostAddress();
    Platform.runLater(new Runnable() {
        @Override
        public void run() {
            label1.setText("");
            label1.setTextFill(Color.GREEN);
            textfield2.setText(ipclient);
        }
    });
    DataOutputStream streamWriter = new DataOutputStream(client.getOutputStream());
    BufferedImage image = null;
    while (client.isConnected()) {
        try {
            // Capture the whole screen
            image = new Robot().createScreenCapture(new Rectangle(Toolkit.getDefaultToolkit().getScreenSize()));
        } catch (AWTException e) {
            e.printStackTrace();
        }
        // Recognize the ten portraits
        HRecognize hRecognize = new HRecognize(image, defaultHero);
        StringBuilder stringBuilder = new StringBuilder();
        for (int i = 0; i < 5; i++) {
            stringBuilder.append(hRecognize.heroes[i]).append(";");
        }
        stringBuilder.append(":");
        for (int i = 5; i < 10; i++) {
            stringBuilder.append(hRecognize.heroes[i]).append(";");
        }
        stringBuilder.append("\n");
        String str = stringBuilder.toString();
        // Send the result
        streamWriter.writeUTF(str);
        try {
            Thread.sleep(300);
        } catch (InterruptedException e) {
            threadSocket.interrupt();
        }
    }
} catch (IOException e) {
    // Thrown by writeUTF once the client has disconnected; this is what ends the loop
}
```

We accept the broadcast request and answer it, additionally including our IP address in the reply; that isn't strictly necessary, but let it be. The client then opens a TCP connection to us, and we start sending data every 300 milliseconds. As soon as the connection drops, we stop recognizing characters. I did this to reduce CPU load: once the game has started, I simply drop the connection on the client and the processor is no longer loaded.
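On the receiving side, each message built by the loop above has the shape `a;b;c;d;e;:f;g;h;i;j;` followed by a newline, with `0` standing for an unrecognized portrait. A minimal parsing sketch, with a hypothetical class and method name and made-up sample names, could look like this:

```java
import java.util.Arrays;

class PayloadSketch {
    /** Splits "a;b;c;d;e;:f;g;h;i;j;\n" into the five left and the five right names. */
    static String[][] parseTeams(String message) {
        String[] halves = message.trim().split(":", 2);
        // String.split drops the empty string after the trailing ';'
        return new String[][] {halves[0].split(";"), halves[1].split(";")};
    }

    public static void main(String[] args) {
        // Sample payload; "0" marks a portrait that matched no stored character
        String message = "Axe;Lina;0;Pudge;Zeus;:0;0;Shadow Fiend;0;0;\n";
        String[][] teams = parseTeams(message);
        System.out.println(Arrays.toString(teams[0])); // [Axe, Lina, 0, Pudge, Zeus]
        System.out.println(Arrays.toString(teams[1])); // [0, 0, Shadow Fiend, 0, 0]
    }
}
```

Since the server writes with `DataOutputStream.writeUTF`, a Java client would read each message with the matching `DataInputStream.readUTF` rather than line by line.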

The Android application. I won't show its code here, since the application is very simple; I'll just briefly describe how it works and give a link to GitHub.

The application sends a broadcast request over the network (a specific server IP address can also be entered) to locate the server part of the application. As soon as a reply arrives from one of the servers, we connect to it and start receiving data. To determine counter picks, I chose the site dotabuff.com. For each character I go through its page, pull out the "Strong against" and "Weak against" data, and build a linked list. Then I take the characters sent by the server and display a list of all characters that are weaker than the chosen five. Along the way I check that the list contains no characters that counter one of the five; in other words, the characters in the list are strictly weak against the five selected characters.
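One way to read the filtering step is as plain set arithmetic: collect everyone the five are strong against, then remove everyone any of the five is weak against. The sketch below follows that reading; it is not the Android application's code, the names `strongAgainst` and `weakAgainst` are hypothetical, and the placeholder names `H1`, `X`, and so on stand in for real match-up data.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class CounterPickSketch {
    /** Characters that some of the five beat, minus characters that counter any of the five. */
    static Set<String> suggestions(List<String> five,
                                   Map<String, Set<String>> strongAgainst,
                                   Map<String, Set<String>> weakAgainst) {
        Set<String> result = new TreeSet<>();
        for (String hero : five) {
            result.addAll(strongAgainst.getOrDefault(hero, Collections.emptySet()));
        }
        for (String hero : five) {
            result.removeAll(weakAgainst.getOrDefault(hero, Collections.emptySet()));
        }
        return result;
    }

    public static void main(String[] args) {
        // Placeholder data, not real statistics
        Map<String, Set<String>> strongAgainst = new HashMap<>();
        strongAgainst.put("H1", new HashSet<>(Arrays.asList("X", "Y")));
        strongAgainst.put("H2", new HashSet<>(Arrays.asList("Y", "Z")));
        Map<String, Set<String>> weakAgainst = new HashMap<>();
        weakAgainst.put("H1", new HashSet<>(Arrays.asList("Z")));

        // Z is dropped because it counters H1, even though H2 is strong against it
        System.out.println(suggestions(Arrays.asList("H1", "H2"), strongAgainst, weakAgainst)); // [X, Y]
    }
}
```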

Unresolved Tasks

It has only been tested at a resolution of 1280x1024; I have not tried it at other resolutions.

Conclusion

Recognition is very fast and barely loads the processor on my Lenovo B570e laptop with a 1.5 GHz Intel Celeron: about 6% load with a recognition cycle three times per second. If the topic is of interest, I will describe how I recognized the numbers on the health bar, what difficulties I ran into, and how I solved them.

Thanks to everyone who read to the end.

P.S. All the links: the GitHub repositories with the convolution wizard and the recognition program itself, and the archive of images with the convolutions:

→ GitHub link to the convolution wizard

→ GitHub link to the application itself

→ GitHub link for Android app

→ Link to archive with images

→ Link to convolution file

Source: https://habr.com/ru/post/325526/
