Teaching TensorFlow to draw Cyrillic

Hi Habr! In recent years, new approaches to training neural networks have significantly expanded the practical reach of machine learning. And the appearance of many good high-level libraries has let specialists with very different levels of background try their hand at it.

Having some experience in machine learning, I have so far not dealt specifically with neural networks. In the wake of their rapid popularity, it was decided to fill this gap and at the same time try to write an article about it.

I set myself two goals. The first is to come up with a task that is complex enough to deal with problems arising in real life when solving it. And second, to solve this problem with the use of one of the modern libraries, having understood the peculiarities of working with them.

')

TensorFlow was chosen as a library. And for the task and its solution, I ask under the cat ...

Trying to come up with a task, I was guided by the following considerations:

Wandering through the internet and studying the material, I, among other things, came across the following articles:

These articles, as well as the requirement for learning speed, prompted me to the idea of the problem.

For most fonts available on the web, only Latin versions exist. And what if using only images of Latin letters to restore its Cyrillic version while maintaining the original style?

Immediately make a reservation, I did not set out to make the final product ready for use in publishing. My goal was to learn how to work with neural networks. Therefore, I limited myself to working with raster images of 64 × 64 pixels.

Among the existing zoo of packages and libraries, my choice fell on TensorFlow for the following reasons:

All fonts have individual features. An ordinary user will soon remember about italics or thickness, and a specialist in typographer will not forget about antiques, grotesques and other beautiful words.

Such individual characteristics are difficult to formalize. When learning neural networks, a typical approach to solving this problem is to use an embedding layer. In short, an attempt is made to match a one-dimensional number vector to the object being modeled. The semantics of the individual components of the vector will remain unknown, however, oddly enough, the whole vector allows to model the object in the context of the task. The most famous example of this approach is the Word2Vec model.

I decided to match each font vector and each letter is a vector . Picture specific letters for this font is obtained using a neural network The input to which is fed two vectors corresponding to the font and letter.

As a network a deconvolution neural network acts. The image above is taken from the openai.com blog for a demonstration. More detail about the deployment operation used and the number of layers in the network will be discussed later.

Learning set consists of images of Russian and Latin letters. For each image, the letter and font used is known.

At the training stage, we are looking for network weights and embedding vectors for all letters and fonts from the training set using one of the variations of the stochastic gradient method.

Trained neural network and embedding vectors of Cyrillic and Latin characters are used in the above task of restoring Cyrillic fonts.

Suppose we have entered the Latin font missing in the training set. We can try to restore his embedding vector. using only images of Latin letters . To do this, we solve the following optimization problem:

Further, using the embedding vectors of Cyrillic symbols and embedding vectors obtained at the learning stage , we can easily restore the corresponding images using the network .

As is often the case, a significant proportion of the time was spent preparing the training set. It all started with the fact that I went to well-known sites in narrow circles and downloaded the font collections available there. The unzipped size of all files was ~ 14 GB.

At first it turned out that for different fonts letters of the same size can occupy a different number of pixels. To combat this, a program was written that selects the font size to fit all its characters into an 64 by 64 pixel image.

Further, all the fonts were conditionally divided into three types - classical, exotic and ornamental. On the latter, anything other than Cyrillic and Latin characters can be depicted, for example, emoticons or pictures. On exotic fonts, all the letters are in place, but depicted in a special way. For example, on exotic fonts, colors can be inverted, frames added, a record can be used, and so on.

By sight, ornamental fonts made up a large proportion of the collection, and nothing but noise could be considered. There was a problem of their filtering. Unfortunately, using metadata this did not work. Instead, I began to count the number of monochrome areas in black and white images of individual letters and compare it with the reference value. For example, letter A corresponds to the reference number three, and letter T two. If there are a lot of letters in the font with differences from the reference values, it is considered noise.

As a result, much of the exotic fonts were also screened out. I was not very upset because I did not harbor any special illusions about the generalizing ability of the network being developed. The total size of the collection turned out to be ~ 6000 fonts.

If you've read this far, I think you have an idea about convolution neural networks. If not, there is enough material on this topic in the network. Here I just want to mention two points about convolution networks:

In the context of the article, the first statement suggests an analogy between the resulting characteristics and the embedding layer described above. And the second gives an intuitive rationale to try to gradually restore the local characteristics of the original image from the global ones.

The convolution + ReLu + maxpooling function used in convolutional networks is not reversible. The literature suggests several ways [2] [4] of its “recovery”. I decided to use the simplest one — convolution transpose + ReLu, which is essentially a linear function + the simplest nonlinear activation function. The main thing for me was to preserve the property of locality.

TensorFlow has a function conv2d_transpose , which performs this linear transformation. The main problem I encountered here was to imagine exactly how the calculation was performed in order to rationally find the parameters. Here, an illustration for a one-dimensional case from the article [5] MatConvNet - Convolutional Neural Networks for MATLAB (p. 30) [arXiv] came to my aid:

As a result, I stopped at the parameters stride = [2, 2] and kernel = [4, 4]. This allowed in each deconvolution layer to double the length and width of the image and use groups of 4 neighboring pixels to calculate the neurons of the next layer.

When learning the network, I came across two points that I think would be useful to share.

Firstly, it turned out to be very important to balance the number of Cyrillic and Latin images in the training set. First, the Latin characters in my training sample turned out much more, as a result, the network drew them better, to the detriment of the quality of the recovery of Cyrillic characters.

Secondly, with the training, a gradual decrease in the learning rate of the gradient descent helped. I did this manually, following the error in the TensorBoard, but in TensorFlow there are opportunities to automate this process, for example using the tf.train.exponential_decay function.

I didn’t have to use other useful tricks like regularization, dropout , batch normalization , as without them it turned out to achieve results acceptable for my purposes.

The dimension of embedding layer 64 is both for symbols and for fonts.

4 deconvolution layers of inner layers 8 × 8 × 128 → 16 × 16 × 64 → 32 × 32 × 32 → 64 × 64 × 16, stride = [2, 2], kernel = [4, 4], ReLu activation

1 convolution layer 64 × 64 × 16 → 64 × 64 × 1, stride = [1, 1], kernel = [4, 4], sigmoid activation

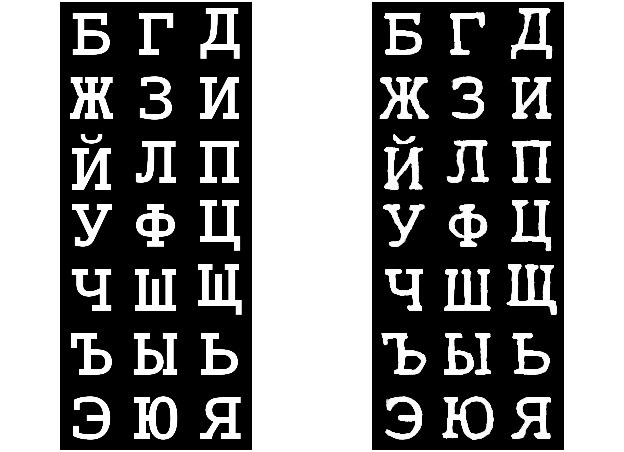

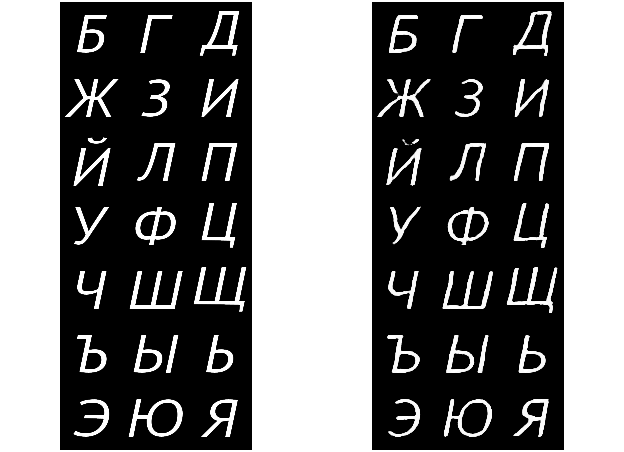

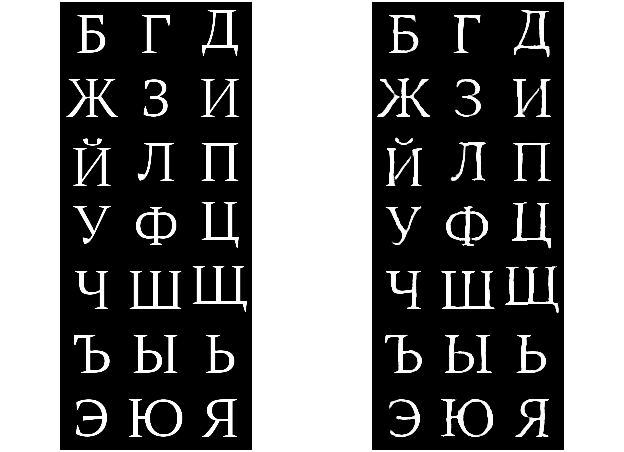

To demonstrate the results, an informational reason was recently found when at once two Russian projects MoiOffice and Astra Linux released free font collections. These fonts were not used in the training set. On the left is the original font, on the right is the output of the neural network. For compactness, only Cyrillic letters are given that have no Latin analogs.

The remaining results lay out a separate archive .

Having received these results, I believe that I have achieved my goals. Although it is worth noting that they are far from ideal (especially noticeable for some Italic fonts) and there are still a lot of things that can be added, improved and tested.

What is the article, to judge you.

[ 1] Gatys, Ecker, Bethge, A neural algorithm of artistic style [arXiv]

[ 2] Dosovitskiy, Springenberg, Tatarchenko, Brox, Learning to Generate Chairs, Tables and Cars with Convolutional Networks [arXiv]

[ 3] Hertel, Barth, Käster, Martinetz, Deep Convolutional Neural Networks as Generic Feature Extractors [PDF]

[ 4] Zeiler, Krishnan, Taylor, Fergus, Deconvolutional Networks [PDF]

[ 5] Vedaldi, Lenc, MatConvNet - Convolutional Neural Networks for MATLAB [arXiv]

I have some experience in machine learning, but until now I had not worked with neural networks specifically. Given how quickly they have grown in popularity, I decided to fill this gap and, at the same time, to try writing an article about it.

I set myself two goals. The first was to come up with a task complex enough to run into the problems that arise in real-world practice. The second was to solve it with one of the modern libraries and, in the process, to understand the specifics of working with it.


I chose TensorFlow as the library. The task and its solution are below the cut.

Task selection

When choosing a task, I was guided by the following considerations:

- It should be more complex and interesting than the standard MNIST classification, since I wanted to run into the specific difficulties of training neural networks;

- I planned to use a feedforward neural network as the architecture, since it is always better to start getting acquainted with simple things;

- Training should run fast enough on an ordinary gaming graphics card, since everything was done in my spare time outside my main job.

While browsing the internet and studying the material, I came across, among others, the following articles:

- [1] A neural algorithm of artistic style [arXiv], which presents an approach to rendering images in the style of famous artists. Work in this direction later led to the popular Prisma app;

- [2] Learning to Generate Chairs, Tables and Cars with Convolutional Networks [arXiv], which proposes a method for generating images (in particular, of chairs) from specified parameters such as color or viewing angle.

These articles, together with the requirement for fast training, gave me the idea for the task.

Task

Most fonts available on the web exist only in Latin versions. What if we could restore a font's Cyrillic version using only images of its Latin letters, while preserving the original style?

I should say up front that I did not set out to build a finished product suitable for publishing. My goal was to learn how to work with neural networks, so I limited myself to raster images of 64 × 64 pixels.

Library selection

From the existing zoo of packages and libraries, I chose TensorFlow for the following reasons:

- The library is fairly low-level compared with, for example, Keras. On the one hand, this meant writing a certain amount of boilerplate code; on the other, it made it possible to dig into the details and try non-standard architectures;

- TensorFlow supports training on multiple machines and multiple GPUs, so skills acquired with the library can be useful for real practical problems;

- The TensorBoard tool makes it easy to monitor training as it happens. Among other things, this let me catch bugs in the code early in training and avoid wasting time on obviously bad architectures and parameter sets.

Network architecture

All fonts have individual features. An ordinary user will quickly think of italics or weight, and a typography specialist will not forget antiquas, grotesques, and other beautiful words.

Such individual characteristics are hard to formalize. When training neural networks, a typical way to deal with this is an embedding layer. In short, each modeled object is mapped to a one-dimensional vector of numbers. The meaning of the individual components of the vector remains unknown, but, surprisingly, the vector as a whole is enough to model the object in the context of the task. The best-known example of this approach is the Word2Vec model.
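A minimal NumPy sketch of what an embedding layer computes, assuming nothing beyond the description above: each object id selects one row of a trainable matrix, which is equivalent to multiplying a one-hot vector by that matrix. The sizes and the name `E` are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
num_items, dim = 5, 3                  # illustrative sizes
E = rng.normal(size=(num_items, dim))  # embedding table; in a real model it is trained with the network

item = 2
looked_up = E[item]                    # what an embedding layer does: a row lookup
one_hot = np.eye(num_items)[item]      # the equivalent dense formulation
via_matmul = one_hot @ E

print(np.allclose(looked_up, via_matmul))  # True
```

Because the lookup is just a linear map of a one-hot input, gradients flow into the selected row during training, which is how the vectors are learned.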

I decided to assign a vector $f$ to each font and a vector $s$ to each letter. The image of a particular letter in a given font is produced by a neural network $N$ whose input is the two vectors corresponding to the font and the letter: $I = N(f, s)$.

It is important to note that the same letter vector is used to build images of that letter in every font style, and likewise the same font vector is used for every letter of that font.

A deconvolutional neural network serves as the network. The illustration above is taken from the openai.com blog. The deconvolution operation used and the number of layers in the network are described in more detail below.
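The input construction can be sketched in NumPy as follows. The table sizes are assumptions: about 6,000 fonts matches the collection size mentioned later, 64 matches the embedding dimension from the network summary, and the letter count of 118 (upper- and lower-case Russian plus Latin) is hypothetical. The random tables stand in for trained ones.

```python
import numpy as np

EMBED_DIM = 64        # embedding size for fonts and letters
NUM_FONTS = 6000      # approximate collection size
NUM_LETTERS = 118     # hypothetical: 66 Russian + 52 Latin letters, both cases

rng = np.random.default_rng(0)
font_table = rng.normal(size=(NUM_FONTS, EMBED_DIM))      # one row per font
letter_table = rng.normal(size=(NUM_LETTERS, EMBED_DIM))  # one row per letter

def network_input(font_id, letter_id):
    """Concatenate the font vector and the letter vector into one network input."""
    return np.concatenate([font_table[font_id], letter_table[letter_id]])

x1 = network_input(3, 7)   # letter 7 in font 3
x2 = network_input(10, 7)  # the same letter in another font
```

The second half of `x1` and `x2` is identical: the same letter row is shared across all fonts, and each font row is shared across all letters of that font.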

Model training

The training set consists of images of Russian and Latin letters. For each image, the letter and the font are known.

At the training stage, we search for the network weights and the embedding vectors of all letters and fonts in the training set using one of the variants of stochastic gradient descent.

As the loss function, the total cross-entropy between predicted and actual pixel values is used (pixel values are scaled to the range from zero to one).
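A NumPy sketch of the summed pixel-wise cross-entropy, assuming predictions and targets are both arrays of values in [0, 1]. In TensorFlow one would more likely apply `tf.nn.sigmoid_cross_entropy_with_logits` to the pre-sigmoid outputs and sum the result; the function below is only an illustration of the formula.

```python
import numpy as np

def total_cross_entropy(pred, target, eps=1e-7):
    """Summed binary cross-entropy over all pixels; both arrays hold values in [0, 1]."""
    pred = np.clip(pred, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.sum(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred)))

# A 2 x 2 "image" predicted as uniform gray costs 4 * ln 2.
pred = np.full((2, 2), 0.5)
target = np.array([[0.0, 1.0], [1.0, 0.0]])
loss = total_cross_entropy(pred, target)
```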

Image Recovery

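The trained network and the embedding vectors of Cyrillic and Latin letters are then used for the task stated above: restoring Cyrillic fonts.

Suppose we are given a Latin font that is not in the training set. We can try to restore its embedding vector $\hat f$ using only images of its Latin letters, keeping the trained network and the letter vectors fixed. To do this, we solve the following optimization problem:

$$\hat f = \arg\min_{f} \sum_{s \in S_{\text{lat}}} L\big(N(f, s),\, I_s\big),$$

where $N(f, s)$ is the network output for font vector $f$ and letter vector $s$, $S_{\text{lat}}$ is the set of Latin letter vectors, $I_s$ is the image of letter $s$ in the new font, and $L$ is the cross-entropy loss used in training.

This problem can be solved by ordinary gradient descent (or any other method), since the set of Latin letters contains only two or three dozen elements.

Then, feeding the network the recovered font vector together with the Cyrillic letter vectors obtained during training, we can easily generate the corresponding Cyrillic images.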
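To make the recovery step concrete, here is a toy NumPy sketch. Instead of the real deconvolutional network it assumes a hypothetical one-layer decoder `sigmoid(W_f @ f + W_s @ s + b)`, so the gradient can be written by hand; the sizes and all names are illustrative. Only the font vector is updated, while the decoder weights and letter vectors stay frozen, as described above.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def recover_font_vector(W_f, W_s, b, letter_vecs, targets, lr=0.1, steps=300):
    """Gradient descent on the font vector only; the toy decoder and letter vectors are frozen."""
    f = np.zeros(W_f.shape[1])
    for _ in range(steps):
        grad = np.zeros_like(f)
        for s, t in zip(letter_vecs, targets):
            pred = sigmoid(W_f @ f + W_s @ s + b)
            grad += W_f.T @ (pred - t)  # gradient of summed cross-entropy w.r.t. f
        f -= lr * grad
    return f

# Hypothetical toy setup: 16-pixel "images", 4-dim embeddings, 5 known Latin letters.
rng = np.random.default_rng(0)
P, D, L = 16, 4, 5
W_f = rng.normal(size=(P, D)) * 0.3
W_s = rng.normal(size=(P, D)) * 0.3
b = np.zeros(P)
letters = [rng.normal(size=D) for _ in range(L)]
f_true = rng.normal(size=D)
targets = [sigmoid(W_f @ f_true + W_s @ s + b) for s in letters]

f_hat = recover_font_vector(W_f, W_s, b, letters, targets)
# A Cyrillic image would then be sigmoid(W_f @ f_hat + W_s @ s_cyr + b) for a Cyrillic letter vector s_cyr.
```

With a real network the gradient would come from automatic differentiation rather than the hand-written formula, but the structure of the loop is the same.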

Training sample preparation

As is often the case, a significant share of the time went into preparing the training set. It started with me visiting sites well known in narrow circles and downloading the font collections available there. The total unpacked size of all files was about 14 GB.

First, it turned out that at the same point size, letters of different fonts can occupy different numbers of pixels. To deal with this, I wrote a program that picks, for each font, a size at which all of its characters fit into a 64 × 64 pixel image.

Next, I loosely divided all fonts into three types: classical, exotic, and ornamental. Ornamental fonts can depict anything other than Cyrillic and Latin characters, for example emoticons or pictures. In exotic fonts all the letters are in place, but they are drawn in a special way: colors may be inverted, frames added, and so on.

Judging by eye, ornamental fonts made up a large share of the collection, and they could be considered nothing but noise. That raised the problem of filtering them out. Unfortunately, metadata was no help here. Instead, I counted the number of connected single-color regions in black-and-white images of individual letters and compared it with a reference value. For example, the reference value for the letter A is three (the background, the stroke, and the enclosed hole), and for the letter T it is two. If many letters in a font differ from their reference values, the font is considered noise.

As a result, many of the exotic fonts were filtered out as well. I was not too upset, since I had no particular illusions about the generalization ability of the network I was building. The final collection contained about 6,000 fonts.
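The region-counting filter can be sketched with a breadth-first flood fill over a binary image. The choice of 4-connectivity, the tiny hand-drawn glyphs, and the `max_mismatches` threshold are assumptions for illustration; the article does not specify them.

```python
from collections import deque

def count_regions(img):
    """Count 4-connected regions of equal color in a binary image given as rows."""
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    regions = 0
    for y in range(h):
        for x in range(w):
            if seen[y][x]:
                continue
            regions += 1                      # a new region starts here
            color = img[y][x]
            seen[y][x] = True
            queue = deque([(y, x)])
            while queue:                      # flood-fill all same-color neighbors
                cy, cx = queue.popleft()
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and not seen[ny][nx] and img[ny][nx] == color:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return regions

def looks_like_noise(font_images, reference, max_mismatches):
    """Flag a font if too many letters differ from their reference region counts."""
    mismatches = sum(count_regions(img) != reference[ch] for ch, img in font_images.items())
    return mismatches > max_mismatches

A = ["01110", "01010", "01110", "01010", "01010", "00000"]  # background, stroke, hole
T = ["11111", "00100", "00100", "00100", "00000"]            # background, stroke
reference = {"A": 3, "T": 2}
print(count_regions(A), count_regions(T))  # 3 2
```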

About convolutional neural networks

If you have read this far, you probably have some idea of what convolutional neural networks are. If not, there is plenty of material on the topic online. Here I just want to mention two properties of convolutional networks:

- Their last layers can output a set of features of the original image, and combinations of these features can be used to analyze it ([3] Deep Convolutional Neural Networks as Generic Feature Extractors [PDF]);

- These global features are obtained by successively computing local features in the inner layers of the network.

In the context of this article, the first point suggests an analogy between these features and the embedding layer described above. The second gives an intuitive justification for trying to gradually restore the local features of the original image from the global ones.

Deconvolution

The convolution + ReLU + max-pooling function used in convolutional networks is not invertible. The literature suggests several ways [2] [4] to "invert" it. I chose the simplest one: transposed convolution + ReLU, that is, a linear function followed by the simplest nonlinear activation. The main thing for me was to preserve locality.

TensorFlow has a conv2d_transpose function that performs this linear transformation. The main difficulty I ran into was picturing exactly how the computation works, so that I could choose the parameters sensibly. What helped me here was an illustration of the one-dimensional case in [5] MatConvNet - Convolutional Neural Networks for MATLAB (p. 30) [arXiv].

In the end, I settled on stride = [2, 2] and kernel = [4, 4]. With these parameters, each deconvolution layer doubles the width and height of the image, and each value of the next layer is computed from a group of 4 (2 × 2) neighboring pixels.
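A pure-Python sketch of the one-dimensional case, without padding: each input value scatters a scaled copy of the kernel into the output, shifted by the stride. This scatter-add is the transpose (gradient) of a strided cross-correlation, which is how I understand conv2d_transpose to work; TensorFlow's padding handling is deliberately left out.

```python
def conv1d_transpose(x, kernel, stride):
    """Unpadded 1-D transposed convolution: each input scatters a scaled copy of the kernel."""
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, xi in enumerate(x):
        for j, w in enumerate(kernel):
            out[i * stride + j] += xi * w  # input i covers outputs i*stride .. i*stride + k - 1
    return out

result = conv1d_transpose([1, 2, 3], [1, 1, 1, 1], stride=2)
print(result)  # [1.0, 1.0, 3.0, 3.0, 5.0, 5.0, 3.0, 3.0]
```

With kernel 4 and stride 2, neighboring inputs overlap by two positions, so interior outputs (3.0 = 1 + 2, 5.0 = 2 + 3) mix exactly two adjacent inputs.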
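The size arithmetic behind stride = 2 and kernel = 4 can be checked by counting how many inputs feed each output. The cropping function assumes that "same" padding keeps the central `n_in * stride` outputs, which is my understanding of TensorFlow's behavior for these parameters; treat it as an assumption rather than a specification.

```python
def contributions(n_in, kernel, stride):
    """How many inputs feed each output of an unpadded 1-D transposed convolution."""
    counts = [0] * ((n_in - 1) * stride + kernel)
    for i in range(n_in):
        for j in range(kernel):
            counts[i * stride + j] += 1
    return counts

def crop_same(full, n_in, stride):
    """Keep the central n_in * stride outputs (assumed "same"-padding behavior)."""
    left = (len(full) - n_in * stride) // 2
    return full[left:left + n_in * stride]

counts = crop_same(contributions(4, kernel=4, stride=2), 4, stride=2)
print(counts)  # [1, 2, 2, 2, 2, 2, 2, 1]
```

The length doubles (4 to 8), and interior outputs depend on 2 inputs per axis, that is, on a 2 × 2 group of 4 pixels in the two-dimensional case.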

Learning process

While training the network, I came across two points that I think are worth sharing.

First, it turned out to be very important to balance the numbers of Cyrillic and Latin images in the training set. Initially my training sample contained many more Latin characters, and as a result the network drew them better, at the expense of the quality of the restored Cyrillic characters.

Second, gradually decreasing the learning rate of gradient descent helped training. I did this manually, watching the error in TensorBoard, but TensorFlow can automate this, for example with the tf.train.exponential_decay function.

I did not need other useful tricks such as regularization, dropout, or batch normalization, since I managed to get results acceptable for my purposes without them.
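The article does not say how the balancing was done. One simple option, sketched here as an assumption, is to draw half of every mini-batch from each script with replacement, so the rarer script is effectively oversampled. The example data are hypothetical.

```python
import random

def balanced_batch(cyrillic, latin, batch_size, rng):
    """Draw half of the batch from each script, sampling with replacement."""
    half = batch_size // 2
    return rng.choices(cyrillic, k=half) + rng.choices(latin, k=batch_size - half)

rng = random.Random(0)
# Hypothetical (font, letter) pairs: Latin examples outnumber Cyrillic ones 3:1.
cyrillic = [("FontA", "Ж"), ("FontB", "Ж")]
latin = [("FontA", c) for c in "ABCDEF"]
batch = balanced_batch(cyrillic, latin, 10, rng)
```

Undersampling the Latin images would also balance the classes, at the cost of discarding data.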
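The schedule computed by tf.train.exponential_decay is `learning_rate * decay_rate ^ (global_step / decay_steps)`, with integer division when `staircase=True`; in TensorFlow 2.x the equivalent is `tf.keras.optimizers.schedules.ExponentialDecay`. A plain-Python version of the formula, with illustrative parameter values:

```python
def exponential_decay(learning_rate, global_step, decay_steps, decay_rate, staircase=False):
    """learning_rate * decay_rate ** (global_step / decay_steps), optionally stepwise."""
    if staircase:
        exponent = global_step // decay_steps  # decay in discrete jumps
    else:
        exponent = global_step / decay_steps   # smooth decay
    return learning_rate * decay_rate ** exponent

smooth = exponential_decay(0.1, 1500, 1000, 0.5)                   # 0.1 * 0.5 ** 1.5, about 0.0354
stepped = exponential_decay(0.1, 1500, 1000, 0.5, staircase=True)  # 0.05
```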

Network Summary

- Embedding dimension: 64 for both letters and fonts;

- 4 deconvolution layers with inner layer sizes 8 × 8 × 128 → 16 × 16 × 64 → 32 × 32 × 32 → 64 × 64 × 16, stride = [2, 2], kernel = [4, 4], ReLU activation;

- 1 convolution layer 64 × 64 × 16 → 64 × 64 × 1, stride = [1, 1], kernel = [4, 4], sigmoid activation.
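A sketch of this architecture in TensorFlow 2.x Keras, where `Conv2DTranspose` wraps the transposed convolution. Several details are assumptions not stated in the article: I read the listed sizes as the outputs of the four deconvolution layers (which implies a 4 × 4 input), the dense projection of the concatenated 128-dimensional embedding to 4 × 4 × 256 is hypothetical, "same" padding is assumed, and `NUM_LETTERS` is hypothetical.

```python
import tensorflow as tf

EMBED_DIM = 64     # from the network summary
NUM_FONTS = 6000   # approximate collection size
NUM_LETTERS = 118  # hypothetical: 66 Russian + 52 Latin letters

def build_model():
    font_id = tf.keras.Input(shape=(), dtype="int32", name="font_id")
    letter_id = tf.keras.Input(shape=(), dtype="int32", name="letter_id")
    f = tf.keras.layers.Embedding(NUM_FONTS, EMBED_DIM)(font_id)      # font vector
    s = tf.keras.layers.Embedding(NUM_LETTERS, EMBED_DIM)(letter_id)  # letter vector
    x = tf.keras.layers.Concatenate()([f, s])                         # (batch, 128)
    # Assumption: a dense projection to a 4 x 4 x 256 seed tensor.
    x = tf.keras.layers.Dense(4 * 4 * 256, activation="relu")(x)
    x = tf.keras.layers.Reshape((4, 4, 256))(x)
    # Four deconvolutions, each doubling height and width: 8, 16, 32, 64.
    for channels in (128, 64, 32, 16):
        x = tf.keras.layers.Conv2DTranspose(
            channels, kernel_size=4, strides=2, padding="same", activation="relu")(x)
    # Final convolution to a single-channel image with pixel values in (0, 1).
    out = tf.keras.layers.Conv2D(
        1, kernel_size=4, strides=1, padding="same", activation="sigmoid")(x)
    return tf.keras.Model([font_id, letter_id], out)

model = build_model()
y = model([tf.constant([0, 1]), tf.constant([2, 3])])  # two (font, letter) pairs
```

Training would pair this with the summed cross-entropy loss, optimizing the weights and both embedding tables together.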

Results

A good occasion to demonstrate the results came up recently, when two Russian projects, MyOffice and Astra Linux, released free font collections at the same time. These fonts were not used in the training set. On the left is the original font, on the right is the output of the neural network. For compactness, only Cyrillic letters that have no Latin counterparts are shown.

XOCourserBold

PTAstraSansItalic

XOThamesBoldItalic

PTAstraSerifRegular

Other results

The remaining results are available in a separate archive.

Conclusion

Having obtained these results, I believe I have achieved my goals, although the results are far from ideal (this is especially noticeable for some italic fonts), and there is still a lot that can be added, improved, and tested.

Whether the article succeeded is for you to judge.

Related Links

[1] Gatys, Ecker, Bethge. A neural algorithm of artistic style [arXiv]

[2] Dosovitskiy, Springenberg, Tatarchenko, Brox. Learning to Generate Chairs, Tables and Cars with Convolutional Networks [arXiv]

[3] Hertel, Barth, Käster, Martinetz. Deep Convolutional Neural Networks as Generic Feature Extractors [PDF]

[4] Zeiler, Krishnan, Taylor, Fergus. Deconvolutional Networks [PDF]

[5] Vedaldi, Lenc. MatConvNet - Convolutional Neural Networks for MATLAB [arXiv]

Source: https://habr.com/ru/post/325510/
