Twitter infrastructure: scale

Twitter Park Overview

Twitter came from an era when it was decided to install equipment from specialized manufacturers in data centers. Since then, we have continuously developed and updated the server park, seeking to benefit from the latest open technology standards, as well as improve the efficiency of the equipment to provide the best experience for users.

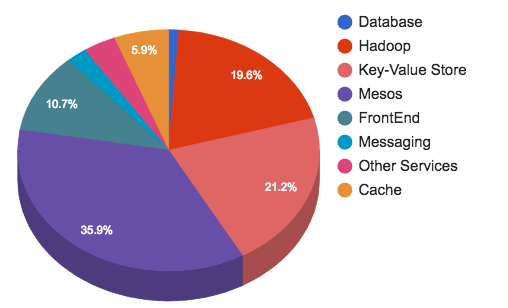

Our current equipment distribution is shown below:

Network traffic

We started leaving third-party hosting in early 2010. This means that we had to learn how to build and maintain our own infrastructure. Having a vague idea of the needs of basic infrastructure, we began to try different versions of the network architecture, equipment and manufacturers.

')

By the end of 2010, we completed the first network architecture project. She had to solve the problems with scaling and maintenance that we experienced at the hoster. We had ToR switches with deep buffers to handle bursts of service traffic, as well as core and carrier class switches without oversubscription at this level. They supported an early version of Twitter, which set several notable engineering achievements, such as TPS records (tweets per second) after the release of the Japanese film “Castle in the Sky” and during the 2014 FIFA World Cup.

If we quickly travel back a couple of years, then we already had a network with points of presence on five continents and data centers with hundreds of thousands of servers. In early 2015, we began to experience some growth problems due to changes in the service architecture and increased power requirements, and eventually reached physical limitations of scalability in the data center when the mesh topology could not withstand the addition of additional equipment in the new racks. In addition, IGP in the existing data center began to behave unpredictably due to the increased scale of routing and complexity of the topology.

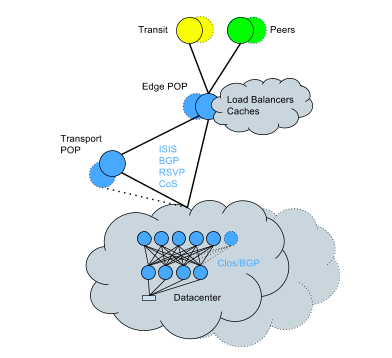

To cope with this, we began to transfer existing data centers to the topology of Clos + BGP - a transformation that had to be done on a live network. Despite the complexity, it was completed with minimal impact on services in a relatively short time. Now the network looks like this:

Key points of the new approach:

- Smaller radius of impact from failure of one device.

- Capacity horizontal scaling capabilities.

- Smaller overhead routing engine on the CPU; much more efficient handling of routing changes.

- More routing capacity due to low overhead CPU.

- More detailed control of routing rules for each device and each connection.

- There is no longer any chance of facing the root causes of the most serious incidents that happened in the past: increased protocol reconvergence time, problems with downed routes, and unexpected problems due to the inherent complexity of OSPF.

- The ability to move racks without consequences.

Let's take a closer look at our network infrastructure.

Data Center Traffic

Tasks

Our first data center was built on the model of the capacity and traffic profiles of a well-known system installed at our hosting provider. Only a few years passed, and our data centers were 400% larger than the original design. And now, when our application stack has evolved, and Twitter has become more distributed, the traffic profiles have also changed. The initial assumptions on which network design was developed at the beginning are no longer relevant.

Traffic grows faster than we manage to redo the whole data center, so it is important to create a highly scalable architecture that allows you to consistently add capacity instead of sharp migrations.

An extensive microservice system requires a highly reliable network that can withstand a variety of traffic. Our traffic ranges from long-lived TCP connections to special MapReduce tasks and incredibly short microbursts. Initially, we deployed network equipment with deep packet buffers to work with this variety of traffic types, but this caused our own problems: higher costs and increased hardware complexity. Later projects used more standard buffer sizes and end-to-end switching functions along with a better configured TCP stack on the server side for more elegant micro splash processing.

Lessons learned

Years later and after the improvements made, we realized a few things that are worth mentioning:

- Design beyond the original specifications and requirements, make bold and quick changes if traffic approaches the upper limit of the capacity embedded in the network.

- Rely on data and metrics to make correct technical design decisions, and make sure that these metrics are understandable to network operators - this is especially important on hosting or in the cloud.

- There is no such thing as a temporary change or a workaround: in most cases, workarounds are technical debt.

Backbone traffic

Tasks

We have backbone traffic dramatically increasing every year - and we still see bursts of 3-4 times the normal traffic, when we move traffic between data centers. This sets unique challenges for historical protocols that were not designed for this. For example, the RSVP protocol in MPLS assumes to some extent a gradual increase in traffic, rather than sudden bursts. We had to spend a lot of time setting up these protocols in order to get the fastest response time. In addition, to handle traffic spikes (especially when replicating to storage systems), we implemented a priority system. Although we always need to guarantee the delivery of user traffic, we can allow a delay of low-priority replication traffic from storage systems with daily SLAs. Thus, our network uses all available bandwidth and maximizes resource utilization. User traffic is always more important than low-priority backend traffic. In addition, to solve the container packing (bin-packing) problems associated with RSVP automatic bandwidth, we implemented the TE ++ system, which, as traffic increases, creates additional LSPs and removes them as traffic decreases. This allows us to effectively manage traffic between connections, while reducing the CPU load needed to support a large number of LSPs.

Although initially no one was engaged in designing traffic for backbone, it was later added to help us scale in line with our growth. To do this, we have split the roles with separate routers to route kernel traffic and boundary traffic, respectively. It also allowed us to scale in a budget mode, because we did not need to buy routers with complex boundary functionality.

At the border, this means that here our kernel is connected to everything and can be scaled in a very horizontal way (for example, installing many, many routers in one place, and not just a couple, since the core interconnects connect everything through).

To scale the RIB in our routers to meet the scaling requirements, we had to implement route reflection, but by doing this and moving to a hierarchical structure, we also implemented route reflector clients for their own route reflectors!

Lessons learned

Over the last year, we transferred device configurations to templates, and now we regularly check them.

Boundary traffic

Twitter has direct interconnections with more than 3,000 unique networks in many data centers around the world. Direct traffic delivery is a top priority. We move 60% of our traffic through our global network backbone to interconnect points and points of presence (POP), where we have local frontend servers, closing client sessions, all to be as close as possible to users.

Tasks

The unpredictability of world events leads to the same unpredictable traffic bursts. These surges during major events like sports, elections, natural disasters and other significant events stress our network infrastructure (especially photos and videos). They are difficult to predict or come at all without warning. We provide capacity for such events and prepare for big jumps - often 3-10 times higher than normal peak levels if a significant event is planned in the region. Due to our significant annual growth, it is an important task to increase capacity in the required volume.

Although we set up peering connections with all client networks whenever possible, this is not without problems. Surprisingly often, networks or providers prefer to set up interconnects far from the home market or, due to their routing rules, direct traffic to a point of presence outside the market. And although Twitter openly establishes peer-to-peer connections with all the largest (by the number of users) networks where we see traffic, not all Internet providers do the same. We spend considerable time optimizing our routing rules to drive traffic as close to our users as possible.

Lessons learned

Historically, when someone sent a request to

www.twitter.com , based on the location of our DNS server, we gave them different regional IP addresses to direct to a specific server cluster. Such a methodology (GeoDNS) is partly inaccurate, because you cannot rely on users to select the correct DNS servers or our ability to pinpoint where the DNS server is physically located in the world. In addition, the topology of the Internet does not always correspond to geography.To solve the problem, we switched to the BGP Anycast model, in which we stated the same route from all locations and optimized our routing in order to work out the best path from users to our points of presence. By doing so, we get the best possible performance given the limitations of the topology of the Internet and do not depend on unpredictable assumptions about where the DNS servers are located.

Storage

Hundreds of millions of tweets are published daily. They are processed, stored, cached, shipped, and analyzed. For this amount of content, we need the appropriate infrastructure. The storage and messaging represent 45% of the total Twitter infrastructure.

Storage and messaging groups provide the following services:

- Hadoop clusters for computing and HDFS.

- Manhattan clusters for all key-value storages with low latency.

- Graph storage for shardish MySQL clusters.

- Blobstore clusters for all large objects (video, images, binary files ...).

- Caching clusters

- Messaging clusters.

- Relational storage ( MySQL , PostgreSQL and Vertica ).

Tasks

Although at this level there are a number of different problems, one of the most notable problems that had to be overcome was multiple ownership (multi-tenancy). Often, users have boundary situations that affect existing tenure and force us to build dedicated clusters. The more dedicated clusters, the greater the operational load to support them.

There is nothing surprising in our infrastructure, but here are some interesting facts:

- Hadoop: our numerous clusters store more than 500 PBs, divided into four groups (real time, processing, data storage and cold storage). In the largest cluster more than 10 thousand nodes. We have 150 thousand applications and runs 130 million containers per day.

- Manhattan (backend for tweets, private messages, twitter accounts, etc.): we have several clusters for different tasks, these are large clusters with multiple ownership, smaller for unusual tasks, read only and read / write clusters for heavy traffic for reading and writing. The read only cluster processes tens of millions of requests per second (QPS), and the read / write cluster processes millions of QPS. Each data center has a cluster with the highest performance - a cluster of observability that processes tens of millions of records.

- Graph: our historic shard cluster based on Gizzard / MySQL for graph storage. Our social graph Flock is able to cope with a peak load of tens of millions of QPS, distributing it to MySQL servers with an average of 30-45 thousand QPS.

- Blobstore: a repository for images, videos, and large files that contains hundreds of billions of objects.

- Cache: Redis and Memcache clusters cache users, timelines, tweets, etc.

- SQL: includes MySQL, PostgreSQL and Vertica. MySQL / PosgreSQL is used where strict integrity is needed in managing campaigns, advertising exchanges, and for internal tools. Vertica is a column repository that is often used as a backend for sales with Tableau support and user organization.

Hadoop / HDFS is also a backend for Scribe-based logging systems, but the final stages of testing for Apache Flume are now being completed. It should help to overcome the limitations, such as the lack of limits / narrowing of the bandwidth for individual customer traffic to aggregators, the lack of delivery guarantee by category. Also will help solve problems with memory corruption. We process more than a trillion messages a day, all of them are processed and distributed into more than 500 categories, combined and then selectively copied across our clusters.

Chronological evolution

Twitter was built on MySQL and initially all data was stored in it. We moved from a small database to a large instance, and then to a large number of large database clusters. Manual data transfer between MySQL instances took a lot of time, so in April 2010 we introduced Gizzard , a framework for creating distributed data stores.

At the time, the ecosystem looked like this:

- Replicated MySQL Clusters.

- Shardirovannye MySQL clusters based on Gizzard.

After the release of Gizzard in May 2010, we introduced FlockDB , a solution for storing graphs on top of Gizzard and MySQL, and in June 2010 - Snowflake , our unique identifier service. 2010 was the year we invested in Hadoop . Originally intended for storing MySQL backups, it is now heavily used for analytics.

Around 2010, we also implemented Cassandra as a storage solution, and although it did not completely replace MySQL due to the lack of an automatic gradual increase function, it was used to store indicators. As traffic increased exponentially, we needed to increase the cluster, so in April 2014 we launched Manhattan : our distributed real-time database with multiple ownership. Since then, Manhattan has become one of our main storage levels, and Cassandra has been disabled.

In December 2012, Twitter allowed uploading photos. Behind the façade, this was made possible by the new data storage solution Blobstore .

Lessons learned

Over the years, as data migrated from MySQL to Manhattan to improve accessibility, reduce delays and simplify development, we also introduced additional data storage engines (LSM, b + tree ...) to better serve our traffic patterns. In addition, we learned lessons from incidents and began to protect our data storage levels from abuse by sending back pressure and activating request filtering.

We continue to concentrate on providing the right tool for the job, but for that you need to understand all the use cases. A universal “one size fits all” solution rarely works - you should avoid cutting corners in boundary situations, because there is nothing more permanent than a temporary solution. And finally, do not overestimate your decision. Everything has advantages and disadvantages, and everything needs to be adapted without losing touch with reality.

Cache

Although the cache occupies only about 3% of our infrastructure, it is critical for Twitter because it protects our backend storage from heavy read traffic and also allows us to store objects with a high price of swelling. We use several caching technologies, like Redis and Twemcache, on a huge scale. More specifically, we have a mix of Twitter memcached (twemcache) clusters, dedicated or multi-owned, as well as Nighthawk clusters (shaded Redis). We have transferred almost all of our main caching from bare metal to Mesos to reduce maintenance costs.

Tasks

Scaling and performance - the main tasks for the caching system. We have hundreds of clusters with an aggregate packet transfer rate of 320 million packets per second, delivering 120 GB / s to our customers, and we set the goal to provide each response with a delay of 99.9% and 99.99% even during peak traffic spikes during significant events.

To meet our service level objectives (SLO) in terms of high bandwidth and low latency, we need to continuously measure the performance of our systems and look for options to optimize efficiency. To this end, we have written the rpc-perf program, it helps to better understand how our caching systems behave. This is critical for capacity planning, since we have switched from dedicated servers to the current Mesos infrastructure. As a result of this optimization, it was possible to more than double the bandwidth per server without sacrificing delays. We still think that big optimizations are possible here.

Lessons learned

The transition to Mesos was a huge operational victory. We codify our configurations and can gradually deploy them, maintaining the cache hit ratio and avoiding problems for persistent data stores. Growth and scaling of this level occurs with greater confidence.

With thousands of connections to each twemcache instance, any process restart, spike in network traffic, or another problem can cause a DDoS-like connection flow to the cache level. As it progressed, it became more than a serious problem. We have implemented benchmarks that help in the case of DDoS to narrow the band for connections to each individual cache with a high level of reconnects, otherwise we would have departed from our stated goals in terms of service level.

We logically split our caches by users, tweets, timelines, etc., so that, in general, each caching cluster is configured for a specific use. Depending on the type of cluster, it can process from 10 million to 50 million QPS, and works on the number of instances from hundreds to thousands.

Haplo

Let us introduce Haplo. This is the main Twitter timeline cache and it works on a customized version of Redis (using HybridList). Read operations from Haplo are performed by the Timeline Service, and write operations by the Timeline Service and Fanout Service. It is also one of our caches that have not yet migrated to Mesos.

- The total number of teams from 40 million to 100 million per second.

- Network I / O 100 Mbps to the host.

- The total number of requests for service 800 thousand per second.

For further reading

Yao Yue ( @thinkingfish ) over the years has spent several excellent lectures and has published a number of articles on caching, including our use of Redis , as well as our newer code base Pelikan . You can watch these videos and read a recent blog post .

Puppet work on a large scale

We have a large array of kernel infrastructure services, such as Kerberos, Puppet, Postfix, Bastions, Repositories, and Egress Proxies. We focus on scaling, creating tools and managing these services, as well as supporting the expansion of data centers and points of presence. Last year alone, we significantly expanded the geography of our points of presence, which required a complete redesign of the architecture of how we plan, prepare and launch new locations.

To manage all configurations, we use Puppet and install an initial batch installation on our systems. This section describes some of the tasks that had to be solved and what we plan to do with our infrastructure for managing configurations.

Tasks

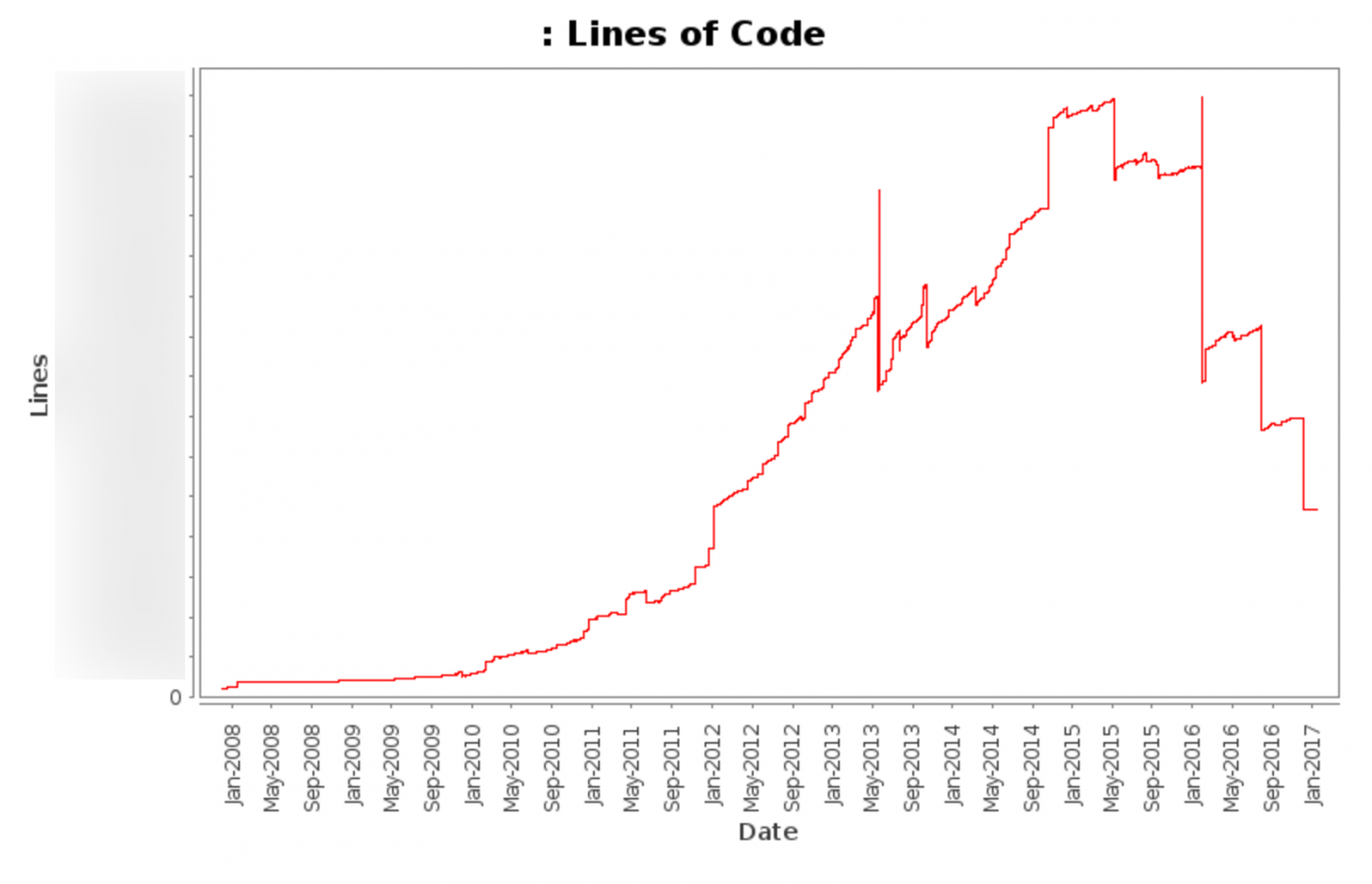

As we grow to meet user requests, we quickly outgrow our standard tools and practices. We have more than 100 authors of commits per month, more than 500 modules and more than 1000 roles. In the end, we managed to reduce the number of roles, modules and lines of code, while at the same time improving the quality of our code base.

Branches

We have three branches that Puppet refers to as environments. This allows for testing, testing and eventually releasing changes for the working environment. We also allow separate specialized environments for more isolated testing.

Transferring changes from the test to the work environment currently requires some human participation, but we are moving to a more automated CI system with an automated integration / rollback process.

Code base

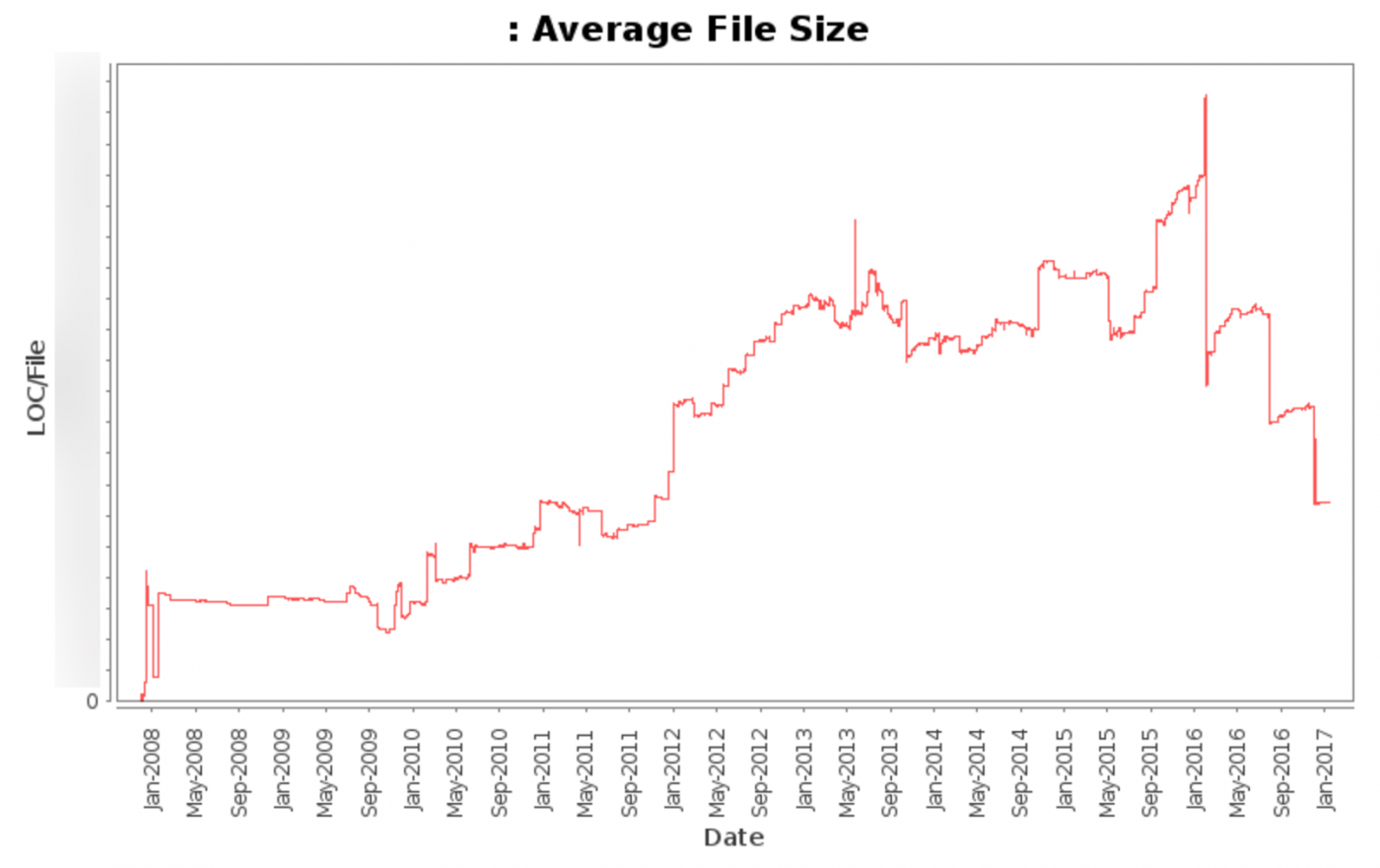

Our Puppet repository contains more than 1 million lines of code, where only the Puppet code is more than 100 thousand lines in each branch. We recently did a massive cleanup of the codebase, removing unnecessary and duplicate code.

This graph shows the total number of lines of code (excluding various automatically updated files) from 2008 to today.

This graph shows the total number of files (excluding various automatically updated files) from 2008 to today.

This graph shows the average file size (excluding various automatically updated files) from 2008 to today.

Big wins

The biggest victories for our code base were static code analysis (lint), style checking hooks, documentation of best practices, and regular work meetings.

With lint tools (puppet-lint), we were able to meet generally accepted lint standards. We reduced the number of lint errors and warnings in our code base to tens of thousands of lines, and the transformation affected 20% of the code base.

After the initial purge, it is now easier to make smaller changes to the code base, and the introduction of automated style checking as a hook for version control has dramatically reduced the number of style errors in our code base.

With more than a hundred contributors to Puppet throughout the organization, the importance of documenting best practice practices, both internal practices and community standards, is greatly increasing. The presence of a single reference document improved the quality of the code and the speed of its implementation.

Holding regular ancillary meetings (sometimes by invitation) helps to provide one-on-one assistance when the tickets and channel in the chat do not provide sufficient communication density or cannot display a complete picture of what needs to be achieved. As a result, after the meetings, many commit authors improved the quality of the code and the speed of work, understanding the requirements of the community, best practices and how best to apply the changes.

Monitoring

System indicators are not always useful (see the Caitlin McCaffrey lecture at the Monitorama 2016 conference), but they provide additional context for those indicators that we consider useful.

Some of the most useful indicators for which alerts are generated and charts are drawn up:

- Malfunctions: Number of unsuccessful Puppet launches.

- Duration of work: the time it takes the Puppet client to complete the work.

- No job: the number of Puppet launches that did not take place in the expected interval.

- Directory sizes: size of directories in megabytes.

- Directory compilation time: the time in seconds the directory needs to be compiled.

- Number of compiled directories: the number of directories that each Master compiled.

- File resources: the number of files processed.

All these indicators are collected for each host and are summarized by roles. This allows you to instantly issue alerts and determine if a problem exists for specific roles, role sets, or wider problems.

Effect

After switching from Puppet 2 to Puppet 3 and updating Passenger (we will later post posts on both topics), we were able to reduce the average operating time of Puppet processes in Mesos clusters from more than 30 minutes to less than 5 minutes.

This graph shows the average Puppet process time in seconds in our Mesos clusters.

If you want to help with our Puppet infrastructure, we invite you to work !

Source: https://habr.com/ru/post/325282/

All Articles