Tuning SQL Server 2012 under SharePoint 2013/2016. Part 1

Hello. My name is Lyubov Volkova, I am a systems architect in the business solutions development department. From time to time, I write application posts about Microsoft server products (for example, about monitoring SharePoint servers and about servicing databases related to content databases, services, and components of this platform.

This post is the first of two, in which I will talk about an important topic from the point of view of administering SharePoint portals - on tuning SQL servers aimed at achieving high performance. It is extremely important to ensure careful planning, correct installation and subsequent configuration of the SQL server that will be used to store data hosted on the corporate portal.

In this post you can read about planning the installation of a SQL server. A little later, the second part will be published, devoted to the installation of a SQL server and subsequent configuration.

')

Here are seven main reasons why server virtualization is being considered:

Considering the issue of server virtualization that is part of a SharePoint farm or network infrastructure servers that provide user authentication, name resolution, PKI management, and other functions, you need to remember that there is a possibility of virtualization:

Summary data about guest operating systems, supported versions of SQL servers and virtual machine clustering are presented in the table below:

Note that guest clustering support is provided in versions of Windows Server 2008 SP2 and later.

A more detailed description of the purpose and architecture of a fault-tolerant guest cluster can be found here , and the hardware requirements, features of support for working in a virtual environment for specific versions of SQL servers can be obtained from the links in the following table:

ESG Labs research confirmed that the performance of SQL Server 2012 OLTP on virtual servers is only about 6.3% lower than that of a physical platform.

Hyper-V supports up to 64 processors per separate virtual machine, tests have shown a 6-fold increase in performance and a 5-fold increase in transaction time. The illustration below shows the main results of the tests. A detailed report can be found here .

Hyper-V can be used to virtualize large volumes of SQL Server databases and supports the use of advanced features such as SR-IOV , Virtual Fiber Channel and Virtual NUMA .

We list its main shortcomings, which become the main reasons that the SQL server for SharePoint is deployed on a physical server:

To ensure the normal level of functioning of the SQL server, it is necessary to ensure an adequate amount of RAM. In the case when only one instance of SQL is deployed, which is allocated exclusively for working with SharePoint databases, the requirements are minimal:

SharePoint is a powerful platform for building portal solutions that have a modular architecture. The set of services and components supporting the operation of the corporate portal allows us to single out a list of databases, the storage of which must be planned. There are three groups of databases that differ in the time of the recommended planning:

The Microsoft article details the main characteristics of all the databases listed in the groups. The table below provides recommendations for setting up a recovery mode for each of them based on many years of experience in technical support, migrations, and recovery of SharePoint databases:

Ideally, on a SQL server, it is recommended to have six disks to accommodate the following files:

SharePoint uses a SQL server to store its data, which has its own data storage features. In this regard, the preparation of the disk subsystem at both the physical and logical levels, taking into account these features, will have a huge impact on the final performance of the corporate portal.

The data that Microsoft SQL Server stores is broken up into pages of 8 KB each, which in turn are grouped into so-called extents of 64 KB each. Read more about it here . In accordance with this, the tuning of the disk subsystem is to ensure the holistic placement of the extent at all physical and logical levels of the disk subsystem.

The first thing to start is the initialization of RAID. In this case, the array on which the databases will be located should have a stripe size of multiple 64 KB, preferably if it is exactly 64 KB.

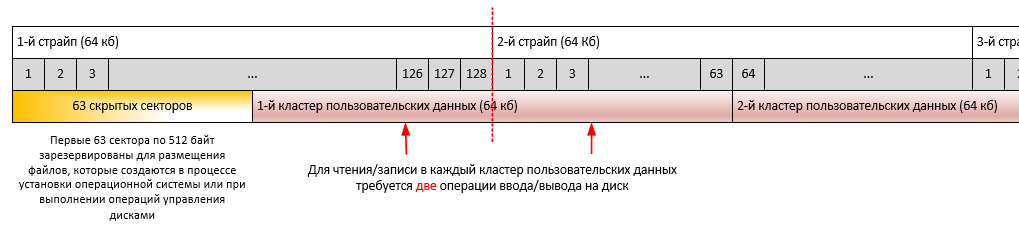

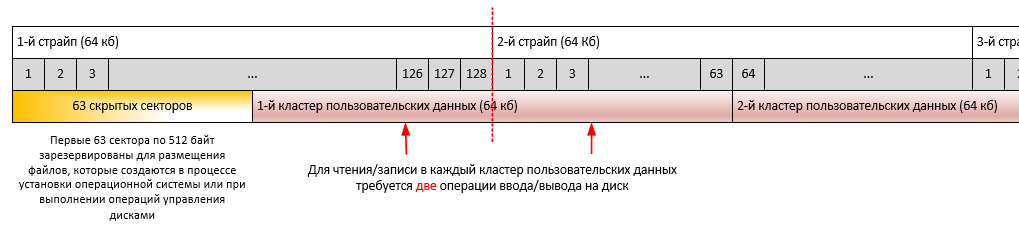

The alignment of the file system relative to its physical placement on the disk array is very important when considering how to improve the performance of the SQL server. It is described in detail here . On any hard disk or SSD, the first 63 sectors of 512 bytes are reserved for placing files that are created during the installation of the operating system or when performing disk management operations, as well as with the help of special programs - hard disk partition managers. The essence of the alignment procedure is to select such a starting offset (offset), in which an entire number of file system clusters would fit in one stripe of the disk array. Otherwise, it is possible that in order to perform a read operation on a single cluster of file system data, it will be necessary to perform two physical read operations from the disk array, which significantly degrades the performance of the disk subsystem (the performance loss can be up to 30%). In the case of a solid-state drive, the performance loss will be somewhat lower, but it will run out of its resource faster due to more frequent read-write operations.

The illustration below shows the features of performing read / write operations for unaligned partitions using the example of a 64 KB stripe.

The illustration below shows the features of performing read / write operations for leveled partitions using the example of a 64 KB stripe.

By default, in Windows Server 2008/2012, the partition offset is 1024 Kb, which is well correlated with the size of stripes on 64 Kb, 128 Kb, 256 Kb, 512 Kb and 1024 Kb.

In cases of setting up operating systems from images, using different software for managing disk partitions, situations may arise related to the violation of partition alignments. In this regard, it is recommended that before installing the SQL server, as an additional insurance, perform a double-check of correlations, which are described in the next section.

Partition offset

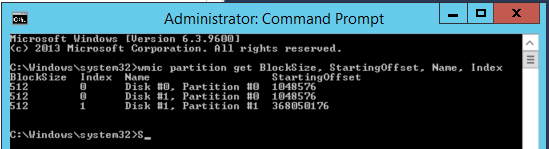

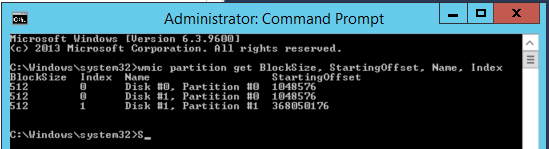

The most correct method for checking partition offsets for basic disks is to use the wmic.exe command line utility :

wmic partition get BlockSize, StartingOffset, Name, Index

To check the alignment of partitions for dynamic disks, you must use diskdiag.exe (for a description of working with it, see the section “ Dynamic Disk Partition Offsets ”).

Stripe Unit Size

In Windows, there are no standard means for determining the size of the minimum data block for writing to disk (stripe unit size). The value of this parameter must be obtained from the vendor’s documentation on the hard disk or from the SAN administrator. The most common indicators of Stripe Unit Size are 64 Kb, 128 Kb, 256 Kb, 512 Kb or 1024 Kb. In the examples considered earlier, a value of 64 Kb was used.

File Allocation Unit Size

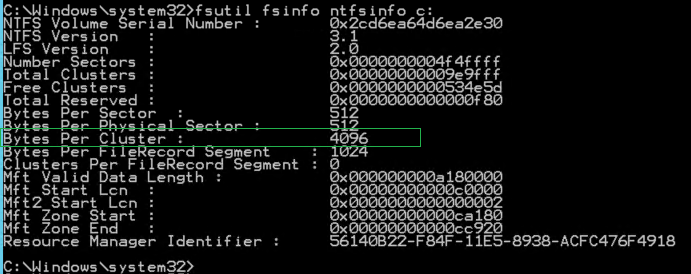

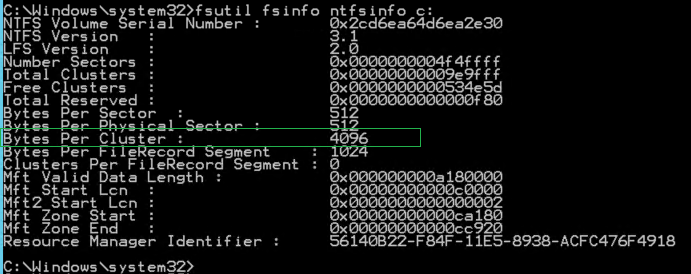

To determine the value of this indicator, you must use the following command separately for each of the disks (Value of the Bytes Per Cluster property):

fsutil fsinfo ntfsinfo c:

For partitions that store data files and SQL Server transaction log files, the metric value should be 65,536 bytes (64 KB). See "Optimizing Storage at the File System Level".

Two important correlations, the observance of which are the basis for optimal disk I / O performance. The results of the calculations for the following expressions should be an integer:

The most important is the first correlation.

An example of non-equal values. Partition offset is 32,256 bytes (31.5 Kb) and the minimum block size for writing data to disk is 65,536 bytes (64 Kb). 32,256 bytes / 65,536 bytes = 0.4921875. The result of division is not an integer, as a result we have not equalized values of Partition_Offset and Stripe_Unit_Size.

If the offset of the partition (Partition offset) is 1,048,576 bytes (1 MB), and the size of the minimum block of data written to disk is 65,536 bytes (64 KB), then the result of the division is 8, is an integer.

The next level at which to organize optimal storage of extents is the file system. The optimal settings here are the NTFS file system with a cluster size of 65,536 bytes (64 KB). In this regard, before installing the SQL server, it is strongly recommended to format the disks and set the cluster size to 65,536 bytes (64 KB) instead of 4096 bytes (4 KB) by default.

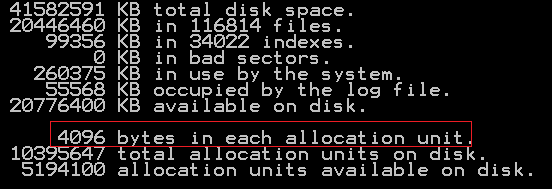

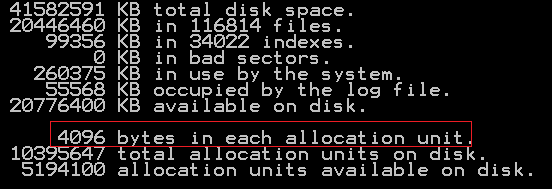

You can check the current values for the disk using the following command:

chkdsk c:

Example output:

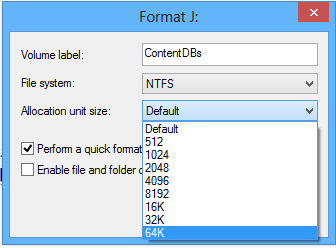

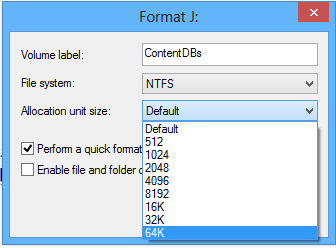

In the presented example, the cluster size is 4096 bytes / 1024 = 4 KB, which does not comply with the recommendations. To change you have to reformat the disk. Cluster size can be set by adjusting the disk formatting options using the Windows operating system:

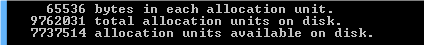

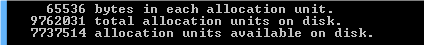

After that, make sure that the cluster size now complies with the recommendations (65536 bytes / 1024 = 64 kb):

When designing the placement of the SQL Server system databases and the SharePoint databases, it will be important to choose the disks according to their rank based on the read / write speeds.

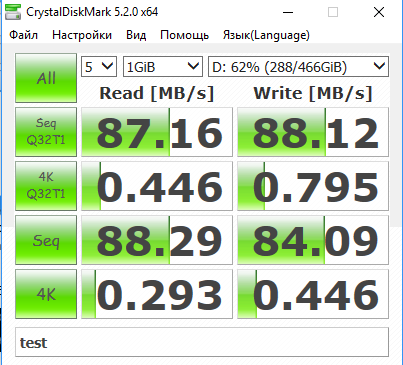

To test and determine accurate data on the speed of reading / writing files with random access and sequential access, you can use software similar to CrystalDiskMark (for example, SQLI O). Typically, an application allows you to enter basic test parameters, at a minimum:

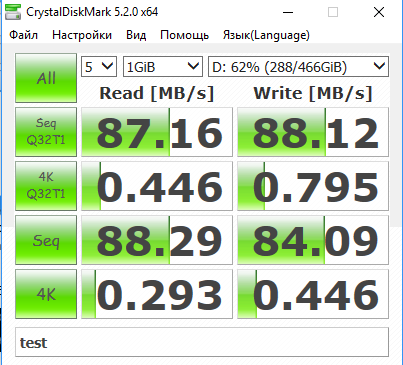

Below is an example of the results of tests performed:

Below is a description of the content of the tests performed:

Note that the tests use the recording unit size of 4 KB, which does not fully correspond to the actual data storage scheme of the SQL server and work with 8 KB pages of akents in 64 KB. When performing, there is no goal to ensure that the results obtained are absolutely consistent with the actual data on the same operations performed by the SQL server. The main final goal is to rank the discs according to the speed of read / write operations and get the final table.

For quick decision making during the installation of the SQL server, configuring the parameters for placing the database files it will be useful to format the test results as a table separately for each type of test, the template of which is shown below:

For magnetic disks (individual or as part of RAID), sequential operations operations (Seq Q32 test) often exceed the results of other tests by 10x-100x times. These metrics often depend on how you connect to the repository. It must be remembered that the number of MB / s claimed by vendors is a theoretical limitation. In practice, they are usually less than the claimed by 5-20%.

For solid-state drives, the difference between the speed of sequential and arbitrary read / write operations should not differ much from each other, usually no more than 2-3 times. Connection speeds such as 3Gb SATA, 1Gb iSCSI, or 2 / 4Gb FC will affect the speed.

If the server boots from a local disk and stores the SQL server data on another disk, you should also include both of these disks in your test plan. Comparison of the test results of the CrystalDiskMark disk, on which variables are stored, data with indicators of the disk on which the operating system is installed, can demonstrate an advantage in the performance of the second. In such a situation, the system administrator needs to check the disk or SAN storage settings for correctness and optimal performance.

Information about the purposes for which the portal is used allows you to correctly prioritize the selection of disks for storing SharePoint databases.

If the corporate portal is mainly used by users for reading content and there is no active daily increase in content (for example, the company's external website), the most efficient disks need to be allocated for data storage, having allocated less efficient storage for transaction log files:

If the corporate portal is used to organize collaboration between users who actively download dozens of documents every day, the priorities will be different:

The low speed of read / write operations for the tempdb system database of the SQL server seriously affects the overall performance of the SharePoint farm, as a result of the performance of the corporate portal. The best recommendation would be to use RAID 10 to store the files of this database.

For sites intended for collaboration or large volume of update operations, special attention should be paid to the choice of disks for storing SharePoint content database files. The following recommendations should be considered:

The general formula for calculating the expected size of a content database is:

((D * V) * S) + (10Kb * (L + (V * D))), where:

It is necessary to consider additional storage costs:

Despite the fact that RAID 5 has the best performance / cost, for SharePoint databases it is strongly recommended to use RAID 10, especially in the case of active use of the corporate portal for user collaboration. RAID 5 .

, SQL SharePoint .

SharePoint , SQL SharePoint, .

SQL SharePoint Windows Server 2012 SharePoint:

.

, SharePoint :

2007 Windows Server 2003 SP2 , Scalable Networking Pack (SNP). . SNP , Receive Side Scaling (RSS), TCP/IP Chimney Offload ( TOE) Network Direct Memory Access (NetDMA). , TCP/IP .

- SNP Server 2003 SP2 - . Server 2003 . Server 2008, Server 2008 R2 . Server 2008 R2 ( SP1 ). , - , .

RSS .

RSS, , , . , , , , .

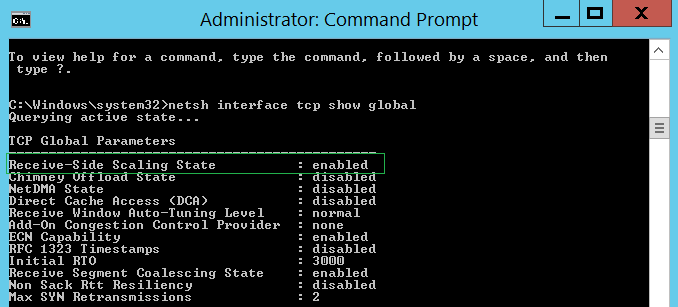

Server 2008 R2 RSS . , , :

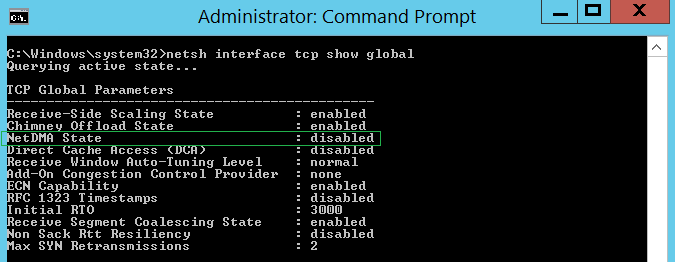

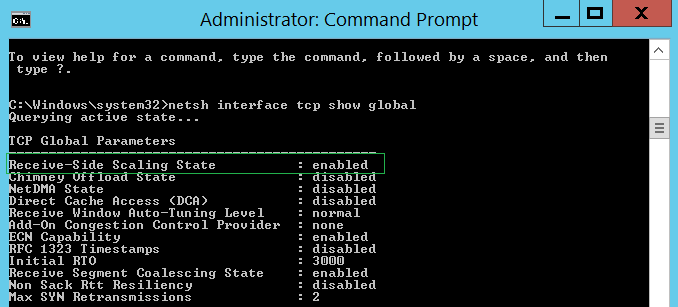

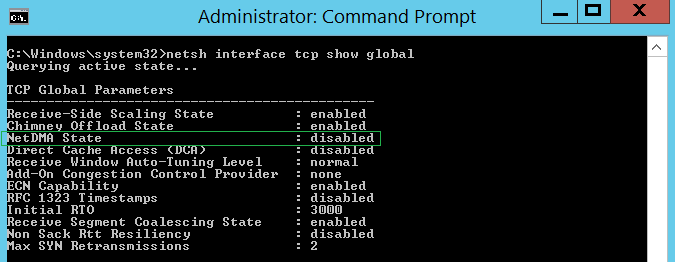

netsh interface tcp show global

, RSS :

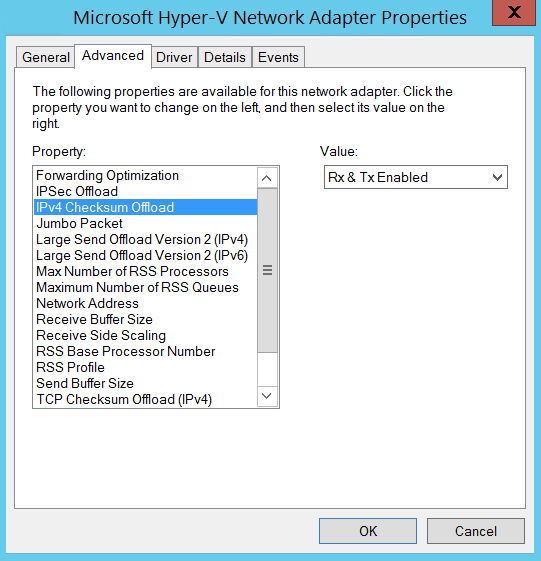

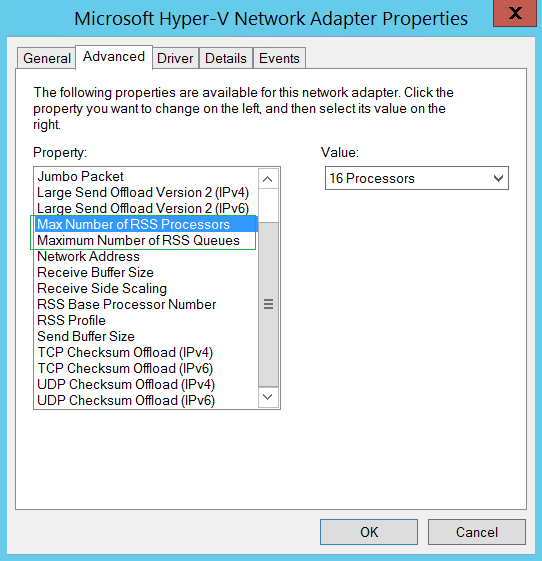

RSS , TCP Checksum Offload, IP Checksum Offload, Large Send Offload UDP Checksum Offload ( IPv4 IPv6). , , RSS .

, , , RSS, RSS.

RSS . , , .

TCP Chimney Offload ( TCP/IP Offloading , — TOE) TCP , TOE. TCP , . TOE TCP/IPv4, TCP/IPv6 , .

- , TCP/IP ( ), TOE , . , , , , , TOE.

, - SharePoint . TCP Chimney Offload.

TCP :

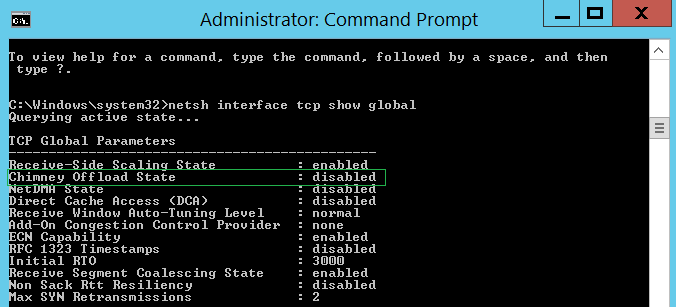

netsh interface tcp show global

TOE TCP :

netsh int tcp set global chimney=disable

TOE TOE TCP :

Netsh netsh interface tcp show chimneystats

IP

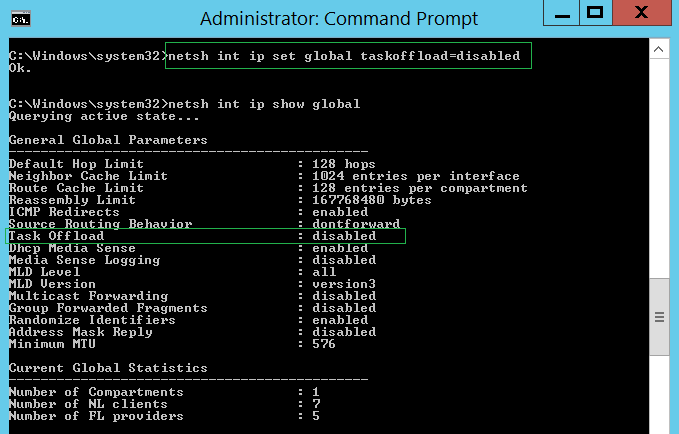

netsh int ip set global taskoffload=disable d

IP :

netsh int ip show global

NetDMA – . , TOE . NetDMA CPU , CPU .

, NetDMA, , , – Windows Intel I/O Acceleration Technology (I/OAT). NetDMA AMD , , .

, TOE NetDMA , .

NetDMA Windows 2008 R2 :

netsh interface tcp set global netdma=enabled

:

netsh interface tcp show global

Windows Server 2008 R 2

«Automatic» TCP Chimney Offload , , «TCP Chimney Offload» , 10 GB Ethernet.

Windows Server 2012, Windows Server 2012 R2 2

This post is the first of two, in which I will talk about an important topic from the point of view of administering SharePoint portals - on tuning SQL servers aimed at achieving high performance. It is extremely important to ensure careful planning, correct installation and subsequent configuration of the SQL server that will be used to store data hosted on the corporate portal.

In this post you can read about planning the installation of a SQL server. A little later, the second part will be published, devoted to the installation of a SQL server and subsequent configuration.

')

SQL Server Installation Planning

SQL Server Virtualization

Why do you need server virtualization?

Here are seven main reasons why server virtualization is being considered:

- Increase hardware utilization . According to statistics, most servers are loaded by 15-20 percent when they perform daily tasks. Using multiple virtual servers on a single physical server will increase it to 80 percent, while providing substantial savings on hardware purchases.

- Reduced hardware replacement costs. Since virtual servers are decoupled from specific equipment, it is not necessary to reinstall and configure the software when updating the physical server fleet. The virtual machine can simply be copied to another server.

- Increase the flexibility of using virtual servers. If you need to use multiple servers (for example, for testing and working in a product environment) with varying load, virtual servers are the best solution, as they can be safely transferred to other platforms when the physical server is under increased load.

- Improved manageability of server infrastructure. There are many virtual infrastructure management products that allow you to centrally manage virtual servers and provide load balancing and live migration.

- Provide high availability. Preparing backups of virtual machines and restoring them takes much less time and is simpler. Also, in case of equipment failure, a backup copy of the virtual server can be immediately launched on another physical server.

- Savings on maintenance staff . Simplifying the management of virtual servers in the future entails savings on specialists serving the infrastructure of the company. If two people can do what four did with the help of virtual server management tools, why do you need two extra specialists who receive at least $ 15,000 a year? Nevertheless, it must be borne in mind that considerable money is also needed to train qualified personnel in the field of virtualization.

- Savings on electricity. For small companies, this factor, of course, does not matter much, but for large data centers, where the cost of maintaining a large server fleet includes the cost of electricity (power, cooling systems), this point is of considerable importance. The concentration of several virtual servers on one physical will reduce these costs.

SQL Server Virtualization Support

Considering the issue of server virtualization that is part of a SharePoint farm or network infrastructure servers that provide user authentication, name resolution, PKI management, and other functions, you need to remember that there is a possibility of virtualization:

- Active Directory Domain Services;

- Web servers and SharePoint application servers (Front-End Web Server and Application Server);

- SQL Server Services;

- Any component of SQL, ADS, SharePoint 2013.

Summary data about guest operating systems, supported versions of SQL servers and virtual machine clustering are presented in the table below:

| SQL Server Version | Supported Windows Server Guest Operating Systems | Hyper-V Support | Guest Clustering Support |

|---|---|---|---|

| SQL Server 2008 SP3 | 2003 SP2, 2003 R2 SP2, 2008 SP2, 2008 R2 SP1, 2012 SP2, 2012 | Yes | Yes |

| SQL Server 2008 R2 SP2 | 2003 SP2, 2008 SP2, 2008 R2 SP1, 2012, 2012 R2 | Yes | Yes |

| SQL Server 2012 SP1 | 2008 SP2, 2008 R2 SP1, 2012, 2012 R2 | Yes | Yes |

| SQL Server 2014 | 2008 SP2, 2008 R2 SP1, 2012, 2012 R2 | Yes | Yes |

Note that guest clustering support is provided in versions of Windows Server 2008 SP2 and later.

A more detailed description of the purpose and architecture of a fault-tolerant guest cluster can be found here , and the hardware requirements, features of support for working in a virtual environment for specific versions of SQL servers can be obtained from the links in the following table:

| SQL Server Version | Documentation link |

|---|---|

| SQL Server 2008 SP3 | Hardware & Software Requirements, Hardware Virtualization & Guest Clustering Support |

| SQL Server 2008 R2 SP2 | Hardware & Software Requirements, Hardware Virtualization & Guest Clustering Support |

| SQL Server 2012 SP1 | Hardware & Software Requirements, Hardware Virtualization & Guest Clustering Support |

| SQL Server 2014 | Hardware & Software Requirements, Hardware Virtualization & Guest Clustering Support |

SQL Server Performance with Virtualization

ESG Labs research confirmed that the performance of SQL Server 2012 OLTP on virtual servers is only about 6.3% lower than that of a physical platform.

Hyper-V supports up to 64 processors per separate virtual machine, tests have shown a 6-fold increase in performance and a 5-fold increase in transaction time. The illustration below shows the main results of the tests. A detailed report can be found here .

Hyper-V can be used to virtualize large volumes of SQL Server databases and supports the use of advanced features such as SR-IOV , Virtual Fiber Channel and Virtual NUMA .

Disadvantages of SQL Server Virtualization

We list its main shortcomings, which become the main reasons that the SQL server for SharePoint is deployed on a physical server:

- The need to restructure the approach to work with the reliability of the system. Indeed, since several virtual machines are simultaneously running on the same physical server, the failure of the host leads to the simultaneous failure of all VMs and applications running on them.

- Load balancing If a virtual machine, the SQL server typically uses a lot of processor (or memory) computing resources, which affects the performance of other virtual machines and host applications that also require processor time (memory). Even if only a virtual machine with a SQL server is hosted on the host, setting the optimal performance level requires a whole range of actions. Read more about this in the article by Michael Ooty, available here. Administrators have to distribute the load by setting the rules by which the running virtual machines will automatically move to less loaded servers or “unload” the loaded ones.

RAM & CPU

To ensure the normal level of functioning of the SQL server, it is necessary to ensure an adequate amount of RAM. In the case when only one instance of SQL is deployed, which is allocated exclusively for working with SharePoint databases, the requirements are minimal:

| Small SharePoint farm (content up to 500 GB) | Average SharePoint farm (content from 501 GB to 1 TB) | Big SharePoint farm (content 1-2 TB) | Very large SharePoint farm (content 2-5 TB) | Special cases | |

|---|---|---|---|---|---|

| Ram | 8 GB | 16 GB | 32 GB | 64 GB | 64 GB |

| CPU | four | four | eight | eight | eight |

Plan size, layout, and general database requirements for SharePoint

SharePoint is a powerful platform for building portal solutions that have a modular architecture. The set of services and components supporting the operation of the corporate portal allows us to single out a list of databases, the storage of which must be planned. There are three groups of databases that differ in the time of the recommended planning:

- Before installing the SQL server. This group includes all system databases of the SQL server, the SharePoint content database (the default is one).

- Before installing the SharePoint server. This group includes the SharePoint configuration database, the SharePoint Central Administration content database.

- Before deploying a service application storing data in a database / databases. Examples: Managed Metadata Service, User Profile Service, Search, etc.

- Before creating additional SharePoint content databases.

The Microsoft article details the main characteristics of all the databases listed in the groups. The table below provides recommendations for setting up a recovery mode for each of them based on many years of experience in technical support, migrations, and recovery of SharePoint databases:

| Master database | Plain | Plain |

| Model database template | Full access | Plain. As a rule, tuning is performed once or very rarely. It is enough to perform a backup after making changes. |

| Msdb database | Plain | Plain |

| Tempdb database | Plain | Plain |

| Central Administration Content Database | Full access | Plain. |

| Configuration database | Full access | Changes are usually actively made at the stage of initial or spot configuration of services and components of the SharePoint farm, which has clear short time boundaries. It is more efficient to perform a backup after making completed blocks of changes. |

| Application Management Service Database | Full access | Plain. Typically, in the SharePoint farm is set from 0 to a small number of applications (1-5) SharePoint. Application installations are usually significantly separated in time. More efficiently perform a full backup of the database after installing a separate application |

| Subscription Settings Service Database | Full access | Plain. As a rule, SharePoint applications are installed on a small number of sites (1-5). Application installations are usually significantly separated in time. It is more efficient to perform a full backup of the database after installing a separate application. Full. If you intend to install applications on an unlimited number of web sites, installations are often performed |

| Business Connectivity Service | Full access | Plain. Setting up and making changes related to the operation of this service is usually short in time and is performed once or a small number of times. It is more efficient to perform a full database backup after the completed blocks for creating / editing data models, external content types and external data lists |

| Managed Metadata service application database | Full access | Simple in the case of using directories with rarely changeable content. Full access in case of changes to the term sets on a regular basis (active creation and editing of hierarchical directories, tagging of list items and documents). |

| SharePoint Translation Service Application Database | Full access | Plain. |

| Power Pivot Database | Full access | Full access |

| PerformancePoint Services Database | Full access | Simple when working with a set of indicator panels, in whose settings changes are rarely made. Full access in case of intensive creation / modification of indicator panels |

| Search Administration Database | Plain | Plain. Setting up and making changes related to the operation of this service is usually short in time and is performed once or a small number of times. It is more efficient to perform a full backup of the database after the completed setting blocks, changes to the search pattern |

| Analytics Reporting Database | Plain | Simple, if the analysis of search results and intelligent adaptation of the issuance of results, search suggestions is not critical. Full access if the search on the portal and its constant personalized work are critical for business |

| Crawl database | Plain | Plain. Restoring this database from a backup or using a recovery wizard will often be much longer compared to resetting the index and crawling the content completely. |

| Link Database | Plain | Simple, if the analysis of search results and intelligent adaptation of the issuance of results, search suggestions is not critical. Full access if the search on the portal and its constant personalized work are critical for business |

| Secure Store Database | Full access | Plain. Setting up and making changes related to the operation of this service is usually short in time and is performed once or a small number of times. It is more efficient to perform a full backup of the database after the completed settings blocks. |

| State Service Application Database | Full access | Plain. Because this database provides temporary storage for InfoPath forms, Visio Web Parts is not intended for long-term storage of this information. The correctness of the data in this database is important at the moment when a user views a particular InfoPath form or Visio diagram through a web part. |

| Usage and Health Data Collection Database | Plain | Simple or Full access. Depends on the requirements for an acceptable level of data loss for the organization. |

| Profile Database | Plain | Simple, if there is no business-critical functionality, whose work requires working with the most relevant data on user profiles Full access if the situation is reversed. |

| Profile Sync Database | Plain | Simple if recovery time takes less time compared to reconfiguring connections and performing full profile synchronization Full access if the situation is reversed |

| Social Tag Database | Plain | Simple, if working with social content is not a business critical functionality Full access if the situation is reversed |

| Word Automation Database | Full access | Full access |

Number of disks

Ideally, on a SQL server, it is recommended to have six disks to accommodate the following files:

- Tempdb database data files;

- Tempdb database transaction log file;

- SharePoint database data files;

- SharePoint database transaction log files;

- Operating system;

- Files of other applications.

Disk Subsystem Preparation

SharePoint uses a SQL server to store its data, which has its own data storage features. In this regard, the preparation of the disk subsystem at both the physical and logical levels, taking into account these features, will have a huge impact on the final performance of the corporate portal.

The data that Microsoft SQL Server stores is broken up into pages of 8 KB each, which in turn are grouped into so-called extents of 64 KB each. Read more about it here . In accordance with this, the tuning of the disk subsystem is to ensure the holistic placement of the extent at all physical and logical levels of the disk subsystem.

The first thing to start is the initialization of RAID. In this case, the array on which the databases will be located should have a stripe size of multiple 64 KB, preferably if it is exactly 64 KB.

Alignment of hard drive or SSD SSD partitions

The alignment of the file system relative to its physical placement on the disk array is very important when considering how to improve the performance of the SQL server. It is described in detail here . On any hard disk or SSD, the first 63 sectors of 512 bytes are reserved for placing files that are created during the installation of the operating system or when performing disk management operations, as well as with the help of special programs - hard disk partition managers. The essence of the alignment procedure is to select such a starting offset (offset), in which an entire number of file system clusters would fit in one stripe of the disk array. Otherwise, it is possible that in order to perform a read operation on a single cluster of file system data, it will be necessary to perform two physical read operations from the disk array, which significantly degrades the performance of the disk subsystem (the performance loss can be up to 30%). In the case of a solid-state drive, the performance loss will be somewhat lower, but it will run out of its resource faster due to more frequent read-write operations.

The illustration below shows the features of performing read / write operations for unaligned partitions using the example of a 64 KB stripe.

The illustration below shows the features of performing read / write operations for leveled partitions using the example of a 64 KB stripe.

By default, in Windows Server 2008/2012, the partition offset is 1024 Kb, which is well correlated with the size of stripes on 64 Kb, 128 Kb, 256 Kb, 512 Kb and 1024 Kb.

In cases of setting up operating systems from images, using different software for managing disk partitions, situations may arise related to the violation of partition alignments. In this regard, it is recommended that before installing the SQL server, as an additional insurance, perform a double-check of correlations, which are described in the next section.

Important correlations: Partition Offset, File Allocation Unit Size, Stripe Unit Size

Partition offset

The most correct method for checking partition offsets for basic disks is to use the wmic.exe command line utility :

wmic partition get BlockSize, StartingOffset, Name, Index

To check the alignment of partitions for dynamic disks, you must use diskdiag.exe (for a description of working with it, see the section “ Dynamic Disk Partition Offsets ”).

Stripe Unit Size

In Windows, there are no standard means for determining the size of the minimum data block for writing to disk (stripe unit size). The value of this parameter must be obtained from the vendor’s documentation on the hard disk or from the SAN administrator. The most common indicators of Stripe Unit Size are 64 Kb, 128 Kb, 256 Kb, 512 Kb or 1024 Kb. In the examples considered earlier, a value of 64 Kb was used.

File Allocation Unit Size

To determine the value of this indicator, you must use the following command separately for each of the disks (Value of the Bytes Per Cluster property):

fsutil fsinfo ntfsinfo c:

For partitions that store data files and SQL Server transaction log files, the metric value should be 65,536 bytes (64 KB). See "Optimizing Storage at the File System Level".

Two important correlations, the observance of which are the basis for optimal disk I / O performance. The results of the calculations for the following expressions should be an integer:

- Partition_Offset ÷ Stripe_Unit_Size

- Stripe_Unit_Size ÷ File_Allocation_Unit_Size

The most important is the first correlation.

An example of non-equal values. Partition offset is 32,256 bytes (31.5 Kb) and the minimum block size for writing data to disk is 65,536 bytes (64 Kb). 32,256 bytes / 65,536 bytes = 0.4921875. The result of division is not an integer, as a result we have not equalized values of Partition_Offset and Stripe_Unit_Size.

If the offset of the partition (Partition offset) is 1,048,576 bytes (1 MB), and the size of the minimum block of data written to disk is 65,536 bytes (64 KB), then the result of the division is 8, is an integer.

File system-level storage optimization

The next level at which to organize optimal storage of extents is the file system. The optimal settings here are the NTFS file system with a cluster size of 65,536 bytes (64 KB). In this regard, before installing the SQL server, it is strongly recommended to format the disks and set the cluster size to 65,536 bytes (64 KB) instead of 4096 bytes (4 KB) by default.

You can check the current values for the disk using the following command:

chkdsk c:

Example output:

In the presented example, the cluster size is 4096 bytes / 1024 = 4 KB, which does not comply with the recommendations. To change you have to reformat the disk. Cluster size can be set by adjusting the disk formatting options using the Windows operating system:

After that, make sure that the cluster size now complies with the recommendations (65536 bytes / 1024 = 64 kb):

Disk rankings based on read / write speed

When designing the placement of the SQL Server system databases and the SharePoint databases, it will be important to choose the disks according to their rank based on the read / write speeds.

To test and determine accurate data on the speed of reading / writing files with random access and sequential access, you can use software similar to CrystalDiskMark (for example, SQLI O). Typically, an application allows you to enter basic test parameters, at a minimum:

- The number of tests. There should be several tests to get a more accurate average.

- The size of the file to be used during the execution of tests. It is recommended to set a value that exceeds the amount of server RAM to avoid using only the cache. In addition, the figures will provide statistics on the processing of large file sizes, which is relevant for many corporate portals with intensive user collaboration on content.

- The name of the disk on which read / write operations will be performed;

- List of tests that must be performed.

Below is an example of the results of tests performed:

Below is a description of the content of the tests performed:

- Seq Q32 - speed of reading / writing to files with sequential access. For a SQL server, these are operations such as backing up, scanning tables, defragmenting indexes, reading / writing transaction files.

- 4K QD32 - the speed of a large number of random reads / writes with small data (4 KB) at the same time. The test results allow you to judge the performance of the disk when performing transactions in the course of the OLTP-server, which has a high load.

- 512K is the speed of a large number of read / write operations with large data sizes (4 Kb) at the same time. The results of this test can be ignored, because They have no relation to the work of the SQL server as such.

- 4K is the speed of a small number of random read / write operations with small data sizes (4 Kb) at the same time. The test results allow you to judge the performance of the disk when performing transactions during the work of the OLTP server with a small load.

Note that the tests use the recording unit size of 4 KB, which does not fully correspond to the actual data storage scheme of the SQL server and work with 8 KB pages of akents in 64 KB. When performing, there is no goal to ensure that the results obtained are absolutely consistent with the actual data on the same operations performed by the SQL server. The main final goal is to rank the discs according to the speed of read / write operations and get the final table.

For quick decision making during the installation of the SQL server, configuring the parameters for placing the database files it will be useful to format the test results as a table separately for each type of test, the template of which is shown below:

| Disks sorted by read / write speed from fastest to slowest <Test name> | |||

|---|---|---|---|

| 0 | I: | 20GB | Fixed size VHD |

| one | H: | 20GB | Fixed size VHD |

| 2 | G: | 50GB | |

| 3 | F: | 200GB | |

| four | C: | 80GB | operating system |

| five | E: | 2TB | |

| 6 | D: | 100GB |

How to interpret the test results CrystalDiskMark?

For magnetic disks (individual or as part of RAID), sequential operations operations (Seq Q32 test) often exceed the results of other tests by 10x-100x times. These metrics often depend on how you connect to the repository. It must be remembered that the number of MB / s claimed by vendors is a theoretical limitation. In practice, they are usually less than the claimed by 5-20%.

For solid-state drives, the difference between the speed of sequential and arbitrary read / write operations should not differ much from each other, usually no more than 2-3 times. Connection speeds such as 3Gb SATA, 1Gb iSCSI, or 2 / 4Gb FC will affect the speed.

If the server boots from a local disk and stores the SQL server data on another disk, you should also include both of these disks in your test plan. Comparison of the test results of the CrystalDiskMark disk, on which variables are stored, data with indicators of the disk on which the operating system is installed, can demonstrate an advantage in the performance of the second. In such a situation, the system administrator needs to check the disk or SAN storage settings for correctness and optimal performance.

Prioritization when choosing disks

Information about the purposes for which the portal is used allows you to correctly prioritize the selection of disks for storing SharePoint databases.

If the corporate portal is mainly used by users for reading content and there is no active daily increase in content (for example, the company's external website), the most efficient disks need to be allocated for data storage, having allocated less efficient storage for transaction log files:

| Speed / Usage Scenario | The prevalence of viewing content over its editing (external website) is significant |

|---|---|

| Highest performance | Data Files and Tempdb Database Transaction Log Files |

| ... | Database files |

| ... | Search service data files except administration database |

| Lowest performance | SharePoint Content Database Transaction Log Files |

If the corporate portal is used to organize collaboration between users who actively download dozens of documents every day, the priorities will be different:

| Speed / Usage Scenario | Predominance of content editing over its reading (external website) |

|---|---|

| Highest performance | Data Files and Tempdb Database Transaction Log Files |

| ... | SharePoint Content Database Transaction Log Files |

| ... | Search service data files except administration database |

| Lowest performance | Content Database Data Files |

Recommendations for database storage types for a SharePoint farm

Recommendations for choosing a disk for tempdb

The low speed of read / write operations for the tempdb system database of the SQL server seriously affects the overall performance of the SharePoint farm, as a result of the performance of the corporate portal. The best recommendation would be to use RAID 10 to store the files of this database.

Disc recommendations for heavily used content databases

For sites intended for collaboration or large volume of update operations, special attention should be paid to the choice of disks for storing SharePoint content database files. The following recommendations should be considered:

- Placement of data files and transaction logs for content databases on different physical disks

- Consider the growth of content in the design of portal solutions. The most optimal is to support the size of each individual content database up to 200 GB.

- For heavily used SharePoint content databases, it is recommended to use several data files, placing them on separate disks. In order to avoid sudden system failures of databases, it is strongly recommended not to use the database size limit setting.

Calculate the expected size of the content database

The general formula for calculating the expected size of a content database is:

((D * V) * S) + (10Kb * (L + (V * D))), where:

- D - number of documents, taking into account personal sites, documents and pages in libraries, forecast for an increase in the number of countries in the coming year;

- V - number — in versions of documents;

- S - the average size of the documents (if it is possible to find out);

- 10 Kb - constant, expected SharePoint 2013 number of metadata for one document;

- L - the expected number of list items, taking into account the forecast for an increase in the count in the coming year.

It is necessary to consider additional storage costs:

- Audit, the storage of audit data + forecasts for the growth of audit data;

- Recycle bin, data storage time in the site basket and site collection.

RAID recommendations

Despite the fact that RAID 5 has the best performance / cost, for SharePoint databases it is strongly recommended to use RAID 10, especially in the case of active use of the corporate portal for user collaboration. RAID 5 .

, SQL SharePoint .

SharePoint , SQL SharePoint, .

SQL SharePoint Windows Server 2012 SharePoint:

- , , . , .

- , . , , , , .

.

, SharePoint :

- 64- Windows Server 2008 R2 1 (SP1) Standard, Enterprise Datacenter

- 64- Windows Server 2012 Standard Datacenter

- Microsoft SharePoint Server 2013 SharePoint 2013 64- Windows Server 2012 R2 .

Scalable Networking Pack

2007 Windows Server 2003 SP2 , Scalable Networking Pack (SNP). . SNP , Receive Side Scaling (RSS), TCP/IP Chimney Offload ( TOE) Network Direct Memory Access (NetDMA). , TCP/IP .

- SNP Server 2003 SP2 - . Server 2003 . Server 2008, Server 2008 R2 . Server 2008 R2 ( SP1 ). , - , .

Receive Side Scaling

RSS .

RSS, , , . , , , , .

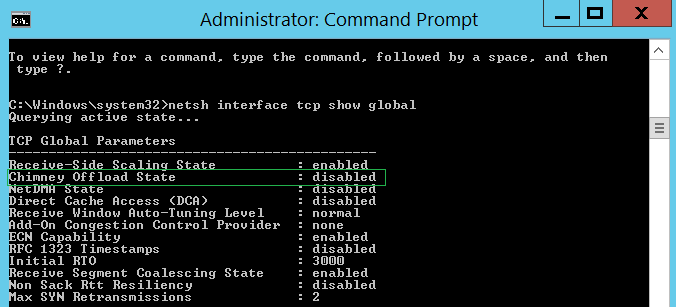

Server 2008 R2 RSS . , , :

netsh interface tcp show global

, RSS :

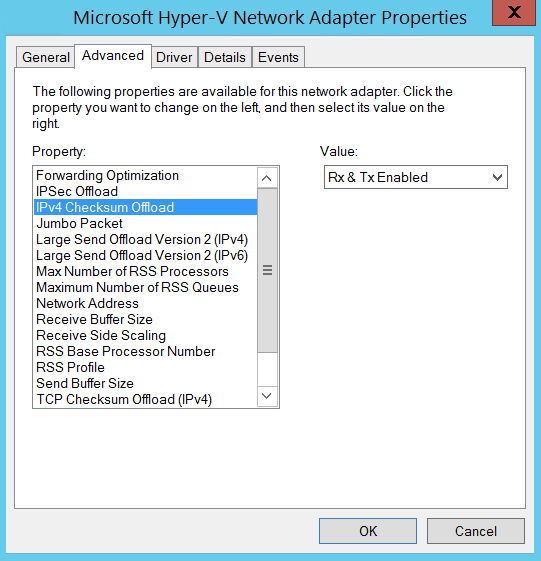

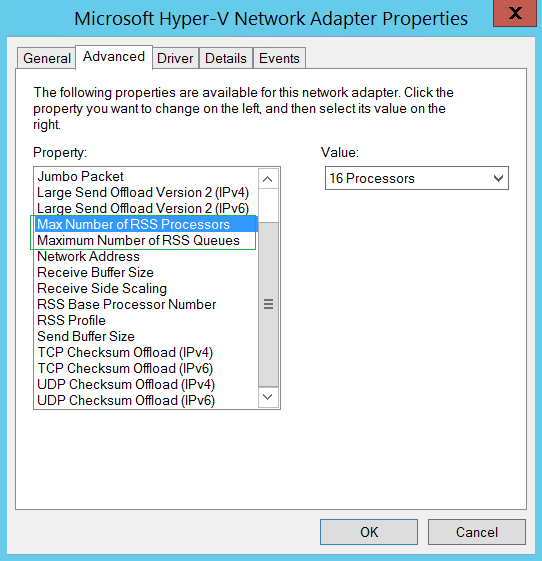

RSS , TCP Checksum Offload, IP Checksum Offload, Large Send Offload UDP Checksum Offload ( IPv4 IPv6). , , RSS .

, , , RSS, RSS.

RSS . , , .

TCP/IP Chimney Offload

TCP Chimney Offload ( TCP/IP Offloading , — TOE) TCP , TOE. TCP , . TOE TCP/IPv4, TCP/IPv6 , .

- , TCP/IP ( ), TOE , . , , , , , TOE.

, - SharePoint . TCP Chimney Offload.

TCP :

netsh interface tcp show global

TOE TCP :

netsh int tcp set global chimney=disable

TOE TOE TCP :

Netsh netsh interface tcp show chimneystats

IP

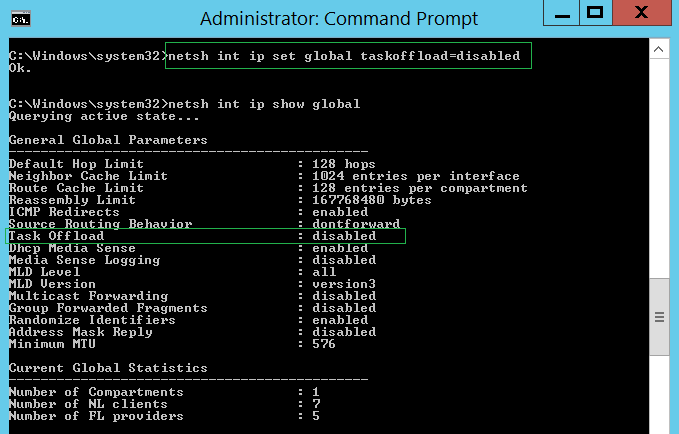

netsh int ip set global taskoffload=disable d

IP :

netsh int ip show global

Network Direct Memory Access

NetDMA – . , TOE . NetDMA CPU , CPU .

, NetDMA, , , – Windows Intel I/O Acceleration Technology (I/OAT). NetDMA AMD , , .

, TOE NetDMA , .

NetDMA Windows 2008 R2 :

netsh interface tcp set global netdma=enabled

:

netsh interface tcp show global

SNP

Windows Server 2008 R 2

| Parameter | |

|---|---|

| TCP Chimney Offload | automatic |

| RSS feed | enabled |

| NetDMA | enabled |

«Automatic» TCP Chimney Offload , , «TCP Chimney Offload» , 10 GB Ethernet.

Windows Server 2012, Windows Server 2012 R2 2

| Parameter | |

|---|---|

| TTCP Chimney Offload | disable |

| RSS feed | enabled |

| NetDMA |

Source: https://habr.com/ru/post/325242/

All Articles