Splunk + Check Point: an example of analyzing your firewall logs

If you are not satisfied with Check Point's standard reporting and analytics tools, if SmartEvent hangs and overloads your server, or if SmartEvent reports seem rather uninformative, then why not build your own?

Today we will show how to load Check Point logs into Splunk, what kinds of reports you can build, and how to filter the data so that you do not put unnecessary load on the system and you reduce license usage. And if your company is not very large, you can safely get by with the free license.

Loading logs into Splunk

- You will need a server with Splunk installed on Linux (because of the specifics of the OPSEC protocol); we usually use CentOS. We described how to install Splunk in an earlier post here.

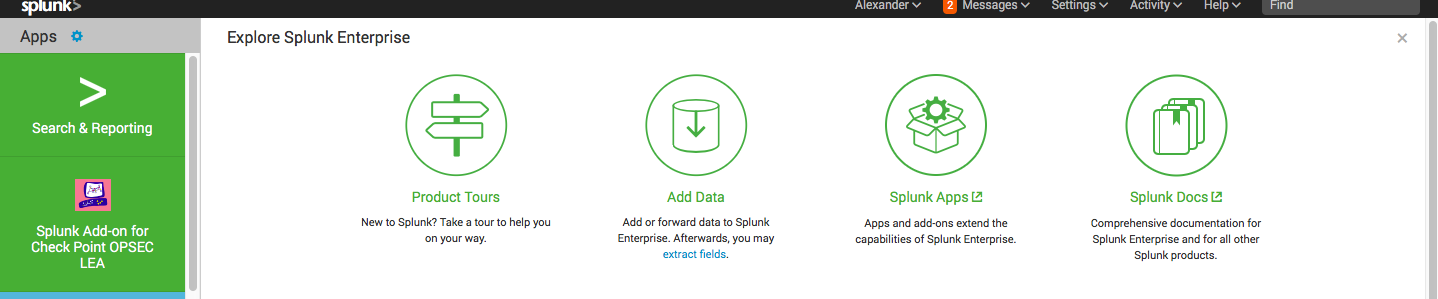

- Install the Add-on for Check Point OPSEC LEA on Splunk; step-by-step instructions are here. To download the add-on from Splunkbase, you can use the same account you created to download Splunk.

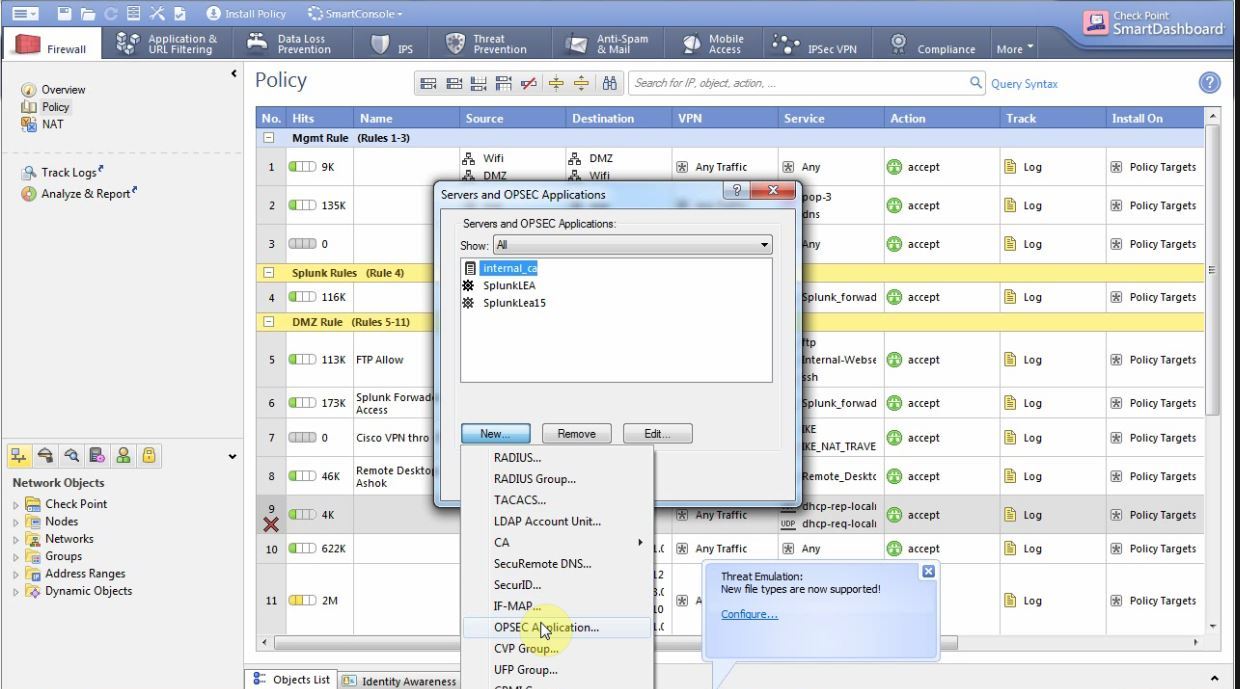

- Next, prepare the Check Point Management Server. In SmartDashboard: File → Manage → Servers and OPSEC Applications → New → OPSEC Application

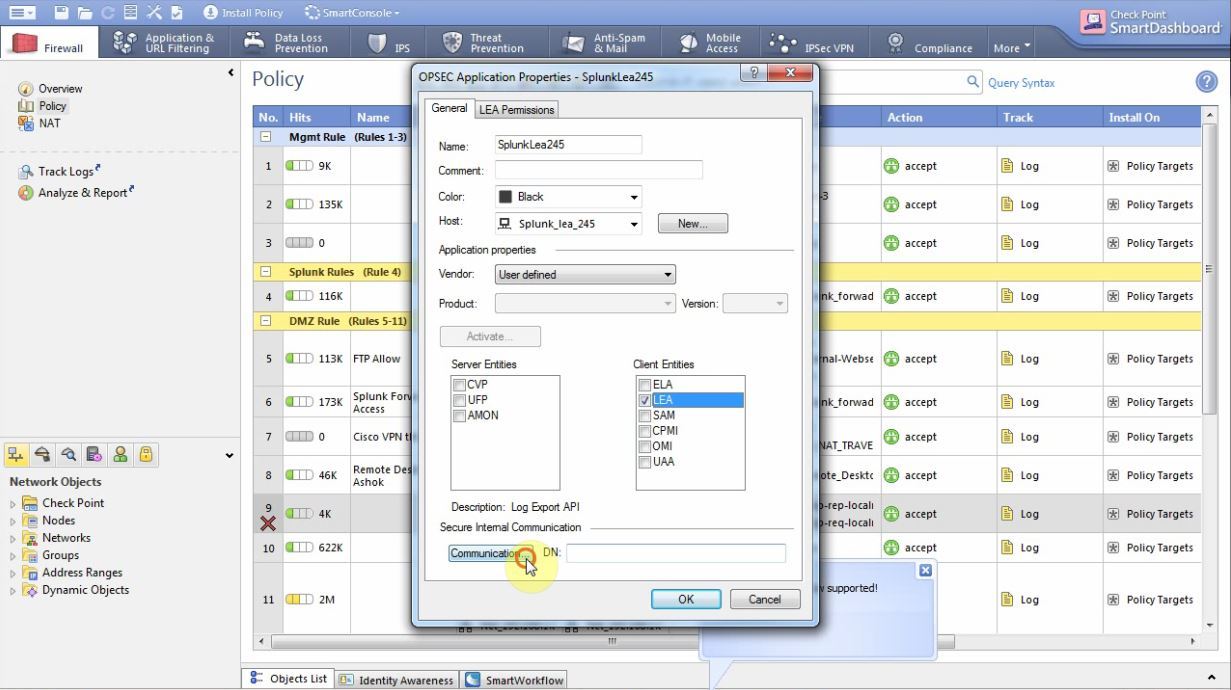

Give it any name (you will need it later). In the Host field, select the Splunk server if it is already defined as an object in Check Point, or create a new host with the Splunk server's address. Go to the Permissions tab and make sure the Show all log fields checkbox is selected. Go back to the previous tab, select the LEA checkbox and click the Communication button.

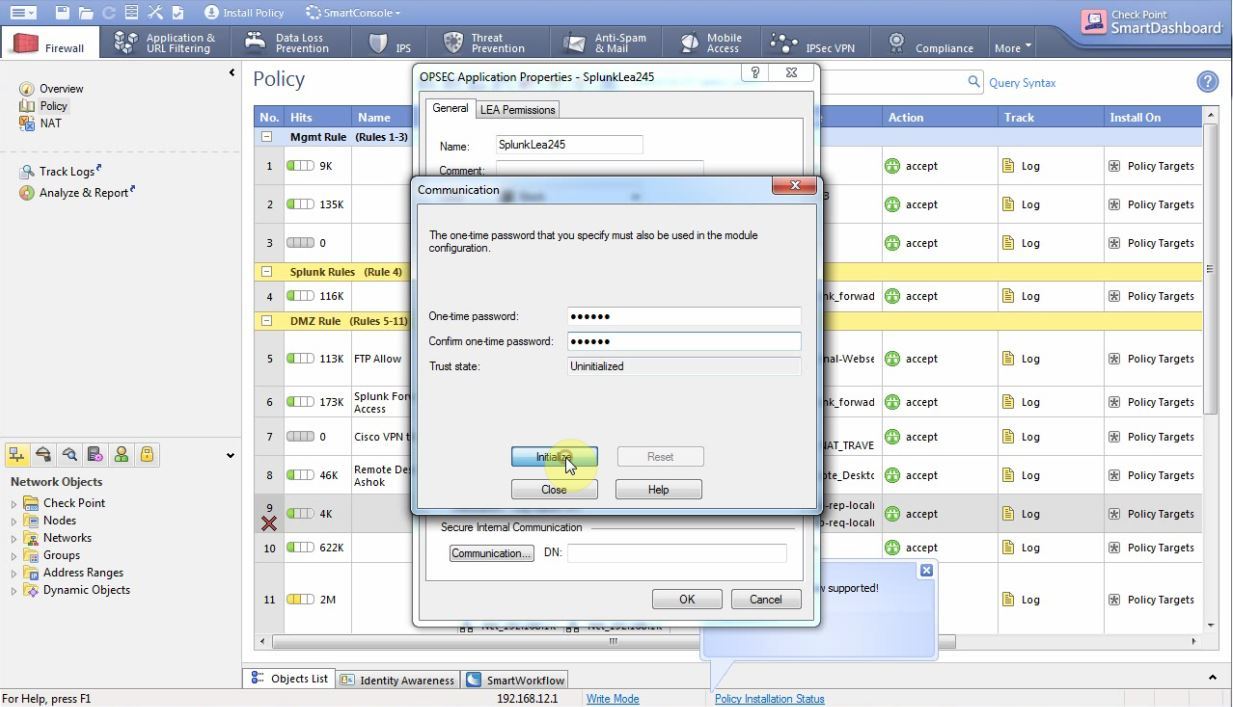

There, enter any one-time password (remember it).

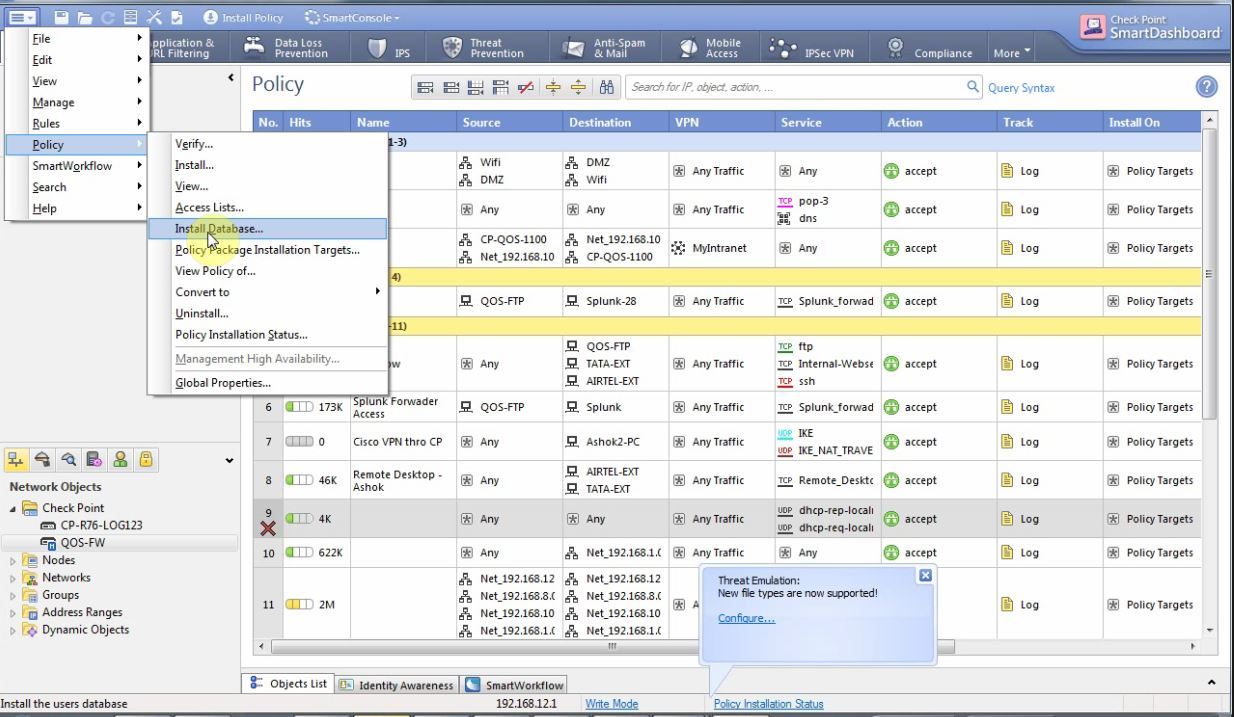

Then install the database: File → Policy → Install Database.

Then create a rule that opens ports 18210 and 18184 for the Splunk server, and install the policy.

- Install the following packages on the Linux server:

yum install glibc.i686
yum install pam.i686

- Next, configure the add-on in Splunk itself. After installing the OPSEC add-on, you should see its icon:

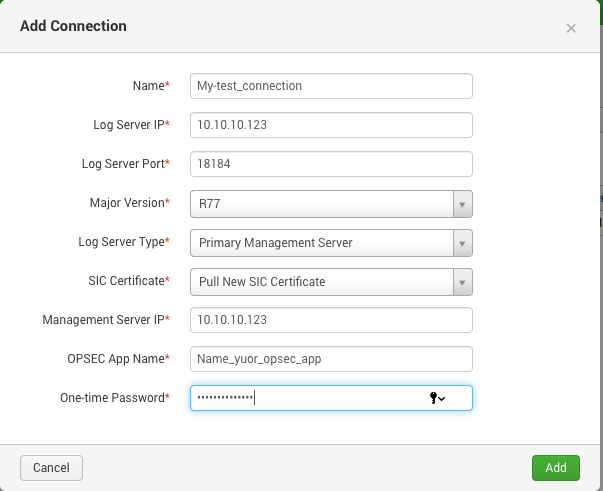

Open it, go to Configuration → Add Connection and enter your details (important: the application name must match the one you specified on the Check Point server, and so must the one-time password).

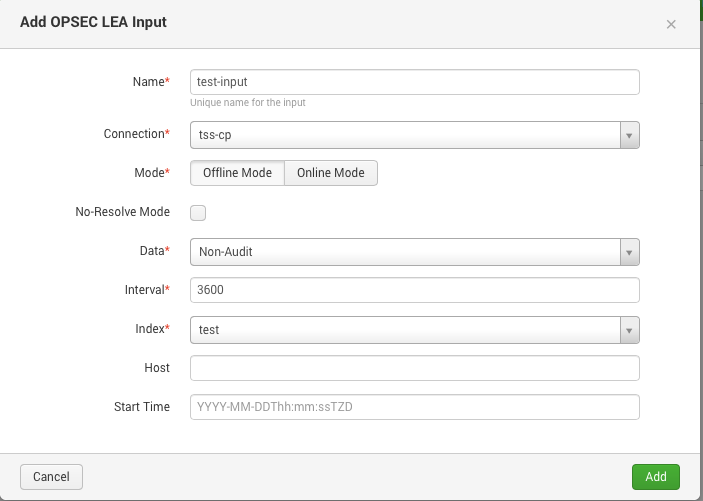

Next, go to the Inputs tab and select which logs to load (for completeness, select No Audit).

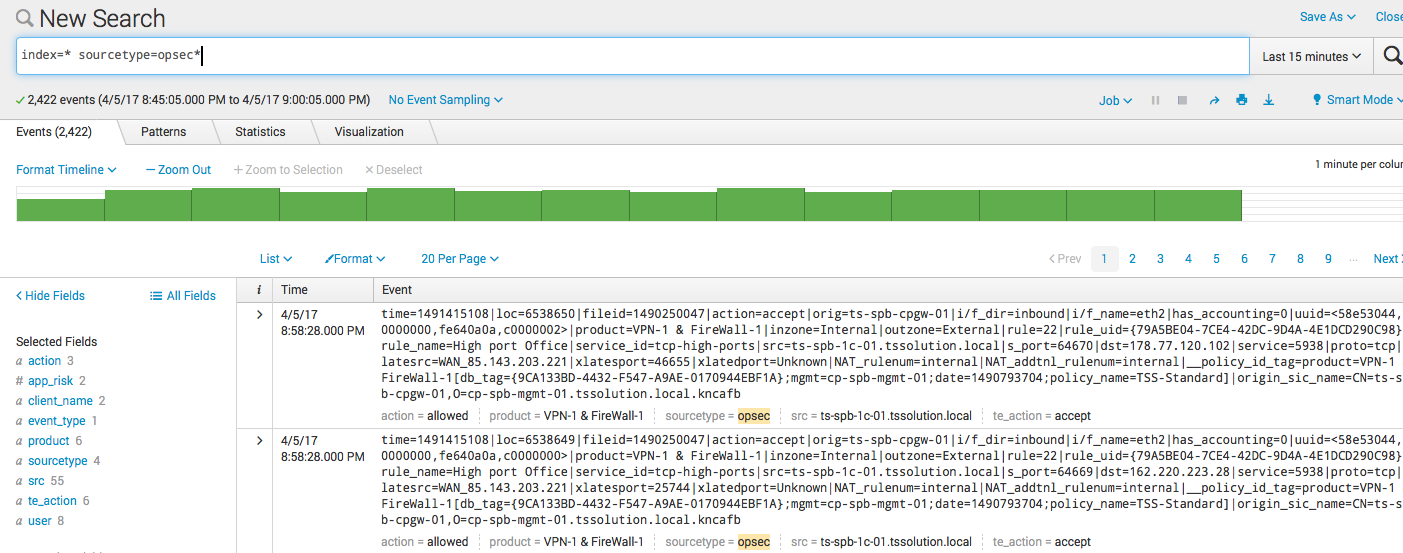

After that, wait a little and the logs will start arriving. Be warned: on the first day there will be a lot of them, because Splunk will pull in the logs for the past few weeks. As a result, you will get events that this search returns:

index=* sourcetype=opsec*
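If the search stays empty, one thing worth ruling out is the firewall rule from the Check Point setup step. Below is a minimal Python sketch, meant to be run on the Splunk server, that only tries to open plain TCP connections to the Management Server on ports 18210 and 18184. The script name and address in the usage line are hypothetical, and a successful connection shows only that the ports are reachable, not that the OPSEC application, SIC or LEA settings are correct.

```python
import socket
import sys

# Ports opened for the Splunk server in the setup steps:
# 18210 (SIC certificate pull) and 18184 (LEA log export).
OPSEC_PORTS = (18210, 18184)


def check_ports(host, ports=OPSEC_PORTS, timeout=3.0):
    """Return {port: True/False}: whether a plain TCP connection to host:port succeeds."""
    results = {}
    for port in ports:
        try:
            # create_connection raises OSError (refused, timed out, unreachable) on failure.
            with socket.create_connection((host, port), timeout=timeout):
                results[port] = True
        except OSError:
            results[port] = False
    return results


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical file name): python3 check_opsec_ports.py <management-server-ip>
    for port, ok in check_ports(sys.argv[1]).items():
        print(f"{sys.argv[1]}:{port} {'reachable' if ok else 'NOT reachable'}")
```

For example, `python3 check_opsec_ports.py 192.0.2.10`, where 192.0.2.10 is a placeholder for your Management Server's address.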

Filtering

If HTTPS Inspection is turned off and you have no sandbox, about 90% of the logs will be firewall rule logs, which are of little real interest. The most interesting logs come from blades such as Application Control, URL Filtering, Anti-Virus, Anti-Bot and, of course, IPS.

To stop Splunk from indexing firewall rule hits, create two text files, props.conf and transforms.conf, and put them in this folder:

/opt/splunk/etc/apps/Splunk_TA_checkpoint-opseclea/local

Contents of props.conf:

[opsec]
TRANSFORMS-security = events-filter

Contents of transforms.conf:

[events-filter]
REGEX=(.+product\=VPN\-1\s\&\sFireWall\-1)
DEST_KEY=queue
FORMAT=nullQueue

We will not describe the logic of these files in detail, as that would take quite a while. A detailed description of each is available in the Splunk documentation (props.conf / transforms.conf).

Important! For the changes to take effect, you need to restart Splunk on that server.
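Before restarting, you may want to see which raw events the [events-filter] transform would discard. The Python sketch below applies the same REGEX to a few hypothetical key=value lines; the sample events are made up, and while Splunk uses PCRE rather than Python's re module, this particular pattern should behave the same for ordinary ASCII log lines.

```python
import re

# Same pattern as REGEX in the [events-filter] stanza of transforms.conf.
FIREWALL_RULE_EVENT = re.compile(r"(.+product\=VPN\-1\s\&\sFireWall\-1)")


def goes_to_null_queue(raw_event: str) -> bool:
    """True if the pattern matches anywhere in the raw event, i.e. the event would be dropped."""
    return FIREWALL_RULE_EVENT.search(raw_event) is not None


# Hypothetical raw events in key=value form.
samples = [
    "time=1530000000 action=accept product=VPN-1 & FireWall-1 src=10.0.0.1",
    "time=1530000000 action=block product=Application Control appi_name=Tor",
    "product=VPN-1 & FireWall-1 action=accept src=10.0.0.1",
]

for raw in samples:
    print("DROP" if goes_to_null_queue(raw) else "KEEP", raw)
```

The third sample shows a quirk of the pattern: because it starts with `.+`, at least one character must precede `product=`, so an event that began with that field would be kept. This probably does not matter if other fields come first in your raw events, but it is worth keeping in mind when adapting the regex.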

Log visualization

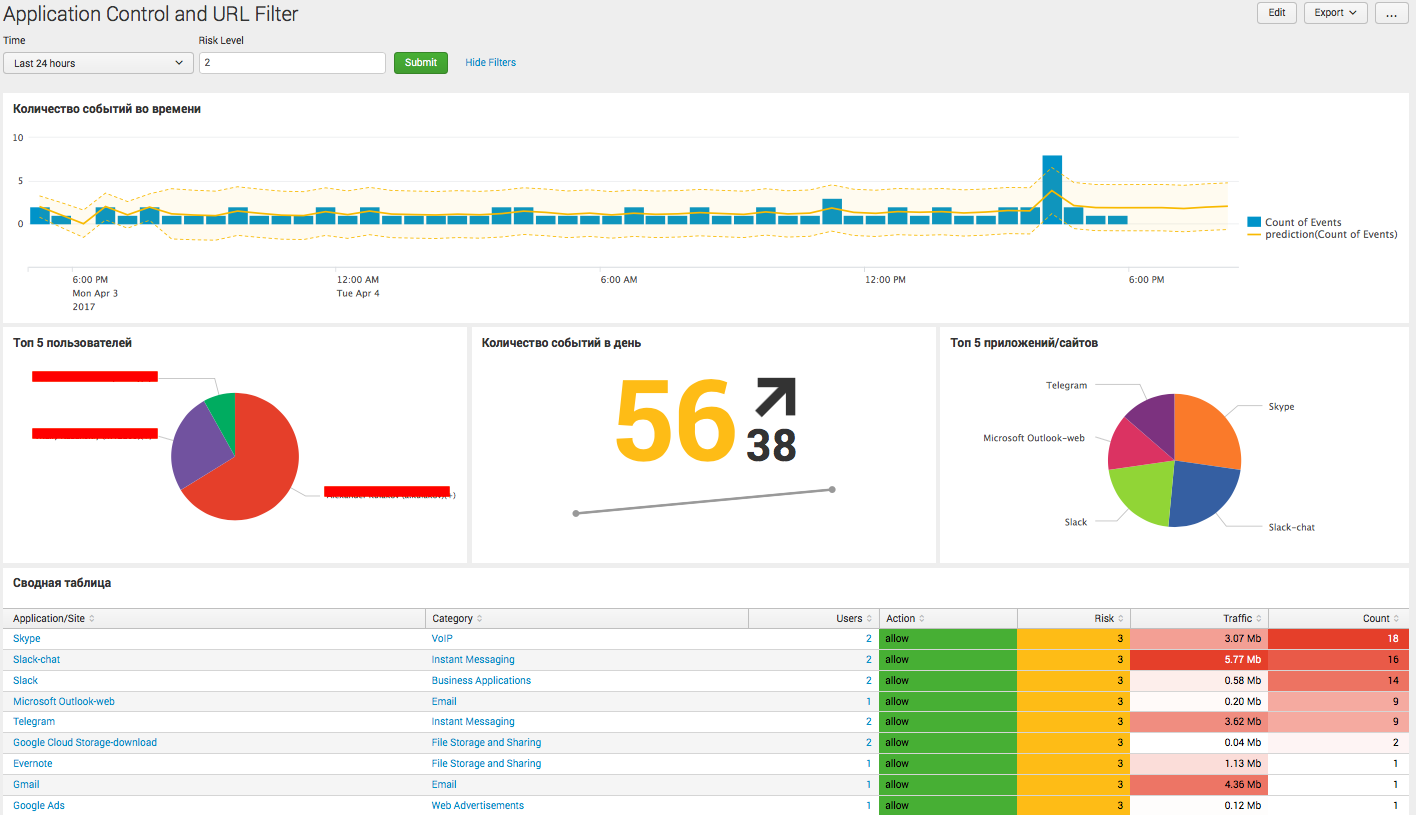

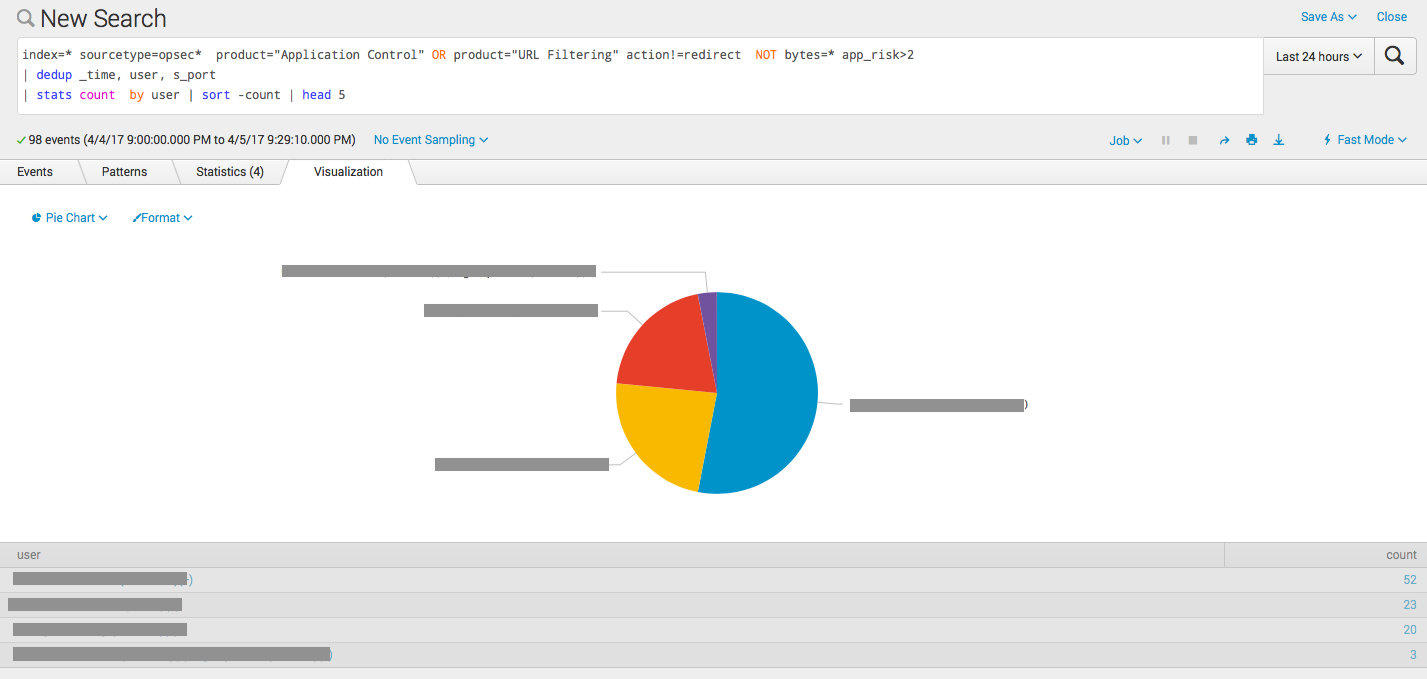

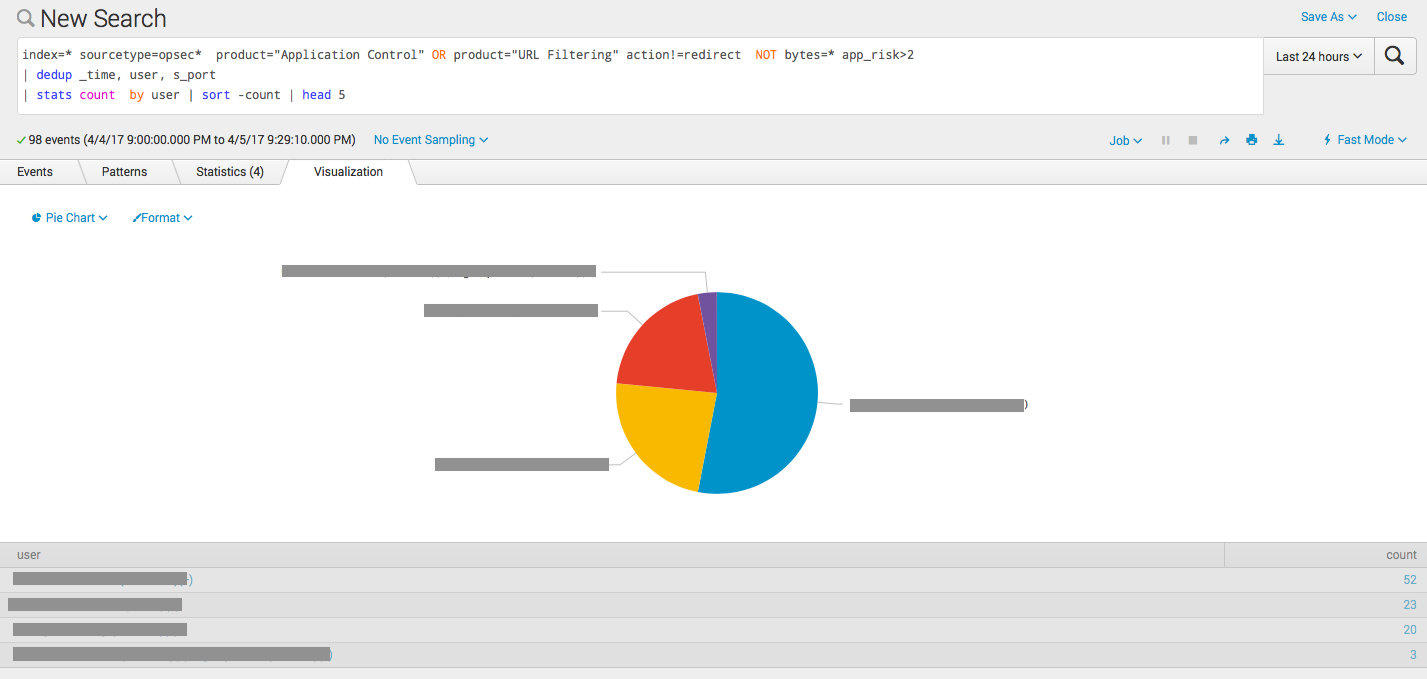

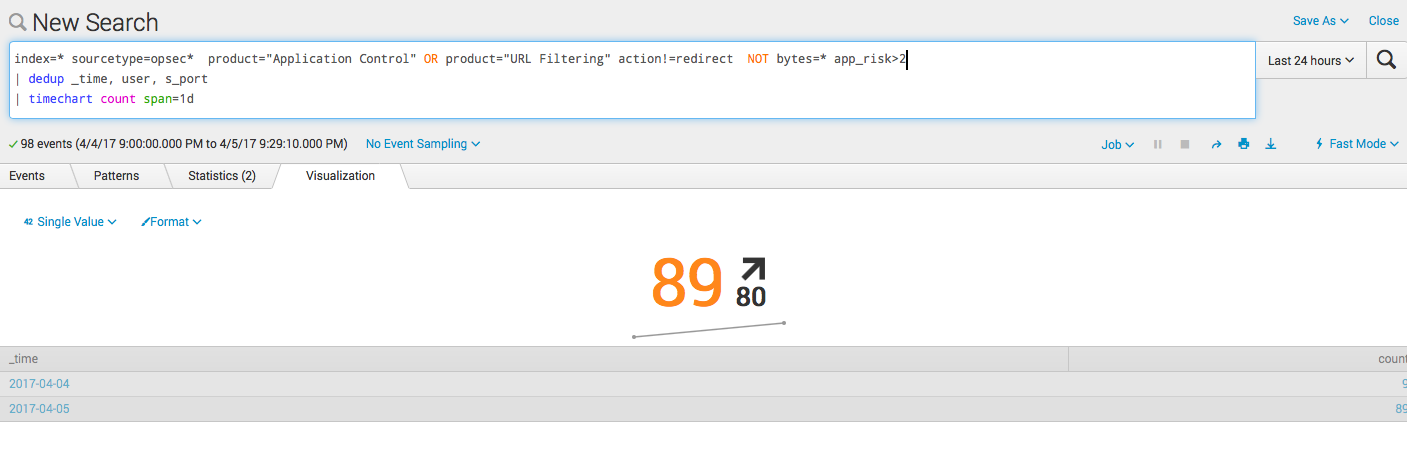

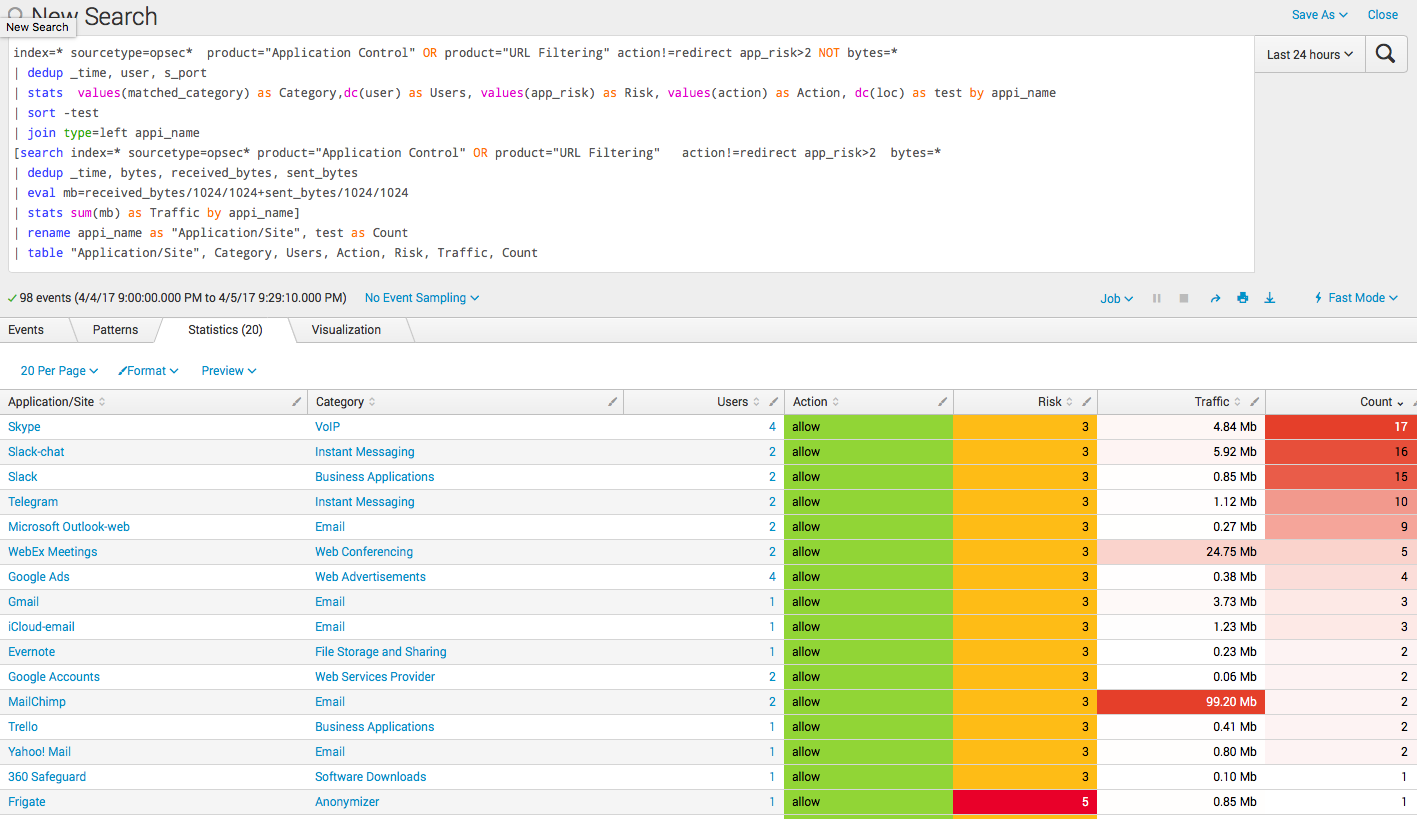

Above is one possible way to present Application Control and URL Filtering logs. Below are its individual panels along with their search queries.
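All of the panels follow the same pattern: filter the events, remove duplicates with dedup, then aggregate. As a rough mental model only (not how Splunk implements these commands), here is a small Python sketch of `dedup _time, user, s_port`, of `stats count by user | sort -count | head 5` and of `timechart count span=1d`. The field names come from the searches; the events and user names are hypothetical.

```python
from collections import Counter
from datetime import datetime, timezone


def dedup(events, fields):
    """Rough model of SPL `dedup f1, f2, ...`: keep the first event for each
    combination of values and skip events lacking any of the fields
    (Splunk's default, keepempty=false)."""
    seen = set()
    kept = []
    for event in events:
        if any(f not in event for f in fields):
            continue
        key = tuple(event[f] for f in fields)
        if key not in seen:
            seen.add(key)
            kept.append(event)
    return kept


def top(events, field, n=5):
    """Rough model of `stats count by <field> | sort -count | head <n>`."""
    counts = Counter(e[field] for e in events if field in e)
    return counts.most_common(n)


def per_day(events):
    """Rough model of `timechart count span=1d`, using UTC days and
    without filling in empty days."""
    counts = Counter(
        datetime.fromtimestamp(e["_time"], tz=timezone.utc).date().isoformat()
        for e in events
    )
    return sorted(counts.items())


# Hypothetical Application Control / URL Filtering events (epoch seconds in _time).
events = [
    {"_time": 1530000000, "user": "alice", "s_port": 50001, "appi_name": "Facebook"},
    {"_time": 1530000000, "user": "alice", "s_port": 50001, "appi_name": "Facebook"},  # same session logged twice
    {"_time": 1530000060, "user": "bob", "s_port": 50002, "appi_name": "Dropbox"},
    {"_time": 1530086400, "user": "alice", "s_port": 50003, "appi_name": "Dropbox"},
    {"_time": 1530086400, "s_port": 50004, "appi_name": "Tor"},  # no user field
]

deduped = dedup(events, ["_time", "user", "s_port"])
print(top(deduped, "user"))       # [('alice', 2), ('bob', 1)]
print(top(deduped, "appi_name"))  # [('Dropbox', 2), ('Facebook', 1)]
print(per_day(deduped))           # [('2018-06-26', 2), ('2018-06-27', 1)]
```

Note that the event without a user field disappears at the dedup step, so it is counted in none of the panels that dedup on user. Splunk may also align daily buckets to your configured time zone rather than UTC.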

Number of blade events over time

index=* sourcetype=opsec* (product="Application Control" OR product="URL Filtering") action!=redirect NOT bytes=* app_risk>2 | dedup _time, user, s_port, src, dst | timechart count as "Count of Events" | predict "Count of Events"

Top 5 users

index=* sourcetype=opsec* (product="Application Control" OR product="URL Filtering") action!=redirect NOT bytes=* app_risk>2 | dedup _time, user, s_port | stats count by user | sort -count | head 5

Number of events per day, with trend

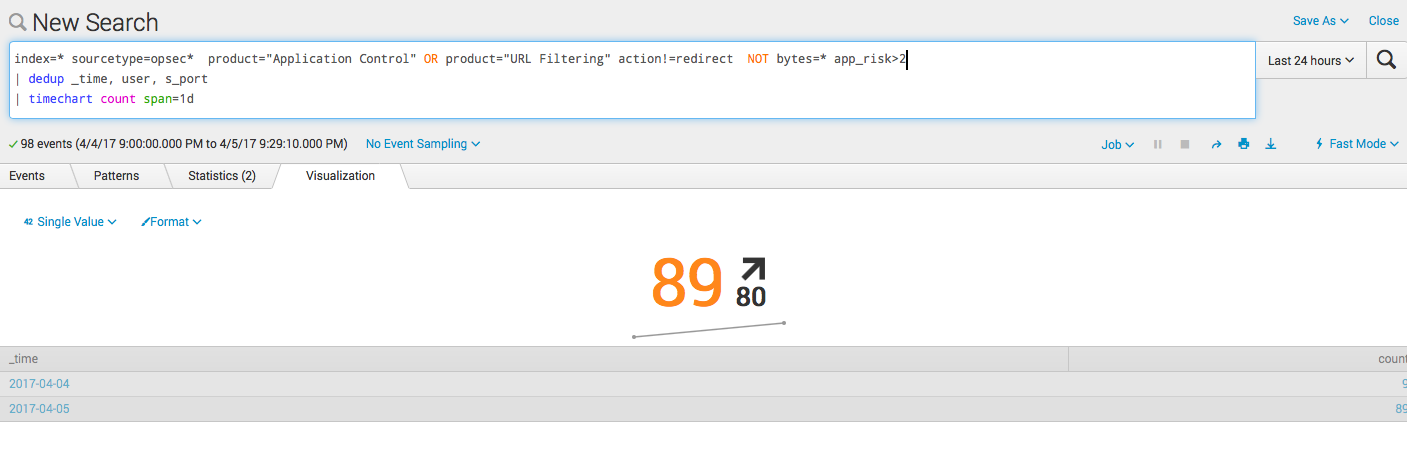

index=* sourcetype=opsec* (product="Application Control" OR product="URL Filtering") action!=redirect NOT bytes=* app_risk>2 | dedup _time, user, s_port | timechart count span=1d

Top 5 applications/sites

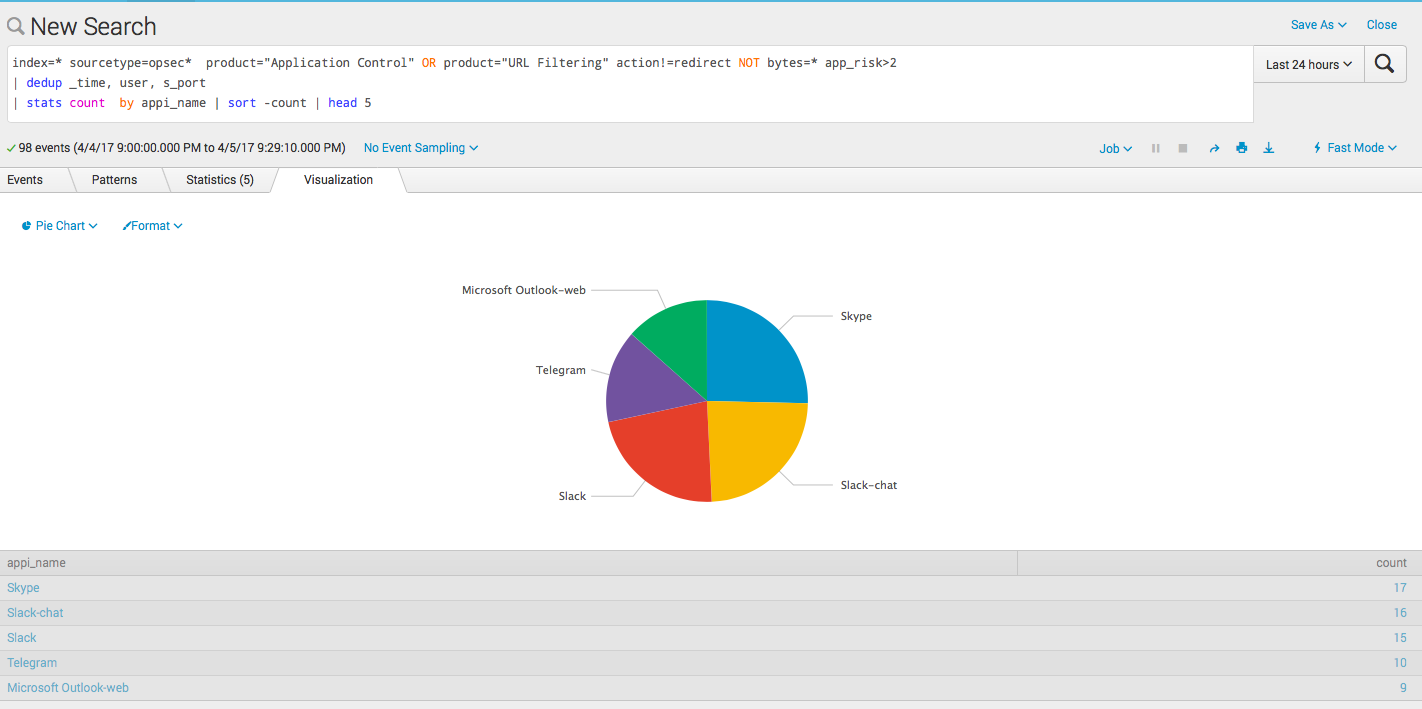

index=* sourcetype=opsec* (product="Application Control" OR product="URL Filtering") action!=redirect NOT bytes=* app_risk>2 | dedup _time, user, s_port | stats count by appi_name | sort -count | head 5

Summary table

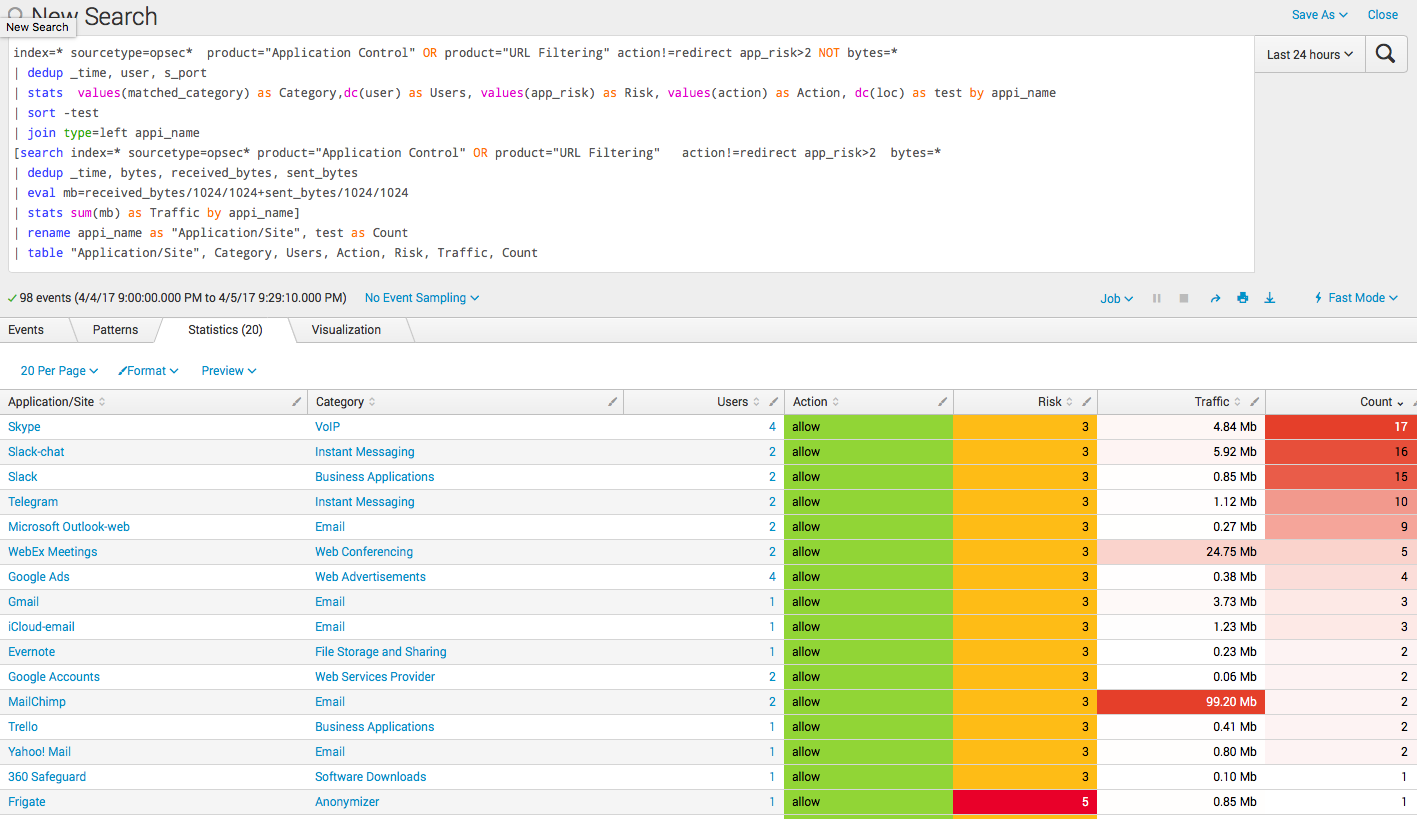

index=* sourcetype=opsec* (product="Application Control" OR product="URL Filtering") action!=redirect app_risk>2 NOT bytes=* | dedup _time, user, s_port | stats values(matched_category) as Category, dc(user) as Users, values(app_risk) as Risk, values(action) as Action, dc(loc) as test by appi_name | sort -test | join type=left appi_name [search index=* sourcetype=opsec* (product="Application Control" OR product="URL Filtering") action!=redirect app_risk>2 bytes=* | dedup _time, bytes, received_bytes, sent_bytes | eval mb=received_bytes/1024/1024+sent_bytes/1024/1024 | stats sum(mb) as Traffic by appi_name] | rename appi_name as "Application/Site", test as Count | table "Application/Site", Category, Users, Action, Risk, Traffic, Count

Findings

This example shows how Splunk can be used to analyze Check Point logs. It covers only two software blades, but it gives a clear idea of what the system can do. We also did not cover alerts or running scripts based on search results, although both are of course possible.
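Returning briefly to the summary table: its Traffic column comes from the subsearch, which takes the events that do have bytes fields, converts received_bytes and sent_bytes to megabytes, sums them per application and attaches the result with a left join. A rough Python equivalent on hypothetical data follows; as with `join type=left`, every row of the main result is kept, and an application with no traffic events gets an empty Traffic value (None here).

```python
from collections import defaultdict


def traffic_mb(events):
    """Rough model of
    `eval mb=received_bytes/1024/1024+sent_bytes/1024/1024 | stats sum(mb) as Traffic by appi_name`.
    Events missing any of the fields are skipped, much as a null mb is ignored by sum()."""
    totals = defaultdict(float)
    for e in events:
        if all(k in e for k in ("appi_name", "received_bytes", "sent_bytes")):
            totals[e["appi_name"]] += e["received_bytes"] / 1024 / 1024 + e["sent_bytes"] / 1024 / 1024
    return dict(totals)


def left_join(rows, traffic, key="appi_name"):
    """Rough model of `join type=left appi_name [...]`: every summary row is kept,
    and rows without a match get no traffic value (None here)."""
    return [{**row, "Traffic": traffic.get(row[key])} for row in rows]


# Hypothetical session events that carry byte counters.
sessions = [
    {"appi_name": "Dropbox", "received_bytes": 3 * 1024 * 1024, "sent_bytes": 1024 * 1024},
    {"appi_name": "Dropbox", "received_bytes": 512 * 1024, "sent_bytes": 512 * 1024},
    {"appi_name": "Facebook", "received_bytes": 1024 * 1024, "sent_bytes": 0},
]
# Hypothetical output of the main search's stats command.
summary = [
    {"appi_name": "Dropbox", "Users": 2},
    {"appi_name": "Facebook", "Users": 1},
    {"appi_name": "Tor", "Users": 1},
]

for row in left_join(summary, traffic_mb(sessions)):
    print(row)
```

Here Dropbox ends up with 5.0 MB, Facebook with 1.0 MB, and Tor keeps its row with no traffic.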

In addition to Check Point logs, you can load logs from other systems, such as RSA Authentication, into the same Splunk instance and correlate them, but that leads into SIEM territory and is a topic for a separate conversation.

PS

On June 28, 2018, the “Splunk Getting Started” course will be held in Moscow, where in 6 hours participants will get a theoretical foundation and practical skills for working with Splunk. Learn more about the course and register at this link.

Source: https://habr.com/ru/post/325170/
