Frontera: crawl web framework architecture and current issues

Hello everyone, I am developing Frontera , the first ever framework for large-scale crawling of the Internet made in Python, with open source. With the help of Frontera, you can easily make a robot that can download content at a speed of thousands of pages per second, while following your crawling strategy and using a regular relational database or KV storage to store the link database and queue.

Development Frontera is funded by Scrapinghub Ltd., has a fully open source code (located on GitHub, BSD 3-clause license) and a modular architecture. We are trying to ensure that the development process is also as transparent and open as possible.

In this article I am going to talk about the problems that we have encountered in the development of Frontera and the operation of robots based on it.

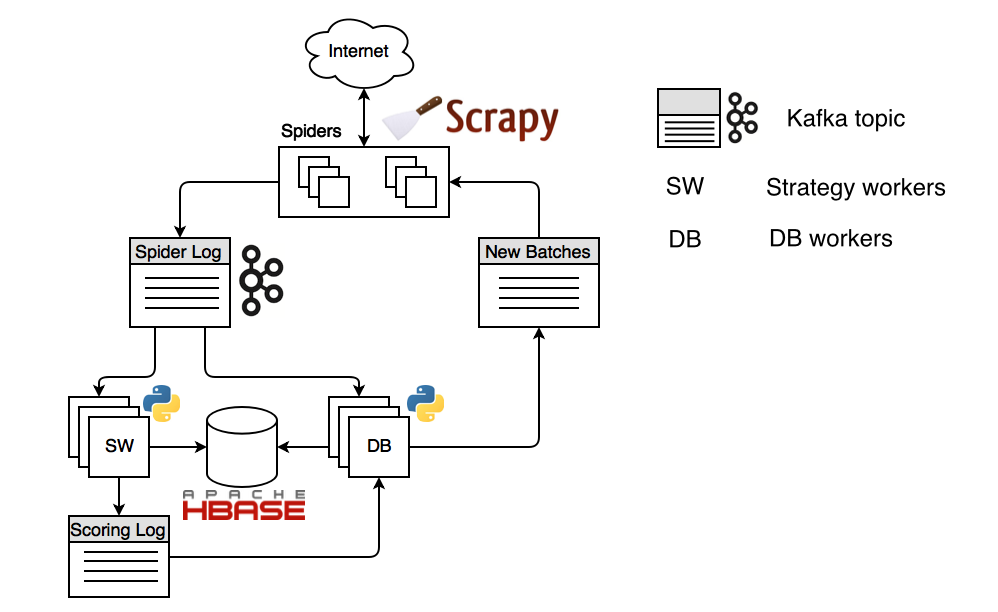

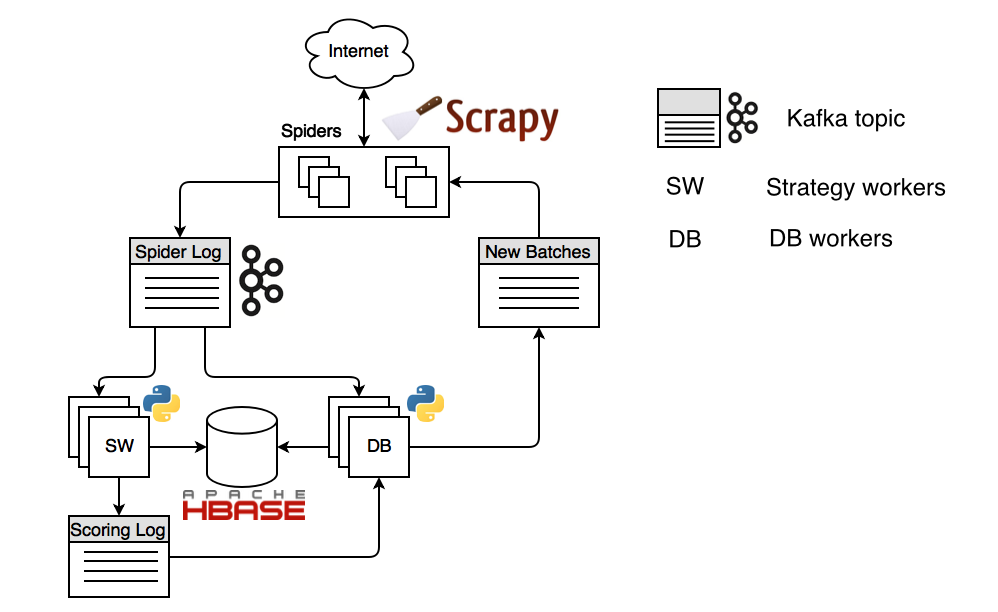

The device of the distributed robot based on Frontera looks like this:

')

The picture shows the configuration with the Apache HBase storage and the Apache Kafka data bus. The process starts from the workaround strategy (SW) worker, which schedules URLs from which to start the workaround. These URLs are “scoring log”, the topic of the Kafka (a dedicated message channel), are consumed by the database worker and they are also planned in the topic “new batches”, from where they come to the spiders on the basis of Scrapy. Scrapy Spider resolves a DNS name, maintains a pool of open connections, receives content and sends it to the topic “spider log”. From where the content is consumed again by the worker of the strategy and depending on the logic encoded in the bypass strategy, he plans new URLs.

If anyone is interested, here is a video of my report on how we circumvented the Spanish Internet with the help of Frontera .

At the moment we are faced with the fact that people who are trying to deploy a robot in their very difficult configuration of distributed components. Unfortunately, the deployment of Frontera in a cluster implies an understanding of its architecture, reading the documentation, proper configuration of the data bus (message bus) and storage for it. All this is very time consuming and not at all for beginners. Therefore, we want to automate the deployment on a cluster. We chose Kubernetes and Docker to achieve these goals. A big plus of Kubernetes is that it is supported by many cloud providers (AWS, Rackspace, GCE, etc.). with the help of it, it will be possible to deploy the Frontera even without having a Kubernetes-configured cluster.

Another problem is that it is like the Frontera water system of a nuclear power plant. It has consumers and manufacturers. It is very important for them to control characteristics such as flow rate and performance in different parts of the system. Here it should be recalled that Frontera is an online system. Classic robots ( Apache Nutch ), on the contrary, work in batch mode: first, a portion is scheduled, then downloaded, then parsed and re-scheduled. Simultaneous parsing and scheduling are not provided for in such systems. Thus, we have the problem of synchronization of the speed of work of different components. It is quite difficult to design a robot that bypasses pages with constant performance with a large number of threads, while keeping them in storage and planning new ones. The speed of the response of web servers, the size and number of pages on the site, the number of links vary; all this makes it impossible to precisely fit the components in performance. As a solution to this problem, we want to make a web interface based on Django. It will display the main parameters and if you can tell what steps need to be taken by the operator of the robot.

I already mentioned modular architecture. Like any open source project, we are committed to a variety of technologies that we support. Therefore, now in Pull Requests we are preparing support for distributed Redis, there have already been two attempts to make support for Apache Cassandra, well, we would like to support RabbitMQ as a data bus.

We hope to solve all these problems at least partially within the framework of Google Summer Of Code 2017. If something seems interesting to you and you are a student, let us know at frontera@scrapinghub.com and submit an application through the GSoC form . Submission of applications starts from March 20 and will last until April 3. The full schedule of GSoC 2017 is here .

Of course, there are also performance problems. At the moment, in Scrapinghub, in the pilot mode, there is a service for massive download of pages based on Frontera. The client provides us with a set of domains to which he would like to receive content, and we download them and put them in the S3 repository. Payment for the downloaded volume. In such conditions, we try to download as many pages as possible per unit of time. When trying to scale the Frontera to 60 spiders, we pushed so that HBase (through the Thrift interface) degrades when we write to it all the links found. We call this data reference base, and we need it in order to know when and what we downloaded, what answer we received, etc. On a cluster of 7 server regions, we have a number of requests up to 40-50K per second, and with such volumes the response time greatly increases. Write performance drops dramatically. Such a problem can be solved in different ways: for example, save to a separate fast log, and later write it to HBase using packet methods, or contact HBase directly bypassing Thrift via your own Java client.

We are also constantly working to improve reliability. Too large a downloaded document or sudden network problems should not cause components to stop. However, this sometimes happens. To diagnose such cases, we have made the SIGUSR1 OS signal handler, which saves the current process stack to the log. Several times this helped us a lot to understand what the problem was.

We plan to further improve the Frontera, and we hope that the community of active developers will grow.

Development Frontera is funded by Scrapinghub Ltd., has a fully open source code (located on GitHub, BSD 3-clause license) and a modular architecture. We are trying to ensure that the development process is also as transparent and open as possible.

In this article I am going to talk about the problems that we have encountered in the development of Frontera and the operation of robots based on it.

The device of the distributed robot based on Frontera looks like this:

')

The picture shows the configuration with the Apache HBase storage and the Apache Kafka data bus. The process starts from the workaround strategy (SW) worker, which schedules URLs from which to start the workaround. These URLs are “scoring log”, the topic of the Kafka (a dedicated message channel), are consumed by the database worker and they are also planned in the topic “new batches”, from where they come to the spiders on the basis of Scrapy. Scrapy Spider resolves a DNS name, maintains a pool of open connections, receives content and sends it to the topic “spider log”. From where the content is consumed again by the worker of the strategy and depending on the logic encoded in the bypass strategy, he plans new URLs.

If anyone is interested, here is a video of my report on how we circumvented the Spanish Internet with the help of Frontera .

At the moment we are faced with the fact that people who are trying to deploy a robot in their very difficult configuration of distributed components. Unfortunately, the deployment of Frontera in a cluster implies an understanding of its architecture, reading the documentation, proper configuration of the data bus (message bus) and storage for it. All this is very time consuming and not at all for beginners. Therefore, we want to automate the deployment on a cluster. We chose Kubernetes and Docker to achieve these goals. A big plus of Kubernetes is that it is supported by many cloud providers (AWS, Rackspace, GCE, etc.). with the help of it, it will be possible to deploy the Frontera even without having a Kubernetes-configured cluster.

Another problem is that it is like the Frontera water system of a nuclear power plant. It has consumers and manufacturers. It is very important for them to control characteristics such as flow rate and performance in different parts of the system. Here it should be recalled that Frontera is an online system. Classic robots ( Apache Nutch ), on the contrary, work in batch mode: first, a portion is scheduled, then downloaded, then parsed and re-scheduled. Simultaneous parsing and scheduling are not provided for in such systems. Thus, we have the problem of synchronization of the speed of work of different components. It is quite difficult to design a robot that bypasses pages with constant performance with a large number of threads, while keeping them in storage and planning new ones. The speed of the response of web servers, the size and number of pages on the site, the number of links vary; all this makes it impossible to precisely fit the components in performance. As a solution to this problem, we want to make a web interface based on Django. It will display the main parameters and if you can tell what steps need to be taken by the operator of the robot.

I already mentioned modular architecture. Like any open source project, we are committed to a variety of technologies that we support. Therefore, now in Pull Requests we are preparing support for distributed Redis, there have already been two attempts to make support for Apache Cassandra, well, we would like to support RabbitMQ as a data bus.

We hope to solve all these problems at least partially within the framework of Google Summer Of Code 2017. If something seems interesting to you and you are a student, let us know at frontera@scrapinghub.com and submit an application through the GSoC form . Submission of applications starts from March 20 and will last until April 3. The full schedule of GSoC 2017 is here .

Of course, there are also performance problems. At the moment, in Scrapinghub, in the pilot mode, there is a service for massive download of pages based on Frontera. The client provides us with a set of domains to which he would like to receive content, and we download them and put them in the S3 repository. Payment for the downloaded volume. In such conditions, we try to download as many pages as possible per unit of time. When trying to scale the Frontera to 60 spiders, we pushed so that HBase (through the Thrift interface) degrades when we write to it all the links found. We call this data reference base, and we need it in order to know when and what we downloaded, what answer we received, etc. On a cluster of 7 server regions, we have a number of requests up to 40-50K per second, and with such volumes the response time greatly increases. Write performance drops dramatically. Such a problem can be solved in different ways: for example, save to a separate fast log, and later write it to HBase using packet methods, or contact HBase directly bypassing Thrift via your own Java client.

We are also constantly working to improve reliability. Too large a downloaded document or sudden network problems should not cause components to stop. However, this sometimes happens. To diagnose such cases, we have made the SIGUSR1 OS signal handler, which saves the current process stack to the log. Several times this helped us a lot to understand what the problem was.

We plan to further improve the Frontera, and we hope that the community of active developers will grow.

Source: https://habr.com/ru/post/325154/

All Articles