Running VMware ESXi 6.5 under the QEMU hypervisor

There is a wonderful hypervisor in the world called VMware ESXi, and everything about it is fine except that its hardware requirements are far from modest. ESXi fundamentally does not support software RAID, 100-megabit network cards, or cheap gigabit ones, so the only way to find out what it is like to work with is to buy suitable hardware.

However, the most “delicious” features of ESXi show up when we have more than one ESXi host: clustering, live migration, vSAN distributed storage, a distributed virtual switch, and so on. In that case the cost of test equipment can already add up to a decent amount. Fortunately, ESXi supports nested virtualization, that is, the ability to run under an already running hypervisor. In this setup the outer hypervisor understands that its guest needs access to hardware virtualization, and ESXi knows that it is not running on bare metal. As a rule, ESXi itself is also used as the outer hypervisor; VMware has supported this configuration for quite some time. We will try to run ESXi under the QEMU hypervisor. There are guides on the Internet on this subject, but, as we will see below, they are slightly out of date.

To begin with, let's note the version of QEMU used in these experiments:

user@debian-pc:~$ qemu-system-x86_64 -version
QEMU emulator version 2.8.0(Debian 1:2.8+dfsg-2)
Copyright (c) 2003-2016 Fabrice Bellard and the QEMU Project developers

This is the latest version at the time of writing, but the trick worked for me even on 2.4.0.

Next, turn off the impolite behavior of the KVM module when the guest tries to read model-specific registers (MSRs) that are not actually present. By default, KVM responds by raising a General Protection Fault exception inside the guest, which sends the guest to the blue (in our case, pink) screen of death. Do this as root:


root@debian-pc:/> echo 1 > /sys/module/kvm/parameters/ignore_msrs

In some distributions the kvm module is loaded with the necessary parameters by default, in others it is not. In any case, check dmesg for the following lines:

user@debian-pc:~$ dmesg | grep kvm
[ 6.266942] kvm: Nested Virtualization enabled
[ 6.266947] kvm: Nested Paging enabled

If these lines are missing, add the following line to /etc/modprobe.d/kvm.conf

options kvm-amd npt=1 nested=1

and reboot. For an Intel processor, the line looks like this:

options kvm-intel ept=1 nested=1

Interestingly, only kvm-amd reports enabled Nested Paging / Nested Virtualization in dmesg; kvm-intel does not.
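Since kvm-intel stays silent in dmesg, the same state can also be read from sysfs. The following is a minimal sketch, not part of the original setup: the function name `kvm_nested_state` and its optional directory argument are hypothetical, and it assumes the kernel exposes the `nested` parameter under `/sys/module/kvm_intel` or `/sys/module/kvm_amd`, with `Y` or `1` meaning enabled.

```shell
# Report whether nested virtualization is enabled for the loaded KVM vendor module.
# $1 (optional, hypothetical helper argument): directory to use instead of /sys/module.
kvm_nested_state() {
    root="${1:-/sys/module}"
    for mod in kvm_intel kvm_amd; do
        param="$root/$mod/parameters/nested"
        if [ -r "$param" ]; then
            # Depending on the module and kernel version the value is Y/N or 1/0.
            case "$(cat "$param")" in
                Y|y|1) echo "$mod: nested=on" ;;
                *)     echo "$mod: nested=off" ;;
            esac
            return 0
        fi
    done
    echo "no kvm_intel or kvm_amd module loaded"
    return 1
}
```

Calling `kvm_nested_state` without arguments on the host prints one line for whichever vendor module is loaded.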

Let's try to solve the problem head-on: go to the VMware website, register there, and download the latest image, VMware-VMvisor-Installer-201701001-4887370.x86_64.iso.

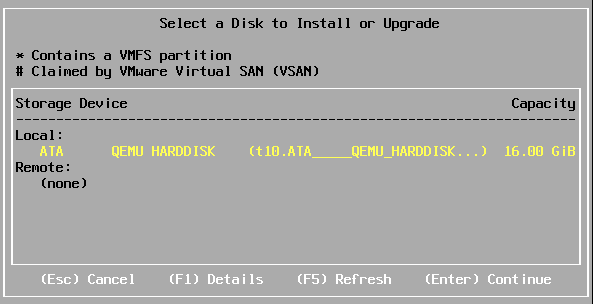

We will not overthink it: we create a 16 GB stand-in for a “flash drive”, take the e1000 network card that is sure to be supported, give the VM 4 GB of RAM (with less memory ESXi will not start) and launch it, assuming that in this configuration ESXi will at least not see the IDE disk:

user@debian-pc:~$ qemu-img create -f qcow2 -o nocow=on /media/storage/VMs/esxi_6.5-1.qcow2 16G
Formatting '/media/storage/VMs/esxi_6.5-1.qcow2', fmt=qcow2 size=17179869184 encryption=off cluster_size=65536 lazy_refcounts=off refcount_bits=16 nocow=on

user@debian-pc:~$ qemu-system-x86_64 --enable-kvm -cpu host -smp 2 -m 4096 -hda /media/storage/VMs/esxi_6.5-1.qcow2 -cdrom /media/storage/iso/VMware-VMvisor-Installer-201701001-4887370.x86_64.iso -netdev user,id=hostnet0 -device e1000,netdev=hostnet0,id=net0

And here the first surprise awaits us: ESXi not only detects our IDE disk but also installs onto it successfully, although the installation hangs at 27% for about five minutes:

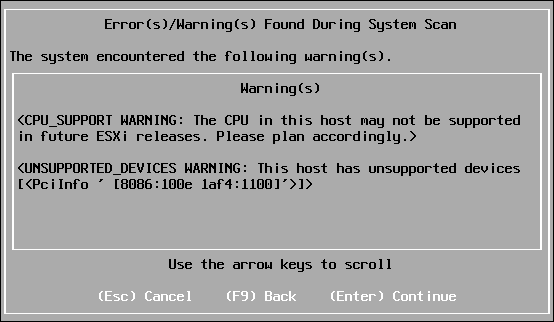

By the way, before starting the installation, I get this message:

Well, the processor part is clear: I used the -cpu host option, which copies the host processor's CPUID into the guest, and my host processor is an AMD A8-3850 APU for the long-dead FM1 socket. It is strange that ESXi installs on such hardware at all.

But 8086:100e is the identifier of the “Intel 82540EM Gigabit Ethernet Controller” chip, which was declared unsupported some time ago, i.e. it works, but technical support will not deal with it.

In general, QEMU supports emulation of various network cards:

user@debian-pc:~$ qemu-system-x86_64 -device help
< >
Network devices:
name "e1000", bus PCI, alias "e1000-82540em", desc "Intel Gigabit Ethernet"
name "e1000-82544gc", bus PCI, desc "Intel Gigabit Ethernet"
name "e1000-82545em", bus PCI, desc "Intel Gigabit Ethernet"
name "e1000e", bus PCI, desc "Intel 82574L GbE Controller"
name "i82550", bus PCI, desc "Intel i82550 Ethernet"
name "i82551", bus PCI, desc "Intel i82551 Ethernet"
name "i82557a", bus PCI, desc "Intel i82557A Ethernet"
name "i82557b", bus PCI, desc "Intel i82557B Ethernet"
name "i82557c", bus PCI, desc "Intel i82557C Ethernet"
name "i82558a", bus PCI, desc "Intel i82558A Ethernet"
name "i82558b", bus PCI, desc "Intel i82558B Ethernet"
name "i82559a", bus PCI, desc "Intel i82559A Ethernet"
name "i82559b", bus PCI, desc "Intel i82559B Ethernet"
name "i82559c", bus PCI, desc "Intel i82559C Ethernet"
name "i82559er", bus PCI, desc "Intel i82559ER Ethernet"
name "i82562", bus PCI, desc "Intel i82562 Ethernet"
name "i82801", bus PCI, desc "Intel i82801 Ethernet"
name "ne2k_isa", bus ISA
name "ne2k_pci", bus PCI
name "pcnet", bus PCI
name "rocker", bus PCI, desc "Rocker Switch"
name "rtl8139", bus PCI
name "usb-bt-dongle", bus usb-bus
name "usb-net", bus usb-bus
name "virtio-net-device", bus virtio-bus
name "virtio-net-pci", bus PCI, alias "virtio-net"
name "vmxnet3", bus PCI, desc "VMWare Paravirtualized Ethernet v3"
< >

but not all of them work equally well in ESXi. For example, with the formally supported e1000e, port forwarding does not work in user-mode networking, and with vmxnet3 half of the packets are lost. So we will stick with e1000.

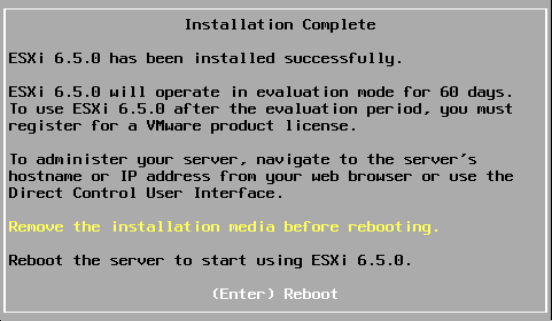

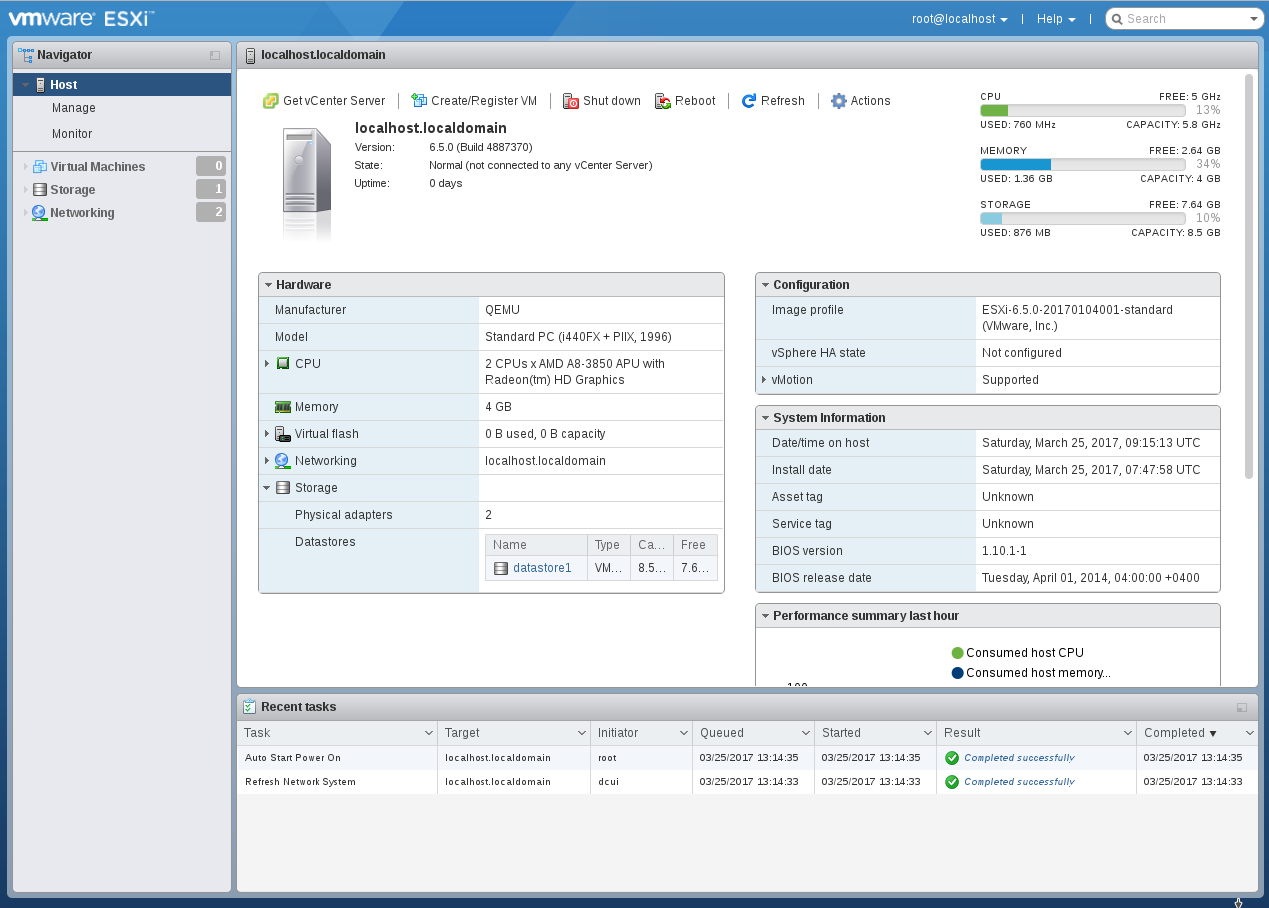

Reboot the VM and see that the hypervisor has started successfully. That is really all there is to it: patching QEMU for ESXi, as some guides recommend, is not necessary.

Note that I use the nocow=on parameter when creating the disk, because the VM disk will live on btrfs, which is itself a copy-on-write file system. Add to this the fact that a thin-provisioned qcow2 disk also implements this principle, and we get a multiple increase in the number of writes to the disk. The nocow=on parameter makes qemu-img create the file with the nocow attribute and thus disables the btrfs copy-on-write mechanism for that specific file.
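On a file system other than btrfs the nocow option has no effect, so a script that creates images in different places may want to add it only where it matters. Here is a minimal sketch under that assumption; the helper name `qcow2_create_opts` is hypothetical, and the commented usage relies on GNU `stat -f -c %T`, which prints the file system type.

```shell
# Print extra qemu-img create options for a given file system type.
# Hypothetical helper: only btrfs gets nocow=on, everything else gets nothing.
qcow2_create_opts() {
    case "$1" in
        btrfs) echo "-o nocow=on" ;;
        *)     echo "" ;;
    esac
}

# Usage sketch (not executed here; the path is the one used in this article):
#   fs=$(stat -f -c %T /media/storage/VMs)
#   qemu-img create -f qcow2 $(qcow2_create_opts "$fs") /media/storage/VMs/esxi_6.5-1.qcow2 16G
```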

In user network mode QEMU runs a lightweight built-in DHCP server for the VM, so you do not need to assign an address, but you do have to forward ports. Go to the QEMU console by pressing Ctrl+Alt+1 and enter the command

> hostfwd_add tcp::4443-:443

which forwards port 443 of the virtual machine's network interface to port 4443 of the host. Then type in the browser

https://localhost:4443/ui

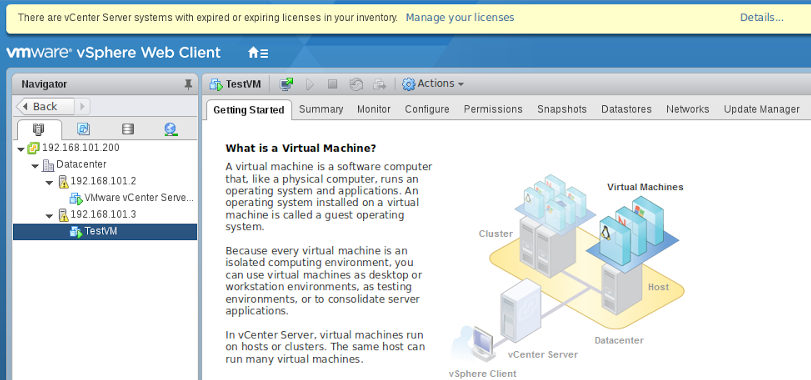

confirm the security exception (ESXi, of course, still uses a self-signed certificate for HTTPS) and see the hypervisor web interface:

Surprisingly, the ESXi installer even created a “datastore” of as much as 8 GB in the free disk space. First of all, let's install the package with the updated web interface, because this very useful component is developed faster than new versions of ESXi are released. Go to the QEMU console via Ctrl+Alt+1 and forward port 22 there:

> hostfwd_add tcp::2222-:22

then switch to the hypervisor console via Ctrl+Alt+2, press F2 - Troubleshooting Options - Enable SSH, and connect with an SSH client:

user@debian-pc:~$ ssh root@127.0.0.1 -p 2222
Password:
The time and date of this login have been sent to the system logs.
VMware offers supported, powerful system administration tools.
Please see www.vmware.com/go/sysadmintools for details.
The ESXi Shell can be disabled by an administrative user.
See the vSphere Security documentation for more information.

Go to the temporary directory

[root@localhost:~] cd /tmp

Download the update

[root@localhost:/tmp] wget http://download3.vmware.com/software/vmw-tools/esxui/esxui-offline-bundle-6.x-5214684.zip
Connecting to download3.vmware.com (172.227.88.162:80)
esxui-offline-bundle 100% |************************************************************************************| 3398k 0:00:00 ETA

and install it

[root@localhost:/tmp] esxcli software vib install -d /tmp/esxui-offline-bundle-6.x-5214684.zip
Installation Result
Message: Operation finished successfully.
Reboot Required: false
VIBs Installed: VMware_bootbank_esx-ui_1.17.0-5214684
VIBs Removed: VMware_bootbank_esx-ui_1.8.0-4516221
VIBs Skipped:

As you can see, the web interface is just over three megabytes in size.
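The two port forwards entered in the QEMU monitor are lost when the VM is restarted. As an alternative, QEMU accepts the same rules as `hostfwd=` options of `-netdev user`. The helper below is only a sketch that assembles such an argument; the name `user_netdev_arg` and the `HOSTPORT:GUESTPORT` pair notation are assumptions made for illustration, and only TCP rules are handled.

```shell
# Build the value for "-netdev" in user mode with TCP port forwards.
# $1: netdev id; remaining arguments: HOSTPORT:GUESTPORT pairs (hypothetical notation).
user_netdev_arg() {
    arg="user,id=$1"
    shift
    for pair in "$@"; do
        host_port="${pair%%:*}"   # text before the colon
        guest_port="${pair##*:}"  # text after the colon
        arg="$arg,hostfwd=tcp::${host_port}-:${guest_port}"
    done
    echo "$arg"
}

# Usage sketch (not executed here):
#   qemu-system-x86_64 ... -netdev "$(user_netdev_arg hostnet0 4443:443 2222:22)" \
#       -device e1000,netdev=hostnet0,id=net0
```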

Now let's try to improve our virtual machine. First of all, we change the disk controller from IDE to AHCI, because the QEMU implementation of the 1996-vintage PIIX3 controller is, to put it mildly, rather slow. The AHCI controller (the Intel ICH9 chipset is emulated) is, first, faster and, second, supports NCQ command queuing.

user@debian-pc:~$ qemu-system-x86_64 --enable-kvm -cpu host -smp 2 -m 4096 -device ich9-ahci,id=ahci -drive file=/media/storage/VMs/esxi_6.5-1.qcow2,if=none,id=drive0 -device ide-drive,drive=drive0,bus=ahci.0 -netdev user,id=hostnet0 -device e1000,netdev=hostnet0,id=net0

Even from the shorter load time of the hypervisor components we can see that we have gained speed. To celebrate, we go to the web interface and... what do you mean, no disks? The “Adapters” tab shows an AHCI controller, but no disks are detected on it. So how did the hypervisor boot? Very simple: at the initial stage the boot loader reads data from the disk through the BIOS and does not need to see the disks directly. Once the components are loaded into memory, the loader hands control over to them, and the hypervisor initializes without touching the disk.

Anyway, ESXi 6.5 does not see disks on the AHCI controller, although ESXi 6.0 did see them, I swear. With the help of Google and some strong language we find the reason: in ESXi 6.5 the old ahci driver was replaced with the completely rewritten vmw_ahci driver, which is why SSDs are slow for many people and why we have no disks. Following the advice from the article, we run on the hypervisor

[root@localhost:~] esxcli system module set --enabled=false --module=vmw_ahci

reboot, and... nothing happens. What did we expect? There are no disks, so there is nowhere to write the configuration, and our changes were not saved. We have to go back to the IDE disk, run this command, and only then boot from AHCI; then the disks are detected.

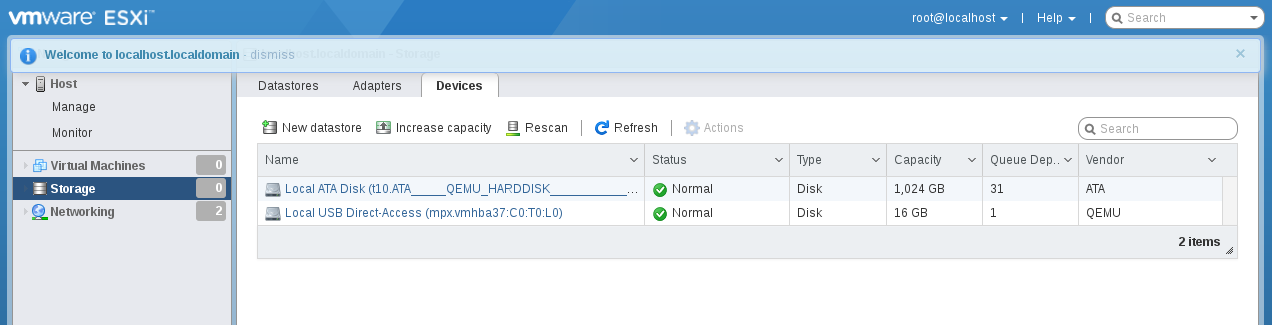

By the way, if we go to the web interface, we will see that the 8-gigabyte “datastore” created by the installer is now unavailable. So VMware has different datastore detection policies for different controller types. Now let's try to model a real system configuration, where ESXi is installed on a USB flash drive and the datastore is on hard drives. We use USB 3.0 emulation:

user@debian-pc:~$ qemu-system-x86_64 --enable-kvm -cpu host -smp 2 -m 4096 -device nec-usb-xhci,id=xhci -drive file=/media/storage/VMs/esxi_6.5-1.qcow2,if=none,id=drive0 -device usb-storage,drive=drive0,bus=xhci.0 -netdev user,id=hostnet0 -device e1000,netdev=hostnet0,id=net0

USB 3.0 drives are not detected either. Apparently the driver has been rewritten here too. Well, we already know what to do. Go to the hypervisor console and type

esxcli system module set -m=vmkusb -e=FALSE

When the system has booted, go to Storage - Devices and see our USB flash drive there. By the way, with the nec-usb-xhci USB 3.0 controller the system boots much faster than with ich9-usb-ehci2.

So at least two disk controller drivers in ESXi 6.5 have been rewritten compared to ESXi 6.0. And yet only the digit after the dot in the version number has changed; a minor release, one might say.

If we add a 1 TB disk to the virtual machine configuration, we can create a full-fledged datastore in addition to the disk with the hypervisor. To make the system boot from the USB disk rather than from AHCI, we use the bootindex parameter. Usually the -boot option controls the boot order, but it will not help in our case because the disks hang off different controllers. While we are at it, we switch the platform from the old 440fx chipset to the newer Q35/ICH9.

user@debian-pc:~$ qemu-img create -f qcow2 -o nocow=on /media/storage/VMs/esxi_6.5-1-1T.qcow2 1T

user@debian-pc:~$ qemu-system-x86_64 -machine q35 --enable-kvm -cpu host -smp 2 -m 4096 -device nec-usb-xhci,id=xhci -drive file=/media/storage/VMs/esxi_6.5-1.qcow2,if=none,id=drive0 -device usb-storage,drive=drive0,bus=xhci.0,bootindex=1 -device ich9-ahci,id=ahci -drive file=/media/storage/VMs/esxi_6.5-1-1T.qcow2,if=none,id=drive1 -device ide-drive,drive=drive1,bus=ahci.0,bootindex=2 -netdev user,id=hostnet0 -device e1000,netdev=hostnet0,id=net0

We go to the console, and here they are, our disks.

Let's continue the experiments: now we need to connect several hypervisors into a network. Something like libvirt creates a virtual switch and connects machines to it on its own; we will try to perform these operations manually.

Suppose we have two virtual machines, which means we need two virtual adapters:

user@debian-pc:~$ sudo ip tuntap add mode tap tap0
user@debian-pc:~$ sudo ip tuntap add mode tap tap1

Now we need a virtual switch. For a long time it was customary to use the bridge built into the Linux kernel, managed with brctl, for this purpose. Nowadays the usual way to solve the problem is Open vSwitch, a switch implementation designed specifically for virtual environments. Open vSwitch has built-in support for VLANs, tunneling protocols (GRE, etc.) for joining several switches and, most interestingly, OpenFlow. In other words, L2/L3 filtering rules can be loaded into the switch in a readable format. Previously, filtering required iptables/ebtables, and, as they say, nothing good would be called “ebtables”.

Install Open vSwitch if it is not installed yet:

user@debian-pc:~$ sudo aptitude install openvswitch-switch

Create a virtual switch:

user@debian-pc:~$ sudo ovs-vsctl add-br ovs-bridge

Add the interfaces to it:

user@debian-pc:~$ sudo ovs-vsctl add-port ovs-bridge tap0
user@debian-pc:~$ sudo ovs-vsctl add-port ovs-bridge tap1

Let's see what we got:

user@debian-pc:~$ sudo ovs-vsctl show
e4397bbd-0a73-4c0b-8007-12872cf132d9
    Bridge ovs-bridge
        Port "tap1"
            Interface "tap1"
        Port ovs-bridge
            Interface ovs-bridge
                type: internal
        Port "tap0"
            Interface "tap0"
    ovs_version: "2.6.2"

Bring the interfaces up:

user@debian-pc:~$ sudo ip link set tap0 up
user@debian-pc:~$ sudo ip link set tap1 up
user@debian-pc:~$ sudo ip link set ovs-bridge up

Now assign an address to the switch interface:

user@debian-pc:~$ sudo ip addr add 192.168.101.1/24 dev ovs-bridge

It would seem that it is enough to change the network type in the QEMU command line from user to tap, like this:

-netdev tap,ifname=tap0,script=no,downscript=no,id=hostnet0 -device e1000,netdev=hostnet0,id=net0

and everything will work.

Let's try:

user@debian-pc:~$ qemu-system-x86_64 -machine q35 --enable-kvm -cpu host -smp 2 -m 4096 -device nec-usb-xhci,id=xhci -drive file=/media/storage/VMs/esxi_6.5-1.qcow2,if=none,id=drive0 -device usb-storage,drive=drive0,bus=xhci.0,bootindex=1 -device ich9-ahci,id=ahci -drive file=/media/storage/VMs/esxi_6.5-1-1T.qcow2,if=none,id=drive1 -device ide-drive,drive=drive1,bus=ahci.0,bootindex=2 -netdev tap,ifname=tap0,script=no,downscript=no,id=hostnet0 -device e1000,netdev=hostnet0,id=net0

Go to the ESXi console and assign it the address 192.168.101.2, then check the connection:

user@debian-pc:~$ ping 192.168.101.2
PING 192.168.101.2 (192.168.101.2) 56(84) bytes of data.
64 bytes from 192.168.101.2: icmp_seq=1 ttl=64 time=0.582 ms
64 bytes from 192.168.101.2: icmp_seq=2 ttl=64 time=0.611 ms
...

and from the ESXi console: F2 - Test Network

Everything works, the pings go through.
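The host-side network steps from this section can be collected into one function. This is a sketch rather than a definitive script: the names `run` and `setup_lab_net` are hypothetical, the `/24` prefix length in the usage example is an assumption, and by default the function only prints the commands (set `DRY_RUN=0` and run as root to execute them). The `--may-exist` option of `ovs-vsctl` keeps a second run from failing on an already existing bridge or port.

```shell
# Print a command in dry-run mode (the default), execute it when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# $1: bridge name, $2: host address with prefix, remaining arguments: tap interface names.
setup_lab_net() {
    bridge="$1"
    addr="$2"
    shift 2
    run ovs-vsctl --may-exist add-br "$bridge"
    for tap in "$@"; do
        run ip tuntap add mode tap "$tap"
        run ovs-vsctl --may-exist add-port "$bridge" "$tap"
        run ip link set "$tap" up
    done
    run ip link set "$bridge" up
    run ip addr add "$addr" dev "$bridge"
}

# Usage sketch: setup_lab_net ovs-bridge 192.168.101.1/24 tap0 tap1
```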

Make a copy of the disk esxi_6.5-1.qcow2 and start a second instance of ESXi:

user@debian-pc:~$ qemu-img create -f qcow2 -o nocow=on /media/storage/VMs/esxi_6.5-2.qcow2 16G
Formatting '/media/storage/VMs/esxi_6.5-2.qcow2', fmt=qcow2 size=17179869184 encryption=off cluster_size=65536 lazy_refcounts=off refcount_bits=16 nocow=on

user@debian-pc:~$ dd if=/media/storage/VMs/esxi_6.5-1.qcow2 of=/media/storage/VMs/esxi_6.5-2.qcow2 bs=16M
31+1 records in
31+1 records out
531759104 bytes (532 MB, 507 MiB) copied, 10.6647 s, 49.9 MB/s

user@debian-pc:~$ qemu-img create -f qcow2 -o nocow=on /media/storage/VMs/esxi_6.5-2-1T.qcow2 1T
Formatting '/media/storage/VMs/esxi_6.5-2-1T.qcow2', fmt=qcow2 size=1099511627776 encryption=off cluster_size=65536 lazy_refcounts=off refcount_bits=16 nocow=on

user@debian-pc:~$ qemu-system-x86_64 -machine q35 --enable-kvm -cpu host -smp 2 -m 4096 -device nec-usb-xhci,id=xhci -drive file=/media/storage/VMs/esxi_6.5-2.qcow2,if=none,id=drive0 -device usb-storage,drive=drive0,bus=xhci.0,bootindex=1 -device ich9-ahci,id=ahci -drive file=/media/storage/VMs/esxi_6.5-2-1T.qcow2,if=none,id=drive1 -device ide-drive,drive=drive1,bus=ahci.0,bootindex=2 -netdev tap,ifname=tap1,script=no,downscript=no,id=hostnet0 -device e1000,netdev=hostnet0,id=net0

Surprises await us here: ping from the host to the first guest works only until we start pinging the second guest. After the second ping command is interrupted, packets to the first guest start flowing again after about 10 seconds. Pings between the guests do not work at all.

It is clear that we messed up the MAC addresses, and indeed, QEMU assigns the same MAC address to the network adapter of every VM unless told otherwise.
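Instead of inventing addresses by hand, a distinct MAC can be derived from the VM name. The sketch below is one possible approach, not something from the original setup: the function name `mac_for_vm` and the `02:00` prefix are assumptions (the `02` first octet marks a locally administered unicast address), and the remaining four bytes come from the POSIX `cksum` CRC of the name.

```shell
# Derive a stable, locally administered unicast MAC address from a VM name.
# Hypothetical scheme: "02:00" followed by the four bytes of the name's CRC.
mac_for_vm() {
    crc=$(printf '%s' "$1" | cksum | cut -d' ' -f1)
    printf '02:00:%02x:%02x:%02x:%02x\n' \
        $(( (crc >> 24) & 255 )) $(( (crc >> 16) & 255 )) \
        $(( (crc >> 8) & 255 ))  $(( crc & 255 ))
}

# Usage sketch: -device e1000,netdev=hostnet0,id=net0,mac=$(mac_for_vm esxi-1)
```

The same name always yields the same address, so the VM keeps its MAC across restarts.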

Shut down both ESXi instances, give them unique MAC addresses, and start them again.

user@debian-pc:~$ qemu-system-x86_64 -machine q35 --enable-kvm -cpu host -smp 2 -m 4096 -device nec-usb-xhci,id=xhci -drive file=/media/storage/VMs/esxi_6.5-1.qcow2,if=none,id=drive0 -device usb-storage,drive=drive0,bus=xhci.0,bootindex=1 -device ich9-ahci,id=ahci -drive file=/media/storage/VMs/esxi_6.5-1-1T.qcow2,if=none,id=drive1 -device ide-drive,drive=drive1,bus=ahci.0,bootindex=2 -netdev tap,ifname=tap0,script=no,downscript=no,id=hostnet0 -device e1000,netdev=hostnet0,id=net0,mac=DE:AD:BE:EF:16:B6

And in another console:

user@debian-pc:~$ qemu-system-x86_64 -machine q35 --enable-kvm -cpu host -smp 2 -m 4096 -device nec-usb-xhci,id=xhci -drive file=/media/storage/VMs/esxi_6.5-2.qcow2,if=none,id=drive0 -device usb-storage,drive=drive0,bus=xhci.0,bootindex=1 -device ich9-ahci,id=ahci -drive file=/media/storage/VMs/esxi_6.5-2-1T.qcow2,if=none,id=drive1 -device ide-drive,drive=drive1,bus=ahci.0,bootindex=2 -netdev tap,ifname=tap1,script=no,downscript=no,id=hostnet0 -device e1000,netdev=hostnet0,id=net0,mac=DE:AD:BE:EF:C3:FD

To our great surprise, the problem with pings has not gone away; moreover, the arp command shows that the MAC addresses of the hypervisors have not changed. Now is the time to remember how networking is organized in ESXi: the physical network card is put into promiscuous mode and connected as one of the ports to a virtual switch. Another port of this switch is connected to the vmkernel interface, which is the network card from the hypervisor's point of view. When ESXi is installed, the hardware address of the physical network card is cloned into vmkernel so as not to confuse the system administrator. After that it can be changed only by deleting the interface and creating it again, or by telling the hypervisor that vmkernel should be reconfigured because the address of the physical card has changed.

The first way:

Delete:

esxcfg-vmknic -d -p pgName

Create:

esxcfg-vmknic -a -i DHCP -p pgName

or

esxcfg-vmknic -a -i xxxx -n 255.255.255.0 pgName

The second way:

esxcfg-advcfg -s 1 /Net/FollowHardwareMac

The essential difference between these methods is that the first does not require a reboot of the hypervisor, and the second does.

After these simple operations we get two hypervisors on one network.
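The long per-instance command lines used in this article differ only in the disk names, the tap interface, and the MAC address, so they can be generated. The following sketch prints (rather than runs) such a command line; the function name `esxi_qemu_cmd`, the `VM_DIR` variable, and the rule that instance N attaches to tap(N-1) are assumptions made for illustration, while the disk naming follows the files created earlier.

```shell
# Print the QEMU command line for ESXi lab instance N.
# $1: instance number (1, 2, ...), $2: MAC address for the e1000 card.
# VM_DIR (hypothetical variable) overrides the image directory.
esxi_qemu_cmd() {
    n="$1"
    mac="$2"
    dir="${VM_DIR:-/media/storage/VMs}"
    tap="tap$(( n - 1 ))"   # assumption: instance 1 uses tap0, instance 2 uses tap1
    echo qemu-system-x86_64 -machine q35 --enable-kvm -cpu host -smp 2 -m 4096 \
        -device nec-usb-xhci,id=xhci \
        -drive "file=$dir/esxi_6.5-$n.qcow2,if=none,id=drive0" \
        -device usb-storage,drive=drive0,bus=xhci.0,bootindex=1 \
        -device ich9-ahci,id=ahci \
        -drive "file=$dir/esxi_6.5-$n-1T.qcow2,if=none,id=drive1" \
        -device ide-drive,drive=drive1,bus=ahci.0,bootindex=2 \
        -netdev "tap,ifname=$tap,script=no,downscript=no,id=hostnet0" \
        -device "e1000,netdev=hostnet0,id=net0,mac=$mac"
}

# Usage sketch: eval "$(esxi_qemu_cmd 2 DE:AD:BE:EF:C3:FD)"
```

Printing the command first makes it easy to inspect before running it.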

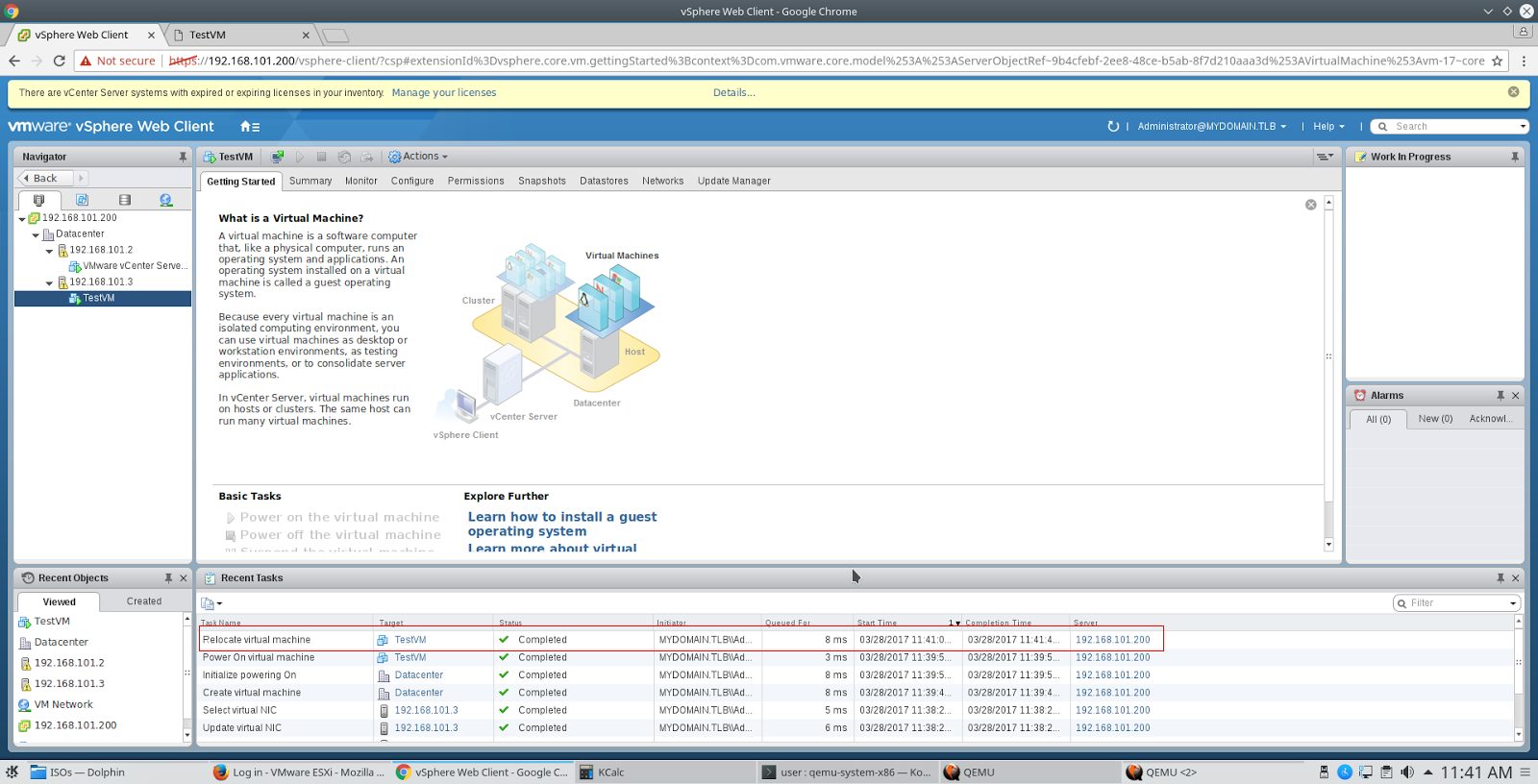

Now you can install vCenter and try out live migration. For some time now, vCenter has been available as an image of a Linux virtual machine with the corresponding services on board. This is the option we will try to install. Take the VMware-VCSA-all-6.5.0-5178943.iso image, mount it in the host OS, run the installer from the vcsa-ui-installer/lin64 directory, and deploy the image following the wizard's instructions. The virtual machine requires 10 GB of RAM, so it would be nice to have at least 16 GB on the host system. However, my image deployed even with 12 GB of RAM, eating all available memory and driving the system into swap.

After installing VCSA, go to the web interface with credentials of the form administrator@mydomain.tlb and the password specified when configuring SSO. After that, add both hosts to vCenter and get the simplest cluster in which live migration works. Configure vMotion on the network cards of both hosts, create a TestVM virtual machine, and make sure that it can move from one host to the other, changing both the host and the datastore.

By the way, on previous versions of ESXi, up to and including 6.0, a virtual machine could not be started in nested virtualization mode without adding the line

vmx.allowNested = TRUE

to its configuration file. In ESXi 6.5 this is not required, and the virtual machine starts without issues.

In conclusion, a small life hack. Suppose you need to copy a VM disk file from an ESXi datastore to a backup server somewhere, and you cannot use FastSCP from Veeam Backup and Replication. Good old rsync comes to the rescue; you just need to find a binary that runs on ESXi. Unfortunately, rsync versions up to and including 3.0.9 have a bug that causes large files on VMFS volumes to be handled incorrectly, so you should use rsync 3.1.0 or later. You can get it here.

Source: https://habr.com/ru/post/325090/
