The story of how I went to Google Next 17: a brief rundown of the announcements and the main highlights

Hello! I want to tell you about my trip to the Google Next conference. It was my first time there. Looking ahead, I will say that I was satisfied, and that almost all of the most interesting technical announcements came on the second day, but let's take everything in order.

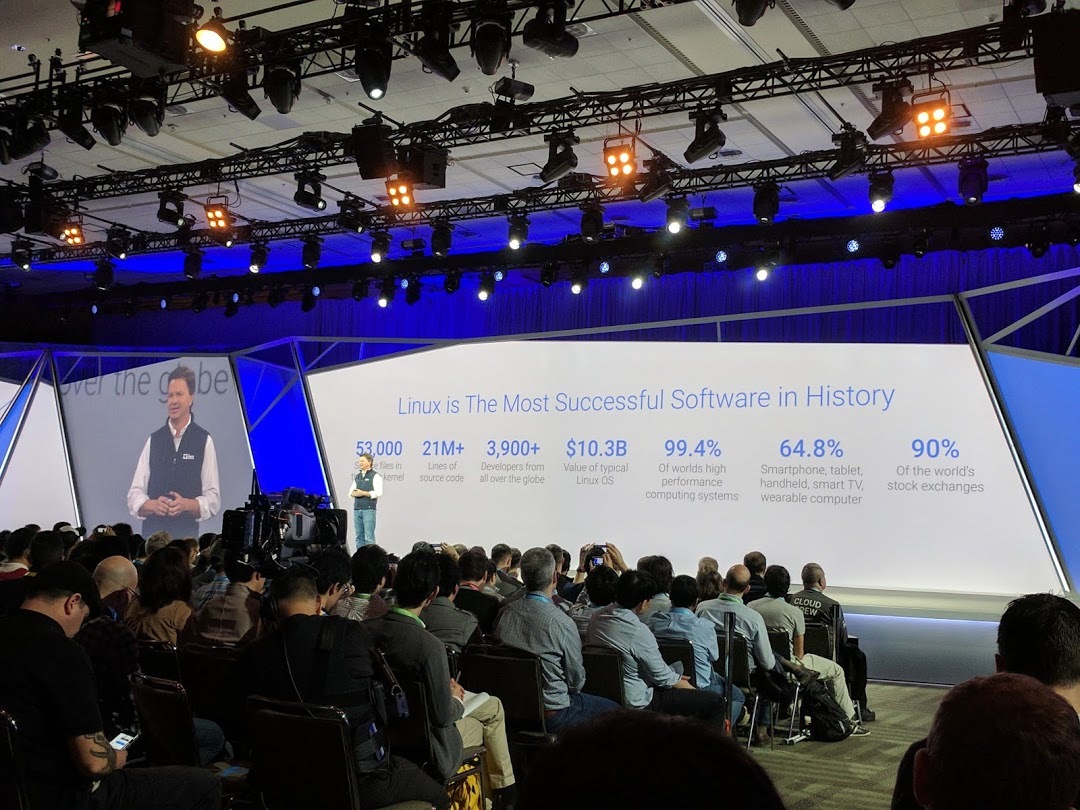

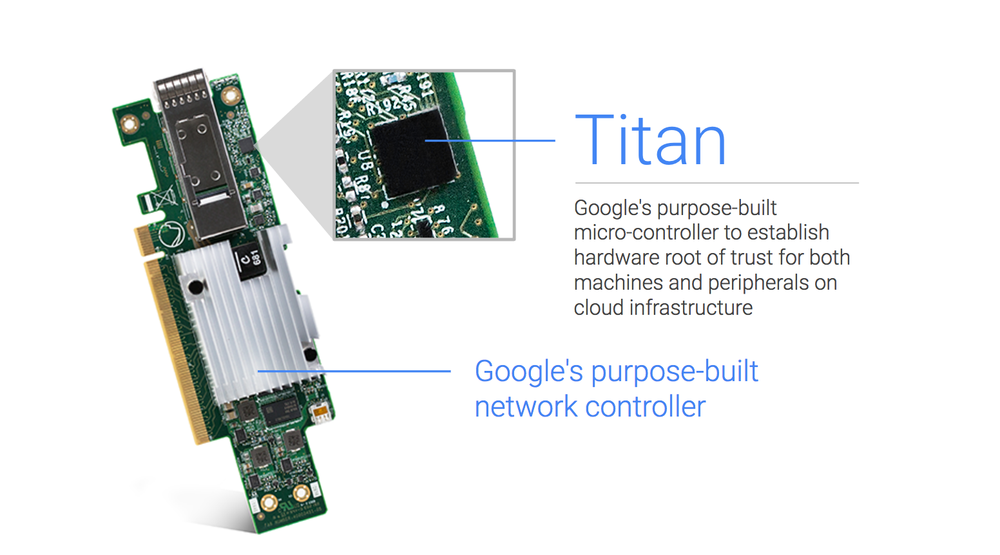

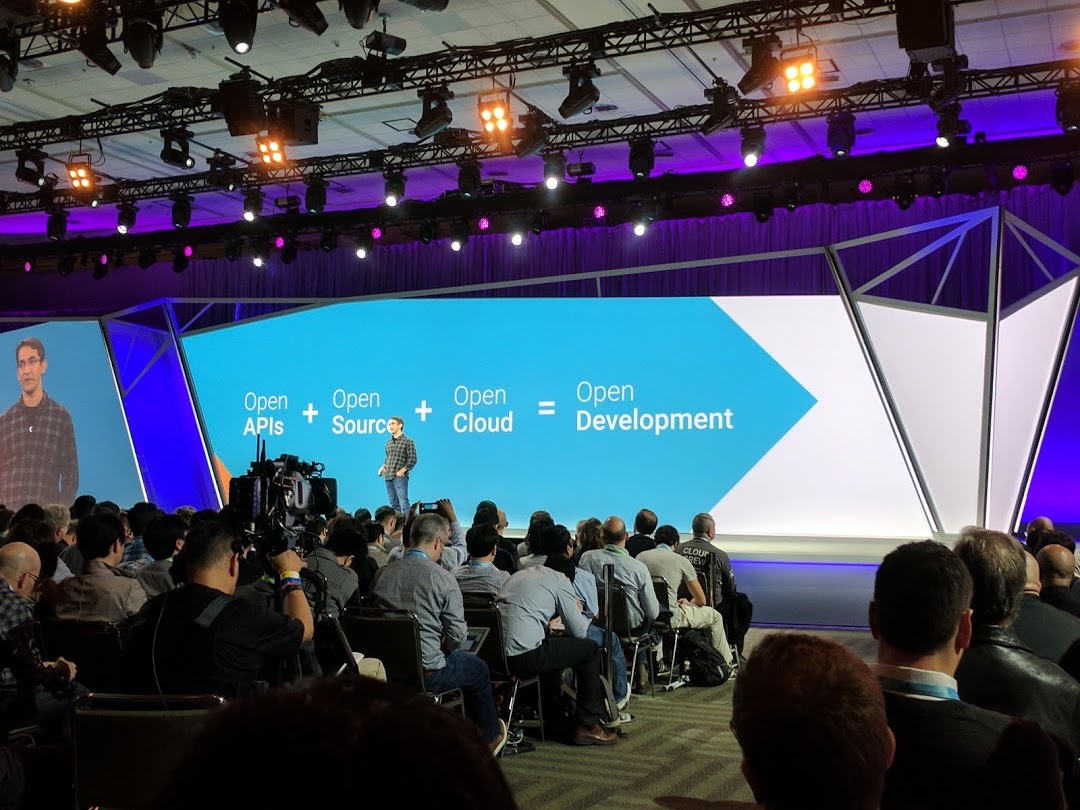

What is Google Next all about?

Google Next is, as you might have guessed, a Google conference dedicated to Google Cloud Platform (GCP). For those on Habr who may not know: GCP is Google's cloud platform, similar to Amazon AWS and Microsoft Azure. Unlike them, it is not as well known in Russia, in my opinion mainly because Google puts little effort into promoting and advertising it on the Russian market. Nevertheless, many companies and developers actively use it. Voximplant is among them: we use various components of the platform, for example ML in the form of the Speech API for speech recognition and the Translate API for translating the recognized speech. Many companies also use G Suite, formerly called Google for Work, which has recently become part of Google Cloud as well. You can get acquainted with the platform on its page: cloud.google.com.

The conference lasted 3 days and many new products were announced; almost all of the technical announcements came on the second day. I will not describe everything in detail in this article, just a brief summary with my comments. Let's go!

Day 1

First day keynote recording

The first day coincided with International Women's Day, and the keynote was opened by Diane Greene, Senior Vice President of GCP. She talked about how large Google's networks and data centers are (about 10 thousand kilometers of network links in and between data centers), how Google cares about the environment, and so on. Then Google CEO Sundar Pichai briefly appeared and shared his vision for the development of Google and GCP. Returning to the stage, Diane in turn invited partners to talk about how they use Google Cloud Platform. Disney shared their experience first. Then Bernd Leukert from SAP took the stage, and interesting announcements followed.

- SAP HANA, an in-memory database and analytics platform, is now generally available (GA) on GCP.

- SAP HANA express, a free developer edition, will also be available on GCP.

These two announcements surprised the audience, but an even bigger surprise came when they announced that...

- SAP Cloud will soon be available in GCP

I think SAP needs no introduction: most of the largest and most successful companies use it. The importance of this partnership is hard to overstate; it is a very big step towards enterprise business. I managed to talk to SAP representatives, and the first thing I asked was when SAP Cloud and its components would be available. They answered honestly that it is more a matter of a couple of months than a couple of weeks, but early access is possible for partners. SAP HANA express is available now.

Next, a representative of Colgate-Palmolive told how they use GCP and G Suite. After that came partners such as Verizon, Home Depot, HSBC and eBay, as well as Tariq Shaukat, president of partner relations. Everyone talked about how they now use GCP, and RJ Pittman from eBay also showed how you can use Google Home to appraise and sell things on eBay.

Then Dr. Fei-Fei Li took the stage. She is the director of the Stanford Artificial Intelligence Lab and has recently also been working at Google as Chief Scientist of AI/ML. By the way, she created ImageNet: www.image-net.org. She said that AI and ML should become more accessible and should help people.

- Video Intelligence API is a new API that can automatically recognize and extract information about what is happening in a video. Until now, image recognition has mainly been applied to photos, but now it will be possible to work with video as well. Among other things, the API can also report when the "scene" in a video changes. All this means that we will be able to search for videos not only by title, but also by what happens in them. I wonder when this kind of search will appear on YouTube. You can see how it works at cloud.google.com/video-intelligence. You can sign up for the beta via a special link. You can also watch the demo.

- Google is acquiring Kaggle. Founded in 2010, Kaggle is the largest community of data scientists and machine learning enthusiasts. More than 800,000 enthusiasts and experts use Kaggle to share, explore and analyze datasets. In essence, it is the main, indispensable resource for anyone interested in data science.
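The Video Intelligence API's ability to report scene ("shot") changes can be pictured with a classic, much simpler technique: compare the brightness histograms of consecutive frames and flag a cut when they differ sharply. This is only a sketch of that textbook approach, not how the API works internally. It assumes frames are flat lists of 0-255 grayscale values; the bin count, the threshold and the function names are illustrative choices.

```python
from typing import List, Optional, Sequence


def histogram(frame: Sequence[int], bins: int = 8) -> List[float]:
    """Normalized brightness histogram of a grayscale frame (values 0..255)."""
    counts = [0] * bins
    for pixel in frame:
        counts[min(pixel * bins // 256, bins - 1)] += 1
    total = len(frame) or 1  # avoid division by zero for an empty frame
    return [c / total for c in counts]


def detect_shot_changes(frames: Sequence[Sequence[int]],
                        threshold: float = 0.5) -> List[int]:
    """Return indices of frames that start a new shot.

    A cut is reported when the L1 distance between the histograms of two
    consecutive frames exceeds `threshold` (the distance ranges from 0 to 2).
    """
    cuts: List[int] = []
    prev: Optional[List[float]] = histogram(frames[0]) if frames else None
    for i in range(1, len(frames)):
        cur = histogram(frames[i])
        distance = sum(abs(a - b) for a, b in zip(prev, cur))
        if distance > threshold:
            cuts.append(i)
        prev = cur
    return cuts


# Three dark frames followed by three bright ones: a single cut at index 3.
dark = [10] * 100
bright = [200] * 100
print(detect_shot_changes([dark, dark, dark, bright, bright, bright]))  # [3]
```

Real shot detection has to cope with fades, camera motion and flashes, which is exactly why a learned, hosted API is attractive compared with a fixed threshold like this one.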

The first day was pretty good, and it was almost entirely devoted to business and partners. The audience received the SAP news very positively, as well as the news about the Kaggle acquisition.

Short review of the first day:

Day 2

Second day keynote recording

The second day was opened by Urs Hölzle, who began by stressing the importance of developing infrastructure: Google was the first non-telecom company to lay fiber across the ocean, and it currently operates one of the largest high-speed data networks... A good interconnect between data centers gives end users fast access to various services. Urs moved on to the announcements almost immediately.

- 3 new data centers: in the Netherlands, Canada and California, which will bring the total to 17 this year. You can see the locations at cloud.google.com/about/locations.

He talked about Cloud Spanner, which had been announced a little earlier this year. For those who do not know: Cloud Spanner is a globally distributed relational database service with full support for ACID (Atomicity, Consistency, Isolation, Durability) transactions. It is a very cool thing that combines the capabilities of relational databases and NoSQL: it is highly available, easily scalable and fully automatically replicated. The claim is that not a single letter of the CAP theorem is sacrificed.

A small Cloud Spanner demo was shown on the stage:

- Virtual machines on GCP can now have up to 64 cores and 416 GB of memory. And that's not all: in the near future, VMs will be able to get even more cores and up to 1 TB of memory.

- Intel Skylake processors are already available for VMs on GCP. This was made possible by a close strategic partnership between Google and Intel.

- New prices and discounts. The interesting part: the longer you use a VM within a month, the cheaper it gets, and you don't need to do anything, sign anything or pay in advance for this. In addition, VMs are billed per minute, so you pay only for the time you actually use. Learn more at cloud.google.com/compute/pricing.

- Flexible GPUs. Now you can attach a GPU to any machine. The word "flexible" is there for a reason: you can choose parameters such as the number of cores and the amount of memory, getting exactly what you need without overpaying for hardware. GPUs are also billed per minute.
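To make the pricing item above more concrete, here is a small cost-calculator sketch. It assumes the sustained-use tiers Google documented for predefined machine types at the time (each successive quarter of the month billed at 100%, 80%, 60% and 40% of the base rate) and a 10-minute minimum for per-minute billing. Both are assumptions to check against the current pricing page, and the $0.10/hour rate is a made-up example value.

```python
import math

# Assumed sustained-use tiers: (share of the month, fraction of the base rate).
SUSTAINED_USE_TIERS = [(0.25, 1.00), (0.25, 0.80), (0.25, 0.60), (0.25, 0.40)]
MINIMUM_BILLED_MINUTES = 10  # assumed minimum charge each time a VM starts


def billed_minutes(seconds_used: float) -> int:
    """Per-minute billing: round up to whole minutes, with a minimum charge."""
    return max(math.ceil(seconds_used / 60), MINIMUM_BILLED_MINUTES)


def sustained_use_multiplier(fraction_of_month: float) -> float:
    """Average price multiplier for a VM running `fraction_of_month` (0..1)."""
    if fraction_of_month <= 0:
        return 1.0
    usage = min(fraction_of_month, 1.0)
    remaining = usage
    weighted_cost = 0.0
    for share, rate in SUSTAINED_USE_TIERS:
        used = min(remaining, share)  # portion of usage falling into this tier
        weighted_cost += used * rate
        remaining -= used
    return weighted_cost / usage


def monthly_cost(hourly_rate: float, hours_used: float,
                 hours_in_month: float = 730.0) -> float:
    """Cost of one VM for a month, with the discount applied automatically."""
    multiplier = sustained_use_multiplier(hours_used / hours_in_month)
    return hourly_rate * hours_used * multiplier


print(billed_minutes(61))                        # 10: the minimum charge applies
print(round(sustained_use_multiplier(1.0), 2))   # 0.7: 30% off for a full month
print(round(monthly_cost(0.10, 730.0), 2))       # 51.1 for a hypothetical $0.10/hour VM
```

The design point the sketch shows is that the discount is computed from usage alone, which is why no commitment or prepayment is needed.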

Then Ashok Belani from Schlumberger took the stage and told how they use high-performance GPUs for data processing. Schlumberger does oil and gas exploration and processes hundreds of terabytes of data per day collected from special vessels and sensors.

Security is an extremely important topic today, and it begins with the fence around the data center. But a high level of physical security does not solve every problem, so all data is stored and transmitted in encrypted form. According to Urs, nobody can provide a level of protection comparable to Google's, and it is not just about physical security.

- Titan is a tiny chip custom-designed by Google that protects hardware at the BIOS level by acting as a hardware root of trust (RoT). These chips are installed in every machine in Google's data centers.

All this is good, but today the most common way to gain access is phishing. There is no such thing as too much security, so even though good basic protection, 2-factor authentication and other mechanisms are already available, the following new features were presented:

- Data Loss Prevention API (beta) recognizes various kinds of documents and context and lets you hide private data on the fly, replacing it with asterisks.

- Key Management Service (GA) - the service allows you to generate and manage symmetric encryption keys.

- Identity-Aware Proxy (beta) lets you configure and provide secure access to applications, replacing VPNs, firewalls and the like. It acts as a front end for your applications and runs on top of a load balancer.

- Security Key Enforcement (GA) lets you use physical security keys for additional protection of applications and services. It is already available in G Suite.
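The "replace private data with asterisks" behavior of the Data Loss Prevention API can be imitated locally with regular expressions. The sketch below is not the DLP API and is far cruder than its detectors; the two patterns (email addresses and 16-digit card-like numbers) and the `mask_sensitive` name are illustrative assumptions.

```python
import re

# Illustrative detectors only: real DLP tooling recognizes many more data
# types and uses context and validation rather than two regular expressions.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD_NUMBER": re.compile(r"\b(?:\d[ -]?){15}\d\b"),  # 16 digits, optional separators
}


def mask_sensitive(text: str, mask_char: str = "*") -> str:
    """Replace every detected sensitive substring with mask characters of equal length."""
    for pattern in PATTERNS.values():
        text = pattern.sub(lambda m: mask_char * len(m.group()), text)
    return text


print(mask_sensitive("Contact ivan@example.com, card 4111 1111 1111 1111"))
```

Keeping the masked string the same length as the original preserves the layout of logs or documents, at the cost of revealing how long the hidden value was.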

Brian Stevens, VP of GCP, took the stage to say that many are now moving to the cloud: not only startups and big business, but also government organizations, the financial sector, healthcare, etc. But why do customers choose GCP? Some come for better security, some for data analytics, but most importantly, everyone wants tools and platforms that their developers will like. Looking at it from a distance, three questions arise when choosing and moving to the cloud: the migration itself, cloud architecture, and data analysis. The fact is that many do not want to rewrite their products just to move to the cloud, and that is not a problem: you can move them as they are, thanks to live migration.

- Live migration: the ability to migrate VMs running on KVM, Hyper-V and VMware was recently added to the gcloud console, and it does not matter whether the VM runs on bare metal or in another cloud.

A quote from Brian: "We want to be a great Windows platform. We want to be a better Windows platform."

GCP is glad to welcome Windows developers:

- Active Directory is available in Google cloud

- Visual Studio and PowerShell integration

- .NET Core support in both App Engine and Container Engine

- Microsoft SQL Server Enterprise is publicly available as a service with HA and clustering.

Separately, some long-awaited news from the open source world:

- PostgreSQL is now available as a managed service through Cloud SQL. It is still in beta, but it is great news nonetheless. You can learn more about Postgres support, the available extensions and the beta features at cloud.google.com/sql/docs/features#postgres

Then partners from Evernote and Lush (yes, the cosmetics company) talked about moving to the cloud without a minute of downtime. Evernote, with more than 200 million users, moved to GCP in 89 days, and Lush did it in 22 days.

But not everyone, meaning "big enterprise", can afford to or simply wants to pick up and move to the cloud. For such cases, most cloud providers offer tools for building hybrid solutions.

- Virtual Private Cloud lets you build a hybrid cloud that acts as an extension of your data center or servers rather than as some separate, remote component.

Serverless architecture is a new and very important approach to building solutions in which developers do not need to think about managing infrastructure. Servers or components must be able to scale and adapt to the load. A properly designed architecture of this kind is more reliable, simpler and more efficient.

This approach is not new for Google. On the compute side there are App Engine and Container Engine; on the database and storage side, Datastore and Cloud Spanner. And of course, BigQuery is also a serverless solution. None of these services consume a "server" when there is no load, and when load appears they scale horizontally with ease. Now one more serverless component has been added to all this:

- Cloud Functions: in essence, these are just pieces of code that let you tie services together. It is not just a service but essentially a "platform" for connecting microservices into a single serverless cloud solution. Read more about Cloud Functions here.

- App Engine Flexible Environment now supports 7 languages out of the box:

- Node.js

- Ruby

- Java 8

- Python 2.7 and 3.5

- Go 1.8

- PHP 7.1

- C#
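To illustrate the "pieces of code that connect services" idea from the Cloud Functions item, here is a sketch of an event handler written in the style of a background function: it receives the metadata of an object uploaded to Cloud Storage and decides which step of a pipeline should handle it. Note the assumptions: at launch Cloud Functions ran Node.js only, and the Python `(event, context)` signature used here belongs to a later runtime; the routing rules and the `route_upload` name are hypothetical, and nothing here calls a real Google API.

```python
from typing import Any, Dict, Optional


def route_upload(event: Dict[str, Any], context: Optional[Any] = None) -> Dict[str, str]:
    """Hypothetical handler for a Cloud Storage "object finalized" event.

    `event` carries object metadata such as "bucket", "name" and
    "contentType"; the function only decides which service should act next.
    """
    uri = f"gs://{event['bucket']}/{event['name']}"
    content_type = event.get("contentType", "")
    if content_type.startswith("audio/"):
        action = "transcribe"  # e.g. hand off to a speech-recognition step
    elif content_type.startswith("image/"):
        action = "label"       # e.g. hand off to an image-labelling step
    else:
        action = "archive"
    return {"uri": uri, "action": action}


print(route_upload({"bucket": "calls", "name": "2017/03/09/call.wav",
                    "contentType": "audio/wav"}))
# {'uri': 'gs://calls/2017/03/09/call.wav', 'action': 'transcribe'}
```

Keeping the handler a pure function of the event makes it easy to test locally before wiring it to a real trigger.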

But what if you use another runtime? Easy. If your runtime runs in a container, you can now bring it with you to GCP and run your applications with it on App Engine.
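"Bring your own runtime" boils down to a container that serves HTTP. Below is a minimal sketch of such a service using only Python's standard library, as it might run inside a custom container. It assumes, as App Engine's flexible environment documented, that the app must listen on port 8080 (read here from a `PORT` variable with 8080 as the default); the Dockerfile and `app.yaml` that would package it are not shown.

```python
import os
from wsgiref.simple_server import make_server


def app(environ, start_response):
    """Tiny WSGI application: answers every request with plain text."""
    body = f"Hello from a custom runtime, path={environ.get('PATH_INFO', '/')}\n"
    data = body.encode("utf-8")
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8"),
                              ("Content-Length", str(len(data)))])
    return [data]


def main() -> None:
    """Start the server and block forever (the container's entrypoint)."""
    # Assumption: the platform routes traffic to port 8080, or to $PORT if set.
    port = int(os.environ.get("PORT", "8080"))
    with make_server("", port, app) as server:
        server.serve_forever()
```

Calling `main()` starts the server and blocks, so a container would invoke it from its entrypoint; the `app` function itself can be exercised directly without opening a socket.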

And of course, they could not skip Firebase. Firebase and GCP are now even closer:

- Integration with Cloud Storage: you can now access Cloud Storage directly from the Firebase SDK

- Firebase Analytics integration with BigQuery

- Integration with Cloud Functions

- In the near future, Firebase will also be covered by the GCP Terms of Service

You can read more about all of the above in the Firebase blog .

You can see how these components work together, or how they can be used to modernize an old legacy application, in the demo.

- BigQuery Data Transfer Service is an automated service for transferring data from SaaS applications to BigQuery on a scheduled, managed basis. Connectors for AdWords, DoubleClick and YouTube Analytics are available now.

However, before analyzing data, it would be nice to prepare it so as not to waste time on this later. It would be great to remove everything unnecessary and get the data in exactly the form we want. That is why a separate tool for preparing data for further analysis was presented:

- Cloud Dataprep is a smart service that lets you visually explore and clean data. With the mouse you can specify that a particular JSON field should become a top-level column in BigQuery, or split address data into separate elements or fields. The service is not called smart for nothing: it uses ML to find relationships, features and the correct representation of the data.
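Cloud Dataprep does this interactively, but the two transformations mentioned in the item above (promoting a nested JSON field to a top-level column and splitting an address into fields) are easy to picture in code. This is a plain-Python sketch of the transformations themselves, not of Dataprep or its API; the record layout, the underscore naming of flattened columns and the comma-separated "street, city, postcode" address format are assumptions.

```python
import json
from typing import Any, Dict


def flatten(record: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Turn nested JSON objects into top-level columns: {"a": {"b": 1}} -> {"a_b": 1}."""
    row: Dict[str, Any] = {}
    for key, value in record.items():
        column = f"{prefix}{key}"
        if isinstance(value, dict):
            row.update(flatten(value, prefix=f"{column}_"))
        else:
            row[column] = value
    return row


def split_address(address: str) -> Dict[str, str]:
    """Split an assumed "street, city, postcode" string into separate fields."""
    parts = [p.strip() for p in address.split(",")]
    street, city, postcode = (parts + ["", "", ""])[:3]  # pad missing parts
    return {"street": street, "city": city, "postcode": postcode}


raw = '{"id": 7, "user": {"name": "Anna", "address": "1 Main St, Springfield, 12345"}}'
row = flatten(json.loads(raw))
row.update(split_address(row.pop("user_address")))
print(row)
# {'id': 7, 'user_name': 'Anna', 'street': '1 Main St', 'city': 'Springfield', 'postcode': '12345'}
```

The value of a tool like Dataprep is in inferring such rules from the data and previewing them visually; the rules themselves are usually this simple.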

Integrated data platform

After that, Brian was replaced on stage by Prabhakar Raghavan, VP of Apps, which means G Suite and more. This is perhaps the part of Google Cloud most widely used here: a huge number of companies use G Suite, not only worldwide but in our country too. As you might guess, he started talking about productivity and collaboration and why they matter, and how important it is to develop the tools we work with so that they free up time for truly creative work. He also said that for many millions of Gmail users, on average 1.8 replies come from ML-generated suggestions. I can confirm this: I often correspond in English, and these generated replies fit very well! I sometimes add to them, but they still save me time. Then they presented updated and new G Suite products for business.

- Hangouts Chat is the familiar Hangouts, but now with rooms. Yes, it now looks like Slack. Demo.

- Hangouts Meet is a separate product designed to help organize and run video calls. They say they have improved the quality and reduced CPU consumption. Now you can invite to a video call not only people who do not have a G Suite account, but also people by phone (a dial-in number can now be generated for a video call). Demo.

- Jamboard is a special interactive "cloud" whiteboard that lets different teams sync whiteboard content, and it also supports various features such as handwriting recognition, search, maps and so on. Demo.

A short video about what's new in G Suite

Next came a not very interesting story about how some companies build extensions for applications, others use G Suite, etc.

While the first day was more about business and partners, the second day turned out to be more about technology and for techies. The keynote delighted with a large number of announcements and demos.

Overview of the second day:

Day 3

Third day keynote recording

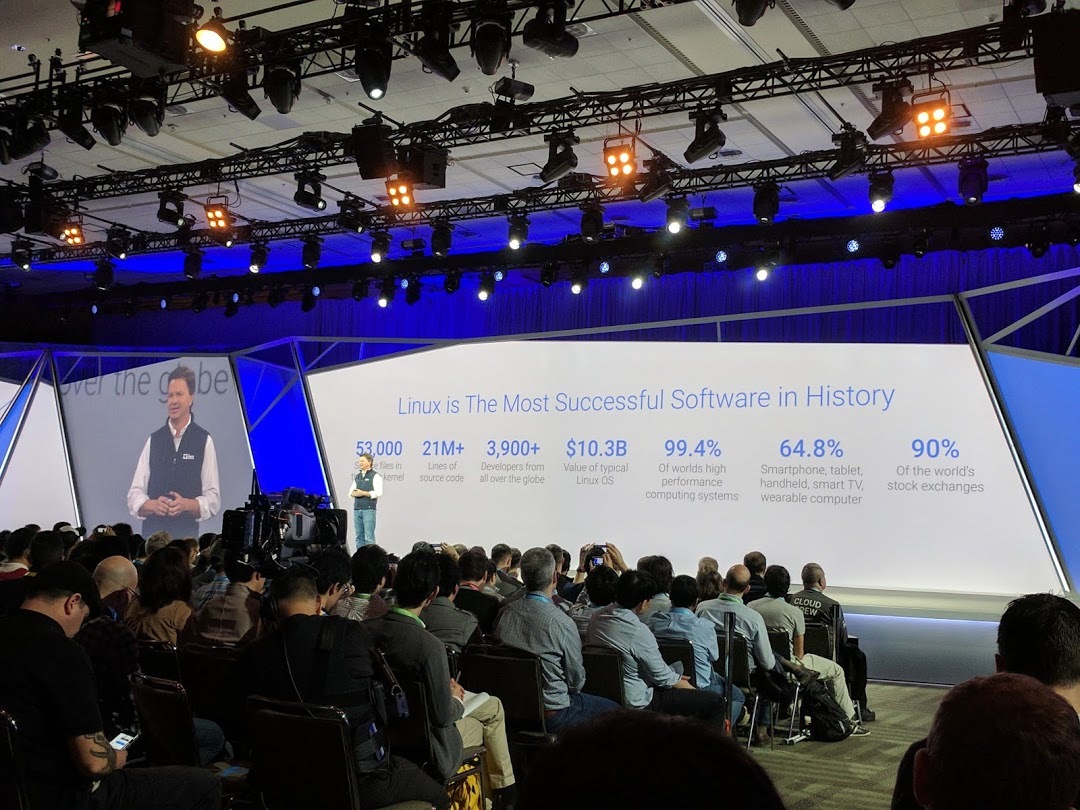

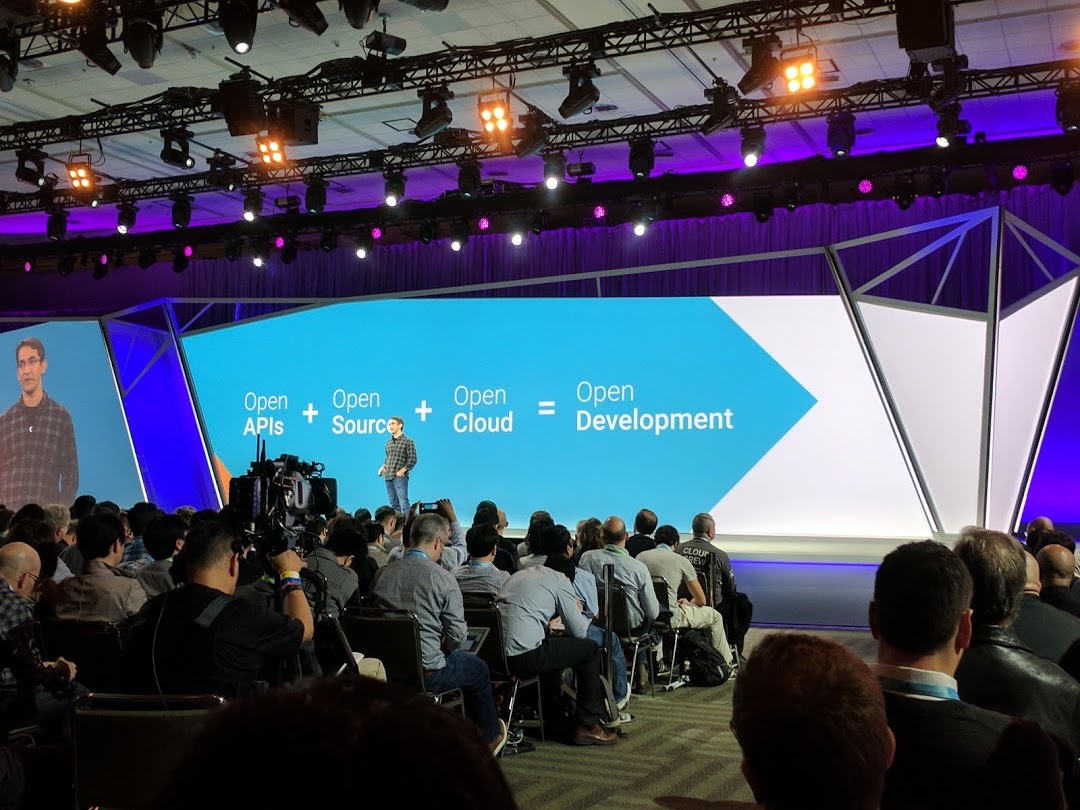

I will not write much about the third day, as there were almost no new announcements on that day, and it was almost entirely devoted to openness, Open Source, working with the community, and how important all this is for Google.

I will give some pictures instead of text:

Among the interesting things, Vint Cerf himself talked about the Internet and why it is important that it stays open. Vint Cerf is one of the developers of the TCP/IP protocol stack and a Turing Award winner; he is often called the "father of the Internet." He is now VP and Chief Internet Evangelist at Google.

Google's contribution to open source is hard to overestimate: Google opens the source code of a huge number of its key products. Many of these projects become de facto standards on which other companies build their own products. Great examples are Chromium, the basis of Google Chrome, and TensorFlow, which is currently the number one ML framework on GitHub and beyond.

Google not only opens the code of its own developments but also supports a large number of projects. Recently, Google's Open Source blog told the story of RosenHub, in which 50 Google employees fixed vulnerabilities in Apache Commons Collections. Not to mention Google Summer of Code (GSoC), where anyone can apply as a mentor or student and work together on an open source project.

Improved Free Tier:

- The free trial was extended from 60 days to 12 months, letting you spend the $300 credit granted at first sign-up on all GCP services and APIs.

- New Always Free products: non-expiring free usage limits for 15 components that you can use for testing and application development at no cost. Among them are Compute Engine, Cloud Pub/Sub, Cloud Storage and Cloud Functions.

Read more about this here: cloud.google.com/free .

Summing up or what else was at the conference?

A huge number of demos and partner stands.

Source: https://habr.com/ru/post/324906/
