Simplest HTTP server on Golang and Elixir. Performance comparison

A couple of weeks ago, I decided to take the simplest example of an HTTP server in Go and measure its performance. Then I bravely took Phoenix, ran the same tests against it, and was upset. The results were not in favor of Elixir / Erlang (45133 RPS for Go and just 3065 RPS for Phoenix). But Phoenix is heavyweight. I needed something at least roughly equal in simplicity and development logic to the Go version, where there is a path, "/", and a handler for it. The logical analogue seemed to me to be cowboy + plug, where a Router also catches "/" and responds to it. The results were devastating: Elixir / Erlang was slower again:

Golang:

    sea@sea:~/go$ wrk -t10 -c100 -d10s http://127.0.0.1:4000/
    ...
      452793 requests in 10.03s, 58.30MB read
    Requests/sec:  45133.28
    Transfer/sec:      5.81MB

Elixir cowboy plug:

    sea@sea:~/http_test$ wrk -t10 -c100 -d10s http://127.0.0.1:4000/
    ...
      184703 requests in 10.02s, 28.57MB read
    Requests/sec:  18441.79
    Transfer/sec:      2.85MB

How to live on? For two weeks I did not sleep and did not eat (almost). Everything I had believed in all these years (the perfection of the Erlang VM, OP, green processes) was crushed, torn, burned and scattered to the wind. Having recovered a little from the shock, calmed down and wiped away the snot, I decided to figure out what was going on.

Both the server and the test program were launched on the same virtual machine, inside VirtualBox, with two dedicated cores.

If it turns out that you have to work in such or similar conditions, then Go really will show better results.

Test computer

How I tested. I work on a laptop with Windows 7 x64, an i7 processor and 8 GB of RAM, and I run Linux (Ubuntu 16 in my case) inside VirtualBox. I allocated 1 GB of RAM and 2 cores to it. Inside this virtual machine I started the HTTP server, and on the same machine I ran the ab and wrk tests. In this setup, the same machine is loaded with both the server and the test; the network imposes no limits, because nothing is actually transmitted over a network.

As a result, we got a complete rout:

Go:

    sea@sea:~/go$ wrk -t10 -c100 -d10s http://127.0.0.1:4000/
    Running 10s test @ http://127.0.0.1:4000/
      10 threads and 100 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    65.18ms  109.44ms   1.05s    86.48%
        Req/Sec     4.60k     5.87k   25.40k    86.85%
      452793 requests in 10.03s, 58.30MB read
    Requests/sec:  45133.28
    Transfer/sec:      5.81MB

Elixir cowboy:

    sea@sea:~/http_test$ wrk -t10 -c100 -d10s http://127.0.0.1:4000/
    Running 10s test @ http://127.0.0.1:4000/
      10 threads and 100 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency     8.94ms   11.38ms 123.57ms   86.53%
        Req/Sec     1.85k   669.61     4.99k    71.70%
      184703 requests in 10.02s, 28.57MB read
    Requests/sec:  18441.79
    Transfer/sec:      2.85MB

Go:

    sea@sea:~/go$ wrk -t10 -c1000 -d10s http://127.0.0.1:4000/
    Running 10s test @ http://127.0.0.1:4000/
      10 threads and 1000 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    61.16ms  231.88ms   2.00s    92.97%
        Req/Sec     7.85k     8.65k   26.13k    79.49%
      474853 requests in 10.09s, 61.14MB read
      Socket errors: connect 0, read 0, write 0, timeout 1329
    Requests/sec:  47079.39
    Transfer/sec:      6.06MB

Elixir cowboy:

    sea@sea:~/http_test$ wrk -t10 -c1000 -d10s http://127.0.0.1:4000/
    Running 10s test @ http://127.0.0.1:4000/
      10 threads and 1000 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency   123.00ms  303.25ms   1.94s    88.91%
        Req/Sec     2.06k     1.85k   11.26k    71.80%
      173220 requests in 10.09s, 26.79MB read
      Socket errors: connect 0, read 0, write 0, timeout 43
    Requests/sec:  17166.03
    Transfer/sec:      2.65MB

The only thing that can be said in defense of Erlang / Elixir is the smaller number of timeouts. Building the application with HiPE did not improve performance. But first things first.
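To compare runs like these without reading the numbers by eye, the two figures discussed here (Requests/sec and the timeout count) can be pulled out of wrk's text report. The following is a minimal sketch; the `summary` type and the `parseWrk` helper are names invented for this example, and the sample input is the tail of the two 1000-connection reports.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

var (
	rpsRe     = regexp.MustCompile(`Requests/sec:\s+([0-9.]+)`)
	timeoutRe = regexp.MustCompile(`timeout (\d+)`)
)

// summary holds the two figures compared in the text.
type summary struct {
	RPS      float64
	Timeouts int
}

// parseWrk extracts Requests/sec and the timeout count from a wrk report.
// A report without a "Socket errors" line is treated as zero timeouts.
func parseWrk(out string) (summary, error) {
	var s summary
	m := rpsRe.FindStringSubmatch(out)
	if m == nil {
		return s, fmt.Errorf("no Requests/sec line found")
	}
	rps, err := strconv.ParseFloat(m[1], 64)
	if err != nil {
		return s, err
	}
	s.RPS = rps
	if t := timeoutRe.FindStringSubmatch(out); t != nil {
		if s.Timeouts, err = strconv.Atoi(t[1]); err != nil {
			return s, err
		}
	}
	return s, nil
}

func main() {
	reports := []struct{ name, out string }{
		{"Go", "474853 requests in 10.09s, 61.14MB read\n" +
			"Socket errors: connect 0, read 0, write 0, timeout 1329\n" +
			"Requests/sec:  47079.39\n"},
		{"Elixir cowboy", "173220 requests in 10.09s, 26.79MB read\n" +
			"Socket errors: connect 0, read 0, write 0, timeout 43\n" +
			"Requests/sec:  17166.03\n"},
	}
	for _, r := range reports {
		s, err := parseWrk(r.out)
		if err != nil {
			fmt.Println(r.name, "error:", err)
			continue
		}
		fmt.Printf("%s: %.2f requests/sec, %d timeouts\n", r.name, s.RPS, s.Timeouts)
	}
}
```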

Test environment v.2

It became clear that it is better to test on a stand-alone system rather than in a virtual machine. The HTTP server under test is better placed on a weaker machine, and the testing machine should be more powerful, so that it can completely overwhelm the server and push it to the limit of its technical capabilities. A fast network is desirable, so as not to run into its limits.

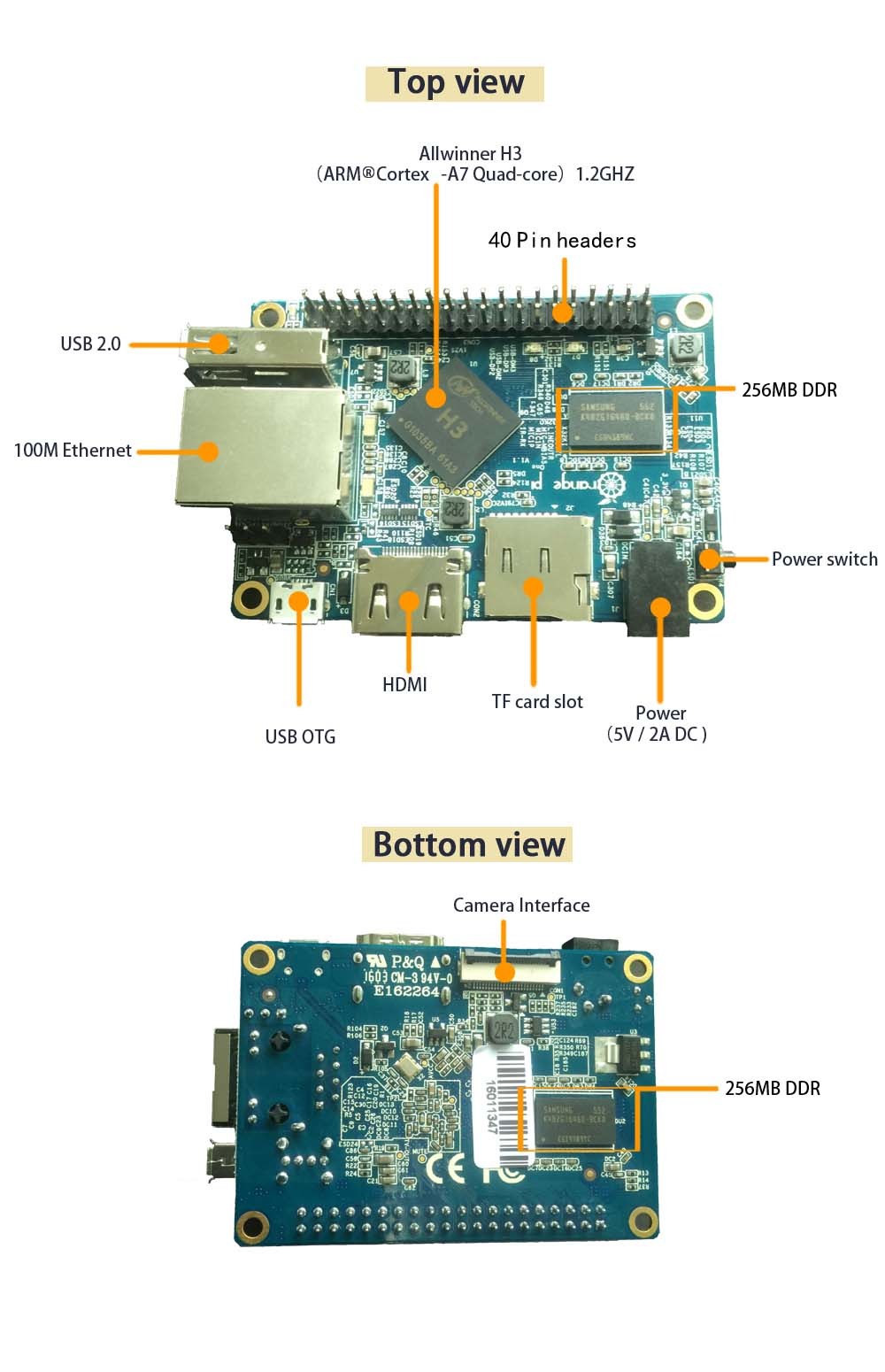

Therefore I decided to keep my i7 laptop as the testing machine, and to torment an Orange PI One as the server. I assumed that I would hit the limit of its performance sooner than the limit of the network. The Orange PI One is connected to the router via UTP at 100 Mbps.
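The assumption about the network can be checked with rough arithmetic. The sketch below estimates how many responses per second a 100 Mbps link could carry. The response sizes of 135 and 162 bytes are derived from the header dumps shown later in the article plus an 18-byte body. The helper name `ceilingRPS` is made up for this example, and the estimate ignores TCP/IP and Ethernet framing and the request traffic, so the real ceiling is lower.

```go
package main

import "fmt"

// ceilingRPS returns the number of responses of the given size that fit into
// one second of link capacity. Protocol overhead is deliberately ignored.
func ceilingRPS(linkMbps float64, bytesPerResponse int) float64 {
	bytesPerSecond := linkMbps * 1e6 / 8
	return bytesPerSecond / float64(bytesPerResponse)
}

func main() {
	const linkMbps = 100 // link speed stated in the text
	fmt.Printf("Go response (135 B):     about %.0f responses/sec\n", ceilingRPS(linkMbps, 135))
	fmt.Printf("cowboy response (162 B): about %.0f responses/sec\n", ceilingRPS(linkMbps, 162))
}
```

Even with generous allowance for protocol overhead, both ceilings are several times higher than the throughput measured on the Orange PI One, which is consistent with the board being the bottleneck rather than the network.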

The manufacturer’s website states that the Orange PI One has a quad-core Cortex-A7 processor running at 1200 MHz. But due to developer mistakes, the whole system suffers from kernel overheating alerts, so I clamped the processor frequency down to 600 MHz. That makes it even more interesting. The system works stably, but even when idle its load average is 2.00, 2.01, 2.05 (which is strange). Ubuntu 14 is installed. There is 512 MB of memory, so, just in case, I set up swap in a file on a flash drive.

To transfer the Go and Elixir projects to the Orange PI, I created two projects on GitHub:

https://github.com/UA3MQJ/go-small-http

https://github.com/UA3MQJ/elx-small-http-cowboy

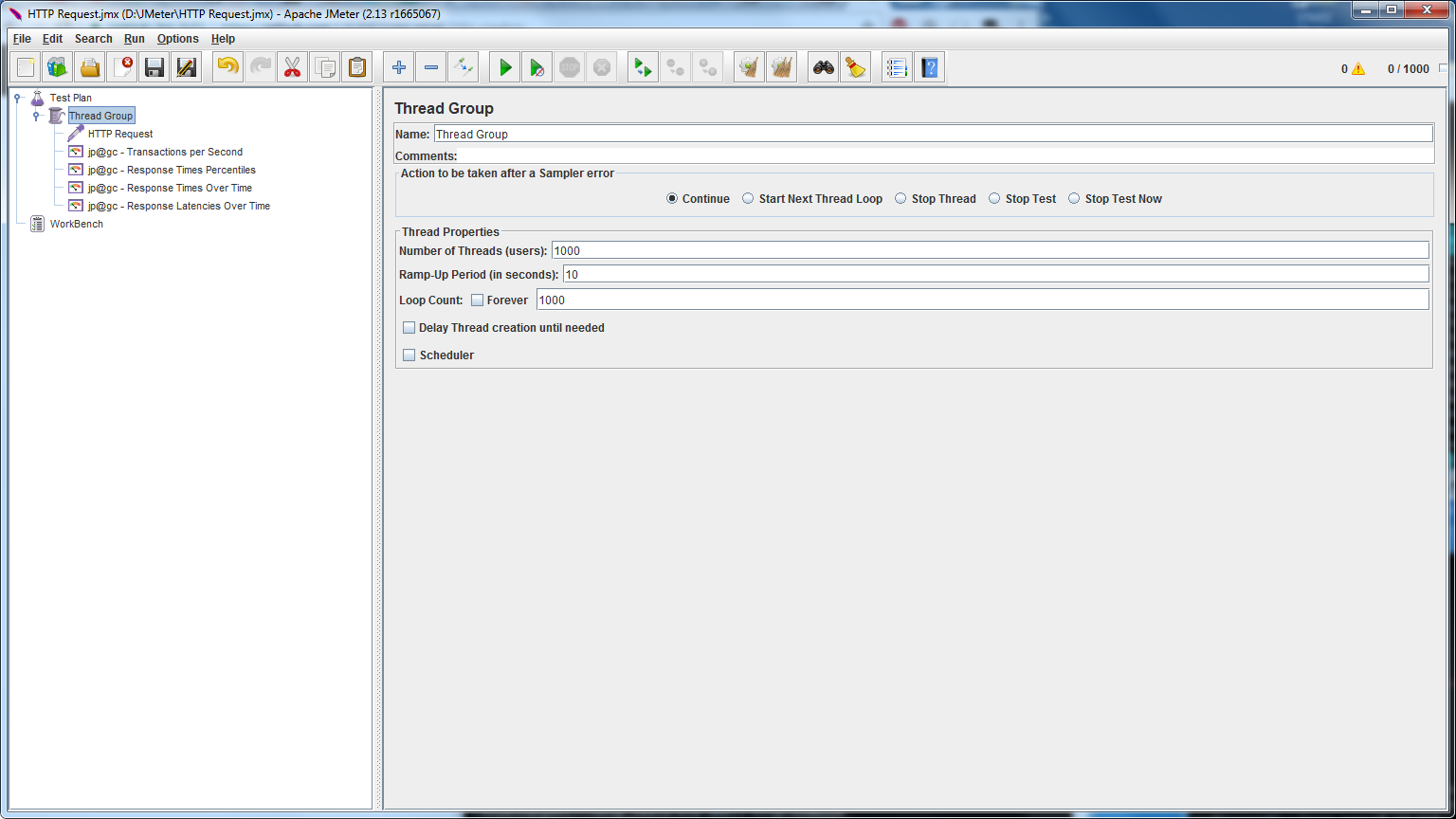

Go installed on the Orange PI without problems. Erlang / Elixir took a little work, but that work had been done long ago. Building and launching the projects went without problems. For testing I took a tool that runs under Windows: JMeter.

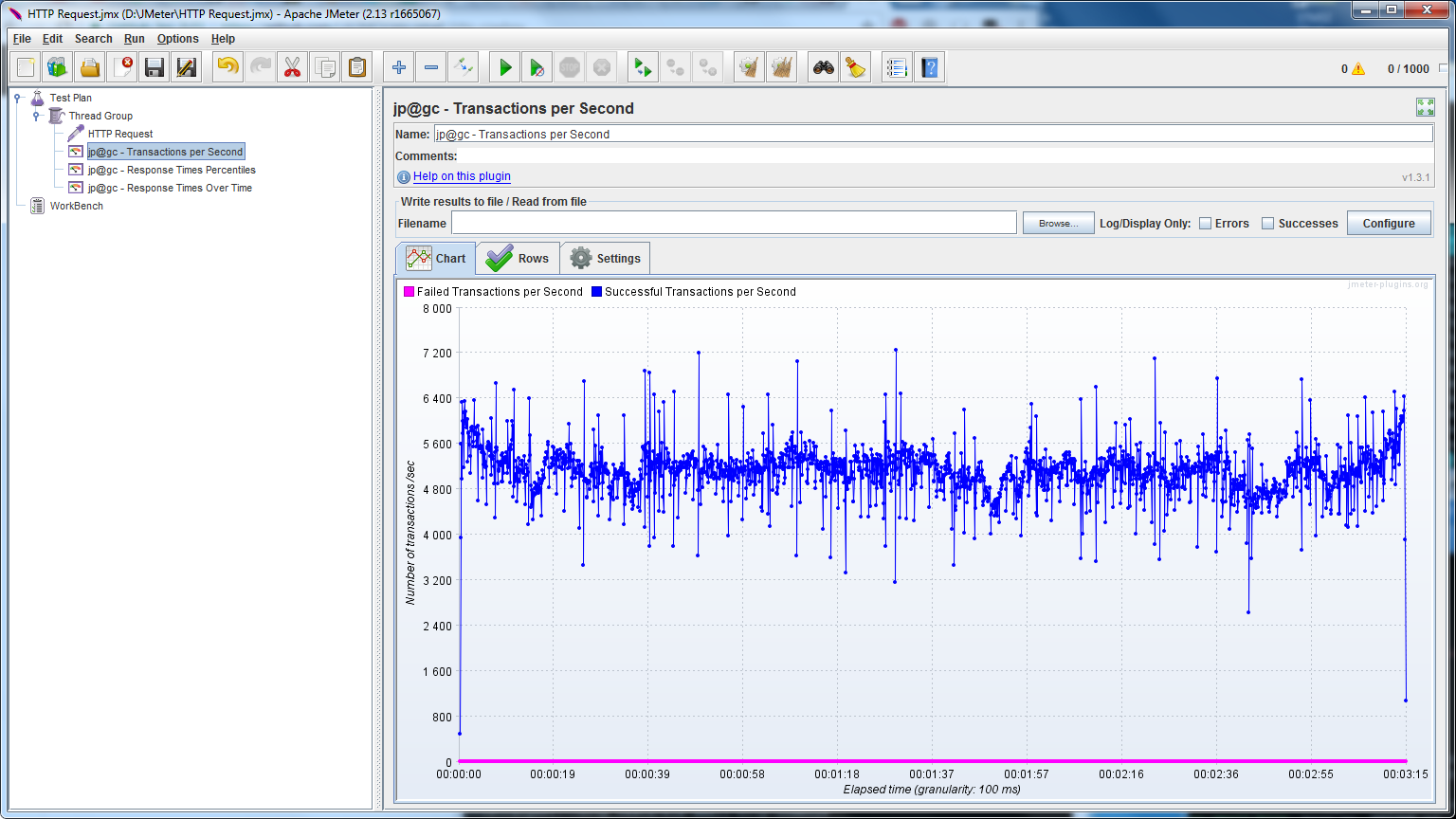

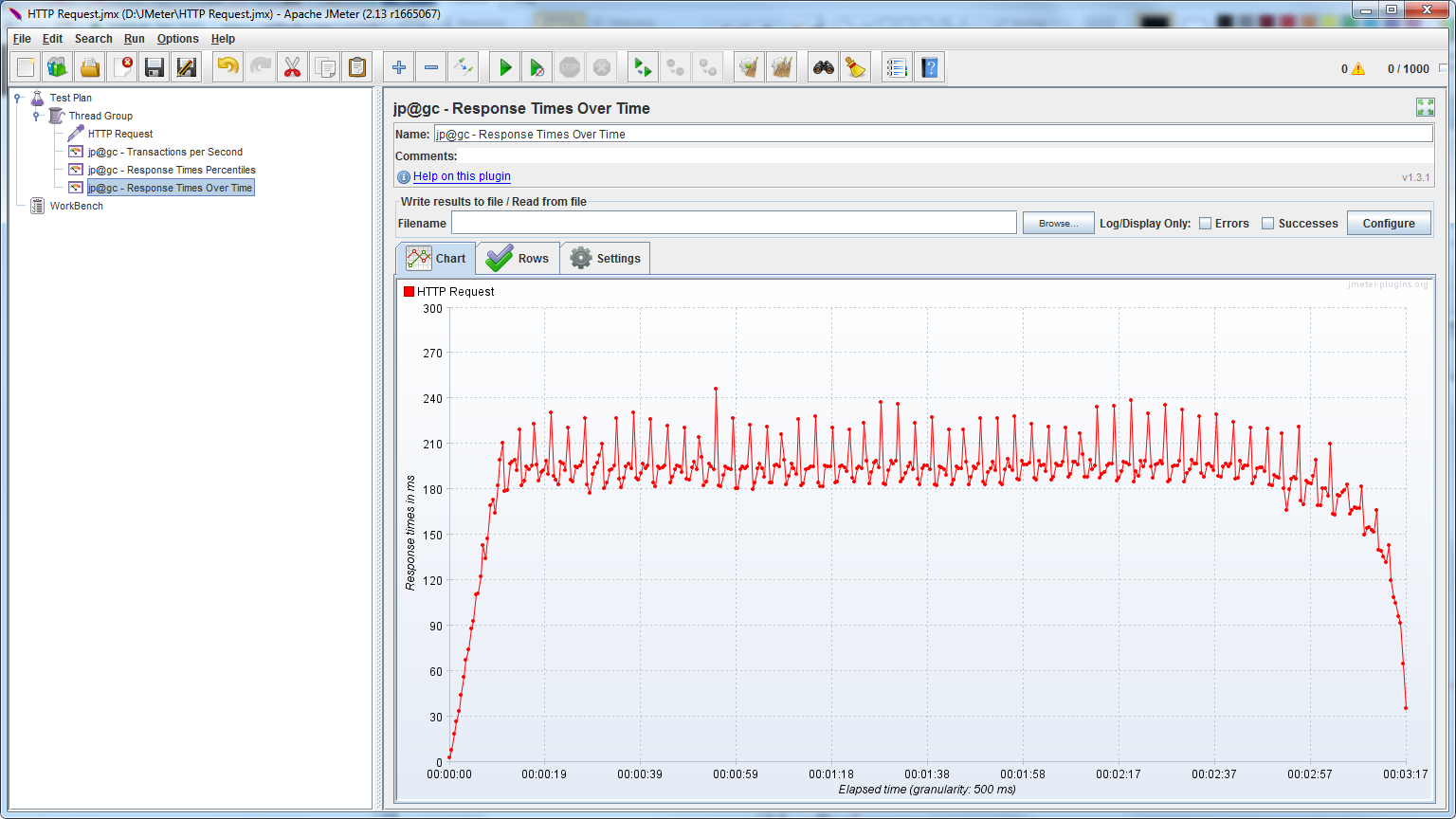

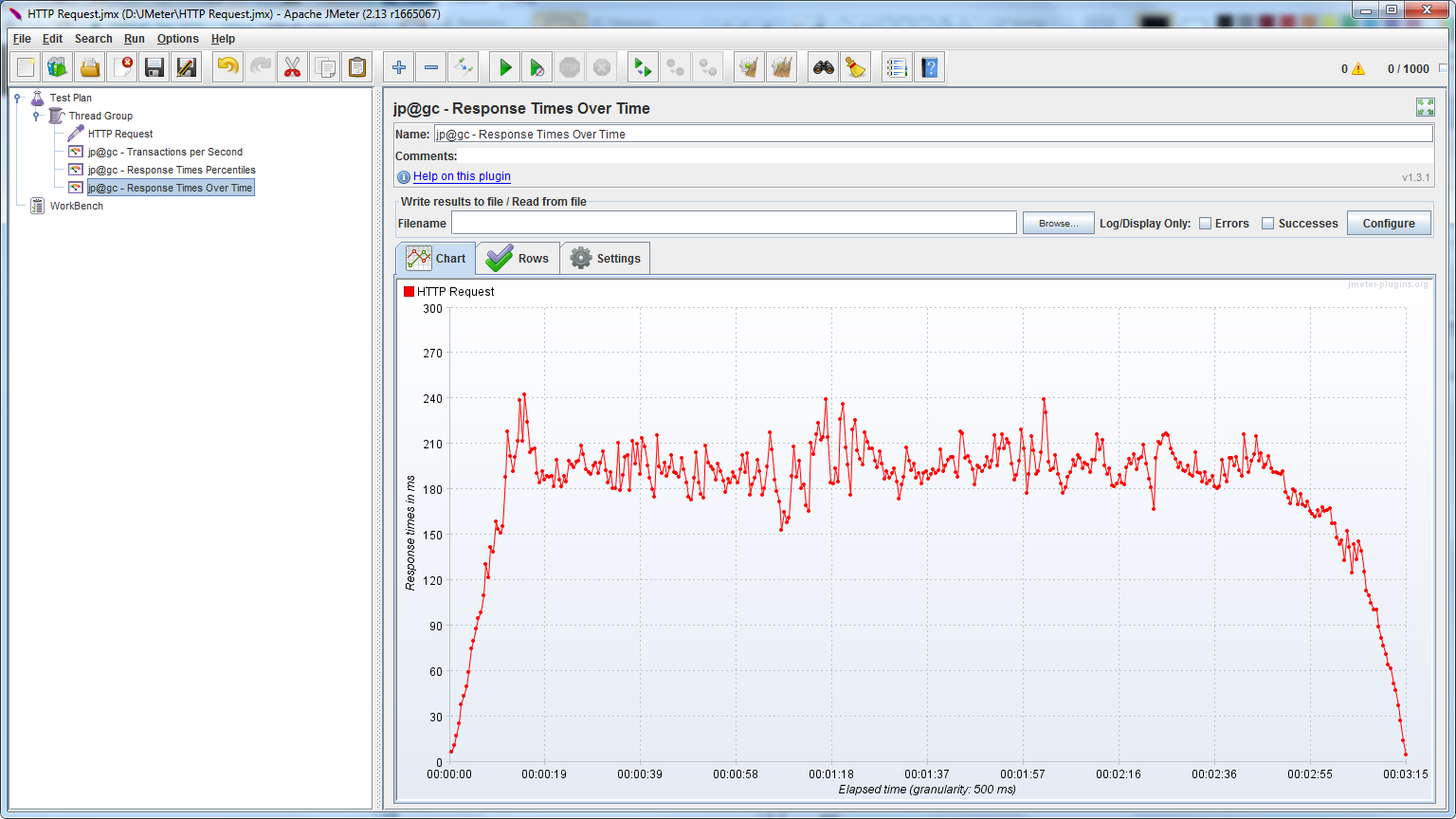

The first tests with the following parameters:

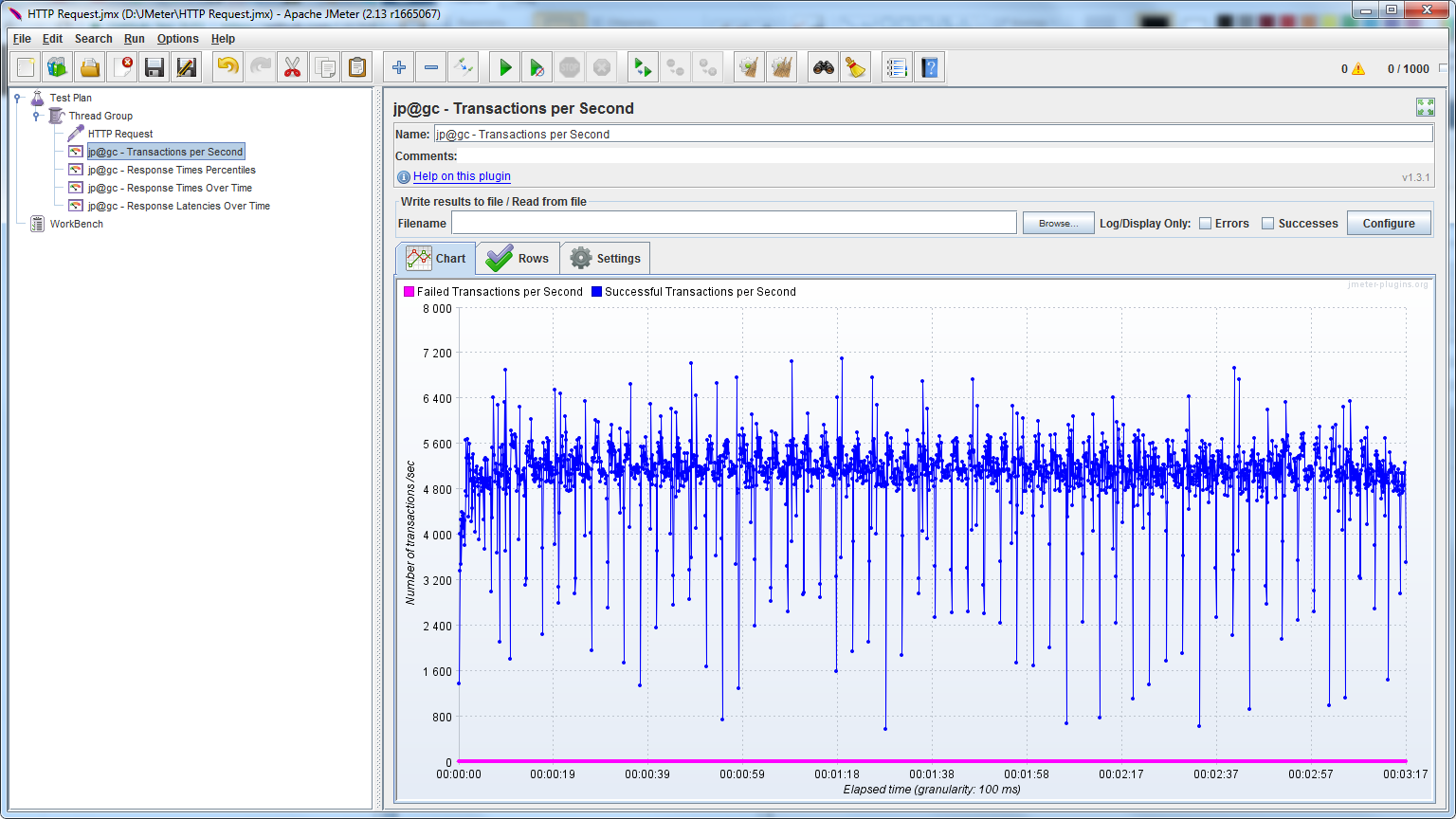

Showed that the forces ... are equal!

RPS - Go:

RPS - Elixir:

Resp Time - Go:

Resp Time - Elixir:

Interesting observations

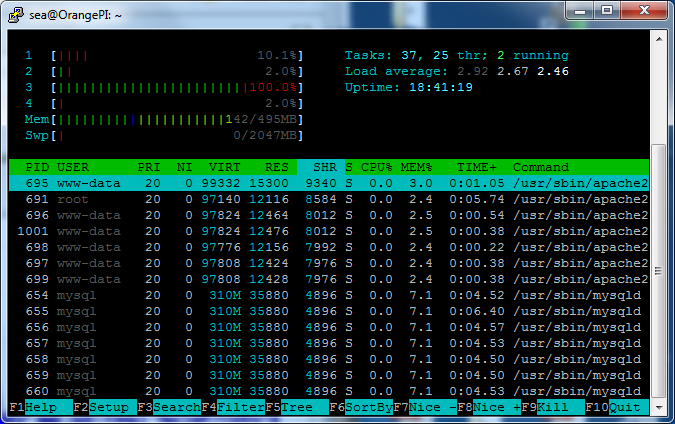

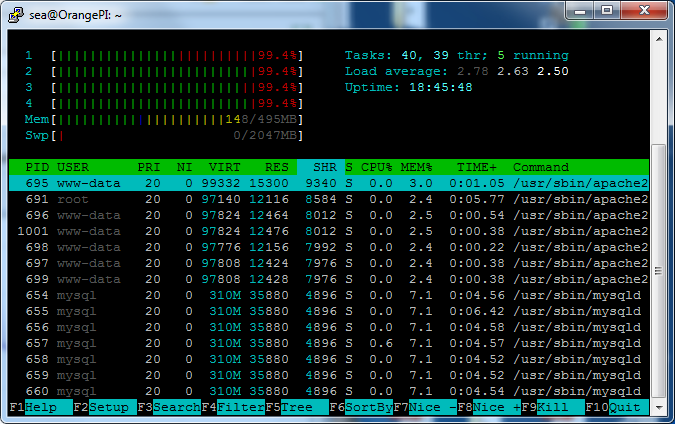

Go always used a single core:

While Elixir used all of them at once:

In this spherical test, Elixir turned out to be the winner.

Go:

    sea@sea:~$ wrk -t10 -c100 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      10 threads and 100 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    19.04ms    7.70ms  81.05ms   70.53%
        Req/Sec   531.09     78.11   828.00     77.10%
      52940 requests in 10.02s, 6.82MB read
    Requests/sec:   5282.81
    Transfer/sec:    696.46KB

Elixir cowboy:

    sea@sea:~$ wrk -t10 -c100 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      10 threads and 100 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    14.27ms   10.54ms 153.60ms   95.81%
        Req/Sec   753.20    103.47     1.09k    80.40%
      74574 requests in 10.04s, 11.53MB read
    Requests/sec:   7429.95
    Transfer/sec:      1.15MB

Go:

    sea@sea:~$ wrk -t100 -c100 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 100 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    60.14ms  137.57ms   1.52s    94.28%
        Req/Sec    38.45     20.62   130.00     60.30%
      34384 requests in 10.10s, 4.43MB read
    Requests/sec:   3404.19
    Transfer/sec:    448.79KB

Elixir cowboy:

    sea@sea:~$ wrk -t100 -c100 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 100 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    13.32ms    5.25ms  90.37ms   73.31%
        Req/Sec    75.51     22.04   191.00     67.49%
      75878 requests in 10.10s, 11.74MB read
    Requests/sec:   7512.75
    Transfer/sec:      1.16MB

Go:

    sea@sea:~$ wrk -t100 -c500 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 500 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    93.81ms   18.63ms 328.78ms   84.98%
        Req/Sec    53.13     11.12   101.00     77.60%
      52819 requests in 10.10s, 6.80MB read
    Requests/sec:   5232.01
    Transfer/sec:    689.77KB

Elixir cowboy:

    sea@sea:~$ wrk -t100 -c500 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 500 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    93.24ms   96.80ms   1.26s    94.47%
        Req/Sec    62.95     23.33   292.00     79.87%
      61646 requests in 10.10s, 9.53MB read
    Requests/sec:   6106.38
    Transfer/sec:      0.94MB

But what if you start the Erlang server using HiPE?

First I had to find out how to do it. How to do it in Erlang is clear, of course, but for Elixir I had to google it. In addition, those who have tested HiPE write that it often turns out even slower than the standard mode. This is because dependencies may end up compiled without HiPE (they need to be built in the same mode). You also need to watch the system's context-switch counter: if there are many switches, performance suffers and the results get worse.

Compile the project dependencies with the HiPE compiler:

    $ ERL_COMPILER_OPTIONS="[native,{hipe, [verbose, o3]}]" mix deps.compile --force

Then build the project itself:

    $ ERL_COMPILER_OPTIONS="[native,{hipe, [verbose, o3]}]" mix compile

Tests have shown that HiPE does not improve the results; on the contrary, it makes them worse.

Elixir cowboy:

    sea@sea:~$ wrk -t100 -c500 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 500 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    93.24ms   96.80ms   1.26s    94.47%
        Req/Sec    62.95     23.33   292.00     79.87%
      61646 requests in 10.10s, 9.53MB read
    Requests/sec:   6106.38
    Transfer/sec:      0.94MB

Elixir cowboy (HiPE):

    sea@sea:~$ wrk -t100 -c500 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 500 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency   111.84ms  160.53ms   1.89s    95.42%
        Req/Sec    59.19     29.68   383.00     81.63%
      56425 requests in 10.10s, 8.72MB read
      Socket errors: connect 0, read 0, write 0, timeout 34
    Requests/sec:   5587.39
    Transfer/sec:      0.86MB

Go Strikes Back

Why did Go run on a single core? Maybe the Go package installed by default is an old version, from the time when Go still ran on a single core by default? And so it is!

    sea@OrangePI:~$ go version
    go version go1.2.1 linux/arm

Time to update and repeat! A prebuilt Go 1.7.3 for armv7 was found at https://github.com/hypriot/golang-armbuilds/releases
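The single-core behaviour matches how the Go scheduler was configured in old releases: before Go 1.5 the default value of GOMAXPROCS was 1, and since Go 1.5 it defaults to the number of available CPUs. A small sketch for checking what a given binary actually gets (the helper name `schedulerInfo` is made up for this example):

```go
package main

import (
	"fmt"
	"runtime"
)

// schedulerInfo reports the Go version, the number of logical CPUs and the
// current GOMAXPROCS setting. Calling GOMAXPROCS with 0 only queries it.
func schedulerInfo() (version string, cpus, maxProcs int) {
	return runtime.Version(), runtime.NumCPU(), runtime.GOMAXPROCS(0)
}

func main() {
	v, cpus, procs := schedulerInfo()
	fmt.Printf("%s: %d CPUs, GOMAXPROCS=%d\n", v, cpus, procs)
	if procs < cpus {
		// On toolchains older than 1.5 this was the default situation.
		fmt.Println("Go code is limited to fewer cores than the machine has")
	}
}
```

On an old toolchain, an alternative to upgrading would have been to call `runtime.GOMAXPROCS(runtime.NumCPU())` at startup or to set the `GOMAXPROCS` environment variable; the article takes the upgrade route instead.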

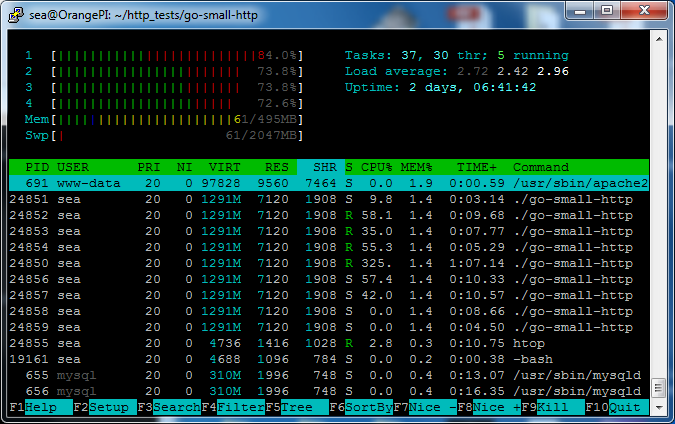

The Go server compiled with this version now loads all 4 cores:

Elixir cowboy:

    sea@sea:~$ wrk -t100 -c500 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 500 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    93.24ms   96.80ms   1.26s    94.47%
        Req/Sec    62.95     23.33   292.00     79.87%
      61646 requests in 10.10s, 9.53MB read
    Requests/sec:   6106.38
    Transfer/sec:      0.94MB

Go:

    sea@sea:~/tender_pro_bots$ wrk -t100 -c500 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 500 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    70.17ms   57.84ms 754.39ms   90.61%
        Req/Sec    84.09     31.01   151.00     73.20%
      78787 requests in 10.10s, 10.14MB read
    Requests/sec:   7800.43
    Transfer/sec:      1.00MB

Go breaks free!

Go and fasthttp

After the recommendation to update Go, I was also advised to try fasthttp and gccgo. Let's start with the first.

https://github.com/UA3MQJ/go-small-fasthttp

Let's look at the load. We see that all 4 cores are loaded, but not to 100%.

And now wrk:

Go fasthttp:

    sea@sea:~/tender_pro_bots$ wrk -t10 -c100 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      10 threads and 100 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    43.56ms   85.95ms 738.51ms   89.71%
        Req/Sec   676.18    351.12     1.17k    70.80%
      67045 requests in 10.04s, 9.78MB read
    Requests/sec:   6678.71
    Transfer/sec:      0.97MB

Elixir cowboy:

    sea@sea:~$ wrk -t10 -c100 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      10 threads and 100 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    14.27ms   10.54ms 153.60ms   95.81%
        Req/Sec   753.20    103.47     1.09k    80.40%
      74574 requests in 10.04s, 11.53MB read
    Requests/sec:   7429.95
    Transfer/sec:      1.15MB

Go fasthttp:

    sea@sea:~/tender_pro_bots$ wrk -t100 -c100 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 100 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency     8.95ms    3.08ms  42.23ms   75.69%
        Req/Sec   112.61     16.65   320.00     70.18%
      112561 requests in 10.10s, 16.42MB read
    Requests/sec:  11144.39
    Transfer/sec:      1.63MB

Elixir cowboy:

    sea@sea:~$ wrk -t100 -c100 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 100 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    13.32ms    5.25ms  90.37ms   73.31%
        Req/Sec    75.51     22.04   191.00     67.49%
      75878 requests in 10.10s, 11.74MB read
    Requests/sec:   7512.75
    Transfer/sec:      1.16MB

Go fasthttp:

    sea@sea:~/tender_pro_bots$ wrk -t100 -c500 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 500 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    46.44ms   10.69ms 327.50ms   93.21%
        Req/Sec   107.71     15.10   170.00     82.06%
      107349 requests in 10.10s, 15.66MB read
    Requests/sec:  10627.97
    Transfer/sec:      1.55MB

Elixir cowboy:

    sea@sea:~$ wrk -t100 -c500 -d10s http://192.168.1.16:4000/
    Running 10s test @ http://192.168.1.16:4000/
      100 threads and 500 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    93.24ms   96.80ms   1.26s    94.47%
        Req/Sec    62.95     23.33   292.00     79.87%
      61646 requests in 10.10s, 9.53MB read
    Requests/sec:   6106.38
    Transfer/sec:      0.94MB

Go with fasthttp turned out to be faster than plain Go and faster than Elixir cowboy.
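To see how large the gap actually is, the Requests/sec figures from the three fasthttp and cowboy pairs can be turned into ratios. This is a small sketch; the `run` type and the `speedup` helper are names invented for the example, and the numbers are copied from the wrk reports in this section.

```go
package main

import "fmt"

// run pairs the Requests/sec figures of one wrk configuration.
type run struct {
	Config   string
	Fasthttp float64
	Cowboy   float64
}

// speedup returns how many times higher a is than b.
func speedup(a, b float64) float64 {
	return a / b
}

func main() {
	runs := []run{
		{"-t10 -c100", 6678.71, 7429.95},
		{"-t100 -c100", 11144.39, 7512.75},
		{"-t100 -c500", 10627.97, 6106.38},
	}
	for _, r := range runs {
		fmt.Printf("%-12s fasthttp/cowboy = %.2fx\n", r.Config, speedup(r.Fasthttp, r.Cowboy))
	}
}
```

Note that in the first configuration (10 threads, 100 connections) the ratio is below 1, meaning cowboy handled more requests per second there; fasthttp pulls ahead in the two 100-thread runs.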

About the accuracy of measurements

If you look more closely, you will notice that cowboy and Go respond with a different number of bytes. This is due to the different HTTP headers they send.

Go's response:

    HTTP/1.1 200 OK
    Date: Thu, 30 Mar 2017 14:37:08 GMT
    Content-Length: 18
    Content-Type: text/plain; charset=utf-8

Cowboy's response:

    HTTP/1.1 200 OK
    server: Cowboy
    date: Thu, 30 Mar 2017 14:38:17 GMT
    content-length: 18
    cache-control: max-age=0, private, must-revalidate

As you can see, cowboy also sends an extra "server: Cowboy" line, which inevitably increases the number of bytes transferred in cowboy's case: more data is sent per response.
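The size difference can be computed directly from the two header dumps. The sketch below counts the bytes of each response as it would appear on the wire, assuming every header line ends in CRLF, an empty line separates headers from the body, and the body is 18 bytes as stated by Content-Length. The function and variable names are made up for this example.

```go
package main

import (
	"fmt"
	"strings"
)

// Header lines copied from the two responses shown in the text.
var (
	goHeaders = []string{
		"Date: Thu, 30 Mar 2017 14:37:08 GMT",
		"Content-Length: 18",
		"Content-Type: text/plain; charset=utf-8",
	}
	cowboyHeaders = []string{
		"server: Cowboy",
		"date: Thu, 30 Mar 2017 14:38:17 GMT",
		"content-length: 18",
		"cache-control: max-age=0, private, must-revalidate",
	}
)

// responseSize returns the total size in bytes of an HTTP/1.1 response with
// the given status line, header lines and body length.
func responseSize(statusLine string, headers []string, bodyLen int) int {
	var b strings.Builder
	b.WriteString(statusLine + "\r\n")
	for _, h := range headers {
		b.WriteString(h + "\r\n")
	}
	b.WriteString("\r\n") // empty line that ends the header section
	return b.Len() + bodyLen
}

func main() {
	const status = "HTTP/1.1 200 OK"
	goSize := responseSize(status, goHeaders, 18)
	cowboySize := responseSize(status, cowboyHeaders, 18)
	fmt.Printf("Go: %d bytes, cowboy: %d bytes, difference: %d bytes\n",
		goSize, cowboySize, cowboySize-goSize)
}
```

These sizes agree with the wrk reports: dividing the "MB read" figure by the number of requests gives roughly the same number of bytes per response for each server. So at equal Requests/sec, cowboy's Transfer/sec figure will always be higher.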

UPDATE. I forgot to enable the BEAM option for working with epoll

Thanks to nwalker for reminding me about the +K flag. We specify it at startup:

    sea@OrangePI:~/http_tests/elx-small-http-cowboy$ iex --erl '+K true' -S mix
    Erlang/OTP 19 [erts-8.1] [source] [smp:4:4] [async-threads:10] [hipe] [kernel-poll:true]

This time I will apply the load not for 10 seconds but for a minute.

Elixir cowboy:

    sea@sea:~/tender_pro_bots$ wrk -t100 -c500 -d60s http://192.168.1.16:4000/
    Running 1m test @ http://192.168.1.16:4000/
      100 threads and 500 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    78.63ms   38.59ms   1.00s    90.17%
        Req/Sec    65.08     14.19   158.00     54.94%
      389764 requests in 1.00m, 60.28MB read
    Requests/sec:   6485.27
    Transfer/sec:      1.00MB

Elixir cowboy with epoll:

    sea@sea:~/tender_pro_bots$ wrk -t100 -c500 -d60s http://192.168.1.16:4000/
    Running 1m test @ http://192.168.1.16:4000/
      100 threads and 500 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    90.25ms   78.45ms   1.96s    95.36%
        Req/Sec    59.91     19.71   370.00     64.78%
      356572 requests in 1.00m, 55.15MB read
      Socket errors: connect 0, read 0, write 0, timeout 21
    Requests/sec:   5932.94
    Transfer/sec:      0.92MB

But on the Orange PI, the result with epoll turned on was worse.

Findings

Everyone will draw their own conclusions. Go people will be happy for Go, and Erlang and Elixir people for their product. Everyone will stay with their own. An Erlang supporter will see that Go's speed is not an order of magnitude higher, not even 2 times higher (slightly less than that), and would not give up all of Erlang's capabilities even for a 10-fold increase. At the same time, a Go person would hardly become interested in Erlang after seeing the lower speed and hearing about all the possible difficulties of learning functional programming.

In the modern world, programmer time is expensive, sometimes even more expensive than hardware. What needs to be tested is not only "spherical" RPS on a "spherical" task, but also development time and the complexity of further development and maintenance: in other words, economic feasibility. But sometimes you just want to take all that horsepower and all those megahertz and arrange a few races in great company! Have a great ride.


Source: https://habr.com/ru/post/324818/
