Java and Docker: what everyone should know

Many developers know, or should know, that Java processes running inside Linux containers (docker, rkt, runC, lxcfs, and others) do not behave as expected when JVM ergonomics is allowed to set the garbage collector and compiler parameters and the heap size on its own. When a Java application is started without options that set these parameters explicitly, for example with
`java -jar myapplication-fat.jar`, the JVM configures some parameters on its own to try to get the best performance out of the application.

In this article we look at what a developer needs to know before packaging Java applications into Linux containers.

We tend to think of containers as virtual machines in which we can define the number of virtual CPUs and the amount of memory. In reality, containers are more like an isolation mechanism: the resources (CPU, memory, file system, network, and others) allocated to a process are isolated from other processes. This isolation is provided by the Linux kernel's cgroups mechanism.

Note that some applications that rely on information obtained from the runtime environment were created before cgroups existed. Utilities such as `top`, `free`, `ps`, and even the JVM are not optimized to run inside containers, which are, in fact, highly restricted Linux processes. Let's see what happens when programs ignore the peculiarities of running in containers, and how to avoid the resulting errors.

Problem statement

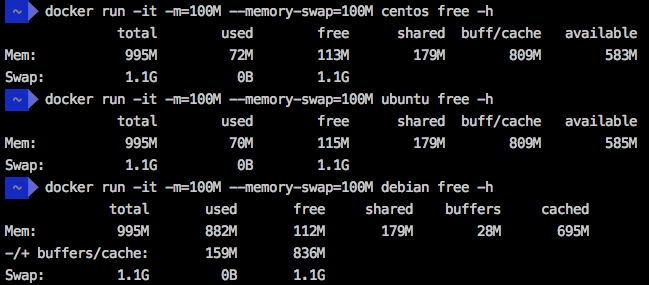

For demonstration purposes, I created a Docker daemon in a virtual machine with 1 GB of RAM using the following command:


docker-machine create -d virtualbox –virtualbox-memory '1024' docker1024 Next, I executed the

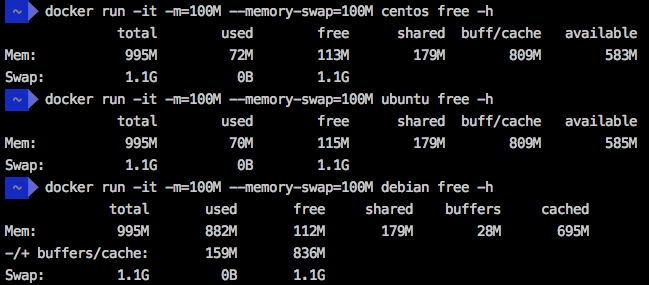

free -h command in three different Linux distributions running in a container, using the 100 MB limit, as specified by the -m and --memory-swap . As a result, they all showed a total memory capacity of 995 MB.

Results from the free -h command

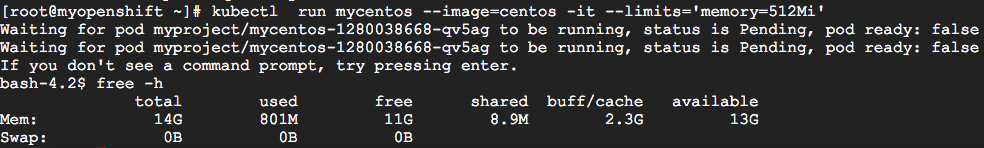

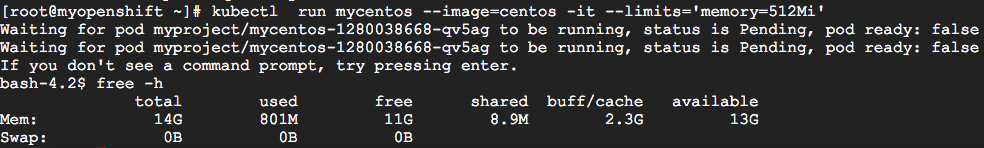
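To see both views of memory side by side from inside a container, here is a minimal Java sketch (Java 11+). It compares `MemTotal` from `/proc/meminfo`, which is the figure `free` reports and which reflects the host or VM, with the limit that Docker or Kubernetes writes into the cgroup filesystem. The file locations are assumptions that depend on the cgroup version (`/sys/fs/cgroup/memory.max` on cgroup v2, `/sys/fs/cgroup/memory/memory.limit_in_bytes` on cgroup v1), and the class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.Optional;

public class MemoryViews {
    static final long MIB = 1024L * 1024L;

    // What free(1) shows: MemTotal from /proc/meminfo. Inside a plain container
    // this is the host's (here: the VM's) value, not the container's limit.
    static Optional<Long> memTotalBytes(List<String> meminfoLines) {
        for (String line : meminfoLines) {
            if (line.startsWith("MemTotal:")) {
                // Format: "MemTotal:        1019068 kB"
                String[] parts = line.trim().split("\\s+");
                return Optional.of(Long.parseLong(parts[1]) * 1024L);
            }
        }
        return Optional.empty();
    }

    // The limit that is actually enforced, as read from the cgroup filesystem.
    // cgroup v2 writes "max" when there is no limit; cgroup v1 writes a very large number.
    static Optional<Long> cgroupLimitBytes(String raw) {
        String value = raw.trim();
        if (value.isEmpty() || value.equals("max")) {
            return Optional.empty();
        }
        return Optional.of(Long.parseLong(value));
    }

    // Returns the contents of the first readable file among the candidates.
    static Optional<String> readFirstReadable(String... candidates) {
        for (String candidate : candidates) {
            Path path = Path.of(candidate);
            if (Files.isReadable(path)) {
                try {
                    return Optional.of(Files.readString(path));
                } catch (IOException e) {
                    // fall through and try the next candidate
                }
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) throws IOException {
        Path meminfo = Path.of("/proc/meminfo");
        if (Files.isReadable(meminfo)) {
            memTotalBytes(Files.readAllLines(meminfo)).ifPresent(
                b -> System.out.println("MemTotal (what free -h reports): " + b / MIB + " MiB"));
        }
        readFirstReadable("/sys/fs/cgroup/memory.max",                   // cgroup v2 (assumed path)
                          "/sys/fs/cgroup/memory/memory.limit_in_bytes") // cgroup v1 (assumed path)
            .flatMap(MemoryViews::cgroupLimitBytes)
            .ifPresentOrElse(
                b -> System.out.println("cgroup memory limit: " + b / MIB + " MiB"),
                () -> System.out.println("no cgroup memory limit found"));
    }
}
```

In a container started with `-m 100m`, one would expect `MemTotal` to keep showing the VM's memory while the cgroup limit shows 100 MiB. On a cgroup v1 host without a limit, the second line prints a very large number instead.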

The same thing happens in a Kubernetes/OpenShift cluster. I ran a Kubernetes pod with a memory limit using the following command:

```
kubectl run mycentos --image=centos -it --limits='memory=512Mi'
```

In this case the cluster had 15 GB of memory, and the system inside the container reported a total of 14 GB, even though the pod was limited to 512 MiB.

Checking memory in a cluster with 15 GB of memory

To understand why this happens, I recommend reading this material on how memory works in Linux containers.

It should be understood that the Docker keys (

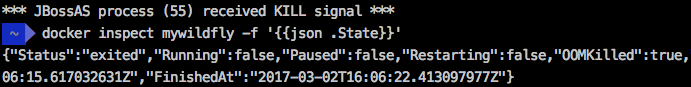

-m, --memory and --memory-swap ), and the Kubernetes key ( --limits) tell the Linux kernel to stop the process if it tries to exceed the specified limit. However, the JVM does not know anything about this, and when it goes beyond these limitations, nothing good can be expected.In order to reproduce the situation in which the system stops the process after exceeding the specified memory limit, you can run the WildFly Application Server in a container with a memory limit of 50 MB using the following command:

```
docker run -it --name mywildfly -m=50m jboss/wildfly
```

While the container is running, you can run `docker stats` to check its limits.

Container statistics

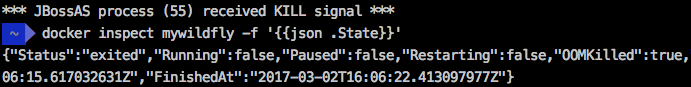

After a few seconds, the WildFly container is killed and the following message appears:

```
*** JBossAS process (55) received KILL signal ***
```

Now run this command:

```
docker inspect mywildfly -f '{{json .State}}'
```

It reports that the container was stopped because of an OOM (Out Of Memory) condition: note that the `OOMKilled` field of the container state is `true`.
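To check for this condition from a script or tool rather than by eye, a minimal Java sketch could look for the `OOMKilled` and `ExitCode` fields in the JSON printed by `docker inspect -f '{{json .State}}'`. It uses regular expressions instead of a JSON library to stay self-contained, which is fine for a sketch but fragile in general. The class name and the sample JSON string are hypothetical, not output captured from the run above. The signal decoding relies on the shell convention that an exit status above 128 means the process was terminated by signal number (status minus 128).

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class OomCheck {
    private static final Pattern OOM = Pattern.compile("\"OOMKilled\"\\s*:\\s*(true|false)");
    private static final Pattern EXIT = Pattern.compile("\"ExitCode\"\\s*:\\s*(-?\\d+)");

    // True if the container state says the kernel OOM killer stopped it.
    static boolean oomKilled(String stateJson) {
        Matcher m = OOM.matcher(stateJson);
        return m.find() && m.group(1).equals("true");
    }

    static int exitCode(String stateJson) {
        Matcher m = EXIT.matcher(stateJson);
        if (!m.find()) {
            throw new IllegalArgumentException("no ExitCode field in: " + stateJson);
        }
        return Integer.parseInt(m.group(1));
    }

    // Statuses above 128 conventionally mean "terminated by signal (status - 128)"; 137 = SIGKILL (9).
    static String describe(String stateJson) {
        int code = exitCode(stateJson);
        String how = code > 128 ? "killed by signal " + (code - 128) : "exited with code " + code;
        return (oomKilled(stateJson) ? "OOM-killed, " : "") + how;
    }

    public static void main(String[] args) {
        // Hypothetical, abbreviated state JSON for illustration only.
        String state = "{\"Status\":\"exited\",\"Running\":false,\"OOMKilled\":true,\"ExitCode\":137}";
        System.out.println(describe(state)); // prints "OOM-killed, killed by signal 9"
    }
}
```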

Finding out why the container was stopped

Impact of memory misuse on Java applications

On the Docker daemon running on a machine with 1 GB of memory (created earlier with `docker-machine create -d virtualbox --virtualbox-memory '1024' docker1024`), but with the container's memory limited to 150 MB, which seems sufficient for a Spring Boot application, the Java application is started with the `-XX:+PrintFlagsFinal` and `-XX:+PrintGCDetails` options specified in the Dockerfile. These options let us read the initial parameters chosen by JVM ergonomics and see details of garbage collection (GC) runs.

Let's try it:

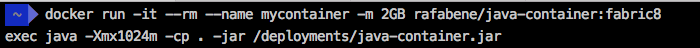

```
$ docker run -it --rm --name mycontainer150 -p 8080:8080 -m 150M rafabene/java-container:openjdk
```

I prepared an endpoint at `/api/memory/` that loads String objects into JVM memory to simulate a memory-intensive operation. Call it:

```
$ curl http://`docker-machine ip docker1024`:8080/api/memory
```

The endpoint responds with something like this:

```
Allocated more than 80% (219.8 MiB) of the max allowed JVM memory size (241.7 MiB)
```

This raises at least two questions:

- Why is the maximum allowed JVM memory size 241.7 MiB?

- If the container memory limit is 150 MB, why did it allow Java to allocate almost 220 MB?
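The article does not show the code behind `/api/memory/`. As a rough, hypothetical sketch of what such an endpoint might do, the following Java keeps allocating Strings until the used heap passes a given fraction of the heap ceiling, then prints a message in the same format as the response above. The class name, the 64K-character chunk size, and the use of `Runtime.maxMemory()` as the "max allowed JVM memory" are all assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

public class MemoryHog {
    static final double MIB = 1024.0 * 1024.0;

    static long usedHeap() {
        Runtime rt = Runtime.getRuntime();
        return rt.totalMemory() - rt.freeMemory();
    }

    // Allocates 64K-character Strings, keeping them reachable through `sink` so the GC
    // cannot reclaim them, until used heap reaches `fraction` of Runtime.maxMemory().
    static String fill(double fraction, List<String> sink) {
        long max = Runtime.getRuntime().maxMemory(); // heap ceiling from ergonomics or -Xmx
        while (usedHeap() < max * fraction) {
            sink.add(new String(new char[64 * 1024]));
        }
        return String.format(Locale.ROOT,
                "Allocated more than %d%% (%.1f MiB) of the max allowed JVM memory size (%.1f MiB)",
                Math.round(fraction * 100), usedHeap() / MIB, max / MIB);
    }

    public static void main(String[] args) {
        List<String> sink = new ArrayList<>();
        System.out.println(fill(0.8, sink));
        System.out.println("Strings held: " + sink.size());
    }
}
```

If the heap ceiling is larger than what the container may actually use, a loop like this can push the process past the cgroup limit, because the JVM believes it still has room.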

In order to deal with this, you first need to remember what is said about the maximum heap size (maximum heap size) in the JVM ergonomics documentation . It says that the maximum heap size is 1/4 the size of physical memory. Since the JVM does not know what is being executed in the container, the maximum heap size will be close to 260 MB. Given that we added the

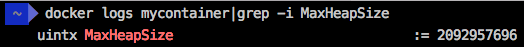

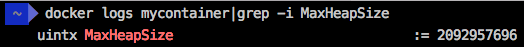

-XX:+PrintFlagsFinal when initializing the container, you can check this value: $ docker logs mycontainer150|grep -i MaxHeapSize uintx MaxHeapSize := 262144000 {product} Now we need to understand that when the -

m 150M parameter is used in the Docker command line, the Docker daemon will limit the size of the memory and swap file to 150 megabytes. As a result, the process will be able to allocate 300 megabytes, which explains why our process did not receive the KILL signal from the Linux kernel.You can read about the features of various combinations of memory limit (-

--memory ) and swap file (- --swap ) --swap in the Docker command line here .Increased memory as an example of an incorrect solution

Developers who do not understand what is going on tend to assume that the problem is that the environment does not give the JVM enough memory. A common fix is therefore to increase the available memory, but this approach actually makes things worse.

Suppose we give the daemon 8 GB of memory instead of 1 GB. The following command creates it:

```
docker-machine create -d virtualbox --virtualbox-memory '8192' docker8192
```

Following the same reasoning, we relax the container's limit, giving it 800 MB of memory instead of 150 MB:

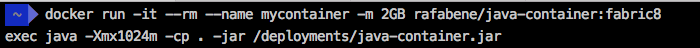

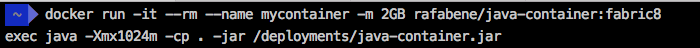

```
$ docker run -it --name mycontainer -p 8080:8080 -m 800M rafabene/java-container:openjdk
```

Note that under these conditions the following command cannot even complete:

```
curl http://`docker-machine ip docker8192`:8080/api/memory
```

This is because the MaxHeapSize that the JVM calculates in an environment with 8 GB of memory is 2092957696 bytes (approximately 2 GB). You can check this with the following command:

```
docker logs mycontainer|grep -i MaxHeapSize
```

MaxHeapSize parameter check

The application will try to allocate more than 1.6 GB of memory, which exceeds the container limit (800 MB of RAM plus the same amount of swap), so the process will be killed.
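The arithmetic behind both experiments can be checked with a few lines of Java. This sketch applies the 1/4 rule quoted earlier in reverse to the `MaxHeapSize` values from the logs, then compares each heap ceiling with the container's `-m` limit. The class and method names are hypothetical; the numbers are the ones reported above.

```java
public class ErgonomicsVsLimit {
    static final long MIB = 1024L * 1024L;

    // Default ergonomics rule quoted above: max heap = 1/4 of the physical memory the JVM sees.
    static long defaultMaxHeap(long physicalBytes) {
        return physicalBytes / 4;
    }

    // Inverse of the rule: how much physical memory the JVM must have seen.
    static long impliedPhysicalMemory(long maxHeapSize) {
        return maxHeapSize * 4;
    }

    public static void main(String[] args) {
        // {MaxHeapSize from the docker logs, container -m limit}
        long[][] runs = {
            {262_144_000L, 150 * MIB},   // 1 GB VM, -m 150M
            {2_092_957_696L, 800 * MIB}, // 8 GB VM, -m 800M
        };
        for (long[] run : runs) {
            long heap = run[0];
            long limit = run[1];
            System.out.printf("MaxHeapSize %d MiB, JVM saw ~%d MiB of RAM, container limit %d MiB, heap > limit: %b%n",
                    heap / MIB, impliedPhysicalMemory(heap) / MIB, limit / MIB, heap > limit);
        }
        System.out.printf("8 GiB of RAM -> default max heap of %d MiB%n", defaultMaxHeap(8L * 1024 * MIB) / MIB);
    }
}
```

Running it prints:

```
MaxHeapSize 250 MiB, JVM saw ~1000 MiB of RAM, container limit 150 MiB, heap > limit: true
MaxHeapSize 1996 MiB, JVM saw ~7984 MiB of RAM, container limit 800 MiB, heap > limit: true
8 GiB of RAM -> default max heap of 2048 MiB
```

The implied 1000 MiB is close to the 995 MB that `free -h` showed in the 1 GB VM, and in both runs the heap the JVM is allowed to grow to is larger than the container's RAM limit.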

Clearly, increasing the amount of memory and letting the JVM choose its own parameters is often not the right approach when running applications in containers. When a Java application runs in a container, we should set the maximum heap size ourselves (with the `-Xmx` option), based on the application's needs and the container's limits.

The right solution to the problem

A small change to the Dockerfile lets us pass additional JVM parameters through an environment variable. Look at the following line:

```
CMD java -XX:+PrintFlagsFinal -XX:+PrintGCDetails $JAVA_OPTIONS -jar java-container.jar
```

Now you can use the `JAVA_OPTIONS` environment variable to tell the JVM what heap size to use. For this application, 300 MB seems to be enough. Later, in the logs, you can find the value 314572800 bytes (300 MiB).

You can set environment variables for Docker with the `-e` switch:

```
$ docker run -d --name mycontainer8g -p 8080:8080 -m 800M -e JAVA_OPTIONS='-Xmx300m' rafabene/java-container:openjdk-env
$ docker logs mycontainer8g|grep -i MaxHeapSize
uintx MaxHeapSize := 314572800 {product}
```

In Kubernetes, you can set the environment variable with the `--env=[key=value]` option:

```
$ kubectl run mycontainer --image=rafabene/java-container:openjdk-env --limits='memory=800Mi' --env="JAVA_OPTIONS='-Xmx300m'"
$ kubectl get pods
NAME                          READY   STATUS    RESTARTS   AGE
mycontainer-2141389741-b1u0o  1/1     Running   0          6s
$ kubectl logs mycontainer-2141389741-b1u0o|grep MaxHeapSize
uintx MaxHeapSize := 314572800 {product}
```

Improving the right solution

What if the heap size could be calculated automatically based on container constraints?

This is achievable with the Docker base image provided by the Fabric8 community. The `fabric8/java-jboss-openjdk8-jdk` image uses a script that detects the container's limits and uses 50% of the available memory as the upper bound for the heap. You can use a different percentage instead of 50%. The image also lets you enable and disable debugging, diagnostics, and more. Here is what the Dockerfile for the Spring Boot application looks like:

```
FROM fabric8/java-jboss-openjdk8-jdk:1.2.3
ENV JAVA_APP_JAR java-container.jar
ENV AB_OFF true
EXPOSE 8080
ADD target/$JAVA_APP_JAR /deployments/
```

Now everything works as it should: whatever the container's memory limit, the Java application sizes its heap according to the container's parameters rather than the daemon's.
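The Fabric8 image does this in a shell script. To illustrate the idea (this is not that script), here is a minimal Java sketch (Java 11+) that reads the container's cgroup memory limit and turns a percentage of it into an `-Xmx` option. The cgroup file paths, the 1 TiB "unlimited" threshold, and all names are assumptions made for the sketch.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.OptionalLong;

public class HeapFromLimit {
    static final long MIB = 1024L * 1024L;
    // cgroup v1 reports "no limit" as a number close to Long.MAX_VALUE; this sketch treats
    // anything at or above 1 TiB (an arbitrary cut-off) as unlimited.
    static final long UNLIMITED_THRESHOLD = 1L << 40;

    static OptionalLong parseLimit(String raw) {
        String value = raw.trim();
        if (value.isEmpty() || value.equals("max")) { // cgroup v2 writes "max" for no limit
            return OptionalLong.empty();
        }
        long bytes = Long.parseLong(value);
        return bytes >= UNLIMITED_THRESHOLD ? OptionalLong.empty() : OptionalLong.of(bytes);
    }

    // -Xmx set to `percent` of the container limit, rounded down to whole MiB.
    static String xmxOption(long limitBytes, int percent) {
        if (percent < 1 || percent > 100) {
            throw new IllegalArgumentException("percent must be between 1 and 100");
        }
        long heapMiB = limitBytes / 100 * percent / MIB;
        return "-Xmx" + heapMiB + "m";
    }

    public static void main(String[] args) throws IOException {
        String[] candidates = {
            "/sys/fs/cgroup/memory.max",                  // cgroup v2 (assumed path)
            "/sys/fs/cgroup/memory/memory.limit_in_bytes" // cgroup v1 (assumed path)
        };
        for (String candidate : candidates) {
            Path path = Path.of(candidate);
            if (!Files.isReadable(path)) {
                continue;
            }
            OptionalLong limit = parseLimit(Files.readString(path));
            System.out.println(limit.isPresent()
                    ? xmxOption(limit.getAsLong(), 50)
                    : "no container memory limit found; not setting -Xmx");
            return;
        }
        System.out.println("cgroup memory files not found");
    }
}
```

With an 800 MiB limit and 50%, it prints `-Xmx400m`; a wrapper script could place that output in `JAVA_OPTIONS` before running the `CMD` line shown earlier. Leaving the other half of the limit unused keeps room for the JVM's non-heap memory, such as metaspace and thread stacks.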

Using the Fabric8 image

Results

The JVM still has no way to detect that it is running in a container or to take into account limits on resources such as memory and CPU. Therefore, you should not let JVM ergonomics set the maximum heap size on its own.

One way to solve this problem is to use the Fabric8 base image, which sizes the heap automatically based on the container's parameters. You can also set the heap size yourself, but the automated approach is more convenient.

JDK 9 includes experimental support for cgroup memory limits in containers (in Docker, for example). You can find the details here.
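Whichever approach you use (an explicit `-Xmx`, the Fabric8 script, or the JDK 9 experimental support, which as far as I know is enabled with `-XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap`), it is worth confirming from inside the application what the JVM actually picked. Here is a minimal sketch using the standard `RuntimeMXBean`: it prints the heap-related options the JVM was started with and the resulting `Runtime.maxMemory()`. The class name and the option filter are hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.util.List;
import java.util.stream.Collectors;

public class ShowHeapSettings {
    // Keeps only the heap-related options from the JVM's startup arguments.
    static List<String> heapFlags(List<String> jvmArgs) {
        return jvmArgs.stream()
                .filter(a -> a.startsWith("-Xmx")
                        || a.contains("MaxHeapSize")
                        || a.contains("MaxRAM")              // e.g. MaxRAMFraction
                        || a.contains("CGroupMemoryLimit"))  // e.g. UseCGroupMemoryLimitForHeap
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println("Heap-related JVM options: " + heapFlags(jvmArgs));
        System.out.printf("Runtime.maxMemory(): %.1f MiB%n",
                Runtime.getRuntime().maxMemory() / (1024.0 * 1024.0));
    }
}
```

Note that `Runtime.maxMemory()` may differ slightly from the `MaxHeapSize` flag, so compare it with the logs rather than expecting an exact match.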

Note that this article covered only the JVM and memory usage. CPU is a separate topic that we may discuss another time.

Dear readers! Have you run into problems with Java applications in Linux containers? If so, please tell us how you dealt with them.

Source: https://habr.com/ru/post/324756/
