Meet Buddy

According to its creators, Buddy will be the world's first affordable companion robot for the whole family, with a launch planned for 2018. In this article, we share the developers' experience of deploying a highly flexible architecture, together with an example of how to calculate the cost of the solution.

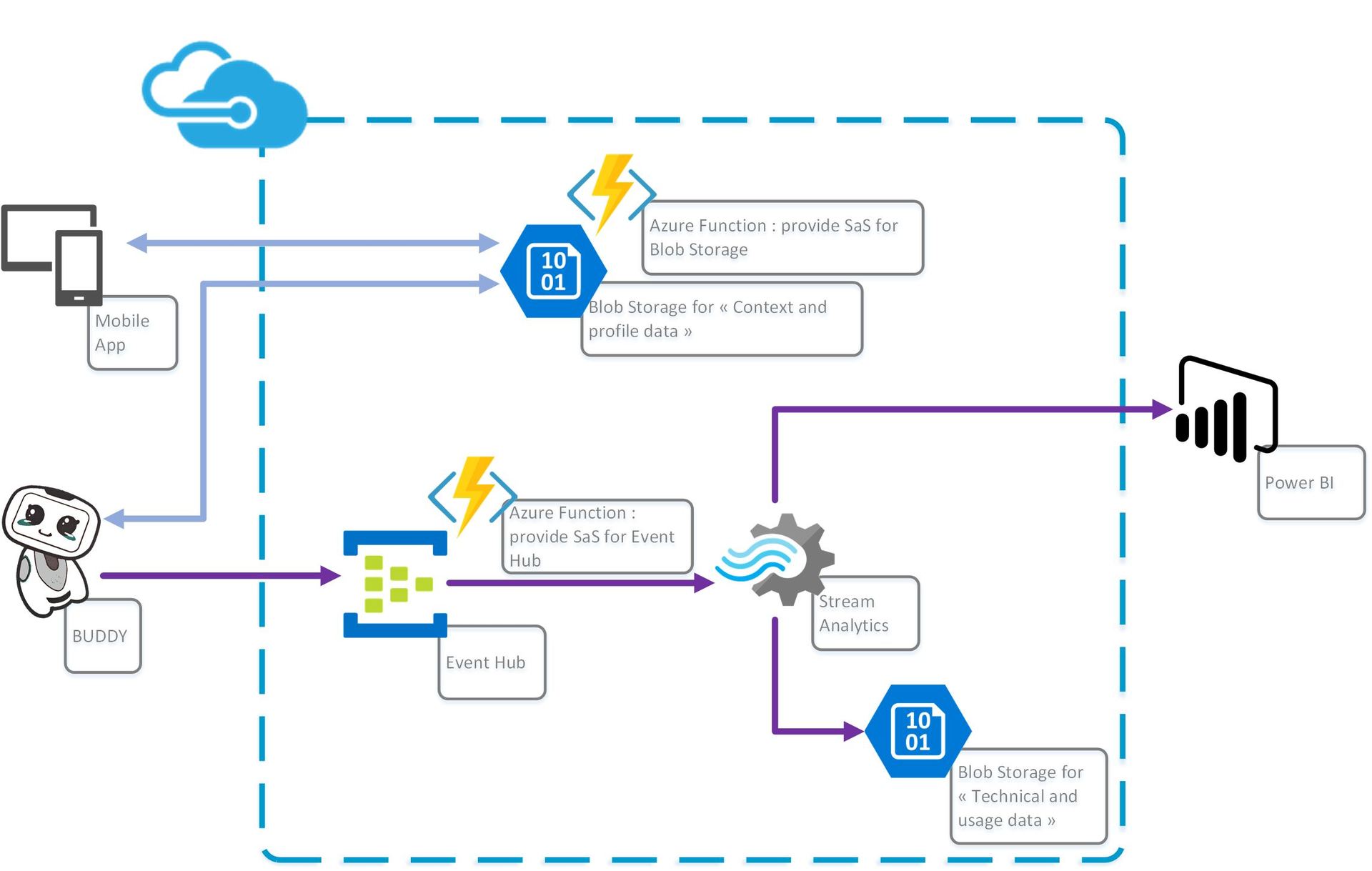

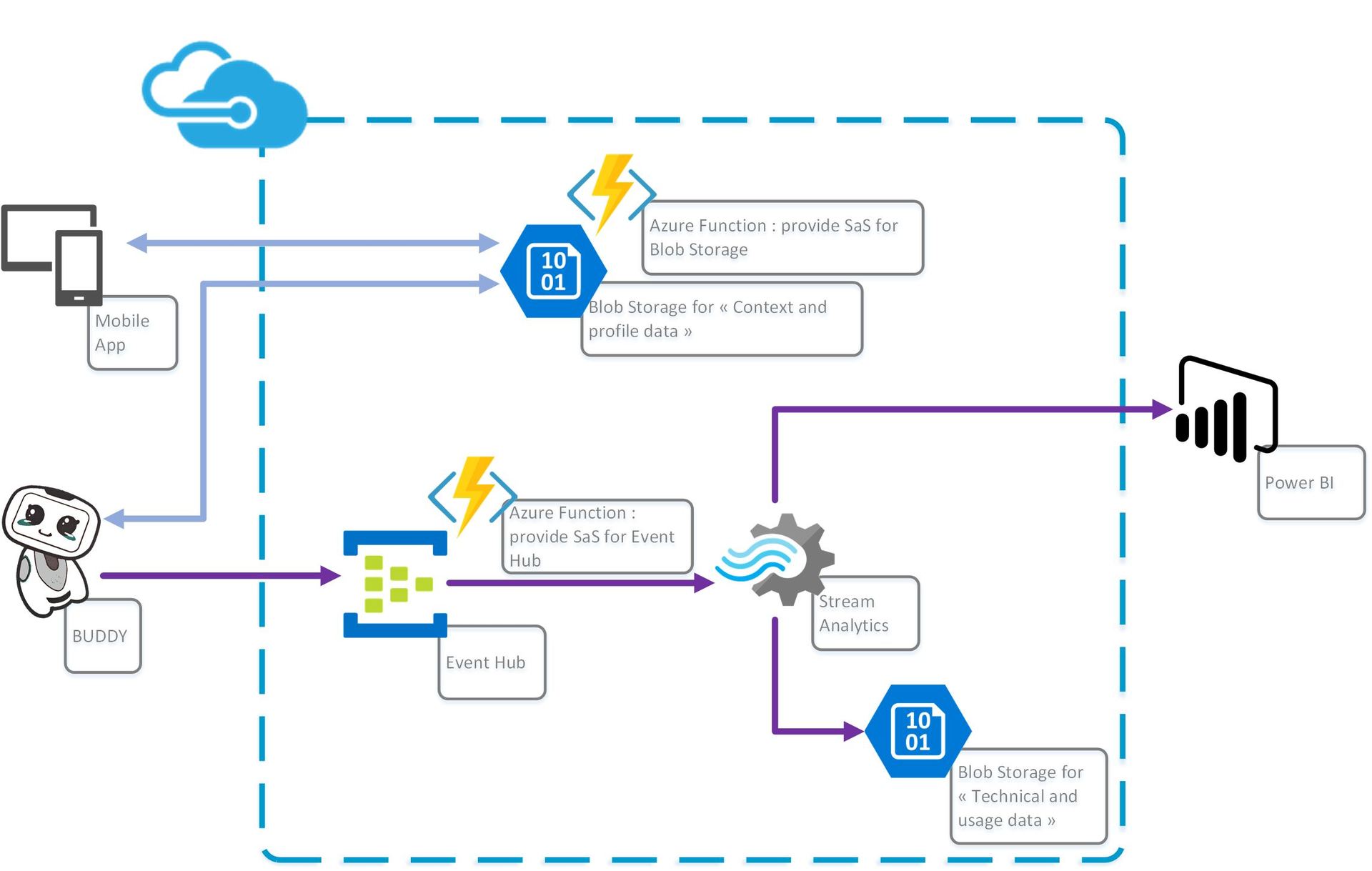

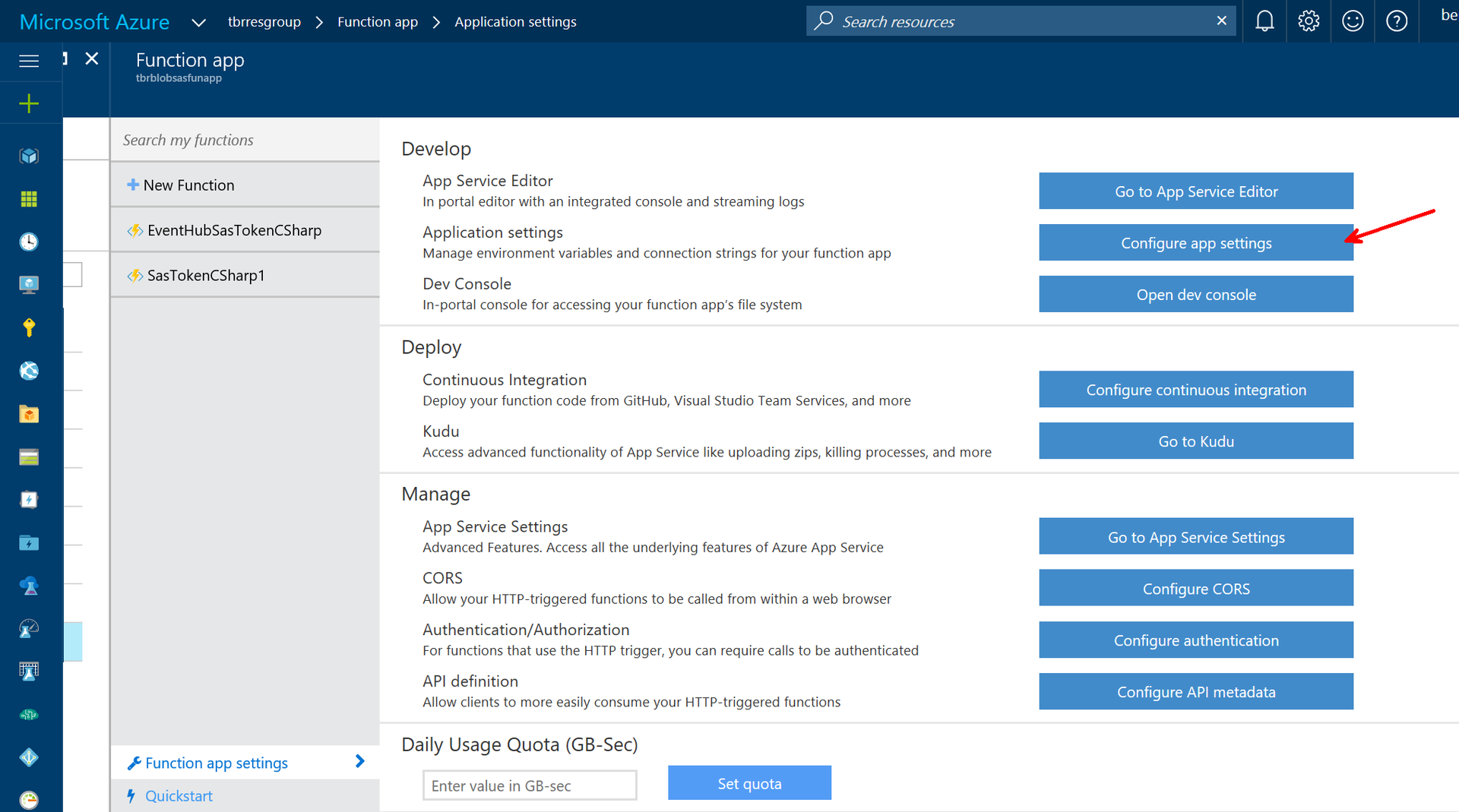

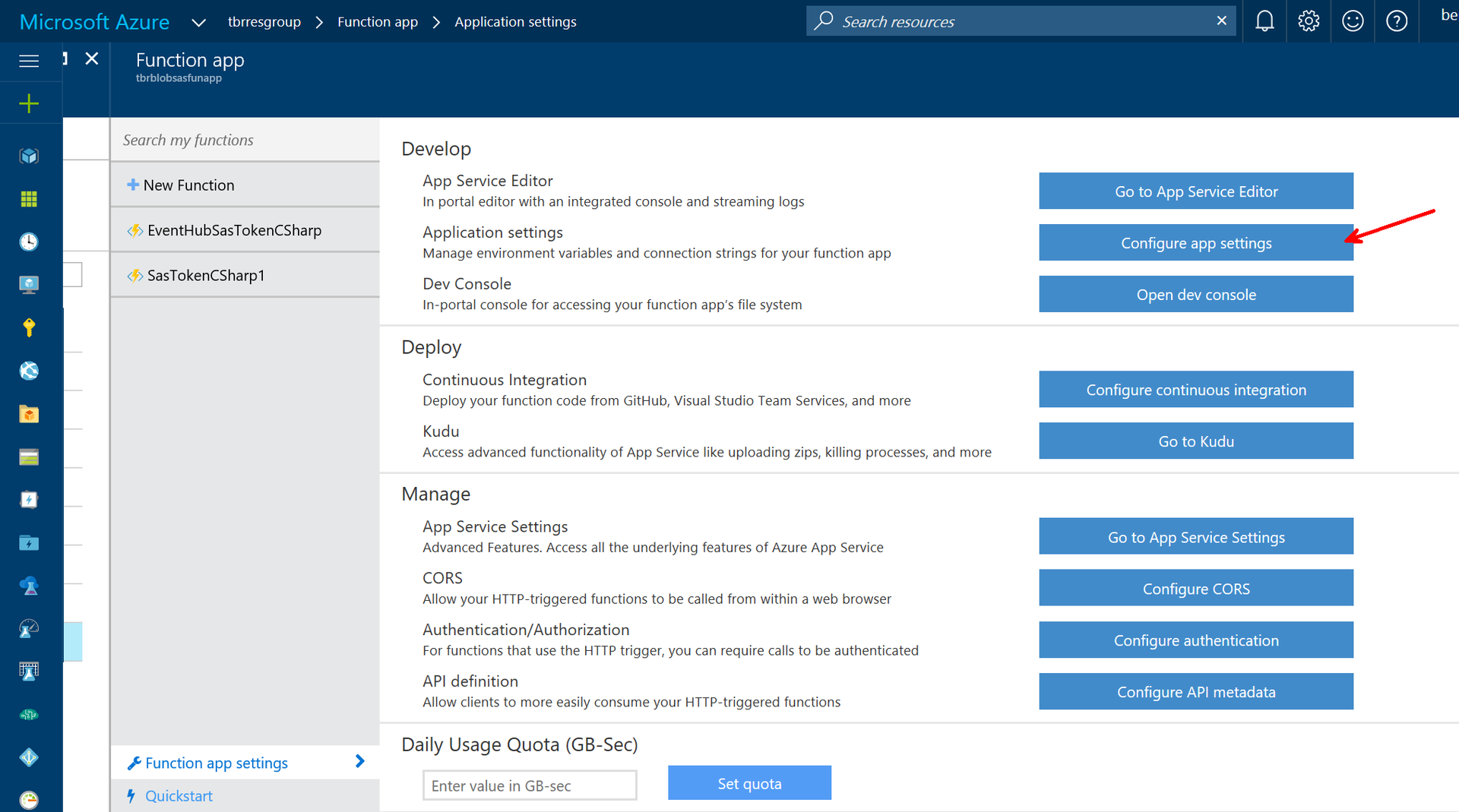

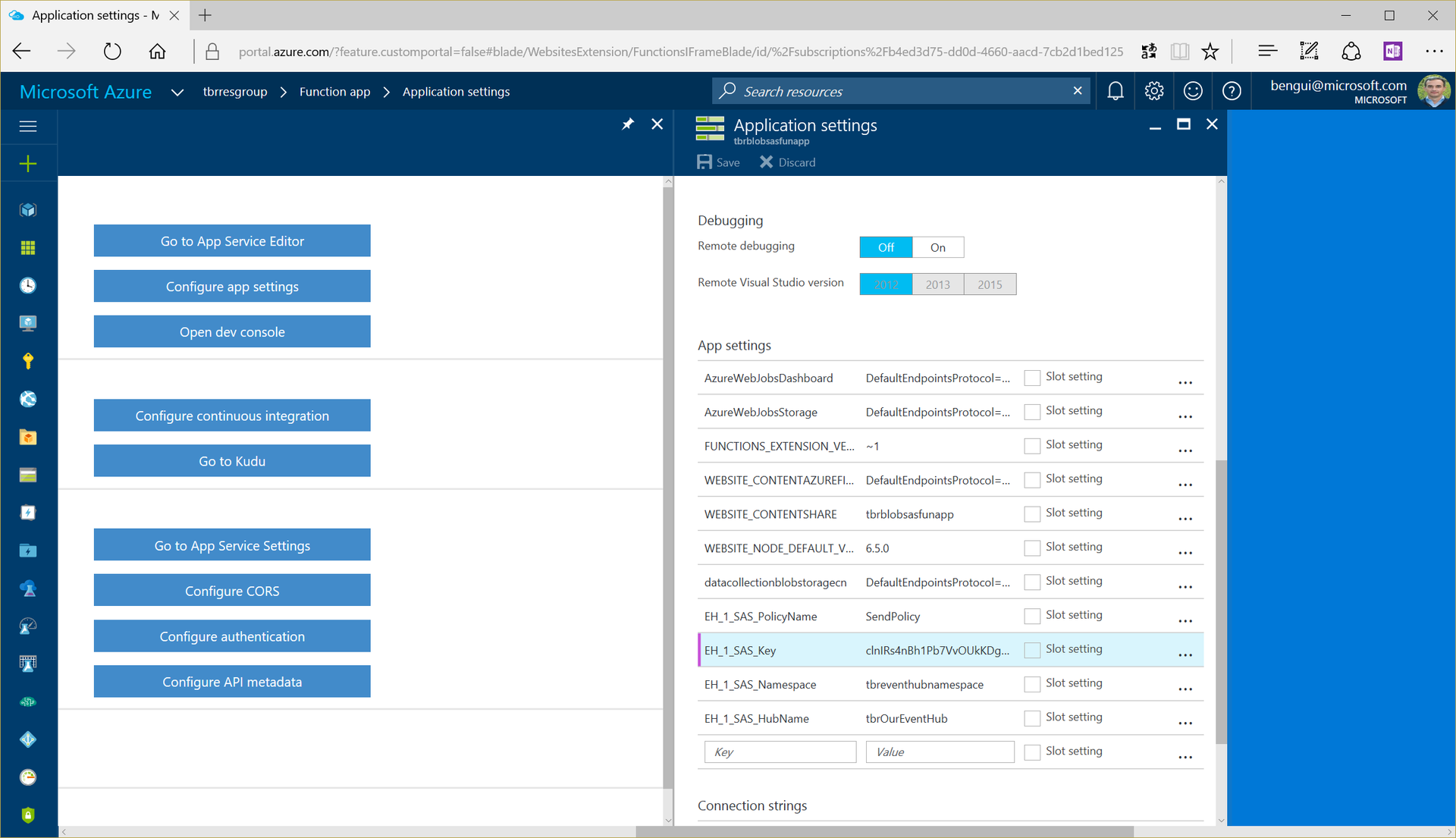

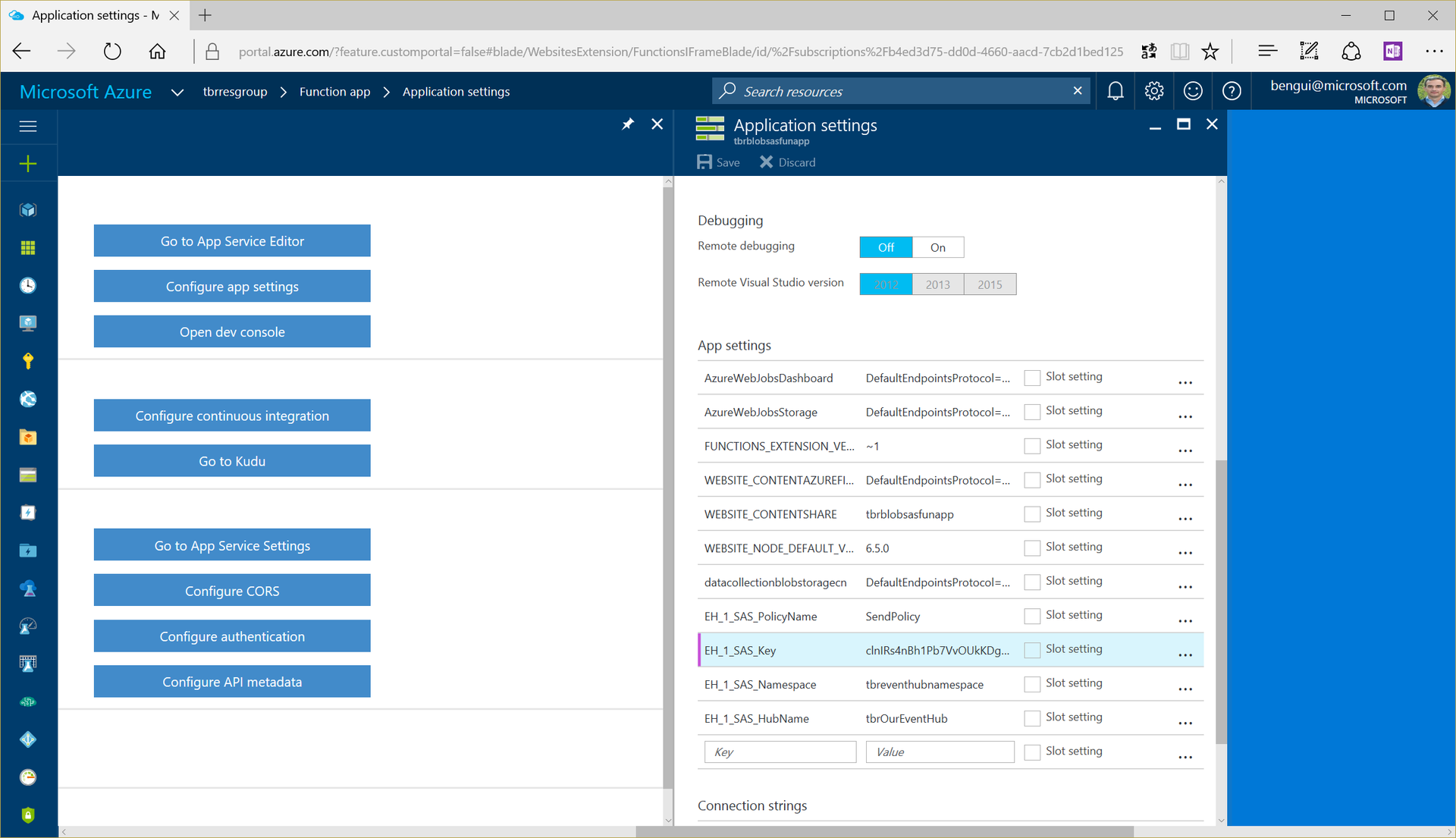

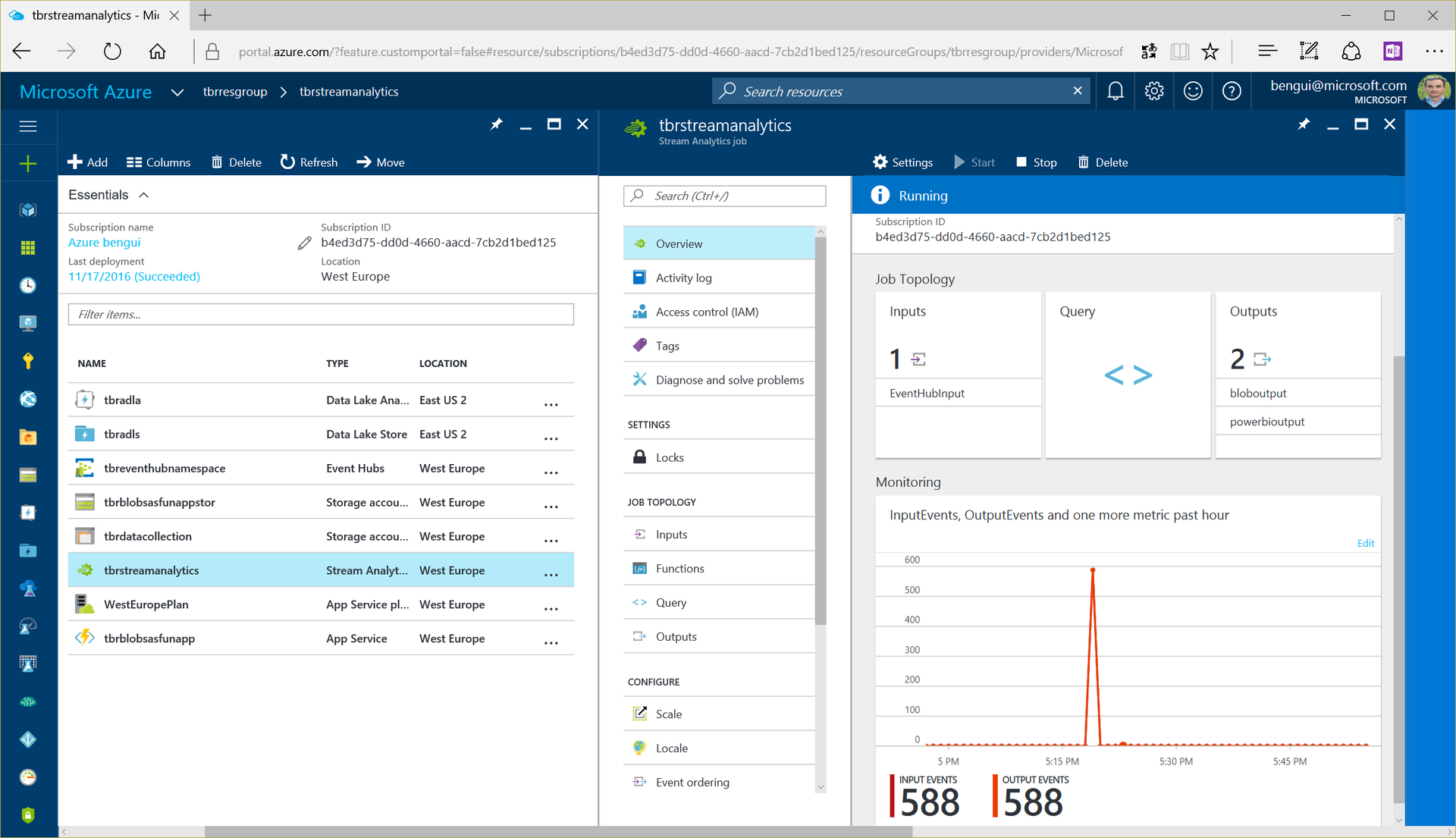

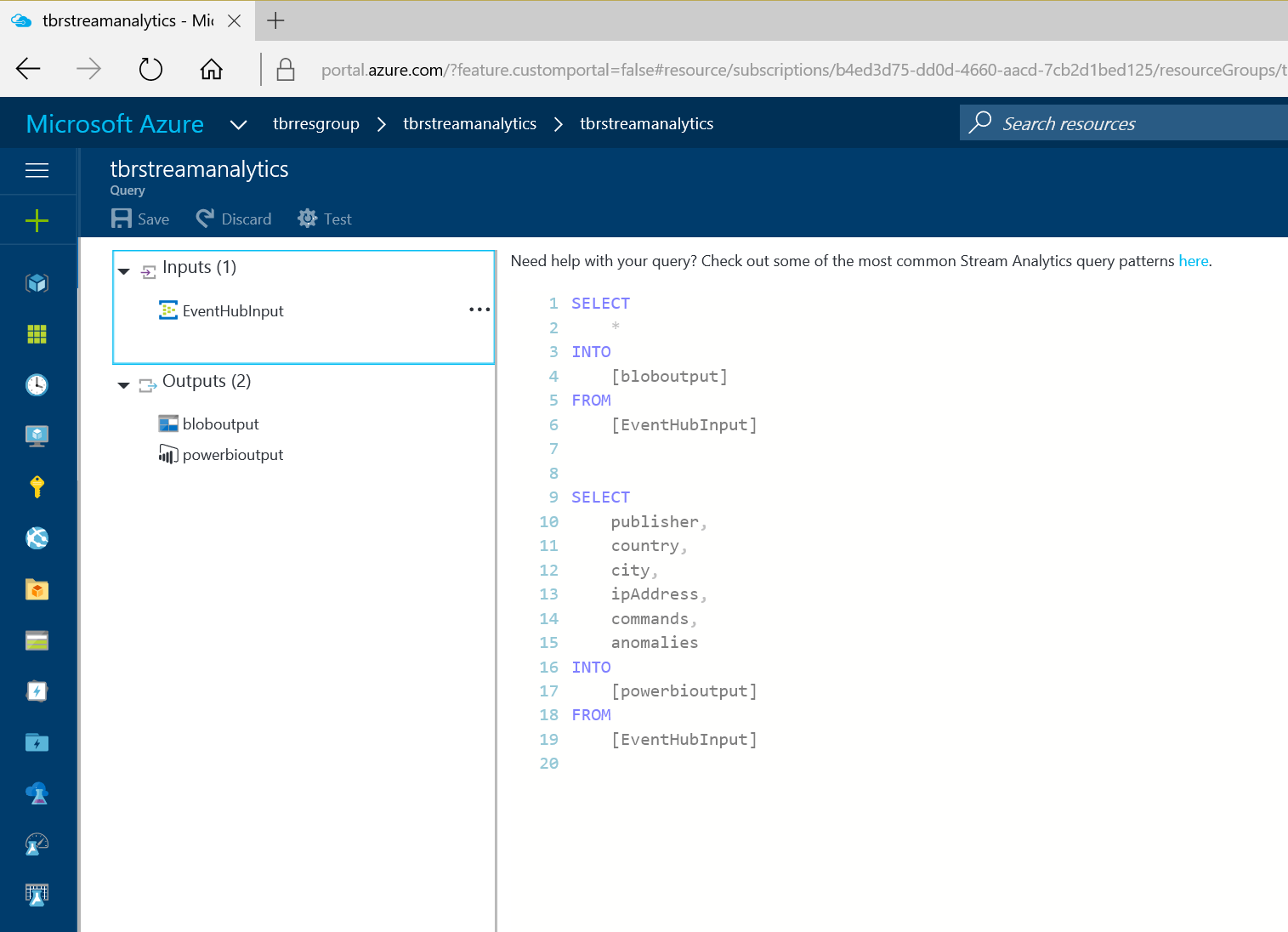

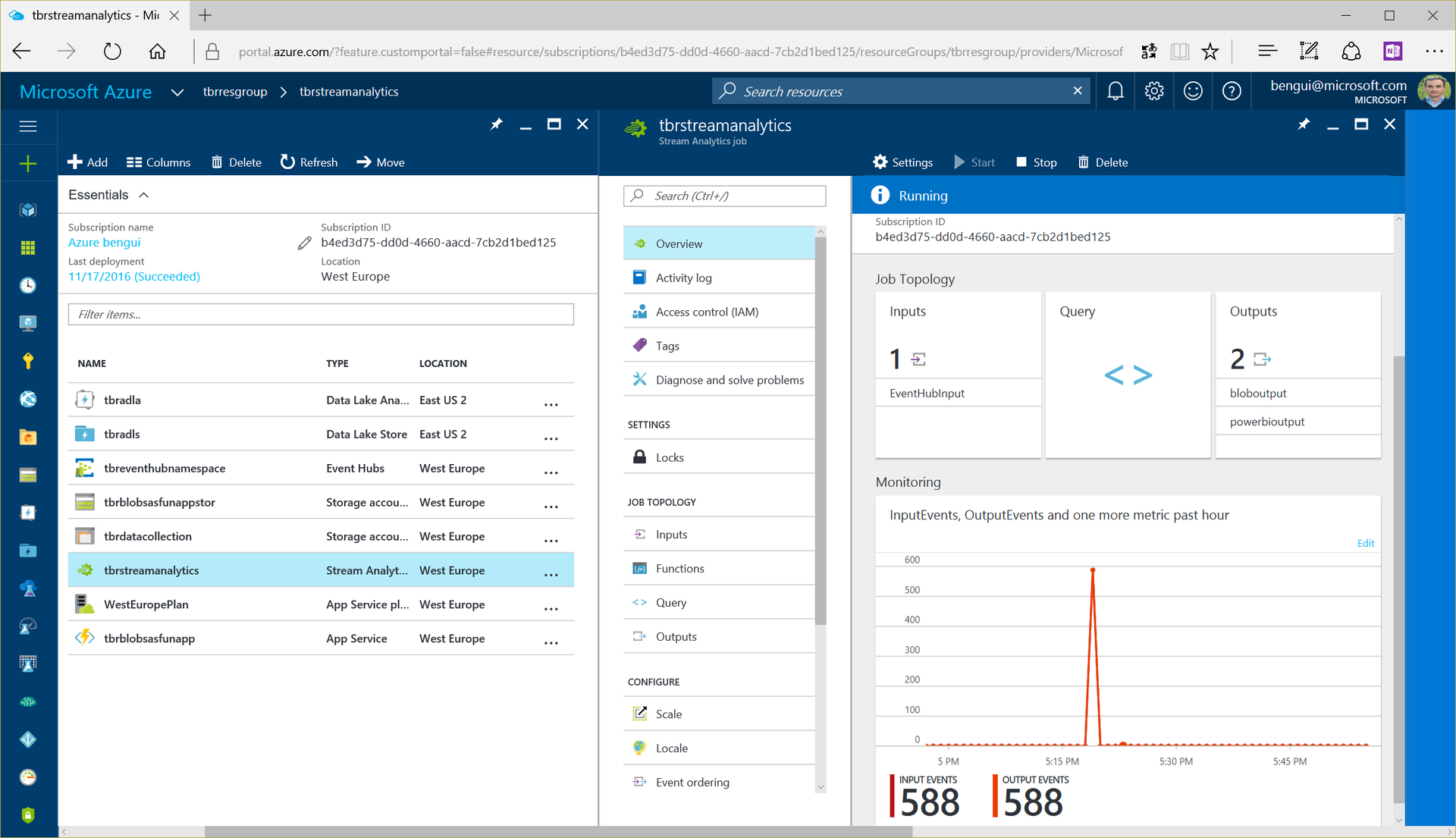

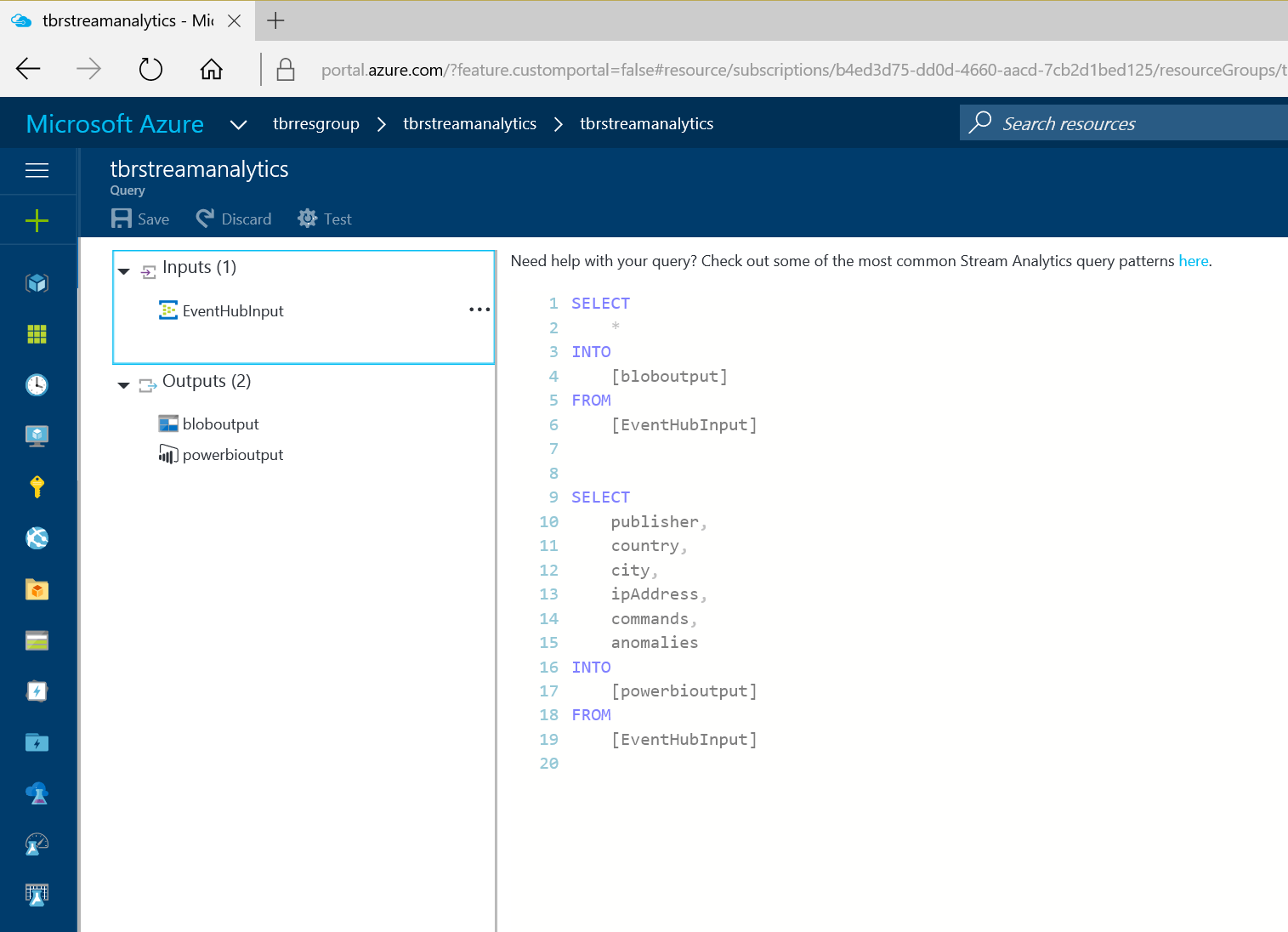

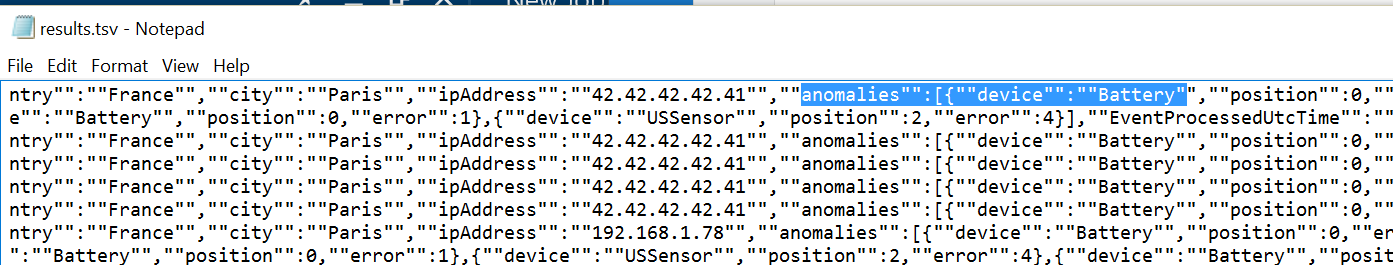

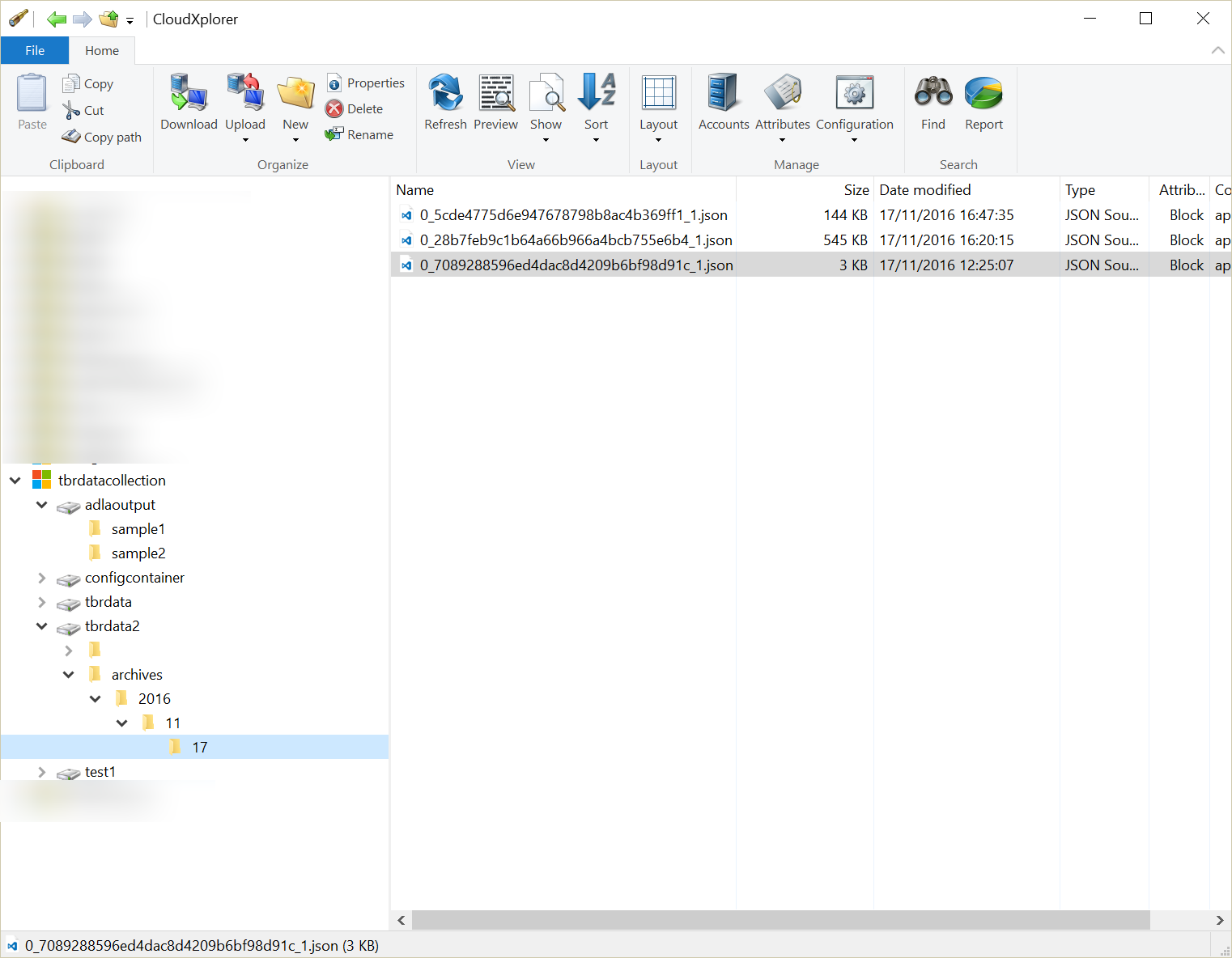

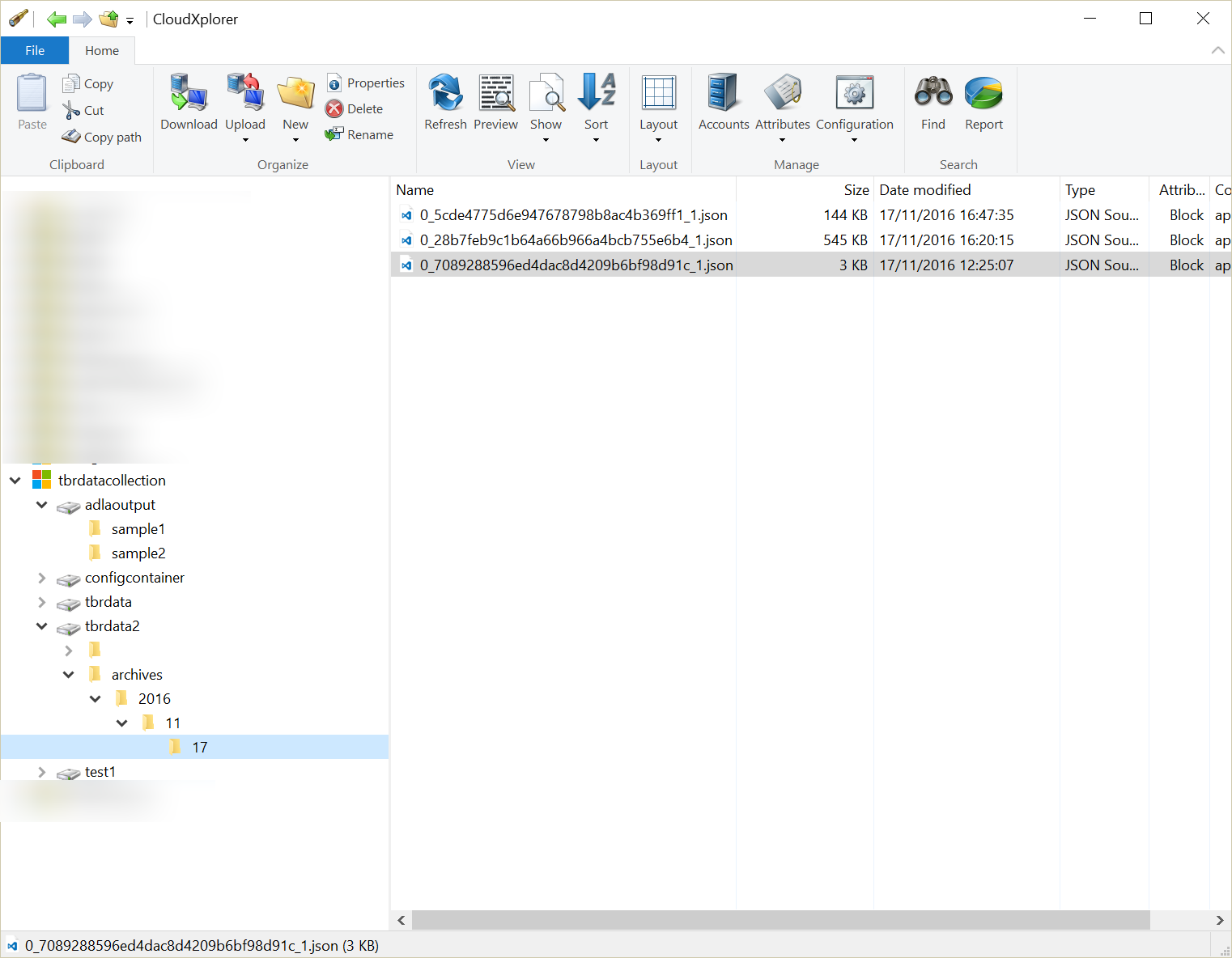

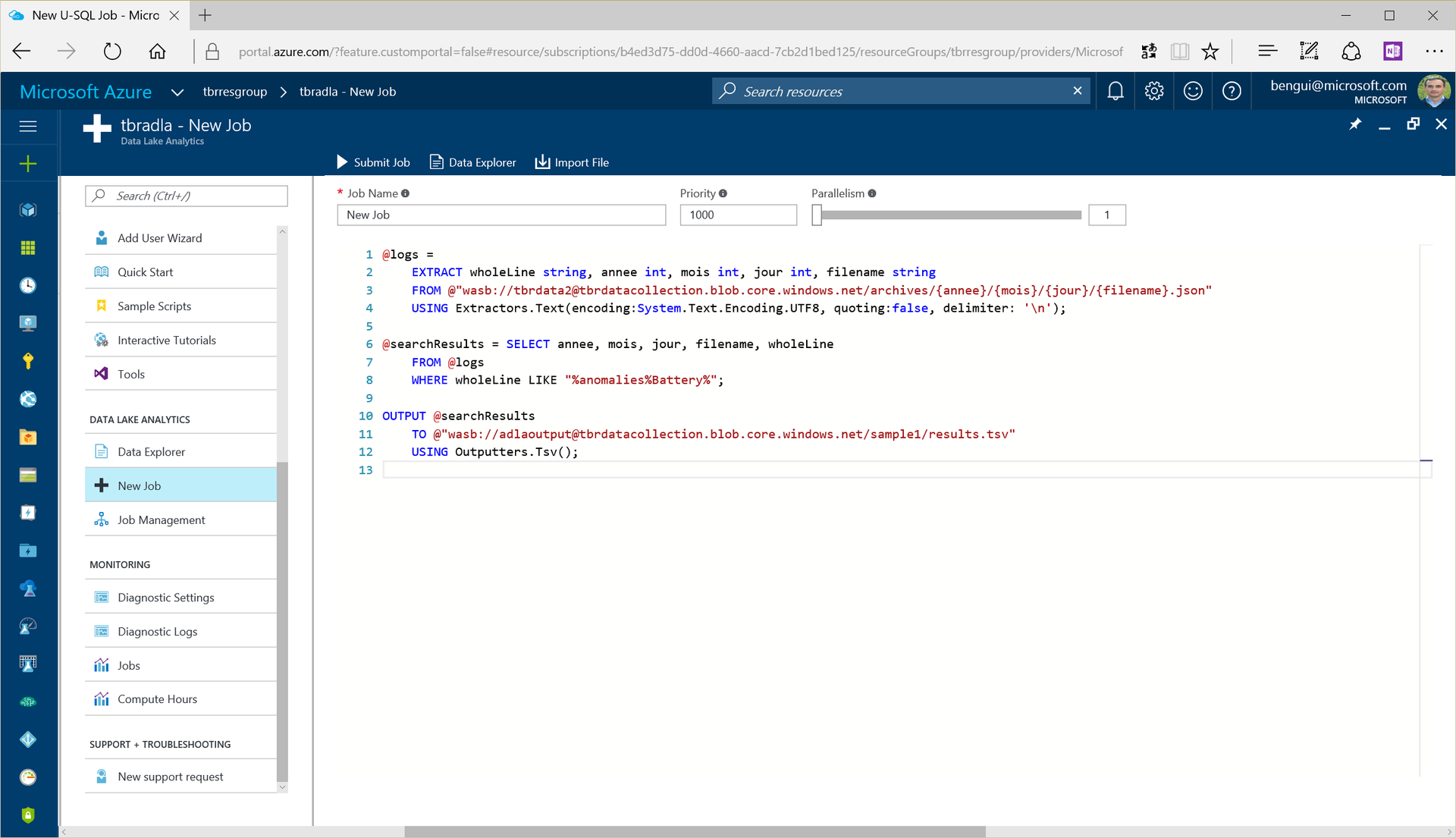

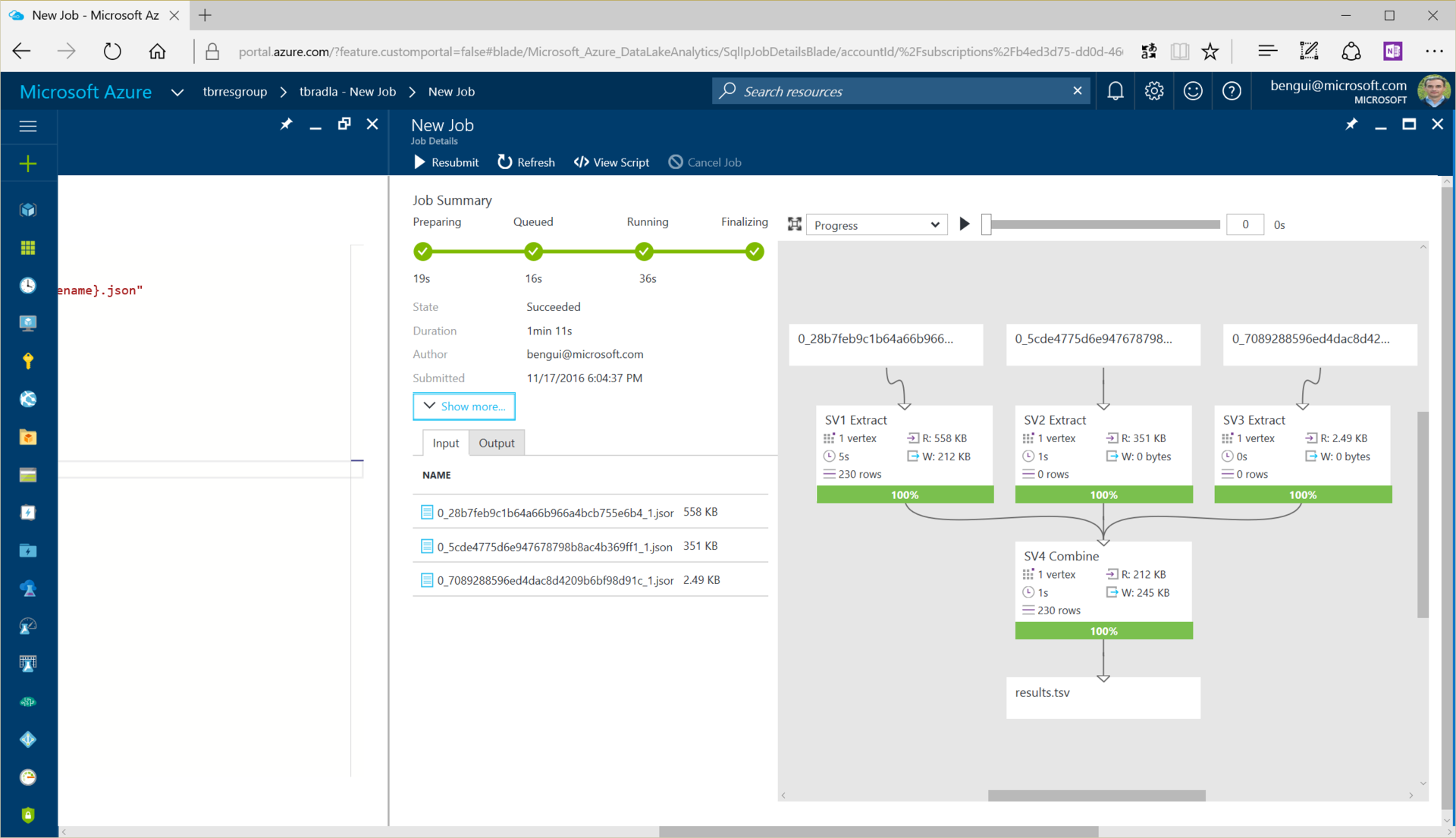

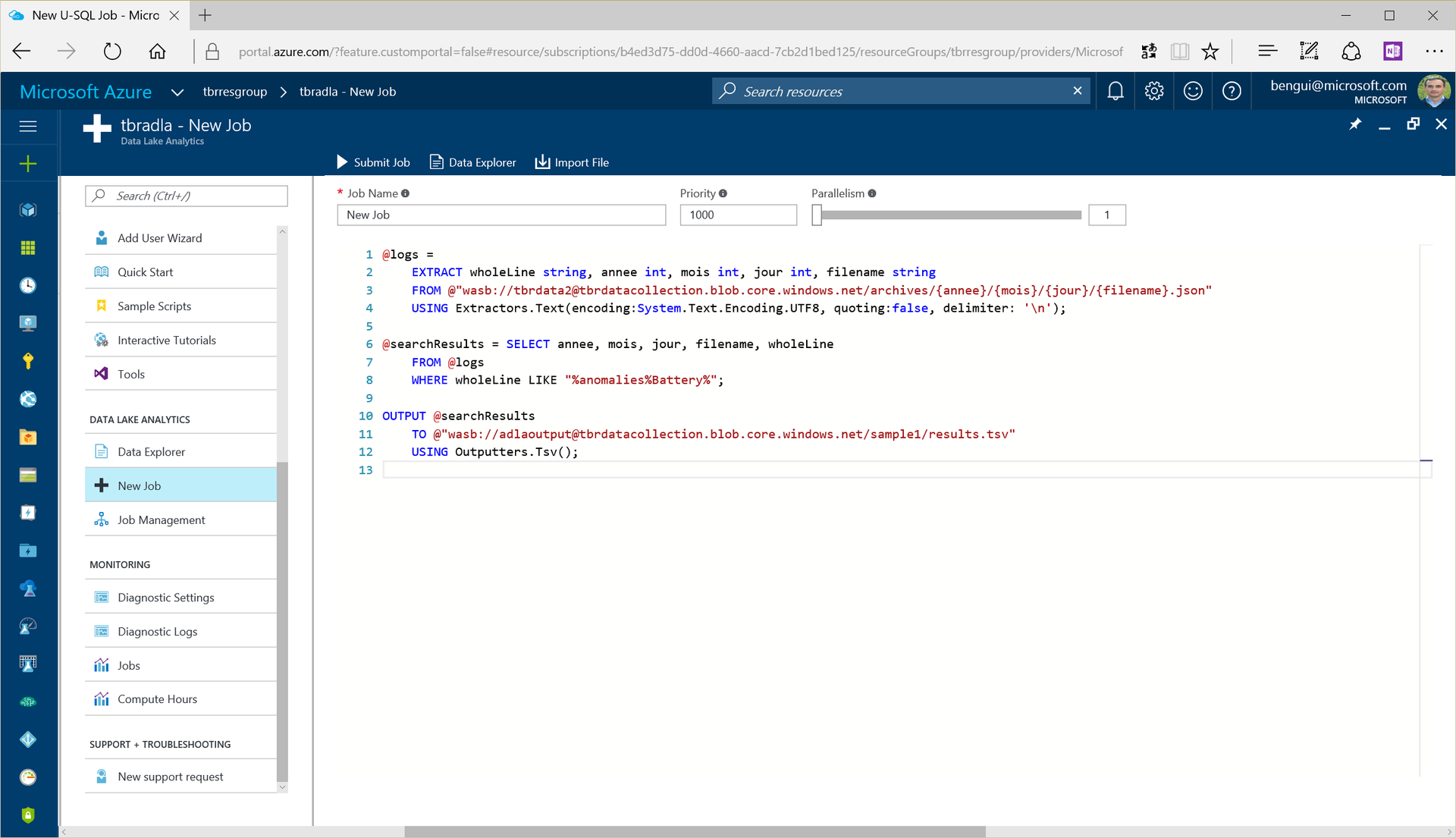

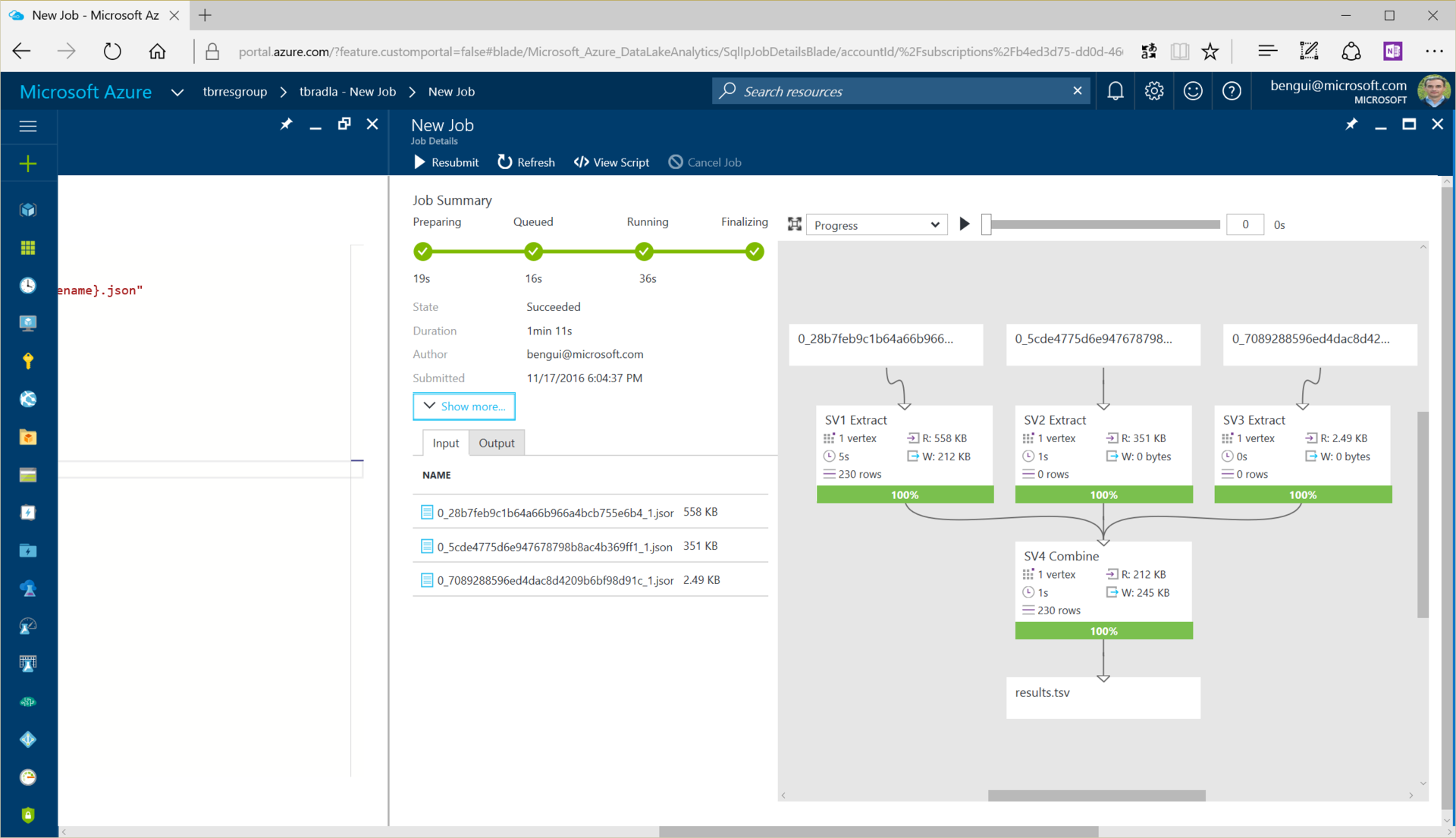

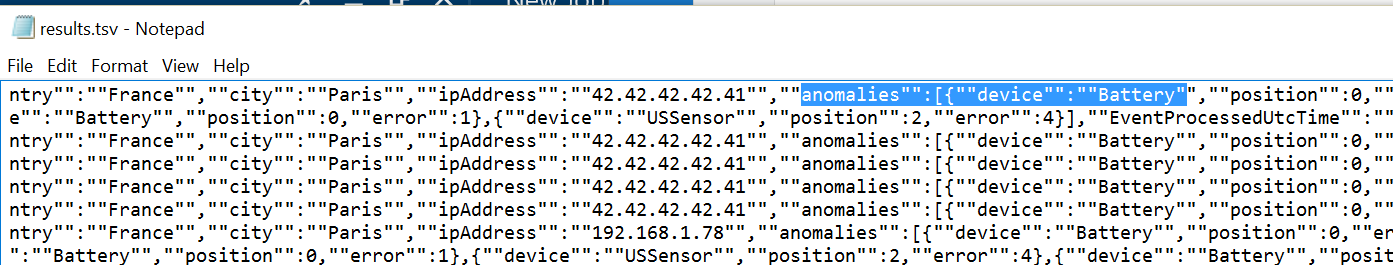

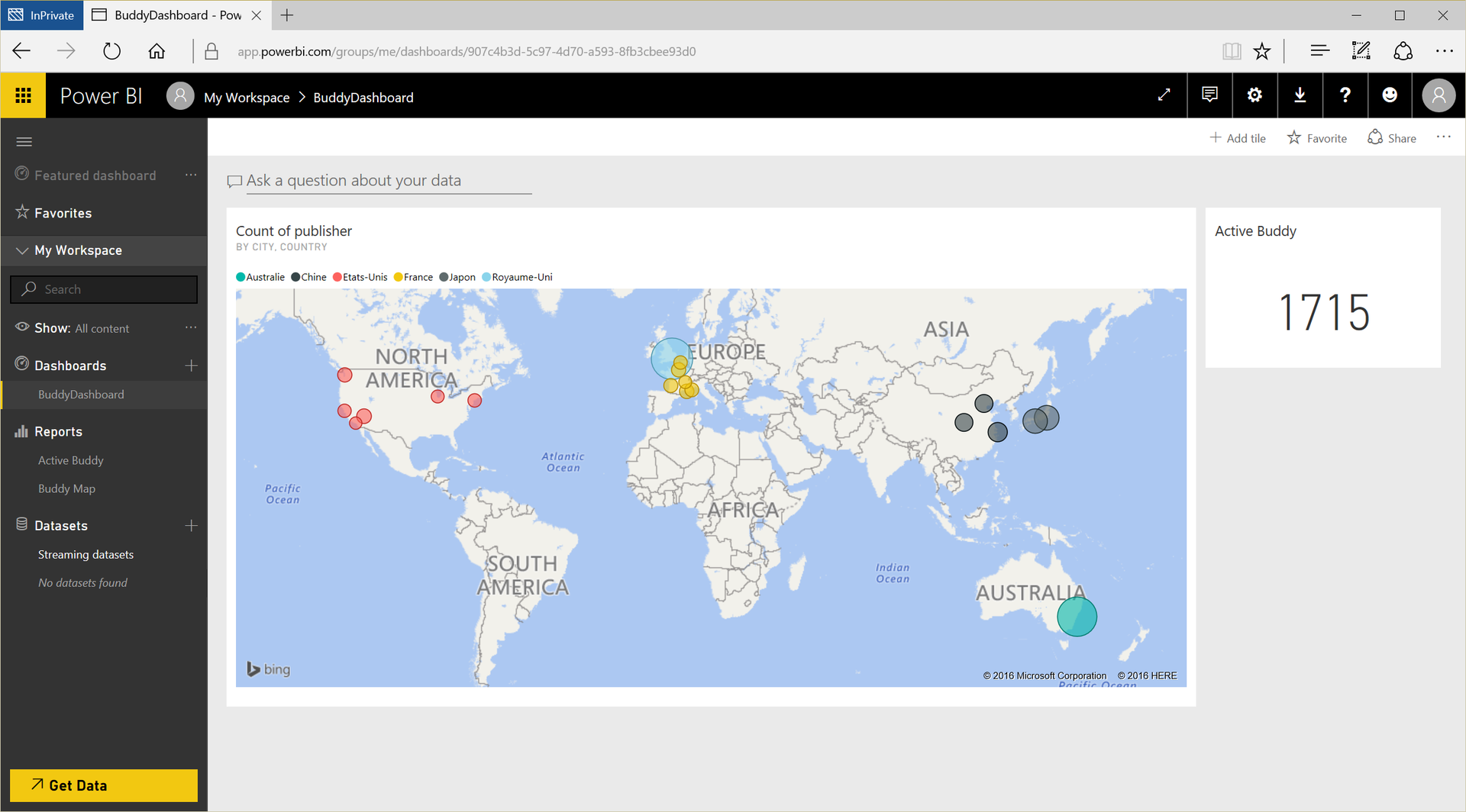

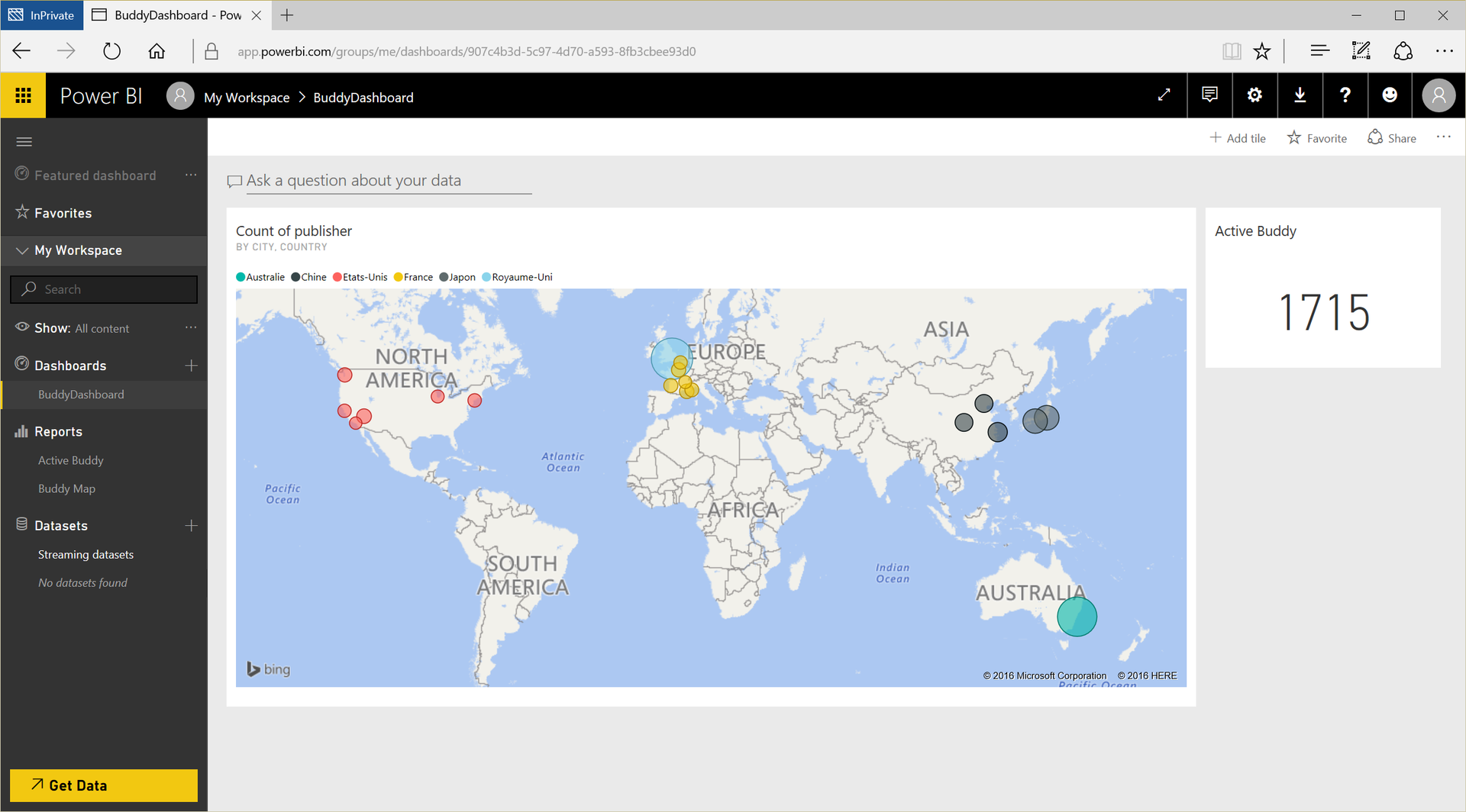

Blue Frog Robotics is a Paris startup founded two years ago that is currently developing Buddy. According to the developers, Buddy is a social robot that brings the whole family together and helps protect it by interacting with each family member.

During its 2015 crowdfunding campaign, Blue Frog reached its $100,000 goal in just one day and had raised $620,000 by the end of the campaign. Presales then continued on the Blue Frog website, bringing the total to $1.5 million.

Blue Frog Robotics will keep control of Buddy's distribution, while the robot itself will be sold through various regional retail chains. That is why the company needs a system that tracks where and how Buddy robots are actually deployed and used worldwide, so that the level of service can match the robot's popularity.

The cloud solution will store the collected data (consumption, sensor readings, and so on) and host the services needed for the first 2,200 robots, which will be put into operation next year. Operating these devices efficiently requires a reliable, scalable back end. It is also important to design an architecture that keeps the solution affordable while providing optimal quality of service.

During a hackfest, Blue Frog Robotics and Microsoft DX France worked together on a production-ready solution, described below.

About BUDDY

Buddy was developed with popular tools (Arduino, OpenCV and Unity 3D) to make it easier for developers to build projects on it. The creators are also implementing a full-featured API. Besides software, developers will be able to build hardware that interacts with the robot.

The kit includes a companion mobile app that lets family members connect to Buddy remotely, with video surveillance, video calling and remote control. It is developed with C# and Unity 3D and will be available on iOS, Android and Windows.

Buddy features:

- Height: 56 cm; weight: 5 kg; battery life: 8 to 10 hours.

- Built-in “brain”: an 8-inch tablet PC with Wi-Fi and Bluetooth.

- The robot's mechanical components carry out the commands of the “brain” through the Arduino platform.

- Three wheels and many sensors give it full mobility: the robot can move around, learn and interact with its surroundings.

- 3D vision: using a standard camera, an infrared camera and an infrared laser emitter, Buddy tracks and recognizes hand and head movements, distinguishes objects, faces, animals, plants and much more, and measures the depth of objects in view.

- State-of-the-art artificial intelligence lets it recognize faces and objects and track movements.

- The robot can listen, talk and nod.

- The robot has a personality and reacts to what is happening with a wide range of emotions, which helps it interact with family members. Every emotion it shows is very kawaii; when it is cold, its teeth even chatter!

Data types

Buddy sends two types of data to the cloud.

Profile and contextual data is created by Buddy or a companion app for synchronization or backup (map data, user profiles, and so on). It is read and written at a low request rate and stored in JSON format.

Technical and usage data is collected by the tablet PC and the Arduino board from the robot's technical indicators (for example, battery level, location, servo motor activity). It is sent at a high frequency, never modified after it is written, and recorded in JSON format. The data is Base64-encoded before it is sent.
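A minimal C# sketch of such a message, assuming a hypothetical schema: the field names `robotId`, `battery`, `location` and `servoActivity` are illustrative only, since Buddy's actual message format is not published. The JSON is converted to UTF-8 bytes and Base64-encoded. Base64 writes 4 characters for every 3 input bytes, so the encoded message is about a third longer than the raw JSON; the encoding makes the payload safe to carry as text but does not compress it.

```csharp
using System;
using System.Text;

// Hypothetical technical/usage message; Buddy's real schema is not published.
string json = "{\"robotId\":\"buddy-0001\",\"battery\":87," +
              "\"location\":{\"x\":1.25,\"y\":3.5},\"servoActivity\":[12,0,4]}";

// Convert to UTF-8 bytes and Base64-encode them for sending.
byte[] raw = Encoding.UTF8.GetBytes(json);
string encoded = Convert.ToBase64String(raw);

// Base64 writes 4 characters per started group of 3 bytes,
// so the encoded text is about 4/3 the size of the raw JSON.
Console.WriteLine($"raw: {raw.Length} bytes, Base64: {encoded.Length} characters");

// The receiving side decodes the Base64 text back into the original JSON.
string decoded = Encoding.UTF8.GetString(Convert.FromBase64String(encoded));
Console.WriteLine(decoded == json);   // True
```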

Creation of architecture

The main criteria for the architecture were cost, scalability and security. On the cost side, infrastructure spending had to be kept to a minimum.

Scalability had to be considered for every relevant technical component to avoid performance problems during the robot's worldwide rollout.

As for security, Buddy's software implements several types of encryption and data isolation. To protect data in the cloud, the transferred data is anonymous, and only authorized systems can read and write it.

Three work areas were identified for deploying the chosen architecture:

- Blob storage: saving data to binary objects (blobs) and restoring it from them, using shared access signatures (SAS).

- Message collection: collecting event log records, commands and monitoring results, and building Power BI reports.

- Automation: creating all the Azure Resource Manager templates and automation scripts for continuous infrastructure deployment.

Collecting messages: from the robot to Power BI

Azure Event Hubs was chosen as the data sink. To send messages to the event hub, each Buddy must obtain a shared access signature (SAS) token, which is issued by an Azure Function.
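For reference, an Event Hubs SAS token is an HMAC-SHA256 signature over the URL-encoded resource URI and an expiry time (Unix seconds), keyed with a shared access policy key. The sketch below assembles a token of the documented form `SharedAccessSignature sr=...&sig=...&se=...&skn=...` by hand; the sample function below delegates this step to `SharedAccessSignatureTokenProvider.GetSharedAccessSignature`. The namespace, hub, publisher name, policy name and key are placeholders, and the start time is fixed so the output is reproducible.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;
using System.Web;

// Signs "<URL-encoded resource URI>\n<expiry>" with HMAC-SHA256 using the policy key
// and assembles the token string (all inputs below are placeholders).
static string CreateSasToken(string resourceUri, string keyName, string key, long expiryUnixSeconds)
{
    string encodedUri = HttpUtility.UrlEncode(resourceUri);
    string expiry = expiryUnixSeconds.ToString(CultureInfo.InvariantCulture);
    string stringToSign = encodedUri + "\n" + expiry;

    using var hmac = new HMACSHA256(Encoding.UTF8.GetBytes(key));
    string signature = Convert.ToBase64String(hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign)));

    return string.Format(CultureInfo.InvariantCulture,
        "SharedAccessSignature sr={0}&sig={1}&se={2}&skn={3}",
        encodedUri, HttpUtility.UrlEncode(signature), expiry, keyName);
}

// Hypothetical publisher-scoped resource: one publisher name per robot.
string uri = "https://example-ns.servicebus.windows.net/buddy-hub/publishers/buddy-0001/messages";

// Fixed start time; the token expires one hour later, matching the 1-hour lifetime.
long start = new DateTimeOffset(2017, 3, 1, 12, 0, 0, TimeSpan.Zero).ToUnixTimeSeconds();
string token = CreateSasToken(uri, "SendPolicy", "placeholder-key", start + 3600);
Console.WriteLine(token);
```

Scoping each token to a per-robot publisher path means a leaked token only lets its holder post as that one robot, and only until it expires.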

Sample Azure Function code:

```csharp
#r "Microsoft.ServiceBus"

using System.Net;
using System.Configuration;
using Microsoft.ServiceBus;

// HTTP-triggered function (C# script) that returns a SAS token a robot can use
// to send events to its own publisher endpoint on the event hub.
public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, TraceWriter log)
{
    log.Info("Generate Shared Access Signature for Event Hub");

    // Parse query parameters
    string publisherName = req.GetQueryNameValuePairs()
        .FirstOrDefault(q => string.Compare(q.Key, "publisherName", true) == 0)
        .Value;
    string tokenTimeToLiveParam = req.GetQueryNameValuePairs()
        .FirstOrDefault(q => string.Compare(q.Key, "tokenTimeToLive", true) == 0)
        .Value;

    // Fall back to values from the request body
    dynamic data = await req.Content.ReadAsAsync<object>();
    publisherName = publisherName ?? data?.publisherName;
    tokenTimeToLiveParam = tokenTimeToLiveParam ?? data?.tokenTimeToLive;

    if (publisherName == null)
    {
        return req.CreateResponse(HttpStatusCode.BadRequest,
            "Please pass a publisherName on the query string or in the request body");
    }

    // Token lifetime in minutes; defaults to 60 minutes
    TimeSpan tokenTimeToLive = tokenTimeToLiveParam == null
        ? TimeSpan.FromMinutes(60)
        : TimeSpan.FromMinutes(double.Parse(tokenTimeToLiveParam));

    // Policy name, key, namespace and hub name are read from the app settings
    var appSettings = ConfigurationManager.AppSettings;
    var sas = CreateForHttpSender(
        appSettings["EH_1_SAS_PolicyName"],
        appSettings["EH_1_SAS_Key"],
        appSettings["EH_1_SAS_Namespace"],
        appSettings["EH_1_SAS_HubName"],
        publisherName,
        tokenTimeToLive);

    if (string.IsNullOrEmpty(sas))
    {
        return req.CreateResponse(HttpStatusCode.NoContent, "No SaS found!");
    }
    return req.CreateResponse(HttpStatusCode.OK, sas);
}

// Signs https://{namespace}.servicebus.windows.net/{hub}/publishers/{publisher}/messages
public static string CreateForHttpSender(string senderKeyName, string senderKey,
    string serviceNamespace, string hubName, string publisherName, TimeSpan tokenTimeToLive)
{
    var serviceUri = ServiceBusEnvironment.CreateServiceUri("https", serviceNamespace,
            String.Format("{0}/publishers/{1}/messages", hubName, publisherName))
        .ToString()
        .Trim('/');
    return SharedAccessSignatureTokenProvider.GetSharedAccessSignature(
        senderKeyName, senderKey, serviceUri, tokenTimeToLive);
}
```

The robot then sends data to the event hub.

In the Unity 3D application, the robot obtains the SAS token generated by the Azure Function above:

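```csharp
using System;
using System.Collections;
using UnityEngine;

// Inside a MonoBehaviour that declares a field: private string SaS;

/// Method called with StartCoroutine(GetSaS(publisherName));
private IEnumerator GetSaS(string publisherName)
{
    // Request a SAS token from the Azure Function (replace YourAccessKey with the function key)
    WWW wwwSas = new WWW(string.Format(
        "https://tbrblobsasfunapp.azurewebsites.net/api/EventHubSasTokenCSharp?code=YourAccessKey&publisherName={0}",
        publisherName));
    yield return wwwSas;

    // Check for errors
    if (string.IsNullOrEmpty(wwwSas.error))
    {
        // The token comes back as a JSON string, so trim spaces and quotes
        SaS = wwwSas.text.Trim(new Char[] { ' ', '\"' });
        Debug.Log("Get SaS OK: " + SaS);
    }
    else
    {
        Debug.Log("Get SaS Error: " + wwwSas.error);
    }
}
```

The function keeps the event hub access key in its application settings: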

Unity then sends the data itself with a web request; the equivalent cURL command is:
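As a hedged illustration of such a request, the C# sketch below builds (without sending) an HTTP POST to the Event Hubs REST endpoint for a single publisher, with the SAS token in the `Authorization` header. The path `/{hub}/publishers/{publisher}/messages` matches the resource URI that the Azure Function signs, and the content type follows the Event Hubs REST "Send event" documentation; the namespace, hub, publisher, token and payload are placeholders.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;

// Builds the POST a robot could use to send one event to its publisher endpoint.
static HttpRequestMessage BuildSendEventRequest(string ns, string hub, string publisher,
    string sasToken, string jsonBody)
{
    var uri = new Uri($"https://{ns}.servicebus.windows.net/{hub}/publishers/{Uri.EscapeDataString(publisher)}/messages");
    var request = new HttpRequestMessage(HttpMethod.Post, uri);

    // The token already starts with "SharedAccessSignature", so add it verbatim.
    request.Headers.TryAddWithoutValidation("Authorization", sasToken);

    request.Content = new StringContent(jsonBody, Encoding.UTF8);
    request.Content.Headers.ContentType =
        MediaTypeHeaderValue.Parse("application/atom+xml;type=entry;charset=utf-8");
    return request;
}

// Placeholder values; a real token comes from the Azure Function.
var req = BuildSendEventRequest("example-ns", "buddy-hub", "buddy-0001",
    "SharedAccessSignature sr=...&sig=...&se=...&skn=SendPolicy",
    "{\"battery\":87}");

Console.WriteLine($"{req.Method} {req.RequestUri}");
```

Sending it is then a single `HttpClient.SendAsync(req)` call; the Event Hubs REST API answers a successful send with 201 Created.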

The data arrives in Azure Event Hubs:

It is then processed by Azure Stream Analytics:

Azure Stream Analytics sends a copy of all the data to blob storage:

Azure Data Lake Analytics is used to retrieve the data:

Finally, the data is displayed in Power BI:

Calculation of the cost of infrastructure

To calculate the infrastructure cost, the following key parameters were chosen:

- Number of robots deployed: 2,200

- Number of active companion apps per robot: 2

- Average size of technical and usage data: 100 KB

- Average size of contextual and profile data: 3 MB

- Interval between technical and usage data sends: 5 minutes

- Context and profile data synchronizations per robot or device per day: 1

- SAS token lifetime: 1 hour

- Average Azure Functions execution time: 100 ms

- Azure Functions memory size: 512 MB

The calculation also assumes round-the-clock activity for 31 days, so it reflects the worst case.

The total monthly cost comes to $123.0889.

Monthly cost per Buddy = 123.0889 / 2,200 = $0.05595.

A detailed calculation for each cost item follows.

Blob storage costs for contextual and profile data = $14.8896

Blob storage uses the "hot" access tier with locally redundant storage (LRS).

A data set usually consists of five files of various sizes and formats (.json, .png, map data). Azure Storage is billed on two criteria: storage and access. Here, storage costs $0.024 per GB per month, so the total storage cost for all robots is:

Total storage cost = number of robots deployed * average volume of context and profile data (GB) * storage price per GB = 2,200 * 0.003 * 0.024 = $0.1584.

The cost of access depends on the type of operation, and the exact mix and number of operations each month is hard to predict, so the calculation uses the highest operation price. Estimated number of operations per robot or device per day: 10.

Total number of operations per month = number of robots deployed * (number of active companion applications per robot + 1) * estimated number of operations per robot or device * 31 = 2,200 * 4 * 10 * 31 = 2,728,000 operations.

Total access cost = total number of operations per month / 10,000 * $0.054 = $14.7312.

Blob storage costs for contextual and profile data = 0.1584 + 14.7312 = $14.8896.

Event Hubs costs = $22.55

Event Hubs is designed to ingest millions of events per second, so it can receive and process large volumes of data from connected devices and applications.

Event Hubs is billed on the number of ingress events and the number of throughput units provisioned. To size the throughput, calculate the ingress rate in MB/s generated by the robots:

Ingress rate (MB/s) = average size of technical and usage data (MB) * number of robots deployed / interval between sends (seconds) = 0.1 * 2,200 / 300 = 0.7333 MB/s.

One throughput unit allows up to 1 MB/s of ingress. The expected load is about 27% below this limit, so a single throughput unit is enough. Next, calculate the number of ingress events sent by the robots:

Number of ingress events = minutes per day / send interval (minutes) * number of robots deployed * days = 1,440 / 5 * 2,200 * 31 = 19,641,600 events.

Cost of ingress events = number of ingress events / 1,000,000 * $0.028 = $0.55.

A standard throughput unit costs about $22 per month, so the total Event Hubs cost is:

Event Hubs cost = 22 + 0.55 = $22.55.

Azure Functions costs = $1.1094

Azure Functions issue the SAS tokens used to access the event hub and the blob storage that holds contextual and profile data.

Azure Functions is billed on execution time and the total number of executions. The SAS token is valid for 1 hour, so each function is called 24 times a day per robot or app. The blob access function is called by both robots and apps; the event hub access function is called only by robots.

Total executions = ((number of robots deployed + number of robots deployed * number of active companion applications per robot) * 24 * days) + (number of robots deployed * 24 * days) = ((2,200 + 2,200 * 2) * 24 * 31) + (2,200 * 24 * 31) = 4,910,400 + 1,636,800 = 6,547,200.

Execution cost (the first 1,000,000 executions per month are free) = (6,547,200 - 1,000,000) / 1,000,000 * $0.20 = $1.1094.

The Azure Functions monitoring view in the Azure portal showed an average execution time of 80 ms, and the functions' memory size is 512 MB. These figures give the resource consumption:

Execution time (s) = number of executions * average execution time (s) = 6,547,200 * 0.08 = 523,776 s.

Resource consumption (GB-s) = memory size (GB) * execution time (s) = 512 / 1,024 * 523,776 = 261,888 GB-s.

Billable resource consumption = max(0, resource consumption - monthly free grant) = max(0, 261,888 - 400,000) = 0 GB-s.

So the monthly resource consumption cost is $0!

Azure Functions cost = execution cost + resource consumption cost = $1.1094.

Stream Analytics costs = $25.0282

Stream Analytics is billed on the volume of data processed and the number of streaming units required.

Data processed = average size of technical and usage data (MB) * number of robots deployed * number of sends per day * days = 0.1 * 2,200 * 288 * 31 = 1,964,160 MB = 1,964.16 GB.

Cost of data processed = 1,964.16 * $0.001 = $1.9642.

This volume needs only one streaming unit:

Streaming unit cost = $0.031 * 24 * 31 = $23.064.

Stream Analytics cost = cost of data processed + streaming unit cost = $25.0282.

Blob storage costs for technical and usage data = $54.9964

This storage holds only the data output by Stream Analytics, so the total blob size equals the amount of data Stream Analytics processes.

Storage cost = data processed by Stream Analytics (GB) * price per GB = 1,964.16 * 0.024 = $47.1398.

For access, the calculation assumes one storage operation per ingress event received by the event hub:

Access cost = number of ingress events / 10,000 * price per 10,000 operations = 19,641,600 / 10,000 * 0.004 = $7.8566.

Blob storage costs for technical and usage data = storage cost + access cost = 47.1398 + 7.8566 = $54.9964.

Bandwidth costs = $4.5153

This is the cost of moving data into and out of Azure data centers, not the cost of a content delivery network or ExpressRoute. It covers data downloaded by the mobile apps, assuming each app downloads the whole data set twice a month.

Downloaded data = average context and profile data (GB) * 2 * number of active companion applications per robot * number of robots deployed = 0.003 * 3 * 2 * 2,200 = 39.6 GB.

Outbound transfer cost = (downloaded data - monthly free allowance) * price per GB = (39.6 - 5) * 0.087 = $3.0102.

Small amounts of data, such as SAS tokens, are also exchanged between Azure and the robots many times, so an extra 50% was added to cover them:

Bandwidth cost = outbound transfer cost + 50% = 3.0102 + 1.5051 = $4.5153.
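The monthly cost model can be re-checked with a short C# script. This sketch evaluates each formula with the parameters from the list of key elements and the unit prices quoted in this article; where the worked numbers use a different multiplier than the written formula (4 for blob operations, 3 for app downloads), it follows the worked numbers. The Functions resource charge is left out because the computed GB-seconds stay under the 400,000 GB-s monthly grant. It is an arithmetic check, not a current pricing tool.

```csharp
using System;
using System.Globalization;

// Parameters from the list of key elements (one month = 31 days, 24/7 activity).
const int Robots = 2200;
const int CompanionApps = 2;
const double TelemetryMb = 0.1;         // 100 KB per technical/usage message
const double ProfileGb = 0.003;         // 3 MB of context and profile data
const int SendIntervalMinutes = 5;
const int Days = 31;
const double FunctionSeconds = 0.08;    // measured average execution time (80 ms)
const double FunctionMemoryGb = 0.5;    // 512 MB

// Blob storage for context/profile data: storage + operations (multiplier 4 as in the worked numbers).
double blobProfile = Robots * ProfileGb * 0.024
                   + Robots * 4 * 10 * Days / 10_000.0 * 0.054;

// Event Hubs: one throughput unit (about $22/month) plus ingress events.
double sendsPerDay = 1440.0 / SendIntervalMinutes;                  // 288
double events = sendsPerDay * Robots * Days;                         // 19,641,600
double eventHubs = 22 + events / 1_000_000 * 0.028;

// Azure Functions: hourly SAS renewal; robots and apps call the blob function,
// robots also call the event hub function. The first 1M executions are free.
double executions = (Robots + Robots * CompanionApps) * 24.0 * Days
                  + Robots * 24.0 * Days;                            // 6,547,200
double gbSeconds = executions * FunctionSeconds * FunctionMemoryGb;  // 261,888
double billableGbSeconds = Math.Max(0, gbSeconds - 400_000);        // 0, so no resource charge
double functions = Math.Max(0, executions - 1_000_000) / 1_000_000 * 0.20;

// Stream Analytics: data processed plus one streaming unit for the whole month.
double processedGb = TelemetryMb * Robots * sendsPerDay * Days / 1000;  // 1,964.16 GB
double streamAnalytics = processedGb * 0.001 + 0.031 * 24 * Days;

// Blob storage for technical/usage data: Stream Analytics output plus one operation per event.
double blobTelemetry = processedGb * 0.024 + events / 10_000 * 0.004;

// Bandwidth: app downloads (multiplier 3 as in the worked numbers), first 5 GB free, plus 50%.
double downloadedGb = ProfileGb * 3 * 2 * Robots;                    // 39.6 GB
double bandwidth = (downloadedGb - 5) * 0.087 * 1.5;

double total = blobProfile + eventHubs + functions + streamAnalytics + blobTelemetry + bandwidth;
Console.WriteLine("Total per month: $" + total.ToString("F4", CultureInfo.InvariantCulture)
    + ", per robot: $" + (total / Robots).ToString("F5", CultureInfo.InvariantCulture));
```

Evaluated this way, the total comes to about $123.09 per month, or roughly $0.056 per robot; the largest items are the telemetry blob storage and the fixed Stream Analytics and Event Hubs capacity, not the per-robot traffic.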

Conclusion

In just three days, Blue Frog Robotics set up a full-featured back end. The company will now be able to monitor how robots are deployed and put into service after the launch, which is coming soon.

The resulting architecture is highly flexible: technicians can add new intermediate services, and the entire development environment and architecture can be deployed automatically across many regions. Best of all, the back-end cost per robot turned out to be very modest.

If you are interested in deploying a similar architecture or any of its components, the source code is available on GitHub.

As a reminder, on March 30 there will be an online conference on IoT for business, where you can talk with experts in the Internet of Things, machine learning and predictive analytics.

Source: https://habr.com/ru/post/324700/
