Statistics on property values - visualization on the map

Introduction

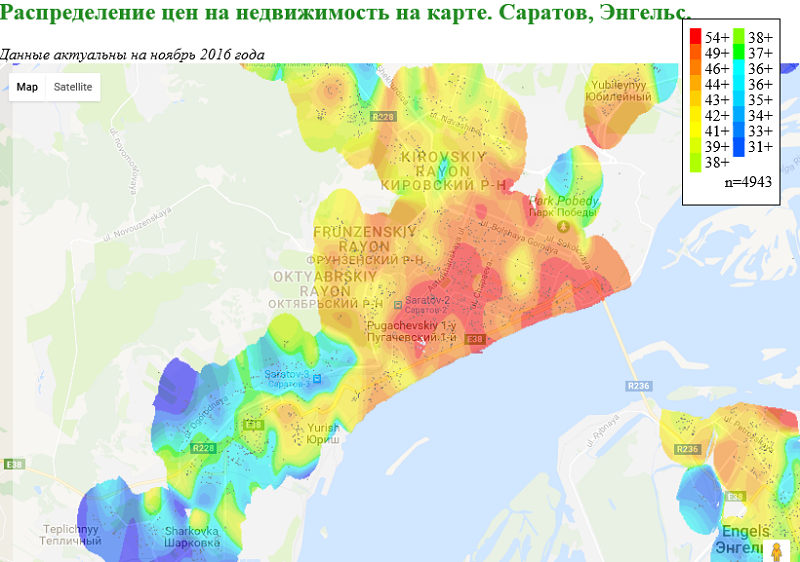

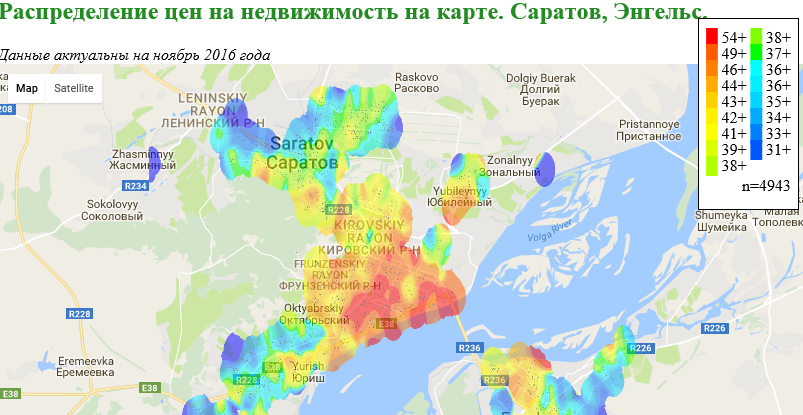

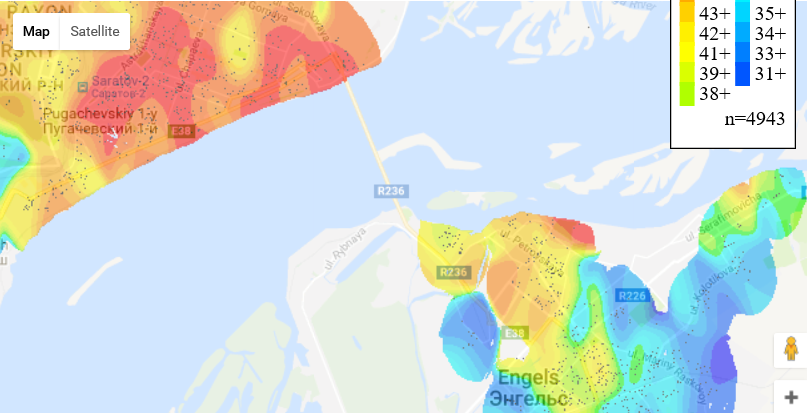

I'll start from the end. This is a screenshot from a web-card that visualizes the average value of real estate in the secondary market of Saratov and Engels:

The colors on the map can be compared with the colors on the “legend”, the color on the “legend” corresponds to the average cost per square meter of the total area in thousands of rubles.

')

The point on the map corresponds to one offer for the sale (on the secondary market) of an apartment with Avito. A total of such points, as can be seen in the “legend”, were used to plot 4943.

An interactive map is available on GitHub .

And now a little background ..

A long time ago…

... when, as a student of the mechanics department, I wrote a paper on statistical data on real estate prices, I accidentally found a useful document - a gradation of areas for real estate prices, something like “Price zones of Saratov”. I do not know who made it up, but it was made up quite plausibly, - the analysis showed that (taking into account other factors), the difference between the average cost per square meter of apartments from neighboring price zones was about 10%. Since then, to act as a real estate appraiser (at the household level), like most adults, had more than once.

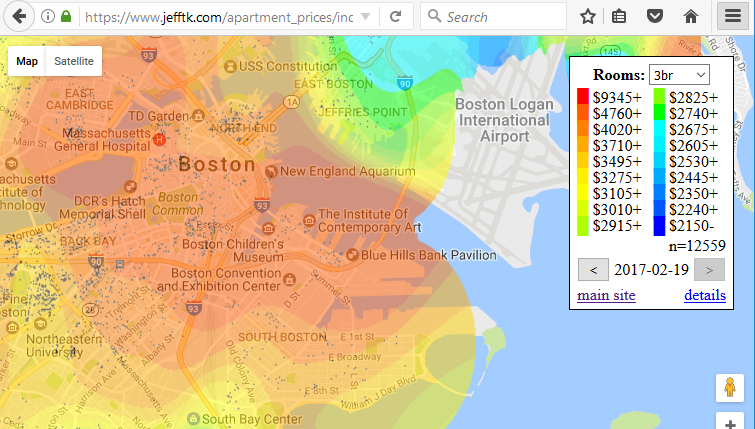

The idea of a more “scientific” approach to this issue came to mind when I saw the publication of Jeff Kaufman Boston Apartment Price Maps .

A friend did the following:

- Python script that parses the site PadMapper data on the cost of renting real estate.

- another script that, using the methods of Spatial interpolation, renders this data in the form of a bitmap image.

- Put this picture on a page with Google Maps

As a result, he got such an interactive map of the distribution of the rental price on the Boston map:

After the first “Wow, cool”, the next thought is “What if you try to do the same, but for Russia, not only at the cost of rent, but at prices per square of real estate”.

Immediately make a reservation, I am rather a database developer for the current occupation, and I am not an expert in GIS data, nor in front-end development, nor in statistics, nor in Python, so be condescending ... I hope this will not be very much and you will write me about it for correction.

And here comes the first major task.

Web Scraping with Avito.

Since with Python at that time I was completely on “you”, after studying the HTML code of Avito pages, I decided to write my own bicycle as a C # library using AngleSharp for parsing.

I will not go deep now in features of implementation, I will notice only that:

- I rendered all the parsing metadata of Avito into a separate configuration class, so that in the future, when the layout structure on Avito is changed, it would be possible to simply change the line in the configuration file.

- in order to avoid the ban from Avito, we had to add artificial delays in 10 seconds after processing each advertisement. (I agree - not the smartest way, but it was not possible to find a suitable pool of proxy-ipishnikov offhand).

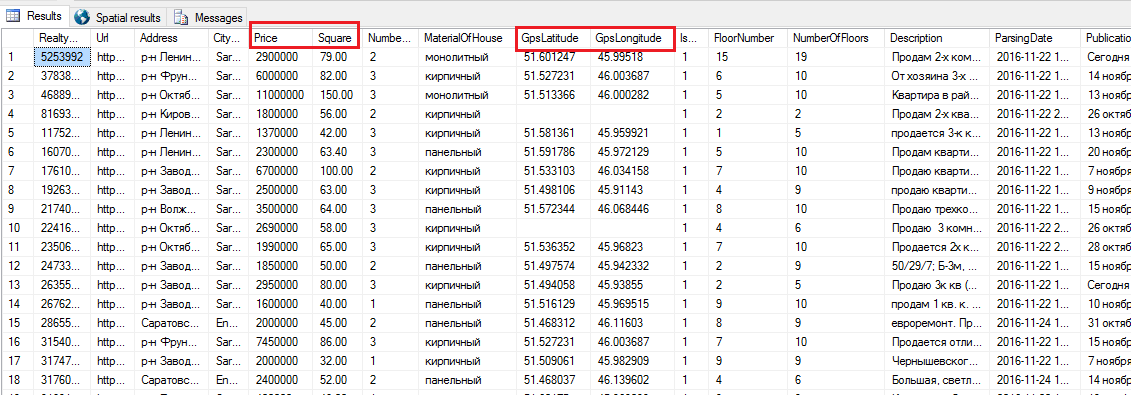

In the end, running the parsil for the night, I received the necessary data recorded in a table in the database

After clearing the data from the “Old Fund”, “Elite”, and all sorts of obviously inadequate offers, I received a sample of five thousand points - sales of apartments on the secondary market of Saratov and Engels. And this made it possible to proceed to the next step:

GIS data processing.

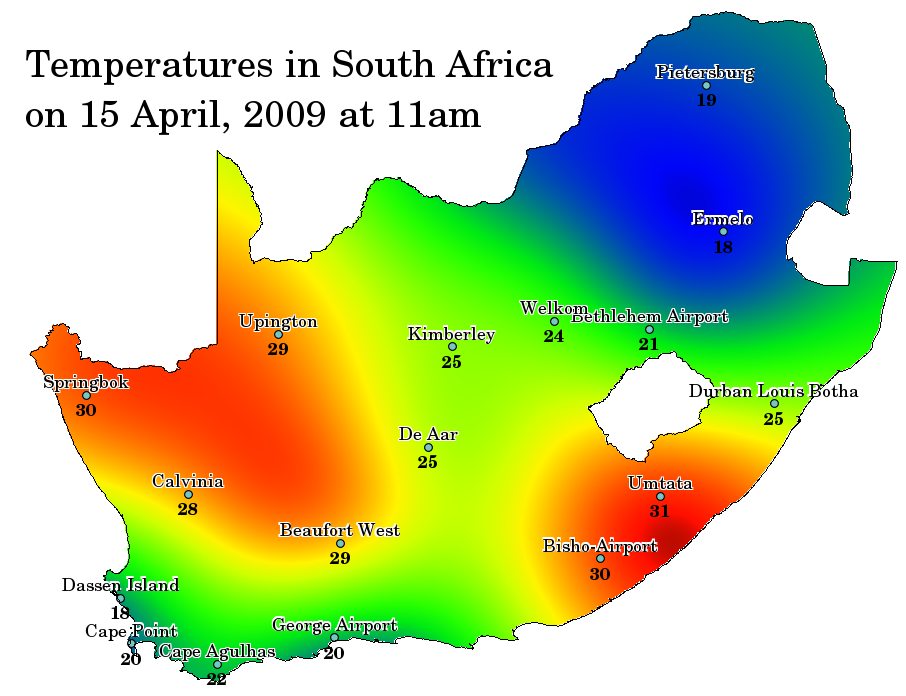

General formulation of the problem:

- there is a discrete set of values of a certain metric (temperature, altitude, substance concentration, etc.), with reference to the coordinates on the plane (X, Y or latitude, longitude),

- you need to be able to predict the value of this metric at an arbitrary point of this plane,

- the result is visualized as a color scheme on the plane. For a color scheme, for convenience, you can sample colors.

Example - at one moment they took readings from temperature sensors in different places, processed, received such a picture:

In fact, tasks of this kind require immersion in the topic “ Spatial interpolation ”. It has its own mathematical apparatus, for example:

- Inverse Distance Weighting (IDW) Interpolation

- Kriging

And there is a toolkit in the composition of popular GIS packages, for example

- Interpolation of qGIS point data

- GeoStatistical Analyst ArcGIS

- Use of SAGA GIS for spatial interpolation (kriging)

- Kriging in R.

IDW in qGIS (see the first of the links) I tried, the result did not satisfy me.

Therefore, in order not to get stuck for a long time in mastering the new toolkit, I preferred to use the ready-made Python script from Jeff Kraufman . For calculating distances and for translating GPS coordinates to X, Y coordinates on a raster, a simplified linear formula is used here, without using the projection used in Google Maps Web Mercator .

As I understand it, the script uses a variation on the Inverse Distance Weighting (IDW) theme, the main part (and the heaviest in the calculation part) in this script is part of this code.

Python code spatial interpolation

gaussian_variance = IGNORE_DIST/2 gaussian_a = 1 / (gaussian_variance * math.sqrt(2 * math.pi)) gaussian_negative_inverse_twice_variance_squared = -1 / (2 * gaussian_variance * gaussian_variance) def gaussian(prices, lat, lon, ignore=None): num = 0 dnm = 0 c = 0 for price, plat, plon, _ in prices: if ignore: ilat, ilon = ignore if distance_squared(plat, plon, ilat, ilon) < 0.0001: continue weight = gaussian_a * math.exp(distance_squared(lat,lon,plat,plon) * gaussian_negative_inverse_twice_variance_squared) num += price * weight dnm += weight if weight > 2: c += 1 # don't display any averages that don't take into account at least five data points with significant weight if c < 5: return None return num/dnm def distance_squared(x1,y1,x2,y2): return (x1-x2)*(x1-x2) + (y1-y2)*(y1-y2) I adjusted my data array to the required format, removed the part that does the calculation of correlations regarding the bedroom_category (number of rooms) from the script, played with the input parameters, started the calculation. On my computer (Intel® Core (TM) i7-6700 CPU, 16GB RAM), the calculation from 5 thousand points at the input and the image resolution at the output of 1000 * 1000 took about half an hour.

It remains to work a bit with the Front-End. We look at HTML source maps of Boston from Jeff Kraufman. We modify it a little to fit our data, and we get just such a “oil painting”.

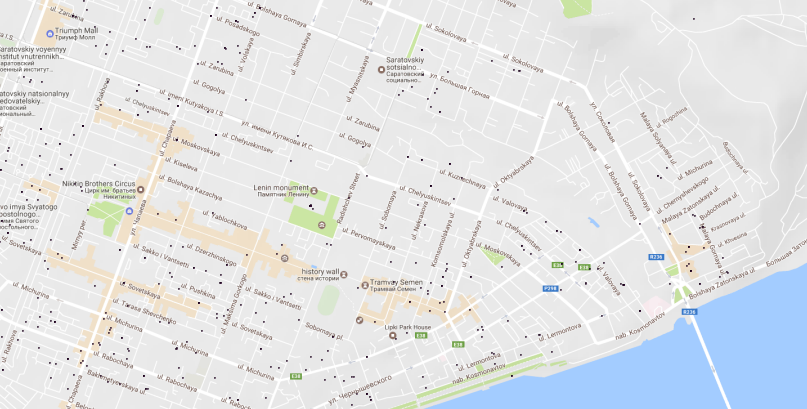

To check for obvious “jambs” in Georeferencing, we load the same set of points into qGIS, load Google Maps, compare pictures.

Having made sure that the points on the drawn map are in their places, and the colors on it faithfully reflect the prices of apartments in our village, we can breathe out - “Yes! Works!".

Only here it is a little sad that the picture got into the Volga. What is natural - the algorithm for calculating anything about the Volga does not know.

So the problem arises:

Crop image on the coastline map

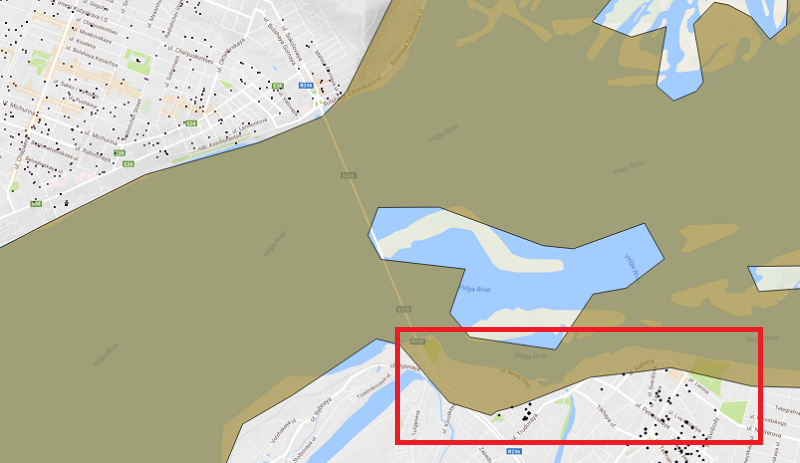

An attempt to find geo-data on the administrative boundaries of cities, taking into account the coastline, was not crowned with success. There is Saratov, there is Engels, and the border between them passes in the middle of the Volga. Okay, we'll go the other way.

We download shape files from open sources with data on coastlines, load as a vector layer in qGIS, select the desired polygons, understand the file structure, pull out polygons that belong to the Volga river near Saratov, fill the data in the database.

A bit of magic type:

DECLARE @Volga geography; select @Volga= PlacePolygon.MakeValid() from PlacesPolygons where PlacesPolygonID =1003 DECLARE @Saratov geography; select @Saratov= PlacePolygon.MakeValid() from PlacesPolygons where PlacesPolygonID =2 select @Saratov.STDifference(@Volga) And we get the desired landfill - the boundaries of the city, taking into account the coastline.

It remains to load this into qGIS, upload the image generated by the script as a raster layer, conduct Georeferencing for this image, and cut it around the polygon with the boundaries of cities, all using standard qGIS tools. Everything would be great if ... I managed to do it. But alas ... I didn’t have a relationship with Georeferencing in qGIS , and after several attempts I decided that using spatial functions from Microsoft.SqlServer.Types, it’s easier to quickly make another bike as a utility on Sharpe.

C # code for trimming the image by GEO polygon

string GPSPolygonVolga = " POLYGON ((46.2324447632 51…. ))"; private void CropByGPS() { Bitmap bmp = new Bitmap(inputFilePath); int w = bmp.Width; int h = bmp.Height; var GpsSizeOfPyxelX = (MAX_LON - MIN_LON) / w; var GpsSizeOfPyxelY = (MAX_LAT - MIN_LAT) / h; var VolgaPolygon = SqlGeography.STPolyFromText(new System.Data.SqlTypes.SqlChars(GPSPolygonVolga), SRID); for (int y = 0; y < h; y++) { for (int x = 0; x < w; x++) { Color c = bmp.GetPixel(x, y); var GPS = SqlGeography.Point(MAX_LAT - GpsSizeOfPyxelY * y, MIN_LON + GpsSizeOfPyxelX * x, SRID); if (VolgaPolygon.STContains(GPS)) // "" bmp.SetPixel(x, y, Color.Transparent); } } bmp.Save(outputFilePath, System.Drawing.Imaging.ImageFormat.Png); } We loaded the source file with a raster, got the same file as output, but in a bit “bitten form”. Laid on Google Maps in the browser, we look, - nothing crawls on the Volga, great! We look a little more attentively and we see ... what seems to be bitten off too much in some places. Jamb in the data or jamb in the procedure? Again we look at the loaded layers in qGIS.

Yes ... indeed, in some places we sometimes ... landfills from the reservoir layer clearly climbs into the city. Straight is not Engels, but some kind of Venice ... Well, there is no striving for perfection, we will go the other way.

Once the vector data is out of trust, we will work with raster ones. We need to superimpose two pictures, and cut from the first those points that correspond to the “blue” in the second.

A picture with a map can give us, for example, Google Maps static API .

We get API_KEY, we find out that:

- maximum resolution for free use = 640 * 640

- parametrization by BoundingBox -, unlike BING static maps, the Google API does not support, and it will be necessary to additionally correlate two figures in the coordinate plane.

Okay, we try. We get from Google Statics Map API some image with a map covering the area of interest to us, we write this code:

C # cropping on the picture

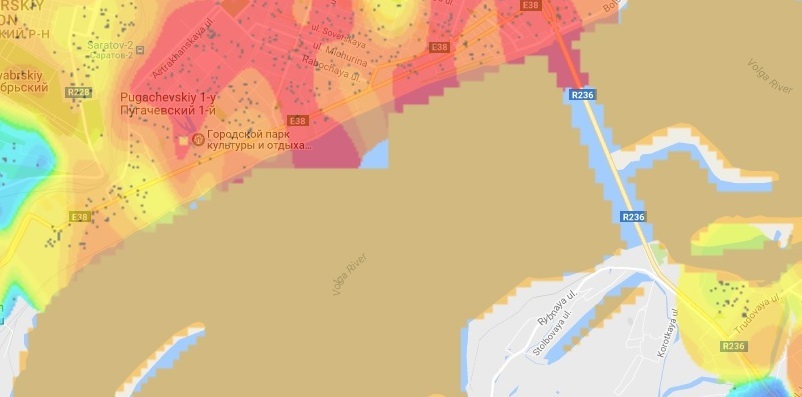

private static void CropByMapImage() { Bitmap bmp = new Bitmap(inputFilePath); Bitmap bmpMap = new Bitmap(mapToCompareFilePath); int w = bmp.Width; int h = bmp.Height; var GpsSizeOfPyxelX = (MAX_LON - MIN_LON) / w; var GpsSizeOfPyxelY = (MAX_LAT - MIN_LAT) / h; int wm = bmpMap.Width; int hm = bmpMap.Height; var cntAll = w * h; var cntRiver = 0; for (int y = 0; y < h; y++) { for (int x = 0; x < w; x++) { var currentLatitude = MAX_LAT - GpsSizeOfPyxelY * y; var currentLongitude = MIN_LON + GpsSizeOfPyxelX * x; if (currentLongitude < MIN_LON_M || currentLongitude > MAX_LON_M || currentLatitude < MIN_LAT_M || currentLatitude > MAX_LAT_M) continue; //todo - something with this ugly code var CorrespondentX = (int)((currentLongitude - MIN_LON_M) / (MAX_LON_M - MIN_LON_M) * wm); var CorrespondentY = (int)((MAX_LAT_M-currentLatitude) / (MAX_LAT_M - MIN_LAT_M) * hm); Color cp = bmp.GetPixel(x, y); //color of current pixel Color mp = bmpMap.GetPixel(CorrespondentX, CorrespondentY); //color of correspondent Map pixel if (Math.Max(Math.Max( Math.Abs(mp.R - WaterMapColor.R) , Math.Abs(mp.G - WaterMapColor.G)) , Math.Abs(mp.B -WaterMapColor.B))< colorDiff /* && cp.A != Color.Transparent.A */)// blue water is not always the same blue { bmp.SetPixel(x, y, Color.Transparent); cntRiver++; } } } bmp.Save(outputFilePath, System.Drawing.Imaging.ImageFormat.Png); } We process our file with a resolution of 1000 * 1000 using a file with a resolution of 640 * 640, we get ... this result (here, for clarity, those points that should be Transparent are made yellow):

The result is sad, including also because the Google Map is scaled discretely and, therefore, covers our generated raster with a large margin. That is, or you need to take to “trim” a lot of maps with a suitable scale, or ... like all the same, find one, but with a good resolution.

I went the second way, that is, simply by taking a screenshot of the desired projection of the usual Google Map from the browser. GPS coordinates of corners (mapping bound), I found out by adding in JavaScript code this:

google.maps.event.addListener(map, 'bounds_changed', function () { console.log(map.getBounds()); I processed our raster with the help of a new map from the saved screenshot, uploaded to the browser and ... the result I was rather pleased. Even a small pond in the local city park was “cleaned out” quite correctly (within the GPS positioning accuracy).

Everything, we will consider the first stage of the quest to be completed, upload the results on GitHub to the daddy shared for hosting, and we can boast to the IT community.

So, what is next?

And then there are many questions that I do not know a clear answer:

- How well does the calculation algorithm work here compared to other methods of spatial interpolations?

- What other factors, besides the location of the property, it makes sense to consider when calculating and how to enter into the calculation model

- Is it possible to make a constant update of the data in the database and build on this some kind of real estate valuation system? (If Avito representatives read me on this site, I would like to hear comments)

Well ... thanks to everyone who has read this far. It was interesting for me to solve a practical problem, which turned out to be “rather challenging for me”, I hope you were interested in reading about my experience.

Source: https://habr.com/ru/post/324596/

All Articles