Power to the people: how to use AI to solve human problems

The impressive results of a number of studies conducted in recent years have attracted the attention of the world community to the topic of machine learning. Since the “ winter of artificial intelligence ”, we have never been so inspired by the capabilities of this technology. But despite the surge of interest, a number of scientists believe that many of us are paying too much attention to the wrong research. Behind all this hype almost imperceptible remained a small group of researchers who quietly lay the foundation for the further use of machine learning, which will solve many problems of humanity.

')

Today's surge of enthusiasm for AI began with success in solving the problem of image classification using deep convolutional neural networks. Usually in this area progress is calculated in units of percent, and this team of researchers completely destroyed this tradition. The aforementioned work gave impetus to other studies, and as a result, significant progress was made in a number of applied areas, including speech and face recognition. The global community of machine learning developers quickly picked up this new set of approaches, and then became obsessed with them.

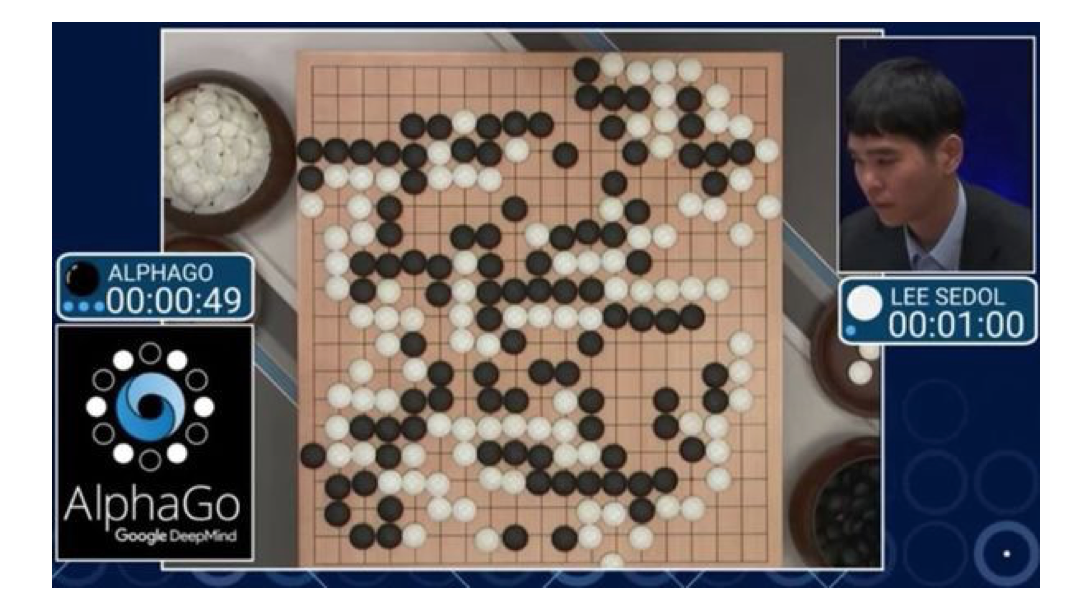

As machine learning began to be engaged in more and more new large companies, including Google and Facebook, the general public began to increasingly talk about quite understandable - and extremely impressive - achievements. AlphaGo won the historic battle with the world champions in the game of Go. IBM Watson won the Jeopardy quiz. Less loud achievements, like Neural Style Transfer and Deep Dream, became sources of visual memes, which quickly spread across social networks.

All these successes threw firewood into the furnace of speculation and unrelenting attention from the press. This attracted the attention of executives, professionals working at the forefront of technology, and architects in many different business segments. Venture investors began to invest in AI-related initiatives. Many startups dream of gaining competitive advantages by creating interfaces for mobile and web applications that are able to carry on a dialogue with the user. Just as many startups want to improve with the help of AI products for the Internet of things. Artificial intelligence is even trying to bring to the solution of problems in the field of marketing.

But despite all the current achievements, AI systems are not yet close enough to solve any of the main problems of humanity on their own. So far this is only a fairly powerful tool that can lead to the creation of unprecedented technologies only under one condition - if we learn to use AI to meet our needs.

What prevents the use of AI in the daily work of tens and hundreds of thousands of companies around the world? There is no shortage of new learning algorithms. Not a shortage of programmers deeply versed in stochastic gradient slopes and backward propagation. And not even in the lack of available software libraries. For widespread AI, we need to understand how to create interfaces that can put the power of this technology into the hands of users. It is necessary to develop a new discipline of hybrid design, allowing you to clearly realize the potential of AI-systems to interact and understand people. To understand how people can correctly or mistakenly use these opportunities, how they can abuse them.

Let's remember the story. In 1978, the VisiCalc program was released. It is the result of using programming capabilities to address the working needs of ordinary people. Thus, the world's first “spreadsheet” was born.

" I thought that I would have a board on which to write and erase numbers, but she would recount everything herself " - Dani Bricklin, author of VisiCalc.

Behind the hype around the successes in the field of in-depth training, an entire research area quietly formed, dedicated to solving the problem of designing human interaction with machine learning systems. This small, but very impressive direction was called Interactive Machine Learning. It lies at the intersection of such areas as research in the field of user interaction experience and machine learning. Today, many people are puzzled over how to implement AI in their business, or in the work toolkit, or in the software product, or in the development methodology. Perhaps today is the most pressing topic in the field of AI.

Recurrent neural networks have surpassed convolutional neural networks. Then came the deep-learning networks with reinforcements (Deep Reinforcement Learning). Soon they will give way to the pedestal of another technology. In the field of AI, everything changes very quickly, and all new algorithms pull out from each other the challenge title “the fastest” in a given task. But at the same time, the principles of design will remain unchanged, allowing people to use the learning systems to solve their problems.

The creation of these principles is just dedicated to interactive machine learning. And you must master them perfectly, if you are engaged in designing, controlling or programming AI to solve applied problems.

First, let's see what has already been done in this direction. Most of what will be discussed below is drawn from the work of Brad Knox (Brad Knox) “ Power to the people: the role of people in interactive machine learning, ” written in collaboration with several researchers.

Note: Unlike most machine learning works, publications in the field of IMO are more friendly to non-specialists. I recommend reading the originals of a number of works .

Active learning as a way to get maximum help from people.

The main task of most machine learning systems is to compile information from data samples created by people. The process begins with the fact that people collect arrays of tagged data: descriptions of the depicted objects are attached to the pictures, names of people to portraits, text transcriptions to voice recordings, and so on. Then begins the training. The algorithm processes the data fed to it. At the end of the training, the algorithm creates a classifier, in fact, a small separate program that gives the correct answers to new input data that are not part of the training set. It is the classifiers that need to be integrated into real projects, when you need to determine the age of a user from a photo, or recognize faces, or transcribe speech when a person is on the phone. In this scheme, human labor in marking a training set is the most scarce resource.

Many impressive results in the field of in-depth training have been achieved in areas where huge amounts of tagged data are available, laid out by billions of users of social networks or collected on the Internet . But if you are not Facebook or Google, then it will not be so easy for you to find tagged data that is suitable for solving your problem. Especially if you are working in a new field, with your jargon, behavior or sources of information. Therefore, it is necessary to collect data from its users. And this entails the creation of an interface that will visually demonstrate examples of text, or images, or other data that you want to classify and correctly describe.

Human labor, especially user work, is in short supply. So, you need to ask users to label data, which will help to maximize the performance of your system. Active Learning is a direction in machine learning that studies the described problem: how to find samples of data, marking which by people will help to improve the system as much as possible. Researchers have already developed a number of algorithmic approaches. This includes techniques:

• search for samples that the system understands the worst

• identification of samples, marking of which will give the greatest effect in terms of recognition by the system

• selection of samples for which the system predicts the highest level of errors, and so on

Here is a link to an article that can be a great introduction to the issue.

Here is a concrete example: a gesture recognition system built using the principles of active learning, when a user is asked to mark gestures for which the system cannot give a clear prediction. Details here and here .

Do not take away reins of power from users

Researchers of active learning demonstrate success in creating more accurate classifiers using fewer tagged data. But from the point of view of interaction design, active learning has an obvious drawback: it makes the learning system dependent on the interaction itself, and not on the user. A person carrying out sample labeling is referred to by researchers in the field of active learning as an oracle. However, their colleagues in the field of interactive machine learning have proven that people do not like this approach.

People don't like it when the robot tells them what to do. It is much more pleasant for them to interact, and if the interaction with the machine depends on them, then they are ready to spend more time on its training.

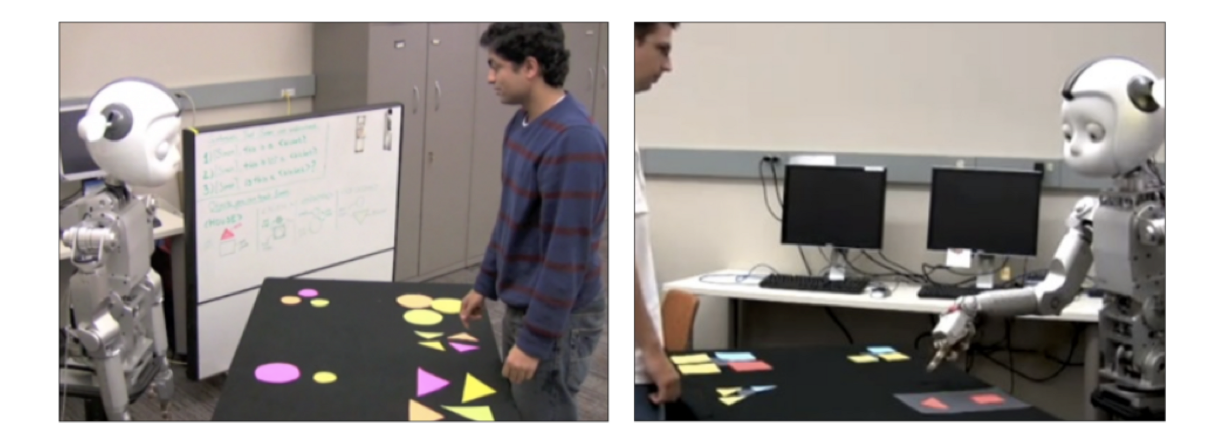

In the 2010 study, “ Designing Interactions for Active Learning of Robots, ” the users' perception of passive and active approaches to learning robots in form recognition was investigated. In one of the options, the initiative was given to the car. The robot itself chose which next object to label the person. He pointed to it and waited for the user's response. In another embodiment, the man himself chose what to show the robot.

When the robot had the initiative, people described its flow of questions as “unbalanced and annoying”. Users also noted that it was more difficult for them to determine the degree of robot training, which reduced their effectiveness as teachers.

Similar sensations were described by the subjects in another study , where the program played the role of a robot: the Netflix film rating system was actively trained.

Choose algorithms for their ability to explain classification results.

Suppose you have any health problems that need to be diagnosed. You can choose one of two AI systems for this. The first has an accuracy of 90% or more available. She takes your medical information and makes a diagnosis. You will not be able to ask questions or find out how she came to this conclusion. You just get a description of your status in Latin and a link to Wikipedia.

The second system has an accuracy of 85%. She also takes your data and makes a diagnosis. But at the same time explains how she came to such a decision. Your blood pressure is higher than normal, certain risks increase at your age, three of five key factors are noted in your family history, and so on. Which of the two systems will you choose?

There is a cliché in marketing: “ half of the advertising budget is wasted, but no one knows which half ”. In the field of machine learning there is a similar cliché: " it is easy to create a system that is right for 80% of the time, it is difficult to calculate which 80% exactly ." Users trust the learning systems more when they understand how they come to their decisions. Users are able to better adjust and improve these systems if they see the inner kitchen of their work.

So if we want to create systems that users trust and that we can quickly improve, we need to choose algorithms not by the frequency of correct answers, but by the ability to explain our “train of thought”.

From this point of view, some machine learning algorithms provide slightly more possibilities than others. For example, neural networks that today are breaking records in accuracy in a number of tasks are almost incapable of such explanations. In fact, these are just black boxes giving out answers. On the other hand, random decision trees algorithms (Random Decision Forests) have excellent capabilities for justifying classification and building interactive control of learning systems. You can calculate which variables were more important, how confident the system was in each prediction, what the degree of similarity of the two samples was, and so on.

You don't choose a database, or a web server, or a JavaScript framework, simply on the basis of performance tests. You will learn the API and appreciate how it supports the interface that you want to provide to your users. Similarly, machine learning developers need access to the internal states of classifiers in order to create more functional, more interactive user interfaces.

In addition to the design of such systems, we need to give users the opportunity to improve the results themselves, to manage them. Microsoft Research Todd Kulesza from Microsoft Research did a lot of work on solving this problem, which he called “ Explanatory Debugging ”. Todd created machine learning systems that could explain why they classified the data in this way. These explanations are then used as an interface, through which users give a reverse response for the sake of improving and, more importantly, personalizing the result. An excellent example of this idea is described in Todd's paper “ Debugging a Naive Bayes Classification of Text by End Users ”.

Give users the ability to create their own classifiers

According to common practice, engineers create classifiers, system architects integrate them into interfaces, and users then interact with what has happened. The disadvantage of this approach is that it separates the practice of machine learning from knowledge on issues and the ability to evaluate the results of the system. Engineers and data processing specialists can understand the algorithms and statistical tests used to evaluate the results, but they can completely capture all the input data and do not notice the problems in the results that are obvious to users.

At best, this approach provides a very slow cyclical improvement in results. With each iteration, engineers turn to users, slowly examine the problem and make incremental improvements. In practice, this cumbersome cycle leads to the fact that machine learning systems are put into operation with obvious flaws for end users. Or the creation of such systems to solve many real-world problems is too expensive.

To avoid this situation, you need to give users the ability to directly create classifiers. To do this, we need to design interfaces that allow labeling samples, select properties, and perform all other operations necessary to harmonize users' mental representations with the workflow. When we understand how to do this, we will achieve incredible results.

One of the most impressive experiments in the field of interactive machine learning was associated with invitations to groups on Facebook: " ReGroup: interactive machine learning to create groups on demand ."

Now an invitation to an event on Facebook is done like this: you create a new event, and then invite friends. The system offers an alphabetical list of hundreds of your friends with a flag next to each name. You look at this sheet in despair, and then click "invite everyone." As a result, all your friends receive invitations to events where they cannot go, because they live in other cities.

In the experiment, it was proposed to radically improve the system of invitations. At first, users were offered the same list of names with flags. But when a person checked a box next to a name, it was marked as a positively labeled pattern. And all missing names are negatively labeled. The obtained data were used to train the classifier, using data from profiles and social connections as additional properties. Then, the system calculated for each contact the probability that he would be invited to an event, and sorted out the list, placing the contacts with the highest probability. Criteria were used for evaluation such as where a person lives, what are his social connections, how long have you been friends with him and so on. And the results of the classifier quickly became really useful for users of the social network. Incredibly elegant combination of the current patterns of user interaction with the system and the data necessary for training the classifier.

Another great example is CueFlik . Its goal: to improve the web image search, giving users the ability to create rules for automatically grouping photos by their visual properties. For example, a user searches for a stereo query and then selects “product photos” (just images on a white background). CueFlik takes examples and teaches the classifier so that it can distinguish product photos from ordinary photos, which the user can then include in other search queries, for example, according to the words “machines” or “phones”.

Conclusion

Thinking about the future of artificial intelligence, it is easy to slip into stereotyped representations from science fiction films and books, like Terminator, Odyssey 2001 or She. But all these fantasies far more reflect our concern about technology, gender, and human nature than the reality of creating modern machine learning systems.

Instead of seeing the recent revolutionary advances in the field of deep learning as gradual steps towards frightening sci-fi scenarios, it's better to think of them as powerful new engines for thousands of projects like ReGroup and CueFlik. Projects that give us unprecedented opportunities in the knowledge and management of our world. Machine learning can make a difference in our whole life, starting with shopping and ending with diagnoses and ways of interacting with other people. But for this we need not only to teach the machine to learn, but also to put the learning results at the service of people.

Source: https://habr.com/ru/post/324574/

All Articles