A beginner's guide to programming graphics shaders

The ability to write graphics shaders unlocks the full power of modern GPUs, which already contain thousands of cores that can execute your code quickly and in parallel. Shader programming requires a slightly different way of looking at some things, but the potential it opens up is worth the time spent studying it.

Virtually every modern graphic scene is the result of some code written specifically for the GPU - from realistic lighting effects in the latest AAA games to 2D effects and fluid simulation.

The scene in Minecraft before and after applying several shaders.

')

Shader programming sometimes seems mysterious black magic. Here and there you can find separate pieces of shader code that promise you incredible effects and, perhaps, really are able to provide them - but they do not at all explain what they do and how they achieve such impressive results. This article will try to close this gap. I will focus on basic things and terms related to writing and understanding shader code, so that later you can change the shader code yourself, combine them, or write your own from scratch.

A shader is simply a program that runs on one of the graphics cores and tells the video card how to draw each pixel. Programs are called “shaders” because they are often used to control the effects of lighting and shading (“shading”). But, of course, there is no reason to limit ourselves to these effects.

Shaders are written in a special programming language. Do not worry, you do not need right now to go and learn from scratch a new programming language. We will use GLSL (OpenGL Shading Language), which has a C-like syntax. There are other programming languages for shaders for different platforms, but since their ultimate goal is to run the same code on the GPU, they have quite similar principles.

This article will tell only about the so-called pixel (or fragment) shaders. If it became interesting to you, and what they are still - you should read about the graphics pipeline (for example, in the OpenGL Wiki ).

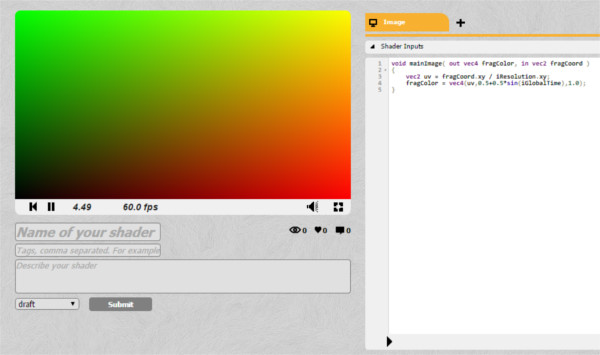

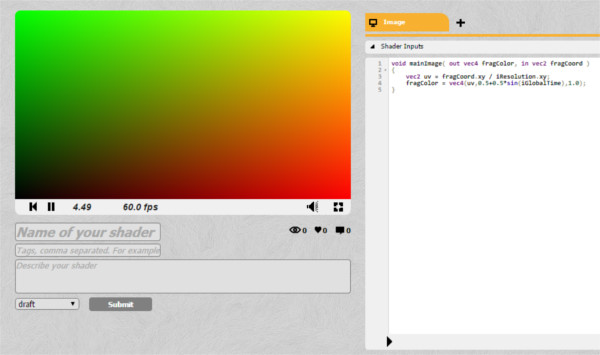

For our experiments, we will use ShaderToy . This will allow you to take and start writing shader code here and now, without delaying the matter until later because of the need to install some specific tools or SDK. The only thing you need is a browser with WebGL support. It is not necessary to create an account on ShaderToy (only if you want to save your code there).

Note : ShaderToy is currently in beta, so at the time you read this article, some of the nuances of its UI may change.

So, press the New button in the right corner, which will lead to the creation of a new shader:

The little black arrow under the code compiles and runs the shader.

I will now explain how the shader works, in exactly one sentence. You are ready? Here it is. The only purpose of a shader is to return four numbers: r, g, b, and a.

This is all that a shader can and should do.

The function that you see above runs for each pixel on the screen. And for each of them, it returns the four numbers above, which become the color of this pixel. This is how pixel shaders work (sometimes also called fragment shaders).

So, now we have enough knowledge to, for example, fill the entire screen with a pure red color. The values of each of the rgba components (red, green, blue and “alpha” - that is, “transparency”) can be in the range from 0 to 1, so in our case we will simply return r, g, b, a = 1.0 0.1 ShaderToy expects the final color of the pixel in the fragColor variable.

My congratulations! This is your first working shader!

Mini task : can you fill the entire screen in gray?

vec4 is just a data type, so we can declare our color as a variable:

This example is not very exciting. We have the power of hundreds or thousands of processing cores capable of working efficiently and in parallel, and we shoot sparrows from this cannon, filling the entire screen with one color.

Let's at least draw a gradient. To do this, as you can guess, we need to know the position of the current pixel on the screen.

Each pixel shader has at its disposal several useful variables . In our case, the most useful will be the fragCoord, which contains the x and y coordinates (and also z, if you need to work in 3D) of the current pixel. To begin with, let's try to paint all the pixels in the left half of the screen black and on the right half red:

Note : to access the components of variables of type vec4, you can use obj.x, obj.y, obj.z, obj.w or obj.r, obj.g, obj.b, obj.a. These are equivalent entries. In this way, we are able to name the vec4 components, depending on what they are in each particular case.

Do you already see a problem with the code above? Try pushing the full screen button. The proportions of the red and black parts of the screen will change (depending on the size of your screen). In order to paint over exactly half of the screen, we need to know its size. Screen size is not a built-in variable, since it is something that the application programmer controls himself. In our case, it is the responsibility of the ShaderToy developers.

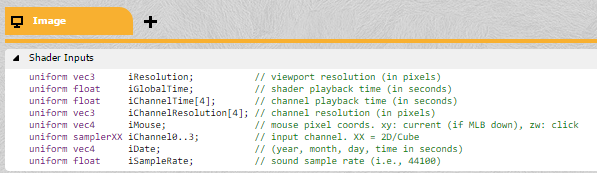

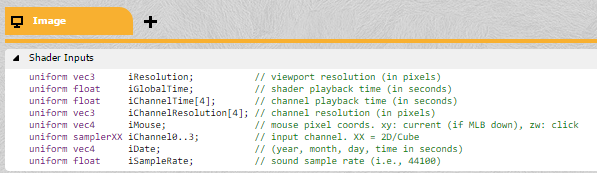

If something is not a built-in variable, you can send this information from the CPU (the main code of your application) to the GPU (your shader). ShaderToy does it for you. You can view all the variables available to the shader in the Shader Inputs tab. In GLSL, they are called uniform variables.

Let's fix our code so that it correctly defines the middle of the screen. To do this, we need the iResolution uniform variable:

Now, even with an increase in the preview window (or switching to full-screen mode), we get a black and red rectangle divided exactly in half.

Changing our code to get a gradient fill is easy. The color components can be in the range from 0 to 1, and our coordinates are now also represented in the same range.

Voila!

Mini-task : do you try to make a vertical gradient yourself? Diagonal? How about switching between more than two colors?

If you have not missed the above task with a vertical gradient, then you already know that the upper left corner has coordinates (0; 1), and not (0; 0), as one would assume. This is important, remember this.

Having fun with color shading is, of course, funny, but if we want to realize some truly spectacular effect, our shader should be able to accept a picture at the input and modify it. Thus, we can write a shader that can affect, for example, the entire frame in the game (draw effects of liquids or color correction) or vice versa, perform only certain operations for some objects of the scene (for example, implement a part of the lighting system).

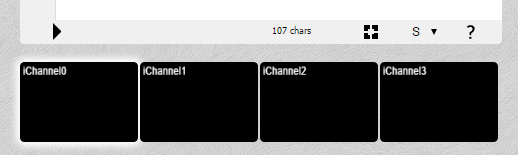

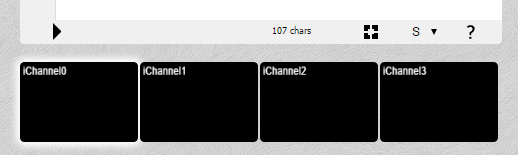

If we wrote shaders on any conventional platform, we would have to transfer the image to the shader as a uniform variable (in the same way as the screen resolution was transmitted). ShaderToy does it for us. There are four input channels below:

Click on the iChannel0 channel and select any texture (image). Now you have a picture that will be transferred to your shader. But there is one problem: we don’t have the DrawImage () function. You remember, all that a shader can do is return the rgba value for one pixel.

So, if we can only return the color value, then how do we draw a picture on the screen? We must somehow relate the pixel in the picture to the pixel for which the shader was called:

We can do this by using the texture (textureData, coordinates) function, which accepts texture and coordinates (x, y) as input, and returns the color of the texture at this point as a variable of type vec4.

You can relate the texture and screen pixels as you like. You can, for example, stretch the texture to a quarter of the screen or draw only a part of it. In our case, we just want to see the original image:

And here it is, our picture!

Now, when you can pull data from a texture, you can manipulate it as you like. You can stretch or compress the image, play with its colors.

Let's add here the gradient we already know:

Congratulations, you just wrote your first post-processing effect!

Mini-task : can you write a shader that converts the input image into a black and white image?

Notice, although we use a static image, what you see on the screen is rendered in real time, many times per second. You can verify this by replacing the static image on the video in the input channel (just click on the iChannel0 channel and select the video).

Up to this point all our effects were static. We can do much more interesting things using the input parameters provided by the ShaderToy developers. iGlobalTime is an ever-increasing variable - we can use it as a basis for periodic effects. Let's try playing with the colors:

GLSL has built-in sine and cosine functions (and many other useful ones). The color components should not be negative, so we use the abs function.

Mini-task : can you make a shader, which will periodically smoothly make the picture black and white, and then full color again?

When writing ordinary programs, you may have used the debugging output or logging, but for shaders this is not very possible. You can find some debugging tools for your specific platform, but in general, it is best to present the value you need in the form of some graphical information that you can see in the output with the naked eye.

We have considered only the basic shader development tools, but you can already experiment with them and try to make something of your own. Look at the effects available on ShaderToy and try to understand (or independently reproduce) some of them.

One of the (many) things that I did not mention in this article is vertex shaders (Vertex Shaders). They are written in the same language, but are launched not for pixels, but for vertices, returning, respectively, the new position of the vertex and its color. Vertex shaders do, for example, display a 3D scene on the screen.

The last mini-task: can you write a shader that will replace the green background (available in some videos on ShaderToy) with another picture or video?

That's all that I wanted to tell in this article. In the following, I will try to talk about lighting systems, fluid simulation, and shader design for specific platforms.

Virtually every modern graphics scene is the result of code written specifically for the GPU, from realistic lighting effects in the latest AAA games to 2D effects and fluid simulation.

The scene in Minecraft before and after applying several shaders.

The purpose of this guide

Shader programming sometimes seems like mysterious black magic. Here and there you can find isolated pieces of shader code that promise incredible effects and may well deliver them, but they do not explain at all what they do or how they achieve such impressive results. This article tries to close that gap. I will focus on the basic concepts and terms involved in writing and understanding shader code, so that later you can modify shaders yourself, combine them, or write your own from scratch.

What is a shader?

A shader is simply a program that runs on the graphics cores and tells the video card how to draw each pixel. These programs are called “shaders” because they are often used to control lighting and shading effects. But, of course, there is no reason to limit ourselves to those effects.

Shaders are written in a special programming language. Do not worry, you do not need to go and learn a new language from scratch right now. We will use GLSL (OpenGL Shading Language), which has a C-like syntax. Other platforms have their own shading languages, but since they all ultimately aim to run the same kind of code on the GPU, their principles are quite similar.

This article covers only so-called pixel (or fragment) shaders. If you are curious about what other kinds exist, read about the graphics pipeline (for example, in the OpenGL Wiki).

Let's begin!

For our experiments we will use ShaderToy. It lets you start writing shader code right away, without putting things off because you first need to install specific tools or an SDK. All you need is a browser with WebGL support. You do not have to create a ShaderToy account unless you want to save your code there.

Note: ShaderToy is currently in beta, so by the time you read this article some details of its UI may have changed.

So, click the New button in the right corner to create a new shader:

The little black arrow under the code compiles and runs the shader.

What's going on here?

I will now explain how a shader works in exactly one sentence. Are you ready? Here it is. The only purpose of a shader is to return four numbers: r, g, b, and a.

That is all a shader can and should do.

The function you see in the editor runs for every pixel on the screen. For each of them it returns the four numbers mentioned above, which become the color of that pixel. This is how pixel shaders (sometimes also called fragment shaders) work.

So, we now know enough to, for example, fill the entire screen with pure red. Each of the rgba components (red, green, blue and “alpha”, that is, transparency) can take a value from 0 to 1, so in our case we simply return r, g, b, a = 1, 0, 0, 1. ShaderToy expects the final color of the pixel in the fragColor variable.

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        // Red, green, blue, alpha: opaque pure red for every pixel.
        fragColor = vec4(1.0, 0.0, 0.0, 1.0);
    }

Congratulations! This is your first working shader!

Mini-task: can you fill the entire screen with gray?

vec4 is just a data type, so we can declare our color as a variable:

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        // Store the color in a variable first, then output it.
        vec4 solidRed = vec4(1.0, 0.0, 0.0, 1.0);
        fragColor = solidRed;
    }

This example is not very exciting. We have hundreds or thousands of processing cores capable of working efficiently and in parallel, and we are using that cannon to shoot sparrows by filling the entire screen with a single color.

Let's at least draw a gradient. To do this, as you can guess, we need to know the position of the current pixel on the screen.

Shader Input Parameters

Each pixel shader has several useful variables at its disposal. In our case the most useful one is fragCoord, which contains the x and y coordinates of the current pixel (the underlying GLSL built-in, gl_FragCoord, also carries a z component if you need to work in 3D). To begin with, let's paint all the pixels in the left half of the screen black and those in the right half red:

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 xy = fragCoord.xy;                    // coordinates of the current pixel
        vec4 solidRed = vec4(0.0, 0.0, 0.0, 1.0);  // actually black for now
        if (xy.x > 300.0) {                        // arbitrary number: we do not know the screen size yet
            solidRed.r = 1.0;                      // set the red component to 1.0
        }
        fragColor = solidRed;
    }

Note: to access the components of a vec4 variable you can use obj.x, obj.y, obj.z, obj.w or obj.r, obj.g, obj.b, obj.a. The two notations are equivalent, so we can name the vec4 components according to what they represent in each particular case.
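To make the note about component names more concrete, here is a minimal sketch written for ShaderToy. The variable names (color, red, alsoRed, rgb, bgr) are invented for this illustration, and reading several components at once, usually called swizzling, goes slightly beyond what the note itself says.

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec4 color = vec4(0.2, 0.4, 0.6, 1.0);

    // .r and .x are two names for the same first component.
    float red     = color.r;   // 0.2
    float alsoRed = color.x;   // 0.2

    // Several components can be read at once.
    vec3 rgb = color.rgb;      // (0.2, 0.4, 0.6)

    // Components can also be reordered while reading.
    vec3 bgr = color.bgr;      // (0.6, 0.4, 0.2)

    // Output the color with red and blue swapped, keeping the original alpha.
    fragColor = vec4(bgr, color.a);
}
```

Using the color names for colors and the coordinate names for positions has no effect on the result; it only makes the code easier to read.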

Do you already see a problem with the code above? Try pressing the full-screen button. The proportions of the red and black parts of the screen will change (depending on the size of your screen). To paint exactly half of the screen we need to know its size. Screen size is not a built-in variable, since it is something the programmer of the application controls. In our case that is the responsibility of the ShaderToy developers.

If something is not a built-in variable, you can send that information from the CPU (the main code of your application) to the GPU (your shader). ShaderToy does this for you. You can see all the variables available to the shader in the Shader Inputs tab. In GLSL they are called uniform variables.

Let's fix our code so that it correctly determines the middle of the screen. To do this we need the iResolution uniform variable:

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 xy = fragCoord.xy;                    // coordinates of the current pixel
        xy.x = xy.x / iResolution.x;               // divide the coordinates by the screen size
        xy.y = xy.y / iResolution.y;
        // Now x is 0 for the leftmost pixel and 1 for the rightmost pixel.
        vec4 solidRed = vec4(0.0, 0.0, 0.0, 1.0);  // actually black for now
        if (xy.x > 0.5) {
            solidRed.r = 1.0;                      // set the red component to 1.0
        }
        fragColor = solidRed;
    }

Now, even if you enlarge the preview window (or switch to full-screen mode), we get a rectangle divided exactly in half into black and red.

From screen splitting to gradient

Changing our code to get a gradient fill is easy. The color components can range from 0 to 1, and our coordinates are now expressed in the same range.

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 xy = fragCoord.xy;                    // coordinates of the current pixel
        xy.x = xy.x / iResolution.x;               // divide the coordinates by the screen size
        xy.y = xy.y / iResolution.y;
        // Now x is 0 for the leftmost pixel and 1 for the rightmost pixel.
        vec4 solidRed = vec4(0.0, 0.0, 0.0, 1.0);  // actually black for now
        solidRed.r = xy.x;                         // red grows with the normalized x coordinate
        fragColor = solidRed;
    }

Voila!

Mini-task: can you make a vertical gradient yourself? A diagonal one? How about blending between more than two colors?

If you did not skip the vertical gradient task above, you already know that the upper left corner has coordinates (0, 1), and not (0, 0), as one might assume. This is important, so remember it.
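One way to check the orientation of the y axis for yourself is to turn the y coordinate into brightness. This is only a minimal sketch for ShaderToy that reuses the normalization from the earlier examples: the bottom edge of the preview should come out black and the top edge white, which matches an origin in the lower left corner.

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    // Normalize the pixel position to the 0..1 range.
    vec2 xy = fragCoord.xy / iResolution.xy;

    // Brightness follows y: black where y = 0, white where y = 1.
    fragColor = vec4(xy.y, xy.y, xy.y, 1.0);
}
```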

Drawing images

Playing with color fills is fun, of course, but if we want to implement a truly spectacular effect, our shader has to be able to take a picture as input and modify it. That way we can write a shader that affects, for example, the entire frame of a game (liquid effects or color correction) or, on the contrary, performs only certain operations for some objects in the scene (for example, implementing part of the lighting system).

If we were writing shaders on a conventional platform, we would have to pass the image to the shader as a uniform variable (the same way the screen resolution was passed). ShaderToy does this for us. There are four input channels below the code:

Click on the iChannel0 channel and select any texture (image). Now you have a picture that will be passed to your shader. But there is one problem: we do not have a DrawImage() function. Remember, all a shader can do is return the rgba value for one pixel.

So, if we can only return a color value, how do we draw a picture on the screen? We must somehow relate a pixel in the picture to the pixel for which the shader was called.

We can do this with the texture(textureData, coordinates) function, which takes a texture and (x, y) coordinates as input and returns the color of the texture at that point as a vec4.

You can relate the texture and screen pixels as you like. You can, for example, stretch the texture to a quarter of the screen or draw only a part of it. In our case, we just want to see the original image:

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 xy = fragCoord.xy / iResolution.xy;  // the normalization condensed into one line
        vec4 texColor = texture(iChannel0, xy);   // color of the iChannel0 texture at (x, y)
        fragColor = texColor;                     // set the screen pixel to that color
    }

And here it is, our picture!

Now that you can pull data from a texture, you can manipulate it however you like: stretch or compress the image, or play with its colors.
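As an illustration of relating texture and screen pixels differently, the sketch below squeezes the whole image into the lower left quarter of the screen and paints the rest black. It assumes a texture is bound to iChannel0; the names uv and inside are invented for this example, and this is just one possible mapping.

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec2 xy = fragCoord.xy / iResolution.xy;

    // Scale the coordinates so that the lower left quarter of the screen
    // covers the full 0..1 range of the texture.
    vec2 uv = xy * 2.0;

    // Sample unconditionally, then decide whether to use the result.
    vec4 texColor = texture(iChannel0, uv);
    bool inside = uv.x <= 1.0 && uv.y <= 1.0;

    fragColor = inside ? texColor : vec4(0.0, 0.0, 0.0, 1.0);
}
```

Going the other way, sampling at xy * 0.5 would stretch only the lower left quarter of the texture over the entire screen.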

Let's add the gradient we already know:

    texColor.b = xy.x;
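The article shows only that single line, so its placement here is an assumption: it presumably goes into the previous shader right after the texture lookup. Under that assumption the complete shader would look roughly like this:

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec2 xy = fragCoord.xy / iResolution.xy;
    vec4 texColor = texture(iChannel0, xy);  // color of the image at this pixel

    // Overwrite the blue component with the horizontal gradient:
    // no blue at the left edge, full blue at the right edge.
    texColor.b = xy.x;

    fragColor = texColor;
}
```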

Congratulations, you just wrote your first post-processing effect!

Mini-task: can you write a shader that converts the input image to black and white?

Note that although we are using a static image, what you see on the screen is rendered in real time, many times per second. You can verify this by replacing the static image in the input channel with a video (just click on the iChannel0 channel and select a video).

Add a little movement

Up to this point all our effects have been static. We can do much more interesting things using the input parameters provided by the ShaderToy developers. iGlobalTime is an ever-increasing variable (newer versions of ShaderToy call this input iTime), and we can use it as the basis for periodic effects. Let's try playing with the colors:

    void mainImage( out vec4 fragColor, in vec2 fragCoord )
    {
        vec2 xy = fragCoord.xy / iResolution.xy;  // the normalization condensed into one line
        vec4 texColor = texture(iChannel0, xy);   // color of the iChannel0 texture at (x, y)
        // Scale each color channel by a different periodic function of time.
        texColor.r *= abs(sin(iGlobalTime));
        texColor.g *= abs(cos(iGlobalTime));
        texColor.b *= abs(sin(iGlobalTime) * cos(iGlobalTime));
        fragColor = texColor;                     // set the screen pixel to that color
    }

GLSL has built-in sine and cosine functions (and many other useful ones). The color components should not be negative, so we use the abs function.
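Taking the absolute value is not the only way to keep a sine wave non-negative. The sketch below is an alternative, not the article's method: it scales and shifts the sine into the 0..1 range, so the image fades in and out smoothly instead of bouncing off zero. It uses iTime, the name current ShaderToy gives to the time input; on a version that still exposes iGlobalTime, substitute that name. The variable pulse is invented for this example.

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec2 xy = fragCoord.xy / iResolution.xy;
    vec4 texColor = texture(iChannel0, xy);

    // sin() returns values in -1..1; scale and shift them into 0..1.
    float pulse = 0.5 + 0.5 * sin(iTime);

    // Darken and brighten all three color channels together.
    texColor.rgb *= pulse;

    fragColor = texColor;
}
```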

Mini-task: can you write a shader that periodically and smoothly turns the picture black and white, and then back to full color?

Shader Debugging

When writing ordinary programs you have probably used debug output or logging, but with shaders this is not really possible. You may find debugging tools for your specific platform, but in general the best approach is to turn the value you are interested in into some visual information that you can see in the output with the naked eye.
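A minimal sketch of this idea for ShaderToy is shown below. The inspected value (the distance from the screen center) and the color coding are arbitrary choices made for this illustration: the value is drawn as a shade of gray, and anything outside the displayable 0..1 range would be flagged in blue or red.

```glsl
void mainImage( out vec4 fragColor, in vec2 fragCoord )
{
    vec2 xy = fragCoord.xy / iResolution.xy;

    // The value we want to inspect (hypothetical example):
    // distance of the current pixel from the screen center.
    float value = distance(xy, vec2(0.5, 0.5));

    // Show the value as a shade of gray...
    vec3 debugColor = vec3(value);

    // ...and mark values that fall outside the displayable range.
    if (value < 0.0) debugColor = vec3(0.0, 0.0, 1.0);  // too small: blue
    if (value > 1.0) debugColor = vec3(1.0, 0.0, 0.0);  // too large: red

    fragColor = vec4(debugColor, 1.0);
}
```

Replacing the expression assigned to value with any intermediate result of your own shader lets you see at a glance where on the screen it is small, large, or out of range.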

Conclusion

We have covered only the basic shader development tools, but you can already experiment with them and try to make something of your own. Look at the effects available on ShaderToy and try to understand (or reproduce on your own) some of them.

One of the (many) things I did not cover in this article is vertex shaders. They are written in the same language, but run once per vertex rather than once per pixel, and return the new position of the vertex along with its color. Vertex shaders are, for example, typically responsible for projecting a 3D scene onto the screen.

The last mini-task: can you write a shader that replaces the green background (present in some of the videos on ShaderToy) with another picture or video?

That is all I wanted to cover in this article. In the following ones I will try to talk about lighting systems, fluid simulation, and shader development for specific platforms.

Source: https://habr.com/ru/post/324476/
