Micro-optimizations are important: prevent 20 million system calls

This publication is a logical continuation of the post " How to tune the TZ environment variable avoids thousands of system calls ." Here we look at the characteristic situation where micro-optimizations (for example, deleting a system call) have a very strong effect on performance.

What is a noticeable improvement?

We previously described an environment variable that an application can tune to avoid thousands of additional system calls . This article was greeted with fair questions with some skepticism:

- “Subtle, but does deleting a single system call significantly affect the performance of the entire program?”

- “It looks unnecessary; Linux calls make system calls so fast. ”

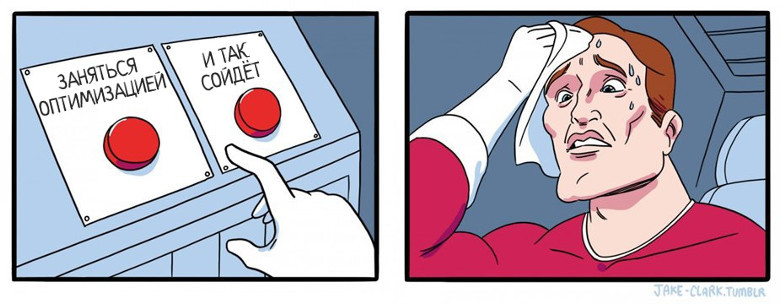

It is difficult to say that each of the developers is investing in the concept of "noticeable improvement" in relation to a specific application. Developers of the kernel and drivers often spend a lot of time micro-optimizing code and data structures in order to use the processor’s cache as fully as possible and reduce the consumption of the CPU itself. Even if most programmers find the benefit very insignificant. Do I need to pay less attention to such optimizations? Someone can even say that micro-optimization can not be regarded as noticeable.

In this article, we define the noticeable as easily measurable and quite obvious. Can we show a real example when deleting a fragment (code path) of a slow ( without vDSO ) system call from a code results in an easily measurable and completely obvious result?

There are many real examples: from package sniffers to runtime (runtime) software languages. Consider such an infamous case as the influence of sigprocmask on the Ruby runtime.

What is sigprocmask ?

sigprocmask is a system call used to check or configure the signal mask of the current process. It allows the program to block or allow signals, which is useful when you need to execute a critical piece of code whose work cannot be interrupted.

This is not a particularly complex system call. Kernel code related to sigprocmask tells us that the call writes sigset_t to a C-structure containing the state of the current process (called as task_struct in the kernel). This is a very fast operation.

Let's create a simple test program that in a small loop calls sigprocmask . We will measure using strace and time :

#include <stdlib.h> #include <signal.h> int main(int argc, char *argv[]) { int i = 0; sigset_t test; for (; i < 1000000; i++) { sigprocmask(SIG_SETMASK, NULL, &test); } return 0; } Compile with gcc -o test test.c First we execute with time , and then with strace and time .

On my test system:

- The execution of

time ./testshowed: 0.047 seconds — real time, 0.012 seconds — user time, 0.036 seconds — system time. - The execution of

time strace -ttT ./testshowed: 52.364 seconds — real time, 9.313 seconds — user time, 14.349 seconds — system time.

In the case of strace for each sigprocmask call (your system will probably be displayed as rt_sigprocmask ), the approximate duration of execution will be shown. They are very small. On my test system, in most cases I received values in the region of 0.000003 seconds - with an unexpected surge of up to 0.000074 seconds.

It is quite difficult to measure the exact time of the system call for a variety of reasons. They go far beyond the issue discussed in this article. So we can assume that all measurements were taken equally incorrectly.

So, what we already know:

sigprocmaskis a system call used to configure or check the signal mask of the current process.- Kernel code is simple and must be executed very quickly.

- Measurements with

timeandstraceshow that when a million calls of sigprocmask are made in a small cycle, each call takes very little time.

So why do we need to get rid of additional sigprocmask , will it be worth our effort?

We will understand more

Sometimes someone else's code that we use in our applications (system libraries, kernel, glibc, etc.) does unexpected things or has side effects that are not obvious at first glance. As an example below, I will show how the indirect use of sigprocmask in a test program leads to a serious decrease in performance. And then I will demonstrate how this manifests itself in a real application.

One of the most prominent examples of how additional sigprocmask calls resulted in an obvious and easily measured performance decrease was associated with Ruby 1.8.7. This was noted in cases where the code was compiled with one particular configure flag.

We start with the default configuration flag values that were used on most operating systems (Debian, Ubuntu, etc.) during the widespread use of Ruby 1.8.7.

sigprocmask testing

Take a look at the test code:

def make_thread Thread.new do a = [] 10_000_000.times do a << "a" a.pop end end end t = make_thread t1 = make_thread t.join t1.join Everything is simple: we create two threads of execution, each of which adds and deletes data from an array 10 million times. If we run with the default configuration flags and strace values, we get amazing results:

$ strace -ce rt_sigprocmask /tmp/test-ruby/usr/local/bin/ruby /tmp/test.rb Process 30018 attached Process 30018 detached % time seconds usecs/call calls errors syscall ------ ----------- ----------- --------- --------- ---------------- 0.50 0.139288 0 20033025 rt_sigprocmask The Ruby virtual machine has generated more than 20 million sigprocmask system calls. You say: “But they took very little time! What's the big deal? ”

As already mentioned, it is not so easy to measure the duration of a system call. Restart the test program with time instead of strace and see how long it will take to complete on my system:

$ time /tmp/gogo/usr/local/bin/ruby /tmp/test.rb real 0m6.147s user 0m5.644s sys 0m0.476s About 6 seconds of real execution. That is about 3.3 million calls sigprocmask per second. Wow.

And if you configure one configure flag, the Ruby virtual machine will build the system, avoiding calls to sigprocmask !

Restart tests with strace and time , but this time we sigprocmask little Ruby so that it avoids calling sigprocmask :

$ strace -ce rt_sigprocmask /tmp/test-ruby-2/usr/local/bin/ruby /tmp/test.rb % time seconds usecs/call calls errors syscall ------ ----------- ----------- --------- --------- ---------------- -nan 0.000000 0 3 rt_sigprocmask Cool! We reduced the number of sigprocmask calls from 20 million to 3. It seems that strace had problems calculating the time it sigprocmask to execute system calls :(

Let's see what time will say:

$ time /tmp/test-ruby-2/usr/local/bin/ruby /tmp/test.rb real 0m3.716s user 0m3.692s sys 0m0.004s Approximately 40% faster (real time) than in the previous example, and less than one sigprocmask call per second.

Excellent result, but several questions arise:

- Isn't the example a bit contrived?

- When is it really relevant in real projects?

- What exactly when setting the configure flags leads to a decrease in the number of calls?

Let's first look at a real-life example, and then look at the details.

Real-life example: Puppet

The bug found in Puppet accurately demonstrates the effect of additional sigprocmask calls on the Ruby virtual machine:

I just ran into a serious performance issue. Puppet is very slow.

First example:

$ time puppet —version 0.24.5 real 0m0.718s user 0m0.576s sys 0m0.140s At this time, hundreds, if not thousands of rt_sigprocmask calls (SIG_BLOCK, NULL, [], 8) were made. And all this for the sake of displaying the version.

If you read the correspondence, you will find other comments in which people complain about the poor performance of Puppet. Questions on Stackoverflow are also devoted to the same problem.

But this problem is not only Ruby and Puppet. Users of other projects also write about such bugs , reporting full CPU usage and hundreds of thousands of sigprocmask calls.

Why it happens? Is it easy to fix?

The point is that calls to sigprocmask made by two functions from glibc (non-system calls): getcontext and setcontext .

They are used to save and restore the state of the processor. They are widely used by programs and libraries that implement exception handling or threads in user space. In the case of Ruby 1.8.7, setcontext and getcontext are needed in the implementation of threads in user space — to switch context between threads.

You might think that these two functions should be performed fairly quickly. After all, they just save or restore a small set of processor registers. Yes, saving is a very fast operation. But, apparently, the implementation of these functions in glibc is such that the sigprocmask system calls are used to save and restore the signal mask.

Recall that Linux provides a mechanism ( vDSO ), which instead of the kernel is responsible for making certain system calls. This reduces the cost of their implementation. Unfortunately, sigprocmask not one of them. All sigprocmask system calls lead to a transition from user space to the kernel.

The cost of such a transition is much higher than the cost of other operations in setcontext and getcontext (representing simple entries in memory). If you call these functions very often, then you will perform a slow operation (in this case, the sigprocmask system call that does not go through vDSO ) every time you need to do something quickly (for example, save or restore a set of processor registers to switch threads of execution).

Why does changing the configuration flag improve the situation?

At the time of widespread use of Ruby 1.8.7, the default --enable-pthread flag was used, activating a separate execution thread at the OS level that was started on a timer (timer thread). It started as needed to intercept (pre-empt) the Ruby virtual machine. Thus, the machine learned that it was time to switch between threads in the user space that were mapped to threads created in Ruby programs. Also, a call to --enable-pthread caused the configure script to find and use the getcontext and setcontext functions.

Without --enable-pthread the configure script will search for and use _setjmp and _longjmp (note the underscores). These functions do not save or restore the signal mask, and therefore do not generate sigprocmask system calls.

So:

--enable-pthreadand--disable-pthreadintended to control multithreading in the user space of the Ruby virtual machine: either an OS-level execution thread was used, or a simpleVTALRMsignal notifying the VM that it was time to switch to another Ruby thread.- An unexpected side effect of switching between these two methods of interception (pre-empt) was that the primitives for the implementation of switching were looking for either

setcontext/getcontextor_setjmp/_longjmp. - This led to the fact that if the

setcontext/getcontextpair was used, then additionalsigprocmaskcalls weresigprocmask. - And this, in turn, reduced productivity.

- What the creator of a number of projects faced, including Puppet.

And all this because of a single flag that enables or disables a single system call.

Conclusion

Micro-optimizations are important. But the degree of their importance, of course, depends on the specifics of the application itself. Keep in mind that libraries and code that you depend on can do what you do not expect (for example, to perform a slow system call). If you know how to identify and correct such problems, it will have a huge impact on your users. And in some cases - on the users of your users.

')

Source: https://habr.com/ru/post/324466/

All Articles