Low-latency browser-based WebRTC broadcasts from RTSP IP cameras

According to some estimates, hundreds of millions of IP cameras for video surveillance are installed around the world today. However, playback delay is not critical for most of them. Video surveillance is usually "static": the stream is recorded to storage and can be analyzed for motion. Many software and hardware solutions have been developed for video surveillance, and they do their job well.

In this article, we will look at a slightly different application of the IP camera, namely online broadcasts, where a low communication delay is required.

First of all, let's clear up a possible confusion in terminology between webcams and IP cameras.

A webcam is a video capture device that does not have its own processor and network interface. A webcam requires a connection to a computer, smartphone, or other device that has a network card and processor.


An IP camera is a standalone device that has its own network card and a processor for compressing captured video and sending it to the network. In effect, the IP camera is a self-contained mini-computer that connects to the network on its own, does not need to be attached to another device, and can broadcast to the network directly.

Low latency is quite a rare requirement for IP cameras and online broadcasts. The need to work with low latency appears, for example, if the source of the video stream actively interacts with the viewers of this stream.

Most often, low latency is required in interactive, game-like scenarios. Examples include real-time video auctions, live dealer video casinos, interactive online TV shows with a presenter, quadcopter remote control, etc.

A live dealer at work in an online casino.

A regular RTSP IP camera usually compresses video with the H.264 codec and can work in two data transport modes: interleaved and non-interleaved.

Interleaved mode is the most popular and convenient, because in this mode video data is transmitted over TCP inside the network connection to the camera. To distribute a stream from an IP camera in interleaved mode, you only need to open / forward one RTSP port of the camera (for example, 554) to the outside. The player only has to connect to the camera via TCP and pick up the stream inside this connection.
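To make the "stream inside the connection" idea more concrete, here is a minimal sketch of how interleaved frames could be pulled out of the bytes received on the RTSP TCP connection. It follows the framing described in RFC 2326, section 10.12: a `$` byte, a one-byte channel number, a two-byte big-endian length, then the payload. The function name and the sample bytes are illustrative assumptions, and a real client would also have to handle RTSP text responses arriving between frames.

```python
import struct
from typing import Iterator, Tuple


def parse_interleaved(buffer: bytes) -> Iterator[Tuple[int, bytes]]:
    """Yield (channel, payload) for each complete interleaved frame in buffer."""
    offset = 0
    while offset + 4 <= len(buffer):
        # Header: '$' marker, channel id, payload length (network byte order).
        magic, channel, length = struct.unpack_from("!BBH", buffer, offset)
        if magic != 0x24:  # 0x24 is the ASCII code of '$'
            raise ValueError(f"expected '$' at offset {offset}")
        end = offset + 4 + length
        if end > len(buffer):
            break  # incomplete frame: wait for more data from the TCP socket
        yield channel, buffer[offset + 4:end]
        offset = end


# Hypothetical sample: one frame on channel 0 and one on channel 1.
sample = b"$\x00\x00\x03abc" + b"$\x01\x00\x02xy"
frames = list(parse_interleaved(sample))
print(frames)  # [(0, b'abc'), (1, b'xy')]
```

By convention, even channel numbers usually carry RTP and odd ones RTCP, but the actual mapping is negotiated when the session is set up.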

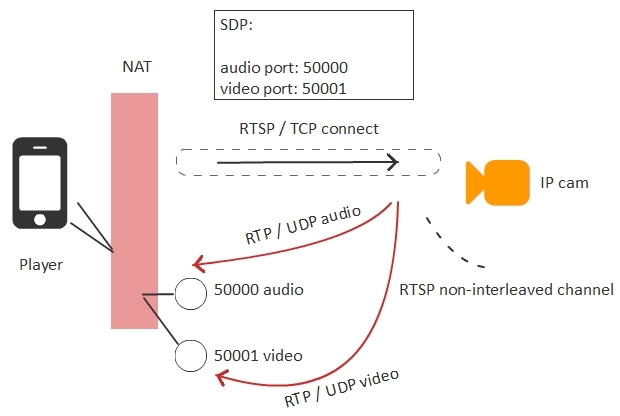

The second mode of the camera is non-interleaved. In this case, the connection is established over RTSP / TCP, while the media traffic travels separately over RTP / UDP, outside the established TCP channel.

Non-interleaved mode is better suited to broadcasting video with minimal latency, since it uses RTP / UDP, but it is also more problematic if the player is located behind NAT.

When a player behind NAT connects to the IP camera, the player needs to know which external IP addresses and ports it can use to receive audio and video traffic. These ports are announced to the camera while the RTSP session is being set up (in RTSP, via the Transport header of the SETUP request). If the NAT is traversed correctly and the correct IP addresses and ports are specified, everything will work.

So, to pick up video from a camera with minimal delay, you need to use non-interleaved mode and receive the video traffic over UDP.
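The difference between the two modes is visible in the RTSP SETUP request that the player sends. The sketch below builds such a request for either mode, using the Transport header syntax from RFC 2326. The function name, the track URL suffix, and the port numbers are assumptions chosen for illustration, not values taken from a particular camera.

```python
def build_setup_request(url: str, cseq: int, interleaved: bool,
                        client_rtp_port: int = 5000) -> str:
    """Build an RTSP SETUP request for interleaved or non-interleaved transport."""
    if interleaved:
        # Media is multiplexed into the RTSP TCP connection on channels 0 and 1.
        transport = "RTP/AVP/TCP;unicast;interleaved=0-1"
    else:
        # Media is sent over UDP to the ports the client announces (RTP, RTCP).
        transport = (f"RTP/AVP;unicast;"
                     f"client_port={client_rtp_port}-{client_rtp_port + 1}")
    return (f"SETUP {url} RTSP/1.0\r\n"
            f"CSeq: {cseq}\r\n"
            f"Transport: {transport}\r\n"
            "\r\n")


# Hypothetical track URL; real cameras publish track URLs in their DESCRIBE answer.
track_url = "rtsp://192.168.1.37/live1.sdp/trackID=1"
print(build_setup_request(track_url, cseq=3, interleaved=False))
```

In the non-interleaved case, the announced `client_port` pair is exactly what has to be reachable through NAT for the UDP traffic to arrive.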

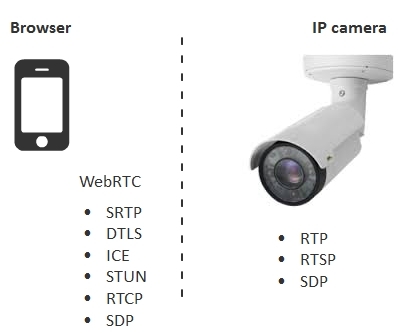

Browsers do not support the RTSP / UDP protocol stack directly, but they do support the protocol stack of the built-in WebRTC technology.

Browser and camera technologies are very similar; in particular, SRTP is simply encrypted RTP. But to distribute video to browsers correctly, the IP camera would need at least partial support for the WebRTC stack.

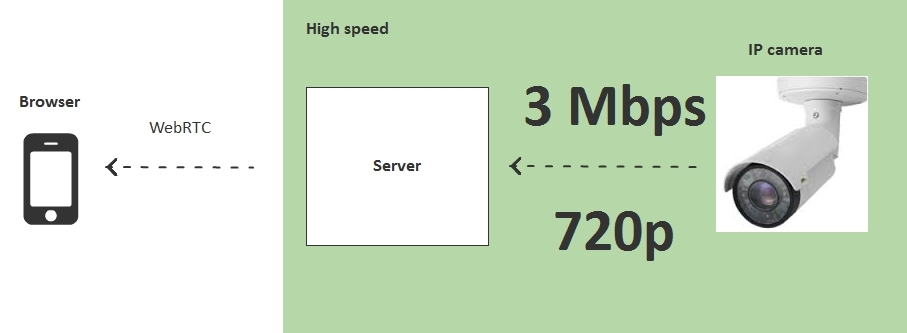

To eliminate this incompatibility, an intermediate relay server is required, which will be a bridge between the IP camera protocols and browser protocols.

The server pulls the stream from the IP camera via RTP / UDP and sends it to connected browsers via WebRTC.

WebRTC works over UDP and thus provides low latency in the Server > Browser direction. The IP camera also works over RTP / UDP and provides low latency in the Camera > Server direction.

The camera can serve only a limited number of streams because of its limited resources and bandwidth. Using an intermediate server lets you scale the broadcast from an IP camera to a large number of viewers.

On the other hand, when using a server, two communication legs are involved:

1) Between viewers and server

2) Between server and camera

This topology has a number of "features" or "pitfalls." We list them below.

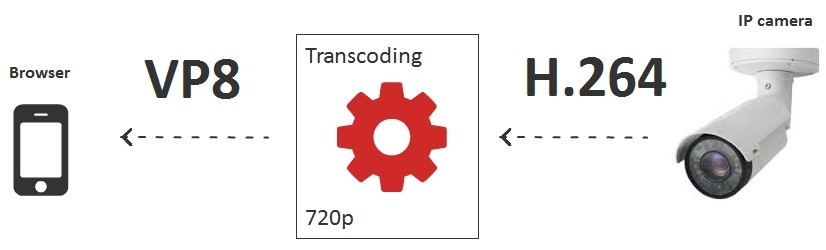

Pitfall #1 - Codecs

The codecs in use can become an obstacle to low latency and a cause of degraded overall system performance.

For example, the camera sends a 720p video stream in H.264, and the viewer connects from Chrome on an Android smartphone that supports only VP8.

When transcoding is turned on, a transcoding session that decodes H.264 and encodes VP8 must be created for each connected IP camera. In this case, a 16-core dual-processor server will be able to serve only 10-15 IP cameras, based on a rough estimate of 1 camera per physical core.

Therefore, if the server capacity does not allow transcoding for the planned number of cameras, transcoding should be avoided. For example, serve only browsers with H.264 support and suggest that everyone else use a native mobile application for iOS or Android, where the H.264 codec is supported.

As an option for bypassing transcoding in a mobile browser, you can use HLS. But HTTP streaming does not offer low latency at all and currently cannot be used for interactive broadcasts.

Pitfall #2 - Camera Bitrate and Loss

UDP helps to keep the delay low, but it allows video packets to be lost. Therefore, despite the low latency, heavy losses in the network between the camera and the server can spoil the picture.

To eliminate losses, make sure that the video stream generated by the camera has a bitrate that fits within the bandwidth available between the camera and the server.

Pitfall #3 - Viewers' Bitrate and Loss

Every broadcast viewer connected to the server also has a certain download bandwidth.

If the IP camera sends a stream that exceeds the capacity of the viewer's channel (for example, the camera sends 1 Mbps and the viewer can receive only 500 Kbps), this channel will suffer heavy losses and, as a result, video freezes or strong artifacts.

In this case, there are three options:

- Transcode the video stream individually for each viewer to the required bitrate.

- Transcode streams not for every connected viewer, but for groups of viewers.

- Prepare camera streams in advance in several resolutions and bitrates.

The first option, transcoding for each viewer, scales poorly: it exhausts CPU resources with as few as 10-15 connected viewers. It should be noted, though, that this option provides maximum flexibility at the cost of maximum CPU usage. That is, it is ideal, for example, if you are streaming to only 10 geographically distributed people, each of whom receives a dynamic bitrate and needs minimal delay.

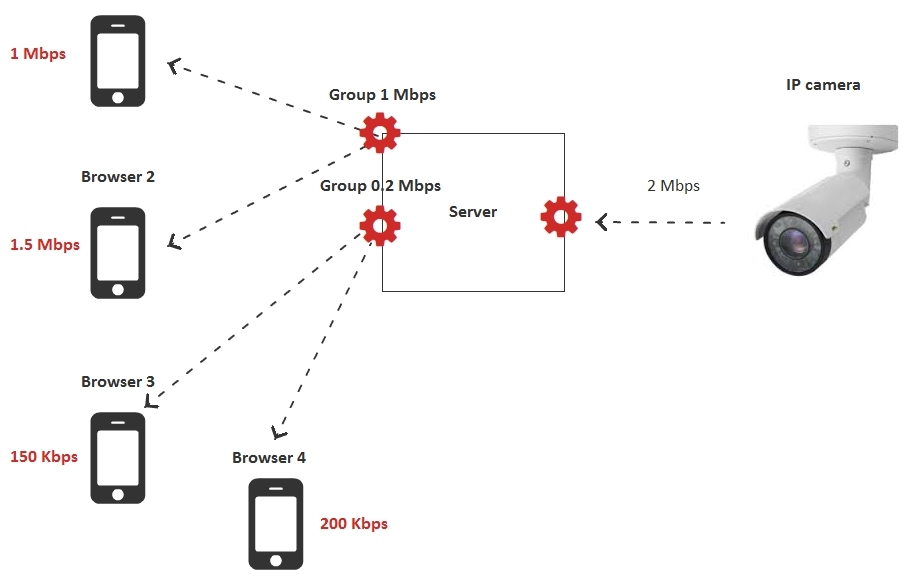

The second option is to reduce the load on the server's CPU using transcoding groups. The server creates several bit rate groups, for example, two:

- 200 Kbps

- 1 Mbps

If a viewer does not have enough bandwidth, they switch to the group in which they can comfortably receive the video stream. Thus, the number of transcoding sessions is not equal to the number of viewers, as in the first case, but is a fixed number, for example 2 if there are two transcoding groups.
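The group selection described above can be sketched as follows. This is only an illustration of the idea, assuming that the viewer's bandwidth has already been estimated somehow; the function name and the selection rule (the highest group bitrate that does not exceed the viewer's bandwidth) are assumptions, not the behavior of a specific server.

```python
from typing import Optional, Sequence


def pick_group(viewer_kbps: float, group_kbps: Sequence[int]) -> Optional[int]:
    """Return the highest group bitrate that fits the viewer's bandwidth."""
    fitting = [g for g in group_kbps if g <= viewer_kbps]
    # None means that even the lowest group exceeds the viewer's bandwidth.
    return max(fitting) if fitting else None


GROUPS = [200, 1000]  # the two bitrate groups from the example, in Kbps

print(pick_group(500, GROUPS))   # 200
print(pick_group(1500, GROUPS))  # 1000
print(pick_group(100, GROUPS))   # None
print(len(GROUPS))               # 2 transcoding sessions, regardless of viewers
```

A viewer with a 500 Kbps channel lands in the 200 Kbps group, which matches the earlier example of a viewer who cannot receive a 1 Mbps stream.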

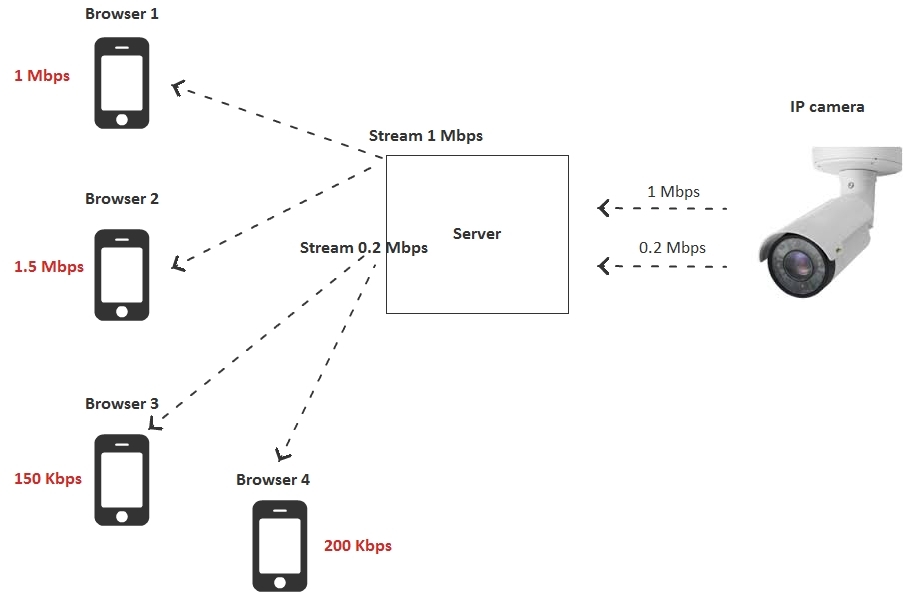

The third option involves the complete abandonment of transcoding on the server side and the use of already prepared video streams in various resolutions and bit rates. In this case, the camera is configured to return two or three streams with different resolutions and bitrates, and viewers switch between these streams depending on their bandwidth.

In this case, the transcoding load leaves the server and shifts to the camera itself, since the camera now has to encode two or more streams instead of one.

So, we have considered three options for adapting to the viewers' bandwidth. If we assume that one transcoding session takes 1 server core, we get the following CPU load table:

| # | Adjustment method | Number of cores on the server |
| --- | --- | --- |
| 1 | Transcode a video stream for each viewer to the required bitrate | N - the number of viewers |
| 2 | Transcode video streams for groups of viewers | G - the number of viewer groups |
| 3 | Prepare camera streams in advance in several resolutions and bitrates | 0 |

The table shows that we can either shift the transcoding load to the camera or keep transcoding on the server. Options 2 and 3 look the most practical.
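The table can be restated as a small calculation. The sketch below uses the same rough assumption as the text, one transcoding session per server core, and the function name and sample numbers are illustrative.

```python
def transcoding_cores(option: int, viewers: int, groups: int) -> int:
    """Estimate server cores used for transcoding, at one session per core."""
    if option == 1:
        return viewers   # one session per viewer
    if option == 2:
        return groups    # one session per bitrate group
    if option == 3:
        return 0         # the camera encodes all variants itself
    raise ValueError("option must be 1, 2 or 3")


# Hypothetical broadcast: 100 viewers split into 2 bitrate groups.
for option in (1, 2, 3):
    print(option, transcoding_cores(option, viewers=100, groups=2))
# 1 100
# 2 2
# 3 0
```

With 100 viewers, option 1 would need far more cores than the 16-core server mentioned earlier, while options 2 and 3 stay constant as the audience grows.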

Testing RTSP as WebRTC

It is time to run a few tests to see what actually happens. Let's take a real IP camera and measure the broadcast delay.

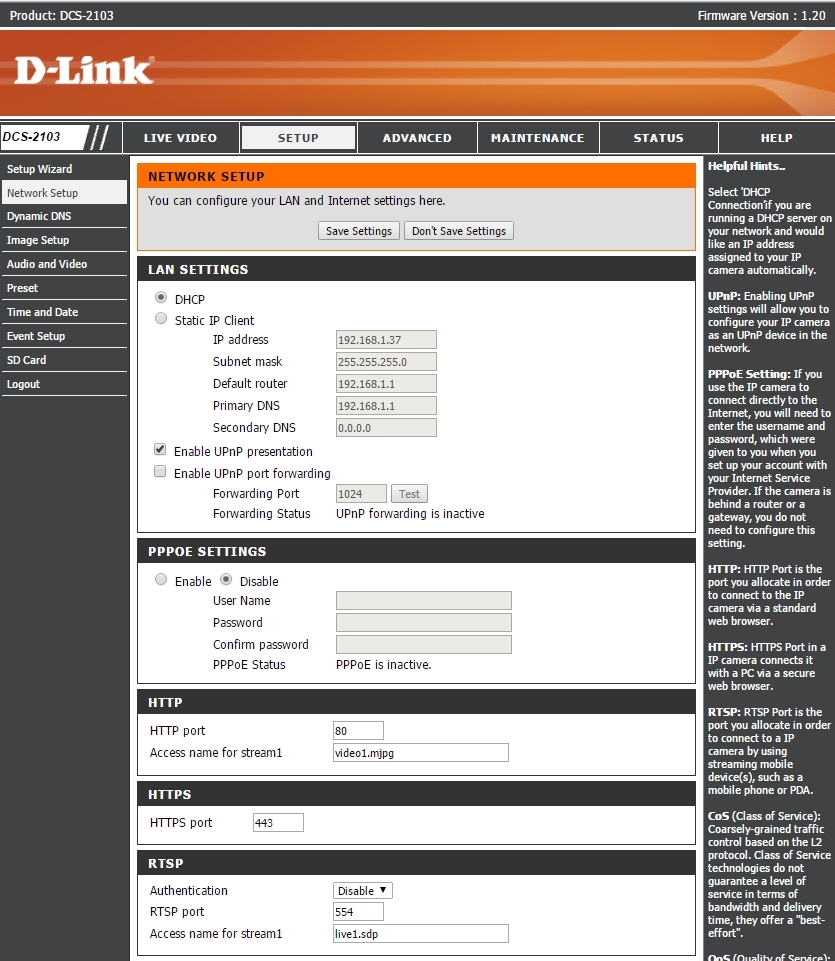

For testing, let's take an old D-Link DCS-2103 IP camera with RTSP support and the H.264 and G.711 codecs.

Since the camera had been lying in a closet for a long time with other useful devices and wires, I had to factory-reset it by pressing and holding the button on the back of the camera for 10 seconds.

After the camera was connected to the network, its green light came on and the router saw another device on the local network with the IP address 192.168.1.37.

Go to the web interface of the camera and set the codecs and resolution for testing:

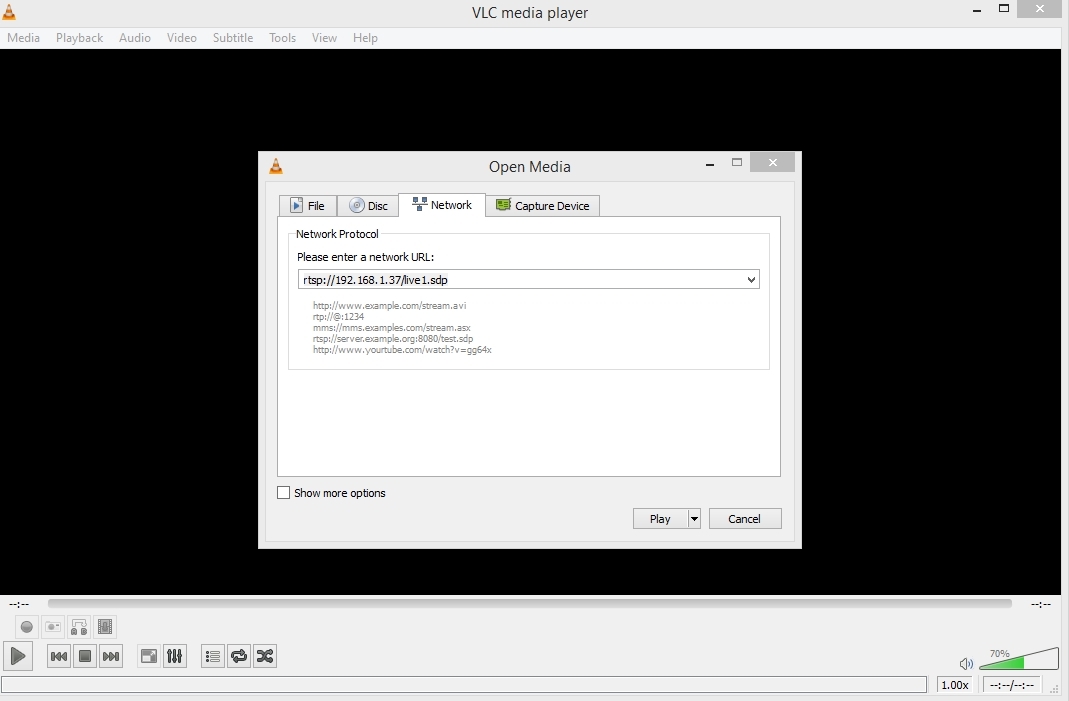

Next, go to the network settings and find out the RTSP address of the camera. In this case, the RTSP path is live1.sdp, i.e. the camera is available at rtsp://192.168.1.37/live1.sdp

The availability of the camera is easy to check with the VLC player: Media - Open Network Stream.

We made sure that the camera works and delivers video via RTSP.

As a server for testing we will use Web Call Server 5. This streaming server supports the RTSP and WebRTC protocols. It will connect to the IP camera via RTSP, pick up the video stream, and then distribute it via WebRTC.

You can install Web Call Server on your own host or run a ready-made Amazon EC2 instance.

After installation, you need to switch the server to the RTSP non-interleaved mode discussed above. This is done by adding the following setting:

    rtsp_interleaved_mode=false

This setting is added to the flashphoner.properties config and requires a restart of the server:

    service webcallserver restart

Thus, we have a server that works in non-interleaved mode, receives packets from the IP camera via UDP, and then distributes them via WebRTC (UDP).

The test server runs on a VPS in a Frankfurt data center and has 2 cores and 2 gigabytes of RAM.

The camera is located on the local network at 192.168.1.37.

Therefore, the first thing we need to do is forward port 554 to the address 192.168.1.37 for incoming TCP / RTSP connections, so that the server can establish a connection to our IP camera. To do this, we add just one rule in the router settings:

The rule tells the router to redirect all incoming traffic on port 554 to the IP address 192.168.1.37.

Then it remains to find out your external IP address. This takes 5-15 seconds: just search for whatismyip.

If your NAT is friendly and you know the external IP address, you can start testing with the server.
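Before involving the server, it can be useful to check from an outside machine that the forwarded RTSP port actually accepts TCP connections. The helper below is a minimal sketch using only the Python standard library; the function name is an assumption, and the check covers only the TCP control port, not the UDP path used for media.

```python
import socket


def is_tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False
```

For example, `is_tcp_port_open("203.0.113.10", 554)` would test the forwarding rule, where 203.0.113.10 is a placeholder for your external IP address.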

The standard demo player in Google Chrome browser looks like this:

To start playing an RTSP stream, you just need to enter its address in the Stream field.

In this case, the stream address is: rtsp://ip-cam/live1.sdp

Here ip-cam is the external IP address of your camera. The server will try to connect to this address.

Testing VLC vs WebRTC delays

Now that we have set up the IP camera and tested it in VLC, and set up the server and tested the RTSP stream through it with distribution via WebRTC, we can finally compare the delays.

For this we will use a timer that shows fractions of a second on the monitor screen. We start the timer and play the video stream simultaneously in VLC locally and in the Firefox browser through the remote server.

Ping to the server: 100 ms.

Local ping: 1 ms.

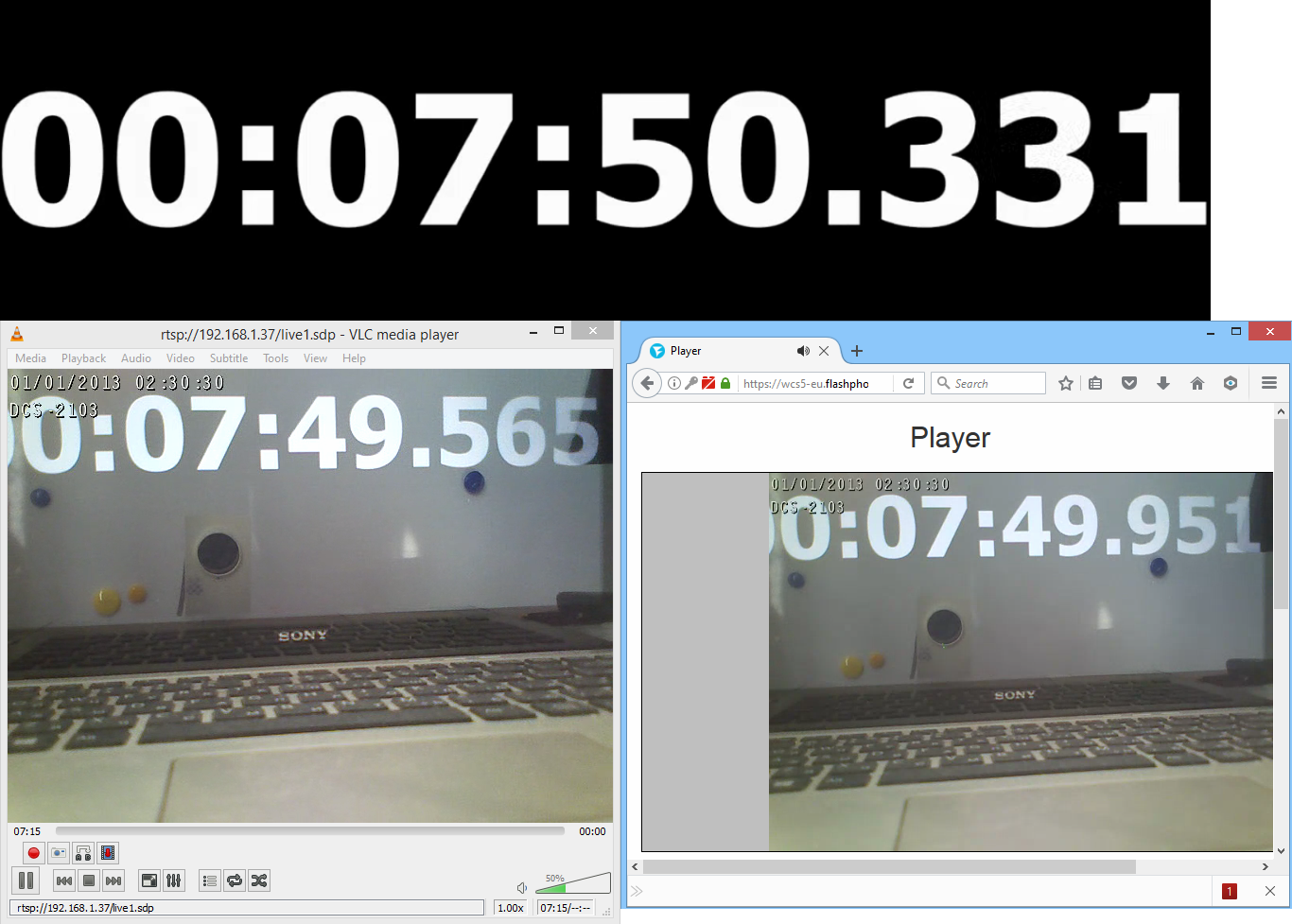

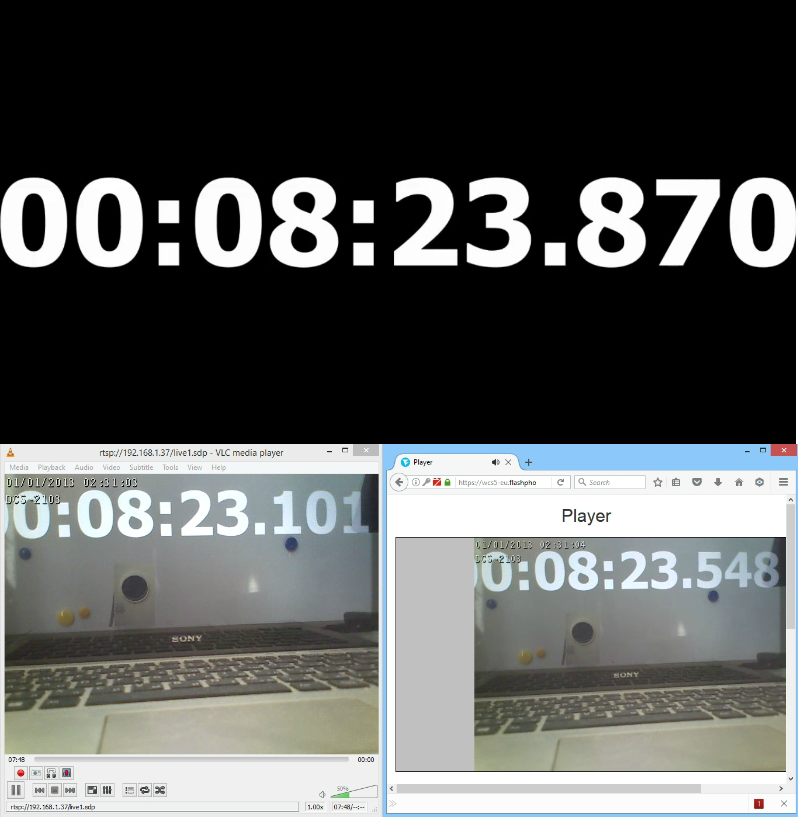

The first test using the timer looks like this:

On the black background is the original timer, which shows zero delay. On the left is VLC, on the right is Firefox receiving the WebRTC stream from the remote server.

| | Zero | VLC | Firefox WCS |
| --- | --- | --- | --- |
| Time | 50.559 | 49.791 | 50.238 |
| Latency, ms | 0 | 768 | 321 |

We took a few snapshots to capture the delay values:

The measurement results look like this:

| Test | Metric | Zero | VLC | Firefox WCS |
| --- | --- | --- | --- | --- |
| Test1 | Time | 50.559 | 49.791 | 50.238 |
| | Latency, ms | 0 | 768 | 321 |
| Test2 | Time | 50.331 | 49.565 | 49.951 |
| | Latency, ms | 0 | 766 | 380 |
| Test3 | Time | 23.870 | 23.101 | 23.548 |
| | Latency, ms | 0 | 769 | 322 |
| Average | Latency, ms | | 768 | 341 |
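The latency values in the table are simply the difference between the reference timer and the timer value visible in each player at the same moment. A minimal sketch of that arithmetic, using the readings from the three snapshots, is shown below. The function name is illustrative, and the modulo 60 is an assumption that the timer displays seconds within a minute and may wrap between the two readings.

```python
from statistics import mean


def latency_ms(reference_s: float, observed_s: float) -> int:
    """Latency in milliseconds between the reference timer and a player."""
    # Modulo 60 handles a reading taken just after the seconds counter wraps.
    return round(((reference_s - observed_s) % 60) * 1000)


# (zero-delay timer, VLC, Firefox via WCS) for each snapshot, in seconds.
readings = [
    (50.559, 49.791, 50.238),
    (50.331, 49.565, 49.951),
    (23.870, 23.101, 23.548),
]

vlc = [latency_ms(zero, v) for zero, v, _ in readings]
webrtc = [latency_ms(zero, w) for zero, _, w in readings]

print(vlc, round(mean(vlc)))        # [768, 766, 769] 768
print(webrtc, round(mean(webrtc)))  # [321, 380, 322] 341
```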

Testing RTMP vs WebRTC delays

We will run similar tests with an RTMP player via a Wowza server, simultaneously with a WebRTC player via Web Call Server.

On the left we receive the video stream from Wowza over an RTMP connection. On the right we receive the stream via WebRTC. At the top is the absolute time (zero delay).

Test - 1

Test - 2

Test - 3

Test - 4

Test results can be displayed in a familiar table:

| Test | Metric | Zero | RTMP | WebRTC |
| --- | --- | --- | --- | --- |
| Test1 | Time | 37.277 | 35.288 | 36.836 |
| | Latency, ms | 0 | 1989 | 441 |
| Test2 | Time | 02.623 | 00.382 | 02.238 |
| | Latency, ms | 0 | 2241 | 385 |
| Test3 | Time | 29.119 | 27.966 | 28.796 |
| | Latency, ms | 0 | 1153 | 323 |
| Test4 | Time | 50.051 | 48.702 | 49.664 |
| | Latency, ms | 0 | 1349 | 387 |
| Average | Latency, ms | | 1683 | 384 |

Thus, the average delay when playing the RTSP stream in Flash Player via RTMP was 1683 milliseconds. The average WebRTC delay was 384 milliseconds. That is, WebRTC was on average about 4 times better in latency.


Source: https://habr.com/ru/post/324294/
