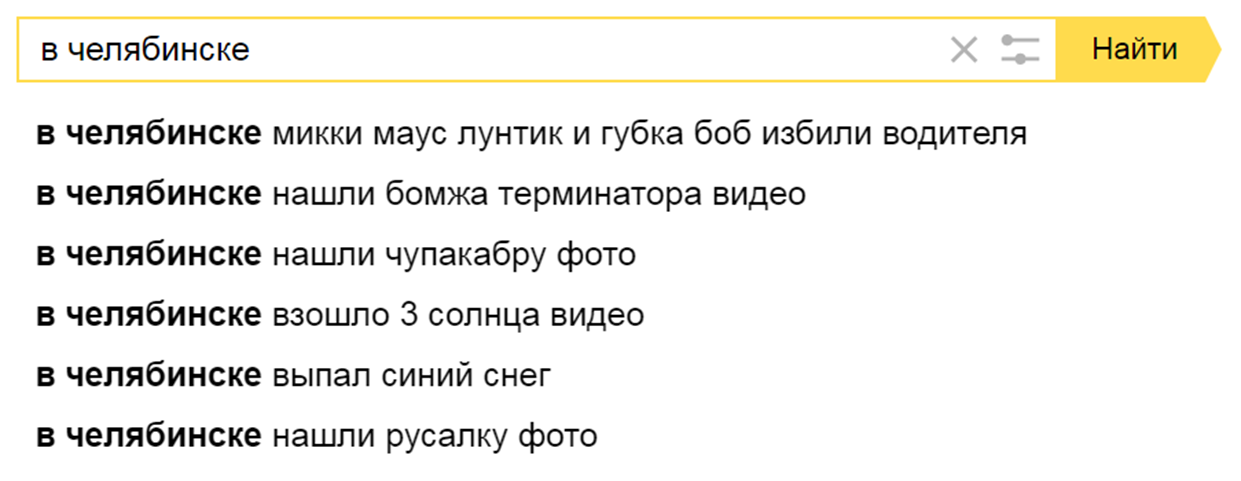

Do hint services suggest well? We measure the usefulness of autocompletion web services

About a month ago, I had one episode that made me think about the usefulness of autocompletion functions, which are often embedded on websites of online stores. Usually it looks like this: you start to place an order, enter your name, phone number and delivery address, and while you are slowly typing the address, before you pop up prompts with street names so that you do not strain the keyboard and select the desired address from the list.

About a month ago, I had one episode that made me think about the usefulness of autocompletion functions, which are often embedded on websites of online stores. Usually it looks like this: you start to place an order, enter your name, phone number and delivery address, and while you are slowly typing the address, before you pop up prompts with street names so that you do not strain the keyboard and select the desired address from the list.So, in February I needed to quickly feed a large group of friends at home, and I decided to order pizza at one fairly popular institution. In general, I usually adhere to the principles of healthy eating, but the situation was exceptional ...

You will say Habr is not a place for pizza stories, and you will be absolutely right, but this story is not quite about pizza, it is more about modeling human behavior, about load testing, a little about programming, and more about numerical evaluation of the usefulness of several modern ajax-services autocompletion and tips.

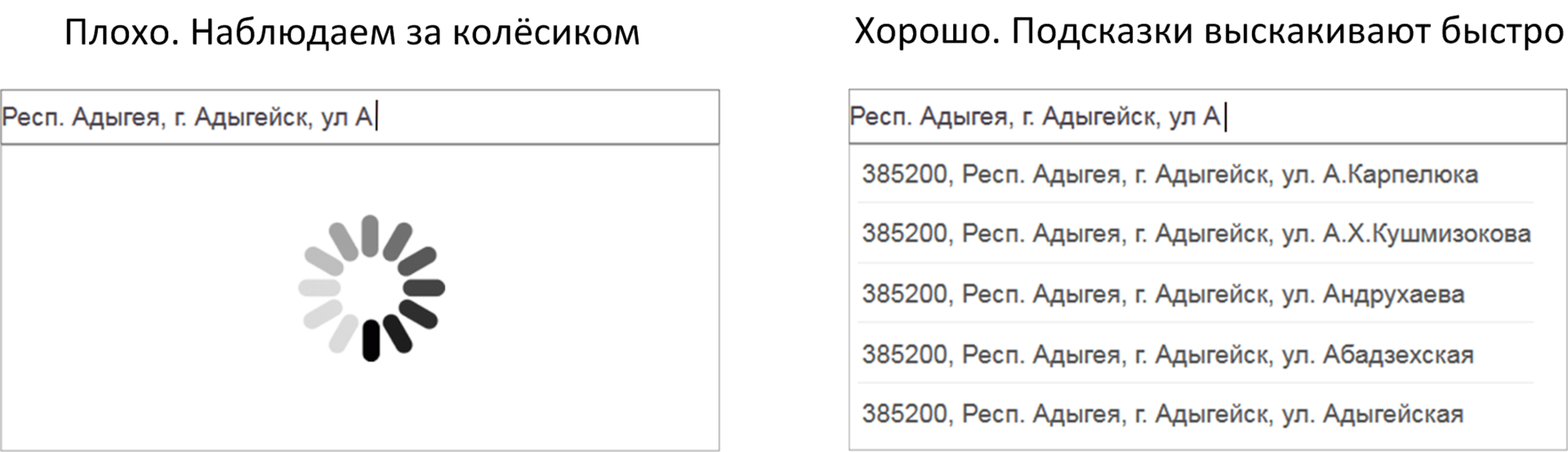

But the impetus for writing this article was, after all, pizza and the fact that I had to place its order twice due to the fact that the site used hints on the website when filling in the delivery address field. The trick was that until you select a street from the list, you will not be given the number of the house. Because of the habit of my address, I dial very quickly, and the prompts on that site worked very slowly, it turned out that the whole ajax-mechanism with uploading prompts did not have time to work out. As a result, I could not specify the house number. I had to press F5, place an order again and re-enter my address slowly but surely.

')

The whole story ended up safely, and the pizza was delivered to the address, but the sediment remained. I decided to ask myself whether it is possible to somehow measure or evaluate the usefulness of using such services, because the auto-completion functions are found everywhere.

As a result, this issue resulted in a full-fledged study, with the development of scripts for testing the usefulness of six currently available online email address autocompletion services. The results of these studies and is devoted to this article.

Formula utility

The utility study should begin with the definition of the formula for calculating it. Purely intuitively, the following.

- If you enter data, and instead of prompts you observe a spinning wheel - this is not very useful. And vice versa, if the prompts pop up faster than you can type the text, that's good.

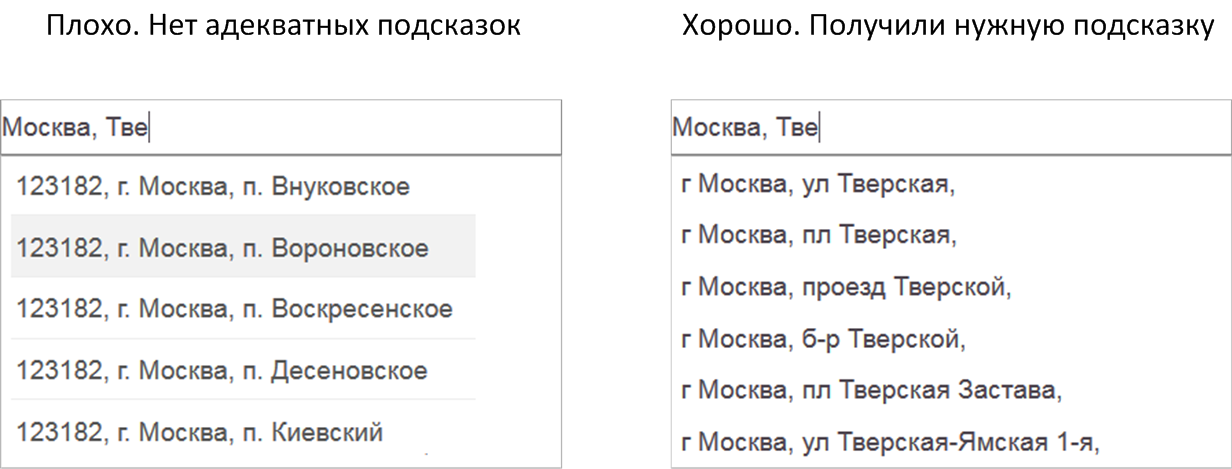

- If you fill out the form letter by letter for a long time and persistently, but the prompts do not suggest what is needed, then the benefits of them are questionable. Conversely, if you entered a couple of letters, and the desired materialized in the tips, then there is an obvious profit from their use.

All these thoughts led me to the following formula, evaluating the usefulness of the autocompletion service.

Us=1− frac(Tu+Ts)To

Here Tu - total time that the user spends on entering the address when using prompts, Ts - the total time that the user spends waiting for prompts from the service, To - the total time that the user would spend on data entry without using prompts.

You will ask why allocate separately. Ts because ajax is asynchronous and the response time does not delay the user typing. But, as noted above, this article is about modeling human behavior. When a user sees that they are offered hints, his work behind the keyboard changes, namely, it looks like this:

- Pressed the key with the next letter entered.

- Waited for tips.

- I made sure that the tips were not correct, and returned to point 1.

As you can see, quite a synchronous work is obtained, so that the time that the user spends on data input is determined precisely by the sum (Tu+Ts) .

In good condition, when the amount (Tu+Ts) less denominator To , the value of the function falls in the range [0 ... 1] and, in fact, shows the percentage of time that the user saves using prompts. But there are also abnormal states (we recall the pizza), which we will consider later. For now we will write down what it is To and Tu .

Will take To=No∙tk where No - the number of letters that make up the address and which the user needs to enter, tk - the time that an average user spends searching for a letter on a keyboard and pressing the corresponding button. This time depends on the user's ability to work with the keyboard. We will distinguish three classes of users: “slug”, “middling” and “hurry”. For each of these classes, we later set the appropriate value. tk as a result, the utility value will be separately obtained for each class of users.

Will take Tu=Nu∙tk+S∙(C∙tk) Where Nu - the number of letters of the address that the user actually has to enter using prompts. When receiving the correct hint, the user stops typing the address, instead he simply selects it from the list provided. In this case Nu<No . If the user has not received a suitable hint, then he has to enter the address completely, in such situations Nu=No . Second term S∙(C∙tk) reflects the time the user spends choosing from a list of suitable prompts. In general, the user can use the tips. S once, for example, when entering the city - the first time, and when entering the street - the second time. When selecting a prompt, the user, as a rule, presses the down arrow button several times on the keyboard to select the desired prompt, then presses a button (for example, Enter) to confirm his choice. These actions do not require finding the right buttons on the keyboard, so this time can be taken as a constant that depends on the user’s class: the slow-moving player has a poor keyboard skills, so it’s slower to push the arrows compared to the torpedo. Therefore, I attached this constant to tk and coefficient C was chosen empirically (see the results of experiments at the end of the article). It is important to remember that S=0 in situations where the service did not return suitable prompts and, as a result, the user did not perform actions related to the selection of prompts from the list.

Substituting all these expressions into a utility formula, we get the following

Us=1− fracNu+S∙CNo− fracTsNo∙tk

Total, to assess the usefulness of the autocompletion service, you need to measure its time Ts which he spends on generating and issuing hints, get the number of letters Nu which the user actually has to enter when typing the address, as well as the number of times S , which the user has to choose prompts. It follows from the formula that the smaller these indicators, the higher the usefulness of the prompts will be. In an ideal situation, when the user has not yet begun to enter anything, and the form with the address is already filled with the correct data, Nu=0 , Ts=0 and S=0 . In this case, the utility is equal to 1. This situation is ideal and at the moment, it is probably not possible to achieve it using earthly technologies, but there is something to strive for.

In the worst case, the user enters the address entirely, while after entering each letter, he additionally expects a hint in the hope that it will save him from further input. In this case Nu=No and S=0 as a result, we get negative utility Us=− fracTsNo∙tk which reflects the extra time spent by the user due to the useless waiting for prompts. In such a situation, if there were no prompts at all, the user would enter the address faster.

Overview of autocompletion services

So, the formula I invented needed to be checked for something. Searching on the Internet, I found six suitable services that allow me to send fragments of address data through the REST API and receive hints for them (another service was recommended to me in the comments, so that they turned out to be seven of them). All the services I have reviewed work in the same type, namely:

- GET or POST receive an HTTP request with the address fragment already entered.

- Return in JSON format an array with suggested hints.

Of course, the structure of the JSON response for each service is different, but the semantics are more or less the same. To simulate user experience with each of these services, I wrote a corresponding script. This script simulates a set of mailing addresses on the keyboard, and in the process of such imitation analyzes the returned prompts for their adequacy. The sources of all scripts can be taken on github, the link is attached at the end of the article.

All considered services, with the exception of Yandex and Google, use the KLADR or FIAS address directories as a data source, or both at the same time. Both directories are maintained by the Federal Tax Service of Russia. You can find information on them at the following sites: KLADR - http://gnivc.ru , FIAS - fias.nalog.ru . Yandex and Google most likely use data from their maps to generate hints.

A brief overview of all the services that participated in my experiments is given below. In the course of the review and in the results of experiments, the services are sorted alphabetically.

Achanter

The service is available at ahunter.ru . According to whois, the service appeared in RuNet in early 2009. Hints for addresses are offered free of charge, it is said separately about the limits on the site that they are not.

You can test hints directly in the demo section of the service here . For the introduction of tips on third-party sites, webmasters recommend using the jQuery-Autocomplete plugin, which can be downloaded from the github by following the link . To write a bot script, I used directly the documentation on the service API, which you can read here .

In addition to prompts for addresses, the authors of the service propose to correct and structure addresses according to KLADR and FIAS and to receive geo-coordinates for them, but this is already for a fee.

Google Places API Web Service

In fact, under this name hides several services, of which we are interested in "Clues places." Information on this service is available here . Quite serious quotas have been established for using the service; only 1000 requests per day can be processed for free. However, if you specify your credit card information in your account, then 150,000 requests per day will be available free of charge. Anything more than this is charged separately.

To embed on your site you need to use the JavaScript library of addresses in Google Maps. Information on it is available here . There is also an opportunity to test and watch the demo. To write a bot script, I used the service API documentation, available from the link above.

Additionally, using the other services of this group, you can separately request the geo-coordinates of the addresses received in prompts.

Dadata

The service is available at dadata.ru . According to whois, the service appeared in RuNet at the end of 2012. To use the tips you need to buy a subscription for a year, or fit into the daily free limit.

A demo hint page is available, it is available at the following link . To implement on their website, the authors of the service suggest using a jQuery plugin of its own design. For writing a bot script, I used the documentation for the service API, available at the following link .

For a fee, some of the addresses on the website offer the same additional options as on Akhanter.

KLADR in the cloud

The service is available at kladr-api.ru . According to whois, the service appeared in early 2012. The authors propose to buy a subscription for three months or a year, or fit into free daily limits.

A demo page where you can get tips can be found at the following link . To use hints on third-party sites, the authors propose to use a plugin developed specifically for this service jQuery, which can be taken on the github here .

To develop a bot script, I used the documentation on the site of the service, available here , it is not very detailed, but you can figure it out.

Fias24

The service is available at fias24.ru . According to whois, this is still a young service, it appeared in RuNet at the end of 2016. To use the tips you need to buy a subscription for a year.

There is a demo hint page, it is available on the main page of the service. For the introduction on the sites, the authors of the service suggest using a jQuery plugin of its own design. There is no full-fledged REST API documentation on the site, so in order to write a bot script, I had to include intuition and accumulated experience in working with other services.

Yandex maps

Here, I think, additional descriptions are not required, because the service is well known even among housewives. There were no obvious limits in the terms of use of this service, but Yandex warns that for large projects it is necessary to negotiate separately, well, in which case it may at any time limit access to its own discretion. When conducting my experiments, access was denied.

How tips in Yandex Maps work, you can look at ... Yandex Maps, so I will not give a link to the "demo". Yandex’s hints have a separate API for embedding on third-party websites, documentation can be found here https://tech.yandex.ru/maps/doc/jsapi/2.1/ref/reference/SuggestView-docpage/ .

Incredibly, but besides the hints, the service offers additional options for working with coordinates, although you need to use another API for this.

Iqdq

This service was added to the article at the request of its authors after the publication of the article on Habré. The service is available at iqdq.ru. According to whois, the service appeared at the end of 2013. Tips for addresses are offered free of charge.

A demo page where you can get tips can be found at the following link . To use hints on third-party sites, authors provide examples of javascript code using jQuery. To write a bot script, I used the documentation for the service API available here .

For a fee, some of the addresses on the site offer additional options, the same as on the services of Akhanter and Dadata.

Experiment Description

For each service from this review, we need to measure response time. Ts and the number of letters Nu , which the user has to enter before he receives a suitable prompt, or until the address is typed in its entirety. For this, I prepared a test sample. It included a total of 3591 streets from 406 cities in Russia. For each city, no more than 10 streets were selected, and the streets were chosen so as to cover the entire alphabet if possible. Small settlements (villages and settlements) were not included in the test sample because the potential target audience of prompting services is still residents of fairly large cities.

Test sample description

The test sample can be downloaded along with the source. The sample is a JSON array, each element of which has a test address, the input of which we will emulate to receive hints. An example with a description of the fields of these addresses is given below:

{ "id" : 1, "reg" : "", "reg_type" : "", "reg_kladr" : "0100000000000", "city" : "", "city_type" : "", "city_kladr" : "0100000200000", "street" : "8 ", "street_type" : "", "street_kladr" : "01000002000003800" } Here id is the unique identifier of the test address within the sample, reg is the name of the region to which the address belongs, reg_type is the region type, reg_kladr is the code for the KLADR region. Similarly, the city, city_type, city_kladr fields are entered for the city, and the street, street_type, street_kladr fields are entered for the street.

Testing algorithm

For each address of our sample, you need to create an imitation of its input, in order to track the prompts received from the service being tested. At the same time, we believe that the user enters only the name of the city and street, he does not need to enter the name of the region, because he lives in one of 406 fairly large cities in Russia. In our imitation first, letter by letter, the name of the city is typed. Each new letter added to the end of the entered name generates a new request to the service. Among the hints received, we look for the expected address, which we know in advance, because it is for this that it is present in the test sample.

If among the first five prompts of the received list is the entered city, then the further imitation of the dialing of the name of the city stops. It is believed that the user saw the name and chose it. Therefore, the user then proceeds to a set of street names. To do this, the imitating script sequentially, letter by letter, builds up the street name and adds it to the city selected in the previous step. After each added letter, the resulting address fragment is sent to the service again.

Well, then, by analogy with the city, we are looking for the entered street among the first five clues, if it got into the issue, then the further imitation of entering the street is stopped, otherwise we go to the next letter and send the request again.

Such a simple test allows you to measure the service response time Ts and the number of letters Nu that the user needs to enter in order to get the desired address in the prompts. Separately, you should specify the situation when the user did not receive a suitable hint until the end of entering the city name. In this case, our simulated user understands that the service is not able to help him when entering this particular address. Therefore, further input of the street is performed without the use of prompts. In this case, the user does not expect anything from the service, so the response time ceases to influence his behavior and in this case, you can accept Ts=0 however, the user will have to type in the street name completely, so Nu will match with No .

Source texts

All scripts are written in Perl. Sources have the following structure.

- The run_test.pl script is the main and only script that needs to be run in order to run a test for any service.

- The TestWorker.pm package contains the implementation of the test algorithm described above.

- The TestStorage.pm package is needed to work with the repository of test results. All results are stored in the sqlite database so that after passing all the tests you can perform all the necessary calculations.

- The packages AhunterAPI.pm, GoogleAPI.pm, DadataAPI.pm, KladrAPI.pm, Fias24API.pm, YandexAPI.pm and IqdqAPI.pm contain the implementation of working with the API of the corresponding service. Which service corresponds to each package, you can guess by its name.

- suggest_test_full.json - the file containing our 3591 test address.

The test start line for some service is as follows:

run_test.pl < > < API- > < API> For example, to test the service from Google, you need to run the test as follows.

run_test.pl /home/user/suggest_test_full.json GoogleAPI ABCDEFG Here /home/user/suggest_test_full.json is the full path to the file with the tests, GoogleAPI is the name of the package corresponding to the service under test, ABCDEFG is the key for working with the service through the API that needs to be received in the personal account of your account. The Akhanter and Yandex services do not require registration, so you can send an arbitrary string as the API key for them.

Features of passing tests

When passing the tests, each service identified

In Akhanther in prompts for addresses from the Chuvash Republic, the name of this region is returned as follows: “Republic of Chuvash (Chuvashia)”. Therefore, within the framework of AhunterAPI.pm, it was necessary in a special way to implement a comparison of prompts for this republic with its reference name.

Google also has features in the names of several regions, for example, “Tyva” returns as “Tuva”, and “Udmurt Republic” returns as “Udmurtia”. For them, also had to write a separate comparison. In addition, it was not possible to get hints for all addresses from the Republic of Crimea. A potential user will have to enter all addresses from this region entirely without prompts, this automatically increases Nu and reduces the total utility of the service. The situation is similar with the cities of New Moscow, for which Google returns tips from the Moscow region. All these tests were not passed, because Formally, the service suggests the wrong address.

The Dadat service has a problem with tips for cities whose names coincide with the names of streets or districts in other cities. For example, for the city “Mirny” offers addresses like “Samara region, Tolyatti city, Mirny 2 passage”, and for the city “Berezovsky” offers the address “Krasnodar city, Berezovsky rural district”. In this case, the name of the city could not be obtained in the prompts, even if you enter it completely. Because of this Nu growing, and the utility falls, because in the absence of prompts, such addresses are entered completely.

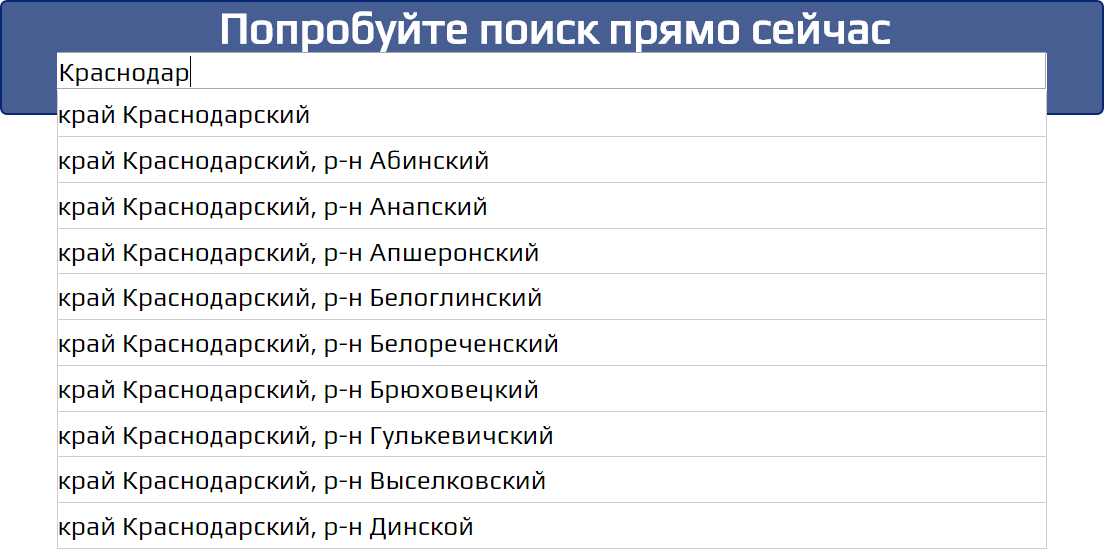

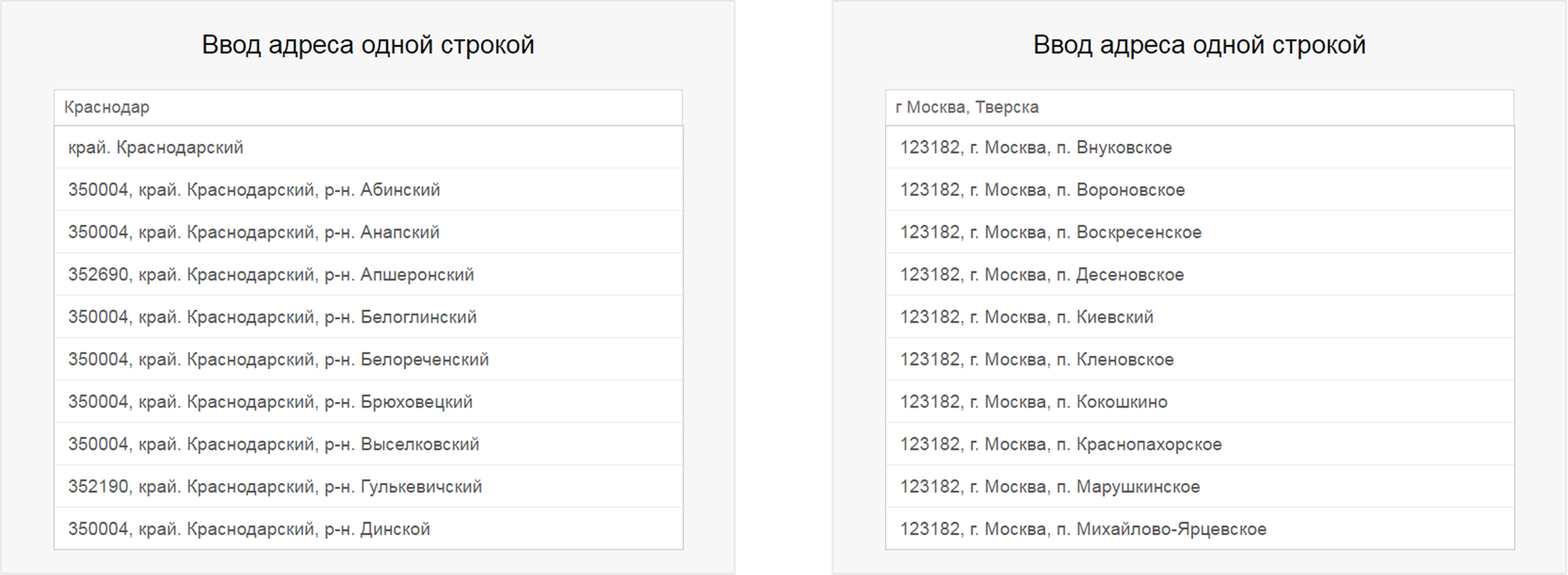

Fias24 found similar problems with cities whose names coincide with districts, or when the city name is a prefix for the name of the region to which this city belongs. For example, for the city of Krasnodar, the service returns options from the Krasnodar Territory, but there are no tips in the city itself. This can be seen on the service demo page.

Because of this problem, for approximately 370 tests, no clues were received for the city name. As a result, when calculating the utility formula, based on our algorithm, the set of streets for these test addresses was carried out without regard to prompts. This affects the increase Nu .

The “KLADR in the cloud” service has a similar situation, only about 700 tests have not been passed. Also this service does not prompt the streets without explicit indication of their types. Examples can be found on the service demo page:

Therefore, an exception was made specifically for this service; when forming queries on the street name, its type was pre-inserted before each name. Consequently Nu for this service there will always be more by the number of letters that make up the street type name.

Approximately the same number of tests was not passed by the Iqdq service. This is mainly due to similar problems - the name of the city, even after a full input, either does not fall into the issue at all (for example, “Izhevsk), or does not fall into the first 5 prompts of the issue (for example,“ Bryansk ”).

In addition, there are the same problems as the Dadat service, when the district of the same name returns instead of the city, for example, for the “city of Zhukovsky” we get “Bryansk region, Zhukovsky district”. Additionally, you can highlight the addresses where, instead of the expected city, the village of the same name is returned, for example, instead of the “city of Pioneer” service returns “Resp. Udmurt, Igrinsky district, Pioneer displaced”. All this leads to the fact that such problematic cities have to be entered completely without prompts. According to the conditions of our tests, the streets in such addresses also have to be entered without prompts. As a result, all such addresses Nu increases to No , and this reduces utility.

Yandex also, like the other services discussed above, has problems with some cities. He instead returns the names of the streets of other cities. For example, for the city of "October" returns "Russia, Moscow region, Lyubertsy, October prospect." There was also a problem with tips for streets with initials in their names. For example, for the query “Russia, the Republic of Adygea, Adygeysk, Cheech" you can get the answer “Russia, the Republic of Adygea, Adygeysk, P.S. Chicha. If this reply is resubmitted as a request, then Yandex returns an empty result. Because of this feature, we had to exclude all street names with initials from the test sample and repeat all the tests for all services again.

Separately, you should make a reservation for services that use maps as sources of prompts. These sources cover the address space of Russia worse than the rest of the survey participants. As a consequence, one of the main reasons due to which these services did not return prompts is the lack of a test address on the map. This in turn automatically increases Nu , because without prompting the user has to enter all letters of the address.

Experimental results

After the test is executed, the run_test.pl script displays various statistical indicators describing the result of passing the test. Also, the screen displays three final values of the utility of the service for the three relevant classes of users - “slug”, “middling” and “hurry”.

The first four indicators that are displayed on the screen have the following meaning.

- city_avg ( Ts ) - the average waiting time of prompts from the service when entering the name of the city, measured in milliseconds.

- street_avg ( Ts ) - similar to the previous one, but for street names.

- city_avg ( Nu ) - the average number of letters by which the service guesses the name of the city.

- street_avg ( Nu ) - the average number of letters by which the service guesses the name of the street.

These indicators are not directly involved in the utility formula, they are interesting in their own right. Below is a table with their values for all tested services.

| Achanter | Dadata | KLADR in the cloud | Fias24 | Yandex | Iqdq | ||

|---|---|---|---|---|---|---|---|

| city_avg ( Ts ) (ms) | 15.98 | 85.93 | 78.55 | 104.05 | 73.88 | 22.46 | 176.73 |

| street_avg ( Ts ) (ms) | 18.69 | 86.76 | 106.79 | 154.68 | 189.70 | 30.67 | 277.28 |

| city_avg ( Nu ) (letters) | 2.48 | 2.71 | 3.89 | 4.27 | 3.68 | 4.42 | 4.75 |

| street_avg ( Nu ) (letters) | 1.63 | 2.18 | 1.61 | 3.28 | 1.90 | 2.20 | 1.84 |

The first two indicators reflect the average service responsiveness. By them it is possible to judge whether this service will be useful for users with high typing speed on the keyboard. The shorter the response time of the service, the more users it will be useful. It is also noticeable here that all services spend less time forming tips for the city, while tips for the street take more time and effort from them.

But of particular interest are the indicators city_avg ( Nu ) and street_avg ( Nu ). It turns out that guessing the name of a city is less than five letters, since for all services city_avg takes place ( Nu ) <5. At best, about two and a half letters are enough to guess the name of the city correctly! At least, this is how much Achanter and Google demonstrate, for the first city_avg ( Nu ) = 2.48, and in the second city_avg ( Nu ) = 2.71. To guess the street name correctly, even fewer letters are required. The four considered services (Akhanter, Dadat, Fias24 and Iqdq) predict the street in less than two letters.

Now let's look at the characteristics that need to be directly substituted into the utility formula, so I’ll bring this formula again so that it is in front of my eyes.

Us=1− fracNu+S∙CNo− fracTsNo∙tk

Value Nu must contain the total number of letters that the user had to type on the keyboard during the input of all addresses of our sample. This value corresponds to the indicator sum(Nu) The script displays. This indicator is calculated as follows.

For all tests, the total amount of letters in the names of cities and streets that were typed during the test is determined. If a hint was not received for any city or street, then the sum of the entire name of this city or street is included in this amount, since it is assumed that without a prompt, the user enters the name entirely. Otherwise, the total amount includes only the number of letters that were actually typed before receiving the hint.

Additionally, this amount includes the lengths of regions from those tests for which it was not possible to get a hint for the city. I assume that if a user does not receive a hint for a city, then he must enter not only the name of the city, but also the name of the region in which the city is located. For cities for which the correct hint has been received, it is not necessary to enter the region, therefore regions of successful tests do not contribute to this amount.

Then in the numerator of the first fraction of the formula of utility there is a term S∙C . Coefficient C reflects the time costs associated with the choice of tips from the list. Earlier, I said that this coefficient was chosen empirically. This constant is sewn up in the script code and has the value 3. That is, the user spends the time choosing the hint as much as he takes to enter 3 arbitrary letters of the address. This coefficient is multiplied by S - the number of times that hints were used during the recruitment process. For this test script produces a characteristic sum(S) so what we have S∙C=3∙sum(S) . Ideally sum(S) should be equal to twice the number of addresses in our sample, since Ideally, prompts should be used twice for each address - once when entering a city, and a second time - when entering a street. But in practice, the services showed a lower value. sum(S) .

Further in the utility formula we meet Ts , this is the total time that the user waited for prompts from the service. To get it, the script gives the characteristic sum(Ts) , so that Ts=sum(Ts) . During the execution of tests, the script notes the time it takes to wait for a response from the service for each request sent. All this information for all queries is stored in a local sqlite database, and at the end of the test is summarized.

The last member of the formula utility - No , is simply the total number of letters of all the sample addresses. This value does not depend on the test result, it only characterizes the sample itself. The script displays this number under the name sum(No) . For our sample, it has the value 137704. Exactly so many letters are contained in the names of the regions, cities and streets of all the tests in the sample, as well as in the names of their types.

The totals of the characteristics described above are shown in the following table. For clarity, time sum(Ts) translated in minutes.

| Achanter | Dadata | KLADR in the cloud | Fias24 | Yandex | Iqdq | ||

|---|---|---|---|---|---|---|---|

| sum(Nu) | 14808 | 33191 | 22397 | 50863 | 32820 | 36549 | 50092 |

| sum(S) | 7180 | 5848 | 7043 | 5588 | 6387 | 6057 | 5415 |

| sum(Ts) | 4.20 (min) | 39.29 (min) | 29.19 (min) | 58.81 (min) | 39.29 (min) | 14.67 (min) | 82.70 (min) |

| Achanter | Dadata | KLADR in the cloud | Fias24 | Yandex | Iqdq | ||

|---|---|---|---|---|---|---|---|

| Slug | 73% | 61% | 67% | 48% | 60% | 59% | 48% |

| Midget | 73% | 60% | 66% | 46% | 59% | 59% | 46% |

| Hurry | 72% | 57% | 64% | 42% | 56% | 58% | 40% |

The problems of "KLADR in the cloud" were described above, the main contribution to the low utility made great importance sum(Nu) , because when recruiting a street for this service, you need to enter its type - “ul”, “lane”, etc. So totally, the user spends more time entering. Nevertheless, even with this in mind, a time saving of 42% is a good indicator.

Iqdq has had a great response time for low rates, as well as problems with missing cities in issuing with prompts. Because of this, all addresses from these cities were entered completely, this led to an increase in the number of letters sum(Nu) which the user had to type.

I was surprised that the usefulness of prompts from Google was not greatly affected by problems with addresses from New Moscow and Crimea, according to which this service did not issue the correct prompts. This is reflected in the overall good guessing ability of this service. If you look at the first table (see above), you will see that Google guesses the city in fewer letters than some of the considered services, which, by virtue of their specialization, should do this in theory better.

The resulting utility values from Yandex are primarily due to the fact that this service guesses the city in more letters than all other services. I used to write that instead of prompting for the city, it pulls to offer streets from other cities.

If we analyze the utility of services in terms of classes of users, then it is clear that all services have greater utility in relation to users who have little keyboard skills. Indeed, the “sluggers” will be happy with any prompts, just to not enter the data manually. However, for users who skillfully use the keyboard, the usefulness of the tips is slightly lower.To bring maximum benefit to them, the service must have a short response time.

In this section, all services can be divided into three groups - fast, medium and slow. In fast services (Akhanter and Yandex), the utility does not greatly depend on the speed of the user. Indeed, even if the user quickly typing on the keyboard, fast services have time to return the correct hints, as a result, he gets the opportunity to use them. Medium-sized services (Google, Dadat, Fias24) are slightly more sensitive to the speed of the user's work, and these services will be less useful to the “rush” than to the “slow moving” and “middle peasants”. But for slow services, this effect is noticeable even more.

Conclusion

Returning to the question posed in the title of the article, you can definitely answer "Yes", modern web services prompts well. I managed to quantify the benefits they bring to ordinary Runet ordinary people. The resulting numbers reflect the time savings that can be achieved by connecting these types of services to web forms, where you need to type postal addresses. In this case, you can save up to 70% of user time.

At the same time, bearing in mind my story with pizza, I would like to remind you that the formula proposed at the beginning of the article implies negative utility values. I know at least one hint service for which the utility will be close to zero, and maybe even negative. Therefore, I would like to appeal to the authors who have already made or are making similar decisions: use my test and check if your development is as useful as you think.

Sources of tests and test samples can be taken on github here . I also want to appeal to those who are planning to repeat my experiments. If you manage to find inaccuracies or errors in the algorithms, please let me know, I will readily make corrections and correct the results.

Well, thank you for your attention. I will be glad to answer questions in the comments.

Source: https://habr.com/ru/post/323936/

All Articles