Data centers as art: data centers at the bottom of the ocean

We are starting a series of articles on data centers that, in their own way, can be considered works of art. It expands on an article we published earlier, "The most unusual data centers: data centers as art", adding as many interesting details as possible, conversations with experts, and comments from people who sometimes hold the opposite view.

The oceans are cold, dark and full of kinetic energy, which makes them an ideal place to build data centers. Just over a year ago, Microsoft lowered a rack of servers, sealed inside a metal container, into the ocean. When the capsule was lifted to the surface a few months later, it was covered with algae and mollusks, as if many years had passed rather than a few months. But the servers inside had kept running, cool and dry. Is this the future of data centers? The team of Microsoft engineers working on Project Natick believes it is.

The origin of the idea

It all started in 2013, when Sean James, a Microsoft employee who introduced new technologies in data centers and had previously served on a US Navy submarine, proposed placing server farms under water. Some of his colleagues had doubts about the idea, but he did not.

Sean James (Cloud Infrastructure & Operations Team)

In his view, the idea would not only reduce the cost of cooling the machines, a huge expense for many data center operators (cooling accounts for about 40% of the total electricity bill), but also cut construction costs, make it easier to run these facilities on renewable energy, and even improve their performance.

Together with Todd Rawlings, another Microsoft engineer, James circulated an internal paper promoting the concept. It explained how building data centers under water could help Microsoft and other cloud providers keep up with phenomenal growth in demand while remaining environmentally friendly.

Natick Team: Eric Peterson, Spencer Fowers, Norm Whitaker, Ben Cutler, Jeff Kramer (left to right)

In many large companies, such outlandish ideas probably die quietly in the bud, but not at Microsoft, where researchers have a history of tackling problems vital to the company in innovative ways, even when a project lies far outside Microsoft's core business and the company has no experience in carrying it out. The key to success is that Microsoft assembles engineering teams not only from its own specialists but also from partner companies.

Four people formed the core of the team tasked with testing James's far-reaching idea. In August 2014 the project began to take shape; it soon became known as Natick, simply because the research group likes to name its projects after cities in Massachusetts. Just 12 months later, the prototype data center was ready to be lowered to the bottom of the Pacific Ocean.

Difficulties in project implementation

There was no shortage of difficulties in implementing Project Natick. The first problem, of course, was keeping the space inside a large steel container dry while it was submerged. Another was cooling: the team had to work out how best to use the surrounding seawater to cool the servers inside. Finally, there was the fight against barnacles and other marine life, which inevitably encrust any submerged vessel, a phenomenon familiar to anyone who has kept a boat in the water for a long time. Clinging crustaceans and the like were a problem because they interfere with the transfer of heat from the servers to the surrounding water. At first these problems seemed daunting, but they were gradually solved, often with time-tested solutions from the marine industry.

Installing the server rack in the Natick project container

But why go to all this trouble? Cooling servers with seawater will certainly reduce cooling costs and may improve performance through other benefits as well, but submerging a data center involves obvious costs and inconveniences. Does trying to put thousands of servers on the seabed really make sense? We think it does, for several reasons.

The first is that it would let Microsoft add capacity quickly, where and when it is needed. Corporate planning would be less burdensome, since there would be no need to build facilities long before they are actually required, in anticipation of later demand. For an industry that spends billions of dollars a year building an ever-growing number of data centers, a quick response time can deliver huge savings.

The second reason is that subsea data centers can be built faster than those on land, and it is easy to see why. Today, building each land-based facility is a unique undertaking. The equipment may be the same, but building codes, taxes, climate, labor, electricity supply and network connectivity differ everywhere, and these variables affect how long construction takes. We also see their impact on performance and reliability: identical equipment shows different levels of reliability depending on where the data center is located.

As we envision it, Natick will consist of a set of "containers", steel cylinders that could each eventually hold several thousand servers. Together they would form an underwater data center located a few kilometers offshore, at a depth of 50 to 200 m. The containers could either float above the seabed at some intermediate depth, moored to the ocean floor by cables, or rest on the seabed itself.

Once a data center module is deployed, it will stay in place until it is time to replace the servers it contains, or until market conditions change and the operator decides to move it elsewhere. This is a truly isolated environment: system administrators work remotely, and nobody can step in to fix a problem or replace a piece of equipment while the module is in operation.

Now imagine applying a “just in time” manufacturing process to this concept. Containers could be built at a factory, fitted with servers and prepared for shipment anywhere in the world. Unlike land, the ocean provides a very uniform environment wherever you are. No site-specific customization of the containers would therefore be needed, and we could install them quickly wherever computing power is in short supply, gradually expanding the underwater installation as demand grows. Natick's goal is to be able to bring data centers online at coastal sites anywhere in the world within 90 days of the decision to deploy.

Reduced latency to users

Most new data centers are built where electricity is inexpensive, the climate is reasonably cool, land is cheap, and the facility does not disturb people living nearby. The problem with this approach is that data centers often end up far from population centers, which slows the servers' responses to requests because of increased latency.

For interactive applications, these delays can be significant, and large values cause problems. We want web pages to load quickly and video games such as Minecraft or Halo to be fast and free of lag. In the coming years there will be more and more highly interactive applications, including those supported by Microsoft's HoloLens and other augmented reality technologies. So servers really do need to be closer to the people they serve, which is rarely the case today.

It may seem surprising, but almost half of the world's population lives within 100 km of the sea. Placing data centers just offshore near coastal cities would therefore bring them much closer to users than they are today.
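To put rough numbers on the latency argument, the sketch below estimates the round-trip propagation delay over optical fiber for a few distances. It assumes that light travels through fiber at about 200,000 km/s (roughly two thirds of its speed in a vacuum) and ignores routing, queuing and server processing time. The distances are illustrative and are not taken from the article.

```python
# Rough round-trip propagation delay over optical fiber.
# Assumption: signal speed in fiber is about 200,000 km/s (~2/3 of c).
FIBER_SPEED_KM_PER_S = 200_000.0


def round_trip_ms(distance_km: float) -> float:
    """Return the round-trip propagation delay in milliseconds."""
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000.0


if __name__ == "__main__":
    # Illustrative user-to-data-center distances (hypothetical values).
    for km in (100, 1000, 5000):
        print(f"{km:>5} km -> {round_trip_ms(km):.1f} ms round trip")
```

Propagation delay is only a lower bound on what a user experiences, but it scales directly with distance, which is why moving servers closer to coastal populations helps.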

Cost savings on cooling

If that argument is not enough, consider the savings on cooling. Historically, because mechanical cooling was used, data center cooling systems consumed almost as much energy as the servers themselves.

More recently, many data center operators have switched to free cooling: instead of mechanical cooling, they simply use filtered outside air. This greatly reduces the energy cost of cooling, which is no longer 50% of total consumption but somewhere in the range of 10-30%. However, in some places the ambient temperature is too high or varies too much with the seasons, so such data centers can be effective only at high latitudes, far from population centers, which increases latency for users.

Moreover, data centers that use free cooling can consume quite a lot of water, because evaporation is often used to slightly cool the air blown through the servers. This is problematic in drought-prone areas such as California, or where a growing population is depleting local aquifers, as in many developing countries. And even where water is abundant, adding it to the air makes the electronics more susceptible to corrosion.

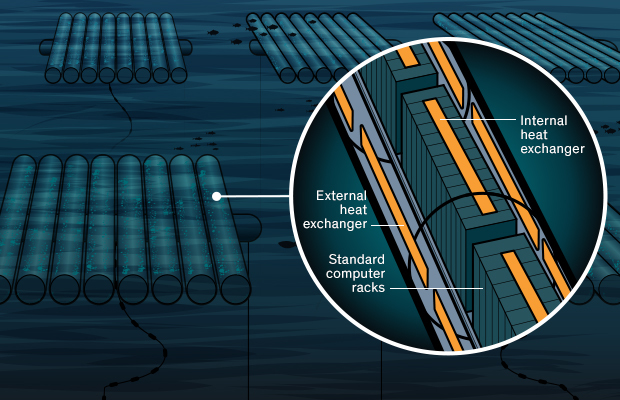

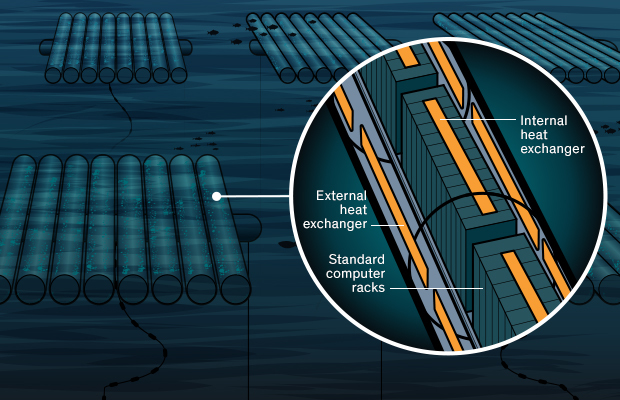

The architecture of Project Natick addresses all of these problems. The interior of the data center container consists of standard computer racks fitted with heat exchangers, which transfer heat from the air to a liquid that conducts and stores heat well, such as ordinary water. This liquid is then pumped to heat exchangers on the outside of the container, which in turn transfer the heat to the surrounding ocean. The cooled liquid then returns to the internal heat exchangers to repeat the cycle.
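A closed coolant loop of this kind obeys the basic heat balance Q = m_dot * c_p * delta_T. As a minimal sketch, assuming fresh water as the coolant (specific heat of about 4186 J/(kg·K)) and purely hypothetical figures for the heat load and for the temperature rise across the internal heat exchangers, the required coolant flow can be estimated as follows:

```python
# Heat balance for a closed coolant loop: Q = m_dot * c_p * delta_T.
# Assumption: the coolant is fresh water, c_p ~ 4186 J/(kg*K).
WATER_CP_J_PER_KG_K = 4186.0


def required_flow_kg_per_s(heat_load_w: float, delta_t_k: float) -> float:
    """Coolant mass flow needed to carry heat_load_w with a delta_t_k rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_w / (WATER_CP_J_PER_KG_K * delta_t_k)


if __name__ == "__main__":
    # Hypothetical numbers, not from the article: 30 kW of heat, 10 K rise.
    flow = required_flow_kg_per_s(30_000.0, 10.0)
    print(f"Required coolant flow: {flow:.2f} kg/s")
```

In this simplified picture, doubling the allowed temperature rise halves the flow that has to be pumped for the same heat load.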

Of course, the colder the surrounding ocean, the better this scheme works. To reach cold seawater even in summer or in the tropics, the containers would have to be placed deep enough. For example, at a depth of 200 meters off the east coast of Florida, the water temperature stays below 15 °C all year round.

Prototype

Tests of the Natick prototype, named “Leona Philpot” after a character in an Xbox game, began in August 2015. The unit was submerged to a depth of only 11 meters in the Pacific Ocean near San Luis Obispo, California, where the water temperature ranges between 14 and 18 °C throughout the year.

The server rack used in the prototype underwater data center module was filled with both real servers and dummy loads that consume the same amount of energy as a server

This 105-day experiment showed that the servers could be kept as cool as with mechanical cooling, but with an incredibly low energy overhead of only 3 percent. That is significantly lower than the figure for any production or experimental data center known today.
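The cooling figures quoted in this article can be compared on a common basis. The sketch below treats each percentage as cooling's share of total consumption and assumes, for simplicity, that a facility's total energy is just server energy plus cooling energy. The 1 MW server load is a hypothetical figure, and the article does not say exactly how the 3 percent was measured, so the results are only indicative.

```python
# Cooling power implied by cooling's share of total consumption.
# Assumption: total consumption = server (IT) power + cooling power only.


def cooling_power_kw(it_power_kw: float, cooling_share: float) -> float:
    """Cooling power when cooling is cooling_share of the total."""
    if not 0 <= cooling_share < 1:
        raise ValueError("cooling_share must be in [0, 1)")
    return it_power_kw * cooling_share / (1.0 - cooling_share)


if __name__ == "__main__":
    it_kw = 1000.0  # hypothetical 1 MW of servers
    scenarios = {
        "mechanical cooling (50%)": 0.50,
        "free cooling, upper bound (30%)": 0.30,
        "free cooling, lower bound (10%)": 0.10,
        "Natick prototype (3%)": 0.03,
    }
    for name, share in scenarios.items():
        print(f"{name}: {cooling_power_kw(it_kw, share):.0f} kW for cooling")
```

A 50% share corresponds to cooling that draws as much power as the servers themselves, which matches the historical situation described earlier.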

In addition, there was no need for on-site staff, parking spaces, alarm buttons or security guards. The container held an oxygen-free atmosphere, which makes fire impossible, and Microsoft employees could manage the equipment from a comfortable office. All water vapor and dust were also removed from the atmosphere inside the container. This made the environment very friendly to electronics, minimizing problems with heat dissipation and connector corrosion.

A look into the future: future data centers will be much longer than the prototype, and each will contain a large number of server racks. The electronics will be cooled by transferring waste heat to the surrounding seawater through internal and external heat exchangers.

Protecting the environment and providing energy

Microsoft is committed to protecting the environment. For example, the company meets as much of its electricity needs as possible from renewable sources, although it still has to offset carbon emissions. In keeping with this philosophy, it plans to deploy future underwater data centers near offshore sources of renewable energy, whether an offshore wind farm or a marine energy system that harnesses tides, waves or currents.

These energy sources are usually abundant on the continental shelf, so it will be possible to place equipment close to people and have access to plenty of green energy at the same time. Just as land-based data centers are becoming a driver for new renewable energy infrastructure, the same could happen with underwater data centers. Their presence may well stimulate the construction of new facilities that supply clean energy not only to the underwater data centers but also to the public.

Another factor to consider is that conventionally generated electricity is not always readily available, especially in developing countries. For example, 70 percent of people in sub-Saharan Africa have no access to electricity at all. So if you want to build a data center and make its services available to such a population, you will probably have to provide the electricity as well.

Electricity is typically transmitted over long distances on high-voltage lines, at 100,000 volts or more, to reduce losses, but the servers ultimately use the same low voltages as your home computer. Stepping the grid voltage down to a level suitable for server hardware usually takes three separate devices. You also need backup generators and plenty of batteries to supply power in the event of an outage.
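Why high voltage reduces losses follows from the resistive loss formula P_loss = I^2 * R with I = P / V: for the same delivered power, ten times the voltage means a hundred times less loss. The sketch below is a deliberately simplified single-conductor model (no three-phase or power-factor effects), and the load and line resistance are hypothetical values.

```python
# Resistive line loss for a given delivered power and line voltage.
# Simplified model: I = P / V, P_loss = I^2 * R.


def line_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Return the resistive loss in watts for the given operating point."""
    current_a = power_w / voltage_v
    return current_a ** 2 * resistance_ohm


if __name__ == "__main__":
    power = 10e6      # hypothetical 10 MW load
    resistance = 5.0  # hypothetical total line resistance in ohms
    for volts in (10_000.0, 100_000.0):
        loss = line_loss_w(power, volts, resistance)
        print(f"{volts / 1000:.0f} kV: loss {loss / 1000:.0f} kW "
              f"({100 * loss / power:.1f}% of load)")
```

The same relationship explains why each step down in voltage on the way to the servers needs its own conversion stage.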

Placing underwater data centers near offshore energy sources would let engineers simplify this infrastructure. First, they could connect to a lower-voltage grid, eliminating some of the voltage converters on the way to the servers. Second, connecting the servers to independent power grids fed by wind or marine turbines would provide fault tolerance. This would reduce both electrical losses and the overall capital investment in the data center architecture needed to protect against local power failures.

An additional advantage of this approach is that the only thing that touches land is a fiber optic cable or two carrying data to and from the underwater data center.

The first question almost everyone asks on hearing about this idea is: how will the electronics stay dry? The truth is that keeping the inside of the server container dry is not difficult. The maritime industry learned how to keep equipment dry in the ocean long before the first computers appeared, often in far harsher conditions than this project involves.

The second question, which arose early on, was how to cool the servers most effectively. The project team investigated a number of exotic approaches, including special dielectric fluids and phase-change materials, as well as unusual coolants such as high-pressure helium gas and supercritical carbon dioxide. Although such approaches have their advantages, they also create serious problems.

Research into exotic cooling materials continues, but in the short term there is no real need for them. Water cooling with radiator-style heat exchangers gives Natick a very economical and efficient cooling mechanism that works well with standard servers.

More important, in our opinion, is the fact that an underwater data center will attract marine life, forming an artificial reef. This colonization by marine organisms, called biofouling, begins with single-celled creatures, followed by somewhat larger organisms that feed on them, and so on up the food chain.

After 105 days in the water, parts of the equipment capsule were covered in marine life as a result of biofouling, a phenomenon familiar to yachtsmen.

When the researchers lowered the Natick prototype to the seabed, crabs and fish gathered around it within a day and began settling into their new home. The researchers were happy to provide a home for these creatures, so one of the main design challenges was how to preserve this habitat without impairing the underwater data center's ability to keep its servers cool.

In particular, biofouling of the external heat exchangers is known to impede heat transfer from those surfaces. The researchers therefore began exploring various antifouling materials and coatings, and even active deterrents using sound or ultraviolet light, in the hope of making it harder for organisms to take hold. Although the heat exchangers can be cleaned physically, relying on such interventions would be unwise given the goal of keeping maintenance as simple as possible.

Fortunately, the heat exchangers on the Natick container stayed clean during the first deployment, even though they were in a very challenging environment: shallow water close to shore, where ocean life is most abundant. Biofouling nevertheless remains an area of active research, and the researchers continue to study it with a focus on approaches that will not harm the marine environment.

The biggest problem the researchers faced during testing was equipment failure combined with the inability to replace a server in the rack, or a hard drive, network card or other component of a particular server. Hardware failures can only be handled remotely and autonomously. Active work in this direction is under way even in Microsoft's conventional land-based data centers, to improve failure detection and make it possible to resolve failures without human intervention. The same methods and experience will be applied to Natick data centers in the future.

What about security? Is data safe from virtual or physical theft when it is stored under water? Absolutely. Natick will provide the same encryption and other security guarantees as Microsoft's land-based data centers. And since nobody will be physically present near the containers, Natick will use sensors to monitor its surroundings and report any changes, including unexpected visitors.

You may also wonder whether the heat generated by a subsea data center will harm the local marine environment. Most likely not. Any heat released by the Natick container quickly mixes with the cool water and is carried away by currents. Water a few meters downstream of the container would be warmer by a few thousandths of a degree at most.
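The order of magnitude of this warming can be checked with a simple mixing estimate: if a heat load P is absorbed by a stream of seawater with volumetric flow Q, the temperature rise is delta_T = P / (rho * c_p * Q). The sketch assumes typical seawater properties (density of about 1025 kg/m³, specific heat of about 3990 J/(kg·K)) and complete mixing. The heat load, current speed and mixing cross-section are hypothetical values chosen for illustration, not measurements from the project.

```python
# Temperature rise of seawater that absorbs a given heat load.
# Assumptions: typical seawater density and specific heat, complete mixing.
SEAWATER_DENSITY_KG_M3 = 1025.0
SEAWATER_CP_J_PER_KG_K = 3990.0


def downstream_rise_k(heat_w: float, current_m_s: float, area_m2: float) -> float:
    """Temperature rise (K) of the water stream flowing past the container."""
    volumetric_flow = current_m_s * area_m2  # m^3/s
    heat_capacity_rate = (
        SEAWATER_DENSITY_KG_M3 * SEAWATER_CP_J_PER_KG_K * volumetric_flow
    )
    return heat_w / heat_capacity_rate


if __name__ == "__main__":
    # Hypothetical: 30 kW of heat, 0.1 m/s current, 25 m^2 mixing cross-section.
    rise = downstream_rise_k(30_000.0, 0.1, 25.0)
    print(f"Estimated warming: {rise * 1000:.1f} thousandths of a degree")
```

With these assumed inputs the estimate lands at a few thousandths of a degree, consistent with the claim above; a slower current or a narrower mixing zone would raise the figure proportionally.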

The impact on the environment will therefore be very small. This matters because many more underwater data centers will be built in the future, and people will probably start working under water to maintain and support them.

At first glance, the idea of running data centers on the cold ocean floor looks very attractive: a capsule filled with server racks needs constant, precise temperature control. Why not hand this job over to the cold ocean floor, where the water temperature is close to 0 degrees Celsius?

The Natick team working on this solution holds precisely that opinion. Their idea is to place data centers in the ocean near the coast: “more than half of the world's population lives within 200 km of the coastline,” says one of the project's engineers in the film, making the case for placing servers in the ocean near the coast.

The main goal is to shorten the distance between users and the data they request by deploying cloud infrastructure in sealed containers at any desired location in the world within 90 days. This would bring data closer to users and cut the cost of delivering it, save on cooling and power, and make data centers more environmentally friendly.

Will it work out? Certainly. It already has; the only question is when underwater data centers will be deployed on an industrial scale. Today Microsoft owns more than 100 data centers around the world, hosting over a million servers, and spends more than $15 billion a year on IT infrastructure, so perhaps before long data centers will start being built under water as well.

The article was published with the assistance of the hosting provider ua-hosting.company.

Oceans are cold, dark and filled with kinetic energy, which makes them an ideal place to build data centers. Just over a year ago, Microsoft plunged a cabinet of servers encased in a sealed metal container into the ocean. A few months later they lifted the capsule to the surface, it was covered with algae and mollusks, as if not several months had passed, but many years. But the servers inside continued to work in coolness and dryness. Is this the future of data centers? A team of Microsoft engineers working on the Natick project believes that this is the future.

')

The origin of the idea

It all started in 2013 when Microsoft employee Sean James, who introduced new technologies to data centers, who served on a US Navy submarine, proposed the idea of locating server farms under water, which raised doubts among some of his colleagues. But not with him.

Sean James (Cloud Infrastructure & Operations Team)

The implementation of this idea, in his opinion, not only had to reduce the cost of cooling machines - huge costs for many data center operators (about 40% of the total electricity bill - cooling), but also construction costs, simplify the management of these facilities using renewable energy and even improve their performance.

Together with Todd Rawlings, another Microsoft engineer, James distributed an internal document promoting this concept. It explained how creating data centers under water could help Microsoft and other providers using cloud technologies to meet phenomenal growth needs while maintaining a high degree of environmental friendliness.

Natick Team: Eric Peterson, Spencer Fowers, Norm Whitaker, Ben Cutler, Jeff Kramer (left to right)

In many large companies, such outlandish ideas are probably quietly dying in the bud, but not in Microsoft, where researchers already have a history of solving problems vital for the company in innovative areas, even if the project is far beyond the core Microsoft activity and in the implementation which is not yet due experience. The key to success is that Microsoft gathers teams of engineers, not only among its specialists, but also among partner companies.

Four people formed the core of the team tasked with testing the far-reaching idea of James. And already in August 2014, a project began to be organized, which soon became known as Natick, simply because the research team liked to call the projects differently, like the names of cities in Massachusetts. And after only 12 months, the prototype of the data processing center was ready, which was to be loaded onto the bottom of the Pacific Ocean.

Difficulties in project implementation

There was no shortage of difficulties in implementing the Natick project. The first problem was, of course, the provision of dry space inside a large steel container immersed in water. Another problem was cooling. It was necessary to figure out how best to use the surrounding seawater to cool the servers inside. And finally, there was the question of the struggle with shells and other forms of marine life, which will inevitably envelop the sunk ship - a phenomenon that is familiar to everyone who has ever kept a boat in the water for a long period of time. The sticky crustaceans and the like became a problem because they interfered with the removal of heat from the servers into the environment (water). At first, these problems were frightening, but were gradually resolved, often with the use of time-tested solutions from the marine industry.

Installing the server rack in the Natick project container

But why all these difficulties? Of course, server cooling with seawater will reduce cooling costs and can improve performance at the expense of other benefits as well, but diving the data center into the water involves obvious costs and inconveniences. Trying to put thousands of servers on the seabed really makes sense? We think it has, and there are several reasons for this.

The first is that this will provide Microsoft with the ability to quickly increase capacity, where and when it is needed. Corporate planning would be less burdensome, since there was no need to build facilities long before they were actually required, in anticipation of later demand. For an industry that spends billions of dollars a year building a constantly growing number of data centers, a quick response time can deliver huge savings.

The second reason is that subsea data centers can be built faster than terrestrial data centers, which is quite simple to understand. Today, the construction of each such installation is unique. Equipment may be the same, but building codes, taxes, climate, labor, electricity and network connections are different everywhere. And these variables affect the duration of construction. We also observe their impact on the performance and reliability of data centers, where identical equipment demonstrates different levels of reliability depending on where the data center is located.

As we see, Natick will consist of a set of "containers" - steel cylinders, each of which will contain possibly several thousand servers later. Together they would form an underwater data center, located a few kilometers from the coast and located between 50 and 200 m under water. The containers could either float above the seabed at some intermediate depth, and be moored by cables to the ocean floor, or they could be located on the very seabed.

After the data center module is deployed, it will remain in place until it is time to replace the servers it contains. Or perhaps market conditions will change, and they will decide to move it somewhere else. This is a real isolated environment, which means that system administrators will work remotely, and no one will be able to correct the situation or replace a piece of equipment during the operation of the module.

Now imagine that a “just in time” production process is applied to this concept. Containers could be built at the factory, provided with servers and prepared for shipment to anywhere in the world. Unlike on land, the ocean provides a very homogeneous environment, wherever you are. Therefore, no container configuration would be required, and we could install them quickly wherever the computational power is in short supply, gradually increasing the size of the underwater installation to meet the performance requirements as they grow. Natick's goal is to be able to run data centers at coastal sites anywhere in the world within 90 days of the deployment decision.

Reduced latency to users

Most of the new data centers are built in places where electricity is inexpensive, the climate is cool enough, the land is cheap, and the object does not interfere with people living nearby. The problem with this approach is that it is very often necessary to set up data processing centers far from human settlements, which reduces the speed at which servers respond to requests due to an increase in latency.

For interactive applications that involve online user interaction, these delays can be significant and cause problems for large values. We want web pages to load quickly, and video games like Minecraft or Halo to be fast and not lag. In the coming years, more and more applications will appear with more interactions, including those supported by HoloLens Microsoft and other augmented reality technologies. So it really is necessary that the servers be closer to the people they serve, which rarely happens today.

It may seem surprising, but almost half of the world's population lives no further than 100 km from the seashore. Thus, the placement of data-centers near the coast near coastal cities will make them much closer to users than today.

Cost savings on cooling

If this base is not enough, consider the cooling cost savings. Historically, cooling systems in data centers consumed almost as much energy as the servers themselves, as mechanical cooling systems were used.

Recently, many data center operators have switched to cooling with the help of air taken from the environment — free-cooling; instead of mechanical cooling, they simply use purified air from the outside. This greatly reduces the energy costs of cooling, which are no longer 50% of the total consumption, but lie in the range of 10-30%. However, in some places the ambient temperature is too high or has seasonal fluctuations, thus such data centers can be effective only at high latitudes, far from settlements, which increases the delays to users.

Moreover, data centers using free-cooling type of cooling can consume quite a lot of water. As evaporation is often used to cool down a little the air blown through the servers. This is problematic in drought prone areas such as California, or where a growing population is depleting local aquifers, as is the case in many developing countries. But even if the water is abundant, adding it to the air makes the electronics more susceptible to corrosion.

The architects of the project Natick solve all these problems. The interior of the data center container consists of standard computer racks to which heat exchangers are attached, which remove heat from the air into a heat-conducting and heat-intensive liquid, similar to ordinary water. This fluid is then pumped into heat exchangers on the outside of the container, which, in turn, transfer heat to the surrounding ocean. The cooled liquid is then returned to the internal heat exchangers for recycle.

Of course, the colder the surrounding ocean, the better this scheme will work. To gain access to cold sea water even in the summer or in the tropics, it will be necessary to place the equipment containers deep enough. For example, at a depth of 200 meters off the east coast of Florida, the water temperature does not rise above 15 ° C throughout the year.

Prototype

The tests of the prototype of the Natick installation, called “Leona Philpot” (in honor of the Xbox game character), began in August 2015. The installation was submerged to a depth of only 11 meters in the Pacific Ocean, near the city of San Luis Obispo in California, where the water temperature fluctuates between 14-18 ° C throughout the year.

The server rack used in the prototype of the module of the underwater data center was filled with both real servers and dummy loads that consume the same amount of energy as the server

The server rack used in the prototype of the module of the underwater data center was filled with both real servers and dummy loads that consume the same amount of energy as the serverDuring this 105-day experiment, it was shown that it is possible to keep the servers cooled to the same temperatures as with the use of mechanical cooling, but with incredibly low power consumption - only 3 percent. These energy costs are significantly lower than those of any industrial or experimental data center that is known today.

In addition, there was no need for on-site staff, parking spaces, alarm buttons or security guards. The container held an oxygen-free atmosphere, which makes fire impossible, and Microsoft employees could control the equipment from a comfortable office. All water vapor and dust were also removed from the container's atmosphere. This made the environment very benign for electronics and minimized problems with heat dissipation and connector corrosion.

A look into the future. Future data centers will be much longer than the prototype, and each will contain a large number of server racks. The electronics will be cooled by transferring waste heat to the surrounding seawater through internal and external heat exchangers.

Protecting the environment and providing energy

Microsoft is committed to protecting the environment. For example, the company meets its electricity needs with renewable energy to the extent possible, and it still has to offset the remaining carbon emissions. In keeping with this philosophy, the company plans to deploy future underwater data centers near coastal sources of renewable energy, whether an offshore wind farm or a marine energy system that harnesses tides, waves or currents.

These energy sources are usually abundant offshore, which means the equipment can be placed close to people and still have access to a large amount of green energy. Just as land-based data centers are spurring the construction of new renewable energy infrastructure, the same may happen with underwater data centers. Their presence will probably stimulate the construction of new facilities that supply clean energy not only to underwater data centers but also to the public.

Another factor to consider is that conventionally generated electricity is not always readily available, especially in developing countries. For example, 70 percent of people in sub-Saharan Africa have no access to electricity at all. So if you want to build a data center and make its services available to such a population, you will probably also need to supply them with electricity.

Typically, electricity is transmitted over long distances on high-voltage lines at 100,000 volts or more to reduce losses, but the servers ultimately use the same low voltages as your home computer. Stepping the grid voltage down to a level suitable for server hardware usually takes three separate devices. You also need backup generators and plenty of batteries to supply power if the grid fails.
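The reason high voltage reduces losses can be shown with a short calculation. For a fixed amount of delivered power, current is inversely proportional to voltage, and resistive loss grows with the square of the current. The sketch below is a simplified model that ignores power factor and other AC effects; the 1 MW load and 1 ohm line resistance are made-up values chosen only for illustration.

```python
def line_loss_watts(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive loss in a line that delivers power_w at voltage_v.

    Simplified model: ignores power factor and other AC effects.
    """
    current_a = power_w / voltage_v          # I = P / V
    return current_a ** 2 * resistance_ohm   # P_loss = I^2 * R


POWER_W = 1_000_000     # hypothetical 1 MW load
RESISTANCE_OHM = 1.0    # hypothetical line resistance

for voltage_v in (100_000, 10_000):
    loss = line_loss_watts(POWER_W, voltage_v, RESISTANCE_OHM)
    print(f"{voltage_v:>7} V: {loss:,.0f} W lost in the line")
```

In this model, lowering the voltage by a factor of 10 raises the loss by a factor of 100, which is why low-voltage connections only make sense when the source is close to the load.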

Placing underwater data centers near coastal energy sources would allow engineers to simplify the infrastructure. First, they could connect to a lower-voltage grid, which would eliminate some of the voltage converters on the way to the servers. Second, connecting the servers to independent power grids fed by wind or marine turbines would provide built-in fault tolerance. This would reduce both electrical losses and the capital cost of the data center architecture needed to protect against local power failures.

An additional advantage of this approach is that the only footprint on land would be a fiber-optic cable or two carrying data to and from the underwater data center.

Questions and research

The first question almost everyone asks on hearing about this idea is: how will the electronics stay dry? The truth is that keeping the inside of the server container dry is not difficult. The maritime industry learned how to keep equipment dry in the ocean long before the first computers appeared, often in far harsher conditions than anything this project will face.

The second question, which arose early on, is how to cool the servers most effectively. The project team investigated a number of exotic approaches, including special dielectric fluids and phase-change materials, as well as unusual coolants such as high-pressure helium gas and supercritical carbon dioxide. Although such approaches have their advantages, they also pose serious problems.

The team continues to study exotic cooling materials, but in the short term there is no real need for them. Water cooling with radiator-style heat exchangers gives the Natick project a very economical and efficient cooling mechanism that works well with standard servers.

Fouling and environmental influences

More important, in our opinion, is the fact that an underwater data center will attract marine life and form an artificial reef. This process of colonization by marine organisms, called biofouling, begins with single-celled creatures, followed by progressively larger organisms that feed on them, and so on up the food chain.

After 105 days in the water, parts of the capsule with the equipment became covered in marine life as a result of the biological fouling phenomenon familiar to yachtsmen.

When the researchers lowered the Natick prototype to the bottom, crabs and fish gathered around it within a day and began to settle into their new dwelling. The researchers were happy to have created a home for these creatures, so one of the main design challenges was how to preserve this habitat without hindering the underwater data center's ability to keep its servers cool enough.

In particular, biofouling of the external heat exchangers is known to interfere with the removal of heat from those surfaces. So the researchers began exploring various anti-fouling materials and coatings, and even active deterrents using sound or ultraviolet light, in the hope of making it harder for living organisms to take hold. Although the heat exchangers can be physically cleaned, relying on such interventions would be unwise given the goal of simplifying maintenance as much as possible.

Fortunately, the heat exchangers of the Natick container remained clean during the first deployment, even though they were in a very challenging environment: shallow water close to shore, where ocean life is most abundant. However, biofouling remains an area of active research, and the researchers continue to study it with a focus on approaches that will not harm the marine environment.

Equipment failure, safety and environmental impact

The biggest problem the researchers faced during testing was equipment failure, because there is no way to replace a particular server in a rack, or a hard drive, network card or other component of a server. Hardware failures can only be handled remotely and autonomously. For this reason, work is under way even in Microsoft's ordinary land-based data centers to improve failure detection and to make it possible to resolve failures without human intervention. The same methods and experience will be applied to Natick data centers in the future.

What about security? Is data safe from virtual or physical theft if it is stored under water? Absolutely. Natick will provide the same encryption and other security guarantees as Microsoft's land-based data centers. And since no one will be physically present near the containers, Natick will use sensors to monitor its surroundings, including detecting any unexpected visitors.

You may also be wondering whether the heat generated by an underwater data center will harm the local marine environment. Most likely not. Any heat generated by the Natick container will quickly mix with cool water and be carried away. Water a few meters downstream of the container will be warmer by a few thousandths of a degree at most.
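The order of magnitude of that estimate can be checked with a simple energy balance: the temperature rise equals the heat output divided by the product of water density, specific heat and the volume of water flowing past per second. The sketch below is not Microsoft's calculation. The 30 kW heat load, 0.1 m/s current and 20 m² mixing cross-section are hypothetical inputs, and the seawater properties are approximate textbook values.

```python
SEAWATER_DENSITY = 1025.0        # kg/m^3, approximate
SEAWATER_SPECIFIC_HEAT = 3990.0  # J/(kg*K), approximate


def downstream_temperature_rise(heat_w: float, current_m_s: float,
                                mixing_area_m2: float) -> float:
    """Temperature rise (K) of the water that carries heat_w away.

    Assumes the heat mixes evenly into all water crossing mixing_area_m2.
    """
    volume_flow = current_m_s * mixing_area_m2   # m^3/s
    mass_flow = SEAWATER_DENSITY * volume_flow   # kg/s
    return heat_w / (mass_flow * SEAWATER_SPECIFIC_HEAT)


# All three inputs are hypothetical and chosen only for illustration.
rise = downstream_temperature_rise(heat_w=30_000, current_m_s=0.1, mixing_area_m2=20.0)
print(f"temperature rise: {rise:.4f} K")
```

With these inputs the result is a few thousandths of a kelvin, the same scale as the figure quoted above; a stronger current or a wider mixing zone would make it smaller still.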

Therefore, the impact on the environment will be very small. This matters because many more underwater data centers will be built in the future, and people will likely start working under water to maintain and support them.

CONCLUSIONS

At first glance, the idea of running data centers on the cold ocean floor looks very attractive: a capsule filled with server racks requires constant and precise temperature control. Why not hand this job over to the cold ocean floor, where the water temperature is close to 0 degrees Celsius?

The Natick team working on this solution holds precisely this opinion. Their idea is to locate data centers in the ocean near the coast: “more than half of the world's population lives no further than 200 km from the coastline,” says one of the project's engineers in the film, explaining why the servers should be placed in the ocean near the coast.

The main goal is to shorten the distance between users and the data they request, by deploying cloud infrastructure in sealed containers within 90 days at any desired location around the world. This would cut the cost of delivering data to users and bring it closer to them, save on cooling and power, and make data centers more environmentally friendly.

Will it work? Certainly. It already has; the only question is when underwater data centers will be deployed on an industrial scale. Today Microsoft owns more than 100 data centers around the world hosting over a million servers, and spends more than $15 billion a year on IT infrastructure, so perhaps before long data centers will be built under water as well.

This article was published with the assistance of the hosting provider ua-hosting.company. We would like to take this opportunity to remind you of our promotion:

VPS (KVM) with dedicated drives (a full-fledged analog of entry-level dedicated servers from $29) in the Netherlands and the USA, free for 1-3 months for everyone, plus a 1-month bonus for geektimes

Remember that your orders and support make it possible for us to publish even more interesting material in the future. We would appreciate your feedback, criticism and orders. ua-hosting.company - happy to make you happier.

Source: https://habr.com/ru/post/323826/
