Functional safety, part 6 of 7. Evaluation of indicators of functional safety and reliability


Continuing the series of publications on functional safety, in today's article we will look at how functional safety is quantified on the basis of statistical data on random hardware failures. For this purpose we use the mathematical apparatus of reliability theory, which, as is well known, is one of the applications of probability theory. We will therefore refer from time to time to established results of reliability theory.

We will consider the following questions:


- the relationship between the attributes of reliability, information security and functional safety;

- the transition from risk analysis to the measurement of functional safety indicators;

- examples of calculating reliability and functional safety indicators.

Attributes of reliability, information security and functional safety

To better understand exactly which properties we will evaluate, let us consider the structure and interrelation of the attributes of reliability, information security and functional safety.

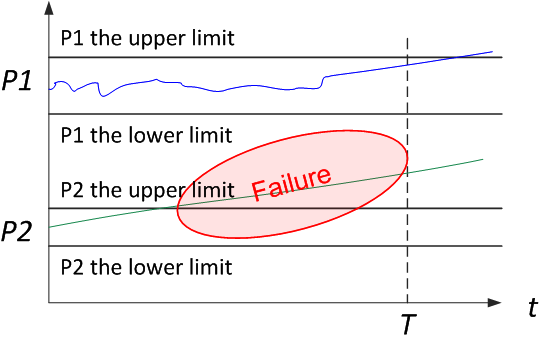

Let's start with the definition of reliability. Reliability is the property of an object to retain over time, within established limits, the values of all parameters characterizing its ability to perform the required functions in specified modes and conditions of use, maintenance, storage and transportation. This can be illustrated with a simple scheme (Figure 1). The service life and the limiting values of the parameters are specified for the system. While the parameters are within the specified limits, the system is operable; conversely, if the parameters go beyond the limits, a failure has occurred.

Figure 1. Graphical interpretation of the definition of reliability

The relationship between dependability and reliability deserves a separate comment, since Western and Soviet science once took somewhat different paths in standardizing this property. The correct English equivalent of the Russian term "nadezhnost" is dependability, since both are treated as complex (composite) properties. Reliability is the correct translation of the term "bezotkaznost" (failure-free operation), which is important but is still only one of the components of dependability. Reliability is the property of an object to remain continuously in an operable state for some time or operating time; i.e., reliability can be equated with dependability only for unattended systems.

Besides reliability, the constituent properties of dependability are maintainability, durability and storability. Availability is a combination of reliability and maintainability.

All these provisions were set out in GOST 27.002-89 "Reliability in technology. Basic concepts. Terms and definitions", one of the most coherently presented standards I have ever held in my hands. Unfortunately, the "indiscriminate" adoption of Western standards as GOST R has led to the achievements of the Soviet school of reliability now being forgotten (at least in formal standardization). In 2009, GOST R 27.002-2009 was released (for some reason it was initially numbered GOST R 53480-2009, before historical justice prevailed); it is essentially a copy of the rather old International Electrotechnical Commission vocabulary IEC 60050-191:1990. Progress does not always move forward, and you can judge the quality of the presentation yourself by comparing how the basic terms are presented (Figure 2). In Ukraine, DSTU 2860-94, which corresponds to GOST 27.002-89, is currently in force.

Figure 2. Comparative analysis of reliability attributes according to the 1989 and 2009 versions of GOST 27.002

We emphasize that we consider only random hardware failures, to which the mathematical apparatus of probability theory can be applied. Reliability theory offers a practical picture of the world in which reliable systems can be built from not entirely reliable components (as a rule, by means of redundancy and diagnostics). The situation is different with systematic failures, which obviously cannot be described within reliability theory. These failures are the biggest problem, because they are unpredictable. In the 1980s and 1990s there were attempts to apply probabilistic models to assess software reliability, operator errors and, later, information security indicators. So far, this path has not yielded practically applicable results.

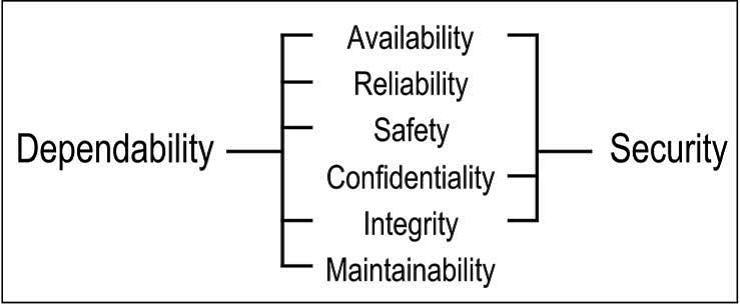

Another approach to analyzing dependability attributes is the so-called RAMS approach: Reliability, Availability, Maintainability and Safety. Sometimes Integrity is added to these four attributes (in the Russian version of IEC 61508 the word is rendered as "integrity" or "completeness"). The simplest definitions of these properties are:

- Availability is readiness for correct service;

- Reliability is continuity of correct service;

- Maintainability is the ability to undergo modifications and repairs;

- Safety is the absence of catastrophic consequences for the user and the environment;

- Integrity is the absence of improper system alterations.

Information security (IS) is a combination of the attributes of confidentiality, integrity and availability (the so-called CIA triad). Availability is considered with respect to authorized access to information, and integrity with respect to correct handling of data, excluding its unauthorized modification. Confidentiality, an attribute additional to those of dependability, means the absence of unauthorized disclosure of information. Thus, the simplest model describing dependability and security (i.e., information security) comprises only six attributes (Figure 3).

Figure 3. RAMS & CIA Attributes

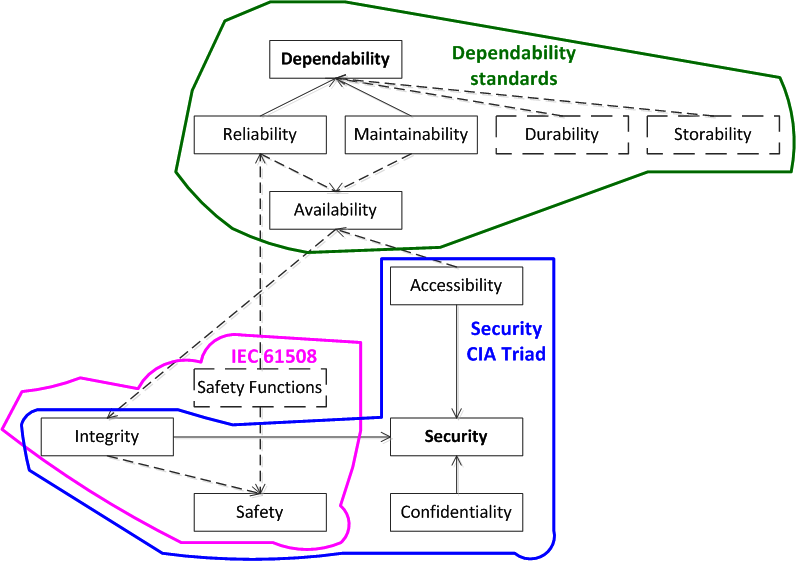

Let us now take one more step and present all the attributes discussed so far in a single diagram (Figure 4).

Figure 4. The generalized taxonomy of the attributes of reliability, information security and functional safety

Solid lines mark the attributes and links of the six-attribute model just considered; dashed lines mark the additional attributes. One group of attributes relates to dependability. Functional safety (FS), according to IEC 61508, includes safety functions and safety integrity. Through the safety functions, FS is associated with dependability, availability and reliability, while the integrity of function execution provides a number of properties, including information security. Thus, the attributes of dependability, information security and functional safety influence one another and are linked in ways that we will take into account when quantifying them.

Risk analysis and functional safety indicators

Now let's turn to safety indicators. The basic concept and indicator of functional safety is risk, which is a combination of the probability of an undesirable event and its consequences.

Risk can be assessed quantitatively or qualitatively; qualitative assessment operates with categories such as "high", "medium", "low", etc.

If the undesirable event and the damage from it are fixed, the risk becomes numerically equal to the probability P(t) that this fixed damage occurs. For example, the risk of a nuclear power plant accident with the release of radioactive substances into the atmosphere is today no more than 10⁻⁷ 1/year.

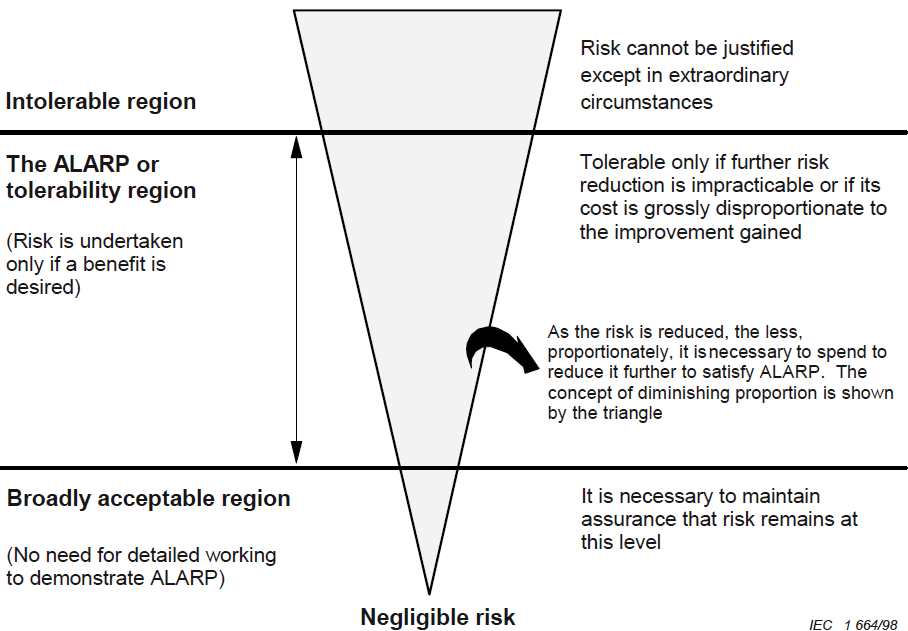

The so-called ALARP principle (as low as reasonably practicable; the related ALARA stands for "as low as reasonably achievable") is widely used for risk assessment and management. It is an approach to risk management under which risk is reduced as far as possible with the resources actually (and finitely) available (Figure 5).

Figure 5. Risk reduction based on the ALARP method (as low as reasonably practicable), IEC 61508-5

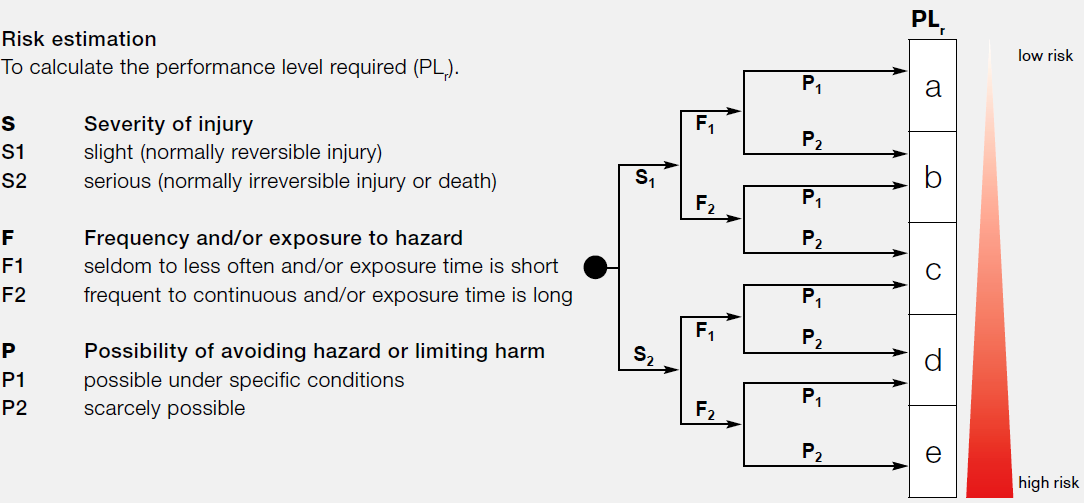

A convenient model is the risk graph (Figure 6). The example is taken from the machinery safety standard EN ISO 13849-1 (Safety of machinery. Safety-related parts of control systems. Part 1: General principles for design). In addition to the severity of the consequences and the frequency of exposure to the hazard, the possibility of avoiding the hazard is also taken into account. Each of these three parameters has a high and a low value, which gives eight combinations, each corresponding to a required Performance Level (PL) from a to e, analogous to the Safety Integrity Level (SIL).

Figure 6. Risk graph, EN ISO 13849-1
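The branching of the risk graph can be sketched as a small lookup. This is a minimal sketch under stated assumptions: the 1/2 encoding of the S (severity), F (frequency/exposure) and P (possibility of avoidance) parameters and the branch-to-PL mapping reflect my reading of the risk graph in Annex A of EN ISO 13849-1, and the function name `required_pl` is hypothetical. Check the mapping against the standard before using it.

```python
from itertools import product

PL_LEVELS = "abcde"


def required_pl(s: int, f: int, p: int) -> str:
    """Required Performance Level (a..e) for risk-graph parameters S, F, P.

    Assumed encoding (check against EN ISO 13849-1, Annex A):
      s: severity of injury, 1 = slight, 2 = serious
      f: frequency/duration of exposure, 1 = rare/short, 2 = frequent/long
      p: possibility of avoiding the hazard, 1 = possible, 2 = scarcely possible
    """
    for name, value in (("S", s), ("F", f), ("P", p)):
        if value not in (1, 2):
            raise ValueError(f"{name} must be 1 or 2, got {value}")
    # Walking the graph: choosing F2 or P2 moves one PL step up, S2 moves two steps up.
    return PL_LEVELS[2 * (s - 1) + (f - 1) + (p - 1)]


if __name__ == "__main__":
    # Enumerate all 2 x 2 x 2 = 8 branches of the risk graph.
    for s, f, p in product((1, 2), repeat=3):
        print(f"S{s} F{f} P{p} -> PL{required_pl(s, f, p)}")
```

The compact index formula is just a convenient encoding: it reproduces the eight branches (S1F1P1 gives PL a, S2F2P2 gives PL e) without spelling out a table.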

This is a qualitative approach to risk assessment. Let us now consider how the quantitative values of safety indicators are standardized in IEC 61508. For control systems, the events associated with risk are failures of the safety functions, so it is logical to choose the probabilistic indicators of safety function failure as safety indicators.

Let us return to the basic concepts of the theory of reliability. Reliability theory is an applied field of probability theory, where the time to system failure is considered as a random variable.

One of the most important indicators is the probability of failure-free operation, i.e., the probability that no failure occurs within a given operating time t; if T denotes the random time to failure, then $P(t) = \Pr\{T > t\}$. Like any probability, it takes values between 0 and 1: it equals one at the initial moment and tends to zero as time tends to infinity.

The probability of failure is the probability that a failure occurs within time t. Since a failure either occurs or does not (the two events form a complete group), the probability of failure complements the probability of failure-free operation to one: $F(t) = 1 - P(t)$.

The failure rate λ(t) is the conditional probability density of the time to failure, given that no failure has occurred up to time t; it has the dimension 1/hour. With $f(t) = dF(t)/dt$ denoting the probability density of the time to failure:

$$\lambda(t) = \frac{f(t)}{P(t)} = -\frac{1}{P(t)}\,\frac{dP(t)}{dt} = \frac{1}{1 - F(t)}\,\frac{dF(t)}{dt}$$

In statistical evaluation, the failure rate is estimated as the number of failures of products of the same type divided by the length of the time interval during which they were observed (for example, if 10 products failed within 1000 hours, then λ = 10/1000 = 0.01 1/hour); for a population of items, this count is additionally divided by the average number of items that remained operable during the interval.
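The two statistical estimates can be sketched as follows. The first function reproduces the simplified ratio from the example; the second is the per-item estimate commonly given in reliability textbooks (failures divided by the average number of operable items and by the interval length). Both function names and the population figures are hypothetical, chosen only for illustration.

```python
def failure_rate_simple(n_failures: int, interval_hours: float) -> float:
    """Simplified estimate from the text: observed failures per hour of observation."""
    return n_failures / interval_hours


def failure_rate_per_item(n_failures: int, n_operable_avg: float, interval_hours: float) -> float:
    """Per-item estimate: failures / (average number of operable items * interval length)."""
    if n_operable_avg <= 0 or interval_hours <= 0:
        raise ValueError("item count and interval must be positive")
    return n_failures / (n_operable_avg * interval_hours)


print(failure_rate_simple(10, 1000))  # 0.01 1/hour, the example from the text

# Hypothetical population: 100 items at the start, 90 still operable after 1000 h.
n_avg = (100 + 90) / 2
print(failure_rate_per_item(10, n_avg, 1000))  # about 1.05e-4 1/hour per item
```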

An important assumption in reliability theory is the so-called exponential distribution of time to failure, in which the failure rate is considered constant over time: $\lambda(t) = \lambda$.

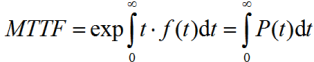

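The mean time to failure (MTTF) is calculated as the definite integral of the probability of failure-free operation from zero to infinity:

$$\mathrm{MTTF} = \int_0^{\infty} P(t)\,dt$$

For the exponential distribution, $P(t) = e^{-\lambda t}$ and therefore $\mathrm{MTTF} = 1/\lambda$.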

MTTF is sometimes treated as a guaranteed operating time of the system, but this is not the case: for the exponential distribution, the probability of failure-free operation at t = MTTF is $e^{-1} \approx 0.37$. For a single device, this means that the probability that it is still operable once the MTTF has elapsed is only 0.37. For a group of devices of the same type, it means that only about 37% of them remain operable after the MTTF.
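A quick numerical check of the two statements above, assuming the exponential model with a hypothetical constant failure rate λ = 10⁻⁴ 1/hour: integrating P(t) numerically gives MTTF close to 1/λ, and P(MTTF) equals e⁻¹. The trapezoidal rule and the truncation at 50 mean lifetimes are my own choices for the sketch; the tail beyond that point is negligible.

```python
import math


def reliability(t: float, lam: float) -> float:
    """Probability of failure-free operation P(t) = exp(-lambda * t) for a constant failure rate."""
    return math.exp(-lam * t)


def mttf_numeric(lam: float, steps: int = 100_000) -> float:
    """MTTF as the integral of P(t) from 0 to infinity (trapezoidal rule, truncated at 50 / lambda)."""
    t_max = 50.0 / lam
    h = t_max / steps
    total = 0.5 * (reliability(0.0, lam) + reliability(t_max, lam))
    for i in range(1, steps):
        total += reliability(i * h, lam)
    return total * h


lam = 1e-4  # hypothetical constant failure rate, 1/hour
mttf = mttf_numeric(lam)
print(round(mttf))                          # 10000 hours, i.e. 1 / lambda
print(round(reliability(mttf, lam), 4))     # 0.3679, i.e. 1 / e
```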

The availability factor is the probability that the object is in an operable state at an arbitrary moment in time, excluding planned periods during which the object is not intended to be used. It is calculated as the ratio of the mean time to failure (MTTF) to the sum of the MTTF and the mean time to restore after failure (MTTR):

A = MTTF / (MTTF + MTTR).
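The availability formula takes one line of code; the sketch below also prints the unavailability 1 − A, which is the quantity that later reappears in the low demand safety measure. The MTTF and MTTR figures are hypothetical.

```python
def availability(mttf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability factor A = MTTF / (MTTF + MTTR)."""
    if mttf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTTF must be positive and MTTR non-negative")
    return mttf_hours / (mttf_hours + mttr_hours)


# Hypothetical figures: MTTF = 10 000 h, MTTR = 8 h
a = availability(10_000, 8)
print(f"A = {a:.6f}, unavailability = {1 - a:.2e}")
```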

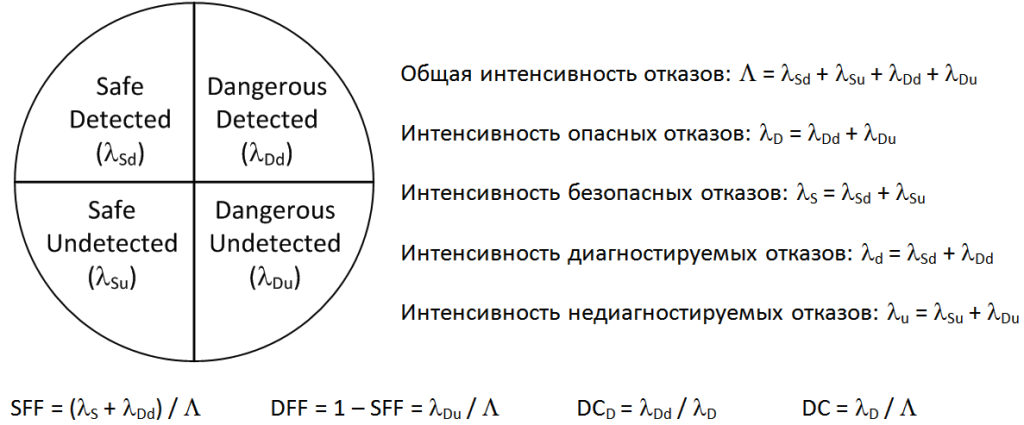

To understand the relationship between reliability and safety, let us turn to the classification of failures in IEC 61508 (Figure 7). Failures can be dangerous or safe, and detected (diagnosed) or undetected. From the reliability point of view, all types of failures are considered. From the safety point of view, we are interested only in dangerous failures, and it is important that such failures be detectable, so that when one is detected the system can go into a safe state.

Figure 7. Failure classification and safety performance in accordance with IEC 61508

IEC 61508 defines the following safety indicators.

First, there is hardware fault tolerance (HFT). This is a very simple indicator showing how many hardware faults the system can tolerate while still performing its function. In essence, it equals the number of additional redundant channels. If the system is non-redundant, any failure disables it, so HFT = 0. If the system has two redundant channels, one of them is additional; after a single failure the system remains operational, i.e. HFT = 1, and so on.
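The "number of additional channels" rule can be written down for M-out-of-N (MooN) architectures. This sketch assumes the usual MooN convention, where N channels are installed and M of them must be healthy for the safety function to be performed; the function name `hft` is hypothetical.

```python
def hft(m: int, n: int) -> int:
    """Hardware fault tolerance of an MooN architecture.

    Assumes the usual MooN convention: n channels, of which m must be healthy
    for the safety function to be performed, so n - m faults are tolerated.
    """
    if not 1 <= m <= n:
        raise ValueError("expected 1 <= m <= n")
    return n - m


for m, n in [(1, 1), (1, 2), (2, 3), (1, 3)]:
    print(f"{m}oo{n}: HFT = {hft(m, n)}")
```

A single channel (1oo1) gives HFT = 0 and a duplicated 1oo2 system gives HFT = 1, matching the cases described above.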

Second, the safe failure fraction (SFF) should be determined. In IEC 61508 terms, this is the ratio of the rate of safe failures plus dangerous detected failures to the total failure rate (see Figure 7). In other words, IEC 61508 is primarily concerned with dangerous undetected failures, while dangerous detected failures are counted as safe in the safe failure fraction.

Accordingly, the dangerous failure fraction (DFF) can be defined as the complement of the safe failure fraction to one, i.e., the ratio of the rate of dangerous undetected failures to the total failure rate (see Figure 7).

In IEC 61508, diagnostic coverage (DC_D) is determined only from the rates of dangerous failures: it is the ratio of the rate of dangerous detected failures to the rate of all dangerous failures (see Figure 7).

In technical diagnostics, a more familiar approach defines diagnostic coverage (DC) as the ratio of the rate of detected failures to the total failure rate (see Figure 7). IEC 61508, however, defines diagnostic coverage in terms of the fractional decrease in the probability of dangerous failures achieved by built-in diagnostics.
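The four ratios above can be computed side by side from the failure rate breakdown of Figure 7. This is a minimal sketch: the class name and the split into λ_SD, λ_SU, λ_DD and λ_DU are my own labelling of that classification, and the example rates are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FailureRates:
    """Failure rates in 1/hour, split into safe/dangerous and detected/undetected."""
    safe_detected: float         # lambda_SD
    safe_undetected: float       # lambda_SU
    dangerous_detected: float    # lambda_DD
    dangerous_undetected: float  # lambda_DU

    @property
    def total(self) -> float:
        return (self.safe_detected + self.safe_undetected
                + self.dangerous_detected + self.dangerous_undetected)

    @property
    def dangerous(self) -> float:
        return self.dangerous_detected + self.dangerous_undetected

    def sff(self) -> float:
        """Safe failure fraction: (lambda_S + lambda_DD) / lambda_total."""
        return (self.safe_detected + self.safe_undetected + self.dangerous_detected) / self.total

    def dff(self) -> float:
        """Dangerous failure fraction: lambda_DU / lambda_total, i.e. 1 - SFF."""
        return self.dangerous_undetected / self.total

    def dc_dangerous(self) -> float:
        """IEC 61508 diagnostic coverage DC_D: lambda_DD / lambda_D."""
        return self.dangerous_detected / self.dangerous

    def dc_all(self) -> float:
        """'Classical' diagnostic coverage: all detected failures / lambda_total."""
        return (self.safe_detected + self.dangerous_detected) / self.total


# Hypothetical rates for a single channel, 1/hour
rates = FailureRates(safe_detected=2e-7, safe_undetected=3e-7,
                     dangerous_detected=4.5e-7, dangerous_undetected=0.5e-7)
print(f"SFF = {rates.sff():.1%}, DFF = {rates.dff():.1%}")
print(f"DC_D = {rates.dc_dangerous():.1%}, DC = {rates.dc_all():.1%}")
```

With these numbers the two coverage definitions differ noticeably (90% versus 65%), which is why it matters which one a datasheet quotes.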

From the resulting safe failure fraction, the maximum achievable safety integrity level (SIL) can be determined for a redundant or non-redundant configuration (Figure 8).

Figure 8. Maximum achievable SIL based on Safe Failure Fraction (SFF) and Hardware Fault Tolerance (HFT), IEC 61508-2

For example, with a safe failure fraction of 90% to 99%, a non-redundant configuration (HFT = 0) can achieve at most SIL2, a duplicated system (HFT = 1) SIL3, and a triplicated system (HFT = 2) SIL4. This approach is typically used by developers of PLCs and other equipment for safety control systems: the tolerance of such equipment to random hardware failures typically corresponds to SIL2 for a non-redundant configuration and SIL3 for a duplicated one. It should be remembered, however, that in this case the tolerance to systematic failures, which is ensured by the life-cycle processes, must also correspond to SIL3.

Another gradation established in IEC 61508 is the separation of equipment into types A and B (Type A & Type B). Type A includes the simplest, mostly mechanical and electrical components. All programmable electronic components are Type B.
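The SFF/HFT table of Figure 8 can be encoded as a lookup for both element types. The values below are how I recall the "route 1H" architectural constraint tables of IEC 61508-2 (one table for Type A, one for Type B); treat them as an assumption to verify against the standard. The Type B row for SFF of 90% to 99% reproduces the example above (SIL2, SIL3, SIL4 for HFT 0, 1, 2).

```python
# Maximum SIL by SFF and HFT, as recalled from IEC 61508-2 (verify before use).
# Rows: SFF < 60 %, 60-90 %, 90-99 %, >= 99 %; columns: HFT = 0, 1, 2.
# 0 means "not allowed".
MAX_SIL = {
    "A": [
        [1, 2, 3],
        [2, 3, 4],
        [3, 4, 4],
        [3, 4, 4],
    ],
    "B": [
        [0, 1, 2],
        [1, 2, 3],
        [2, 3, 4],
        [3, 4, 4],
    ],
}


def max_sil(sff: float, hft: int, element_type: str = "B") -> int:
    """Maximum achievable SIL for a safe failure fraction (0..1), HFT and element type."""
    if element_type not in MAX_SIL:
        raise ValueError("element_type must be 'A' or 'B'")
    if not 0.0 <= sff <= 1.0:
        raise ValueError("SFF must be a fraction between 0 and 1")
    if hft not in (0, 1, 2):
        raise ValueError("the tables cover HFT = 0, 1, 2")
    if sff < 0.60:
        row = 0
    elif sff < 0.90:
        row = 1
    elif sff < 0.99:
        row = 2
    else:
        row = 3
    return MAX_SIL[element_type][row][hft]


# Programmable electronics are Type B: SFF = 95 % for HFT = 0, 1, 2
print([max_sil(0.95, h) for h in (0, 1, 2)])
```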

In addition to the considered requirements, there are also requirements for the numerical values of safety indicators.

From the basic definitions of IEC 61508, recall that there are three modes of operation: low demand mode, in which demands on the safety function occur no more than once per year; high demand mode, in which demands occur more than once per year; and continuous mode. IEC 61508 specifies different target reliability measures for these modes.

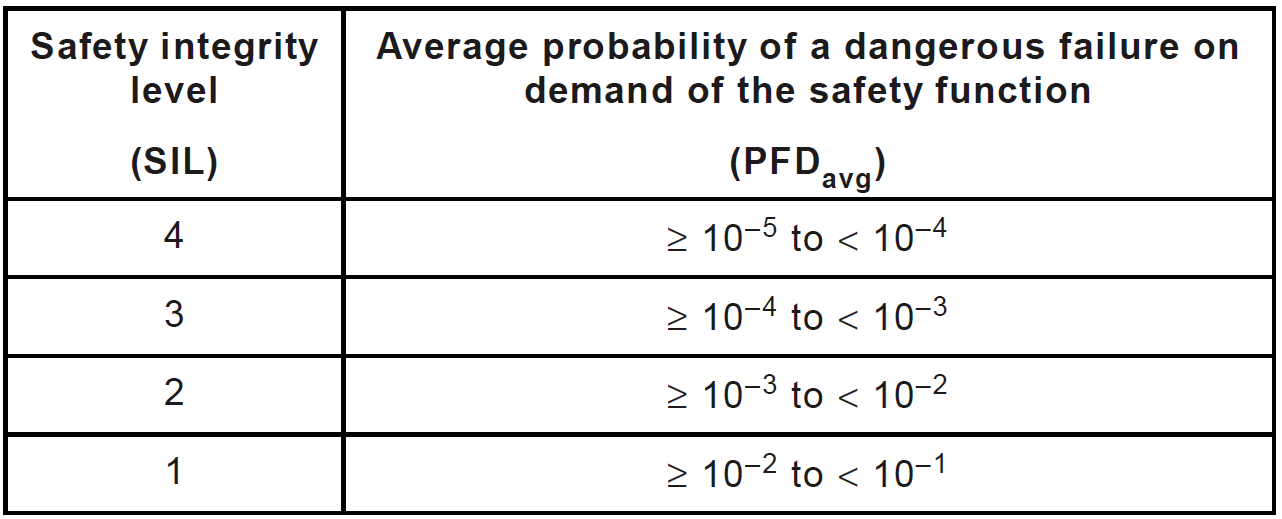

For systems operating in low demand mode, the target measure is the average probability of dangerous failure of the safety function on demand (Figure 9). For SIL1, this measure must not exceed 0.1. With each increase in SIL, the probability of dangerous failure must decrease by a factor of 10, so for SIL4 it must lie between 10⁻⁵ and 10⁻⁴.

If we draw a parallel with the indicators already considered, this measure is equivalent to the unavailability factor, i.e., the complement of the availability factor to one. It should be remembered, however, that it covers not all failures but only dangerous undetected ones.

Figure 9. Dependence of the SIL level on the value of the average probability of the dangerous failure of an on-demand safety function (low demand mode), IEC 61508-1
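The low demand bands of Figure 9 can be sketched as a lookup: SIL1 is bounded above by 10⁻¹, and each higher SIL shifts the band down by a factor of 10. The function name is hypothetical, and returning 0 for values outside the tabulated bands is my own convention.

```python
def sil_from_pfd_avg(pfd_avg: float) -> int:
    """SIL band for low demand mode from the average probability of dangerous failure on demand.

    Returns 0 if the value lies outside the SIL 1..4 bands.
    """
    bands = [(4, 1e-5, 1e-4), (3, 1e-4, 1e-3), (2, 1e-3, 1e-2), (1, 1e-2, 1e-1)]
    for sil, low, high in bands:
        if low <= pfd_avg < high:
            return sil
    return 0


for value in (0.05, 5e-3, 5e-4, 5e-5):
    print(f"PFDavg = {value:g} -> SIL{sil_from_pfd_avg(value)}")
```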

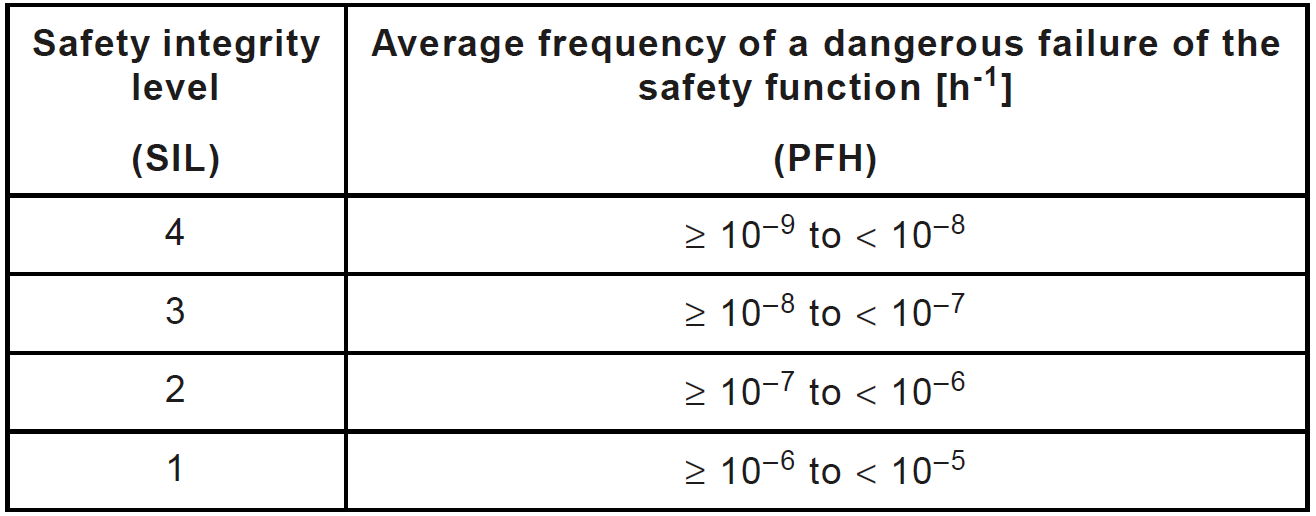

For systems operating in high demand or continuous mode, the target measure is the average frequency (rate) of dangerous failures of the safety function (Figure 10). For SIL1, this must not exceed 10⁻⁵ 1/hour, which corresponds to one failure per 11.4 years. With each increase in SIL, the dangerous failure rate must decrease by a factor of 10. For SIL4, it must lie between 10⁻⁹ and 10⁻⁸ 1/hour, i.e., no more than one failure per roughly 11,400 years. For a single system this may sound somewhat absurd, but since thousands of systems of the same type are in use worldwide, dangerous failures remain quite likely across the installed base even at such low rates, which is what we observe in practice.

This measure corresponds to the rate of dangerous undetected failures.

Figure 10. Dependence of the SIL level on the value of the average intensity of dangerous failures of the safety function (high demand mode and continuous mode), IEC 61508-1
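The same kind of lookup works for the high demand and continuous mode bands of Figure 10. The sketch also converts a rate into years between failures (assuming 8760 hours per year) and estimates expected failures across a fleet, to illustrate the point about thousands of identical systems; the fleet size of 5000 is a hypothetical figure.

```python
HOURS_PER_YEAR = 8760  # 365 days


def sil_from_pfh(pfh: float) -> int:
    """SIL band for high demand / continuous mode (average dangerous failure rate, 1/hour).

    Returns 0 if the value lies outside the SIL 1..4 bands.
    """
    bands = [(4, 1e-9, 1e-8), (3, 1e-8, 1e-7), (2, 1e-7, 1e-6), (1, 1e-6, 1e-5)]
    for sil, low, high in bands:
        if low <= pfh < high:
            return sil
    return 0


def years_per_failure(rate_per_hour: float) -> float:
    """Mean interval between failures, in years, for a constant rate."""
    return 1.0 / (rate_per_hour * HOURS_PER_YEAR)


def expected_fleet_failures(n_systems: int, rate_per_hour: float, hours: float) -> float:
    """Expected number of dangerous failures across a fleet of identical systems."""
    return n_systems * rate_per_hour * hours


print(round(years_per_failure(1e-5), 1))  # 11.4 years: upper bound of the SIL1 band
print(round(years_per_failure(1e-8)))     # about 11 400 years: upper bound of the SIL4 band
# Hypothetical fleet: 5000 systems at 1e-8 1/hour, observed for one year
print(expected_fleet_failures(5000, 1e-8, HOURS_PER_YEAR))
```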

All the tasks of calculating safety indicators are brought together in failure mode, effects and criticality analysis (FMECA). The main provisions of this method are described in IEC 60812:2006 "Analysis techniques for system reliability - Procedure for failure mode and effects analysis (FMEA)". In the Russian Federation, GOST R 51901.12-2007 "Risk management. Method of failure mode and effects analysis", an adaptation of IEC 60812, is in force.

Input data for FMECA can be obtained using methods such as reliability block diagrams, fault tree analysis and Markov analysis.

Examples of calculating indicators of functional safety and reliability

Let us now consider several examples of determining safety indicators; I have adapted them somewhat from the examples given by Yu.N. Fedorov.

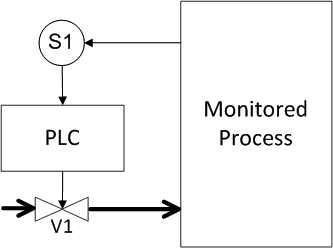

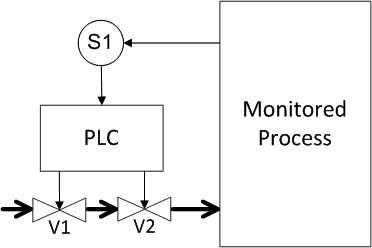

Suppose we need to assess the safety of a simple process control system. We have a vessel (for example, a boiler) with a pressure sensor, into which a liquid flows through a pipe (Figure 11). If the set pressure is exceeded, the shut-off valve must operate and cut off the liquid supply to the vessel. A programmable logic controller (PLC) processes the sensor signal and issues the trip command to the valve. To be specific, let the probability of failure of both the sensor and the valve be 10⁻³. To make it easier to compare approaches to field equipment redundancy, we leave the PLC out of the analysis, i.e., we assume that the PLC is absolutely reliable and do not take its influence into account.

Equipment failures can be of two types: first, a dangerous failure, when the equipment fails to operate when it should; second, a false positive (spurious trip), when the equipment operates when it should not. We assume the same probability for both types of failure.

Now a few words about one of the gaps in IEC 61508. IEC 61508 sets no requirements for the reliability and availability of control systems; it contains only requirements for safety. It might seem that these are the same thing: the more reliable the system, the safer it is. However, this is not entirely true: a system that does not function at all is absolutely safe. The engineering task in developing safety systems includes optimizing both safety (the probability of dangerous failure) and availability (the probability of a false positive). In our example, we compare the simplest control system architectures in terms of both the probability of dangerous failures and the probability of false positives.

Figure 11. Example 1: Non-redundant system

Determine the probability of a dangerous failure and the probability of a false positive.

Answer for Figure 11

A dangerous failure occurs if either the sensor or the valve fails. In this case the probabilities add up (to a good approximation, since they are small), so the probability of a dangerous failure of the system is 2·10⁻³. The probability of a false positive is determined by exactly the same pattern of events and is also 2·10⁻³.
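Adding the probabilities is the rare-event approximation. The exact probability that at least one of two independent components fails is 1 − (1 − p)², which for p = 10⁻³ differs from 2·10⁻³ only in the fourth significant digit. A minimal sketch, assuming independent failures; the function name is hypothetical.

```python
def series_failure_probability(*probabilities: float) -> float:
    """Exact probability that at least one of several independent components fails."""
    survive = 1.0
    for p in probabilities:
        survive *= 1.0 - p
    return 1.0 - survive


p = 1e-3                                  # sensor and valve, as in the example
exact = series_failure_probability(p, p)  # sensor OR valve fails
approx = p + p                            # rare-event approximation used in the text
print(f"exact = {exact:.6e}, approx = {approx:.6e}")
```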

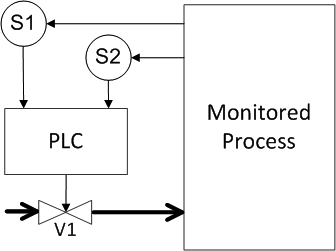

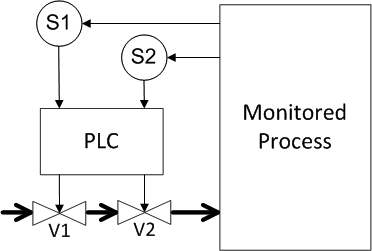

Now let us determine the probabilities for different types of redundancy. First, we make the sensor redundant (Figure 12). We assume that the redundant components are identical, i.e., their failure probabilities are equal. Try to determine the probabilities of a dangerous failure and of a false positive in this case.

Figure 12. Example 2: Sensor redundancy

Determine the probability of a dangerous failure and the probability of a false positive.

Answer for Figure 12

A dangerous failure occurs if either both sensors or the valve fail. The probabilities of sensor failures are multiplied, because both sensors must fail for the system to fail. Adding the probability of valve failure to this product gives 10⁻⁶ + 10⁻³ = 1.001·10⁻³, i.e., a value close to 10⁻³. Thus, making only one component redundant has halved the probability of dangerous failures. Now what happens to false positives? The probability of a false positive has increased by a factor of one and a half compared with the original scheme, since a false positive can be caused by any of the three components; the probabilities therefore add up, giving 3·10⁻³.

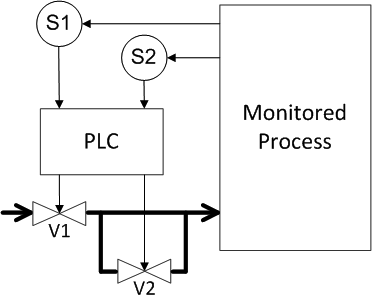

Now let us consider what happens if the valve, rather than the sensor, is made redundant (Figure 13). What are the probabilities equal to?

Figure 13. Example 3: Valve Redundancy

Determine the probability of a dangerous failure and the probability of a false positive.

Answer for Figure 13

As expected, the result is identical to the previous redundancy scheme: the probability of a dangerous failure is 1.001·10⁻³ and the probability of a false positive is 3·10⁻³.

Now consider the scheme in which both the sensors and the valves are redundant. We assume that the data from each sensor is used to generate a control signal for each of the valves (Figure 14). What do we get?

Figure 14. Example 4: Sensor and valve redundancy (1st method)

Determine the probability of a dangerous failure and the probability of a false positive.

Answer for Figure 14

A dangerous failure will occur if both sensors fail or both valves fail. Thus, the failure probabilities are multiplied for the sensors and for the valves, and the two results are added up: we get 2·10⁻⁶, i.e., the probability of dangerous failures has been reduced by a factor of 1000 compared with the original non-redundant system. But a false positive will occur if any of the system components trips falsely, i.e., all the failure probabilities add up and we get 4·10⁻³. In other words, paradoxical as it may sound, in this safety system redundancy has doubled the probability of false positives compared with the original system, i.e., reduced its availability.

For shut-off valves, another type of redundancy is possible: the valves are installed in parallel, and the supply of product to the vessel is stopped only when both valves close (Figure 15). How should the probabilities of a dangerous failure and of a false positive be determined in this case?

Figure 15. Example 5: Sensor and valve redundancy (method 2)

Determine the probability of a dangerous failure and the probability of a false positive.

Answer for Figure 15

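A dangerous failure occurs if both sensors fail or if either of the valves fails. The probabilities therefore add up: 10⁻⁶ + 2·10⁻³ = 2.001·10⁻³. A false positive occurs if either sensor trips falsely or both valves close falsely, so its probability is also 2·10⁻³ + 10⁻⁶ = 2.001·10⁻³.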

Thus, in safety systems it is necessary to analyze not only safety indicators but also reliability indicators, and to choose the structure taking all the available information into account. Otherwise, the system will be safe, but its operation may not be economically feasible.
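The five architectures can be compared in one pass by writing each one as a pair of structure functions (when does the system fail dangerously, when does it trip falsely) and enumerating all component states. This is a sketch under the example's assumptions: independent components, each with probability 10⁻³ for both failure types, and the PLC ignored. Because the enumeration is exact, the printed values are slightly below the sums obtained above (for instance 1.999·10⁻³ instead of 2·10⁻³), which were computed with the rare-event approximation. The names `prob_system_fails` and `ARCHITECTURES` are hypothetical.

```python
from itertools import product
from typing import Callable, Sequence

P = 1e-3  # failure probability of each sensor and valve, for both failure types


def prob_system_fails(n: int, fails: Callable[[Sequence[bool]], bool], p: float = P) -> float:
    """Exact probability that the structure function 'fails' is true for n independent components."""
    total = 0.0
    for state in product((False, True), repeat=n):  # True = this component has failed
        weight = 1.0
        for failed in state:
            weight *= p if failed else 1.0 - p
        if fails(state):
            total += weight
    return total


# Component order in each state tuple: sensors first, then valves.
# Each entry: (number of components, dangerous-failure condition, false-positive condition)
ARCHITECTURES = {
    "1: sensor + valve": (
        2, lambda c: c[0] or c[1], lambda c: c[0] or c[1]),
    "2: 2 sensors + valve": (
        3, lambda c: (c[0] and c[1]) or c[2], lambda c: c[0] or c[1] or c[2]),
    "3: sensor + 2 series valves": (
        3, lambda c: c[0] or (c[1] and c[2]), lambda c: c[0] or c[1] or c[2]),
    "4: 2 sensors + 2 series valves": (
        4, lambda c: (c[0] and c[1]) or (c[2] and c[3]), lambda c: any(c)),
    "5: 2 sensors + 2 parallel valves": (
        4, lambda c: (c[0] and c[1]) or c[2] or c[3], lambda c: c[0] or c[1] or (c[2] and c[3])),
}

for name, (n, dangerous, false_positive) in ARCHITECTURES.items():
    pd = prob_system_fails(n, dangerous)
    pf = prob_system_fails(n, false_positive)
    print(f"{name:34s} dangerous = {pd:.3e}  false positive = {pf:.3e}")
```

Listing both columns together makes the trade-off visible at a glance: architecture 4 is by far the safest, but it also has the highest false positive probability.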

Conclusions

Today we looked at how functional safety indicators are quantified.

Functional safety attributes, which include safety functions and safety integrity, are part of a broader system of attributes that also includes information security and reliability.

Functional safety indicators are also related to information security and reliability indicators. When developing safety systems, it is necessary to carry out a comprehensive analysis of the measurable indicators and to identify possible conflicts between properties, where optimization and trade-offs are required.

When evaluating and ensuring information security, probabilistic indicators can be used, first of all, to analyze the availability (readiness) of certain physical devices.

Risk is a universal indicator of functional safety. Depending on the operating mode of the system, risk can be translated into a target value of the unavailability factor (the average probability of dangerous failure on demand) or of the dangerous failure rate, both of which depend on the safety integrity level (SIL).

Analysis of types, consequences and criticality of failures (FMECA) is the most effective approach for quantitative and qualitative assessment of safety.

P.S. To explain the main aspects of functional safety, the following series of articles is being developed:

- Introduction to the subject of functional safety;

- IEC 61508 standard: terminology;

- IEC 61508 standard: structure of requirements;

- The relationship between information security and functional safety of process control systems;

- Management processes and functional safety assessment;

- The life cycle of information security and functional safety;

- Reliability theory and functional safety: basic terms and indicators;

- Methods for ensuring functional safety.


Source: https://habr.com/ru/post/323776/
