When the “cloud” goes down: what can you do about it?

Recently, problems with the Amazon S3 service caused a real "cloudpocalypse". The outage took down a large number of sites and services belonging to companies that are Amazon customers. The problems began on the evening of February 28, as became clear from social networks. Then reports of outages at Quora, IFTTT, Sailthru, Business Insider, Giphy, Medium, Slack, Coursera, and others began to appear in large numbers.

It was not only services and websites that failed: many IoT devices could no longer be controlled over the Internet (in particular because IFTTT was down). Most interesting of all, the status of Amazon S3 was shown as normal until the last moment. But many hundreds or even thousands of companies whose resources were affected realized that sooner or later even a very reliable “cloud” can collapse and bury everyone under its debris. Can anything be done in this situation?

Information security experts say yes. How to do it is a harder question, and it has several answers.


The ways a company can keep its servers out of trouble when the cloud they run in goes down are quite different from the methods data centers use to improve uptime and "stress tolerance" (for example, duplicating various systems). To protect your services and remote data, you can keep copies on virtual machines in data centers in different regions, and use a database that spans several data centers.

This approach also works within a single provider, but it is more reliable to use other cloud companies, such as Microsoft Azure or the Google Cloud Platform, in addition to AWS. This is clearly more expensive, so, as usual, you have to decide whether the game is worth the candle. If it is, for example because the service or site has to work all the time, then such precautions make sense. This can be called a “multi-cloud” architecture.
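As a rough illustration of the idea, here is a minimal sketch of reading an object from several storage backends in order of preference. The function `fetch_with_failover`, the exception `AllBackendsFailed`, and the backend names are hypothetical; in a real setup each fetch function would wrap the SDK of the corresponding provider, and the copies would have to be kept in sync separately.

```python
from typing import Callable, Iterable, Tuple


class AllBackendsFailed(Exception):
    """Raised when none of the configured backends could return the object."""


def fetch_with_failover(
    key: str,
    backends: Iterable[Tuple[str, Callable[[str], bytes]]],
) -> Tuple[str, bytes]:
    """Try each (name, fetch) pair in order and return the first success.

    Returns the name of the backend that answered together with the data,
    so the caller can log when a fallback copy was used.
    """
    errors = []
    for name, fetch in backends:
        try:
            return name, fetch(key)
        except Exception as exc:  # any failure means "try the next copy"
            errors.append(f"{name}: {exc}")
    raise AllBackendsFailed("; ".join(errors))
```

The order of the list encodes the preference: the primary cloud first, then the copies in other regions or at other providers.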

In many cases, you can protect yourself by using a CDN provider such as Cloudflare (by the way, we will soon launch our own CDN), which keeps copies of important data that is stored with other companies.

After the Amazon S3 outage, AWS customers who used Cloudflare had almost no problems.
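The reason a caching layer helps can be shown with a small sketch of the "serve a stale copy when the origin fails" pattern. This is a generic illustration, not a description of how Cloudflare works internally; the class `StaleOnErrorCache` and its parameters are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Tuple


class StaleOnErrorCache:
    """Cache that falls back to an expired copy if the origin is unreachable."""

    def __init__(
        self,
        fetch_origin: Callable[[str], Any],
        ttl_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[Any, float]] = {}  # key -> (value, stored_at)

    def get(self, key: str) -> Any:
        now = self._clock()
        cached = self._store.get(key)
        # A fresh copy is served without touching the origin.
        if cached is not None and now - cached[1] < self._ttl:
            return cached[0]
        try:
            value = self._fetch(key)
        except Exception:
            # Origin is down: an expired copy is better than an error page.
            if cached is not None:
                return cached[0]
            raise
        self._store[key] = (value, now)
        return value
```

Content that was never requested before the outage is not in the cache, so this protects only data that has already been served at least once.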

Recall that it all started with S3 in 2006: cloud file storage was one of the first public services that Amazon launched. Virtual machines (EC2) appeared later.

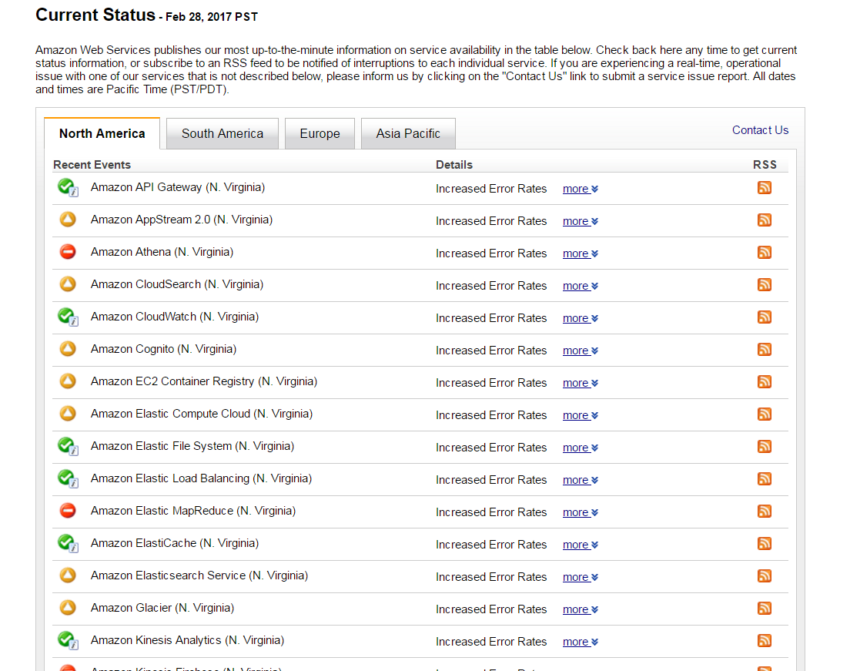

Here is another screenshot, from an Amazon customer, a Russian IT company. 45 of their services were down:

Data can even be stored in trucks (also from Amazon), but this is not always a solution.

Multi-cloud infrastructure is now being adopted by a growing number of companies whose services and sites must operate continuously. Of course, duplication costs money, but in some cases the losses from downtime can significantly exceed the cost of duplication. Today, duplicating cloud data and securing that data against attackers are the two main problems.

Analysts say that a number of companies no longer want to stay within a single provider's cloud, so they are trying to duplicate their systems in different clouds. This trend is becoming more pronounced.

By the way, multi-cloud is not always a panacea either. Many companies now say that they use this model, but they may use different clouds for different purposes: for example, AWS for development and testing, and Google's cloud for deploying the service and keeping it running. In that setup, neither cloud serves as a backup for the other.

Another related trend is the growing number of container and orchestration tools, such as Docker, Kubernetes, and Mesosphere DC/OS. They are worth trying out in practice: with them, a multi-cloud infrastructure is much easier to organize than without.

Human factor

As always, this is the main problem. It was human error that brought down the Amazon servers, as the company admitted on its website. A team of engineers was debugging the billing system, which required taking a number of servers offline. Because of a typo, far more servers were taken offline than intended.

It is hard to call this a catastrophe, but the entire metadata management system of a large region failed. Amazon has since protected its servers by adding a number of safeguards that prevent a shutdown command from being triggered incorrectly by a typo.

But who knows what awaits us in the future, and what else a small typo can affect? This is where multi-cloud can show its best side, keeping the services and websites of companies that took care of their safety in advance up and running.
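A safeguard of this kind can be pictured as a check that runs before any servers are taken offline. The sketch below is purely hypothetical and does not reproduce Amazon's tooling: the function `plan_removal`, the exception `UnsafeRemoval`, and the two limits are assumptions chosen for illustration.

```python
class UnsafeRemoval(Exception):
    """Raised when a removal request would violate a safety limit."""


def plan_removal(active: int, to_remove: int, min_required: int, max_batch: int) -> int:
    """Validate a request to take servers offline and return the remaining count.

    active       -- servers currently in service
    to_remove    -- servers the operator asked to take offline
    min_required -- capacity floor the subsystem must never drop below
    max_batch    -- largest number of servers one command may remove
    """
    if to_remove <= 0:
        raise ValueError("to_remove must be positive")
    # A mistyped number (50 instead of 5) is caught by the batch limit.
    if to_remove > max_batch:
        raise UnsafeRemoval(f"refusing to remove {to_remove} servers, limit is {max_batch}")
    remaining = active - to_remove
    # Even a valid batch must not push capacity below the floor.
    if remaining < min_required:
        raise UnsafeRemoval(f"only {remaining} servers would remain, need {min_required}")
    return remaining
```

With checks like these, a typo turns into a rejected command instead of an outage.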

Source: https://habr.com/ru/post/323660/
