10 Docker Myths That Scare Developers

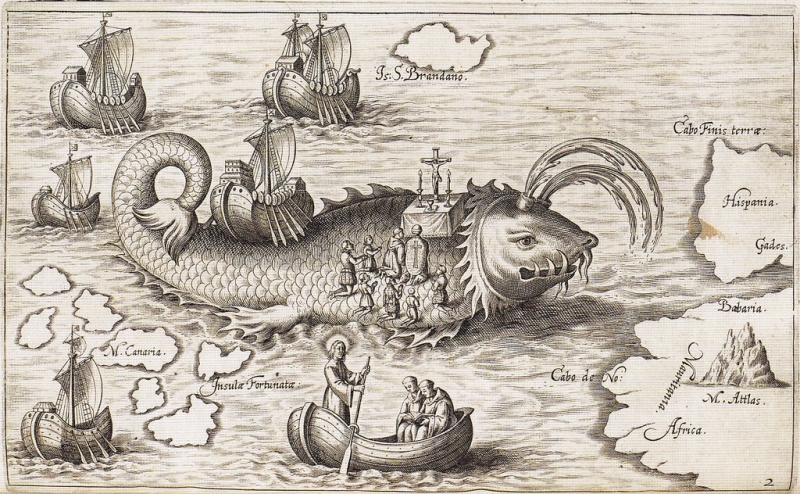

Source: 'Nova typis transacta navigatio' (Linz: sn, 1621), p.12 (British Library, G.7237).

In conversations about Docker, I often hear opinions that I do not quite agree with.

“Docker is essentially designed for large companies.”

“On OS X it has only experimental support, and on Windows it barely runs.”

“I'm not sure I can quickly deploy it locally.”

... and much more.

There is a grain of truth in these statements (see myths 3 and 5 below), but it is a small one, and for the most part they distort the real picture.

Then there are the jargon-filled articles about handling 10k requests per second with a long list of frameworks, and doing it with only 30k containers that automate 5k microservices hosted on six hundred cloud virtual machines ...

Well, it is not difficult to guess why Docker is surrounded by so many myths.

Unfortunately, these myths are very tenacious. And their main achievement is that they frighten developers and prevent them from using Docker.

Let's talk about the most common myths - those that I encountered and believed in - and try to find the truth in them, as well as solutions, if any.

Myth number 10: With Docker, I will not be able to develop ...

because I can't edit the Dockerfile

For development I need special tools and environment settings. At the same time, I was told, quite rightly, that I must not edit the Dockerfile used in production.

The production Docker image must be configured only for production needs and nothing more.

So what do I do? If I can't add my tools and settings to the Dockerfile, how can I develop in Docker at all?

I could copy the production Dockerfile into my own and make the necessary changes to the copy. But duplication can lead to unpleasant consequences.

Solution

Instead of copying the Dockerfile, which can lead to additional difficulties, it is better to use Docker's ability to build one image on top of another.

I already build the production image of my application on top of another image (something like “node:6”). So why not create a “dev.dockerfile” that builds the development image on top of the production one?

Dockerfile

    FROM node:6
    # ... production configuration

Production build

    $ docker build -t myapp .

dev.dockerfile

    FROM myapp
    # ... development configuration

Development build

    $ docker build -t myapp:dev -f dev.dockerfile .

Now I can modify dev.dockerfile as I see fit, knowing that it builds on exactly the same configuration as the production image.

Want to see how this is done?

Watch the episode Creating a Development Container, which is part of the Guide to Building Node.js Apps in Docker.

Myth number 9: I do not see anything in this container

because I can't look inside the container at all!

Docker is application virtualization (containerization), not a full-fledged virtual machine.

But the developer often needs to interact with the container as if it were a virtual machine.

I need logs (in addition to the console output of my application), debugging messages and general confidence that all changes to the image worked correctly.

If the container is not a virtual machine, how can I understand what is happening? How do I see files, environment variables and other necessary things?

Solution

Although a Docker container is not a full-fledged virtual machine, a Linux distribution is running under the hood.

Yes, this distribution may be heavily stripped down (Alpine Linux, for example), but it still provides at least a basic command shell, which makes it possible to look inside the container.

In general, there are two ways to do this.

Method number 1: Connect to the command shell of a running container

If the container is already running, I can issue the “docker exec” command to get full access to it.

    $ docker exec -it mycontainer /bin/sh

This gives me access to the contents of the container, much like logging in to an ordinary Linux system.

Method number 2: Run the shell as a container command

If I do not have a running container, I can launch a new one with the shell as its start command.

    $ docker run -it myapp /bin/sh

Now I have a new container with a command shell, which lets me see what is inside.
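For a quick look that does not need an interactive session, the same idea can be wrapped in a small helper. This is a minimal sketch rather than anything from the original guide: the function name `peek`, the `DOCKER` override variable, and the 20-line log limit are illustrative assumptions. It relies only on `docker exec` and `docker logs`.

```shell
# DOCKER can be overridden (for example DOCKER=echo prints the
# commands instead of running them); it defaults to the real CLI.
DOCKER="${DOCKER:-docker}"

# Print a quick snapshot of a running container.
# $1 = container name, $2 = directory inside the container (defaults to /).
peek() {
  container="$1"
  dir="${2:-/}"
  "$DOCKER" exec "$container" env            # environment variables the app sees
  "$DOCKER" exec "$container" ls -la "$dir"  # files in the chosen directory
  "$DOCKER" logs --tail 20 "$container"      # last 20 lines of console output
}
```

Calling `peek mycontainer /var/app` would list the environment, the files under /var/app, and the most recent log lines of the container named mycontainer.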

Want to see how this works?

Watch episodes from the Guide to Learning Docker and the Guide to Building Node.js Apps in Docker.

Myth number 8: I will have to write code inside the Docker container

and I will not be able to use my favorite editor?!

When I first saw the Docker container in which my Node.js code was executed, I was very interested and pleased with its capabilities.

But the joy quickly disappeared when I began to think about how to move the modified code into the container after creating the image.

Do I have to rebuild the image every time? That would take far too long, so that option is out.

Maybe I should connect to the container's command shell and write code in vim? Then I might be stuck with a version that is not the one I need.

Let's say that works. But what if I need an IDE or a better editor?

If I only have access to the container console, how can I use my favorite text editor?

Solution

With the “volume mount” option, a Docker container can mount directories from the host system.

    $ docker run -v /dev/my-app:/var/app myapp

With this command, “/var/app” in the container points to the local directory “/dev/my-app”. Changes made on the host in “/dev/my-app” (with my favorite editor, of course) are immediately visible in the container.
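The same mount can be expressed relative to whatever project directory you happen to be in. The following is a sketch under stated assumptions: the function name `run_mounted`, the `DOCKER` override variable, and the `--rm -it` flags are illustrative choices, not part of the original command.

```shell
# DOCKER can be overridden (for example DOCKER=echo for a dry run).
DOCKER="${DOCKER:-docker}"

# Run an image with the current directory mounted inside the container.
# $1 = image, $2 = mount point in the container (defaults to /var/app).
run_mounted() {
  image="$1"
  target="${2:-/var/app}"
  # The host side of -v must be an absolute path; a bare name would be
  # treated as a named volume instead of a directory on the host.
  "$DOCKER" run --rm -it -v "$(pwd):$target" "$image"
}
```

Running `run_mounted myapp` from the project directory starts a throwaway container that sees the project files under /var/app.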

Want to see how this works?

Watch the episode on editing code in a container, which is part of the Guide to Building Node.js Apps in Docker.

Myth number 7: I have to use the console debugger ...

and I want the one that is in my IDE

We can already edit the code inside the container and connect to its command shell. One step left before debugging.

I have to run the debugger inside the container, right?

Of course, inside the Docker container you can use the console debugger for the selected programming language, but this is not the only option.

How, then, do I debug code running in the container from my IDE or favorite editor?

Solution

The short answer is “remote debugging”.

The longer answer depends heavily on the language and the runtime environment.

With Node.js, for example, I can use remote debugging over TCP/IP (port 5858). To debug code inside the Docker container, I need to expose the corresponding port in the image built from “dev.dockerfile”.

dev.dockerfile

    # ...
    EXPOSE 5858
    # ...

Now I can connect to the shell of the container and start the Node.js debugging service, and then use it with my favorite debugger.
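One detail worth spelling out: EXPOSE only records the port in the image metadata. For a debugger on the host to reach it, the port also has to be published when the container is started, using `-p`. The sketch below assumes the `myapp:dev` image from earlier; the function name `run_debuggable` and the `DOCKER` override variable are hypothetical. How the debugging service is started inside the container depends on the Node.js version, so that part is left out.

```shell
# DOCKER can be overridden (for example DOCKER=echo for a dry run).
DOCKER="${DOCKER:-docker}"

# Start the development image with the debug port published to the host.
# $1 = image (defaults to myapp:dev), $2 = port (defaults to 5858).
run_debuggable() {
  image="${1:-myapp:dev}"
  port="${2:-5858}"
  # -p host:container maps the container port to the same port on the host.
  "$DOCKER" run --rm -it -p "$port:$port" "$image"
}
```

With the port published, an IDE on the host can attach to localhost on port 5858 once the debugging service is running in the container.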

Want to see Node.js code debugged in a container with Visual Studio Code?

Watch the episode Debugging in a Container with Visual Studio Code, which is part of the Guide to Building Node.js Apps in Docker.

Myth number 6: I have to run “docker run” every time

and I won't be able to remember all the “docker run” options ...

Without a doubt, Docker has a huge number of command line options. Scrolling through its reference feels like reading a crumbling tome on the mythology of a vanished civilization.

When the time comes to run a container, it is no surprise that I often get confused, and even angry, because I can't find the right options on the first try.

Moreover, every “docker run” creates a new container instance from the image.

This is great if I need a new container.

But when I want to run a previously created container, the result of “docker run” is not what I want at all.

Solution

There is no need to use “docker run” every time you need a container.

Instead, containers can be stopped (“docker stop”) and started again (“docker start”).

This also maintains the state of the container between launches. If any files were changed in it, these changes will be saved after stopping and restarting.
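The stop/start workflow depends on giving the container a name when it is first created, so it can be found again later. Here is a minimal sketch of that idea; the function name `ensure_running` and the `DOCKER` override variable are assumptions made for illustration, and `docker container inspect` is used only to check whether the container already exists.

```shell
# DOCKER can be overridden (for example DOCKER=echo for a dry run).
DOCKER="${DOCKER:-docker}"

# Start an existing container by name, or create it on first use.
# $1 = container name, $2 = image to create it from if it does not exist.
ensure_running() {
  name="$1"
  image="$2"
  if "$DOCKER" container inspect "$name" >/dev/null 2>&1; then
    # The container already exists: reuse it, keeping its filesystem changes.
    "$DOCKER" start "$name"
  else
    # First use: create a named container in the background.
    "$DOCKER" run -d --name "$name" "$image"
  fi
}
```

After `ensure_running mycontainer myapp`, a plain `docker stop mycontainer` pauses work, and calling the function again resumes the same container instead of creating a new one.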

Want to see how this works?

Many episodes of the Guide to Learning Docker and the Guide to Building Node.js Apps in Docker on WatchMeCode use this technique.

But if the idea is new to you, I recommend the episode on basic image and container management, which covers stopping and starting a single container instance.

Myth number 5: Docker under macOS and Windows does not really work ...

and I only use Mac / Windows

A few months ago, this was largely true.

In the past, Docker for Mac and Windows required a full-fledged virtual machine managed with the “docker-machine” utility, plus additional software for exchanging data with the virtual environment.

It worked, but with a severe performance penalty and a limited set of features.

Solution

Fortunately, the Docker developers are well aware of the need to support more than just Linux as the host OS.

In the second half of 2016, official Docker releases for Mac and Windows came out.

Installing Docker on these platforms is now easy. Updates are released regularly, the functionality is almost on par with the Linux version, and I can't remember the last time I needed an option that was not available in Docker for Mac or Windows.

Do you want to install Docker on Mac or Windows?

On WatchMeCode you can find free episodes on installation for both platforms (as well as for Ubuntu Linux!).

Myth number 4: You can only work with Docker on the command line

GUI is more convenient for me

Since Docker is originally from the Linux world, it is not surprising that the command line is its main interface.

However, the abundance of commands and options can be discouraging. For a developer who does not use the console regularly, it can hurt productivity.

Solution

As the Docker community grows, more and more tools appear that meet a variety of user needs, including utilities with a graphical interface.

Docker for Mac and Windows include basic integration with Kitematic, for example, a GUI for managing images and containers on a local machine.

Kitematic allows you to easily find images in Docker repositories, create containers, as well as manage the settings of installed and running containers.

Want to see Kitematic in action?

Watch the Kitematic episode in the Guide to Learning Docker .

Myth number 3: I cannot deploy a database in a container.

There will be problems with scaling ... and I will lose my data!

Containers are inherently ephemeral: they are meant to be destroyed without hesitation and re-created as needed. But if my database data is stored inside a container, deleting the container means losing the data.

Moreover, database management systems have their own characteristics when scaling: vertically (more powerful server) and horizontally (more servers).

Docker seems to specialize in horizontal scaling: when you need more power, more containers are created. On the other hand, most DBMS require specific settings when scaling.

Yes, all of that is true. It is better not to deploy a production database in Docker.

Nevertheless, my first successful experience with Docker was associated with the database.

Oracle, to be exact.

I needed Oracle for development, but I could not get it deployed in a virtual machine. I worked on it on and off for about two weeks and never even came close.

And 30 minutes after I learned that there was an Oracle XE image for Docker, I had a working database.

In my development environment.

Solution

Docker may not be the best choice for deploying a production database, but for developers it can work wonders.

I have worked with MongoDB, MySQL, Oracle, Redis, and other systems that need to keep state between restarts, and everything worked fine.

Well, if we talk about the "ephemerality" of Docker containers, then we should not forget about the possibility of connecting external volumes.

Similar to the story with code editing: connecting volumes provides a convenient tool for storing data on a local machine and using it in a container.

Now I can at any time create and delete containers, knowing that I will continue from the same place where I stopped.
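As an illustration of keeping database files outside the container, here is a sketch for a development MongoDB instance. The function name `run_dev_mongo`, the container name, the data directory, and the `DOCKER` override variable are all hypothetical; the one fixed detail is that the official `mongo` image keeps its data files under /data/db.

```shell
# DOCKER can be overridden (for example DOCKER=echo for a dry run).
DOCKER="${DOCKER:-docker}"

# Start a development MongoDB container whose data lives on the host.
# $1 = container name, $2 = absolute host directory for the data files.
run_dev_mongo() {
  name="$1"
  data_dir="$2"
  # Create the directory up front so it exists before it is mounted.
  mkdir -p "$data_dir"
  # Mount the host directory over /data/db, where the image stores its data.
  "$DOCKER" run -d --name "$name" -v "$data_dir:/data/db" mongo
}
```

With something like `run_dev_mongo dev-mongo "$HOME/mongo-data"`, the container can be removed and re-created at will while the data files stay in the host directory.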

Myth number 2: I can not use Docker in my project

because Docker is "all or nothing"

When I first saw Docker, I thought: either to develop, debug, deploy and "devops" everything with Docker (and two hundred additional tools and frameworks so that it all works automatically), or not to use Docker at all.

The case of Oracle XE proved the opposite.

Any tool or technology that at first glance demands an “all or nothing” approach deserves a second look. Only in extremely rare cases does “all or nothing” turn out to be true. And when it does, the product may not be worth investing in.

Solution

Docker, like most developer tools, can be adopted gradually.

Start small.

Run the development database in the container.

Then build a library in a container and see how it works.

Next, create a microservice, but one that requires only a few lines of code.

Move on to more serious projects that involve a small team.

There is no need to dive in headfirst.

Myth number 1: I can't get any benefit from Docker ... at all ...

because Docker is “enterprise” and “devops”

This was the most serious mental barrier that I needed to overcome after becoming acquainted with Docker.

In my understanding, Docker was a tool designed for the most advanced teams that deal with scalability issues that I’ll never come across in my life.

And it is not at all surprising that I thought so.

Blogs and conferences keep buzzing with stories like “Such-and-such corporation automated 10,000,000 microservices using Docker and Kubernetes”, and so on.

Docker can be a great tool for “enterprise” and “devops”, but regular developers like you and me can take advantage of it too.

Solution

Try Docker.

Again, start small.

I have one virtual machine with 12 GB of RAM that hosts 3 web projects for one of my clients. By today's standards, this is a very modest server. But I see Docker, plain Docker by itself, as a way to use this server more efficiently.

I have a second client with five part-time developers (all together, they work fewer than 40 hours a week). This small team also uses Docker to automate its build and deployment processes.

Currently, I write most of my open source Node.js libraries inside Docker.

Every day with Docker, I find new and often more convenient ways to manage the software installed on my laptop.

Remember:

Do not pay attention to the hype and do not believe the myths

The mythology around Docker arose for understandable reasons. But it does not help developers.

If you are still in doubt after reading this article, I ask you to take some time and try to reconsider your attitude towards Docker.

If you have questions about how a developer can use Docker effectively, contact me. I will be glad to try to help you.

If you want to learn the basics of Docker and the methods for developing applications using it, you can start by viewing the Guide to Learning Docker (from scratch) and the Guide to Building Node.js Apps in Docker on WatchMeCode.

The original text is available at: derickbailey.com/2017/01/30/10-myths-about-docker-that-stop-developers-cold/ (there is no active link because the author of the original redirects all visitors who arrive via active links on other sites to third-party resources).

Source: https://habr.com/ru/post/323554/
