uniset2-testsuite overview: a small "bicycle" (home-made tool) for functional testing. Part 2

The first part gave an overview of the tool's capabilities. In this part we look at which test interfaces are already implemented and how to add your own.

For reference, here is a link to the first part of the article.

Currently, uniset2-testsuite supports three interfaces:

- uniset

- modbus

- snmp


Interface type = "uniset"

As I wrote in the first part, uniset2-testsuite was originally developed for testing projects built on libuniset. So type="uniset" is the first and main interface. In uniset projects the main elements are "sensors", which have a unique identifier (numeric, though string names can be used) and a value that can be set or read. Therefore everything in a test scenario revolves around these sensors and checking their values. Here is an example of a simple scenario that implements the algorithm described here.

```xml
<?xml version = '1.0' encoding = 'utf-8'?>
<TestScenario>
  <TestList type="uniset">
    <test name="Processing" comment=" ">
      <action set="OnControl_S=1" comment=" ' '"/>
      <check test="CmdLoad_C=1" comment=" ''"/>
      <check test="CmdUnload_C=0" comment=" ''"/>
      <check test="Level_AS>=90" comment=" .." timeout="15000"/>
      <check test="CmdLoad_C=0" comment=" ''"/>
      <check test="CmdUnload_C=1" comment=" ''"/>
      <check test="Level_AS&lt;=10" comment=" .." timeout="15000"/>
    </test>
    <test name="Stopped" comment=" ">
      <action set="OnControl_S=0" comment=" ' '"/>
      <check test="CmdLoad_C=0" comment=" '' " holdtime="3000"/>
      <check test="CmdUnload_C=0" comment=" '' " holdtime="3000"/>
      <check test="Level_AS&lt;=80" comment=" " holdtime="10000"/>
    </test>
  </TestList>
</TestScenario>
```

Interface type = "modbus"

This interface is of wider interest, since it lets you talk to the system under test over the standard Modbus TCP protocol. A test scenario using it looks like this:

```xml
<?xml version = '1.0' encoding = 'utf-8'?>
<TestScenario type="modbus">
  <Config>
    <aliases>
      <item type="modbus" alias="mb1" mbslave="localhost:2048" default="1"/>
      <item type="modbus" alias="mb2" mbslave="localhost:2049"/>
    </aliases>
  </Config>
  <TestList>
    <test name="Test simple read">
      <check test="0x10!=0"/>
      <check test="0x24:0x04!=0"/>
      <check test="0x24!=0"/>
      <check test="0x1@0x02:0x02!=0" config="mb2"/>
      <check test="0x24:0x06!=0"/>
    </test>
    <test name="Test simple write">
      <action set="0x25=10"/>
      <action set="0x25:0x10=10"/>
      <action set="0x25@0x02=10" config="mb2"/>
      <action set="0x25@0x02:0x05=10" config="mb2"/>
    </test>
    <test name="Test other 'check'">
      <check test="0x20=0x20" timeout="1000" check_pause="100"/>
      <check test="0x20=0x20"/>
    </test>
    <test name="Test 'great'">
      <action set="109=10"/>
      <check test="109>=5"/>
      <check test="109>=10"/>
    </test>
    <test name="Test 'less'">
      <action set="109=10"/>
      <check test="109&lt;=11"/>
      <check test="109&lt;=10"/>
    </test>
    <test name="Test other 'action'">
      <action set="20@2=1,21@0x02:5=1,103@2:0x10=12" config="mb2"/>
    </test>
  </TestList>
</TestScenario>
```

At the beginning, the Config section defines the parameters of the two nodes we work with. They are given the aliases mb1 and mb2. One of them is marked as the default (default="1"), so wherever the config=".." parameter is not specified below, the default node is used.

The parameter in a test is written in the following format:

```
mbreg@mbaddr:mbfunc:nbit:vtype
```

where:

- mbreg is the register number

- mbaddr is the device address on the bus. Default: 0x01

- mbfunc is the read (polling) or write function. By default, mbfunc=0x04 is used for reading and mbfunc=0x06 for writing

- nbit is the bit number [0..15], for the case when a value is read with a function that reads "words" while the data is stored in a single bit. Default: nbit=-1, which means it is not used.

- vtype is the type of the requested value, given as a string. Default: "signed".

Supported vtype values:

- F2 - double word float (4 bytes)

- F4 - 8-byte floating point (double)

- byte - byte

- unsigned - unsigned integer (2 bytes)

- signed - signed integer (2 bytes)

- I2 - integer (4 bytes)

- U2 - unsigned integer (4 bytes)

ATTENTION: keep in mind that the current implementation works only with integer values, so all float values are rounded to integers.
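To illustrate what the vtype values mean in terms of raw registers, here is a minimal Python 3 sketch that turns 16-bit register values into numbers and then rounds them, as the note above describes. The function names are hypothetical, and two details are assumptions: multi-register values are taken with the first register as the most significant word, and "byte" is taken to be the low byte. Real devices differ, and this is not uniset2-testsuite's own decoding code.

```python
import struct


def decode_registers(regs, vtype="signed"):
    """Convert raw 16-bit Modbus register values into a Python number (hypothetical helper).

    Assumption: the first register holds the most significant word (big-endian word order).
    """
    def pack(words):
        # Each register is an unsigned 16-bit word, packed big-endian.
        return struct.pack(">%dH" % len(words), *words)

    if vtype == "signed":
        return struct.unpack(">h", pack(regs[:1]))[0]
    if vtype == "unsigned":
        return regs[0] & 0xFFFF
    if vtype == "byte":
        return regs[0] & 0xFF  # assumption: the low byte of the register
    if vtype == "I2":
        return struct.unpack(">i", pack(regs[:2]))[0]
    if vtype == "U2":
        return struct.unpack(">I", pack(regs[:2]))[0]
    if vtype == "F2":
        return struct.unpack(">f", pack(regs[:2]))[0]
    if vtype == "F4":
        return struct.unpack(">d", pack(regs[:4]))[0]
    raise ValueError("unsupported vtype: %r" % vtype)


def to_test_value(regs, vtype="signed"):
    # Floats are reduced to integers before comparison. Python's round() rounds
    # halves to even; the testsuite's exact rounding rule may differ.
    return int(round(decode_registers(regs, vtype)))
```

For example, the two registers 0x3FC0, 0x0000 read as F2 give 1.5, which becomes 2 in a test comparison.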

As a result, this interface lets you write test scenarios that work with many devices. For example, you can control your test bench to apply test stimuli to your device and check its response. All of this over Modbus TCP, which is supported by almost all manufacturers of devices used in industrial control systems (and beyond).
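To make the "mbreg@mbaddr:mbfunc:nbit:vtype" format concrete, here is a small Python 3 parser sketch that applies the defaults listed above (mbaddr=0x01, mbfunc=0x04 for reading or 0x06 for writing, nbit=-1, vtype="signed"). The names ModbusParam and parse_modbus_name are hypothetical, and accepting numbers through int(x, 0) (so both 0x10 and 16 work, but "010" does not) is an assumption, not necessarily what the real modbus interface does.

```python
from dataclasses import dataclass

READ_FUNC_DEFAULT = 0x04   # default read function, per the format description
WRITE_FUNC_DEFAULT = 0x06  # default write function


@dataclass
class ModbusParam:
    mbreg: int
    mbaddr: int = 0x01
    mbfunc: int = READ_FUNC_DEFAULT
    nbit: int = -1
    vtype: str = "signed"


def parse_modbus_name(name, for_write=False):
    """Parse 'mbreg@mbaddr:mbfunc:nbit:vtype'; everything after mbreg is optional."""
    fields = name.strip().split(":")
    reg_part = fields[0]
    if "@" in reg_part:
        reg_str, addr_str = reg_part.split("@", 1)
        mbaddr = int(addr_str, 0)  # int(x, 0) accepts both hex (0x02) and decimal (2)
    else:
        reg_str, mbaddr = reg_part, 0x01
    param = ModbusParam(mbreg=int(reg_str, 0), mbaddr=mbaddr,
                        mbfunc=WRITE_FUNC_DEFAULT if for_write else READ_FUNC_DEFAULT)
    if len(fields) > 1 and fields[1]:
        param.mbfunc = int(fields[1], 0)
    if len(fields) > 2 and fields[2]:
        param.nbit = int(fields[2], 0)
    if len(fields) > 3 and fields[3]:
        param.vtype = fields[3]
    return param
```

With this sketch, "0x1@0x02:0x02" from the example scenario yields register 1 on device 2 read with function 0x02, and the action "103@2:0x10" yields register 103 on device 2 written with function 0x10.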

Interface type = "snmp"

This interface works with the system under test over SNMP.

Some details on the implementation

I had several implementations based on the Python modules pysnmp and netsnmp. But in the end, the problems with different versions on different platforms led me to a cruder, "brute-force" solution: calling the net-snmp-clients family of tools and processing their output through popen. Paradoxical as it may sound, this approach turned out to be more portable than using pure Python modules.
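The popen approach described above can be sketched in Python 3 as follows: build an snmpget command line, run it, and parse its output. The helper names are hypothetical; the snmpget options used (-v version, -c community, -t timeout, -r retries, -Oqvt to print only the value with timeticks as a raw number) are standard net-snmp options, but the exact command line the real snmp interface builds may differ.

```python
import shlex
import subprocess


def build_snmpget_cmd(host, oid, community="public", version="2c",
                      timeout=1, retries=2, port=161):
    """Build an argv list for net-snmp's snmpget (hypothetical helper)."""
    # -v: protocol version, -c: community, -t: timeout in seconds, -r: retries.
    # -Oqvt: print only the value, with timeticks as a plain number.
    return ["snmpget", "-v", str(version), "-c", community,
            "-t", str(timeout), "-r", str(retries), "-Oqvt",
            "%s:%d" % (host, port), oid]


def parse_snmp_value(output):
    """Turn snmpget's value-only output into an int, or None if it is not numeric."""
    text = output.strip().strip('"')
    try:
        return int(text)
    except ValueError:
        return None


def snmp_get(host, oid, **kwargs):
    """Run snmpget and return the parsed value; raise on a non-zero exit code."""
    cmd = build_snmpget_cmd(host, oid, **kwargs)
    proc = subprocess.run(cmd, stdout=subprocess.PIPE, stderr=subprocess.PIPE,
                          universal_newlines=True)
    if proc.returncode != 0:
        raise RuntimeError("%s failed: %s" % (" ".join(shlex.quote(c) for c in cmd),
                                               proc.stderr.strip()))
    return parse_snmp_value(proc.stdout)
```

Keeping command construction and output parsing in separate functions makes both testable without an SNMP agent or net-snmp installed.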

An example of a test script using snmp is shown below:

```xml
<?xml version="1.0" encoding="utf-8"?>
<TestScenario type="snmp">
  <Config>
    <aliases>
      <item type="snmp" alias="snmp1" snmp="conf/snmp.xml" default="1"/>
      <item type="snmp" alias="snmp2" snmp="conf/snmp2.xml"/>
    </aliases>
  </Config>
  <TestList>
    <test name="SNMP read tests" comm=" snmp">
      <check test="uptime@node1>1" comment="Uptime"/>
      <check test="uptimeName@node2>=1" comment=" " config="snmp2"/>
    </test>
    <test name="SNMP: FAIL READ" ignore_failed="1">
      <check test="sysServ2@node2>=1" comment="fail read test" config="snmp2"/>
    </test>
    <test name="SNMP write tests" comm=" snmp">
      <action set="sysName@ups3=10" comment="save sysName"/>
      <action set="sysServ2@ups3=10" comment="save sysServ"/>
    </test>
    <test name="SNMP: FAIL WRITE" ignore_failed="1">
      <action set="sysServ2@node1=10" comment="FAIL SAVE TEST"/>
    </test>
  </TestList>
</TestScenario>
```

This interface also requires a separate configuration file, which is specified in the Config section (snmp="..").

Configuration file snmp.xml for the snmp scenario

```xml
<?xml version='1.0' encoding='utf-8'?>
<SNMP>
  <Nodes defaultProtocolVersion="2c" defaultTimeout='1' defaultRetries='2' defaultPort='161'>
    <item name="node1" ip="192.94.214.205" comment="UPS1" protocolVersion="2" timeout='1' retries='2'/>
    <item name="node2" ip="test.net-snmp.org" comment="UPS2"/>
    <item name="node3" ip="10.16.11.3" comment="UPS3"/>
  </Nodes>
  <MIBdirs>
    <dir path="conf/" mask="*.mib"/>
    <dir path="conf2/" mask="*.mib"/>
  </MIBdirs>
  <Parameters defaultReadCommunity="demopublic" defaultWriteCommunity="demoprovate">
    <item name="uptime" OID="1.3.6.1.2.1.1.3.0" r_community="demopublic"/>
    <item name="uptimeName" ObjectName="sysUpTime.0"/>
    <item name="bstatus" OID="1.3.6.1.2.1.33.1.2.1.0" ObjectName="BatteryStatus"/>
    <item name="btime" OID=".1.3.6.1.2.1.33.1.2.2.0" ObjectName="TimeOnBattery"/>
    <item name="bcharge" OID=".1.3.6.1.2.1.33.1.2.4.0" ObjectName="BatteryCharge"/>
    <item name="sysName" ObjectName="sysName.0" w_community="demoprivate" r_community="demopublic"/>
  </Parameters>
</SNMP>
```

The Nodes section defines the list of nodes (devices) to communicate with. The following parameters can be set:

- name - the name of the node used later in the test

- ip - device address (ip or hostname)

- timeout - timeout for one communication attempt, in seconds. Optional parameter. The default is 1 sec.

- retries - the number of attempts to read a parameter. Optional parameter. The default is 2.

- port - Port for communication with the device. Optional parameter. The default is 161.

- comment - a comment for the node. Optional, currently not used.

Default values for all nodes can be set directly on the Nodes section:

- defaultProtocolVersion

- defaultTimeout

- defaultRetries

- defaultPort
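The way per-node values override the Nodes-level defaults can be sketched with Python 3's xml.etree.ElementTree. The function name is hypothetical, and the built-in fallbacks used when even the default attribute is missing (version "2c", 1 s, 2 retries, port 161) are assumptions taken from the values in the example file, not documented behaviour.

```python
import xml.etree.ElementTree as ET

# Fallbacks when neither the node nor the Nodes section sets a value (assumption).
NODE_DEFAULTS = {"protocolVersion": "2c", "timeout": "1", "retries": "2", "port": "161"}


def load_snmp_nodes(xml_text):
    """Return {node_name: settings}, with per-node attributes overriding Nodes-level defaults."""
    root = ET.fromstring(xml_text)
    nodes_el = root.find("Nodes")
    defaults = {}
    for key, builtin in NODE_DEFAULTS.items():
        attr = "default" + key[0].upper() + key[1:]  # e.g. timeout -> defaultTimeout
        defaults[key] = nodes_el.get(attr, builtin)
    nodes = {}
    for item in nodes_el.findall("item"):
        cfg = dict(defaults)
        for key in NODE_DEFAULTS:
            if item.get(key) is not None:
                cfg[key] = item.get(key)
        cfg["ip"] = item.get("ip")
        cfg["timeout"] = float(cfg["timeout"])  # seconds per attempt
        cfg["retries"] = int(cfg["retries"])
        cfg["port"] = int(cfg["port"])
        nodes[item.get("name")] = cfg
    return nodes
```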

The MIBdirs section lists directories with MIB files used to verify OIDs:

- path - path to the directory

- mask - file mask. If not specified, all files in the directory are loaded.

The Parameters section sets the list of parameters that will participate in the tests:

- name - the name of the parameter used later in the test

- r_community - the 'community string' used when reading the parameter (see the SNMP protocol).

- w_community - setting the 'community string' to write a parameter (see the snmp protocol).

- OID - parameter identifier in accordance with the SNMP protocol.

- ObjectName is the name of the parameter in accordance with the SNMP protocol. Optional parameter.

- ignoreCheckMIB - do not check the parameter for the mib file (in the --check-scenario mode)

The OID and ObjectName parameters are interchangeable; if both are specified, the OID is used. Default values for all parameters can be set directly on the Parameters section:

- defaultReadCommunity

- defaultWriteCommunity
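The resolution rules above (OID wins over ObjectName; a missing r_community or w_community falls back to the section defaults) can be sketched in Python 3 like this. The function name is hypothetical, and treating ignoreCheckMIB="1" as "skip the MIB check" is an assumption about the attribute's value.

```python
import xml.etree.ElementTree as ET


def load_snmp_parameters(xml_text):
    """Return {param_name: settings} resolved from the <Parameters> section (sketch)."""
    root = ET.fromstring(xml_text)
    params_el = root.find("Parameters")
    def_read = params_el.get("defaultReadCommunity")
    def_write = params_el.get("defaultWriteCommunity")
    params = {}
    for item in params_el.findall("item"):
        # OID and ObjectName are interchangeable; if both are given, OID wins.
        target = item.get("OID") or item.get("ObjectName")
        if not target:
            raise ValueError("parameter %r has neither OID nor ObjectName" % item.get("name"))
        params[item.get("name")] = {
            "target": target,
            "r_community": item.get("r_community", def_read),
            "w_community": item.get("w_community", def_write),
            # Assumption: ignoreCheckMIB="1" disables the MIB check in --check-scenario mode.
            "check_mib": item.get("ignoreCheckMIB", "0") == "0",
        }
    return params
```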

Using multiple interfaces in the same script

Here is an example:

```xml
<?xml version="1.0" encoding="utf-8"?>
<TestScenario>
  <Config>
    <aliases>
      <item type="uniset" alias="u" confile="configure.xml" default="1"/>
      <item type="modbus" alias="mb" mbslave="localhost:2048"/>
      <item type="snmp" alias="snmp" snmp="conf/snmp.xml"/>
    </aliases>
  </Config>
  <RunList after_run_pause="5000">
    ...
  </RunList>
  <TestList>
    <test name="check: Equal test">
      <action set="111=10"/>
      <check test="111=10"/>
      <check test="uptime@node1>1" config="snmp"/>
      <check test="0x10!=0" config="mb"/>
    </test>
  </TestList>
</TestScenario>
```

That is, for each check you can choose which interface to use with the config="aliasname" parameter.
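The alias selection just described can be modelled in a few lines of Python 3: collect the aliases from Config, remember the default one, and pick an alias per check from its config attribute. The function names are hypothetical; this is a simplified model, not uniset2-testsuite's dispatcher.

```python
import xml.etree.ElementTree as ET


def load_aliases(config_xml):
    """Map alias -> item attributes and remember which alias is marked default="1"."""
    root = ET.fromstring(config_xml)
    aliases, default = {}, None
    for item in root.iter("item"):
        aliases[item.get("alias")] = dict(item.attrib)
        if item.get("default") == "1":
            default = item.get("alias")
    return aliases, default


def pick_interface(check_attrs, aliases, default):
    """Choose the interface config for a <check>/<action> via its config="..." attribute."""
    alias = check_attrs.get("config", default)
    if alias not in aliases:
        raise KeyError("unknown config alias: %r" % alias)
    return aliases[alias]
```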

How to implement your interface

Introduction

While working on the basic interfaces, I formed an idea of the minimal API a test interface needs in order to be embedded into uniset2-testsuite. It turned out that for the main functionality it is enough to implement just two functions:

```python
def get_value(self, name, context):
    ...

def set_value(self, name, value, context):
    ...
```

This is based on a simple idea. If you look at a test scenario, you can see that, in general terms, a check or an action can be written as

```
check="[NAME]=[VALUE]"
```

Instead of '=', the operator can actually be any of [=, >, >=, <, <=, !=].

That is, there is a [NAME], the name of the parameter being checked, which the specific interface knows how to parse. And there is a [VALUE], the value the result is compared with or the value we set. uniset2-testsuite takes over the job of splitting the test into NAME and VALUE and calls get_value or set_value of the specific interface implementation. The interface itself is responsible for how the parameter it works with is encoded in the [NAME] field. For example:

- "uniset" NAME format: "id@node"

- "modbus" NAME format: "mbreg@mbaddr:mbfunc:nbit:vtype"

- "snmp" NAME format: "varname@node"

That is, the interface developer decides which NAME format to use.
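The split into NAME, operator and VALUE that uniset2-testsuite performs can be approximated with one regular expression. Below is a Python 3 sketch; split_test and evaluate are hypothetical names, VALUE is parsed with int(x, 0) so that both 0x20 and 32 work, and this is not the testsuite's actual parser. Listing the two-character operators first in the alternation makes ">=" win over ">".

```python
import operator
import re

# Two-character operators come first so ">=" is not read as ">" followed by "=...".
_TEST_RE = re.compile(r"^\s*(.+?)\s*(>=|<=|!=|=|>|<)\s*(\S+)\s*$")

_OPS = {"=": operator.eq, "!=": operator.ne, ">": operator.gt,
        ">=": operator.ge, "<": operator.lt, "<=": operator.le}


def split_test(expr):
    """Split 'NAME<op>VALUE' into (name, op, value); VALUE must be a number."""
    m = _TEST_RE.match(expr)
    if not m:
        raise ValueError("cannot parse test expression: %r" % expr)
    name, op, value = m.groups()
    return name, op, int(value, 0)


def evaluate(expr, get_value):
    """Read NAME through the interface's get_value() and compare it with VALUE."""
    name, op, expected = split_test(expr)
    actual = get_value(name)
    return actual is not None and _OPS[op](actual, expected)
```

The interface only ever sees the NAME part; how "uptime@node1" or "0x24:0x04" is interpreted remains its own business.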

Keep in mind that in the current implementation, test parameters such as timeout, check_time and holdtime are processed at the uniset2-testsuite level. So when a test says

```xml
<check test="varname=34" timeout="15000" check_time="3000"/>
```

it means that get_value(varname) will be called every 3 seconds until either the timeout expires or the value 34 is obtained.

Another important limitation is that in the current implementation [VALUE] is supported only as a number. In fact, it would not be very hard to rework this to support "any type".
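The polling behaviour described above can be modelled as a small loop. This Python 3 sketch is a simplified model under the stated semantics (call get_value every check_time until the value matches or timeout expires); wait_for is a hypothetical name, holdtime is not modelled, and it is not uniset2-testsuite's code.

```python
import time


def wait_for(get_value, name, expected, timeout_ms, check_time_ms):
    """Poll get_value(name) every check_time_ms until it equals expected or timeout_ms expires."""
    deadline = time.monotonic() + timeout_ms / 1000.0
    while True:
        value = get_value(name)
        if value == expected:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(check_time_ms / 1000.0)
```

Because the waiting lives outside the interface, get_value() itself only has to perform a single read.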

Configuration

Each interface decides for itself which configuration parameters it needs. uniset2-testsuite provides the Config/aliases section for them, where parameters are written as XML attributes. When an interface is created, it receives its configuration node and reads everything it needs from it. If an interface needs a lot of settings, they can be moved elsewhere: for example, in the snmp interface the configuration node only says where to find the config file (snmp.xml), and everything the interface needs is defined there. The modbus interface, on the other hand, only needs the ip and port of the device, and these are written directly in the Config section.
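As an illustration of reading settings straight from the configuration node, here is a Python 3 sketch that extracts host and port from the mbslave="host:port" attribute of a modbus alias. The function name and the returned dictionary are hypothetical; the real modbus interface may parse the attribute differently.

```python
import xml.etree.ElementTree as ET


def modbus_conf_from_xml(item):
    """Read host/port for a modbus alias from its mbslave="host:port" attribute (sketch)."""
    mbslave = item.get("mbslave")
    if not mbslave:
        raise ValueError("alias %r: mbslave is not set" % item.get("alias"))
    host, _, port = mbslave.rpartition(":")
    if not host:
        raise ValueError("alias %r: expected host:port, got %r" % (item.get("alias"), mbslave))
    return {"alias": item.get("alias"), "host": host, "port": int(port),
            "default": item.get("default") == "1"}
```

For example, passing ET.fromstring('<item type="modbus" alias="mb1" mbslave="localhost:2048" default="1"/>') returns host "localhost" and port 2048.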

Loading plug-ins (interfaces)

Loading interfaces follows a simple principle. All interfaces found in a 'plugins.d' directory are loaded. There is a "system" plugins.d directory, and plugins are also looked up in the plugins.d subdirectory of the current directory where the test is run. So a user can simply put their plugins next to the test, and they will be picked up automatically.

Each interface implementation is a separate Python file, which must contain the uts_plugin_name() function. For example, in the snmp interface it looks like this:

```python
def uts_plugin_name():
    return "snmp"
```

As a result, loading an interface works like this:

```xml
...
<Config>
  <aliases>
    <item type="uniset" alias="u" confile="configure.xml" default="1"/>
    <item type="modbus" alias="mb" mbslave="localhost:2048"/>
    <item type="snmp" alias="snmp" snmp="conf/snmp.xml"/>
  </aliases>
</Config>
...
```

When it starts processing a test scenario, uniset2-testsuite builds a list of available plugins by name, loading them from the plugins.d directories. Then, going through the Config section, it reads type="xxxx", finds the corresponding interface, and calls the special function uts_create_from_xml(xmlConfNode) to create it, passing the XML node as the parameter. That function then creates and initializes the interface.
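The loading mechanism just described can be sketched in Python 3 with importlib: import every .py file from the plugins.d directories, register each module under the name returned by uts_plugin_name(), and build an interface by calling the matching module's uts_create_from_xml(). The function names are hypothetical, and letting a later directory override an earlier one with the same plugin name is an assumption; uniset2-testsuite's real loader may behave differently.

```python
import glob
import importlib.util
import os


def load_plugins(dirs):
    """Import every *.py in the given plugins.d directories, keyed by uts_plugin_name()."""
    plugins = {}
    for d in dirs:
        for path in sorted(glob.glob(os.path.join(d, "*.py"))):
            mod_name = "uts_plugin_" + os.path.splitext(os.path.basename(path))[0]
            spec = importlib.util.spec_from_file_location(mod_name, path)
            module = importlib.util.module_from_spec(spec)
            spec.loader.exec_module(module)
            if hasattr(module, "uts_plugin_name"):
                # Assumption: a later directory overrides an earlier one with the same name.
                plugins[module.uts_plugin_name()] = module
    return plugins


def create_interface(plugins, xml_conf_node):
    """Find the plugin for type="..." and let it build itself from the XML node."""
    itype = xml_conf_node.get("type")
    if itype not in plugins:
        raise KeyError("no plugin for type=%r" % itype)
    return plugins[itype].uts_create_from_xml(xml_conf_node)
```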

In short:

- uts_plugin_name() - the name matched against type="..."

- uts_create_from_xml(xmlConfNode) - creation of the interface from its configuration node

Validation of configuration parameters

I wrote above that to implement your own interface you only need two functions, set_value() and get_value(). In fact, it is desirable (but not required) to implement two more:

```python
def validate_parameter(self, name):
    ...

def validate_configuration(self):
    ...
```

They are needed for the --check-scenario mode, in which the test parameters are checked for correctness without actually executing them. validate_parameter is called to verify that the name parameter is correct; keep in mind that it is called for every check in the test. validate_configuration is called once to check the configuration parameters of the whole test. For example, the snmp interface uses it to check that the nodes are reachable and that OIDs and ObjectNames are valid according to the MIB files (if the directories to look for them in are specified).
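Putting the four methods and the two module-level functions together, here is a standalone Python 3 sketch of a toy "memory" interface whose NAME is simply a key in a dictionary. Everything here is hypothetical: the interface, its init attribute, and the stand-in TestSuiteException (defined locally so the example runs on its own). A real plugin would inherit from UTestInterface and use the testsuite's own exception class.

```python
class TestSuiteException(Exception):
    """Local stand-in for the testsuite's exception class, so the sketch runs standalone."""


class UTestInterfaceMemory:
    """Hypothetical 'memory' interface: NAME is a dictionary key, values are ints."""

    def __init__(self, xmlConfNode=None):
        self.itype = "memory"
        self.values = {}
        if xmlConfNode is not None:
            # Hypothetical attribute with initial values: init="a=1,b=0x10"
            for pair in filter(None, (xmlConfNode.get("init") or "").split(",")):
                key, val = pair.split("=", 1)
                self.values[key.strip()] = int(val, 0)

    def validate_configuration(self, context=None):
        return [True, ""]  # nothing to validate globally

    def validate_parameter(self, name, context=None):
        if not name or "@" in name:
            return [False, "(memory:validate): bad name '%s'" % name]
        return [True, ""]

    def get_value(self, name, context=None):
        if name not in self.values:
            raise TestSuiteException("(memory:get_value): unknown '%s'" % name)
        return self.values[name]

    def set_value(self, name, value, context=None):
        self.values[name] = int(value)


def uts_plugin_name():
    return "memory"


def uts_create_from_xml(xmlConfNode):
    return UTestInterfaceMemory(xmlConfNode=xmlConfNode)
```

Used with an alias such as <item type="memory" alias="m" init="a=1"/>, a check like test="a=1" would then be served entirely by this class.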

Implementation

Well, we have finally got to the implementation. Thinking about which interface to implement as an illustrative example, I decided to build the most versatile one possible: an interface that runs scripts as checks. I will call it "scripts". Note that "script" here means not only bash, but anything "you can run", since we actually run a program through the shell for each check.

So.

First we need to come up with a format for NAME (see above for what that means). After a bit of thinking, I arrived at this:

```xml
<test name="check scripts">
  <check test="scriptname.sh >= VALUE" params="param1 param2 param3" ../>
  <check test="../myscritps/scriptname.sh >= VALUE" params="param1 param2 param3" ../>
  ..
</test>
```

About formatting

At first I wanted a format à la GET request:

```
scriptname?param1&param2&param3
```

But then I ran into the fact that we are in XML, where you cannot simply use '&': it has to be written as '&amp;', which kills all the convenience.

So test=".." holds the script name (a path may be included). Since we want to pass parameters to the script, we introduce a special field (extension) for them: params="...".

Now we need to decide how to get the result. Since programs are expected to return 0 on success and non-zero on failure, using the return code as the result does not seem like a good idea to me. In the end I decided that the simplest approach is for the program to print a special marker with the result to stdout. That way the program is still free to print its own messages, and we can still get a result from it. The marker is a line like:

```
TEST_SCRIPT_RESULT: VALUE
```

That is, we run the script, catch the marker in its output and cut out the result.
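Extracting the marker is a one-regex job. The Python 3 sketch below uses a slightly stricter form of the pattern found in the plugin code later in the article (at most one minus sign) and takes the first marker if several are printed; extract_result is a hypothetical name.

```python
import re

# An optional minus sign followed by digits, right after the marker.
RESULT_RE = re.compile(r"TEST_SCRIPT_RESULT: (-?\d+)")


def extract_result(stdout_text):
    """Return the value from the first TEST_SCRIPT_RESULT marker, or None if there is none."""
    match = RESULT_RE.search(stdout_text)
    return int(match.group(1)) if match else None
```

Any other lines the script prints are simply ignored.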

The next question is error handling. Everything here is standard: if the return code != 0, it is an error, and as the error details we take everything the program printed to stderr.

Global interface configuration parameters: for now we assume our simple interface does not need any. Although later I did add one, as an example.

That completes the design. Here is what we ended up with:

- TEST FORMAT:

```xml
<check test="testscript=VALUE" params="param1 param2 param3.." .../>
```

- RESULT: the script must print (to stdout) the line

```
TEST_SCRIPT_RESULT: VALUE
```

- ERRORS: if the return code != 0, an error is considered to have occurred. On success the script must return 0.

- CONFIGURATION: none
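Since "script" means anything runnable, a check program does not have to be bash. Here is a hypothetical Python 3 check script that follows the contract above: it prints whatever it likes, prints the marker with the sum of its numeric arguments, and reports an error on stderr with a non-zero exit code when called without arguments.

```python
#!/usr/bin/env python3
"""Hypothetical check script following the 'scripts' contract, written in Python."""
import sys


def main(argv):
    # Extra output is allowed; only the TEST_SCRIPT_RESULT line is parsed.
    print("TEST SCRIPT: %s" % " ".join(argv))
    if not argv:
        # Errors go to stderr together with a non-zero return code.
        sys.stderr.write("expected at least one number\n")
        return 1
    total = sum(int(a, 0) for a in argv)
    print("TEST_SCRIPT_RESULT: %d" % total)
    return 0


if __name__ == "__main__":
    sys.exit(main(sys.argv[1:]))
```

Saved as an executable file (for instance ./sum.py, a hypothetical name), it could be used as <check test="./sum.py = 7" params="3 4"/>.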

Now on to the implementation.

For our module to be loaded, it must implement two global functions: uts_create_from_xml(..) and uts_plugin_name().

They are simple:

```python
def uts_create_from_xml(xmlConfNode):
    """
    :param xmlConfNode: xml node with the interface configuration
    :return: UTestInterface
    """
    return UTestInterfaceScripts(xmlConfNode=xmlConfNode)


def uts_plugin_name():
    return "scripts"
```

The interface itself must inherit from the UTestInterface base class and implement the required functions. To begin, here is what the UTestInterface.py class looks like.

UTestInterface.py

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-

from TestSuiteGlobal import *


class UTestInterface():
    """ Base class for test interfaces """

    def __init__(self, itype, **kwargs):
        self.itype = itype
        self.ignore_nodes = False

    def set_ignore_nodes(self, state):
        """
        set ignore 'node' for tests (id@node)
        :param state: TRUE or FALSE
        """
        self.ignore_nodes = state

    def get_interface_type(self):
        return self.itype

    def get_conf_filename(self):
        return ''

    def validate_parameter(self, name):
        """
        Validate test parameter (id@node)
        :param name: parameter from <check> or <action>
        :return: [ RESULT, ERROR ]
        """
        return [False, "(validateParam): Unknown interface.."]

    def validate_configuration(self):
        """
        Validate configuration parameters (check-scenario-mode)
        :return: [ RESULT, ERROR ]
        """
        return [False, "(validateConfiguration): Unknown interface.."]

    def get_value(self, name):
        raise TestSuiteException("(getValue): Unknown interface..")

    def set_value(self, name, value, supplier_id):
        raise TestSuiteException("(setValue): Unknown interface...")
```

Let's start with the main function, get_value(self, name, context). It receives the name, which in our case is essentially the script name.

But since we have introduced an additional params field, we need to process it as well.

To get at our additional field, we use a handy parameter, context. What else it contains is described in the documentation; here we take the XML node of the current test from it, bearing in mind that the node may be missing (in which case we raise an exception).

```python
def get_value(self, name, context):
    xmlnode = None
    if 'xmlnode' in context:
        xmlnode = context['xmlnode']

    if not xmlnode:
        raise TestSuiteException("(scripts:get_value): Unknown xmlnode for '%s'" % name)
    ...
```

For convenience, I moved the parsing into a separate function (in case the format changes later). It takes the name and context and returns the script name and its parameters.

Here it is:

parse_name()

```python
@staticmethod
def parse_name(name, context):
    """
    Parse the test name. Format:
        <check test="scriptname=XXX" params="param1 param2 param3" .../>
    :param name: test name (scriptname)
    :param context: test context
    :return: [scriptname, parameters]
    """
    if 'xmlnode' in context:
        xmlnode = context['xmlnode']
        return [name, uglobal.to_str(xmlnode.prop("params"))]

    return [name, ""]
```

Then our get_value() should:

- run the script

- handle errors

- parse the result

I'll just give the full implementation.

get_value()

```python
def get_value(self, name, context):
    xmlnode = None
    if 'xmlnode' in context:
        xmlnode = context['xmlnode']

    if not xmlnode:
        raise TestSuiteException("(scripts:get_value): Unknown xmlnode for '%s'" % name)

    scriptname, params = self.parse_name(name, context)

    if len(scriptname) == 0:
        raise TestSuiteException("(scripts:get_value): Unknown script name for '%s'" % name)

    test_env = None
    if 'environment' in context:
        test_env = context['environment']

    s_out = ''
    s_err = ''
    cmd = scriptname + " " + params

    try:
        p = subprocess.Popen(cmd, stdout=subprocess.PIPE, stderr=subprocess.PIPE,
                             env=test_env, close_fds=True, shell=True)
        s_out = p.stdout.read(self.max_read)
        s_err = p.stderr.read(self.max_read)
        retcode = p.wait()
        if retcode != 0:
            emessage = "SCRIPT RETCODE(%d) != 0. stderr: %s" % (retcode, s_err.replace("\n", " "))
            raise TestSuiteException("(scripts:get_value): %s" % emessage)

    except subprocess.CalledProcessError, e:
        raise TestSuiteException("(scripts:get_value): %s for %s" % (e.message, name))

    if xmlnode.prop("show_output"):
        print s_out

    ret = self.re_result.findall(s_out)
    if not ret or len(ret) == 0:
        return None

    lst = ret[0]
    if not lst or len(lst) < 1:
        return None

    return uglobal.to_int(lst)
```

A few explanations.

When running the script, we also took the opportunity to pass our own environment variables to it. You can read about this in the section of the documentation on scripts.

Again we take the dictionary of environment variables from the context:

```python
test_env = context['environment']
```

I allowed myself to add two extra parameters to the implementation. The first, show_output="1", is a check-level parameter that turns on printing to the screen everything the script writes to stdout; by default scripts run with output disabled. The second is the global setting max_read (set as max_output_read in the configuration), which determines how many initial bytes of output are read to obtain the result. First, I wanted to demonstrate how to use global settings. Second, I thought limiting the read buffer was a good idea. You could also make a size <= 0 mean "read everything".

When handling the return code, if it is non-zero we take stderr as the error text (joining it into a single line along the way).

The result is parsed with a regular expression.

Small detail

Note that we wait for the launched program to finish. That is, we assume the programs are short and exit quickly: timeout is handled at the uniset2-testsuite level by calling get_value() repeatedly, so it cannot interrupt a script that hangs inside a single get_value() call.
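If long-running or chatty scripts are a concern, one option is a per-run timeout inside the interface itself. The Python 3 sketch below is a swapped-in variant, not the author's code: it uses Popen.communicate(timeout=...), which reads stdout and stderr concurrently (the Python documentation warns that reading one pipe while the child fills the other can deadlock), kills the whole process group on timeout, and truncates the output to max_read afterwards. ScriptError and run_check_script are hypothetical names, and the process-group handling is POSIX-only.

```python
import os
import signal
import subprocess


class ScriptError(Exception):
    """Raised when a check script fails or runs too long (hypothetical name)."""


def run_check_script(cmd, env=None, timeout_s=None, max_read=1000):
    """Run cmd through the shell and return its stdout (POSIX only).

    Output is truncated to max_read characters; max_read <= 0 keeps everything.
    """
    # start_new_session puts the shell and its children in their own process group,
    # so a timeout can kill the whole group, not just the shell.
    proc = subprocess.Popen(cmd, shell=True, env=env, stdout=subprocess.PIPE,
                            stderr=subprocess.PIPE, universal_newlines=True,
                            start_new_session=True)
    try:
        out, err = proc.communicate(timeout=timeout_s)
    except subprocess.TimeoutExpired:
        try:
            os.killpg(proc.pid, signal.SIGKILL)
        except ProcessLookupError:
            pass  # the group already exited on its own
        proc.communicate()  # reap the process and close the pipes
        raise ScriptError("script did not finish in %s s: %s" % (timeout_s, cmd))
    if max_read > 0:
        out, err = out[:max_read], err[:max_read]
    if proc.returncode != 0:
        raise ScriptError("SCRIPT RETCODE(%d) != 0. stderr: %s"
                          % (proc.returncode, err.replace("\n", " ")))
    return out
```

The trade-off is that the whole output is buffered in memory before truncation, instead of reading only the first max_read bytes.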

The plugin file goes into a plugins.d directory:

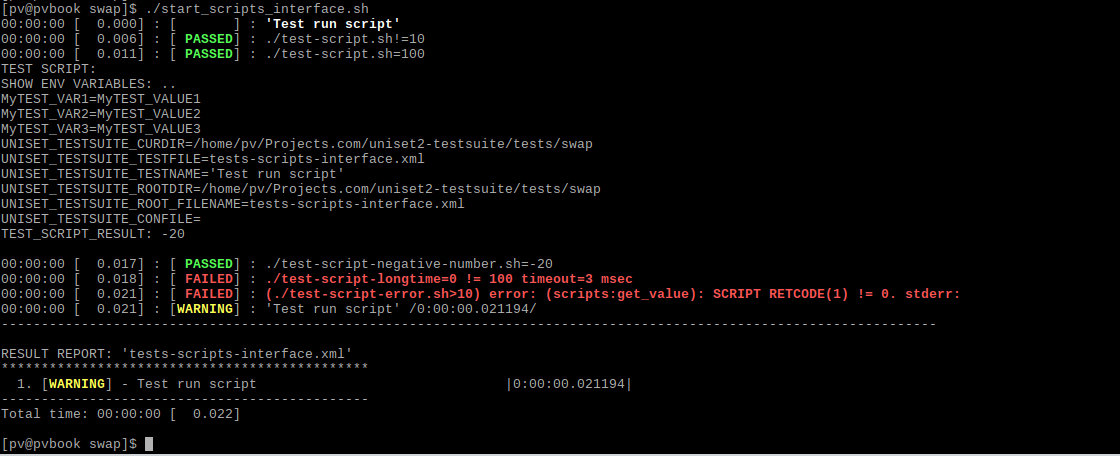

```xml
<?xml version="1.0" encoding="utf-8"?>
<TestScenario>
  <Config>
    <environment>
      <item name="MyTEST_VAR1" value="MyTEST_VALUE1"/>
      <item name="MyTEST_VAR2" value="MyTEST_VALUE2"/>
      <item name="MyTEST_VAR3" value="MyTEST_VALUE3"/>
    </environment>
    <aliases>
      <item type="scripts" alias="s" default="1"/>
    </aliases>
  </Config>
  <TestList type="scripts">
    <test name="Test run script" ignore_failed="1">
      <check test="./test-script.sh != 10" params="--param1 3 --param2 4" timeout="2000"/>
      <check test="./test-script.sh = 100" params="param1=3,param2=4"/>
      <check test="./test-script-negative-number.sh = -20" show_output="1"/>
      <check test="./test-script-longtime.sh = 100" timeout="3000"/>
      <check test="./test-script-error.sh > 10"/>
    </test>
  </TestList>
</TestScenario>
```

In the Config section, the environment variables passed to the scripts are defined, and an alias of type="scripts" is declared as the default. The TestList contains the checks themselves.

Examples of the bash scripts used:

test-script.sh

```sh
#!/bin/sh
echo "TEST SCRIPT: $*"
echo "SHOW OUTOUT..."
echo "TEST_SCRIPT_RESULT: 100"
```

test-script-negative-number.sh

```sh
#!/bin/sh
echo "TEST SCRIPT: $*"
echo "SHOW ENV VARIABLES: .."
env | grep MyTEST
env | grep UNISET_TESTSUITE
echo "TEST_SCRIPT_RESULT: -20"
```

Result of running the test (with show_output="1" and ignore_failed="1"):

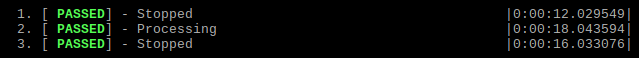

```sh
uniset2-testsuite-xmlplayer --testfile tests-scripts-interface.xml --log-show-actions --log-show-tests
```

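That is it for get_value(...). But what about actions? That is, set_value()…

uniset2-testsuite already has a standard way to run a script as an action: instead of <action set="..."/>, you write <action script=".."/>. So our set_value() simply reports that setting values is not supported.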

set_value(..)

```python
def set_value(self, name, value, context):
    raise TestSuiteException("(scripts:set_value): Function 'set' is not supported. Use <action script='..'> for %s" % name)
```

Moving on...

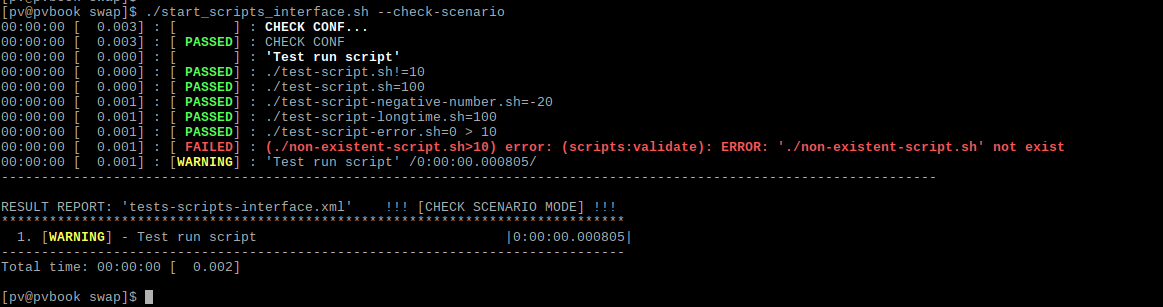

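uniset2-testsuite has a --check-scenario mode in which the scenario is checked without being executed; this is where validate_parameter and validate_configuration are used. Our interface has only one global parameter, max_output_read, and a missing or non-positive value simply falls back to the default, so validate_configuration just returns success. validate_parameter checks that a script name is given and that the script exists and is executable:

validate_parameter() and validate_configuration()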

```python
def validate_configuration(self, context):
    return [True, ""]


def validate_parameter(self, name, context):
    """
    :param name: scriptname
    :param context: ...
    :return: [Result, errors]
    """
    err = []
    xmlnode = None
    if 'xmlnode' in context:
        xmlnode = context['xmlnode']

    scriptname, params = self.parse_name(name, context)

    if not scriptname:
        err.append("(scripts:validate): ERROR: Unknown scriptname for %s" % str(xmlnode))

    if not is_executable(scriptname):
        err.append("(scripts:validate): ERROR: '%s' not exist" % scriptname)

    if len(err) > 0:
        return [False, ', '.join(err)]

    return [True, ""]
```

To check the validation, let's add a non-existent script to the test:

```xml
<?xml version="1.0" encoding="utf-8"?>
<TestScenario>
  <Config>
    <environment>
      <item name="MyTEST_VAR1" value="MyTEST_VALUE1"/>
      <item name="MyTEST_VAR2" value="MyTEST_VALUE2"/>
      <item name="MyTEST_VAR3" value="MyTEST_VALUE3"/>
    </environment>
    <aliases>
      <item type="scripts" alias="s" default="1"/>
    </aliases>
  </Config>
  <TestList type="scripts">
    <test name="Test run script" ignore_failed="1">
      <check test="./test-script.sh != 10" params="--param1 3 --param2"/>
      <check test="./test-script.sh = 100" params="param1=3,param2=4"/>
      <check test="./test-script-negative-number.sh = -20" show_output="1"/>
      <check test="./test-script-longtime.sh = 100" timeout="3000"/>
      <check test="./test-script-error.sh > 10"/>
      <check test="./non-existent-script.sh > 10"/>
    </test>
  </TestList>
</TestScenario>
```

and run it in --check-scenario mode:

```sh
uniset2-testsuite-xmlplayer --testfile tests-scripts-interface.xml --log-show-actions --log-show-tests --check-scenario
```
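The plugin's validate_parameter() relies on an is_executable() helper whose implementation is not shown in the article (it presumably comes in through the testsuite's own modules). Here is a hypothetical Python 3 equivalent: a name containing a path separator must be an existing file with execute permission, while a bare name is looked up in PATH, the way the shell would resolve it.

```python
import os
import shutil


def is_executable(scriptname):
    """True if scriptname is an executable file: an explicit path, or a command found in PATH."""
    if os.sep in scriptname:
        return os.path.isfile(scriptname) and os.access(scriptname, os.X_OK)
    return shutil.which(scriptname) is not None
```

With this helper, the non-existent ./non-existent-script.sh in the scenario above would be reported by validate_parameter() without anything being run.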


UTestInterfaceScripts.py

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
import os
import sys
import re
import subprocess
from UTestInterface import *
import uniset2.UGlobal as uglobal


class UTestInterfaceScripts(UTestInterface):
    """
    Test interface that runs scripts as checks.

    Test format:
        <check test="testscript=VALUE" params="param1 param2 param3.." show_output="1" .../>
    Result: the script must print (to stdout) the line
        TEST_SCRIPT_RESULT: VALUE
    Errors: a return code != 0 means an error. On success the script must return 0.
    Check parameters:
        show_output=1 - print the script's stdout to the screen.
    Global parameters (in <Config>):
        max_output_read="value" - how many (first) bytes of output to read to get the result. Default: 1000
    """

    def __init__(self, **kwargs):
        """
        :param kwargs: interface parameters (xmlConfNode)
        """
        UTestInterface.__init__(self, 'scripts', **kwargs)

        self.max_read = 1000

        if 'xmlConfNode' in kwargs:
            xmlConfNode = kwargs['xmlConfNode']
            if not xmlConfNode:
                raise TestSuiteValidateError("(scripts:init): Unknown confnode")

            m_read = uglobal.to_int(xmlConfNode.prop("max_output_read"))
            if m_read > 0:
                self.max_read = m_read

        self.re_result = re.compile(r'TEST_SCRIPT_RESULT: ([-]{0,}\d{1,})')

    @staticmethod
    def parse_name(name, context):
        """
        Parse the test name. Format:
            <check test="scriptname=XXX" params="param1 param2 param3" .../>
        :param name: test name (scriptname)
        :param context: test context
        :return: [scriptname, parameters]
        """
        if 'xmlnode' in context:
            xmlnode = context['xmlnode']
            return [name, uglobal.to_str(xmlnode.prop("params"))]

        return [name, ""]

    def validate_configuration(self, context):
        return [True, ""]

    def validate_parameter(self, name, context):
        """
        :param name: scriptname
        :param context: ...
        :return: [Result, errors]
        """
        err = []
        xmlnode = None
        if 'xmlnode' in context:
            xmlnode = context['xmlnode']

        scriptname, params = self.parse_name(name, context)

        if not scriptname:
            err.append("(scripts:validate): ERROR: Unknown scriptname for %s" % str(xmlnode))

        if not is_executable(scriptname):
            err.append("(scripts:validate): ERROR: '%s' not exist" % scriptname)

        if len(err) > 0:
            return [False, ', '.join(err)]

        return [True, ""]

    def get_value(self, name, context):
        xmlnode = None
        if 'xmlnode' in context:
            xmlnode = context['xmlnode']

        if not xmlnode:
            raise TestSuiteException("(scripts:get_value): Unknown xmlnode for '%s'" % name)

        scriptname, params = self.parse_name(name, context)

        if len(scriptname) == 0:
            raise TestSuiteException("(scripts:get_value): Unknown script name for '%s'" % name)

        test_env = None
        if 'environment' in context:
            test_env = context['environment']

        s_out = ''
        s_err = ''
        cmd = scriptname + " " + params

        try:
            p = subprocess.Popen(cmd, stdout=subprocess.PIPE, stderr=subprocess.PIPE,
                                 env=test_env, close_fds=True, shell=True)
            s_out = p.stdout.read(self.max_read)
            s_err = p.stderr.read(self.max_read)
            retcode = p.wait()
            if retcode != 0:
                emessage = "SCRIPT RETCODE(%d) != 0. stderr: %s" % (retcode, s_err.replace("\n", " "))
                raise TestSuiteException("(scripts:get_value): %s" % emessage)

        except subprocess.CalledProcessError, e:
            raise TestSuiteException("(scripts:get_value): %s for %s" % (e.message, name))

        if xmlnode.prop("show_output"):
            print s_out

        ret = self.re_result.findall(s_out)
        if not ret or len(ret) == 0:
            return None

        lst = ret[0]
        if not lst or len(lst) < 1:
            return None

        return uglobal.to_int(lst)

    def set_value(self, name, value, context):
        raise TestSuiteException(
            "(scripts:set_value): Function 'set' is not supported. Use <action script='..'> for %s" % name)


def uts_create_from_args(**kwargs):
    """
    :param kwargs: interface parameters
    :return: UTestInterface
    """
    return UTestInterfaceScripts(**kwargs)


def uts_create_from_xml(xmlConfNode):
    """
    :param xmlConfNode: xml node with the interface configuration
    :return: UTestInterface
    """
    return UTestInterfaceScripts(xmlConfNode=xmlConfNode)


def uts_plugin_name():
    return "scripts"
```

Summary

uniset2-testsuite , , « — ». :

- ,

- (

, , ( REST API RS485). .

- python . C++. , python - . .

Related Links:

Source: https://habr.com/ru/post/323444/
