Admin's notes: the splendor and misery of blade systems

In these notes we usually skip enterprise technologies, because they rarely apply to smaller projects. Today's article is an exception: it is about modular systems, or "blades".

Few architectural ideas in the IT world are surrounded by such a halo of "incredible coolness" and a comparable set of myths. So I will not complicate things further and will simply talk about the features of such systems and where they are applicable in practice.

Lego for engineers

A blade server is almost an ordinary server, with the familiar motherboard, RAM, processors and many auxiliary systems and adapters. The "almost" is that such a server is not designed to work on its own: it comes in a special compact form factor for installation in a dedicated chassis.

The chassis, or "basket", is nothing more than a big box with slots for servers and additional modules. All servers and components are connected through a large switching board (the backplane) and together form a blade system.

If you take the whole system apart, you end up with the following set on the table:

- Blade servers (blades): servers without power supplies, fans, network connectors or control modules;
- Chassis: the enclosure and the backplane;
- Power and cooling systems for all system components;
- Switching devices for communication with the outside world;
- Control modules (various variations on IPMI).

Compared with an ordinary server rack, all of this is compact (usually 6-10U) and highly reliable, since every component can be made redundant. This, by the way, is the source of one of the myths: a dozen blades do not merge into one big server. They are just a dozen servers sharing common infrastructure.

That said, HPE does have a solution that resembles traditional blade servers: HPE Superdome. Its blades are processor modules with RAM, and in such a solution the entire system really is one high-performance server.

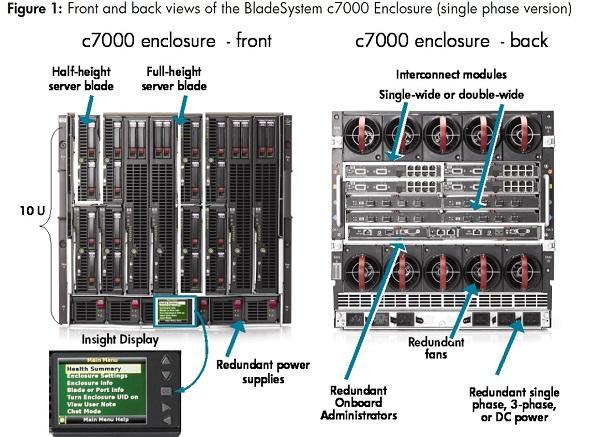

The nuances of the architectures from different blade system vendors have already been discussed on Habr (the article is old, but its fundamentals are still relevant), so I will use an HPE blade system, the BladeSystem c7000, for illustration.

The following can serve as blades:

- Ordinary servers, such as the HPE ProLiant BL460c Gen9 (the counterpart of the rack-mount HPE ProLiant DL360 Gen9);
- Disk arrays, for example the HPE StorageWorks D2200sb, which holds up to twelve 2.5-inch disks. This is an easy and natural way to give several servers shared DAS storage;
- SAN switches for access to external storage systems, and full-featured NAS servers, for example the HPE StorageWorks X1800sb;
- Tape devices.

The picture below shows a complete HPE BladeSystem c7000 system. The layout of the components is self-explanatory; note only the Interconnect modules section. Each row holds a redundant pair of network devices or pass-thru modules that simply bring the servers' network interfaces out of the chassis.

The compact HPE ProLiant BL460c Gen8 blade fits only two 2.5-inch disks. For a more elegant setup, you can skip local disks and boot over the network, from a SAN or via PXE.

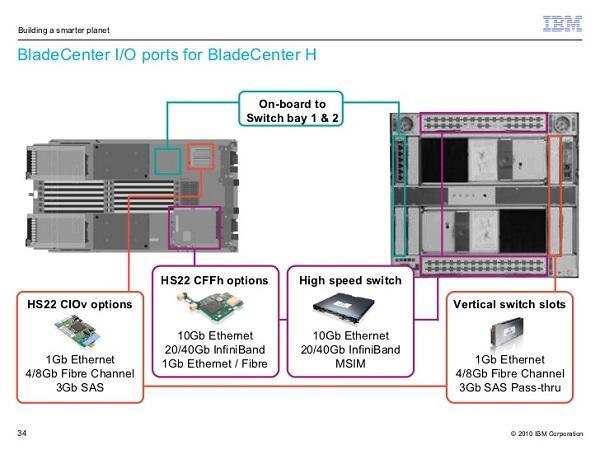

Below is a more compact blade system from IBM. The general principles are the same, although the components are laid out differently:

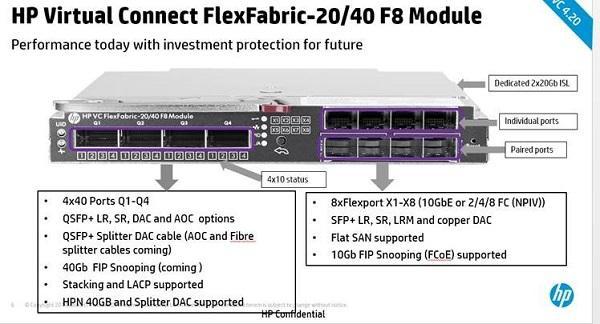

The most interesting part of blade systems is, in my opinion, the networking. With fashionable converged switches you can work wonders with the internal network of a blade system.

Some network and enterprise magic

The network modules can be special Ethernet or SAS switches, or devices that can do both. Of course, an ordinary switch cannot be installed in a blade system, but compatible models are made by familiar brands, for example the "big three": HPE, Cisco and Brocade. In the simplest case these are plain network access modules that bring all 16 blades out through 16 Ethernet ports, such as the HPE Pass-Thru.

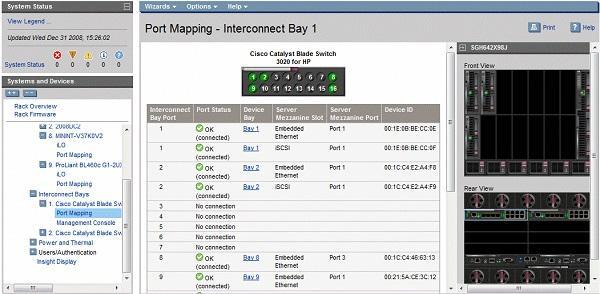

Such a module will not reduce the number of network cables, but it lets you connect to the corporate LAN with minimal investment. If you instead use an inexpensive Cisco Catalyst 3020 with 8 1GbE Ethernet ports and 4 1GbE SFP ports, only a few shared chassis ports need to be connected to the common network.

In terms of capabilities, such network devices are no different from ordinary ones. The HPE Virtual Connect (VC) modules look much more interesting. Their main feature is the ability to create several separate networks with flexible allocation of LAN and SAN bandwidth. For example, you can bring a 10GbE link into the chassis and "carve" six gigabit LANs and one 4Gb SAN out of it.

At the same time, VC supports up to four connections to each server, which leaves some room for creativity and cluster building. Other manufacturers have similar solutions; Lenovo's counterpart is called IBM BladeCenter Virtual Fabric.
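
The bandwidth split described above can be sketched as a simple budget check. This is not the Virtual Connect API: the `Partition` type and the `validate_split` helper are hypothetical names introduced only for illustration, and the sketch reads the example as six 1 Gb LANs plus one 4 Gb SAN sharing a single 10GbE uplink.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    """One logical network carved out of a shared uplink (hypothetical model)."""
    name: str
    kind: str    # "LAN" or "SAN"
    gbps: float  # bandwidth reserved for this partition


def validate_split(uplink_gbps: float, partitions: list[Partition]) -> float:
    """Return the unallocated bandwidth, or raise if the uplink is oversubscribed."""
    used = sum(p.gbps for p in partitions)
    if used > uplink_gbps:
        raise ValueError(f"oversubscribed: {used} Gb requested on a {uplink_gbps} Gb uplink")
    return uplink_gbps - used


# Six gigabit LANs and one 4 Gb SAN on a single 10GbE uplink.
partitions = [Partition(f"lan{i}", "LAN", 1.0) for i in range(1, 7)]
partitions.append(Partition("san1", "SAN", 4.0))

remaining = validate_split(10.0, partitions)
print(f"allocated {10.0 - remaining} Gb, {remaining} Gb left")
```

In this reading the uplink is fully used, so adding a seventh LAN would make `validate_split` raise.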

Contrary to popular belief, the blades themselves are no different from ordinary servers and offer no particular advantages for virtualization. Interesting possibilities appear only with special, vendor-locked technologies such as HPE VC or Hitachi LPAR.

Multiple IPMI from one console

To configure blade servers you can use their hardware BMC control modules (iLO in HPE's case). Administration and remote connection work much the same as on a regular server, but the Onboard Administrator (OA) control modules can also back each other up and provide a single entry point for managing every device in the chassis.

An OA module comes either with a built-in KVM console for connecting an external monitor, or with a network interface only.

In general, administration through OA looks like this:

You connect to the web interface of the Onboard Administrator console. Here you can see the status of all subsystems, disable or remove a blade, update the firmware, and so on.

If you need to get to the console of a specific server, select it in the equipment list and connect to its own iLO. There you get the usual IPMI toolset, including image mounting and reboot.

Better still, connect the blade system to external management software such as HPE Insight Control or its successor, OneView. Then you can set up automatic operating system installation on a new blade and load distribution across the cluster.
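
The per-server step above can also be scripted from the command line. The following is a minimal sketch that only builds `ipmitool` command lines and does not execute them. It assumes IPMI over LAN is enabled on each blade's BMC; the IP addresses, the `admin` user name and the `ipmi_command` helper are hypothetical. The `-E` flag tells `ipmitool` to read the password from the `IPMI_PASSWORD` environment variable instead of the command line.

```python
import shlex

# Subcommands deliberately limited to a small allow-list.
ACTIONS = {
    "status": ["chassis", "power", "status"],
    "cycle": ["chassis", "power", "cycle"],
    "events": ["sel", "list"],
}


def ipmi_command(host: str, user: str, action: str) -> list[str]:
    """Build an ipmitool invocation for one BMC; nothing is executed here."""
    if action not in ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *ACTIONS[action]]


# Hypothetical BMC addresses of three blades in one chassis.
blades = [f"10.0.0.{n}" for n in range(11, 14)]

for host in blades:
    print(shlex.join(ipmi_command(host, "admin", "status")))
```

To actually run a command, the returned list can be passed to `subprocess.run`. It is worth checking on one blade by hand before looping over the whole chassis, especially for `cycle`.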

Speaking of reliability, blades break down just like ordinary servers. So when ordering a configuration, do not skimp on component redundancy, and study the firmware instructions carefully. A hung Onboard Administrator is merely an inconvenience for the administrator, but a botched firmware update across all elements of the blade system can leave it inoperable.

But with all this magic we have completely forgotten about mundane matters.

Do you need blades in your company?

High density, few cables and single-point management are all well and good, but let's estimate what the solution costs. Suppose an abstract organization needs to bring up 10 identical servers at once. Let's compare the cost of blades with traditional HPE ProLiant DL rack-mount models. To keep the estimate simple, I leave out the cost of hard drives and network equipment.

Blades:

| Name | Model | Quantity | Cost |
| --- | --- | --- | --- |
| Chassis | HPE BladeSystem c7000 | 1 | 603 125 ₽ |
| Power supply | 2400W | 4 | - |
| Control module | HPE Onboard Administrator | 1 | - |
| Network module | HPE Pass-Thru | 2 | 211 250 ₽ |
| Blade | HPE ProLiant BL460c Gen8 | 10 | 4 491 900 ₽ |
| CPU | Intel Xeon E5-2620 | 20 | - |
| RAM | 8 GB ECC Reg | 40 | - |
| Total | | | 5 306 275 ₽ |

Prices as of 06.02.2017; source: STSS.

The rack-mount equivalent, HP ProLiant DL360p Gen8:

| Name | Model | Quantity | Cost |
| --- | --- | --- | --- |
| Platform | HP ProLiant DL360p Gen8 | 10 | 3 469 410 ₽ |
| CPU | Intel Xeon E5-2620 | 20 | - |
| Power supply | 460W | 20 | - |
| RAM | 8 GB ECC Reg | 40 | - |
| Total | | | 3 469 410 ₽ |

Prices as of 06.02.2017; source: STSS.

The difference is almost two million rubles, and I have not even budgeted for full fault tolerance: a second control module and, ideally, another chassis. On top of that, we lose convenient network switching, because the cheapest pass-thru modules merely bring the servers' network interfaces out of the chassis. Virtual Connect would be more appropriate here, but the price...

So there are no straightforward savings to be had. Let's move on to the other pros and cons of blades.
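
The arithmetic behind this comparison can be checked with a short sketch. The prices are the priced rows of the two tables above (rubles, as of 06.02.2017); the variable names are only illustrative, and rows shown with "-" contribute nothing to the totals.

```python
SERVERS = 10

# Priced rows of the blade configuration, in rubles.
blade_items = {
    "HPE BladeSystem c7000 chassis": 603_125,
    "HPE Pass-Thru x2": 211_250,
    "HPE ProLiant BL460c Gen8 x10": 4_491_900,
}

# Priced rows of the rack-mount configuration, in rubles.
rack_items = {
    "HP ProLiant DL360p Gen8 x10": 3_469_410,
}

blade_total = sum(blade_items.values())
rack_total = sum(rack_items.values())
difference = blade_total - rack_total

print(f"blades: {blade_total:,} total, {blade_total / SERVERS:,.1f} per server")
print(f"rack:   {rack_total:,} total, {rack_total / SERVERS:,.1f} per server")
print(f"blade premium: {difference:,} ({difference / rack_total:.1%})")
```

The shared infrastructure (chassis plus pass-thru modules) accounts for 814 375 ₽ of the gap. That part is spread more thinly if all 16 slots are filled, but it does not disappear.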

Some more arguments

The obvious advantages of blade systems include:

- Installation density. If you need a great many servers in one data center, blades look like salvation;
- Neat and compact cabling, thanks to flexible internal switching between blades;
- Ease of management: the entire chassis can be controlled from one console, without installing additional software;
- Easy installation of new blades while there is still room in the chassis, just like hot-swap disks in a drive cage. In theory, as soon as a blade is inserted you can boot a preconfigured system over PXE and allocate its resources to the cluster;
- Reliability. Virtually all components can be made redundant.

But not without cons:

- Limited blade configurations. If you need a server with four processors and a large number of local drives (for example, NVMe SSDs), a blade that large takes up a quarter of the chassis capacity, which defeats the purpose of a high-density chassis;
- Reliability. Despite the duplication of components, there is a single point of failure: the chassis backplane. If it fails, all blades may go down;
- Impossibility of splitting. If you need to build a geographically distributed cluster, you cannot just pull out half of the servers and move them; you will need another chassis;
- Cost. The chassis alone costs as much as three blades, and a blade costs as much as a full-fledged server.
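
The density argument, and the way a large blade undermines it, can be put into numbers with a rough sketch. The assumptions are mine: a 42U rack fully available for servers (no switches or PDUs), a 10U c7000 chassis holding 16 compact blades, a 1U rack server for comparison, and a 2U form factor for a four-processor rack server. The "quarter of the chassis" figure for a large blade is taken from the list above.

```python
def servers_per_rack(rack_u: int, unit_u: int, servers_per_unit: int) -> int:
    """Number of whole units that fit in the rack, times servers per unit."""
    return (rack_u // unit_u) * servers_per_unit


RACK_U = 42  # assumption: a common full-height rack, used only for servers

rack_1u = servers_per_rack(RACK_U, unit_u=1, servers_per_unit=1)
compact_blades = servers_per_rack(RACK_U, unit_u=10, servers_per_unit=16)

# A large blade occupying a quarter of the chassis leaves 4 servers per chassis.
large_blades = servers_per_rack(RACK_U, unit_u=10, servers_per_unit=4)
rack_2u = servers_per_rack(RACK_U, unit_u=2, servers_per_unit=1)  # assumed 4-socket rack server

print(f"1U rack servers: {rack_1u}, compact blades: {compact_blades}")
print(f"2U rack servers: {rack_2u}, large blades: {large_blades}")
```

Under these assumptions compact blades win on density, while large blades lose to ordinary rack servers.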

So what should you choose?

Blades fit naturally into really large data centers, such as those of hosting companies. In such scenarios scaling speed and maximum equipment density come first, and the savings on space and administration may well pay for the chassis and all sorts of Virtual Connect.

In other cases ordinary rack servers look like the more reasonable and universal choice. Besides, the widespread adoption of fast virtualization systems has further reduced the popularity of blades, since most applications can just as well be consolidated onto virtual servers. Needless to say, managing virtual machines is even more convenient than managing blades.

If you have used blade systems in companies that are not the largest, share your impressions of administering them.


Source: https://habr.com/ru/post/323386/
