Pix2Pix: How the Cat Generator Works

You have probably already seen the super-realistic cats that you can draw here.

Let's look at what is inside.

Disclaimer: this post is based on edited chat logs from closedcircles.com, hence the style of presentation and the clarifying questions.

All of this is an implementation of the paper Image-to-Image Translation with Conditional Adversarial Networks from Berkeley AI Research.

So how does this all work?

In the paper, the authors tackle the problem of translating one picture into another in such a way that a person does not have to hand-design a loss function.

One of the main problems with neural networks that generate images is that if you use a plain per-pixel average as the loss, for example L1 or L2 (mean squared error), the network tends to average over all possible outputs. If there is some uncertainty in the final picture (for example, an edge may be at different positions, or a color may fall anywhere in a certain range), then the optimal result from the point of view of L2 loss is something in between all the possible cases, not any specific one of them.

As a result, the output pictures come out as very blurry blobs.
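A minimal sketch of this averaging effect, using made-up toy data (two equally likely 1-D "images" with a sharp edge at different positions). The names `target_a`, `target_b`, and `expected_l2` are invented for the illustration and do not come from the paper.

```python
import numpy as np

# Two equally likely "ground truth" outputs for the same input (toy data):
# a sharp edge starting at pixel 2, or a sharp edge starting at pixel 5.
target_a = np.array([0, 0, 1, 1, 1, 1, 1, 1], dtype=float)
target_b = np.array([0, 0, 0, 0, 0, 1, 1, 1], dtype=float)
targets = np.stack([target_a, target_b])

def expected_l2(prediction):
    """Mean squared error averaged over both possible targets."""
    return float(np.mean((targets - prediction) ** 2))

# The prediction that minimizes the expected L2 loss is the per-pixel mean.
blurry = targets.mean(axis=0)

print(blurry)                 # pixels 2..4 end up at 0.5: a smeared edge
print(expected_l2(blurry))    # lower than for either sharp answer below
print(expected_l2(target_a))
print(expected_l2(target_b))
```

Either sharp answer is a perfectly plausible picture, yet the smeared one scores better, which is exactly the blur described above.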

For various individual tasks, people have invented other loss functions to express some structure that the resulting picture should have (for segmentation, for example, they tried adding Conditional Random Fields, and so on), but all of this helps only incrementally and depends heavily on the task.

So, following recent trends, the paper sticks a GAN (Generative Adversarial Network) onto L1 as a kind of additional loss. (You can read about GANs on Habr here and here.)

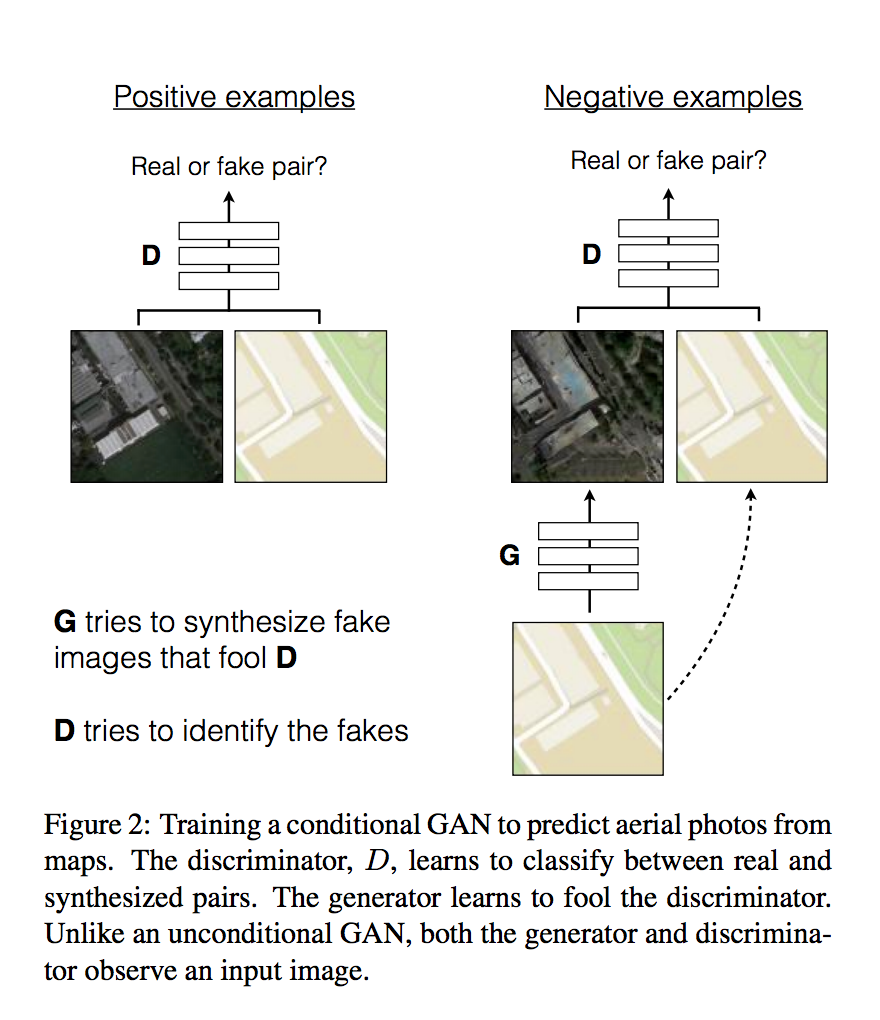

Their general scheme is as follows.

The input image is fed to the generator as an additional condition on what needs to be generated. Based on it, the generator must produce the output picture.

The discriminator receives both the input image and what the generator produced (or, for positive examples, the real pair from the training dataset) and must decide whether the output picture is real or generated. So if the generator produces a picture unrelated to the input, the discriminator should detect that and reject it.

The final generator is the result of iteratively training this pair of networks.

In general, this is the standard Conditional GAN approach: a GAN variant in which the model must generate images that correspond to an additional input, usually a class vector.

Only here the input is a picture instead of a class vector, and the total loss is GAN loss + L1.
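A rough sketch of how "GAN loss + L1" could be computed, written with plain NumPy rather than a deep learning framework. The function names are invented for the illustration. The L1 weight of 100 is the value I recall from the paper, and the "label the fakes as real" form of the generator's GAN term is a common practical choice, so treat both as assumptions.

```python
import numpy as np

def bce(prob, label):
    """Binary cross-entropy for discriminator probabilities in (0, 1)."""
    prob = np.clip(prob, 1e-7, 1 - 1e-7)
    return float(np.mean(-(label * np.log(prob) + (1 - label) * np.log(1 - prob))))

def generator_loss(d_prob_on_fake, fake, target, l1_weight=100.0):
    """GAN term (fool the discriminator) plus weighted L1 distance to the target."""
    gan_term = bce(d_prob_on_fake, 1.0)   # the generator wants D to say "real"
    l1_term = float(np.mean(np.abs(fake - target)))
    return gan_term + l1_weight * l1_term

def discriminator_loss(d_prob_on_real, d_prob_on_fake):
    """D should output 1 for real pairs and 0 for generated pairs."""
    return bce(d_prob_on_real, 1.0) + bce(d_prob_on_fake, 0.0)

# Toy numbers: D is unsure (0.5) and the generated image is slightly off.
fake = np.full((4, 4), 0.6)
target = np.full((4, 4), 0.5)
print(generator_loss(np.array([0.5]), fake, target))
```

The L1 term keeps the output close to the target on average, while the GAN term penalizes outputs that the discriminator can tell apart from real ones.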

What do you mean by "stick a GAN" in the context of losses? As in, add a generator and solve a minimax problem?

Well yes.
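Written out, the minimax problem looks roughly like this. The notation is my own shorthand: $x$ is the input picture, $y$ is the real output picture paired with it, $G$ is the generator, $D$ is the discriminator, and $\lambda$ is the weight of the L1 term. The noise vector is omitted, since the generator here does not receive one.

```latex
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\big[\log D(x, y)\big]
                         + \mathbb{E}_{x}\big[\log\big(1 - D(x, G(x))\big)\big]

\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y}\big[\lVert y - G(x) \rVert_1\big]

G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\,\mathcal{L}_{L1}(G)
```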

At a high level, that's all!

What are the interesting details?

- Unlike the classical approach to GANs, no noise vector is passed to the generator at all. All the variety comes only from the fact that the network has dropout, and they do not turn it off after training.

- The network architecture is U-Net, a fairly new segmentation architecture that has many skip connections from the encoder to the decoder (here is a short description).

Here is a picture that shows that both the GAN loss and U-Net help.

Here, by the way, the original problem with using only L1 loss is clearly visible: even a powerful model generates blurry blobs in order to minimize the average deviation.

- The discriminator looks at 70x70 patches rather than the whole picture (the paper calls this PatchGAN) and is applied to large pictures fully convolutionally. It's funny that 70x70 gives better results on average than judging the whole 256x256 picture at once.
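To see where a number like 70 can come from, here is a small receptive-field calculation. The layer list is an assumption on my part (five 4x4 convolutions with strides 2, 2, 2, 1, 1 and padding 1), not something stated in this post, and the helper names are invented for the illustration.

```python
# Assumed layer list: (kernel_size, stride) for each convolution,
# here five 4x4 convolutions with strides 2, 2, 2, 1, 1.
LAYERS = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]

def receptive_field(layers):
    """Side length of the input patch that influences one output value."""
    rf = 1
    for kernel, stride in reversed(layers):
        rf = (rf - 1) * stride + kernel
    return rf

def output_size(input_size, layers, padding=1):
    """Side length of the output map for a square input (padding is assumed)."""
    size = input_size
    for kernel, stride in layers:
        size = (size + 2 * padding - kernel) // stride + 1
    return size

print(receptive_field(LAYERS))   # 70
print(output_size(256, LAYERS))  # 30
```

Under these assumptions, each value in the 30x30 output map is a real-or-generated verdict for one 70x70 patch of the 256x256 picture, and the same network can slide over larger pictures unchanged.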

And where are the cats?!

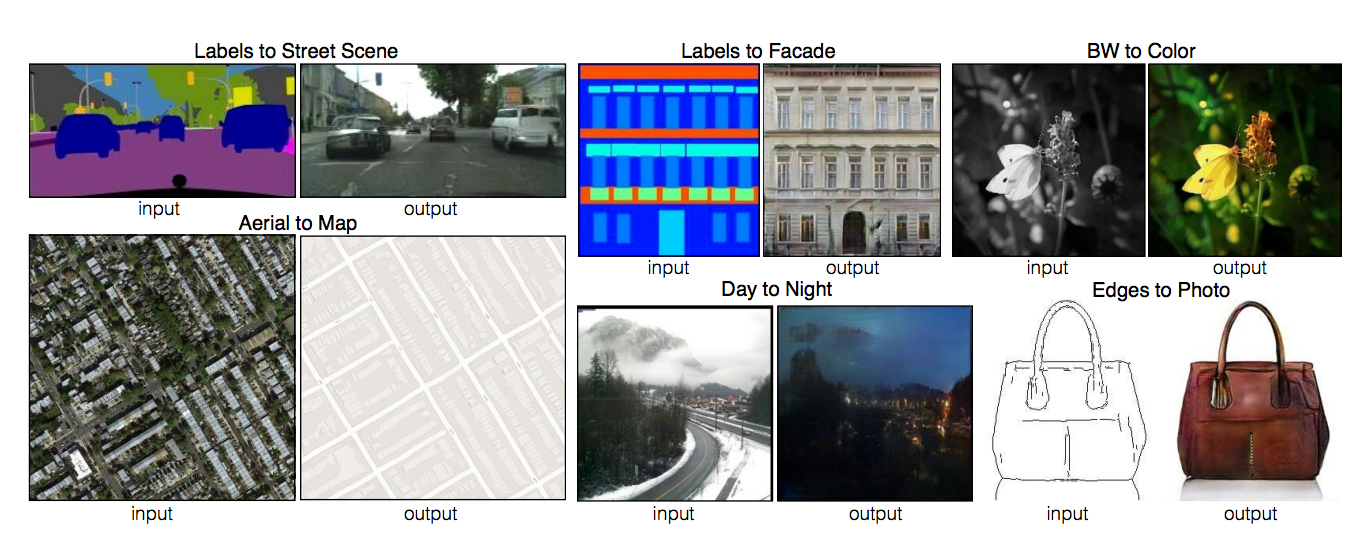

The result is a system that can be trained on arbitrary inputs and outputs, even if they come from very different tasks.

From segmentation maps to photos, from daytime photos to nighttime ones, from black and white to color, and so on.

And the last example is from edges to a picture. The edges in the picture are generated by a standard computer vision algorithm.
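As a rough illustration of building such edge/picture pairs, here is a NumPy sketch. The Sobel filter with a threshold is a stand-in I picked for the example, not necessarily the edge detector used in the paper, and `sobel_edges` and `make_pairs` are hypothetical helper names.

```python
import numpy as np

def sobel_edges(gray, threshold=0.25):
    """Binary edge map from a 2-D grayscale image with values in [0, 1]."""
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
    ky = kx.T
    padded = np.pad(gray, 1, mode="edge")
    h, w = gray.shape
    gx = np.zeros((h, w))
    gy = np.zeros((h, w))
    # Slide the 3x3 kernels over the image by summing shifted copies.
    for dy in range(3):
        for dx in range(3):
            window = padded[dy:dy + h, dx:dx + w]
            gx += kx[dy, dx] * window
            gy += ky[dy, dx] * window
    magnitude = np.hypot(gx, gy)
    peak = magnitude.max()
    if peak > 0:
        magnitude = magnitude / peak   # normalize so the threshold is relative
    return (magnitude > threshold).astype(np.uint8)

def make_pairs(photos):
    """Turn a list of grayscale photos into (edges, photo) training pairs."""
    return [(sobel_edges(photo), photo) for photo in photos]

# Toy "photo": a bright square on a dark background.
photo = np.zeros((8, 8))
photo[2:6, 2:6] = 1.0
edges, target = make_pairs([photo])[0]
print(edges)
```

The edge map plays the role of the input and the original photo is the target, so no manual labeling is needed.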

This means that you can simply take a set of pictures, drive off edge detection, and here on these pairs

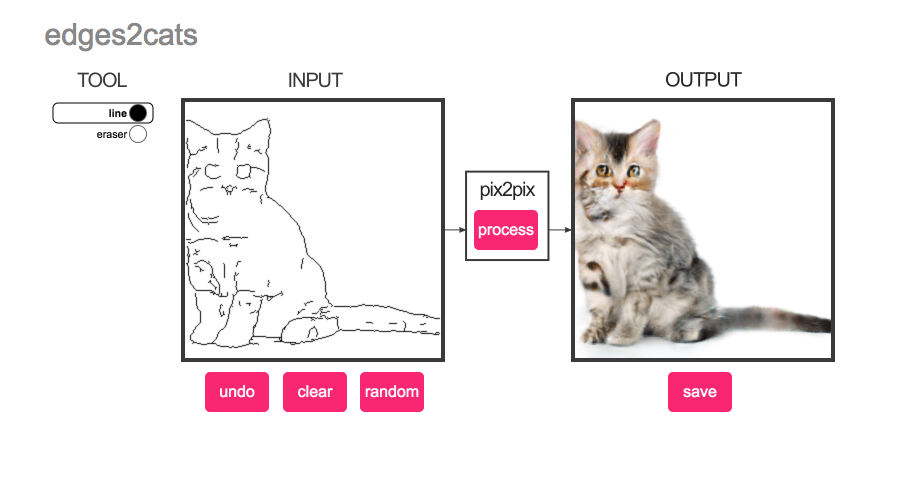

to train. It is possible and on cats:

After that, the model can generate something for any sketch that people draw.

(By the way, send in the ones you found memorable.)

Thus, humanity's shortage of bizarre cats has been eliminated!

Overall, this work is another example of how GANs have been taking off since last year. They turn out to be a very powerful and flexible tool that expresses "I want it to be indistinguishable from the real thing, although I don't know what exactly that means" as an optimization goal.

I hope someone will write a full review of everything else that is happening in the field! It is all very cool.

Thanks for your attention.


Source: https://habr.com/ru/post/323374/
