REST API Design for High Performance Systems

Alexander Lebedev expresses all the non-triviality of the REST API design. This is a transcript of the Highload ++ 2016 report.

Hello everybody!

Raise the hand of those who are frontend developer in this room? Who is a mobile developer? Who is the backend developer?Backend developers are most in this room now, which is joyful. Secondly, almost everyone woke up. Wonderful news.

')

A few words about yourself

Who am i? What do you do?

I am a frontend team lead of the company "New Cloud Technologies". For the last 5 years, I have written a web front-end that works with the REST API and which should work fast for the user. I want to share experiences about what API should be, which allow to achieve this.

Despite the fact that I will be telling from the front end, the principles - they are common more or less for everyone. I hope both backend developers and mobile application developers will also find useful things in this story.

A few words on why it is important to have a good API for fast applications.

First is performance from the client’s point of view. That is, not from the point of view of supporting us with one million customers who came at the same time — but from the point of view that for each of them the application worked quickly. A little later, I will illustrate a case in this report, when changes in the API made it possible to speed up the data acquisition on the client about 20 times and allowed the unfortunate users to turn into satisfied users.

Secondly - in my practice there was a case when, after a very short period of time, I had to write the same practically functional to two different systems. One had a convenient API, another had an awkward API. How do you think the complexity of writing this functionality was different? For the first version - 4 times. That is, with a convenient API, we wrote the first version in two weeks, with an uncomfortable one in two months. The cost of ownership, the cost of support, the cost of adding something new - it also differed significantly.

If you do not design your REST API, then you can’t guarantee that you will not be on the wrong side of this range. Therefore, I want the approach to the REST API to be reasonable.

Today I will talk about simple things, because they are more or less universal - they apply to almost all projects. How to properly complex cases require a separate analysis, they are specific to each project, and therefore not so interesting in terms of the exchange of experience.

Simple things are interesting to almost everyone.

Let's start by looking at the situation as a whole. In what environment is there a REST API that we need to do well?

In cases with which I have worked, the following generalization can be made:

We have a certain backend - conditionally, from the point of view of the frontend, it is one. In fact, everything is difficult there. Probably, everyone saw a caricature, where a mermaid acts as a front end, and a horrible sea monster as a backend. This may also work about. It is important that we have a single backend in terms of the front - we have a single API, there are several clients for it.

A fairly typical case: we have a web, and we have the two most popular mobile platforms. What can you immediately learn from this picture? One backend, several clients. This means that many times in the API design code there will be choices where to take the complexity. Either a little more work to do on the back end, or a little more work to do on the front.

It should be understood that in such a situation, the work at the front will be multiplied by 3. Each product will have to contain the same logic. It will also be necessary to apply additional efforts not even in the course of development, but in the course of further testing and maintenance, so that this logic does not disperse and remains the same on all clients.

It should be understood that the assignment of some kind of logic to the frontend is expensive and should have its own reasons, it should not be done "just like that." I would also like to note that the API in this picture is also single, not without purpose.

As a rule, if we say that we want to develop efficiently and we want to make the application fast, we really want to, despite the fact that there can be a zoo of systems on the backend (enterprise integration of half a dozen systems with different protocols, with different APIs). From the point of view of communicating with the front-end, it should be a single API, preferably built on the same principles, and which can be changed more or less completely.

If this is not the case, then it becomes much more difficult to talk about some meaningful API design, the number of decisions increases, and we have to say: “well, we have to, these are limitations.” It would be desirable, that in this situation it was not necessary to fall. It would be desirable, that we could, at least from the point of view of what API we provide for client applications, to control it all.

One more thing - let's add people to this scheme.

What we do

It turns out we have one or several backend teams, one or several front-end teams. You have to understand that if we want to have a good API, it is in the public domain. All teams work with him. This means that API decisions should preferably be made not in one team, but jointly. The most important thing is that decisions should be made in the interests of all these teams.

If I, like the frontend lead, in order to implement some functionality on the web, invent an API and go to negotiate with the backend developers to implement it - I must take into account not only the interests of the web, not only the interests of the backend, but also the interests of mobile teams. Only in this case will not have to painfully alter.

Or I must immediately clearly understand that I am doing some kind of temporary prototype, which then will need to cover the range from which we collect the requirements, including the rest of the teams and redoing them. If I understand this, then we can proceed from the interests of a separate team. Otherwise, it is necessary to proceed from the interests of the system as a whole. All these people have to work together for the API to be good.

In such conditions, I managed to ensure that the API was convenient - that it was convenient for him to write a good frontend.

Let's talk a little bit about general ideas before we get into the specifics.

Three basic principles that you want to highlight

What I have already said a little about the human key for technical solutions is also important. Technical decisions on API should be made on the basis of their entire system, and not on the basis of the vision of any particular part of it.

Yes. This is hard. Yes, it requires some additional information when you need to do it right now, but it pays off.

Secondly, performance measurement is more important than its optimization.

What does it mean? Until you start measuring how fast your frontend is working, how fast your backend is working, it's too early for you to optimize them. Because, almost always - in this case the wrong thing will be optimized. I'll talk about a couple of such cases later.

Finally, the fact that I have already touched the edge. Complex cases - they are unique for each project.

Simple principles are more or less universal, so I want to tell you about a few simple mistakes, some of which are learned through personal experience. These errors can not repeat almost any project.

A small section on how to measure performance. In fact, this is a very serious topic - there is a lot of hotel literature on it, a lot of hotel materials, as well as specialists who deal only with this.

What am i going to tell

I'm going to tell you where to start, if you have nothing at all. Some cheap and angry recipes that will help you start changing the performance of the web front end and start measuring the performance of the backend so that you can start to speed them up. If you already have something, great. Most likely it is better than what I propose.

Backend performance.

We measure through what clients work with - through the REST API. It is desirable to recreate more or less exactly how the user works with this API.

We measure UI performance through automated frontend testing. That is, in each case we measure not some internal elements - we measure the very interface with which the next layer works.

That is, REST API for the backend and UI for the user.

What you need to understand to measure

First, the closer our environment to what will work in production - the more accurate our numbers. It is very important that we have data similar to the real ones.

It often happens when we develop, we don’t have any test data at all. The developer creates something on his machine - usually one entry to cover each scenario that was thought of during the analysis and it turns out 5-10 entries. All is well.

And then it turns out that real users work with 500 entries, in the same interface, in the same system. Brakes and problems start. Or the developer creates 500 entries, but they are all the same and created by the script. With uneven real-life data, all kinds of anomalies can begin.

If you have data from a production, the best test data - we take a sample of data from a production, we remove from there all sensitive information that is the private property of specific customers. On this we develop, on this we test. If we can do this, then this is great, because we don’t need to think about what this data should be.

If we still have an early stage in the life of the system and we can’t do that, we have to guess how the users will use the system, how much data is there, what kind of data. Typically, these proposals will be very different from real life, but they are still 100 times better than the “that grew, it grew” approach.

Then, the number of users. Here we are talking about backend, because the user is usually alone at the front end. There is a temptation when we test the performance, to let a small number of users with very intensive requests. We say: “100 thousand people will come to us, they will make new requests on average every 20 seconds.

Let's replace it with 100 bots that will make new requests every 20 milliseconds. ”

It would seem that the total load will be the same. However, it immediately turns out that in this case we almost always measure not the system performance, but the performance of the cache somewhere on the server, or the performance of the database cache, or the performance of the application server cache. We can get numbers that are much better than real life. It is desirable that the number of users - the number of simultaneous sessions, was also close to what we expect in real life.

How users work with the system

It is important that we estimate how many times each element will be used during the middle session. I had a case where we implemented a load testing script simply by writing a script that touches all the important parts of the system, once in a while. That is, a person enters, uses each important function once and exits.

And it turned out that we have the two slowest points. We began to heroically optimize. If we thought or looked at the statistics that weren't at that time, we would know that the first slowest point is used in 30% of the sessions, and the second slowest point is used in 5% of the sessions.

This, first of all, would allow us to optimize them in different ways. Where 30% is more important. And secondly, it would allow to draw attention to the fact that there is a functional, which, despite the fact that it does not work so slowly, is always used.

If a session starts with logging into the system and viewing a dashboard, then the performance of this input and the performance of this dashboard feel 100% of the users on their skin.

Therefore, even if these are not the slowest places, it still makes sense to optimize them. Therefore, it is important to think about how the system will be perceived by a typical user, and not what functionality we have or what functionality we should check for performance.

On the back end - how can we measure the performance of the REST API?

The approach that I personally used, which works quite well as a first approximation, is that we should have a use case. We have no use case, we write them.

We estimate the percentage of use of system functions in each session. We shift it to requests to the REST API. We are convinced that we have data similar to the real ones for test users. We write scripts that generate them. Then we unload all this into JMeter or any other tool that allows us to arrange trips to the REST API with a large number of concurrent users and test. As a first decision, nothing complicated.

For the frontend - all about the same degree of oak.

We type the key places in the code, calls of console functions that consider time, we output to the console, everything is enough for the developer.

He can run the debug build, see how long a particular piece of time has been displayed, how long it has been between different user actions - all is well.

If we want to do this automatically we write the Selenium script. For Selenium, the console functions are not very similar - a primitive wrapper is written there that takes exactly the time of windows.performance.now () and transfers it to some global object, from where it can be picked up later. In this case, we can drive the same scenario on different versions and track how our system performance changes over time.

Now I want to go to the main part - recipes, known from personal experience.

How to and how not to do the API

Let's start with one rather curious case: I said that at the API level, you can optimize the processing speed by 20 times.

Now I will show how exactly this scenario was arranged.

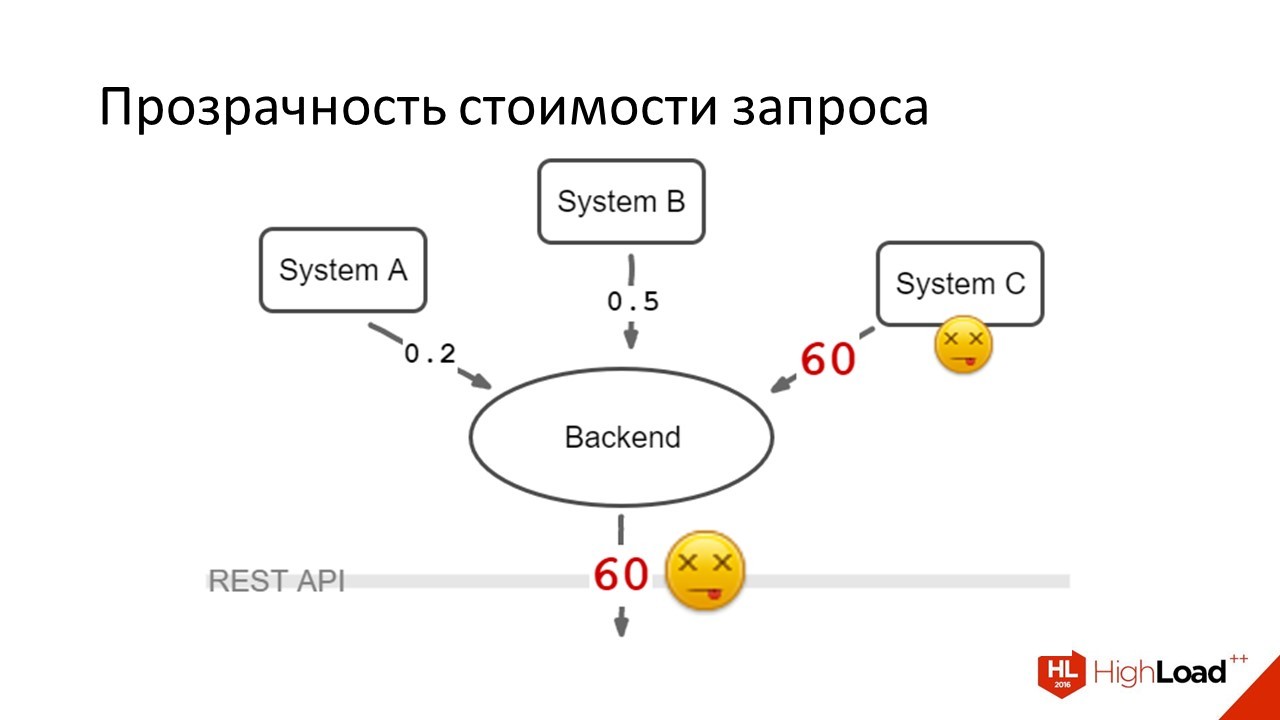

We have a backend. It aggregates the data of their three different systems and shows them to the user. In normal life, the response time looks like this:

Three parallel queries are leaving - the backend aggregates them. Time in seconds. Everywhere the answer is less than a second. 100 milliseconds are added for paralleling the aggregation request. We give the data to the frontend and everyone is happy.

It would seem that all is well, what can go wrong?

One of the systems suddenly dies - moreover, it dies in a very pernicious way, when instead of immediately answering honestly that the system is not available - it hangs on a timeout.

This happens in those days when the load on the system as a whole is when we have the most users. Users happily see that their loading spinner does not disappear, they press F5, we send new requests, everything is bad.

What we did in this case

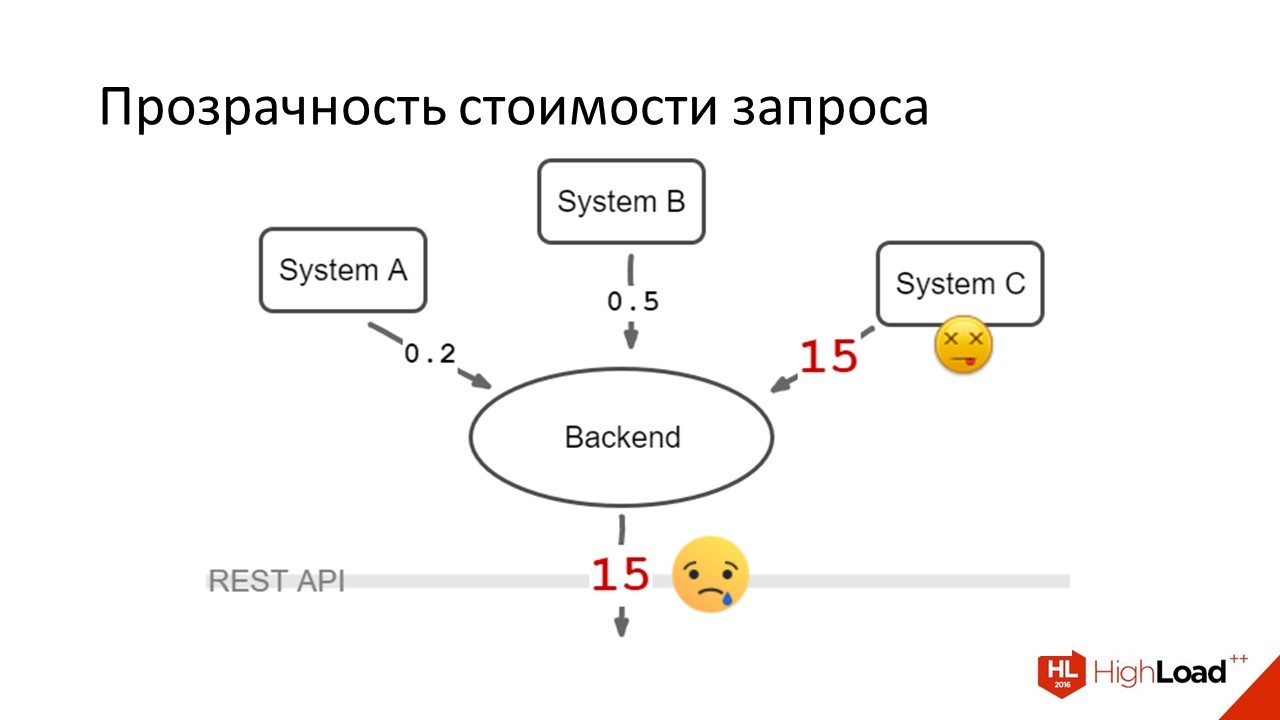

The first thing we did was lower timeouts. It became like this:

Users began to wait on average for their answers, but still they were very unhappy. Because 15 seconds, where there used to be one - it is extremely difficult. The problem was rolling. System C worked at normal speed, then lay down, then worked again.

Timeouts below this figure could not be reduced, because there were situations when she normally answered seconds 10-12. It was rare.

But if we lowered the timeout, we would start to make unhappy also the users who fell into this tail of response time.

The first decision in the forehead was made on the same day. It improved the situation, but still it remained bad. What was the right global solution?

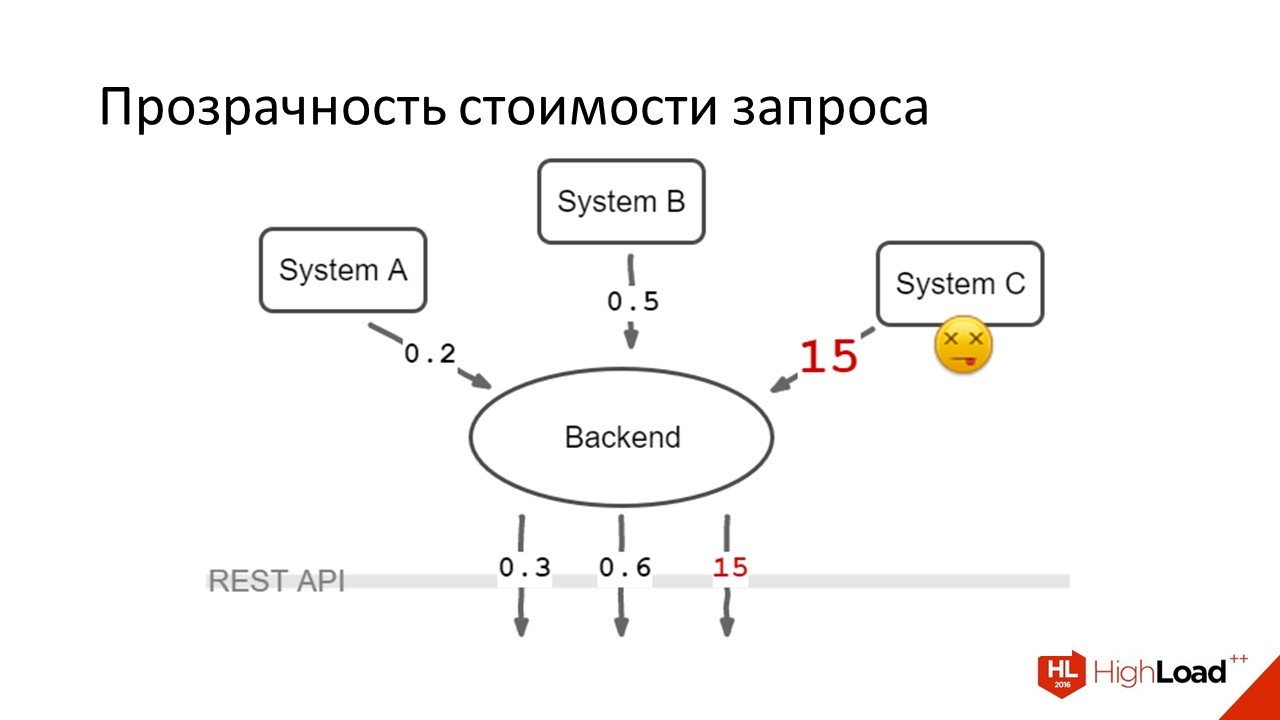

We did this:

Through the REST API, the requests were written in which it was written: the client transferred data from which system it wants to receive, three requests went in parallel, the data were aggregated on the client. It turned out that those 80% of users who had enough data from System A and B to work - they were happy. Those users (20%) to whom data from System C were also needed — they continued to suffer. But the suffering of 20% is much better than the suffering of absolutely everyone.

What ideas would you like to illustrate with this case?

This is the case when it was possible for all to accelerate very much.

First, optimization, often - this is an extra job, this is code degradation. From the point of view of complexity, it is clear that it is more difficult to aggregate data on the frontend - you need to do parallel queries, you need to handle errors, you need to propagate the business of data aggregation to all our frontends.

In the case when we work with a heavy scenario, where performance is critical for the user, this is sometimes necessary. That is why it is so important to measure performance, because if such heroism begins to be performed in any place where it will be a little slower, you can spend a lot of effort in vain. That was the first thought.

The second thought. There is a remarkable principle of encapsulation, which says that "we must hide the insignificant details behind the API and not show them to the client."

If we want a fast system, we should not encapsulate the cost of operations. In a situation where we have an abstract API that returns only all the data at once and it can only do it very expensively and we want to have a fast client. We had to break the principle of encapsulation in this place and make it so that we had the opportunity to send cheap requests and the possibility of an expensive request. These were different parameters of the same call.

I want to tell you a few thoughts about the use of Pagination, about the pros / cons, experience gained.

In the era of web 1.0, there were a lot of places where you could see interfaces that show 10-20-50 records and an endless list of pages: search engines, forums, whatever.

Unfortunately, this thing crawled into a very large number of APIs. Why "Unfortunately? Despite the fact that the solution is old and proven, everyone is more or less aware of how to work with him, there are problems that I want to talk about.

First, remember the Yandex screen, which says that there were 500 thousand results in 0.5 seconds.

Figures from the air - I'm sure Yandex is faster.

One of the cases I saw when, in order to display the first page, data sampling cost 120 milliseconds - and counting the total amount of data that fall into the search criteria cost 450 milliseconds. Attention question: for the user, the truth is that all the results are n - is it 4 times more important than the first page data?

Almost always not. It would be desirable that if the data is not very important for the user, then their receipt was either as cheap as possible, or this data should be excluded from the interface.

Here you can significantly improve this case by refusing to show the total number of results - you can write a lot of results. Suppose there was a case when we were approximately in such a situation, instead of writing that “100 results out of 1200 are shown” began to write that “the total number of results is more than 100, please specify the search criteria if you want to see something else” .

This is not the case with all systems, so measure before changing anything.

What other problems are there

From the point of view of usability, even when we don’t go to the server for the next page - all the same, in well-built user interfaces it’s not often that the user needs to go somewhere far behind the data.

For example, going to the second page of Google and Yandex, for me personally, comes only from despair, when I searched, did not find it, tried to formulate differently, did not find it either, tried the third, fourth option - and here I can already climb on the second page of these search options, but I do not remember when I last did it.

Who in the last month ever went to the second page of Google or Yandex? Quite a lot of people.

In any case, the first or second page. It may be necessary to simply show more data at once, but there is no reason why your user in a normally made interface might need to walk, let's say on page 50. There are issues with usability in pagination.

Another very painful problem. What will you do if the data set in the sample has changed between the page requests?

This is especially true for applications that do not have such a reliable network or frequent offline.

On mobile at all hard. On the web, which is honed under the possibility of working offline, is also not easy. There begins a very curly and peculiar logic on how we can invalidate the data of the previous pages - what should we do if the data of the first page is partially shown on the second, how to sew these pages. It's complicated.

There are many articles on how to do it and the solution is. I just want to warn you that if you are going in this direction - know this problem and appreciate in advance what will be its solution. It is rather unpleasant.

In all cases where I had to work, we usually either refused pagination at the design stage, or made a rough prototype, and then reworked it for something. It was possible to get away from the problem before they began to deal with it.

Let's sum up

I would like to say that pagination, especially in the form of endless scrolling, is quite appropriate in some situations. For example, we make some kind of news feed. VKontakte tape, where the user shakes it from top to bottom and reads it for entertainment purposes, without trying to find something specific - this is just one of those cases where page-by-page loading, with endless scrolling, will work wonderfully.

If you have some kind of business interface and there we are not talking about the flow of events - but this is about finding objects with which you need to interact, objects of more or less long-term living. Most likely there is another UI solution that allows you not to have these problems with the API and which allows you to make a more convenient interface.

Want to go in the direction of pagination? Think about what the choice of the total number of elements will cost and whether it is possible to do without them. And think about how to deal with synchronization issues that change between data pages.

Let's talk about the next problem - it is very simple.

We have 10 interface elements that we show to the user:

In the normal case, we will make 1 request, get all the data, show.

What happens when we don't think?

We will make 1 request for the list, then go down separately for each element of the list, this data on the client will die, we will show.

The problem is very old - it came from SQL databases, perhaps, it also existed somewhere before SQL. It continues to exist now.

There are two very simple reasons why this happens.

Either we did the API and did not think about what the interface would be and therefore did not include enough data in the API, or when we were doing the interface, we didn’t think what data the API could give us and included too much. Solutions are also pretty simple.

We either add data to the request and learn to get what we need to show with a single request, or we remove some data on individual objects either in the details page, or make some right panel where you select an element and load it to you. data.

There are no cases when it makes sense to work like this and lists to make more than one request for data.

Let's imagine that we have added a lot of data. We will immediately encounter the following problem:

We have a list. To display it, we need one amount of data, but we actually load 10 times more. Why it happens?

Very often, an API is designed according to the principle that we give the lists exactly the same object representations as with a detailed query. We have some page details of the object on 10 screens, with a bunch of attributes and we get all this in one request. In the list of objects we use the same view. What is bad?

The fact that such a request may be more expensive and the fact that we download a lot of data over the network, which is especially important for all mobile applications.

How I personally fought with it

An API is made that allows you to give two versions for each object of this type: short and full. The list request to the API gives short versions and this is enough for us to draw the interface. For the details page we request the full.

Here it is important that in each object there is a sign of whether it represents a multiple or full version. Because if we have a lot of optional fields, the business logic, by definition, “do we already have a full version of the object or need to request it” can become quite curly. This is not necessary.

You just need a field that you can check.

Let's talk about how to cache?

In general, for the ecosystem I described: backend, REST API, frontend. There are three levels of caching. Something is caching the server, something can be caching at the HTTP level, something can be cached on the client.

From the point of view of the developer's frontend, there is no server cache. It's just some kind of tricky magic that server developers use to make the backend work quickly.

Let's talk about what we can influence.

HTTP cache What is he good at?

It is good in that it is an old generally accepted standard that everyone knows, which almost all clients implement. We get a ready implementation on both the client and the server, without having to write any code. The most that can be required is to tweak something.

What is the problem

The HTTP cache is quite limited, in the sense that there are standards, and if your case is not provided for, then excuse me, you will not do it. It should be done programmatically in this case.

Another feature. Data invalidation in the HTTP cache occurs through a server request, which can somewhat slow down work, especially on mobile networks. In principle, when you have your own cache, you do not have this problem.

A number of keywords that you need to know about the operation of the HTTP cache. I don't have much time. Just say - read the specification, everything is there.

Pro client cache.He has the pros / cons exactly the opposite. This is the fastest response time, the most flexible cache - but it must be written. You need to write it in such a way that it works more or less the same way on all of your customers. In addition - there is no finished specification, where everything is written, you need to invent something.

General recommendation

If the HTTP cache is appropriate for your script, use it — if not, cache it on the client with a clear understanding that these are some additional costs.

Some simple client caching methods that you personally had to use.

Everything that can be calculated by a pure function can be cached with a set of arguments and used as a key to the calculated value. In Lodash there is a memoize () function, very convenient, I recommend it to everyone.

Next - there is an opportunity at the transport level. That is, you have some kind of library that makes REST requests and provides you with a data abstraction layer. There is an opportunity at its level to write a cache, in the background, add the data into memory or local storage, and next time you do not need to walk behind them if they are there.

I do not recommend doing this, because in my experience it turned out that we essentially duplicate the HTTP cache and write additional code ourselves, without any advantages - but having a lot of problems, bugs, incompatibility of cache versions, problems with disability and a lot of other charms . At the same time, such a cache, if it is transparent and is made at the transport level, it does not give anything relative to HTTP.

A self-made software cache that allows you to ask if it has data, allows you to disable something — a good thing. It requires some effort in implementation, but allows you to do everything very flexibly.

Finally, the thing I highly recommend. This is an in-memory database - when your data is not just randomly scattered in the frontend application, but there is some kind of single storage that ensures you have only one copy of all the data and that allows you to write some simple queries. Also very convenient. It also works well as a caching strategy.

A few words about how to invalidate the client cache.

First, the usual TTL. Each record has a lifetime, time is over - the record is rotting.

You can raise web sockets and listen to server events. But we must remember that this web socket may fall off, and we will not know about it immediately.

And finally - you can listen to some interface things to listen to. For example, if we paid for our mobile phone from a card in a web bank, probably, a cache in which the balance of this card is stored can be invalidated.

Such things also allow you to disable the client cache.

One thing to remember: all this is unreliable! True data live only on the server!

Do not try to play master replication when developing front-end applications.

This produces a lot of problems. Remember that the data on the server - and your life will become easier.

A few simple things that did not fall into other points.

What hinders faster front-end in terms of API?

Dependence of requests, when we cannot send the next request without results from the previous one. That is, in the place where we could send three requests in parallel - we are forced to get the result from the first one, process it, from them we collect the url of the second request, send, process it, collect the url of the third request, send, process it.

We got triple latency - where there could be a single. Out of the blue. Do not do this.

Data in any format other than JSON. Immediately I say that this applies only to the web - on mobile applications, everything can be more complicated. There is no reason to use non-JSON data in the REST API on the web.

Finally, some things when we break the HTTP caching semantics. For example, we have the same resource has several different addresses in different sessions, so caching does not work for us. If this is not all, then we do not have a number of simple problems and can spend more time to make our application better.

Let's summarize

How to make an API good?

- A good API is designed by all teams using and developing it. Given their common interests.

- We first measure the speed of the application, and then is engaged in optimization.

- We allow to do through the API not only expensive - abstract requests, but also cheap concrete ones.

- We design the data structure of the API based on the structure of the UI so that there is enough data, but not much unnecessary.

- We correctly use caches and we do not make some simple mistakes, which I told about.

That's all. Ask questions?

Source: https://habr.com/ru/post/323010/

All Articles