Updating the application code on a running server

Many organizations have to keep highly loaded systems running every day, and a huge number of operations depend on those systems working properly. Under such conditions it is hard to imagine suspending a system to update server components or application code. A stalled picture download or video on Facebook may be tolerable, but in the financial, military, or aerospace industries even a minimal delay in processing operations can have disastrous consequences.

To get a sense of the stakes, imagine a bank failing to execute a client's multi-million-dollar payment, or one of Heathrow Airport's dispatch systems deciding to install an update while an aircraft is taking off. Such scenarios are hardly acceptable today.

Yet application servers inevitably have to be updated; there is no getting around it. Given the constant load they carry, keeping applications running without interruption during updates is more relevant than ever.

What does it take to keep applications running smoothly during an update?

There are several ways to solve this problem:


- Deploying redundant application servers or environments (clusters).

- Using built-in capabilities of the application server or of the applications themselves.

It should be noted that hardware has recently become cheaper. This has fueled a trend toward clusters, redundant application instances, and the other resources that complex distributed systems need (for example, databases, additional disks in various RAID configurations, several network interfaces to the same resources for reliability, and so on).

In this article, however, I would like to focus on the second option: updating the application code on a single server that keeps running under load.

Who might be interested in such a solution?

Although redundant application servers or environments provide higher reliability, they require a correspondingly large amount of resources. Such clusters are also much harder to operate. All in all, this is not always justified, especially for small solutions, where resource consumption is a far more pressing concern.

This is why applications that run without clustering occupy a niche of their own. They avoid some of the difficulties of running redundant servers or environments and clearly win on resource consumption and ease of operation.

Features of the development of complex applications

One principle of developing complex applications is to build them from loosely coupled modules. In the Java world, such a technology was originally planned for JDK 7 and was then postponed to ship with Java 8. Judging by current announcements, it will now appear no earlier than Java 9 in March 2017, in the form of the Jigsaw module system.

Thanks to modularity, such applications can be updated while they run, even under load. Those who do not want to wait for a new Java release can use the modular approach right now. The technology is called OSGi, and its first specification appeared in the early 2000s. Updating application code under load without clustering is applicable to both PCs and mobile devices. Moreover, it requires fewer resources (CPU, memory, disk) and less time for configuration and testing.

What about the cloud?

Given the growing popularity of cloud services, this topic cannot be ignored.

One way to solve the update problem is to create a virtual IP address, balance it across several application nodes, and update the nodes one after another. In that case, applications of different versions will coexist in the system. This is achievable with careful application design, but it imposes extra requirements. Applications must be fully decoupled from data, must not keep state, and must be designed so that different versions running side by side is acceptable. In effect, this means running several instances of the application at once, which adds management overhead. Note, however, that cloud technologies and OSGi are not mutually exclusive. OSGi approaches can be used in the cloud, which, among other things, abstracts away the hardware.
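To make the rolling-update idea concrete, here is a minimal Java sketch. It assumes a simple round-robin balancer standing in for the virtual IP. The names `AppNode` and `RollingBalancer` and the drain-upgrade-restore sequence are illustrative assumptions, not any specific product's API. The sketch shows the consequence described above: during the rollout, nodes running different versions serve traffic at the same time.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical application node sitting behind a virtual IP.
final class AppNode {
    final String name;
    String version;
    boolean inRotation = true; // false while the node is drained for an upgrade

    AppNode(String name, String version) {
        this.name = name;
        this.version = version;
    }
}

// Hypothetical round-robin balancer for the virtual IP; skips drained nodes.
final class RollingBalancer {
    private final List<AppNode> nodes;
    private int next = 0;

    RollingBalancer(List<AppNode> nodes) {
        this.nodes = nodes;
    }

    // Returns the next node that is still in rotation.
    AppNode route() {
        for (int i = 0; i < nodes.size(); i++) {
            AppNode candidate = nodes.get(next);
            next = (next + 1) % nodes.size();
            if (candidate.inRotation) {
                return candidate;
            }
        }
        throw new IllegalStateException("no node in rotation");
    }

    // Upgrades the nodes one at a time. While one node is drained, the others keep
    // serving, so old and new versions answer requests side by side.
    // Returns a "name@version" log of every request served during the rollout.
    List<String> rollOut(String newVersion, int requestsPerStep) {
        List<String> log = new ArrayList<>();
        for (AppNode node : nodes) {
            node.inRotation = false;            // drain: no new requests reach this node
            for (int i = 0; i < requestsPerStep; i++) {
                AppNode served = route();
                log.add(served.name + "@" + served.version);
            }
            node.version = newVersion;          // stand-in for redeploying the node
            node.inRotation = true;             // back behind the virtual IP
        }
        return log;
    }
}
```

With three nodes `A`, `B`, `C` at version `1.0` and two requests per step, `rollOut("2.0", 2)` yields `[B@1.0, C@1.0, A@2.0, C@1.0, A@2.0, B@2.0]`. In the middle step, `A@2.0` and `C@1.0` serve traffic together. This is why applications updated this way must tolerate mixed versions and must not keep local state.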

Thus, with cloud servers you can either split application modules across separate cloud nodes following the OSGi principle, or run a complete application with all modules on every node for redundancy. Which approach to choose depends primarily on the organization's resources.

What are the main differences between OSGi and Jigsaw?

To judge whether OSGi is worth adopting in view of the upcoming Java release, you need to understand how the two solutions differ and whether they will be compatible. Let's look at the table below:

| OSGi | Jigsaw (JPMS) |
|---|---|
| Has existed since 2000; a fully working mechanism with proven effectiveness. | Still in development; will not be available before Java 9. |
| A separate specification. | Will be built fully into the Java 9 platform. |
| Fairly difficult to use. | JPMS tends to be easier to use than OSGi. However, the main source of complexity is building a modular product out of non-modular code, so Jigsaw is unlikely to be much easier than OSGi. |
| Internal classes of other modules cannot be loaded, because they are not visible from outside. The class loader of my module sees only my module's internal types and the types explicitly imported from other modules. | Every type can see every other type, because they share the same class loader. However, JPMS adds a secondary check that the loading class has the right to access the type it is trying to load, so internal types of other modules are effectively private even if they are declared public. |
| Multiple versions of the same module can run at the same time. | Several versions of the same module cannot coexist. |
| Provides module lifecycle management. | No module lifecycle management. |
| Dynamic modularity: modules can be deployed and replaced at runtime. | Static modularity, fixed at deployment time. |
| Modules whose contents overlap are allowed. | Modules whose contents overlap are not allowed. |

In basic compatibility mode, the OSGi framework and its bundles will live entirely within the Jigsaw “unnamed” module. OSGi will continue to offer all of its isolation features, along with its powerful service registry and dynamic loading. Investments in OSGi are therefore safe, and the specification remains a good option for new projects.
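The “unnamed” module is easy to observe from plain Java (9 or later). Any class loaded from the class path rather than the module path belongs to its class loader's unnamed module. The sketch below uses only the standard `Class.getModule()`, `Module.isNamed()` and `Module.getName()` methods. It shows where class-path code ends up, which includes an OSGi framework launched from the class path. It is not OSGi-specific, and the class name `UnnamedModuleCheck` is just for illustration.

```java
// Requires Java 9+. When this file is compiled and run from the class path
// (no module-info.java), its classes belong to the unnamed module of their
// class loader.
final class UnnamedModuleCheck {

    // Reports whether the given class lives in a named (Jigsaw) module.
    static String describe(Class<?> type) {
        Module module = type.getModule();
        return module.isNamed()
                ? type.getSimpleName() + " is in named module " + module.getName()
                : type.getSimpleName() + " is in the unnamed module";
    }

    public static void main(String[] args) {
        // Class-path code: reported as being in the unnamed module.
        System.out.println(describe(UnnamedModuleCheck.class));
        // JDK classes come from named platform modules such as java.base.
        System.out.println(describe(String.class));
    }
}
```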

What is special about working with the OSGi specification?

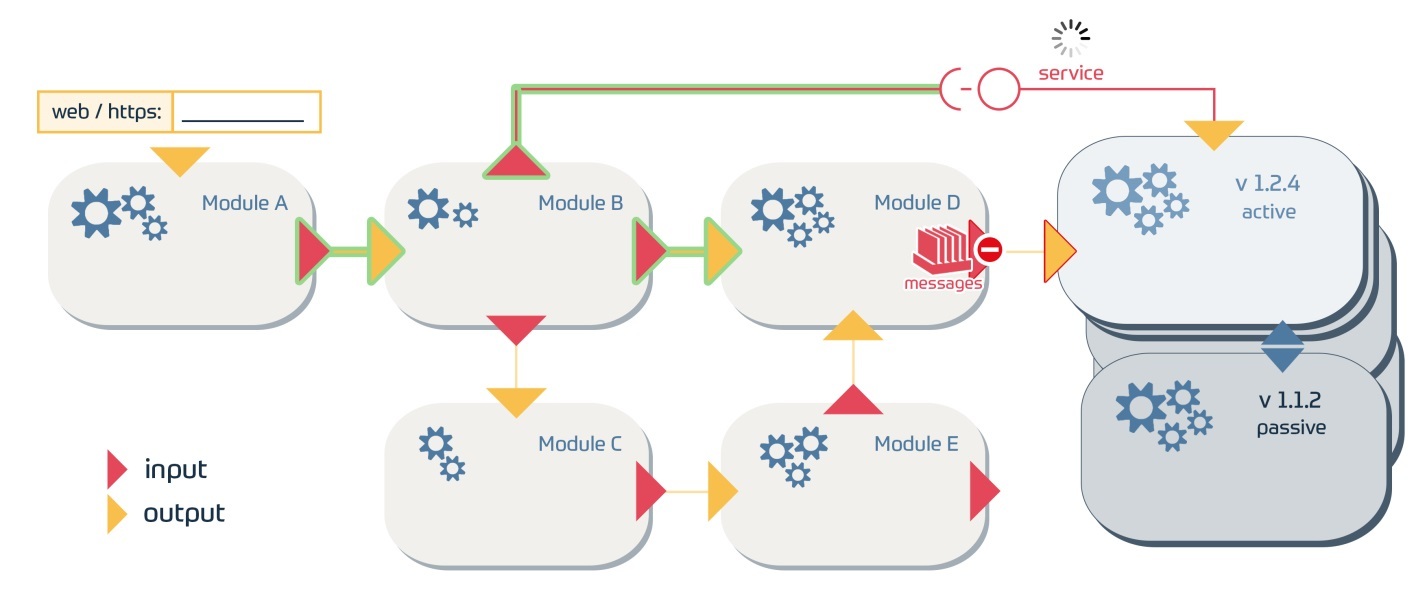

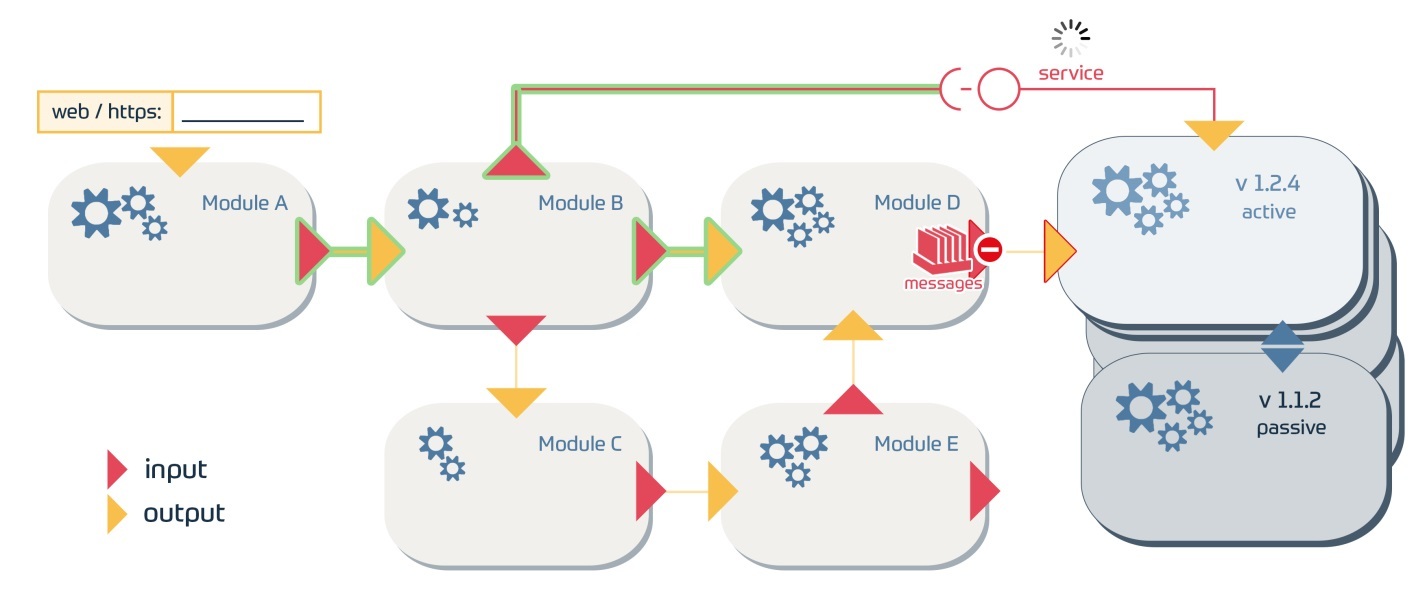

The OSGi specification is based on modular applications whose components work in a loosely coupled environment. The interaction routes between components are set up in a configuration file. This model lets us treat an application as a network (graph) in which the nodes are components and the edges are the connections between them. A connection can be either one-way or two-way.

When updating the code of such an application, you first need to determine:

- Which nodes need to be updated.

- Which connections between components will be affected.
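Once the application is modeled as a directed graph, the two questions above can be answered mechanically. The following minimal Java sketch assumes that components are identified by string names and that each connection is a directed edge from caller or sender to callee or receiver, with a two-way connection modeled as two edges. `ComponentGraph` and its methods are hypothetical names for illustration. Given the set of components to update, the sketch returns the edges that touch them, which are the routes that would have to be paused.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical model of an application as a graph: nodes are components,
// edges are the connections between them.
final class ComponentGraph {
    // Adjacency list: component -> components it calls or sends messages to.
    private final Map<String, Set<String>> edges = new LinkedHashMap<>();

    void connect(String from, String to) {
        edges.computeIfAbsent(from, k -> new LinkedHashSet<>()).add(to);
        edges.computeIfAbsent(to, k -> new LinkedHashSet<>());
    }

    // A two-way connection is modeled as two directed edges.
    void connectBoth(String a, String b) {
        connect(a, b);
        connect(b, a);
    }

    // Returns every edge "from->to" with an updated component at either end.
    List<String> affectedRoutes(Set<String> toUpdate) {
        List<String> affected = new ArrayList<>();
        for (Map.Entry<String, Set<String>> entry : edges.entrySet()) {
            for (String target : entry.getValue()) {
                if (toUpdate.contains(entry.getKey()) || toUpdate.contains(target)) {
                    affected.add(entry.getKey() + "->" + target);
                }
            }
        }
        return affected;
    }
}
```

For example, with edges `gateway->billing`, `gateway->audit` and a two-way link `billing<->rating`, updating `rating` affects only `billing->rating` and `rating->billing`. The `gateway->audit` route stays live. The sketch only checks direct edges. A call that reaches `rating` through `billing` would still wait, which is why it helps to keep routes independent.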

There are two main ways for components to interact in a distributed, isolated environment: synchronously (through services) and asynchronously (through messages).

They work as follows. A loosely coupled environment contains any number of modules that can communicate with each other through services, through messages, or both. While a particular module is being updated, messages addressed to it accumulate in a queue. Service calls wait partway until the target module has been updated.
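The message side of this behavior can be sketched with a plain queue. This Java sketch assumes a single incoming port per component and uses a hypothetical `PausableChannel` class; it is not RTF's API. While the channel is paused, sent messages are appended to a queue instead of being delivered. `resume()` installs the updated handler and flushes the backlog to it in arrival order.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.function.Consumer;

// Hypothetical asynchronous channel in front of one component's incoming port.
final class PausableChannel<M> {
    private final Queue<M> backlog = new ArrayDeque<>();
    private Consumer<M> handler;
    private boolean paused = false;

    PausableChannel(Consumer<M> handler) {
        this.handler = handler;
    }

    // Senders never wait: while paused, messages simply accumulate.
    synchronized void send(M message) {
        if (paused) {
            backlog.add(message);
        } else {
            handler.accept(message);
        }
    }

    synchronized void pause() {
        paused = true;
    }

    // Installs the updated component, then delivers the backlog in arrival order.
    // Because the method is synchronized, new sends wait until the flush ends,
    // which preserves message order.
    synchronized void resume(Consumer<M> updatedHandler) {
        handler = updatedHandler;
        while (!backlog.isEmpty()) {
            handler.accept(backlog.poll());
        }
        paused = false;
    }

    synchronized int pending() {
        return backlog.size();
    }
}
```

For brevity the sketch delivers on the sender's thread while holding the lock. A real transport would more likely hand messages to worker threads.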

Services are analyzed through an open-source implementation of the OSGi framework. Messages are analyzed by application code (in our case, the Real Time Framework (RTF) application, discussed below). This analysis reveals the points along the components' interaction paths where messages must be temporarily queued or called services temporarily suspended, so that work can continue immediately after the application code has been updated. As a result, while the code is being updated to the next version, messages accumulate in the queue and services are put on standby. In other words, the affected routes are unavailable during the update. The application server does not need to be restarted, which significantly reduces application downtime. Nor do caches need to be reloaded, since the application continues working from exactly the point where it stopped for the update.
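On the synchronous side, one way to make callers wait while a module is replaced is a read-write lock. Ordinary calls share the read lock. The update takes the write lock, which waits for in-flight calls to finish and then holds new calls until the new implementation is in place. Below is a minimal Java sketch with a hypothetical `ServiceGate` class built on the standard `java.util.concurrent.locks.ReentrantReadWriteLock`. It illustrates the pause, update, resume sequence and does not describe how RTF itself implements it.

```java
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical gate in front of one synchronous service.
final class ServiceGate<S> {
    // Fair mode: once an update is waiting for the write lock, newly arriving
    // calls queue behind it instead of starving it.
    private final ReadWriteLock lock = new ReentrantReadWriteLock(true);
    private S implementation; // read and replaced under the lock

    ServiceGate(S initial) {
        this.implementation = initial;
    }

    // Ordinary calls share the read lock and run concurrently.
    <R> R call(Function<S, R> invocation) {
        lock.readLock().lock();
        try {
            return invocation.apply(implementation);
        } finally {
            lock.readLock().unlock();
        }
    }

    // Takes the write lock: waits for in-flight calls to finish, then holds new
    // calls while `loader` produces the updated implementation.
    // If `loader` throws, the previous implementation stays in place.
    void update(Supplier<S> loader) {
        lock.writeLock().lock();
        try {
            implementation = loader.get();
        } finally {
            lock.writeLock().unlock();
        }
    }
}
```

A caller that arrives mid-update blocks on the read lock and then runs against the new version. No exception reaches it, which matches the "services wait" behavior described above.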

For this to work well, it makes sense to design applications so that the interaction routes between components are as independent of each other as possible. That way, when some components are updated, the other routes and the services they provide keep working.

It is also necessary to separate the API from the implementation, so that the implementation can be updated without affecting the API. Why does this matter? For comparison, an application server usually takes minutes to start (20–30 seconds at best). Updating a component in a running server takes only 2–5 seconds, and none of the service data has to be reloaded into memory (cache).
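Separating the API from its implementation is what makes such a swap cheap. Callers compile only against an interface, so the class behind it can be replaced without touching them. The minimal Java sketch below uses hypothetical names throughout: `TariffService`, `FlatTariff`, `DiscountTariff`, `ServiceRegistry`, and `BillingClient`. The small registry merely stands in for the service registry an OSGi framework provides, and the comments about exported and private packages describe the usual OSGi convention, not code from the article.

```java
import java.util.concurrent.atomic.AtomicReference;

// API: the only type callers depend on (in OSGi terms, an exported package).
interface TariffService {
    long priceInCents(long minutes);
}

// Implementation v1 (in OSGi terms, a private, non-exported package).
final class FlatTariff implements TariffService {
    public long priceInCents(long minutes) {
        return minutes * 10;
    }
}

// Implementation v2 with a volume discount: same API, new behavior.
final class DiscountTariff implements TariffService {
    public long priceInCents(long minutes) {
        long base = minutes * 10;
        return minutes > 100 ? base * 9 / 10 : base;
    }
}

// Hypothetical stand-in for a service registry: callers look the service up
// through the interface on each use instead of holding a concrete class.
final class ServiceRegistry {
    private final AtomicReference<TariffService> current;

    ServiceRegistry(TariffService initial) {
        current = new AtomicReference<>(initial);
    }

    TariffService get() {
        return current.get();
    }

    void replace(TariffService updated) {
        current.set(updated);
    }
}

// Caller code: knows nothing about FlatTariff or DiscountTariff.
final class BillingClient {
    private final ServiceRegistry registry;

    BillingClient(ServiceRegistry registry) {
        this.registry = registry;
    }

    long bill(long minutes) {
        return registry.get().priceInCents(minutes);
    }
}
```

After `registry.replace(new DiscountTariff())`, the next call to `bill(200)` returns 1800 instead of 2000. `BillingClient` is neither recompiled nor restarted.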

Real Time Framework (RTF) application for updating code under load

RTF is a commercial product built on the OSGi specification, and it is itself an application made up of modules. The OSGi specification by itself only makes it possible to run multiple versions of many interdependent modules side by side. Building synchronous and asynchronous links between modules and carrying out component updates is the job of the RTF application itself.

What sets RTF apart is that it extends OSGi so that application code can be updated not only while the application is running, but while it is under load. In other words, it lets you "pause" the application, apply the update, and then continue processing requests normally on the updated version.

RTF also provides a lightweight transport, implemented in Java, for sending and receiving messages between components without storing them in any database. This transport lets the loosely coupled components of a distributed system interact. The mechanism of component interaction is illustrated by the scheme below:

Note that the components interact indirectly: no component has any specific knowledge of a message's recipient. A message can carry a set of key-value pairs, which is abstract enough for both sender and recipient.

Messages are sent through an outgoing port and received through an incoming port. Message routes are defined in a dedicated XML configuration file, where you can also set message filters. For example, a filter can check whether certain parameters are present or absent, or what values they have.
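The port-and-route model can be sketched in a few lines of Java. Everything here is an assumption for illustration. RTF's actual classes and its XML route format are not shown in this article, so `Route` and `Router` are hypothetical, and routes are built in code rather than loaded from XML. A message is a map of key-value pairs. A route connects a named outgoing port to an incoming port and carries a filter on parameter presence, absence, or value.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Predicate;

// Hypothetical route from a named outgoing port to an incoming port, with a filter.
final class Route {
    final String outPort;
    final Consumer<Map<String, String>> inPort;
    final Predicate<Map<String, String>> filter;

    Route(String outPort, Consumer<Map<String, String>> inPort,
          Predicate<Map<String, String>> filter) {
        this.outPort = outPort;
        this.inPort = inPort;
        this.filter = filter;
    }
}

// Hypothetical router; in RTF the routes would come from the XML configuration.
final class Router {
    private final List<Route> routes = new ArrayList<>();

    void addRoute(Route route) {
        routes.add(route);
    }

    // Filter on the presence of a parameter.
    static Predicate<Map<String, String>> has(String key) {
        return m -> m.containsKey(key);
    }

    // Filter on the absence of a parameter.
    static Predicate<Map<String, String>> lacks(String key) {
        return m -> !m.containsKey(key);
    }

    // Filter on the value of a parameter.
    static Predicate<Map<String, String>> valueIs(String key, String value) {
        return m -> value.equals(m.get(key));
    }

    // The sender names only its outgoing port and never learns who receives the
    // message. Returns how many incoming ports the message was delivered to.
    int send(String outPort, Map<String, String> message) {
        int delivered = 0;
        for (Route route : routes) {
            if (route.outPort.equals(outPort) && route.filter.test(message)) {
                route.inPort.accept(message);
                delivered++;
            }
        }
        return delivered;
    }
}
```

Because the sender addresses only a port name such as `"billing.out"`, a receiver can be added or swapped for an updated one by changing routes alone, without touching the sender's code.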

RTF usage examples

RTF technology is not new and has proven its effectiveness more than once. One example is building billing services from scratch for Axia on top of RTF. The system was deployed standalone, that is, without clustering. For the company, keeping the system stable even while components were being updated was critical, since payment processing tolerates neither the slightest error nor delays.

A great deal of development is currently under way for MANO (Management and Orchestration). Here, RTF technology is used in the system monitor (which tracks hardware status) and in the monitor for virtualized network functions (VNF). Notably, all of this work runs on OpenStack, which once again confirms that RTF is fully compatible with cloud services. One example is the network virtualization work by NEC/Netcracker for the Japanese telecom operator NTT DOCOMO. The project lets the operator flexibly scale the capacity of its underlying network and significantly reduces recovery time after equipment failures.

Conclusions

This article has shown one possible way to update application code on a server running under load, using the example of RTF, a commercial application server that complies with the OSGi specification. RTF lets you pause the interaction of the modules that make up the application, queue all messages and hold services (synchronous calls) on standby, update the required modules, and then resume the application from the point where it was paused. The data the application was working with is not lost. This update method is suited primarily to those who, for whatever reason, do not use clustering, do not want to duplicate applications, and want to save resources on configuration and testing. A good example is the billing system developed for Axia.

At the same time, RTF technology is also used effectively by companies that run clusters and cloud servers. With RTF, applications stay available continuously. During an update, requests go unprocessed for only a few seconds, and processing resumes right after the update. This lets organizations update application code flexibly without risking data loss. It also saves a great deal of startup time after an update, since updating a module in a running server takes only 2–5 seconds, after which the service data does not need to be reloaded into the cache.

Source: https://habr.com/ru/post/322814/
