Continuous Delivery practices with Docker (overview and video)

We are starting our blog with a series of publications based on recent talks by our technical director, distol (Dmitry Stolyarov). All of them were given in 2016 at various professional events and were devoted to DevOps and Docker. One video, from the Docker Moscow meetup at the Badoo office, has already been published on our site. The new ones will be accompanied by articles that convey the essence of the talks. So…

On May 31, at the RootConf 2016 conference, held as part of the Russian Internet Technologies festival (RIT++ 2016), the “Continuous Deployment and Deploy” section opened with the talk “Best Practices of Continuous Delivery with Docker”. It summarized and systematized best practices for building a Continuous Delivery (CD) process with Docker and other open source products. We run these solutions in production, so the talk draws on practical experience.

If you can spare an hour for the video of the talk, we recommend watching it in full. Otherwise, the main points follow in text form.

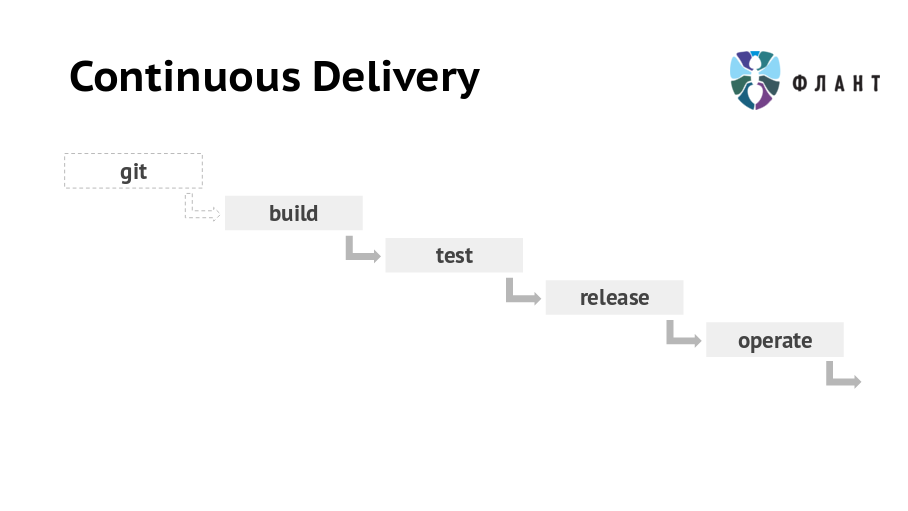

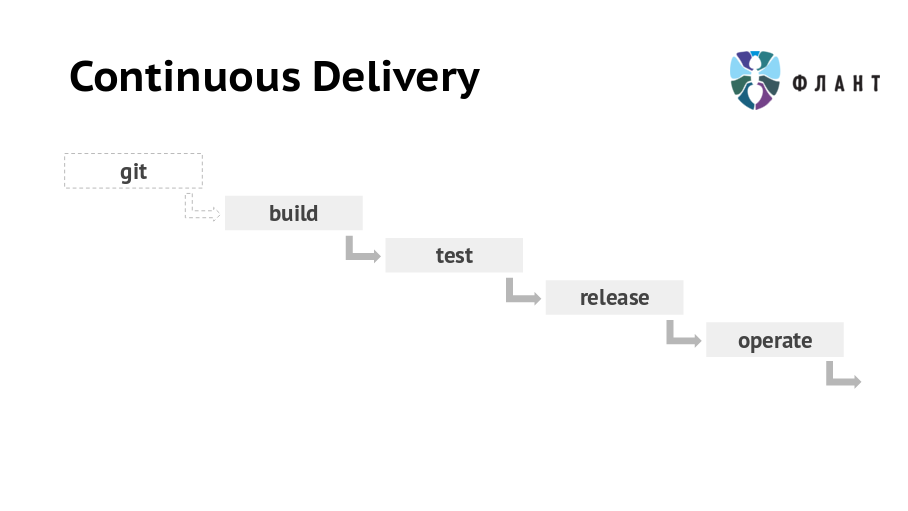

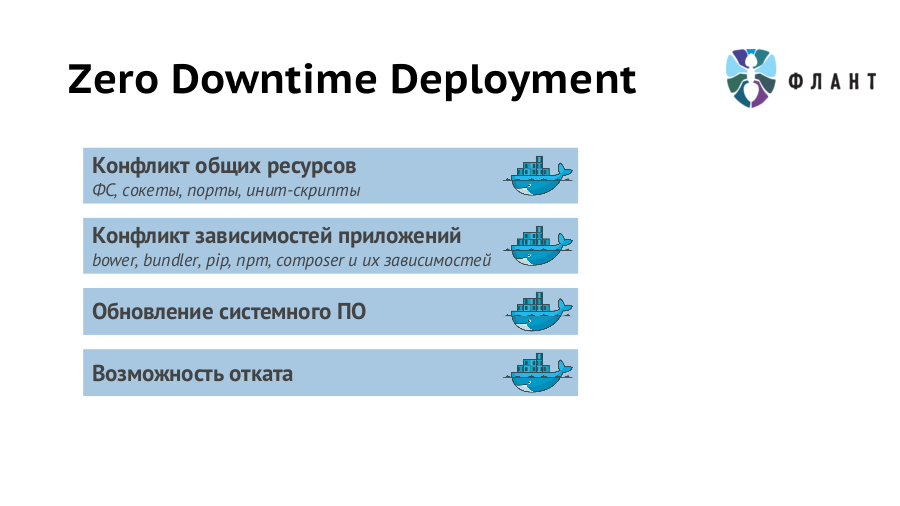

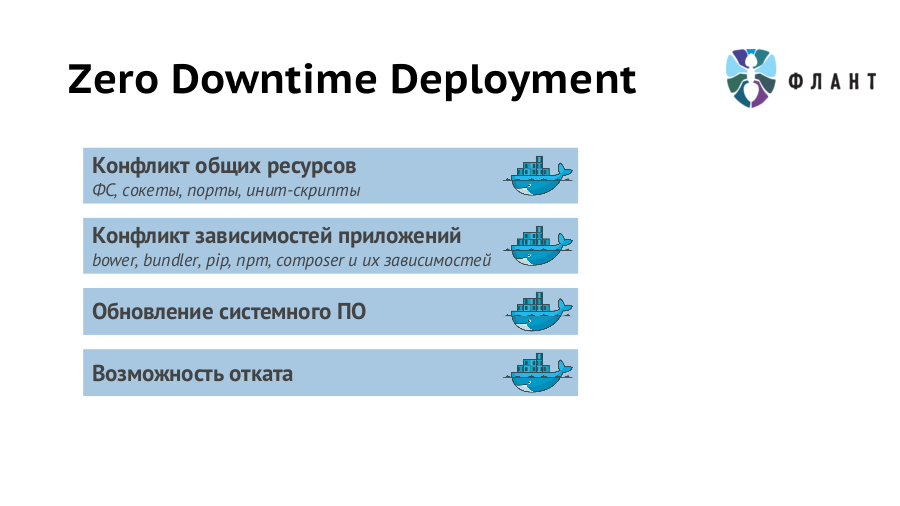

Continuous Delivery with Docker

By Continuous Delivery we mean the chain of events through which application code from the Git repository first reaches production and then ends up in the archive. It looks like this: Git → Build → Test → Release → Operate (subsequent maintenance).

Most of the talk is devoted to the build stage, while the release and operate stages are covered only in overview. We will talk about problems and the patterns that solve them; the concrete implementations of these patterns can vary.

Why is Docker needed here at all? It is no accident that we chose to discuss Continuous Delivery practices in the context of this open source tool. Although the whole talk is devoted to its use, many of the reasons become clear as soon as we look at the main pattern for rolling out application code.

The main rollout pattern

When rolling out a new version of an application, we inevitably face the problem of downtime while the production server is switched over. Traffic cannot be switched from the old version to the new one instantly: first we must make sure that the new version has not only been rolled out successfully but is also “warmed up” (that is, fully ready to serve requests).

Thus, for some time both versions of the application (old and new) run simultaneously, which automatically leads to conflicts over shared resources: network, file system, IPC, and so on. With Docker this problem is easily solved by running the different versions in separate containers, whose resources are isolated from each other within a single host (server or virtual machine). Of course, you can get by with some tricks and no isolation at all, but when a ready-made, convenient tool exists, there is every reason not to neglect it.

Containerization brings many other advantages when deploying. Any application depends on a specific version (or range of versions) of the interpreter, on the availability of modules and extensions, and on their versions. This applies not only to the immediate runtime but to the whole environment, including system software and its versions (right down to the Linux distribution used). Because containers hold not only the application code but also preinstalled system and application software of the required versions, dependency problems largely go away.

Let us summarize the main pattern for rolling out new versions, taking these factors into account:

- First, the old version of the application runs in the first container.

- Then the new version is rolled out and “warms up” in a second container. Notably, the new version can carry not only updated application code but also updated dependencies and system components (for example, a new version of OpenSSL or an entirely new distribution).

- When the new version is fully ready to serve requests, traffic is switched from the first container to the second.

- Now the old version can be stopped.

Deploying different versions of the application in separate containers brings one more convenience: a quick rollback to the old version (it is enough to switch traffic back to the desired container).
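The four steps and the rollback can be sketched as a small simulation. This is only an illustration of the ordering, not code from the talk: `Container`, `Router`, and `roll_out` are hypothetical names, no real Docker or proxy API is called, and "warm-up" is modeled as a counter of readiness polls.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Container:
    """Hypothetical stand-in for a container running one app version."""
    version: str
    running: bool = False
    warmup_checks_needed: int = 0  # polls left until the app reports ready

    def start(self) -> None:
        self.running = True

    def stop(self) -> None:
        self.running = False

    def is_ready(self) -> bool:
        # Each poll brings the simulated app one step closer to "warmed up".
        if not self.running:
            return False
        if self.warmup_checks_needed > 0:
            self.warmup_checks_needed -= 1
            return False
        return True


@dataclass
class Router:
    """Hypothetical traffic switch, e.g. a reverse proxy in front of the app."""
    active: Optional[Container] = None

    def switch_to(self, container: Container) -> None:
        if not container.is_ready():
            raise RuntimeError("refusing to route traffic to an unready container")
        self.active = container


def roll_out(router: Router, new: Container, max_polls: int = 10) -> Optional[Container]:
    """Start `new` next to the current version, switch traffic, return the old one."""
    old = router.active              # step 1: the old version keeps serving
    new.start()                      # step 2: the new version starts separately
    for _ in range(max_polls):       # ...and warms up while the old one serves
        if new.is_ready():
            break
    else:
        new.stop()                   # never became ready: traffic is untouched
        raise TimeoutError(f"{new.version} did not warm up in time")
    router.switch_to(new)            # step 3: switch only after readiness
    return old                       # step 4 is left to the caller: stop it or keep it
```

Because `roll_out` returns the old container still running, a rollback in this sketch is just `router.switch_to(previous)`; the caller stops the old version only once the new one has proven itself.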

The resulting first recommendation is one that even Captain Obvious could not argue with: “[When organizing Continuous Delivery with Docker,] use Docker [and understand what it gives you]”. Remember that it is not a silver bullet that solves every problem, but a tool that provides a solid foundation.

Reproducibility

By “reproducibility” we mean a general set of problems encountered when operating applications. These include the following cases:

- Scenarios verified by the quality department on staging must be reproduced exactly in production.

- Applications are published on servers that may receive packages from different repository mirrors (which are updated over time, and with them the versions of installed applications).

- “Everything works locally for me!” (... and developers are not allowed into production.)

- Something needs to be checked in an old (archived) version.

- ...

What they have in common is the need for the environments to match exactly (and for the human factor to be eliminated). How can reproducibility be guaranteed? Build Docker images from the code in Git and then use them for every task: on test environments, in production, on developers' local machines... It is important to minimize the actions performed after the image is built: the simpler the process, the lower the chance of errors.

Infrastructure is code

If infrastructure requirements (which server software is present, its versions, and so on) are not formalized and “programmed”, rolling out any application update can end in disaster. For example, suppose staging has already moved to PHP 7.0 and the code has been rewritten accordingly: its arrival on a production server with an older PHP (5.5) is bound to surprise someone. Even if you do not forget about a major interpreter upgrade, the devil is in the details: the surprise may lurk in a minor update of any dependency.

The approach that solves this problem is known as IaC (Infrastructure as Code) and involves storing infrastructure requirements together with the application code. With it, developers and DevOps engineers can work in the same application Git repository, but on different parts of it. A Docker image is built from this code in Git, and the application is deployed in it with all infrastructure specifics taken into account. Simply put, the scripts (rules) for building images should live in the same repository as the source code and be merged together with it.

In a multi-layer application architecture (for example, nginx in front of an application that already runs inside a Docker container), Docker images should be built from the code in Git for each layer. The first image will then contain the application with its interpreter and other “closest” dependencies, and the second will contain the nginx that sits in front of it.

Docker images and their link to Git

All Docker images built from Git fall into two categories: temporary and release. Temporary images are tagged with the name of the Git branch, can be overwritten by the next commit, and are rolled out for preview only (not to production). This is their key difference from release images: you never know which particular commit they contain.

It makes sense to build temporary images for the master branch (which can be rolled out automatically to a separate environment so that the current state of master is always visible), for release branches, and for branches with specific features.

When the preview of temporary images reaches the point where a transfer to production is needed, the developers put a tag in Git. A release image is built automatically from that tag (the image tag matches the Git tag) and rolled out to staging. If the quality department tests it successfully, it goes to production.
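The split between temporary and release images can be expressed as a small mapping from a Git ref to an image tag. This is a minimal sketch under stated assumptions, not the scheme any particular tool implements: `ImageTag`, `image_tag_for_ref`, and `may_deploy_to_production` are hypothetical names, and replacing disallowed characters with `-` is one possible convention for branch names such as `feature/login`.

```python
import re
from typing import NamedTuple


class ImageTag(NamedTuple):
    tag: str   # the Docker image tag
    kind: str  # "temporary" (built from a branch) or "release" (built from a Git tag)


def image_tag_for_ref(git_ref: str) -> ImageTag:
    """Map a full Git ref to a Docker image tag and its category."""
    if git_ref.startswith("refs/tags/"):
        name = git_ref[len("refs/tags/"):]
        kind = "release"      # immutable: the commit behind it is known
    elif git_ref.startswith("refs/heads/"):
        name = git_ref[len("refs/heads/"):]
        kind = "temporary"    # overwritten by every new commit on the branch
    else:
        raise ValueError(f"unsupported ref: {git_ref}")
    # Docker tags may contain only letters, digits, "_", "." and "-",
    # so anything else (for example "/") is replaced here by "-".
    tag = re.sub(r"[^A-Za-z0-9_.-]", "-", name)
    # A tag may not start with "." or "-" and is limited to 128 characters.
    tag = tag.lstrip(".-")[:128]
    if not tag:
        raise ValueError(f"ref yields an empty image tag: {git_ref}")
    return ImageTag(tag, kind)


def may_deploy_to_production(image: ImageTag) -> bool:
    """Only release images are allowed beyond preview environments."""
    return image.kind == "release"
```

Keeping the category next to the tag lets a rollout script refuse temporary images for production without guessing from the tag name.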

dapp

Everything described (rollout, image builds, subsequent maintenance) can be implemented on your own with Bash scripts and other improvised means. But sooner or later such an implementation becomes very complex and hard to manage. Realizing this, we created our own specialized workflow utility for building CI/CD: dapp.

Its source code is written in Ruby, is open, and is published on GitHub. Unfortunately, documentation is currently the tool's weakest point, but we are working on it. We will write and talk about dapp more than once, because we sincerely cannot wait to share its capabilities with the whole interested community. For now, send your issues and pull requests and/or follow the project's development on GitHub.

Kubernetes

Another ready-made open source tool that has already gained significant recognition among professionals is Kubernetes, a cluster manager for Docker containers. Its use in operating Docker-based projects is beyond the scope of the talk, so the presentation is limited to an overview of some interesting features.

For rollouts, Kubernetes offers:

- readiness probe - a check that the new version of the application is ready (so that traffic can be switched to it);

- rolling update - a sequential update of the image across a cluster of containers (shut down, update, prepare for launch, switch traffic);

- synchronous update - an update of the image in a cluster with a different approach: first on half of the containers, then on the rest;

- canary releases - launching the new image on a limited (small) number of containers to watch for anomalies.
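The difference between the last three options comes down to the order in which containers receive the new image. The sketch below only plans that order for a list of container names; it does not call Kubernetes and does not reproduce its implementation. The function names and the `pod-N` labels are hypothetical.

```python
from typing import List


def rolling_batches(replicas: List[str], batch_size: int = 1) -> List[List[str]]:
    """Rolling update: replace containers a few at a time, in order."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [replicas[i:i + batch_size] for i in range(0, len(replicas), batch_size)]


def half_then_rest(replicas: List[str]) -> List[List[str]]:
    """'Synchronous' update as described above: one half first, then the rest."""
    middle = (len(replicas) + 1) // 2  # the first half gets the extra container
    return [batch for batch in (replicas[:middle], replicas[middle:]) if batch]


def canary_then_rest(replicas: List[str], canary_count: int = 1) -> List[List[str]]:
    """Canary release: a small group first, the rest only after observation."""
    if canary_count < 1:
        raise ValueError("canary_count must be at least 1")
    canary, rest = replicas[:canary_count], replicas[canary_count:]
    return [batch for batch in (canary, rest) if batch]
```

In each plan a batch would receive traffic only after its containers pass a readiness check, and with a canary the second batch would additionally wait until monitoring shows no anomalies.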

Since Continuous Delivery is about more than releasing a new version, Kubernetes also offers a number of features for subsequent infrastructure maintenance: built-in monitoring and logging for all containers, automatic scaling, and so on. All of this already works and is only waiting to be properly integrated into your processes.

Final recommendations

- Use Docker.

- Build Docker images of the application for every need.

- Follow the “Infrastructure is code” principle.

- Link Git with Docker.

- Regulate the rollout procedure.

- Use a ready-made platform (Kubernetes or another).

Video and slides

The video of the talk (about an hour) is published on YouTube (the talk itself starts at the 5th minute; the link starts playback from that point).

Slides from the talk:

Source: https://habr.com/ru/post/322686/
