Architecture of the growing project on the example of VKontakte

Alexey Akulovich explains the life path of a high-loaded PHP project. This is a highload decoding ++ 2016 .

My name is Lyosha, I am writing in PHP.

')

Fortunately, the report is not about that. The report will be about the retrospective of the development of the network - how the project developed. What solutions are captains or very specific to our workload we used, which can be used in other projects that are under pressure.

Let's start.

What we're going to talk

It’s impossible to tell about everything in one report, so I chose topics that seemed to me the most interesting. This is a question of developing access to databases and storing them, a question of optimizing PHP, and what we came to as a result, at the end there will be a few examples of how we deal with the existing architecture arising in production.

As a small offtopic: just a month ago, VKontakte turned 10 years old, a rather round figure, not true for IT professionals, Highload is also 10 years old. The fact that the report was accepted into the program on such an anniversary is rather nice.

How it all began

This is not the most original scheme, but the network came to it pretty quickly. With the increase in load and popularity, such a typical Lime Stack turned out, when we have fronts on Nginx, they process requests, send them to Apache, which is PHP boot smod, and they go to MySQL or Memcached, the standard lamp. Let's start with him as a starting point.

So, the load has increased.

If the load on Nginx'e, which does not have any local data that require a second user request came to the same machine with Nginx, that is, we did not have enough of them - we put more machines and everything works further.

If the load is not enough for the current cluster size on Apache, then for a project that does not use local sessions, local caches that require the request to come to the same Apache and the same PHP - we again install more machines and everything works. Standard layout.

In the case of MySQL and Memcached, which is an external data storage, which is needed by all Apache, etc., it’s just impossible to deliver another machine, you need to do something more clever and clever.

So let's start with the development of the database and how it generally scales. The very first, simple, banal method that can be used is vertical scaling: we just take a more powerful piece of hardware, more processor, more disk, and at some point we have enough of it. As long as the piece of iron is of some typical configuration, we can afford it for money, for iron. So it is impossible to grow endlessly.

The more correct option to which everyone comes is horizontal scaling. When we try not to make more powerful pieces of iron, and we make these pieces of iron more, maybe even less powerful than the original piece, we smear the load. This requires changes in the code so that the code takes into account that the data is not stored in one place, not in one basket, but they are somehow spread on the basis of some kind of algorithm.

So, in order to somehow try to scale horizontally, we need at the code level to reduce data connectivity and their independence from the place and method of storage. The simplest things are the abandonment of foreign keys, joines and anything else that needs to be stored in one place.

Another option, when we have several labels do not fit on one server, we distribute them to different servers - the usual solution. This can be done with granularity up to one table per server.

If this is not enough for us, then we divide the plates themselves into parts, we shard them and each piece is stored on a separate machine. This requires the most processing of the code, we cannot even perform the banal select labels, we need to make a select in each shard from a fragment of this table by key and then somewhere to merge them, in the intermediate layer, or in our code.

At this point, the question of further growth becomes relevant - if we have once increased our number of servers, we will most likely need to increase them. If every time we simply increase the number of machines, say from 8 to 16, then when rebalancing the data in these shards - most likely you will have a huge number of data migrations between pieces of the engine, between MySQL. To avoid this huge, undulating, data transfusion between machines, it is better to start virtual shards right away, that is, we say that we do not have 8 shards, but let's say we have 8,000, but the first thousand is stored on the first server, the second thousand on the second server, etc.

If you need to increase the number of shards, we don’t immediately transfer 1000 or 500 of them, but we can start with one small shard. Transferred the shard, everything - it works with the new machine, it is already a little loaded, the other is a little unloaded. The granularity of this transfer is already determined by the project, as it can afford. If you transfer half of the shards at once, we will return to the regular migration scheme - if this is permissible.

No matter how we shard our engines, this is a relational database, it is universal, cool, but it has a certain peak of performance and things that require more performance are cached. About the usual cache: “we did not go to the cache, we went to the database”, we will not talk.

Let us turn to more interesting things that help us cope with the loads, namely in the issue of caching.

The first option is the task of pre-creating the cache. It is useful in cases where we have some kind of code, can be competitive or in large quantities, follow some data into the database at the same time. Let's say a person has posted a post on his wall, information about this is leaving to his friends. If we just do this, then all the code that forms the tape will immediately rush into the cache, there is no cache in this post, the code went to the database, this is not very good. A bunch of code crawls into the database at the same time.

What we can do? We can after creating the post - in the database, immediately create a cache entry, only after that send a message to the tape that the post has appeared. The tape formation code will come to the cache; we are no longer going to the base, the base is not used. If there is enough memory in our caches, they are not restarted. It turns out that we never go to the database for reading, everything is taken from the cache .

Another way to reduce the load on the database is to use expired caches. This is either synchronous processing of counters, or it is data that is stored longer. What's the point? We can in some cases, business logic to give is not the latest data, but to save on this trip to the database. Let's say a user's avatar - if he updated it, we can get the cache right away, it will be updated from the database, or we can update it in the background in a few seconds, friends can see the old avatar for a few seconds, uncritically perfect, but there is no query to the database.

The third option is associated with even greater loads. Imagine that we have a block with friends in the profile. If we want to get an avatar and a name, then we have to go to the database 6 times and get data on each - if after this campaign, we simply save the data to the cache, then they will be cleaned at about the same time.

In order to somehow reduce this load, we can save data to the profile cache not for the same time, but plus or minus a few seconds. In this case: when we re-arrive, then most likely part of the data will still be in the cache. We spread the load on the TTL time, on that range of randomness of storage.

Another way, under even greater load , is when we arrive at the base, but she could not. If a second request arrives, there is no data cache, we go back to the database, which could not do it before, it cannot again , it becomes worse from our paratite load and it can die.

To prevent this from happening, we can sometimes afford to save the checkbox to the cache so that we don’t need to go to the database. The request comes to the cache, there is no entry in the cache, but there is a checkbox "do not go to the database" and the code does not go to the database, it immediately runs through the code branch, as if we could not, and the request does not go to the database - no timeouts, expectations and everything is fine, if your business logic allows it. Do not fall, but give at least some answer without any special burden.

In any case, if caching is introduced into the system, this caching is not built into the permanent storage system, that is, into the database system. There is a problem of validation - if the code is written carelessly, then we can get old data from the cache, or there may be something wrong in the cache. All this requires a more accurate approach to programming.

What to do when the load grows, and then you can not shard

The project had a situation when MySQL could not cope, even downs. Memcached was overloaded so that they had to be restarted in large chunks. Everything worked badly, there was nowhere to grow, there were simply no other solutions at that moment — it was 2007–2008.

How did the project go with such a load

It was decided to rewrite Memcached, which does not work, for a solution that would at least withstand such a load, and then it went. There were engines associated with targeting and other things. We transfer the load from the universal super-solution, but which does not work very quickly, due to its versatility, to small narrow solutions that make a small functional, but make it better than universal solutions. At the same time, these solutions are sharpened precisely for the use, for the load and types of requests that are used on the project.

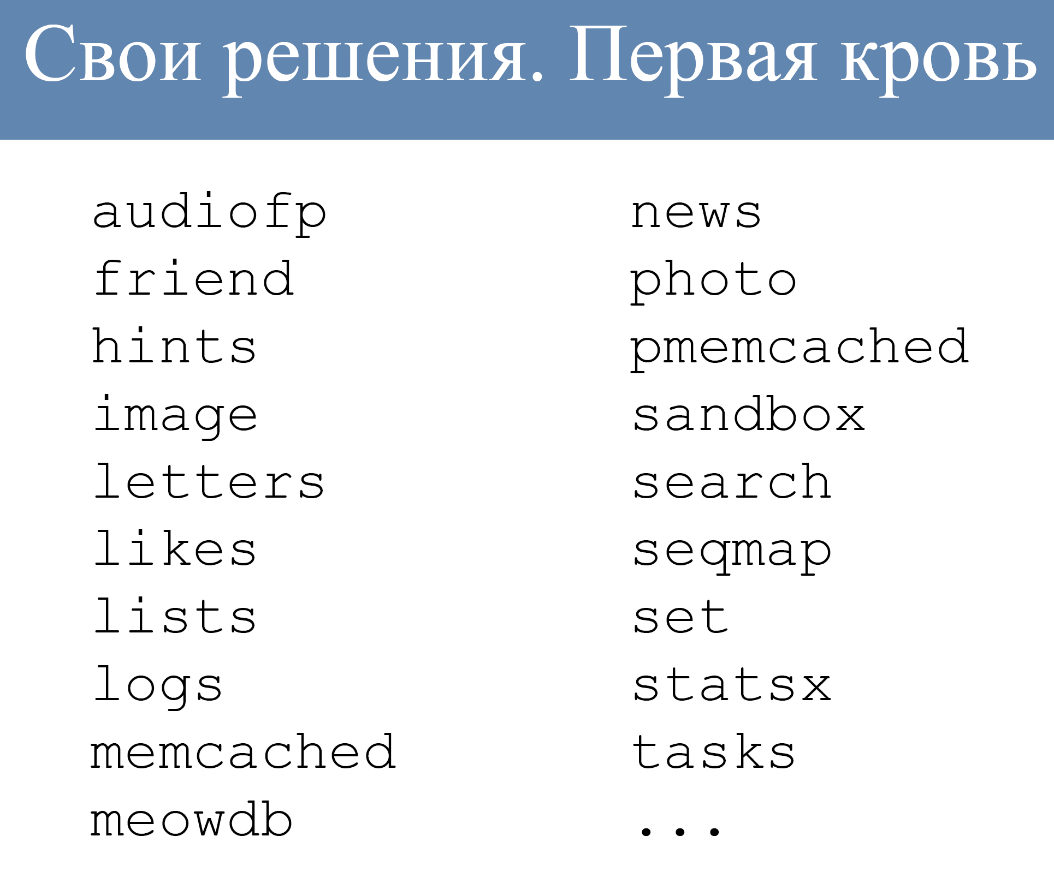

This is a small list of engines that are used now:

There are actually more of them, there are engines that work with queues, with lists, with tapes, a bunch of different engines. Let's say the lists engine handles the processing of lists of something. He is not able to do anything else, he works just under the lists, but he does it well.

What did it look like?

At that moment there were two connectors to external data. There was a connector to Memcached, there was a connector to MySQL. Memcached protocol was chosen for the interaction protocol with the engines, the engines pretended to be Memcached. At the same time, everything was shardish, groups of shards of the same type were combined into a cluster. Access to the cluster as a whole, at the level of code business logic.

It looked like this:

That is, “give me a connection to the cluster with that name,” then we just use the connection in some of our queries.

If on that side, at the level of clusters and engines, usually Memcached, or a converted engine, pretending to be Memcached, then the query looks like this:

Standard solution, normal cache.

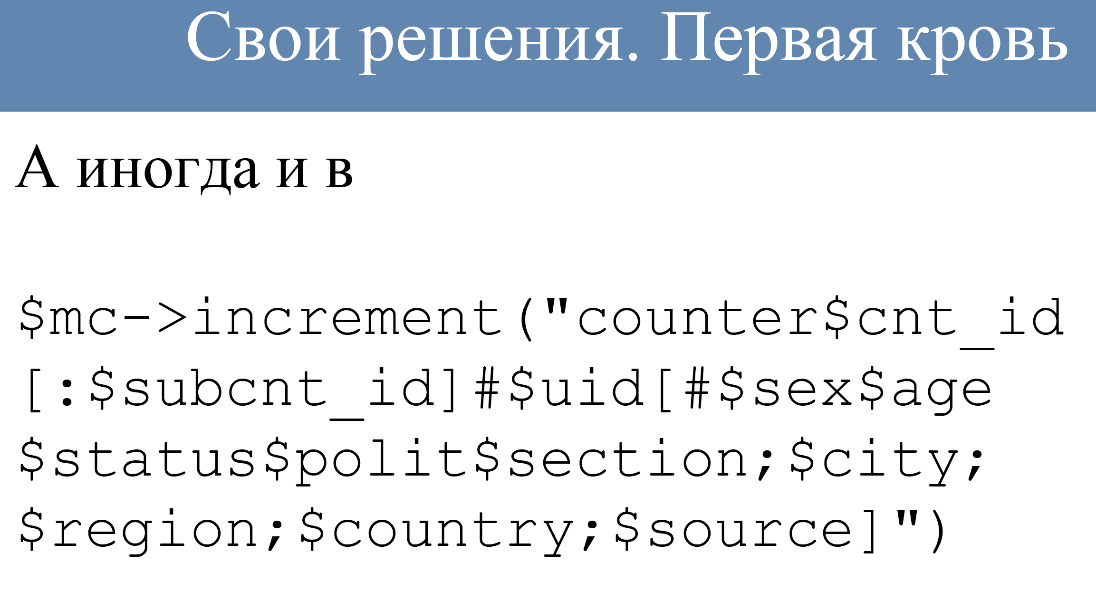

In the case when there is a more specific solution that requires its own protocol, then we had to fence such a garden:

We do not just make a plain key, but we sew up additional parameters into the key, which the engine can already parse on its side and use as additional request parameters. This was required in order to maintain the standard protocol and not to alter anything, and at the same time the engine could accept some additional parameters.

In the case of even more complex engines that require a large number of parameters, the request could look like this:

This is already a complex query - there are special separator characters, optional blocks. But it all worked, quite well. If it’s not handwriting, but using some kind of wrapper to form such a request, then it’s pretty good.

What did it look like?

The code does not go directly to the cluster. There is no need to store the topology in the code, this was done by the proxy - these were special engines that worked on each server with the code. That is, all the connections of the code were in the local engine, and the proxy was already configured by administrators, taking into account the cluster storage topology, it could change on the fly. It didn’t matter where the cluster was, how many pieces it had, what work was done with it — all this doesn’t matter. We connect to the proxy, and then it does its job. In this case, one cluster could go to another cluster through a proxy, if it needed some external data.

How was the shard selected in the cluster

There are two options: we either choose based on the hash of the key entirely, or we are looking for the first number, not a number, namely the number in the key string, use it as a key for further operation - taking the remainder. Standard more or less solution.

A popular solution that works in a rough way is twemproxy from Twitter . If someone has not tried, but at the same time uses a large installation of Memcached or Redis, you can see if it will be useful to you.

All this developed, grew, but at some point the restriction of the protocol arose in the form of a finite number of commands, into which we were trying to shove. These complex queries with a bunch of parameters - it became pretty close to them, there was also a limit on the key length of 250 bytes, and there were a bunch of parameters: string, numeric. It was not very comfortable, there was also a limitation of the text protocol, there was not even a binary one. He limited the size of the answer in megabytes, and necessitated the need for screening binary data: spaces, line breaks and everything else.

This led to the decision to migrate to the binary protocol.

The closest analogue is protobuf . This is a protocol with a previously described scheme. These are not schemaless protocols like msgpack and the like.

It is pre-recorded in the scheme, it is stored as in a proxy - in the config. You can perform queries based on it. The engines began a gradual migration to this scheme. In addition to issues related to solving problems Memcached protocol. Useful buns were obtained from this - for example, they switched from TCP to UDP protocol, this greatly saved the number of connections for servers. If the engine rises, and 300 thousand connections are knocked to it and keep constantly, it is not very cool. It is much nicer when it is UDP packets and everything works much better. Encrypting connections between machines, which for me personally, the most pleasant thing is asynchronous communication.

How does the general query work in general in the same PHP and many other languages?

We send a request to an external database and wait by blocking a worker, thread or process, depending on the implementation. In this case, we send the request to the local proxy - it is very fast, and we continue to work. The flow of executions is not blocked, the proxy itself, asynchronously, for our code goes to another cluster, waits for a response from it, receives it and stores it in local memory for a while.

When we needed an answer to the formation of the answer in the code, we had already made some other requests, also waited for them, carried out. We are going to the proxy: “give us the answer to that request”, it simply copies it from the local memory and everything works quickly and asynchronously. This allows you to write on a single-threaded PHP rather parallelized code .

Do we still have MySQL after migrating to engines? There are already a lot of different engines. They remain here and there, they are quite few, they are mainly used in things related to internal admins, with no load interfaces that are not accessible from the outside. It works - do not touch, everyone is happy. It does not break, okay.

There are things that, unfortunately, have not been rewritten - they are not under pressure, so we will rewrite them sometime, when there will be time. This leads to the names of the plates, the type of these:

" Honestly, this will be the last tablet ." It was created this or that year. She is not experiencing a load, she is, we live with it and all right. I want to burn such things, get rid of them, as if from a relic, but we still have MySQL.

Now let's talk a little bit about PHP.

We have some with engines, which is described. Now, how we lived in PHP .

PHP is slow, it does not need to be explained to anyone, it has weak dynamic typing.

At the same time it is very popular. The project was created in this language, because it allowed to write code very quickly - this is a plus PHP. The size of the code base is already such that it is not really possible to rewrite, we live with this language. We use its pluses and try to level the minuses.

Each project tries to get around the limitations of PHP in its own way. Someone rewrites PHP, someone struggles with PHP itself.

What went on our part

How to process a million requests per second in PHP? If you do not have 100,500 servers. No

It is almost impossible if there is no infinite amount of iron.

VKontakte project went on the development of loads - a translator from PHP to C ++ was written, which translates all the code of the site into sish code. What does he do? It does not implement all the PHP support, but the level of the language that was used on the project at the time of the appearance of KPHP. Some things have appeared since then, but in general this is work at the level of the former code. Since then, little has appeared in it.

What is being done? All code is translated to it - there is a static analyzer, it tries to deduce types, it analyzes the use of variables. If variables are always used as a string, then most likely in the std string code. If it is used as an array of numbers, it will be a vector of ints. This allows the compiler to optimize the resulting code very well.

A pleasant trifle from type inference : the translator, when he sees that a variable is used is very strange (it is passed to a function where another type is specified or transferred to another type of array) - he can throw a warning. A developer collecting his fresh code will see that there is some kind of suspicion here - most likely it is worth going and checking whether the correct code is there or whether it is just a false compiler trigger.

When the site was transferred to KPHP, a large number of errors were found due to static code analysis.

What is the result? We obtained from PHP code - C ++ code, we collected it with additional libraries, and have a regular HTTP server binary. It just starts up as Nginx as upstream and works without any additional layers, wrappers, such as Apache and others.

This is nothing. It just takes the machine, runs a bunch of processes on it, there fork, but it does not matter - it just works.

We have specialized engines, there is PHP code, which is translated into KPHP engines.

How we live with it (life examples)

- Who uses storing configurations in regular ini, json, yml files and so on, is there such?

- And who uses the configuration in the code? Or using code at the level of storing it in source codes? Already less.

- And who uses external configuration storage systems that can send you the current config on demand? More.

Our option is the third option . Used external storage, what does it look like?It is similar to Memcached, we have Memcached protocol involved, while it is divided into master and slave.

Master is writing nodes, slaves are engines that are running on each server locally, where code is used. The code always, when receiving a config, goes to a local replica, on which there is code - it is distributed from the master to all the slaves. At the same time, the slaves have protection against data aging - if the data is already old there, the code will not start, there important things may change in the config and it’s better not to work. Let it be better we will not be executed, than we will be executed with old configs.

This allows you to scale the reading of the config almost infinitely, that is, we get our own config on each server. At the same time, the high speed of request distribution is a fraction of a second across all the machines that are running.

The next option.

Who can say what this request may lead to: a normal request to Memcached, let's say it is executed to the site, it has a constant key, it’s not some kind of variable, but the same one. Any idea what is really bad could happen? Lies all site.

Why one banal request that gets on every hit with a heavy load can break everything? The constant key, regardless of the sharding algorithm, was turned off by the hash - we take the first number. We somehow get to the same shard of the same cluster, the entire volume of requests goes to it. The engine becomes bad, it either falls, or starts to slow down. The load is spread by the proxy to other machines, but in general it becomes bad for this shard, it becomes bad for the proxy who save their turn in it - they have timeouts, additional resources for processing requests of this shard, the proxy becomes bad and the site drops, because the proxy is a binder link the entire site. This is hard to do, but still rather unpleasant.

What can you do about it?

First, do not write such code, the usual solution .

But what to do when you can not write such code, but you want?

For this, sharding of the key is used - for things related to the attendance calculation, for example, set increments of some counters. We can write an increment not in one counter, but spread it on 10 thousand keys, and when we need to get the value of the counter, we do a multi get of all the keys and simply add in our code. We spread the load on the Nth number of shards, and at least we did not fall.

If the load is even more and we cannot scale the keys even more, or we don’t want it — we don’t need a super-exact value, we can incrementally use the counters with probability, for example, 1/10 and while reading back the values, we simply multiply the resulting value by this probability coefficient and we get a close value to what we would get if we considered every single hit. We spread the load on a certain number of cars.

Another option - the site began to slow down .

We see that the load on the cluster of total Memcached has increased, a bunch of shards that cache everything. What to do with it? Who is guilty?

In this embodiment, it is not known, because the load has increased in general for everything, for the general cluster - it is not clear. We have to deal with reverse engineering commits, look at the history of changes.

What can be done here? It is possible to divide universal clusters into concrete small shards. Want photos of your friend's cat, relatively speaking, keep you one small cluster. Use it only and do not climb into the central one; at the same time, when the load on this cluster grows, we see that it is he - we know what functionality has been loaded and the load on this cluster does not affect all the others at all. That is, even if it falls completely, the whole cluster, no one will notice anything in other clusters.

Another option .

Regarding the previous question - about the "did not fall." So much so that, if, say, a section falls, for example, messages, and a person watches a video with cats. If he doesn’t go up and send it to his friend’s friend, he won’t even know that there were any problems. He will also successfully listen to music, watch news feeds, and so on. He works with the part of the site that does not depend on what is broken.

The third option .

Suppose we have 500th errors from PHP, that is, Nginx fronts receive errors from upstream KPHPs. Rolled out a new functionality, not tested. What to do?

In case we have a common KPHP cluster that processes the entire site, it is unclear.

If we have a division of KPHP into clusters: separately for the API, separately for the wall, and so on, you might think. We also see: “the problem is with this” and we know which group of methods, which commits to watch, and who is to blame. Moreover, if this is not a massive problem, when everything really broke, and a one-time event - a single failure, then Nginx has the opportunity to send the request again to another KPHP server. Most likely, if it was a one-time problem, the repeated request will pass and the person will receive his correct and well-processed answer after some waiting time.

findings

Each project with its growth is experiencing some kind of load and makes those decisions that at the moment seem appropriate. You have to choose from the existing solutions, but if there are no solutions, you have to implement your own - go beyond the standard, popular approach used in the community to combat these solutions. But if everything is good within the project, everything is enough, then it is better to use some ready-made solutions, tested, proven ideas of the community. If there are none, then everyone decides for himself.

The meaning of the entire report - you need to smear it in all parts of your project.

So tnank you.

Report: Architecture of the growing project on the example of VKontakte .

Source: https://habr.com/ru/post/322562/

All Articles