Smart feeder: Machine Learning, Raspberry Pi, Telegram, a little learning magic + assembly instructions

It all started when my wife wanted to hang up a bird feeder. I liked the idea but immediately wanted to optimize it. Daylight in winter is short, and there is no time to sit and watch the feeder during the day. So we need more Computer Vision!

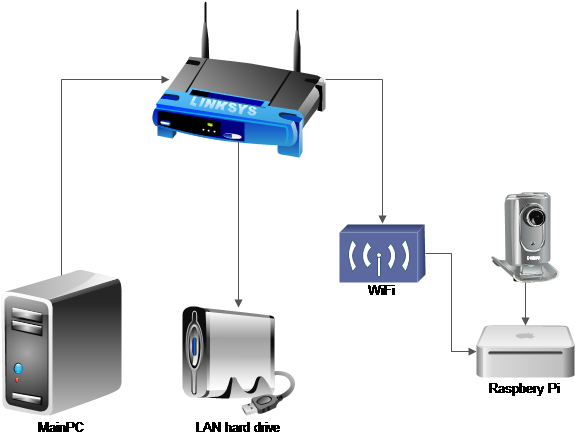

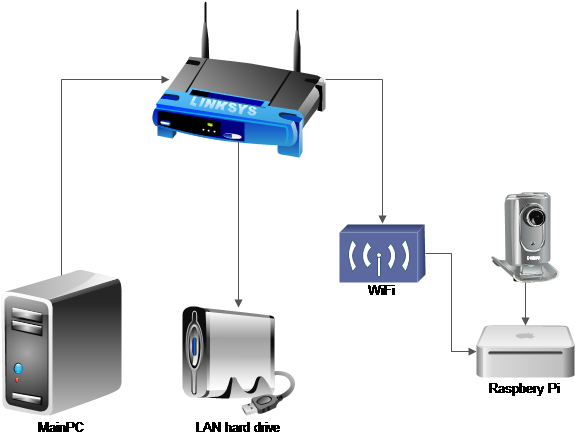

A piece of my home network that is responsible for the project has the following configuration:

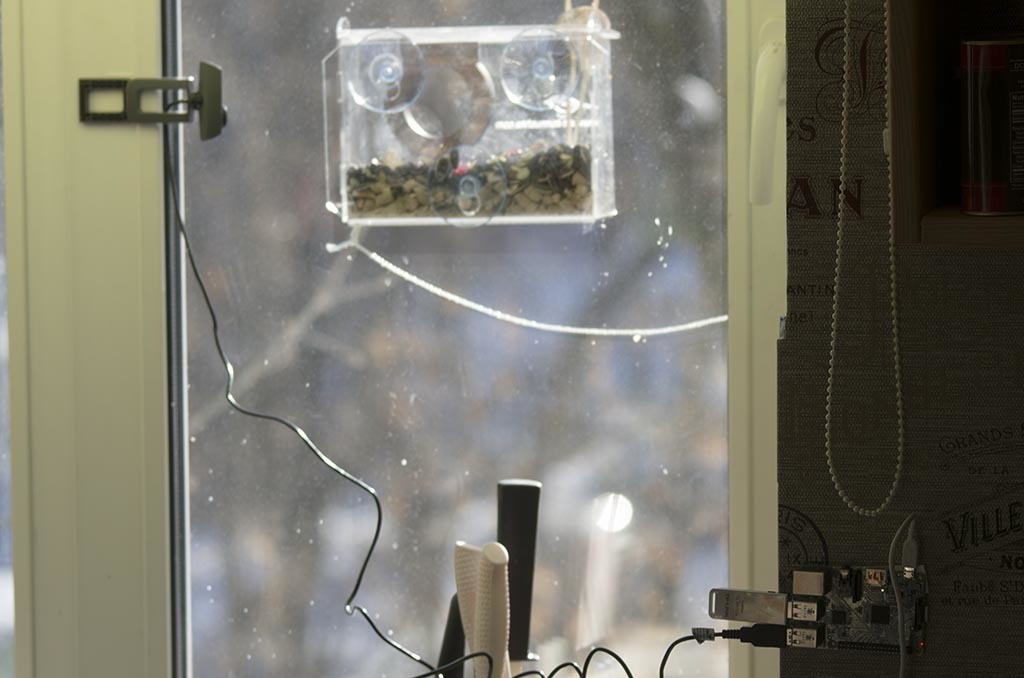

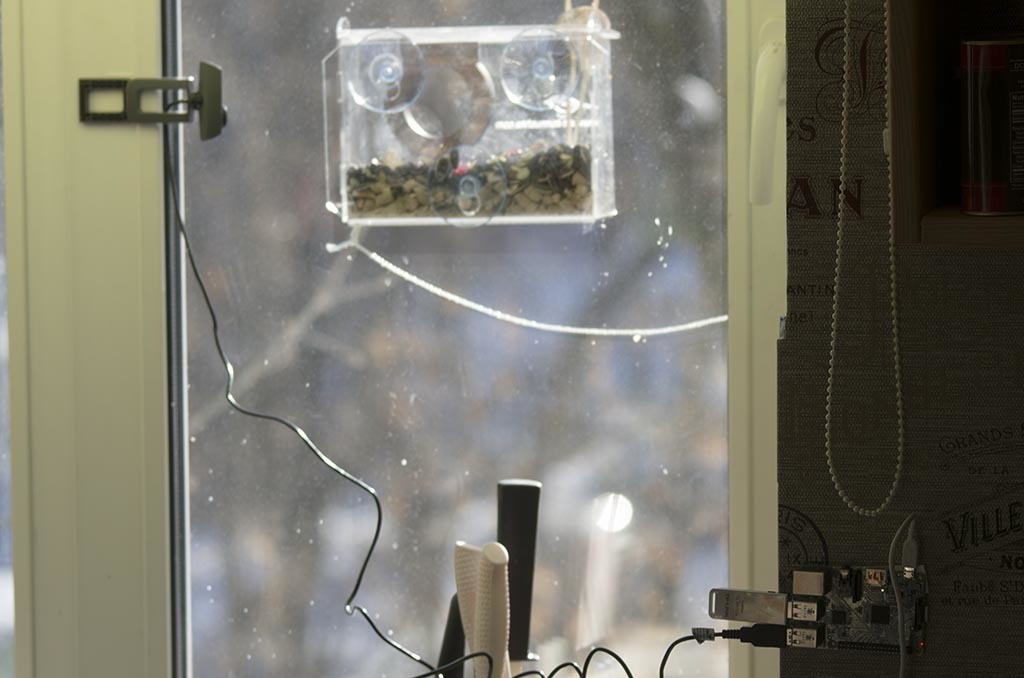

Rpi + camera hang near the window. I spent a lot of experiments to find a convenient mount and a good view:

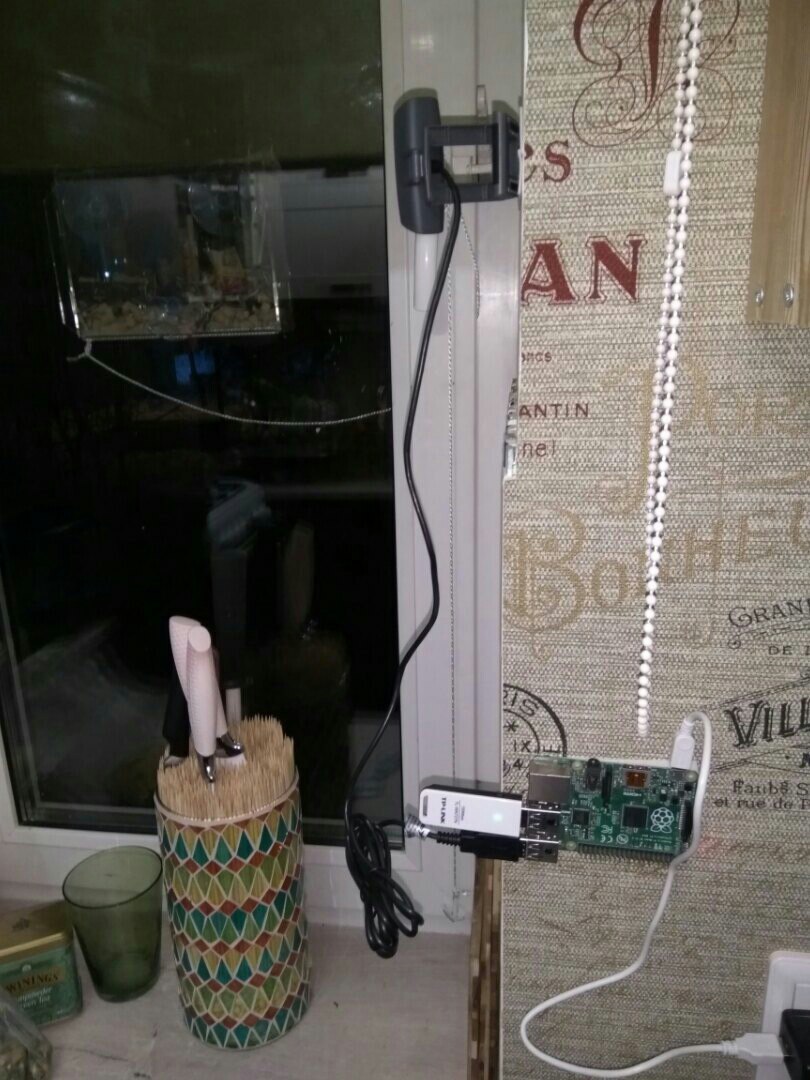

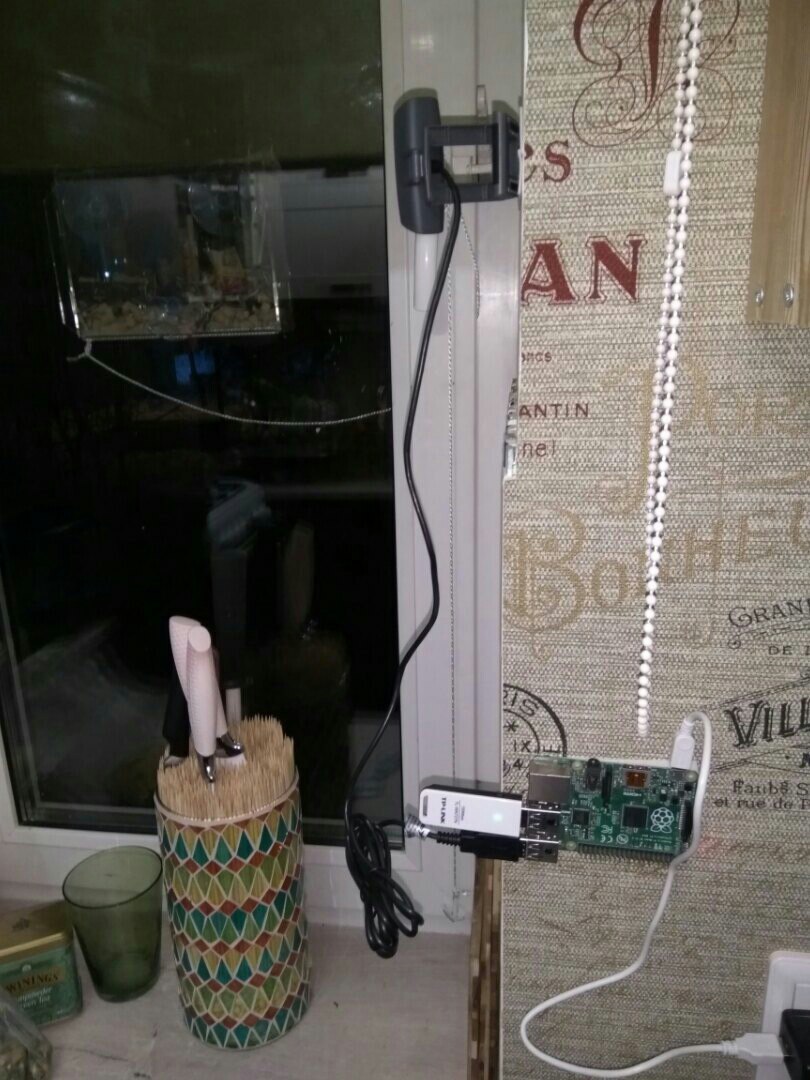

As a result, stuck in a stand for knives. It is mobile, you can gain a base from different angles. Not to say that my wife liked this idea, but I assure you that this is a temporary solution:

Pictures are as follows:

The quality of the webcam is not so hot. But, in principle, it is clear what is happening.

Let's return to architecture. In Rpi, a WiFi module is plugged in that is connected to the router. Photos are saved to a network drive (WD MyBook live). This is a prerequisite for dialing a base (there will not be enough for RPi flash drives). When used, of course, can be disabled. But it is convenient for me.

Rpi itself hangs without a monitor. Its management, programming and configuration is now done from the main computer via SSH. In the beginning, I set something up by inserting it into the monitor, but this is not necessary.

A well-assembled base is much more complicated than choosing the right neural network. Using broken markup or non-representative data can degrade system quality far more than using VGG instead of ResNet .

Base collection is a mass of manual, even unskilled labor. Especially for marking large databases there are Yandex.Toloka and Amazon Mechanical Turk services. I will refrain from using them: I will mark everything manually by myself, not for long here. Although, maybe it would make sense to drive there, to practice using it.

Naturally, I want to automate such a process. To do this, consider what we want.

What is the base in our situation:

In fact, the event "arrival of the bird." For this event, we must determine what kind of bird flew in and make a good shot.

The easiest thing to do is to make a trivial "motion detector" and dial its entire output. Motion detector will do the easiest way:

Code on several lines:

The detector worked on any stirrer. At its beginning and at its end. As a result of the detector operation, a base of approximately 2,000 frames was recruited during the week. We can assume that there are birds in every second frame => approximately 1000 images of birds + 1000 images of non-birds.

Given that the viewpoint does not move much - we can assume that the base plus minus is sufficient.

For the markup, I wrote a simple program on python. Bottom, source links. Thank you so much my wife for helping us with markup! Two hours of time killed :) And a couple of hours I spent myself.

For each picture two signs were marked:

In total, we have for each image a vector of two quantities. Well, for example, here:

Obviously, the quality is zero (0), and the big tit is sitting (2).

In total, about half of the frames with birds turned out, half the empty ones. At the same time, the tit Azureque was only 3-5% of the base. Yes, it is difficult to get a large base from them. And yes, learning from these 3-5% (~ 40 pictures) is unreal. As a result, I had to train only in ordinary tits. And hope that sooner or later the base of the azure will be much more.

Now I will skip through the stage to preserve the continuity of the narrative. About the network, the choice of the network and its training will be discussed in the next section. Everything was more or less studied there, except for the blue tit. On the basis of the percentage of frame recognition accuracy was somewhere 95%.

When I say that machine learning in contests and in reality - these are two things that are not related to each other - they look at me like a psycho. Tasks for machine learning at competitions are a matter of optimizing grids and finding loopholes. In a rare case - the creation of a new architecture. The tasks of machine learning in reality is a matter of creating a base. Set, markup, automate further retraining.

I liked the task with the feeder largely because of this. On the one hand, it is very simple - it is done almost instantly. On the other hand, it is very indicative. 90% of the tasks here are unrelated to contests.

The base that we recruited above is extremely small for tasks of this type and is not optimal. It does not imply "stability." Only 4-5 camera positions. One weather outside the window.

But it helps to create the “first stage” algorithm. Which will help you gain a good base.

Modify the detector that I described above:

How to set the base!? And what did we do before?

We used to get the usual base. And now - we are recruiting a database of errors. In one morning, the net produced more than 500 situations identified as tits:

But let me! Maybe your mesh is not working? Maybe you confused the channels when you transferred the image to from camera to grid?

Unfortunately not. This is the fate of all the grids trained on a small amount of data (especially for simple networks). In the training set there were only 6-9 different camera positions. Few highlights. Little extraneous noise. And when the grid sees something completely new - it can throw out the wrong result.

But it's not scary. After all, we screwed the collection base. Only 300-400 empty frames in our base - and the situation is improving. Instead of 500 false alarms in the morning, they are already zero. Only here something and birds were detected only 2/3 of their total number. These did not recognize these:

For their collection and it is "else" in the code above. View the base of the motion detection for the whole day and select 2-3 passes simply. For these pictures it took me time - twenty seconds.

The actual implementation of the system is a permanent workflow, where the grid has to be tightened every few days. And sometimes introduce additional mechanisms:

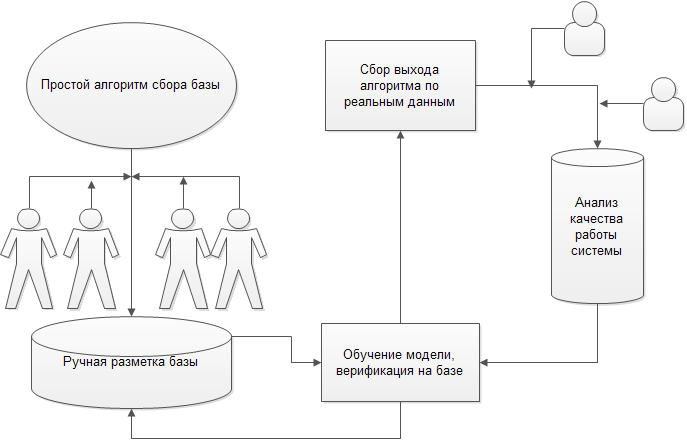

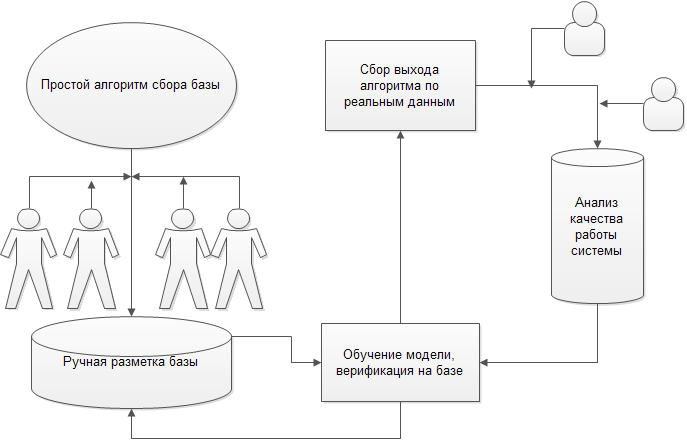

The solution of the problem in practice is the construction of such a scheme, with all the processes of additional training, retraining, collecting and optimizing the collection.

To invent and build a model is much more power than to train a good model. Often it is necessary to use ancient algorithms: SIFT, SURF. And sometimes take a trained grid, but from a completely different task. For example, a face detector.

It's all? Is the base ready? Does the system work? Of course not . Outside the window is soft, white and fluffy snow. But it becomes hard and icy. Spring is coming. In the last two days, they spoiled the spoons again:

The sun does not shine from there. The snow melted. Kapel pounded.

A good base for this task is spring + winter + summer + autumn. For all the birds, for different types of the window, for different weather. I already wrote a long-long article here about how to build a database.

It seems to me that such a task requires a base of at least 2-3 thousand frames for each bird in different conditions.

There is no such thing yet.

The base can be supplemented automatically in order to generate distortions. This greatly increases stability. I did not do all possible increments. You can do more and raise quality. What did I do:

And this could be added:

To be honest, I did not fully introduce all these distortions. Need more time. But still the quality of the base is not surpassed. It is not Kaggle to fight for the fractions of a percent.

One of the main questions that I wanted to understand for myself was the possibility of running ML frameworks for CV on simple devices. For example on the Raspberry Pi.

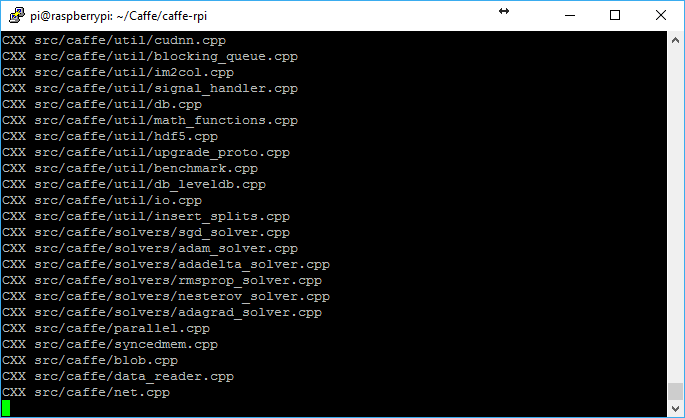

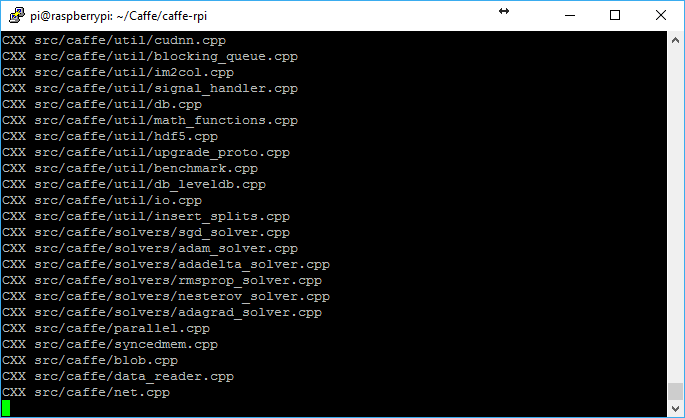

Thank God that someone thought this for me. GitHub has a useful repository with almost no instructions.

On RPi B + Caffe and everything you need for it, you can assemble and install it somewhere in a day (you need to approach yourself once an hour and shove the next team). On RPi3, as I understand it, it can be much faster (in 2-3 hours it should cope).

In order not to litter the article with a bunch of Linux commands, I just drop the link here , where I described them all. Caffe is going! Works!

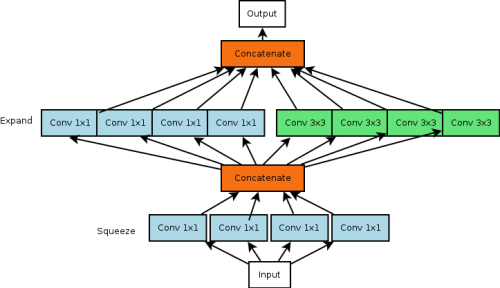

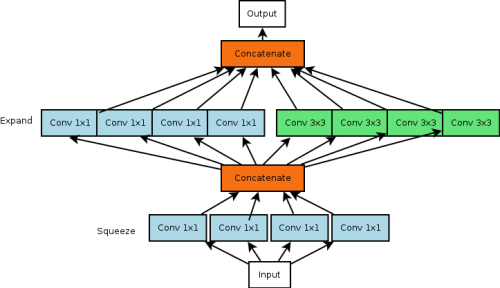

Initially, I thought to use some kind of unpretentious mesh, such as CaffeNet , or even VGG16. But the author of the port of Caffe under RPi strongly advised using SqueezeNet . I tried - and I liked it. Fast, enough memory takes up. Accuracy, of course, not the ResNet level. The first attempt to deploy the network was not very successful. The network ate 500 MB of RAM out of my 400 free. I quickly realized that the main problem was in the output layer. He was from ImageNet at 1000 pins. I needed only a dozen output neurons. This reduced the size of the network immediately to 150 meters. The grid itself is very interesting. In accuracy, it is comparable to AlexNet. In this case, 50 times faster, according to the authors. The grid itself implements the following principles:

Total There is a local component:

And there is a global one created from these local ones:

Good ideas. On RPi3, apparently, it gives real time (I sense that there should be 10-15 fps).

On my RPi B + it gave 1.5-2 frames per second. Well, for more, I honestly did not count.

The authors of the port under Caffe used a mesh through C ++ to improve performance. But I dragged to Python. It is much faster to develop.

First, as I said above, I had to change the last fully connected layer:

I replaced it with two outs. At one exit - the presence of a bird + its type. The second is quality.

The final speed of work on RPi B + of such a thing is ~ 2-3 seconds per frame + its preprocessing (cleaning the code from converting unnecessary, teaching in a format in which OpenCV directly receives data - will be 1.5-2 seconds).

In reality, learning the layer on "quality" is still a trouble. I used three approaches (yes, you can approach correctly and take special layers of losses. But laziness:

Left - the last. He is the only one who has ever worked.

With the birds in the base everything is fine. 88% -90% correct classifications. In this case, of course, 100% loss of all azureworms. After I got the base - the quality has improved.

Even slightly improved due to increments from the database (described above).

Go to the last mile. Need to deliver pictures to the user. There were several options:

After reading about Telegram and making sure that everything is not so terrible there, plus there are some mysterious “channels” - I decided to use it. Begin to fear. It seemed that I had to take a day or two to complete the task. Finally I got up with the spirit to read the documentation about the front of work. Allocated an hour in the evening.

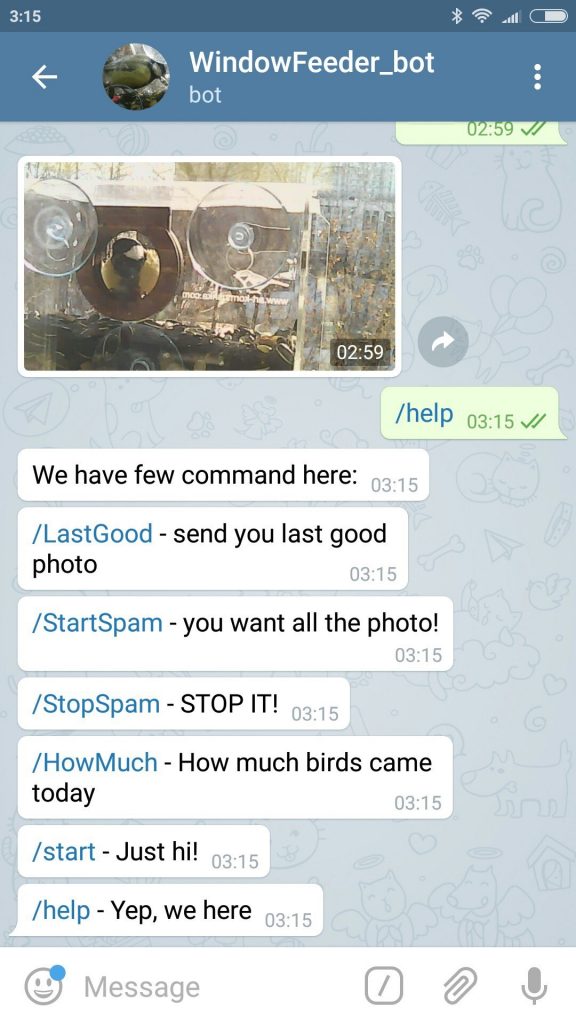

And then I was pleasantly surprised. Incredibly pleasantly surprised. This hour was almost enough for me to write and connect everything that I had in mind. No, of course I'm lying. Spent 2 hours. And then another one and a half to fasten an unnecessary whistle. So it is all simple / convenient / recklessly works. Essentially make a bot:

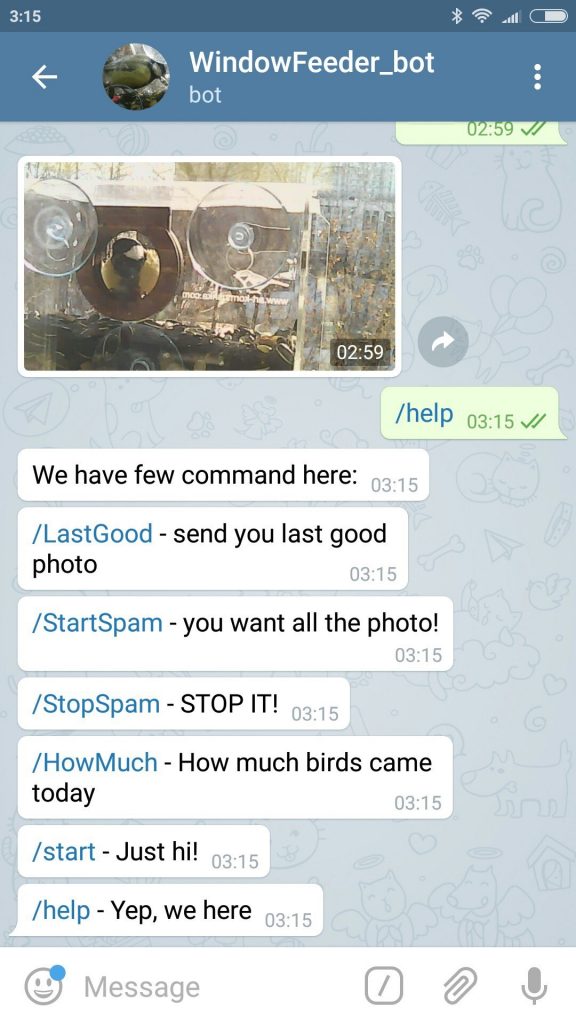

As I understand it, handling the /start and /help commands is strongly recommended.
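A minimal sketch of what handling these two commands might look like, independent of any particular bot library. The reply texts and the `handle_command` and `api_url` helpers are hypothetical and are not taken from the project; only the `https://api.telegram.org/bot<token>/<method>` URL scheme comes from the Telegram Bot API.

```python
# Hypothetical sketch: reply texts and helper names are illustrative,
# not taken from the project's Capture.py.

HELP_TEXT = "I post photos of birds that visit the feeder. Commands: /start, /help"


def api_url(token, method):
    """Build a Telegram Bot API URL, e.g. for sendMessage or sendPhoto."""
    return "https://api.telegram.org/bot{}/{}".format(token, method)


def handle_command(text):
    """Return the reply for an incoming message, or None if it is not a known command."""
    parts = text.strip().split()
    if not parts:
        return None
    # Commands may arrive as "/help@SomeBot" in group chats; drop the suffix
    command = parts[0].split("@")[0].lower()
    replies = {
        "/start": "Hello! " + HELP_TEXT,
        "/help": HELP_TEXT,
    }
    return replies.get(command)


print(handle_command("/start"))
print(api_url("<token>", "sendMessage"))
```

Keeping the dispatch in a pure function like this makes it testable without a network connection; the actual sending would go through whichever Python API wrapper is used.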

If I ever build a smart home, I suspect it will not do without a bot like this.

What the bot can do (the link to the bot is below):

The bot is combined with the recognition code. Its source is in the repository, in the file Capture.py.

A link to the bot and to the channel with its output is below, at the end of the article.

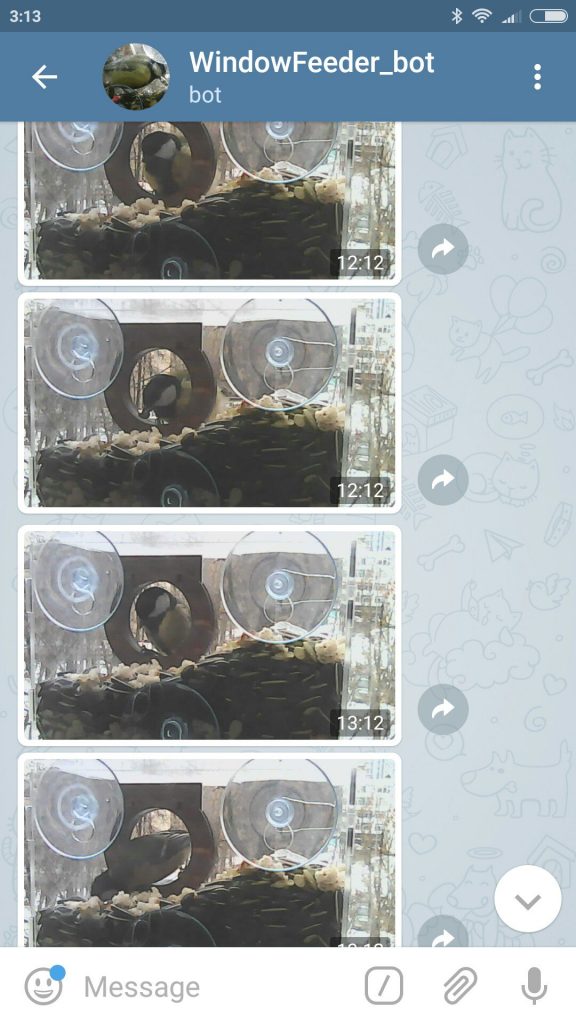

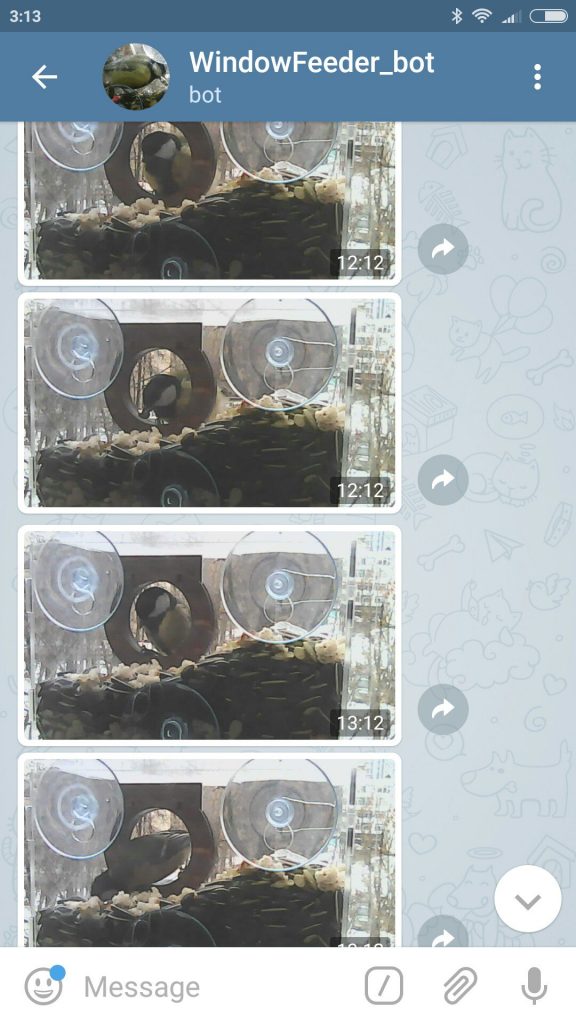

And this is what spam mode looks like:

I have a few ideas on how to improve the system and what to do next:

There are more global plans too. Ideally, by next winter I would like to make a fully autonomous feeder, hang it at the country house and manage it from the city. But a number of problems have to be solved first. First, find a decent provider and build a system that does not consume an insane amount of traffic. Second, make a feed dispenser that can be controlled remotely. Third, package the whole contraption properly into a single unit.

I did much of this in parallel with other tasks, so the estimate may be off. If you want to build the same thing yourself, my source code will shorten part of the way, but you will still have to repeat a lot.

In reality the whole thing took three or four weeks. I think that with the sources and a newer RPi it can be done 2-3 times faster.

Below are links showing how it all looks when running, links to all of the source code, and links to all the frameworks, guides and forums I used.

There are several limitations:

I hope that did not scare you off.

Here is a link to the bot - @WindowFeeder_bot

And here again the channel - t.me/win_feed

Project repository .

Here are the programs for labeling the dataset, for Windows and for Ubuntu (for some reason OpenCV reports keyboard codes differently on the two, and I was too lazy to deal with it).

Here is an example of network training. In my opinion it is a useful template for Caffe: whenever I train something myself, I end up with a very similar script.

This is the main code. It implements motion detection, the Telegram bot and the neural network for recognition.

Here is the photo dataset. Most photos have a text file with two digits: the first is the bird type, the second is the image quality (relevant only when a bird is present). There are also pictures without text files; those never contain a bird. I took part of the data from VOC2012 to create a subsample of images completely unrelated to the topic, so do not be surprised if you see cats or dogs.
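For readers who want to load this dataset, the label format described above could be read roughly like this. It is a sketch under assumptions: the file naming and the separator between the two digits are not stated here, so the code accepts the digits with or without whitespace between them, and `parse_label` and `label_for` are hypothetical helpers.

```python
def parse_label(text):
    """Parse label text containing two digits: bird type, then image quality.

    Returns (bird_type, quality). Accepts "2 0", "2\n0" or "20", because the
    exact separator is an assumption.
    """
    digits = [int(ch) for ch in text if ch.isdigit()]
    if len(digits) != 2:
        raise ValueError("expected exactly two digits, got %r" % text)
    bird_type, quality = digits
    if not 0 <= quality <= 8:
        raise ValueError("quality must be on the [0, 8] scale")
    return bird_type, quality


def label_for(image_has_text_file, text=""):
    """Images without a text file never contain a bird: return None for them."""
    return parse_label(text) if image_has_text_file else None


print(parse_label("2 0"))  # type 2 (great tit) with quality 0, as in the article's example
```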

If you collect your own dataset, adding mine should improve stability.

I left out quite a lot of detail on configuring the RPi in this article. On my blog I described some of the configuration steps in more detail.

Plus, I cut some ideas that went nowhere from this article; they are there too. There are 4 notes on the topic in total: 1 , 2 , 3 , 4 .

The Caffe build for Raspberry Pi was taken from here .

It has almost no installation instructions. You can read about it on my blog, or try following the closest guide (skipping the hdf5 part).

A guide to running OpenCV on RPi.

A useful instruction for creating a bot in Telegram. I took most of the bot examples from here . A good repository (API for Python).

If someone wants their own birds recognized and has an RPi but no machine to train the network on their data, send me a dataset labeled in the format described above. I will retrain with my own data added and publish the result for everyone.

I was very afraid that no birds would come today and everything would look broken: I post the article and the channel is empty. But one has already arrived, which made me happy. Maybe there will be more.

The idea was simple: a bird flies in, whoosh, and it shows up on my phone. All that remained was to figure out how to do it and implement it.

In the article:

- Running Caffe on a Raspberry Pi B+ (I had long wanted to do this)

- Building a data collection system

- Neural network selection, architecture optimization, training

- Wrapping it up: choosing and attaching an interface

All the sources are open, and the full deployment procedure for the resulting setup is described.

To be honest, I was not sure everything would work out, and I was not sure about the final architecture either; it changed quite a lot along the way. That is why I am writing on Habr only now that there is a finished version. The ups and downs of development can be followed on GitHub and on my blog, where I posted short progress reports (links at the end of the article).

The idea "I want to recognize birds" can be implemented in dozens of ways. At the start I wanted the system to automatically detect birds arriving at the feeder, determine their species, pick the best photo, publish it somewhere and keep attendance statistics. Not all of this worked out.


Components

- System core: a first-generation Raspberry Pi, model B+. It had been lying in a box for a year and a half with almost nothing to do. Today it would make more sense to take an RPi 3: it has more memory and is faster. An RPi 3 costs around 3-3.5 thousand rubles.

- SD card for the RPi. I think it is 32 GB. I bought it long ago and do not remember the price; somewhere around 500-1000 rubles.

- Power supply (microUSB). Taken from my own stock. Again, around 500-1000 rubles.

- Camera:

  - Initially I planned to use the basic RPi camera (Raspberry Pi Camera rev 1.3), which was in the same box. But it did not start. Judging by my tests, either the RPi connector or the cable is dead. Another possibility is that the drivers broke. I will figure out what is wrong in the near future. Such a camera costs 1.5-2.5 thousand rubles, depending on the lens and specifications.

  - So as a starting solution I plugged in an ordinary computer webcam, a Creative FaceCam100x; luckily the RPi B+ has plenty of USB ports. I did not buy it for this project; it costs around 1 thousand rubles. It is a poor option because the image quality is miserable, but it will do for collecting a dataset and testing.

- WiFi dongle: I took the most common one, a TP-Link TL-WN727N, for 400 rubles.

- The feeder itself. I was too lazy to build one, so I bought a ready-made one. It cost around 2 thousand rubles with delivery.

Infrastructure

The part of my home network responsible for the project is configured as follows:

The RPi and the camera hang near the window. I ran a lot of experiments to find a convenient mount and a good view:

In the end I stuck it into a knife block. The block is portable, so you can collect data from different angles. I cannot say my wife liked the idea, but I assure you it is a temporary solution:

The pictures look like this:

The webcam quality is not great, but in principle it is clear what is going on.

Back to the architecture. A WiFi module is plugged into the RPi and connected to the router. Photos are saved to a network drive (WD MyBook Live). This is necessary while collecting the dataset (there is not enough space on the RPi's flash card). In normal use it can of course be disabled, but it is convenient for me.

The RPi itself runs without a monitor. It is managed, programmed and configured from my main computer over SSH. At the very beginning I set a few things up with a monitor connected, but that is not necessary.

Dataset collection

Collecting a good dataset is much harder than choosing the right neural network. Broken labels or non-representative data can degrade system quality far more than using VGG instead of ResNet.

Dataset collection is a mass of manual, even unskilled, labor. For labeling large datasets there are services such as Yandex.Toloka and Amazon Mechanical Turk. I will not use them: I will label everything by hand myself, since there is not much here. Although it might make sense to try them, just for practice.

Naturally, I want to automate this process. To do that, let us consider what we need.

What the dataset is in our situation:

- The system works with video, so the dataset should consist of video frames. An old RPi definitely does not have the power to analyze video sequences.

- The system should recognize birds in the video, so the dataset needs example frames with birds as well as frames without them.

- The system must assess image quality, so the dataset needs labels that rate the quality of each frame.

- The dataset should contain a set of frames for each bird species the system recognizes.

In essence, the unit is the event "a bird has arrived". For this event we must determine which bird flew in and take a good shot.

The easiest approach is to make a trivial motion detector and save all of its output. The simplest possible motion detector will do:

The code is a few lines:

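```python
import cv2
import time

video_capture = cv2.VideoCapture(0)
video_capture.set(3, 1280)  # property 3: frame width
video_capture.set(4, 720)   # property 4: frame height
video_capture.set(10, 0.6)  # property 10: brightness
ret, frame_old = video_capture.read()
i = 0
j = 0
while True:
    time.sleep(0.5)
    ret, frame = video_capture.read()
    diffimg = cv2.absdiff(frame, frame_old)  # per-pixel difference with the previous frame
    d_s = cv2.sumElems(diffimg)              # sum of the difference per channel
    d = (d_s[0] + d_s[1] + d_s[2]) / (1280 * 720)  # average change per pixel
    frame_old = frame
    print(d)
    if i > 30:  # ignore the first 30 iterations after start-up
        if d > 15:  # motion threshold: save the frame
            cv2.imwrite("base/" + str(j) + ".jpg", frame)
            j = j + 1
    else:
        i = i + 1
```

Detector result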

The detector fired on any movement, both when it started and when it ended. Over a week it collected about 2,000 frames. We can assume that every second frame contains a bird, which gives roughly 1,000 images with birds and 1,000 without.

Given that the viewpoint does not move much, we can assume the dataset is more or less sufficient.

Dataset labeling

For labeling I wrote a simple program in Python; links to the source are at the bottom. Many thanks to my wife for helping with the labeling! She killed two hours on it :) and I spent a couple of hours myself.

Two attributes were labeled for each picture:

- Bird type. Unfortunately, only two species of tit visited me, so there are three types:

- Image quality. A subjective score on the scale [0, 8].

So for each image we have a vector of two values. For example, here:

Obviously, the quality is zero (0), and a great tit is sitting there (2).

In total, about half of the frames contained birds and half were empty. Blue tits made up only 3-5% of the dataset. Yes, it is hard to collect a large set of them. And yes, training on those 3-5% (~40 pictures) is unrealistic. As a result I had to train on great tits only and hope that sooner or later there will be many more blue tits in the dataset.

Dataset expansion

Here I will jump ahead one stage to keep the narrative continuous. The network, its selection and its training are discussed in the next section. Everything there trained more or less well, except for the blue tit. On the dataset, per-frame recognition accuracy was around 95%.

When I say that machine learning in contests and machine learning in reality are two unrelated things, people look at me as if I were crazy. Contest tasks are about optimizing networks and finding loopholes, and in rare cases about creating a new architecture. Real-world machine learning is about creating the dataset: collecting it, labeling it and automating further retraining.

I liked the feeder task largely because of this. On the one hand it is very simple and is done almost instantly. On the other hand it is very representative: 90% of the work here has nothing to do with contests.

The dataset collected above is extremely small for tasks of this type and is not optimal. It does not provide robustness: only 4-5 camera positions and a single kind of weather outside the window.

But it is enough to build a "first stage" algorithm, which will help collect a good dataset.

Let us modify the detector described above:

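```python
if (d > 20):
    frame = frame[:, :, [2, 1, 0]]  # swap the channel order (OpenCV delivers BGR)
    transformed_image = transformer.preprocess('data', frame)  # Caffe preprocessing
    net.blobs['data'].data[0] = transformed_image  # load the frame into the network
    net.forward()  # run recognition
    if (net.blobs['pool10'].data[0].argmax() != 0):  # class 0 is presumably "no bird"
        # misc is presumably scipy.misc; the quality class goes into the file name
        misc.imsave("base/" + str(j) + "_" + str(net.blobs['pool10_Q'].data[0].argmax()) + ".jpg", frame)
        j = j + 1
    else:  # motion fired but no bird was recognized: a possible miss
        misc.imsave("base_d/" + str(k) + ".jpg", frame)
        k = k + 1
```

Collecting the dataset?! Then what were we doing before?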

Before, we were collecting an ordinary dataset. Now we are collecting a dataset of errors. In one morning the network produced more than 500 frames identified as tits:

But wait! Maybe your network does not work? Maybe you mixed up the channels when passing the image from the camera to the network?

Unfortunately not. This is the fate of all networks trained on a small amount of data (especially simple networks). The training set had only 6-9 different camera positions, little glare and little extraneous noise. When the network sees something completely new, it can produce a wrong result.

But this is not a problem, since we have built in dataset collection. Just 300-400 more empty frames in the dataset, and the situation improves: instead of 500 false alarms per morning there are now zero. But now only about 2/3 of the birds were detected. These, for example, were missed:

That is what the "else" branch in the code above is for. Looking through a whole day of motion-detector output and picking out the 2-3 misses is easy. For these pictures it took me about twenty seconds.
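The routing logic of this second-stage collector can be isolated into a small pure function, which makes it easy to test without a camera or Caffe. This is only a sketch: the function name and return values are hypothetical, the threshold of 20 and the folder names come from the snippet above, and class 0 is assumed to mean "no bird".

```python
MOTION_THRESHOLD = 20  # same threshold as in the modified detector


def route_frame(motion_score, bird_class, quality_class):
    """Decide where a frame goes.

    Returns None when there is no motion, ("base", suffix) when the network
    reports a bird, and ("base_d", None) when motion fired but the network
    saw no bird (a candidate miss to review by hand).
    """
    if motion_score <= MOTION_THRESHOLD:
        return None
    if bird_class != 0:  # assumption: class 0 is "no bird"
        return ("base", str(quality_class))
    return ("base_d", None)


print(route_frame(35.0, 2, 1))
print(route_frame(35.0, 0, 0))
```

Reviewing `base` by hand yields the false alarms and reviewing `base_d` yields the missed birds; both kinds of error go back into the training set.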

A real system is a permanent workflow in which the network has to be retrained every few days, and sometimes additional mechanisms are introduced:

Solving the problem in practice means building such a scheme, with all its processes of fine-tuning, retraining, data collection and collection optimization.

Designing and building such a scheme takes much more effort than training a good model. Often you have to use ancient algorithms such as SIFT and SURF, and sometimes take a trained network from a completely different task, for example a face detector.

Is that all? Is the dataset ready? Does the system work? Of course not. Outside the window the snow was soft, white and fluffy, but it is turning hard and icy. Spring is coming. In the last two days the false alarms came back:

The sun shines from a different direction. The snow has melted. Meltwater is dripping.

A good dataset for this task covers spring, winter, summer and autumn, all the birds, different views from the window and different weather. I have already written a very long article here about how to build a dataset.

It seems to me that such a task needs at least 2-3 thousand frames per bird species in varied conditions.

There is nothing like that yet.

Dataset generation

The dataset can be extended automatically by generating distortions (augmentations). This greatly increases robustness. I did not implement every possible augmentation; you can do more and improve quality. What I did:

- Mirroring the pictures

- Rotating the pictures by angles within 15 degrees

- Cropping the pictures (5-10%)

- Changing the brightness of the image channels in different combinations
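A rough sketch of three of these augmentations on a tiny image stored as nested lists (rows of three-channel pixels), so that it runs without OpenCV or NumPy. Rotation is left out because it needs interpolation; in practice a library routine would do all of this. The function names and gain values are illustrative assumptions, not the project's code.

```python
def mirror(img):
    """Flip the image horizontally."""
    return [row[::-1] for row in img]


def crop(img, fraction):
    """Cut `fraction` (e.g. 0.05-0.10) of the height and width off each side."""
    h, w = len(img), len(img[0])
    dy, dx = int(h * fraction), int(w * fraction)
    return [row[dx:w - dx] for row in img[dy:h - dy]]


def scale_channels(img, gains):
    """Multiply each colour channel by its own gain, clipping to 0..255."""
    return [[[min(255, max(0, int(round(v * g)))) for v, g in zip(px, gains)]
             for px in row] for row in img]


# 10x10 test image where the first channel of every pixel encodes its column index
image = [[[x, 100, 200] for x in range(10)] for _ in range(10)]
augmented = scale_channels(crop(mirror(image), 0.1), (1.0, 1.2, 0.8))
print(len(augmented), len(augmented[0]), augmented[0][0])
```

Chaining the functions with randomly chosen parameters turns each labeled frame into several training samples that keep the same label.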

And these could be added:

- Homography

- Splitting a picture into 2 parts and gluing them into a new one. This augmentation worked very well in this competition.

- Blanking out square patches of the image with different colors

- Nonlinear transformations

To be honest, I did not fully implement all of these distortions; that needs more time. And augmentation still cannot beat the quality of a real dataset. This is not Kaggle, where you fight for fractions of a percent.

Network Setup and Startup

Installation

One of the main questions I wanted to answer for myself was whether ML frameworks for CV can run on simple devices such as the Raspberry Pi.

Thank God someone had already thought about this for me. There is a useful repository on GitHub, with almost no instructions.

On an RPi B+, Caffe and everything it needs can be built and installed in about a day (you have to come back once an hour and enter the next command). On an RPi 3, as I understand it, it should be much faster (2-3 hours).

To avoid cluttering the article with a pile of Linux commands, I will just drop a link here , where I described them all. Caffe builds! It works!

Initially I planned to use some undemanding network such as CaffeNet, or even VGG16. But the author of the Caffe port for RPi strongly advised using SqueezeNet. I tried it and liked it: it is fast and takes fairly little memory. Accuracy is of course not at ResNet level.

The first attempt to deploy the network was not very successful: it ate 500 MB of RAM while I had only 400 MB free. I quickly realized the main problem was the output layer, which came from ImageNet with 1000 outputs. I needed only a dozen output neurons. That immediately reduced the network size to 150 MB.

The network itself is very interesting. Its accuracy is comparable to AlexNet, with 50 times fewer parameters according to the authors. It implements the following principles:

- 3x3 convolutions are replaced by 1x1 convolutions. Each such replacement reduces the number of parameters 9 times.

- The remaining 3x3 convolutions are fed only a small number of input channels.

- Downsampling is done as late as possible, so that the convolutional layers have large activation maps.

- Fully connected layers at the output are dropped entirely. Instead, the recognition neurons are fed directly from the convolution layer through average pooling.

- Adding an analogue of residual layers
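The arithmetic behind the first two principles can be checked with a small worked example. The channel counts below are illustrative assumptions chosen to resemble a SqueezeNet "fire" module (a 1x1 "squeeze" layer feeding parallel 1x1 and 3x3 "expand" layers); biases are ignored.

```python
def conv_params(kernel, in_channels, out_channels):
    """Number of weights in a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * in_channels * out_channels


def fire_params(in_channels, squeeze, expand1x1, expand3x3):
    """Weights in a fire-style block: squeeze 1x1, then parallel 1x1 and 3x3 expands."""
    return (conv_params(1, in_channels, squeeze)
            + conv_params(1, squeeze, expand1x1)
            + conv_params(3, squeeze, expand3x3))


plain = conv_params(3, 96, 128)     # ordinary 3x3 layer, 96 -> 128 channels
fire = fire_params(96, 16, 64, 64)  # same input and output width via a fire-style block
print(plain, fire, round(plain / fire, 1))
```

The 3x3 branch is cheap here only because the squeeze layer has already reduced its input to 16 channels, which is the second principle in action.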

As a result, there is a local component:

And a global one built from these local ones:

Good ideas. On an RPi 3 it apparently runs in real time (my feeling is that it should give 10-15 fps).

On my RPi B+ it gave 1.5-2 frames per second. Honestly, I was not counting on more.

The authors of the Caffe port ran the network from C++ for better performance. I moved to Python instead: development is much faster.

Network training

First, as I said above, I had to change the last (output) layer:

```
layer {
  name: "conv10_BIRD"
  type: "Convolution"
  bottom: "fire9/concat"
  top: "conv10"
  convolution_param {
    num_output: 3
    kernel_size: 1
    weight_filler {
      type: "gaussian"
      mean: 0.0
      std: 0.01
    }
  }
}
layer {
  name: "conv10_Q"
  type: "Convolution"
  bottom: "fire9/concat"
  top: "conv10_Q"
  convolution_param {
    num_output: 3
    kernel_size: 1
    weight_filler {
      type: "gaussian"
      mean: 0.0
      std: 0.01
    }
  }
}
```

I replaced it with two outputs. One gives the presence of a bird plus its type. The other gives the quality.

The final speed of this setup on the RPi B+ is ~2-3 seconds per frame including preprocessing (if the code were cleaned of unnecessary conversions and the network trained on the format OpenCV delivers directly, it would be 1.5-2 seconds).

In practice, training the "quality" output is still a headache. I tried three approaches (yes, one could do it properly with dedicated loss layers, but I was lazy):

- Nine output neurons, each with an L2 (Euclidean) loss. The solution was pulled toward the mean. No good.

- Nine output neurons whose targets are not 1/0 but spread around the expected value. For example, for a frame labeled "4": 0, 0, 0.1, 0.4, 0.9, 0.4, 0.1, 0, 0. This Gaussian-like smoothing compensates for label noise in the sample. Training more or less worked, but I did not like the accuracy.

- Three neurons with SoftMax at the output: "no bird", "bird of poor quality" (value "0" in the quality metric) and "bird of normal quality" (values "1-8" in the quality metric). This method worked best. The statistics are mediocre, but it works somehow. In addition, I gave this layer a small loss weight (0.1) during training.

Left - the last. He is the only one who has ever worked.
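The second and third labeling schemes can be sketched roughly like this. It is only an illustration: `sigma`, `peak`, and the function names are assumptions, and the Gaussian bump reproduces the shape of the example targets above, not their exact numbers.

```python
import math


def gaussian_targets(label, n_outputs=9, sigma=1.0, peak=0.9):
    """Spread a quality label over neighbouring outputs with a Gaussian bump.

    sigma and peak are assumed values, not taken from the article.
    """
    return [
        peak * math.exp(-((i - label) ** 2) / (2.0 * sigma ** 2))
        for i in range(n_outputs)
    ]


def three_class_label(has_bird, quality):
    """Map an annotation to the 3-way SoftMax target.

    0 = no bird, 1 = bird of poor quality (quality 0),
    2 = bird of normal quality (quality 1-8).
    """
    if not has_bird:
        return 0
    return 1 if quality == 0 else 2


targets = gaussian_targets(4)
```

The three-class mapping throws away most of the quality scale, which is probably why it tolerates noisy labels better than the nine-neuron variants.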

Bird classification on the dataset is fine: 88-90% correct. That said, of course, every single blue tit is lost. After I collected more data, the quality improved.

It improved slightly more thanks to the increments to the dataset (described above).

Information output, Telegram

On to the last mile: the pictures need to reach the user. There were several options:

- Leave them on the network drive. Dreary: you can only look at them from home.

- Post to Twitter. The downside: I do not use it and have no habit of it. Besides, who on earth needs that kind of spam in their feed (sometimes 100 birds fly in per day).

- Email. I have tried it, I know. Awful. No. Granted, I once built control of a telescope network on top of mail clients, but I did not want to do that again.

- Telegram. Something new for me, though it had been on my phone for half a year already and I had used it 5 times.

- Write my own HTTP client.

After reading about Telegram and making sure it was not so scary, and that it also had some mysterious “channels”, I decided to use it. I was afraid to start. It seemed the task would take a day or two. Finally I plucked up the courage to read the documentation and size up the work. I set aside an hour in the evening.

And then I was pleasantly surprised. Incredibly pleasantly surprised. That hour was almost enough to write and connect everything I had in mind. No, of course I am lying: I spent 2 hours. And then another hour and a half bolting on an unnecessary bell and whistle. That is how simple, convenient, and trouble-free it all is. In essence, to make a bot:

- Log in to Telegram.

- Open a chat with the "@BotFather" bot. Send /start and follow the instructions. In 15 seconds you have your own bot.

- Choose a convenient programming language and find a suitable wrapper. I took a Python one.

- The examples that come with it will cover 90% of the functionality you need.

As I understand it, handling the /start and /help commands is highly recommended.
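As a rough idea of what handling /start and /help involves, here is a small sketch that uses only the standard library. It is not the code from Capture.py and does not use the wrapper I chose: `reply_for`, `send_message_url`, and the /last command are hypothetical names for illustration. The only real Bot API pieces are the `https://api.telegram.org/bot<token>/<method>` URL scheme and the `sendMessage` method with its `chat_id` and `text` parameters.

```python
from urllib.parse import urlencode

API_ROOT = "https://api.telegram.org"  # public Bot API endpoint

# /last is a made-up command name for this example.
HELP_TEXT = "Commands: /start, /help, /last (latest bird photo)"


def reply_for(text):
    """Pick a reply for an incoming message text."""
    stripped = text.strip()
    # "/help@SomeBot" is how commands look in group chats: drop the suffix.
    command = stripped.split()[0].split("@")[0] if stripped else ""
    if command == "/start":
        return "Hello! " + HELP_TEXT
    if command == "/help":
        return HELP_TEXT
    return "Unknown command. " + HELP_TEXT


def send_message_url(token, chat_id, text):
    """Build the sendMessage request URL; no network call is made here."""
    query = urlencode({"chat_id": chat_id, "text": text})
    return "{}/bot{}/sendMessage?{}".format(API_ROOT, token, query)


url = send_message_url("TOKEN", 42, reply_for("/help"))
```

A real bot would fetch the resulting URL (or let the wrapper do it) for every incoming update; the dispatch logic stays the same.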

If I ever build a smart home, I have a feeling it will not do without this thing.

What the bot can do (the link to the bot is below):

- Send the last frame with a bird on request. Later I will split this into 2 commands: just a frame and a good frame.

- Turn on the “send all new shots with birds from the feeder” mode. Every time a bird arrives, its photo is sent to everyone subscribed. Since this whole setup runs on the Raspberry Pi, I capped the number of people who can connect to this mode at 15 so as not to overload it. The last 10 people will be reset periodically so that someone else can take a look. This mode is also duplicated in a channel: @win_feed.

- Report how many birds came today. A simple question, a simple answer.

- The mysterious "inline mode". This is a Telegram feature that lets you send a request to the bot while typing text in any chat. I do not know why I added it. I got so carried away that I could not stop. The query returns the last 5 photos with birds, a sort of "archive lookup". I did it mostly as an experiment.
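The subscriber cap for the "send all new shots" mode could look something like the sketch below. The class and method names are hypothetical, and treating "the last 10 people" as the 10 most recently subscribed is an assumption made for the example.

```python
class SubscriberList:
    """Keeps at most `limit` chat ids for the "send every new shot" mode."""

    def __init__(self, limit=15):
        self.limit = limit
        self.chat_ids = []  # oldest subscriber first

    def subscribe(self, chat_id):
        """Return True if the chat is subscribed after the call."""
        if chat_id in self.chat_ids:
            return True
        if len(self.chat_ids) >= self.limit:
            return False  # full: protect the Raspberry Pi from overload
        self.chat_ids.append(chat_id)
        return True

    def reset_last(self, count=10):
        """Drop the most recently added subscribers to free up slots."""
        if count > 0:
            del self.chat_ids[-count:]
```

Calling `reset_last()` on a timer frees 10 of the 15 slots, so new people can subscribe while the first 5 keep receiving photos.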

The bot is combined with recognition. Its code is in the sources, in the Capture.py file.

The links to the bot and to the channel with its output are below, at the end of the article.

And this is what spam mode looks like:

Further development

I have a few ideas on how to improve this and what to do next:

- Switch to the RPi camera. Possibly pick some good camera specifically for this task.

- Collect a more complete dataset: hang the feeder at another window, collect data in the summer, collect data at the country house, and so on.

- Recognize more than one species of bird. At least the notorious blue tit.

There are more global plans too. Ideally, by next winter I would like to make a fully autonomous feeder, hang it at the country house, and manage it from the city. But that requires solving a number of problems. First, find a decent provider and build a system that does not burn through an insane amount of traffic. Second, build a feed dispenser that can be controlled remotely. Third, package this whole miracle properly into a single bundle.

Time budget

I did much of this in parallel with other tasks, so the estimates may be off. If you want to build the same thing, my source code will let you skip part of the way, but you will still have to repeat a lot.

- Bringing up the old RPi: testing, checking, connecting WiFi, connecting network drives - 3-4 hours.

- Building the observation mock-up and hanging it up - 1 hour.

- Writing the first dataset collection program - 0.5 hours.

- Reviewing, sorting, and labeling the dataset - 5 hours, 2 of them by my wife.

- Installing Caffe on the RPi - about 10-15 hours: upgrading the system, building pip modules, etc. The process runs purely in the background: once an hour you check what has built and what has not, and start it again.

- Reading the SqueezeNet manuals, training, launching the network, optimizing, testing, comparing, writing the training program and tests - about 4-5 hours + 4 hours of training time.

- Reworking the motion-detection program for recognition - 1 hour.

- Telegram bot (all the time in total) - 4 hours.

- Analysis of the collected dataset, analysis of the results, additional training, etc. - 3 hours + 5 hours of computer time.

In calendar time, the whole thing took three or four weeks. I think that with the source code and a newer RPi you could do it 2-3 times faster.

Sources

Below are links to what it all looks like when running, links to all the source code, and links to all the frameworks, guides, and forums I used.

How to test

There are several limitations:

- Recognition and the Telegram bot both run on the Raspberry Pi. Obviously it does not have much power, and it may well go down under the Habr effect. So I deliberately limited one part: the "send a fresh picture" function, which is the heaviest one. Only the first 15 people get access to it. I tested it with 5-6 people and it works fine. I will also reset the list periodically so that anyone interested has a chance to turn it on. In addition, this function is duplicated in the Telegram channel. In case the bot gets overloaded and cannot withstand the Habr effect, I have registered a spare bot account. If that happens, I will write here that it has gone down, move everything to the spare, and not publish its address. Pictures will then be viewable only in the channel. Later I will bring the bot back so that anyone interested can test it.

- Birds. In January they flew to the feeder constantly, around 200 visits a day. But as time went on there were fewer and fewer. I do not quite understand why: maybe the flock migrated, or found a nicer place to eat, or overate on seeds, or sensed spring. Over the last week, 2-3 birds flew in per day, and on some days none at all. So if you connect and there are no birds, I apologize.

- There are false detections. Not often, but they happen. Usually they are caused by some effect that had not been observed before and suddenly appeared, so they often come in series. On top of that, it is spring now. I think that once the snow melts (in 2-3 days), either the false detections will pour in ten times more often, or they will go away.

- Birds fly between 8 am and 6 pm (Moscow time).

- The feeder will not run forever. I want to take the hardware apart and improve it. Plus, the snow will melt soon. I will keep it on for a week, then switch it off. I will definitely bring it back next winter, most likely in a much more reasonable form.

I hope I have scared you.

Here is a link to the bot - @WindowFeeder_bot

And here again the channel - t.me/win_feed

Source code

Project repository.

Here are the programs for labeling the dataset, for Windows and for Ubuntu (for some reason OpenCV reports keyboard codes differently on each, and I was too lazy to sort it out).

Here is an example of network training. In my opinion it is very useful for Caffe. When I train something myself, I often end up with a similar program.

This is the main code. It implements motion detection, the Telegram bot, and the neural network for recognition.

Here is the photo dataset. Most photos come with a text file that contains two digits. The first is the bird type. The second is the image quality (relevant only when there is a bird). There are also pictures without text files; those never contain a bird. I took part of the dataset from VOC2012 to create a subsample of images completely unrelated to the topic. So if you see cats or dogs, do not be surprised.
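If you want to read these labels in your own scripts, a loader might look like the sketch below. It rests on assumptions not stated above: that the text file sits next to the image with the same base name and a .txt extension, and that the first two digits found in it are the bird type and the quality. `read_label` is a hypothetical helper, not part of the repository.

```python
import os
import re


def read_label(image_path):
    """Return (bird_type, quality) for an image, or None if there is no bird.

    Assumes the label file shares the image's base name and ends in .txt,
    and that its first two digits are the bird type and the quality.
    """
    label_path = os.path.splitext(image_path)[0] + ".txt"
    if not os.path.exists(label_path):
        return None  # pictures without a text file never contain a bird
    with open(label_path) as handle:
        digits = re.findall(r"\d", handle.read())
    if len(digits) < 2:
        raise ValueError("expected two digits in " + label_path)
    return int(digits[0]), int(digits[1])
```

Returning `None` for a missing file keeps the "no text file means no bird" convention in one place instead of scattering existence checks through the training code.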

If you collect your own dataset, adding mine to it should improve stability.

Instructions, helpful commands, etc.

I left a fairly large amount of RPi configuration detail out of this article. On my blog I covered some of the configuration steps in more detail.

I also left out some ideas that went nowhere; they are there too. In total there are 4 notes on the topic: 1, 2, 3, 4.

The Caffe version for the Raspberry Pi was taken from here.

It comes with almost no installation description. You can read about it on my blog, or try following the closest guide (skipping the hdf5 part).

A guide to running OpenCV on the RPi.

A useful guide to creating a bot in Telegram. In general, I took most of the examples for it from here. A good repository (API for Python).

P.S.

If anyone wants their own birds recognized and has an RPi but no computer set up to train the network on their data, send me a dataset labeled in the format described above. I will retrain with my own data added and publish the result for everyone.

P.P.S.

I was very afraid that no birds would fly in today and it would be a flop: article posted, feeder empty. But one has already arrived and made me happy. Maybe there will be more.

Source: https://habr.com/ru/post/322520/
