Tarantool: load testing

In the article "Tarantool: Good, Bad, Evil" a simple voting service was described, with a working example in PHP. We saw how easy it is to connect to this NoSQL database and use it from our programs. However, one important question was left unanswered: why? What performance benefits does NoSQL offer compared to conventional databases?

Let's go!

To answer this question, I will test one of my servers: a virtual machine with 1 GB of memory and one processor core with the following parameters:

```
processor  : Intel(R) Xeon(R) CPU E5-26xx (Sandy Bridge)
cpu MHz    : 1999.999
cache size : 4096 KB
bogomips   : 3999.99
```

The SSD-based disk subsystem is quite decent:

```
hdparm -t /dev/sda1

/dev/sda1:
 Timing buffered disk reads: 484 MB in 3.00 seconds = 161.13 MB/sec
```

Our hosting provider states that 100 Mbit/s of bandwidth is available to the virtual server. Although our own tests showed much higher network speed, we take this limit as given. Software versions: nginx 1.6.2, php/php-fpm 5.6.30, Tarantool 1.7.3.


For testing we use the wrk utility. After several runs with different wrk parameters and some light tuning of nginx, we ended up with the following nginx configuration:

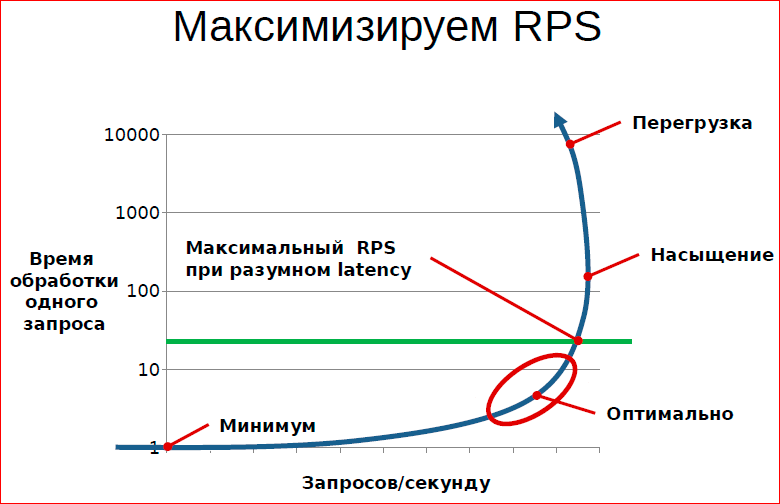

worker_processes 1; events { worker_connections 1024; multi_accept on; use epoll; } http { # Timeouts keepalive_timeout 60; # TCP options tcp_nodelay on; tcp_nopush on; # Compression gzip on; gzip_comp_level 5; … } The wrk utility eventually ran with 50 parallel queries. If you put fewer requests, you can not achieve maximum server performance. If you put more requests - 100 and higher, then, despite some performance increase request per second, delay began to grow rapidly. Ultimately, an increase in the number of parallel requests led to losses. However, on this point there is a beautiful illustration:

From the article by Konstantin Osipov Principles and techniques for processing queues

Tarantool test results

The network ping between the server under test and the load generator was 32 ms. To keep the results objective, the server was restarted after the trial runs. The following data was obtained:

```
wrk -c50 -d60s -t4 http://ugly.begetan.me/good
Running 1m test @ http://ugly.begetan.me/good
  4 threads and 50 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    54.48ms   10.15ms 441.17ms   95.62%
    Req/Sec   220.76     19.43   270.00     74.65%
  52760 requests in 1.00m, 320.86MB read
Requests/sec:    878.72
Transfer/sec:      5.34MB
```

We got a little under 900 requests per second with an average response time of 54 ms. Is that a lot or a little? First, recall what our service's script does when a page is requested:

- The script sees a new client and tries to read cookies, which are not present yet.

- Tarantool generates a unique uuid.

- Tarantool executes an upsert command that records the client's uuid, time, IP address and User-Agent.

- The script calls a Tarantool procedure that selects the top 9 out of 16 thousand entries using the rating index.

All of this together takes about 1 millisecond on the lowest-tier VPS; you won't find anything cheaper! During the tests the session table held more than a million entries, and this did not affect server performance. Not bad, I think, don't you agree?
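The four steps listed above can be sketched in Python to make the data flow concrete. This is not the demo's PHP code: `InMemoryStore`, `handle_request` and the cookie name `uuid` are hypothetical, and plain dictionaries stand in for the Tarantool session table and the rating index.

```python
import time
import uuid
from dataclasses import dataclass, field


@dataclass
class InMemoryStore:
    """Hypothetical stand-in for the demo's Tarantool spaces: a session
    table keyed by uuid and a table of sticker ratings."""
    sessions: dict = field(default_factory=dict)  # uuid -> (time, ip, user_agent)
    ratings: dict = field(default_factory=dict)   # sticker_id -> rating

    def upsert_session(self, client_uuid, ip, user_agent):
        # Insert the session if the key is new, otherwise overwrite it.
        self.sessions[client_uuid] = (time.time(), ip, user_agent)

    def top(self, n):
        # An ordered (TREE) index in Tarantool keeps keys sorted, so reading
        # the top n is cheap; here we simply sort by rating, highest first.
        ranked = sorted(self.ratings.items(), key=lambda item: item[1], reverse=True)
        return ranked[:n]


def handle_request(store, cookies, ip, user_agent, top_n=9):
    client_uuid = cookies.get("uuid")     # 1. read the cookie (absent for a new client)
    if client_uuid is None:
        client_uuid = str(uuid.uuid4())   # 2. generate a unique uuid
    store.upsert_session(client_uuid, ip, user_agent)  # 3. record uuid, time, IP, User-Agent
    return client_uuid, store.top(top_n)  # 4. select the top entries by rating
```

A returning client sends its uuid cookie back, so step 3 updates the existing session instead of creating a new one.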

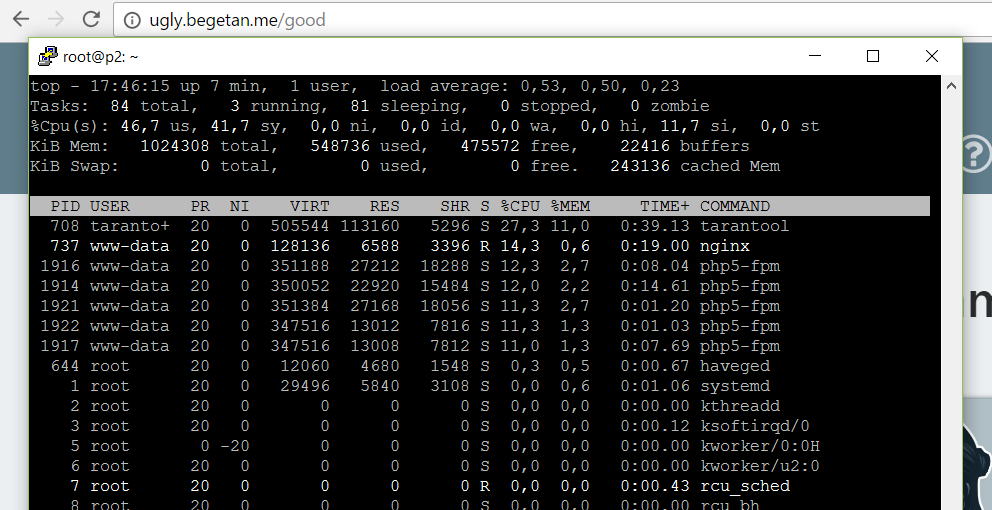

Additional information can be gathered from the top utility output. As already mentioned, the server was restarted before starting the tests. A screenshot was taken during the launch of the re-benchmark from the time of the reboot.

The screenshot shows how CPU time is distributed among tasks. About a quarter of the resources is consumed by Tarantool itself. As expected, the main consumer turned out to be the php-fpm workers, with nginx in third place. When I increased the number of parallel wrk connections, the CPU time consumed by nginx grew. As a result, php-fpm ran short of CPU, and errors 499 and 502 appeared in the logs.
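The "about 1 millisecond" figure and the quarter share can be tied together with a simple CPU budget: a fully loaded single core serving about 879 requests per second spends roughly 1/879 s of CPU per request across all processes. The sketch below is one way to reconcile the numbers, not necessarily how they were derived; it assumes the core really is saturated at this load and takes the 25% Tarantool share from the top screenshot.

```python
# Rough per-request CPU budget (estimate, not a measurement).
# Assumption: the single core is fully busy at this load level.
CORES = 1
THROUGHPUT_RPS = 878.72      # Requests/sec from the wrk run
TARANTOOL_CPU_SHARE = 0.25   # "about a quarter", read off the top screenshot

cpu_ms_per_request = CORES / THROUGHPUT_RPS * 1000
tarantool_ms_per_request = cpu_ms_per_request * TARANTOOL_CPU_SHARE
other_ms_per_request = cpu_ms_per_request - tarantool_ms_per_request  # php-fpm, nginx, ...

print(f"all processes:   {cpu_ms_per_request:.2f} ms of CPU per request")
print(f"Tarantool:       {tarantool_ms_per_request:.2f} ms")
print(f"everything else: {other_ms_per_request:.2f} ms")
```

This gives about 1.14 ms of CPU per request in total, of which roughly 0.28 ms falls on Tarantool.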

At this point, the proper approach would be to rewrite the application, replacing Tarantool with an ordinary SQL database such as MySQL or PostgreSQL, and compare the results. However, the author is not a fan of pointless work, so he came up with another option: we will test the nginx + php-fpm stack without Tarantool and see how performance changes.

Test results without Tarantool

First, let's see why we used exactly 50 simultaneous connections in wrk (the -c50 parameter). A single test connection loads the page over and over, one request after another. Because of network propagation delay and non-zero request processing time, the load generated by one connection is very light. So we increase the number of parallel connections and watch how the test results and server load change. As the number of simultaneous requests grows, the %CPU of the nginx process rises steadily. When the load becomes too high, the server runs out of CPU and some test requests fail: they either time out on the wrk side, or the web server returns a 502 error saying that the backend (php-fpm in our case) is unavailable.
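The reasoning about connection count follows from Little's law for a closed-loop load generator: each connection has exactly one request in flight, so throughput is roughly connections divided by latency. The sketch below plugs in the wrk figures and the 32 ms ping. The per-request service time is an assumption (one saturated core, so about 1/878.72 s), and the connection count it yields is only a lower bound that ignores queueing and variance.

```python
import math

# Figures from the wrk run and the ping measurement above.
CONNECTIONS = 50
AVG_LATENCY_S = 0.05448       # 54.48 ms average latency reported by wrk
MEASURED_RPS = 878.72         # Requests/sec reported by wrk
RTT_S = 0.032                 # network ping to the load generator
SERVICE_S = 1 / MEASURED_RPS  # assumption: one saturated core => ~1.14 ms per request


def closed_loop_rps(connections, latency_s):
    # Little's law: one request in flight per connection,
    # so throughput = connections / latency.
    return connections / latency_s


def min_connections(target_rps, rtt_s, service_s):
    # With zero queueing a connection completes 1 / (rtt + service) req/s,
    # so sustaining target_rps needs at least target_rps * (rtt + service).
    return math.ceil(target_rps * (rtt_s + service_s))


print(f"Little's law estimate: {closed_loop_rps(CONNECTIONS, AVG_LATENCY_S):.0f} req/s")
print(f"one connection, idle server: {1 / (RTT_S + SERVICE_S):.1f} req/s")
print(f"connections needed (lower bound): {min_connections(MEASURED_RPS, RTT_S, SERVICE_S)}")
```

The estimate comes out at about 918 req/s, within 5% of the measured 879, and a single connection manages only about 30 req/s, so at least 30 connections are needed just to keep the server busy. The empirically chosen 50 sits above that floor.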

The key point is that the test parameters were chosen specifically for our hosting setup and test server. To test a different setup, for example one on a local network, you need to choose other parameters.

Now let's look at the chart, and then I'll show you a couple of interesting points.

So, instead of appeals to the Tarantula in our program, we stuck a plug, like this, in a peasant way:

action_good ()

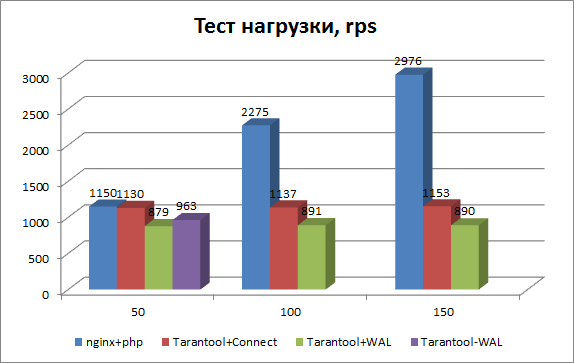

```php
function action_good() {
    $title = 'Top of the best stickers for Telegram';
    // $top = get_top(10, Tarantool::ITERATOR_LE);
    // Hard-coded stub instead of the Tarantool query: ten rows that differ
    // only in the image URL, from 18.png down to 9.png.
    $top = array();
    for ($i = 0; $i < 10; $i++) {
        $top[$i] = array(0, 7, 'Procy', 0,
            'https://s.tcdn.co/6fb/382/6fb38239-ce7c-3234-bc8a-e6267086b46a/' . (18 - $i) . '.png',
            1, -1);
    }
    $active_good = 'class="active"';
    $active_bad = '';
    include_once('top.html');
}
```

We also disabled and removed all other references to Tarantool and ran the script. As the graph shows (the nginx + php series), with Tarantool disabled, at 50 connections we got 1150 requests per second instead of 879, i.e. only about 30% faster. Impressive? No! In fact, this test tells us nothing, because we originally tuned the load so that the server was 100% busy. Now we have disabled one resource-hungry process (about 30% of the total CPU time), and the server is no longer fully loaded. Let's check:

The blue series keeps growing nicely as the load on the server increases further. With 150 test connections and no Tarantool, we got almost 3 thousand requests per second, versus about 1 thousand with Tarantool. Now the comparison is correct.

By the way, why did performance triple when Tarantool was turned off, if Tarantool used only about 30% of the CPU in the test? The answer is simple: after Tarantool was turned off, the load on the php-fpm workers decreased as well. The freed CPU was taken up by nginx, whose consumption grew as we kept increasing the number of connections until the test began to report errors.
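A quick model shows why Tarantool's 30% share alone cannot explain a threefold gain. If the server is CPU-bound (an assumption) and removing Tarantool only freed its own share, per-request CPU would drop to 70% and throughput would rise by a factor of 1/0.7. The inputs are the approximate figures from the text at 150 connections.

```python
# Naive model (assumption): the server is CPU-bound, so throughput is
# inversely proportional to the CPU time spent per request.
WITH_TARANTOOL_RPS = 1000     # "about 1 thousand" at 150 connections
WITHOUT_TARANTOOL_RPS = 3000  # "almost 3 thousand" at 150 connections
TARANTOOL_SHARE = 0.30        # Tarantool's share of CPU, "about 30%"


def rps_if_only_share_removed(rps, share):
    # Removing a process that used `share` of the CPU, with everything else
    # unchanged, cuts per-request CPU to (1 - share) of what it was.
    return rps / (1 - share)


naive_rps = rps_if_only_share_removed(WITH_TARANTOOL_RPS, TARANTOOL_SHARE)
observed_speedup = WITHOUT_TARANTOOL_RPS / WITH_TARANTOOL_RPS

print(f"naive prediction: {naive_rps:.0f} req/s ({naive_rps / WITH_TARANTOOL_RPS:.2f}x)")
print(f"observed:         {WITHOUT_TARANTOOL_RPS} req/s ({observed_speedup:.2f}x)")
```

The naive model predicts only about 1.4x, far below the observed ~3x, which is consistent with the explanation that dropping Tarantool also reduced the work done by the php-fpm workers.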

Now for one interesting detail: what is the Tarantool + Connect series on the graph? As usual, interesting things happen by accident. When I first turned off all calls to Tarantool procedures and ran the test, I noticed that the Tarantool process was still taking a decent chunk of CPU. It turned out that I had forgotten to comment out the connection setup code, namely this part:

```php
# Init database
$tarantool = new Tarantool('localhost', 3301, 'good', 'bad');
try {
    $tarantool->ping();
} catch (Exception $e) {
    echo "Exception: ", $e->getMessage(), "\n";
}
```

So the Tarantool + Connect series shows system performance with only the connection being initialized, without any actual data operations. It turned out that in our demo application most of the Tarantool process's CPU usage comes from establishing connections rather than from the actual computing tasks. In terms of CPU share, the exact numbers are 20% in the Tarantool + Connect test and 28% when Tarantool works as a full-fledged database.

What can we conclude? Either connecting to Tarantool is a resource-intensive operation, or the PHP driver is not optimal. Importantly, with a persistent connection, for example from a daemon written in C, Java or Go, the same operations would use up to 4 times less CPU. This is worth keeping in mind when writing programs.
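The 20% and 28% figures are enough to see where an estimate of this order comes from. The sketch below assumes that connection cost and query cost simply add up and that both runs were made at comparable load; neither is stated in the measurements, so treat the result as a rough estimate.

```python
# Tarantool's CPU share in two of the runs described above (from the text).
FULL_SHARE = 0.28     # full workload: connect + queries
CONNECT_SHARE = 0.20  # connection setup and ping only

# Assumption: the two costs simply add up, and both runs were at comparable load.
query_share = FULL_SHARE - CONNECT_SHARE        # CPU left for real data work
connect_fraction = CONNECT_SHARE / FULL_SHARE   # Tarantool CPU spent on connecting
reduction = FULL_SHARE / query_share            # if connections were reused

print(f"query work:       {query_share:.0%} of total CPU")
print(f"connection setup: {connect_fraction:.0%} of Tarantool's CPU")
print(f"with persistent connections: ~{reduction:.1f}x less Tarantool CPU")
```

Under these assumptions about 71% of Tarantool's CPU goes to connection setup, and reusing connections would cut its CPU use by roughly 3.5x.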

WAL or not WAL?

Finally, the last test, which is extremely simple but very interesting for developers. As we already know, Tarantool guarantees data durability thanks to its snapshot and write-ahead log (WAL) mechanisms. The first regularly writes a full image of the database to disk, and the second records every change in a dedicated log. In the event of a sudden shutdown or server crash, the changes are preserved and can be restored when the system restarts.
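The snapshot plus write-ahead log idea can be illustrated with a toy key-value store. This is a hypothetical sketch (`TinyStore` and its JSON file layout are invented for illustration), not Tarantool's implementation: every change is appended to the log and fsync'ed before it is applied in memory, a snapshot captures the full state and resets the log, and recovery loads the snapshot and then replays the log.

```python
import json
import os


class TinyStore:
    """Toy key-value store with a snapshot file plus a write-ahead log.
    Keys must be strings (JSON object keys). Illustration only."""

    def __init__(self, directory):
        self.snap_path = os.path.join(directory, "store.snap")
        self.wal_path = os.path.join(directory, "store.wal")
        self.data = {}
        torn = self._recover()
        self.wal = open(self.wal_path, "a", encoding="utf-8")
        if torn:
            self.snapshot()  # persist recovered state and drop the damaged log

    def _recover(self):
        # 1. Start from the latest snapshot, if there is one.
        if os.path.exists(self.snap_path):
            with open(self.snap_path, encoding="utf-8") as f:
                self.data = json.load(f)
        # 2. Replay every change logged since that snapshot.
        if os.path.exists(self.wal_path):
            with open(self.wal_path, encoding="utf-8") as f:
                for line in f:
                    try:
                        key, value = json.loads(line)
                    except json.JSONDecodeError:
                        return True  # torn write at the tail after a crash
                    self.data[key] = value
        return False

    def put(self, key, value):
        # Write-ahead: the change is on disk before it is applied in memory.
        self.wal.write(json.dumps([key, value]) + "\n")
        self.wal.flush()
        os.fsync(self.wal.fileno())
        self.data[key] = value

    def snapshot(self):
        # Write the full state atomically, then start a new, empty log.
        tmp_path = self.snap_path + ".tmp"
        with open(tmp_path, "w", encoding="utf-8") as f:
            json.dump(self.data, f)
            f.flush()
            os.fsync(f.fileno())
        os.replace(tmp_path, self.snap_path)
        self.wal.close()
        self.wal = open(self.wal_path, "w", encoding="utf-8")

    def close(self):
        self.wal.close()
```

In this toy, every `put` pays for an extra disk write and fsync; that I/O is the price of being able to replay changes made after the last snapshot.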

I ran the load test with the WAL turned off. At 50 connections, throughput increased from 879 to 963 rps. It is expected that turning off an extra log file on disk speeds up a system that is 100% loaded. However, the gain is close to measurement error and is definitely not worth the price you pay for it. It is better to be confident that your precious data is safe.

Instead of an afterword

Web developers used to argue: "Why write the web front end in a fast programming language? The database will slow everything down anyway!" Now that hardware, virtualization and NoSQL solutions have become so capable, the database is ceasing to be the application's bottleneck.


For me personally, one important question remains open: is Tarantool really as reliable a storage as its developers claim? "And what the hell, if not?", as the song goes. I would like to run a data-loss test called "Break Tarantool", but I definitely need help and advice from skeptics, haters, competitors and plain Mail.ru haters to simulate truly tough conditions. Because "Plato is my friend, but truth is dearer!" And don't forget to vote on the demo site: ugly.begetan.me! Otherwise I keep having to show the same raccoon from the TOP section.

Source: https://habr.com/ru/post/322266/
