Simple technology for classifying recognized pages of business documents based on the Template Matching method

The classification task is well known: it is required to assign an arbitrary object from a certain sample to one or several classes from a predetermined set of classes.

1. Statement of the problem

The classification algorithm can be built on the basis of machine learning, using some training selection of objects, about which it is known which classes they belong to.

Also well known is the Template Matching method, which consists in preparing templates for all possible classes. The decision on whether a test object belongs to a certain class is carried out by the criterion of the minimum (maximum) of a certain function of matching an object and its template (in [1] an object is considered - images of characters). This method is one of the simplest for understanding and its own implementation. In essence, it is necessary to solve the problem of representing an object with a certain pattern and choosing a matching function.

Consider the task of classifying recognized pages of business documents. Business documents used in document management, including in the exchange of documents between organizations, have a certain standardization, they can be both unstructured and structured. In banks or insurance companies, documents such as a power of attorney, a contract, a card with samples of signatures and seals, a charter, a contract, an invoice (invoice in English), registration certificates, etc. are often required. When creating and maintaining electronic archives, paper documents are digitized, and digital images of pages (page scans) can be recognized and analyzed. One of the tasks of the analysis is to classify the image of the page, consisting in checking the ownership of the image of the page to a particular class. Such a need arises in the control of images of documents attached by the user as electronic copies.

To analyze the information contained in the image of the document (in particular, the classification), you can use arbitrary text recognition programs (OCR). Currently, one of the most popular solutions is OCR Tesseract, for example, in the thesis [2] this OCR was chosen to recognize the body of archival documents for the following reasons: the possibility of free distribution, as well as the presentation of recognition results in the HOCR format (HTML OCR) information about the coordinates of the recognized words.

OCR Tesseract, depending on the degree of text noise and its own abilities, can recognize page images successfully or unsuccessfully. Systematic failure can be associated with the objective difficulties of recognizing certain classes of documents, for example, passports of a citizen of the Russian Federation, the recognition program of which was described by us in another article [3]. Typical OCR Tesseract errors can be divided into several types:

- E 1 : total failure to recognize the page, to the extent that not a single word is recognized;

- E 2 : the number of errors is so great that a person cannot understand the document from its textual representation in the HOCR format (for example, see the illustration above - it shows a real example of unsuccessful recognition of the “Certificate of registration in the IMNS” using OCR Tesseract version 3.04.00);

- E 3 : the page structure is incorrectly recognized, for example, when adjacent text fragments as a result were separated by a considerable distance;

- E 4 : the presence of a small number of errors, when in the majority of incorrectly recognized words there are 1-2 errors.

In this article we will consider the classification of pages only of such documents that are widely recognized by OCR Tesseract, under the assumption that errors like E 3 and E 4 are likely to occur.

Consider another task of classifying images of document pages. In the existing set of multipage image files, each of which contains pages of one or several documents, it is necessary to determine the boundaries of each of the available documents and assign the found documents to one of the previously known classes. The received portions of the pages are stored in the electronic archive as separate documents.

Next, we will talk about a simple method of classifying pages of documents based not on matching with pre-prepared class templates. The method allows to process single-page and multi-page documents, it allows to take into account some errors of OCR recognition.

2. Description of patterns and pattern matching

We suggest creating class templates based on keywords and a combination of several keywords.

The model of an unstructured document in the form of a structure over a set of keywords is fairly well known. For example, see the document model descriptions as

- vector model or "bag of words", i.e. multiset of words included in the document [4],

- language model that takes into account the word order (taking into account the probability of occurrence of a sequence of words) [5],

- a thematic model using themes consisting of sets of words [6, 7].

We will describe an approach to creating templates using the mechanisms listed above and taking into account errors and features of recognition.

The basic signs will be recognized words in the form of symbols of the word and its properties:

W = ( T ( W ), m 1 ( W ), m 2 ( W ), m 3 ( W ), m 4 ( W ), m 5 ( W ), m 6 ( W )),

where T ( W ) is the core of the word , that is, the sequence of characters and characters "?" and "∗", the latter are used to specify a set of words, for example, "∗ ab? c" defines a set of words with an arbitrary number of characters, with the last characters ab? c, in the place of "?" arbitrary character may be present.

m 1 ( W ) is the distance threshold when comparing two words T ( W ) and W r . To compare two words, it is necessary to choose a metric (distance function), we used the Levenshtein distance [8] or a simplified function, which takes into account only the number of operations of replacing one character with another. In the latter case, the distance d ( T ( W ), W r ) is calculated only for words of the same length, for words with signs "?" the corresponding characters are not involved in the comparison, and for words with the signs "∗" the necessary substrings are compared. If d ( T ( W ), W r ) < m 2 ( W ), then the words are identical, otherwise - different. In the simplest case, m 2 ( W ) is the maximum number of replacement operations during the transformation of T ( W ) to W r .

m 2 ( W ) - restriction on the length of the word W r when comparing T ( W ) and W r makes sense for words containing "∗".

m 3 ( W ) - case-sensitive feature (case sensitive / insensitive) when comparing characters.

m 4 ( W ) is a rectangle of a word , consisting of coordinates in the range [0,1], used to check whether a word falls into the specified rectangle.

m 5 ( W ) - a sign of negation of the word , indicating that the given word should not be in the text. With the help of m 5 ( W ), it is possible to organize a check of both the presence and absence of the word W in the text.

m 6 ( W ) - will be described below.

When searching for the word T ( W ) in the text of the recognized document T, we check the truth of the condition

∃ W r ∈ T : d ( T ( W ), W r ) < m 2 ( W ) ( F ( W r ) ∩ m 4 ( W ) = F ( W r ))

where F ( W r ) is the rectangle of the word W r , that is, the coordinates of the word extracted from the recognition results in the HOCR format, are abscissas and ordinates normalized to the width and height of the original image. When comparing, the parameter m 3 ( W ) is used. Generally speaking, several words { W r } can be found that are identical to the keyword T ( W ).

For the case m 5 ( W ) = 0, we define the predicate P ( W , T ) = 1 if in the text of the recognized document T at least one word is found identical to the word W , and P ( W , T ) = 0 if the text does not found one word identical to the word w . If the word had the sign m 5 ( W ) = 1, then P ( W , T ) = 0, if in the text of the recognized document T at least one word is found identical to the word W , and P ( W , T ) = 1, if No words were found in the text that are identical to the word W.

We also need estimates of d ( T ( W ), W r ) words identical to the word T ( W ).

Next, we define the placement of words as an ordered set of words R = W 1 , W 2 , ..., for which the presence of each word in the recognized document T is checked:

P ( W 1 , T ) ∧ P ( W r , T ) ∧ ... (1)

and additionally for each pair of words W i and W i +1 the condition is checked

r ( W i +1 ) - r ( W i ) < m 5 ( W ), (2)

where the function r ( W ) gives the number of the word W in the text T ordered by the OCR mechanisms. That is, the parameter m r6 ( W ) determines the distance between adjacent words in the arrangement, for m r ( W i +1 ) = ∞, condition (2) is not checked, but only the order of the words is checked.

For placement of R, m 7 ( R ) can be specified - an allocation rectangle, the meaning of which is similar to a rectangle of a word, it is checked whether the words T 1 , W 2 ..., completely found in the text T , are contained in rectangle m 6 ( R ).

The fulfillment of conditions (1), (2) and the conditions for checking compliance with the frame m 7 ( R ) of a rectangle determines the predicate of the location belonging to the text R to the text T : P ( R , T ) = 1. In the simplest case, the placement may consist of one single word, in the general case, in order to calculate P ( R , T ), it is necessary to search for sets that are identical to the words W 1 , W 2 ....

We define the estimate d ( R , T ) of placement as the minimum of the estimates of the words: min ( d ( W 1 , T ), d ( W 2 , T ), ...).

Now we define a combination as a set of placements S = R 1 , R 2 , ..., for which the presence of each of the placements in the recognized document T is checked:

P ( R 1 , T ) ∧ P ( R 2 , T ) ∧ ... (3)

The placement procedure is not important. In addition to condition (3), a comparison of the rectangles of all words from the combination with the m 8 ( S ) combination rectangle can be added. These conditions determine the membership predicate of the S combination to the text T : P ( S , T ) = 1.

We estimate the combination d ( S , T ) as the minimum of the estimates of the allocations included in the combination: min ( d ( R 1 , T ), d ( R 2 , T ), ...).

And finally, we define the pattern M as the set of combinations S 1 , S 2 , ..., for which the pattern belongs to the recognized text T is set by checking the expression

P ( M , T ) = P ( S 1 , T ) ∨ P ( S 2 , T ) ∨ ... (4)

In addition to condition (4), a comparison of the rectangles of all the words of the pattern with the m 8 ( M ) combination rectangle can be added.

We estimate the d ( M , T ) of the pattern as the maximum of the combinations that are included in the pattern: max ( d ( S 1 , T ), d ( S 2 , T ), ...).

To the existing comparisons of the template with the recognized text, we add checks for the compliance of some text properties (the number of characters on the page m 9 ( T ), the number of columns of text m 10 ( T )) with similar properties of the template m 9 ( M ), m 10 ( M ).

If for n classes the set of templates M 1 , ..., M n is ready, then the task of checking compliance with class M i of the recognized document page T is reduced to calculating the distance d ( M i , T ) using the scheme outlined and comparing this distance with the previously known threshold d 1 . If max ( M i ) < d 1 is true, then the document T corresponds to class i .

Explain the need to use the elements of the proposed templates.

Simple single errors of the form E 4 , often appearing in a word, can be ignored with the help of the signs "?" and "∗" in a word mask. To control the length of a word including the "∗" sign, the parameter m 2 can be used, for example, the keywords "CONTRACT", "Contracts" can be described by the kernel "CONTRACT ∗" with the restriction m 2 = 8, which excludes from consideration words like "contractual capacity". The core "? AGREEMENT" allows you to not distinguish between the words "AGREEMENT" and "AGREEMENT".

The threshold m 1 can be used to require a complete match of a keyword and a word from the text, for example, when m 1 = 0, the kernel "CONTRACT" when searching in the text does not allow the choice of the words "AGREEMENT" or "DOGOOR", differing in one character replacement operation.

The parameter m 3 also allows you to ignore character case recognition errors.

The word rectangle (placement, combination, pattern) m 4 provides a partial description in the document structure template by extracting keywords from the specified areas of the document image. Of course, the very borders of the rectangles cannot be specified precisely, due to the variability of the parts of the documents.

Errors of the E 3 type , that is, an incorrectly recognized page structure, can sometimes be ignored by the placement settings. Placement naturally describes a phrase or several adjacent words. In the case of splitting a text column into two columns due to recognition errors, the described placement will be found if you do not specify the distance between words m 6 .

The ability to check both the presence and absence of words, set by the parameter m 5 , allows you to create templates for similar documents, dividing them using keywords that are present in one class of documents and present in another.

The explanations show the usefulness of the described template scheme for the classification of texts recognized with errors. The described scheme is simple in the sense that creating a template in some cases is a simple matter, and the template itself is intuitive. For example, the document "Non-Residential Premises Rental Agreement" can be described with the following pattern:

- keywords:

- placements: R 1 = W 1 , R 2 = W 2 & W 3 & W 4 , R 3 = W 2 & W 5 & W 6 , R 4 = W 7 & W 8 & W 9 & W 10 , all frames there are no placements,

- combinations: S 1 = R 1 ∧ R 2 , S 2 = R 1 & R 3 , S 3 = R 3 , all combinations have no frames,

- pattern: M = S 1 & S 2 & S 3 , the pattern has a frame m 8 ( M ) = {(0.0.0.0), (0.3,0.9)}.

The task of choosing the best class is solved by calculating the distances d ( M 1 , T ), ..., d ( M n , T ), discarding such classes M j , which is true d ( M j , T )> d 1 , by ordering the resulting set in ascending order ( in essence, we want to choose one or several classes with a minimum penalty for not matching a template to a text) and saving one or several alternatives d ( M i 1 , T ), d ( M i 2 , T ), .... That is, in the best way, the text T corresponds to the class i 1, and the other classes i 2, ... are ordered by distance. Conflict resolution d ( M i 1 , T ) = d ( M i 2 , T ) in the simplest case, to conduct, abandoning the classification, in some cases, the conflict is eliminated by using additional features m 9 , m 10 .

3. Forming templates (training)

Obviously, the described pattern matching method is simple, but a certain complexity is present in the process of pattern formation, that is, in training.

Consider the process of forming templates on a real example for the flow of documents 45 classes. We prepared templates in several stages, focusing on keywords on the first page of multipage documents.

Stage 1. At the beginning we looked at many reference documents . For each class, several samples of ideal documents were prepared, in the recognition results of which there were no errors. Textual representations of these documents were converted into word bags, i.e. in multisets of words from which stop words were removed (short words, full name, numerical data, dates, etc.). Then, using simple reusable algorithms from these multisets, single words and phrases of several words were found, inherent in one of the classes. The focus was on characteristic words from the headings and titles of sections of the document. Thus, it was composed of several locations, well separating one class from the other. Thus, we searched for the core of keywords, and the remaining parameters were set by default. Immediately, several problem classes were identified, for example, the first page of documents of the Charter class could be represented by a single key word of the Charter, which is often found in other documents, for example, in documents of the Contract class. To eliminate this problem, we used two methods:

- the use of words that should not appear on the first page of documents of the “Charter” class,

- the use of the attribute m 9 ( T ) - the number of characters on the page - which well distinguished the pages of documents of the "Charter" class.

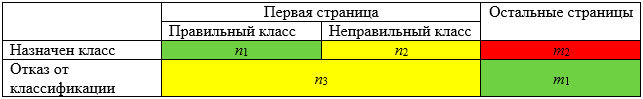

Stage 2. Further, despite some imperfections of the classification with the resulting templates, we moved on to a sample of documents consisting of 50-100 samples of real documents (single-page and multi-page), the recognition of which gave a wide range of errors of all types of 1 - 4 . Error analysis revealed a number of pages that required modifying the templates, and pages that could not be classified by our technology (primarily due to the large number of recognition errors). Modification of templates was reduced to the search for new placements and combinations, the search for words that should not appear in the document, as well as the choice of parameters for keywords, first of all, rectangles m 4 and distances between words m 6 . The analysis of the classification results was carried out according to the following criteria:

Correctly classified pages are estimated by accuracy ( n 1 + m 2 ) / ( n 1 + n 2 + n 3 + m 1 + m 2 ), errors of false classification - as ( n 2 + m 2 ) / ( n 1 + n 2 + n 3 + m 1 + m 2 ), and refusals to classify as ( n 3 ) / ( n 1 + n 2 + n 3 + m 1 + m 2 ).

The most unpleasant classification error is the false classification of a non-first (intermediate and last) page of a multi-page document. This is explained by the subsequent storage of a multi-page document in the form of several parts and the need for correction that requires searching for the missing part of the document. For the classification of non-first pages, the described method was used, using intermediate and last page templates. The remaining classification errors (incorrect classification of the first page of a multi-page document or a single page of a single-page document) are corrected by simply replacing the class using the directory.

Stage 3. The first two stages are based on the use of rules (the actual templates) to be assigned to a particular class. For high-volume samples (3000–30000 samples of each class), machine learning is possible, for example, using the well-known method of constructing a binary decision tree CART (Classification and Regression Trees [9]). The initial data for learning are descriptions of the keywords known in the previous stages in the form of the core and features. On these signs several trees were built, for each class, according to the strategy one against all. If some document in the classification process was not recognized by one of the trees, a classification failure is generated. Of the known features of the CART method, we note the need for a sufficiently large amount of the training sample for stable learning. Because of this, we will not present the classification results obtained using the CART method, although in general they are superior to the classification results obtained through training in stages 1 and 2.

4. Experiments

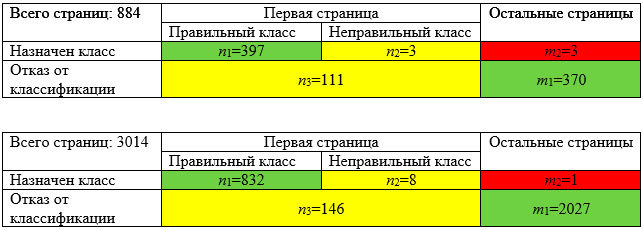

For the experiments, two test sets were used:

- containing images of documents of medium and poor quality of digitization, selected for the learning stage (884 pages),

- containing images of documents of average quality of digitization, obtained regardless of the stage of training (3014 pages).

The results of the classification are presented below in two tables:

It is clear from the above tables that the classification technology described by us gives an accuracy of 0.86 - 0.95, while a false classification does not exceed 0.01, the remaining errors relate to the refusals of classification. That is, the proposed method does not always work, but rarely offers the wrong class.

The speed of implemented classification (Release build using Microsoft Visual Studio 2013) is quite large: 3000 recognized pages are processed in about 1 minute on an Intel® Core (TM) i7-4790 computer CPU 3.60 GHz, 16.0 GB, Windows 7 prof 64-bit . However, the actual OCR Tesseract recognition required for the HOCR source file takes several seconds.

To reduce the proportion of pages left unclassified, the following possible steps are necessary:

- the use of binarization methods, which remove the background on contaminated documents and documents with a complex background,

- use of other OCR,

- the use of more efficient methods of classification based on the search for keywords, for example, the CART method described above.

Conclusion

The described classification technology was used in the projects implemented by Smart Engines for input and analysis of the flow of scanned documents.

The described technology is easy to understand and self-implementation for solving similar problems.

List of useful sources

- Gonzalez R., Woods R. Digital Image Processing. M: Technosphere, 2005. 1070 p.

- Smirnov SV. TECHNOLOGY AND SYSTEM OF AUTOMATIC CORRECTION OF RESULTS IN RECOGNITION OF ARCHIVAL DOCUMENTS. Thesis for the degree of candidate of technical sciences, SPT :, 2015. - 130 p.

- "Recognition of the Passport of the Russian Federation on a mobile phone" ( https://habrahabr.ru/company/smartengines/blog/252703/ )

- Martin D., Jurafsky D. Speech and Language Processing. Language recognition, computational language and language recognition. Pearson Prentice Hall, 2009. - 988 p.

- Wonder JM, Croft WB Proc. Conference on Research and Development in Information Retrieval. ACM. 1998. - P. 275–281.

- Indexing by Latent Semantic Analysis / S. Deerwester [et al.] // Journal of the American Society for Information Science. 1990. - V. 41, № 6. - P. 391.

- Chernyak EL. DEVELOPMENT OF COMPUTING METHODS OF TEXT ANALYSIS USING ANNOTATED SUFFIX TREES. Thesis for the degree of candidate of technical sciences, M :, 2016. - 124 with

- Levenshtein V.I. Binary codes with correction of fallouts, insertions and substitutions of symbols, - Moscow: Reports of the Academy of Sciences of the USSR, v.163.4, 1965. - 845-848 p.

- Breiman L., Friedman JH, Olshen RA, & Stone CJ Classification and regression trees. Monterey // CA: Wadsworth & Brooks / Cole Advanced Books & Software, 1984. - 368 p.

')

Source: https://habr.com/ru/post/321782/

All Articles