JavaScript start-up performance

As web developers, we know how easily web pages grow. But loading a page is much more than shipping bytes down the wire. Once the browser has downloaded the page's scripts, it still has to parse, interpret, and run them. In this article, we will look closely at this phase, find out why it can slow down your application's start-up, and see how to fix it.

Historically, we haven't spent much time optimizing the JavaScript parse/compile step. We tend to assume that scripts are parsed and executed instantly as soon as the parser reaches a <script> tag. That isn't quite the case. Here is a simplified diagram of how V8 works:


This is an idealized view of the pipeline we are working with.

Let's look at some key phases.

What slows down the loading of our web apps?

During start-up, the JavaScript engine spends considerable time parsing, compiling, and executing scripts. This matters: if the engine takes too long, it delays the moment users can start interacting with our site. Imagine they can see a button, but for several seconds it doesn't respond to taps. This degrades the user experience.

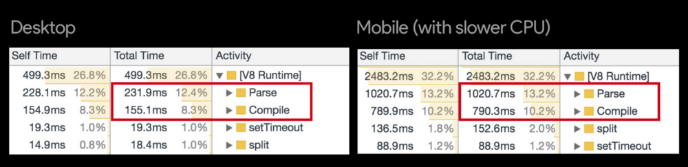

Parse and compile times for a popular site, measured with V8's Runtime Call Stats in Chrome Canary. Note how parsing and compiling, already slow on desktop, can become even slower on phones.

Start-up time matters for performance-sensitive code. In fact, V8 spends a lot of time parsing and compiling JavaScript on sites like Facebook, Wikipedia, and Reddit:

The pink areas (JavaScript) show time spent in V8 and Blink's C++, while orange and yellow show the time spent on parsing and compiling.

For many sites and frameworks, parse and compile time is a weak point. Sebastian Markbåge (Facebook) and Rob Wormald (Google) have said as much:

Parse/compile is a huge problem. I'll ask our team to share the data. However, you need to measure it separately.

- @sebmarkbage

The way I read this data, the main start-up cost in Angular is JS parsing, before we even touch the DOM.

- @robwormald

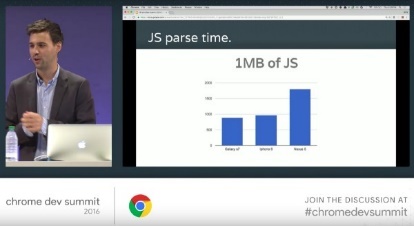

Sam Saccone reveals the cost of JS parsing in "Planning for Performance"

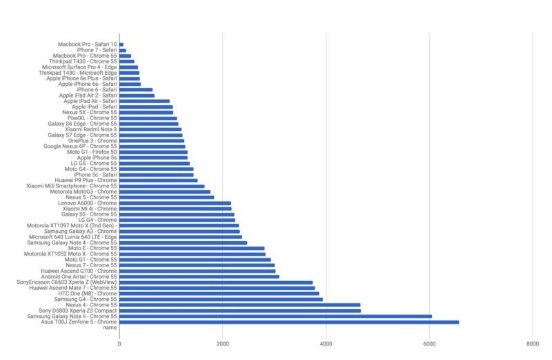

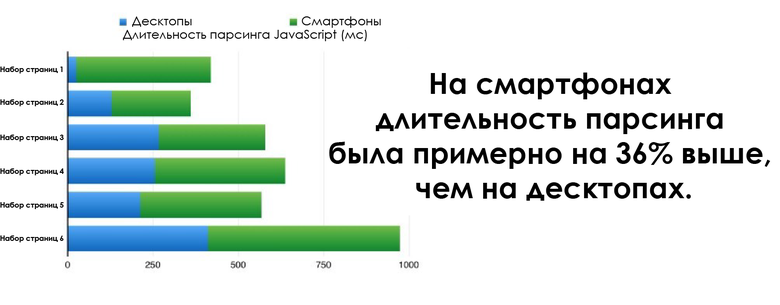

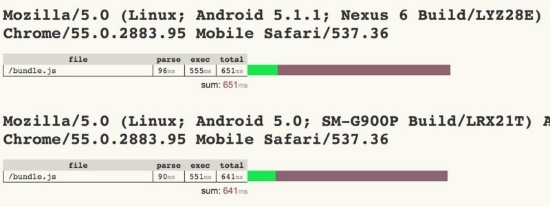

The web is becoming increasingly mobile, and it is important to understand that on phones, parsing and compiling can take 2-5 times longer than on desktop. Moreover, high-end phones perform very differently from an average phone like the Moto G4. This underlines the importance of testing on representative hardware (not just on high-end devices!) to check the quality of the user experience.

Parse times for a 1 MB bundle of JavaScript on desktop and mobile devices of different classes. Note how close a phone like the iPhone 7 is to a MacBook Pro, and how sharply performance drops on average devices.

If our web apps ship huge bundles, modern delivery techniques such as code-splitting, tree-shaking, and Service Worker caching can make a big difference. On the other hand, even a small bundle that is poorly written or relies on mediocre libraries can keep the main thread stuck compiling or calling functions for a long time. It is important to look at the whole picture and know exactly where the bottlenecks are.

Is the duration of JavaScript parsing and compiling a bottleneck for an average site?

You may be thinking: "But I'm not Facebook." And you may ask: "How long do parsing and compiling take on average sites?" Let's find out!

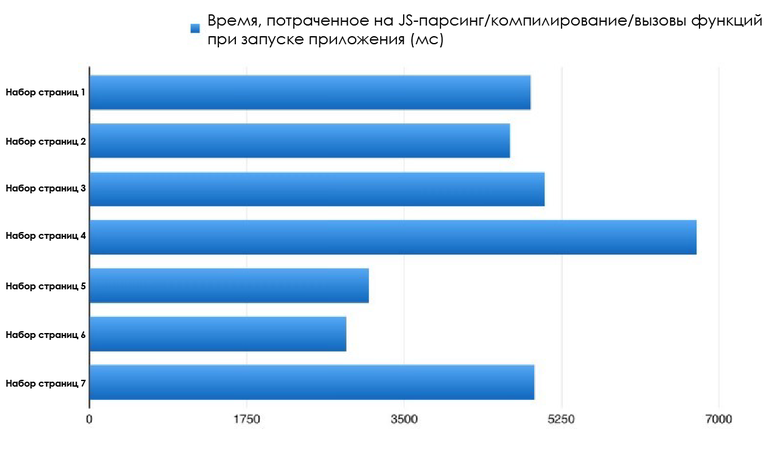

I spent two months measuring the performance of a large number of production sites (more than 6,000) built with different libraries and frameworks: React, Angular, Ember, and Vue. Most of the tests were run on WebPageTest, so you can easily reproduce them yourself or dig into the results. Here are some findings.

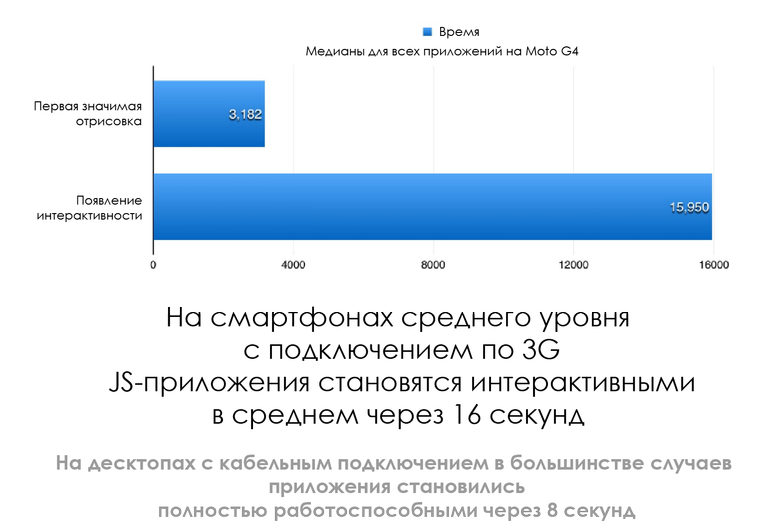

Apps became interactive after 8 seconds on desktop (over cable) and after 16 seconds on mobile (Moto G4 over 3G):

What accounts for this? On desktop, most apps spent about 4 seconds on average in start-up (parsing, compiling, and execution).

On phones, parse times were about 36% higher than on desktop.

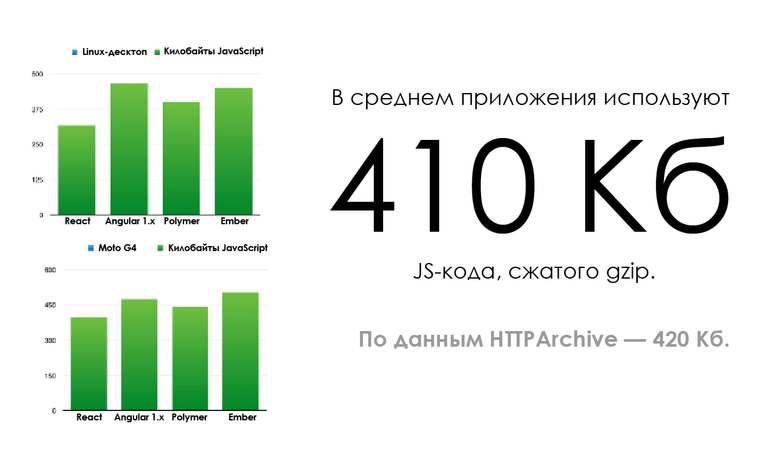

Was everyone shipping huge JS bundles? Not as huge as I had expected, but there is still room for improvement. On average, developers shipped 410 KB of gzipped JS for their pages. This is in line with HTTP Archive data: 420 KB of JS per page on average. The worst offenders sent up to 10 MB of script down the wire.

HTTP Archive statistics: an average of 420 KB of JavaScript per page

Script size matters, but it is not everything. Parse and compile time does not necessarily grow linearly with script size. Smaller JavaScript bundles generally load faster (depending on browser, device, and connection), but 200 KB of one script !== 200 KB of another, so parse and compile times can vary widely.

Measuring JavaScript parse and compile time today

Developer Tools in Chrome

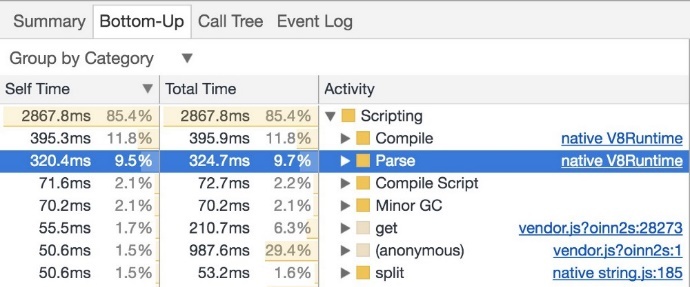

In the Timeline (Performance panel), the Bottom-Up, Call Tree, and Event Log views let you drill into the time spent on parsing and compiling. For a more detailed picture (for example, time spent on parsing, preparsing, or lazy compiling), you can turn on V8's Runtime Call Stats. In Canary, this is under Experiments > V8 Runtime Call Stats on Timeline.

Chrome Tracing

about:tracing, Chrome's lower-level tracing tool, lets you use the disabled-by-default-v8.runtime_stats category to get deeper insight into where V8 spends its time. V8 has published a new step-by-step guide to using it.

WebPageTest

The Processing Breakdown page in WebPageTest shows V8 compile, EvaluateScript, and FunctionCall time when tracing is enabled with the Chrome > Capture Dev Tools Timeline option.

You can also collect Runtime Call Stats by specifying the custom trace category disabled-by-default-v8.runtime_stats (Pat Meenan of WPT now does this by default!).

For a guide to getting the most out of this, see: https://gist.github.com/addyosmani/45b135900a7e3296e22673148ae5165b

User Timing

You can measure parse times with the User Timing API:

The third <script> is not important here. What matters is that the first <script> is separate from the second, so performance.mark() runs before the second <script> is reached. This approach can be skewed on repeat loads by the V8 preparser. You can work around this by appending a random string to the end of the script, as Nolan Lawson does in his benchmarks.
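A minimal JavaScript sketch of the same idea, assuming the script's source is available as a string: it wraps a `new Function()` call (a stand-in for the browser parsing a `<script>`) in `performance.mark()` / `performance.measure()` calls, and appends a random comment, in the spirit of Nolan Lawson's trick, so V8 cannot reuse earlier work on repeat runs. `measureParse` and its `label` parameter are illustrative names, and the number includes whatever compilation V8 chooses to do up front, not only parsing.

```javascript
// Sketch: measure how long V8 spends turning a source string into a function,
// using the User Timing API. Works in browsers and in Node 16+ (global `performance`).
function measureParse(source, label = "parse") {
  // Append a unique comment so V8 cannot reuse work from an earlier run of the
  // exact same source text (cache busting, as in Nolan Lawson's benchmarks).
  const busted = source + "\n// " + Math.random().toString(36).slice(2);

  performance.mark(label + "-start");
  const compiled = new Function(busted); // parses the source; does not run it
  performance.mark(label + "-end");
  performance.measure(label, label + "-start", label + "-end");

  const entries = performance.getEntriesByName(label, "measure");
  return { compiled, duration: entries[entries.length - 1].duration };
}

// Usage: a synthetic script with 1,000 small function declarations.
const source = Array.from({ length: 1000 }, (_, i) =>
  `function f${i}(x) { return x * ${i}; }`
).join("\n");
const { duration } = measureParse(source);
console.log(`parse + up-front compile: ${duration.toFixed(2)} ms`);
```

In a real page you would report such measures to your analytics rather than log them, and compare them across devices.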

To measure the impact of JavaScript parse time on real users, I use a similar approach with Google Analytics:

Custom parse measurements let me track JavaScript parse times for the real users and devices visiting my pages.

DeviceTiming

The DeviceTiming tool helps measure script parse/compile times in a controlled environment. It wraps local scripts in instrumentation code, so each time you open your pages from different devices (laptops, phones, tablets), you can compare parse and execution times locally. Daniel Espeset's "Benchmarking JS Parsing and Execution on Mobile Devices" covers this tool in more detail.
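DeviceTiming's own wrapper is not reproduced here; the following is a hypothetical sketch of what such instrumentation does: compile the script first, run it second, and record each phase with `performance.now()` so the two costs can be compared across devices. `instrument` is an illustrative name, and `new Function` stands in for loading a real script file.

```javascript
// Hypothetical instrumentation wrapper (not DeviceTiming's actual code):
// time the parse/compile step and the execution step separately.
function instrument(source) {
  const t0 = performance.now();
  const run = new Function(source); // parse (plus any eager compilation)
  const t1 = performance.now();
  const result = run();             // execute the script's top-level code
  const t2 = performance.now();
  return { parseMs: t1 - t0, execMs: t2 - t1, result };
}

// Usage: load the same page on a laptop, a phone and a tablet and compare.
const timing = instrument(
  "let s = 0; for (let i = 0; i < 1e5; i++) s += i; return s;"
);
console.log(timing.parseMs.toFixed(2), "ms parse,", timing.execMs.toFixed(2), "ms exec");
```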

How can I reduce the duration of JavaScript parsing?

- Ship less JavaScript. The less script you need to parse, the less time you spend on parsing and compiling.

- Use code-splitting to ship only the code a user needs to get through the current page, and lazy-load the rest. In many cases this avoids parsing a large amount of JS. Patterns like PRPL, which Flipkart, Housing.com, and Twitter use today, help implement this approach.

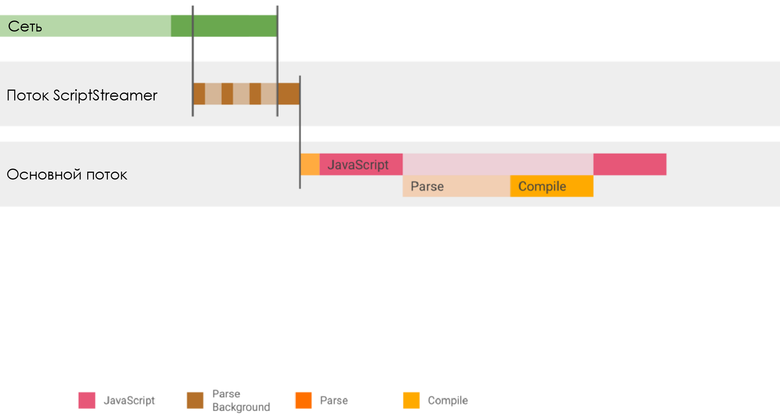

- Use script streaming. In the past, V8 advised developers to use async/defer to opt into script streaming, which cut parse time by 10-20%. At a minimum, this lets the HTML parser discover the resource earlier, hand the work off to the script-streaming thread, and avoid halting document parsing. Now that this is done for parser-blocking scripts too, I don't think there is anything else we need to do here. Since there is only one streaming thread, V8 recommends loading larger bundles first.

- Measure the parse cost of your dependencies, such as libraries and frameworks. Where possible, switch to dependencies that parse faster (for example, Preact or Inferno instead of React, since they need fewer bytes to boot up and have lower parse/compile times). Paul Lewis recently discussed the bootup cost of frameworks in his article. Sebastian Markbåge also pointed out a good way to measure a framework's start-up cost: render a view, tear it down, and render it again, which shows you how it scales. The first render warms up lazily compiled code, which a larger tree can then benefit from as it scales.
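To make the code-splitting advice in the list above concrete, here is a minimal sketch of a lazy loader, assuming a bundler that turns a dynamic `import()` into a separate chunk. `lazy` is a hypothetical helper: it defers the loader until first use, so the chunk is downloaded and parsed only when needed, reuses the same promise afterwards, and forgets a failed attempt so it can be retried.

```javascript
// Hypothetical helper: defer loading (and therefore parsing) a chunk until
// it is first needed, then reuse the same promise on later calls.
function lazy(loader) {
  let promise = null;
  return function load() {
    if (promise === null) {
      promise = loader().catch((err) => {
        // Forget the failed attempt so the next call can retry.
        promise = null;
        throw err;
      });
    }
    return promise;
  };
}

// Usage (hypothetical module path and element):
// const loadSettings = lazy(() => import("./settings-panel.js"));
// settingsButton.addEventListener("click", async () => {
//   const { renderSettings } = await loadSettings();
//   renderSettings();
// });
```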
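Sebastian Markbåge's "render, tear down, render again" approach from the list above can be sketched as follows. `render` and `teardown` are hypothetical callbacks you would supply for your framework; the sketch only assumes that the first call includes warm-up (lazy compilation) and the second mostly does not, so the difference approximates start-up overhead. Single runs are noisy, so repeat the measurement on real devices.

```javascript
// Sketch of "render, tear down, render again". `render` and `teardown` are
// callbacks you provide for your framework (hypothetical here).
function measureFrameworkStartup(render, teardown) {
  const t0 = performance.now();
  render();   // cold: may include lazy parsing/compiling of framework code
  const t1 = performance.now();
  teardown();

  const t2 = performance.now();
  render();   // warm: that code has already been compiled
  const t3 = performance.now();
  teardown();

  const coldMs = t1 - t0;
  const warmMs = t3 - t2;
  return { coldMs, warmMs, warmupMs: coldMs - warmMs };
}

// Usage with a toy stand-in for a framework that builds a tree of objects.
let tree = null;
const toyRender = () => {
  tree = Array.from({ length: 10000 }, (_, id) => ({ id, children: [] }));
};
const toyTeardown = () => { tree = null; };
console.log(measureFrameworkStartup(toyRender, toyTeardown));
```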

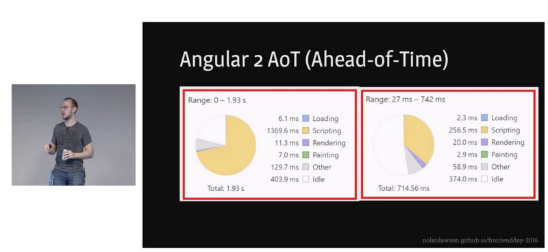

If the JavaScript framework you have chosen supports ahead-of-time (AoT) compilation, it can significantly reduce parse/compile time. Angular applications, for example, benefit from this:

From Nolan Lawson's talk "Solving the Web Performance Crisis"

What do browsers do to speed up parsing / compiling?

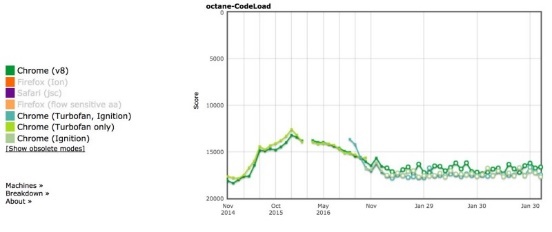

Developers aren't the only ones using real-world data to find ways to improve start-up time. V8 discovered that Octane, one of the older benchmarks, was a poor proxy for real-world performance on 25 popular sites. Octane can be a poor proxy:

- for JS frameworks (typically code that isn't mono- or polymorphic),

- for real-page app start-up (where most of the code is "cold").

Both of these cases matter for the web, yet Octane does not represent every kind of workload. The V8 team has put a lot of effort into improving start-up time:

Year over year, V8's start-up performance for JavaScript has improved by about 25%. We are focusing more closely on the performance of real-world applications.

- @addyosmani

Judging by Octane-Codeload, V8's parse performance across multiple pages has improved by about 25%:

Pinterest saw improved results here as well. In recent years there have also been a number of other confirmations of V8's progress on parse and compile time.

Code caching

From the article "Using code caching in V8".

Chrome 42 introduced code caching: a way to store a local copy of compiled code so that when the user returns to the page, script fetching, parsing, and compiling can all be skipped. On repeat visits, this gives Chrome roughly a 40% improvement in compile time, but there are some caveats:

- Code caching kicks in for scripts that are executed twice within 72 hours.

- For Service Worker scripts, the same condition applies.

- For scripts stored in Cache Storage by a Service Worker, code caching kicks in on first execution.

So if our code is subject to caching, V8 will skip parsing and compiling from the third load onward.
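The caching rules listed above can be modelled as a small simulation to see which visit actually benefits. This is a deliberately simplified model, not Chrome's implementation: load times are hours since the first visit, the 72-hour window comes from the list, cache eviction is ignored, and `simulateLoads` is a hypothetical name.

```javascript
// Simplified model (not Chrome's implementation) of the code-caching rules
// above. Times are in hours since the first visit; cache eviction is ignored.
const WINDOW_HOURS = 72;

function simulateLoads(loadTimes, { fromCacheStorage = false } = {}) {
  let hasCodeCache = false;
  let lastRun = -Infinity;

  return loadTimes.map((t) => {
    if (hasCodeCache) return "cache hit: parse/compile skipped";

    // Cache Storage scripts qualify on first execution; other scripts need
    // a previous execution within the 72-hour window.
    const qualifies = fromCacheStorage || t - lastRun <= WINDOW_HOURS;
    lastRun = t;
    if (qualifies) {
      hasCodeCache = true;
      return "compiled, code cache written";
    }
    return "compiled";
  });
}

// Regular script: the third load is the first one to skip parse/compile.
console.log(simulateLoads([0, 1, 2]));
// Script served from Cache Storage: the second load already benefits.
console.log(simulateLoads([0, 1], { fromCacheStorage: true }));
```

The first call returns `["compiled", "compiled, code cache written", "cache hit: parse/compile skipped"]`, which matches "from the third load onward".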

You can experiment with this via chrome://flags/#v8-cache-strategies-for-cache-storage. You can also launch Chrome with the flag --js-flags=profile-deserialization to see whether items are loaded from the code cache (they appear as deserialization events in the log). One caveat: only eagerly compiled code is cached. This is generally top-level code that runs once to set up global values. Function definitions are usually compiled lazily and are not always cached. IIFEs (for optimize-js users ;)) are also included in the V8 code cache, since they are compiled eagerly as well.
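To illustrate which parts of a script are likely to land in the code cache under the heuristics just described, consider this small example. The comments describe typical V8 behaviour as described above and may differ between versions; `config`, `formatPrice`, and `utils` are illustrative names.

```javascript
// Top-level code runs once and is compiled eagerly, so it is a candidate
// for the code cache.
var config = { apiBase: "/api", retries: 3 };

// A plain function declaration is usually only preparsed at load time and
// compiled lazily on its first call, so it may not end up in the cache.
function formatPrice(cents) {
  return (cents / 100).toFixed(2);
}

// A parenthesized IIFE hints that the function runs immediately, so V8
// compiles it eagerly and it can be cached together with the top-level code.
var utils = (function () {
  function clamp(x, lo, hi) {
    return Math.min(hi, Math.max(lo, x));
  }
  return { clamp: clamp };
})();
```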

Script streaming

Script streaming lets async or deferred scripts be parsed on a separate background thread as soon as downloading begins. This improves page load time by about 10%. As noted above, this now also works for synchronous scripts.

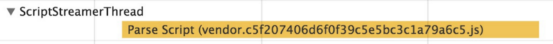

Today V8 allows all scripts, even parser-blocking <script src=""> ones, to be parsed on a background thread, so everyone should see some benefit. The one caveat is that there is only one streaming background thread, so it makes sense to put large or critical scripts there first. Be sure to measure to find out where the wins are. In practice, put <script defer> in the <head> so the browser can discover the resource early and then parse it on the background thread. You can also use DevTools Timeline to check whether streaming is applied to the right scripts. If one big script accounts for most of the parse time, it usually makes sense to make sure it is the one being streamed.

Improved parsing and compiling

We continue to work on a slimmer, faster parser that frees up memory and is more efficient with data structures. Today, the main cause of main-thread jank in V8 is the non-linear cost of parsing. Take a UMD snippet:

(function (global, module) { … })(this, function module() { my functions })

V8 doesn't know that module is definitely needed, so it won't compile it when the main script is compiled. When it later decides to compile module, it has to reparse all of the inner functions. This is what makes V8's parse times non-linear: every function at depth N is parsed N times, which causes jank.
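Here is the UMD shape from above fleshed out so that it runs (`globalThis` replaces `this` so it also works outside a classic browser script), followed by a toy model of the cost described: if a function at depth N is parsed N times, a chain of nested functions D levels deep costs 1 + 2 + ... + D = D(D + 1)/2 parses instead of D. The model, `myLib`, and `totalParses` are illustrative, not how V8 counts work internally.

```javascript
// The UMD-style shape from the text, made runnable.
(function (global, module) {
  global.myLib = module();
})(globalThis, function module() {
  // V8 cannot tell at parse time that this function is called right away,
  // so it may preparse it first and reparse it (and its inner functions)
  // when it is actually compiled.
  function outer() {
    function inner() { return 1; }
    return inner() + 1;
  }
  return { outer: outer };
});

// Toy model of the cost: in a chain of nested functions D levels deep,
// the function at depth N is parsed N times, so the total is 1 + 2 + ... + D.
function totalParses(depth) {
  let total = 0;
  for (let n = 1; n <= depth; n++) total += n;
  return total;
}

console.log(totalParses(4)); // 10 (versus 4 if each function were parsed once)
```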

V8 developers are already working on collecting information about inner functions during the initial compile, so that any later compiles can skip those inner functions. This should give a big boost to module-style functions.

For more on this, see "The V8 Parser(s) - Design, Challenges, and Parsing JavaScript Better".

V8 developers are also exploring moving part of JavaScript compilation during start-up to a background thread.

Precompiling JavaScript?

Every few years, the idea pops up that engines should offer a way to precompile scripts so we don't waste time parsing or compiling code. Instead, a build-time or server-side tool could simply generate bytecode. My view is that shipping bytecode can increase load time (bytecode is larger), and for security you would probably need to sign the code and process it. V8's current position is that precompiling is unlikely to bring much benefit, but the team is open to discussing ideas that could speed up start-up. V8 is also trying to be more aggressive about compiling and caching scripts when you update your site in a Service Worker.

A discussion of precompiling with Facebook and Akamai, along with my notes on the topic, can be found here.

The optimize-js parentheses hack for lazy parsing

JavaScript engines use lazy-parsing heuristics: most functions in a script are preparsed before a full parse is done (for example, to check for syntax errors). This is based on the idea that most pages contain JS functions that are executed lazily, if at all.

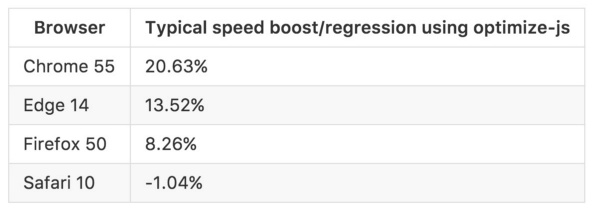

Preparsing can speed up start-up because functions are checked only for the minimum information the browser needs. This breaks down with IIFEs. Although engines try to skip preparsing for them, the heuristics don't always get it right, and this is where tools like optimize-js are useful.

optimize-js preprocesses your scripts and inserts parentheses around functions it knows (or assumes, based on heuristics) will be invoked immediately, which speeds up execution. For some functions (for example, IIFEs with !) this is a sure bet. For others it depends on heuristics (for example, in a Browserify or Webpack bundle it assumes that all modules are loaded eagerly, which is not always the case). V8 developers hope such hacks will eventually become unnecessary, but for now this is a useful optimization if you know what you are doing.
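The transformation can be shown by hand. The "after" lines below are the shapes optimize-js is designed to emit for an IIFE invoked with `!` and for a function expression passed as a callback (the heuristic case mentioned above). The parentheses change only how V8's heuristics treat each function, not what the code does, so both versions produce the same values; `runIt` and the variable names are illustrative.

```javascript
// Before: V8 may preparse this function first and then fully parse it again
// when it turns out to be invoked immediately.
var before = !function () { return "ran"; }();

// After: the parenthesized function is treated as "about to be called",
// so it is parsed and compiled eagerly in one go.
var after = !(function () { return "ran"; })();

// Callbacks are a heuristic guess: wrapping pays off only if the callback
// really is called right away, as it is here.
function runIt(fn) { return fn(); }
var callbackBefore = runIt(function () { return 1; });
var callbackAfter = runIt((function () { return 1; }));
```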

V8 developers are also working on reducing the cost of guessing wrong, which should further reduce the benefit of the parentheses hack in the future.

Conclusion

Start-up performance matters. A combination of slow parsing, compiling, and execution can be a bottleneck for pages that need to load quickly. Measure how long your pages spend in these phases and look for ways to speed them up.

For our part, we will keep working on improving V8's start-up performance. We promise ;)

Useful links

- Planning for Performance

- Solving the Web Performance Crisis by Nolan Lawson

- JS Parse and Execution Time

- Measuring Javascript Parse and Load

- Unpacking the Black Box: Benchmarking JS Parsing and Execution on Mobile Devices (slides)

- When everything is important, nothing is!

- The truth about traditional JavaScript benchmarks

- Do Browsers Parse JavaScript On Every Page Load

Source: https://habr.com/ru/post/321748/
