Juniper Contrail basics and how to set it up in a lab

To my surprise, I found that there is almost no information on Habr about Juniper Contrail (yes, the SDN), so I decided to fill the gap myself.


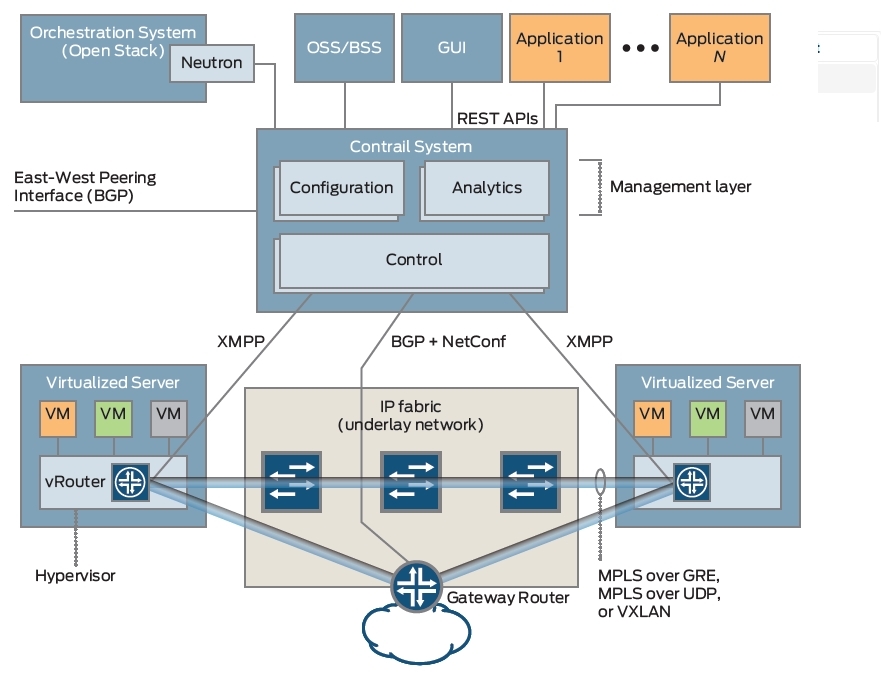

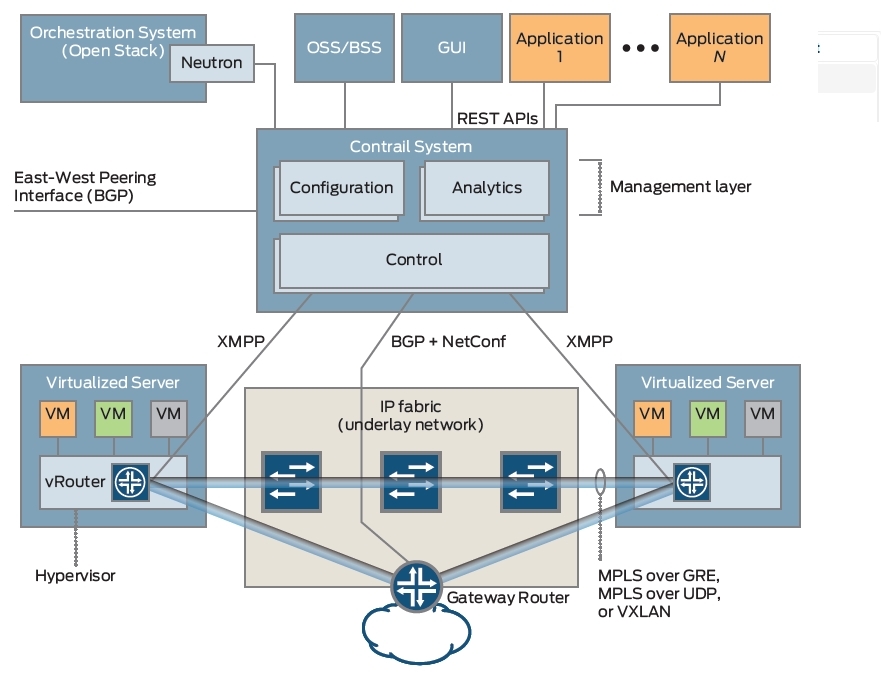


In this article I want to briefly explain what Contrail is, in what forms it is available, and how to set it up in a lab. More specifically, we will install Contrail Cloud 3.2.0.

Contrail Basics

The problem Contrail solves is building flexible, scalable virtual networks. A virtual network can be thought of as a replacement for the good old VLAN, implemented here roughly like a provider L3VPN/EVPN. From the point of view of the connected clients, it looks as if all their virtual machines and physical servers are plugged into an ordinary switch.


The simplest way to describe Contrail is as an SDN overlay. Software-Defined Networking is meant here in the sense of the classic ONF definition: the forwarding plane is separated from the control plane, and the control plane is centralized (in this case, in the Contrail controller).

The word "overlay" indicates that there are actually two networks:

- The physical "fabric", which "only" has to provide IP reachability between the connected physical devices (servers, gateway routers), and

- The overlay, a network made up of tunnels between servers and gateway routers. Tunnels can be MPLS over UDP, MPLS over GRE or VXLAN (listed in the default order of preference; there are some nuances, but in general the specific tunnel type is an implementation detail).
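To make the tunnel types a little more concrete, here is a minimal Python sketch that builds the encapsulation headers from their public specifications: the 8-byte VXLAN header (RFC 7348) and a 4-byte MPLS label stack entry (RFC 3032), which MPLS over UDP (RFC 7510) and MPLS over GRE carry inside an outer IP packet. The helper names are made up for illustration; this is not vRouter code.

```python
import struct

VXLAN_UDP_PORT = 4789      # IANA-assigned VXLAN port (RFC 7348)
MPLS_IN_UDP_PORT = 6635    # IANA-assigned MPLS-in-UDP port (RFC 7510)


def vxlan_header(vni: int) -> bytes:
    """8-byte VXLAN header: flags byte with the I bit (0x08), 24 reserved
    bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", 0x08 << 24, vni << 8)


def mpls_label_entry(label: int, tc: int = 0, bottom: bool = True,
                     ttl: int = 64) -> bytes:
    """4-byte MPLS label stack entry: 20-bit label, 3-bit traffic class,
    1-bit bottom-of-stack flag, 8-bit TTL."""
    if not 0 <= label < 2 ** 20:
        raise ValueError("label must fit in 20 bits")
    word = (label << 12) | ((tc & 0x7) << 9) | (int(bottom) << 8) | (ttl & 0xFF)
    return struct.pack("!I", word)


print(vxlan_header(5000).hex())    # 0800000000138800
print(mpls_label_entry(17).hex())  # 00011140
```

In both cases the small field at the front (the VNI or the MPLS label) is what tells the receiving end which virtual network the inner packet belongs to; the physical fabric only sees an ordinary UDP or GRE packet between two IP addresses.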

The Contrail controller manages the virtualized overlay network in the data center or provider cloud and does not interfere with the operation of the fabric at all. As for the questions "why?" and "why this one?", Contrail's strengths include:

- Scalability: the system is built on the same principles as the time-tested BGP/MPLS VPN solution.

- Flexibility: changing the configuration of a virtual network does not require changes to the physical network. This follows naturally from the overlay approach and the "smart edge, simple core" principle.

- Programmability: applications can manage the network as a system through the Contrail API.

- NFV (network functions virtualization), a.k.a. service chaining: a very important aspect that lets you steer traffic through specified virtualized network services (virtual firewall, cache, etc.). Juniper VNFs (vSRX, vMX) and third-party products are treated exactly the same.

- Powerful analytics and visualization, including "Underlay Overlay Mapping", where Contrail shows how traffic between virtual networks actually flows through the physical network.

- Open source: all source code is open, and only standard protocols are used between the components. Project site: www.opencontrail.org.

- Easy integration with existing MPLS VPNs.

Here is a diagram from the Contrail Architecture whitepaper showing how the system works as a whole:

At the top level sits the infrastructure orchestrator, most often OpenStack (Contrail can also be integrated with vCenter and Kubernetes). The network is configured there with high-level commands (the Neutron API), while the implementation details are left to the SDN controller.
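For a feel of what such a high-level command looks like, here is a minimal sketch of a Neutron v2.0 "create network" call built with the Python standard library. Assumptions: Neutron is reachable on its default port 9696 on the lab's OpenStack node (10.10.10.230), and `TOKEN` is a Keystone token obtained beforehand (the value shown is a placeholder). The request is only built, not sent. With Contrail, such a request is handled by the Contrail Neutron plugin, which passes it on to the configuration nodes.

```python
import json
import urllib.request

# Assumptions: default Neutron port on the lab OpenStack node, placeholder token.
NEUTRON_URL = "http://10.10.10.230:9696"
TOKEN = "<keystone-token>"


def create_network_request(name: str) -> urllib.request.Request:
    """Build (but do not send) a Neutron v2.0 create-network request."""
    body = json.dumps({"network": {"name": name, "admin_state_up": True}}).encode()
    return urllib.request.Request(
        NEUTRON_URL + "/v2.0/networks",
        data=body,
        headers={"Content-Type": "application/json", "X-Auth-Token": TOKEN},
        method="POST",
    )


req = create_network_request("blue")
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req, timeout=10)
```

The orchestrator only says "I want a network called blue"; which tunnels, labels and routing instances implement it is decided further down, by the SDN controller.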

The SDN controller itself consists of four main types of nodes:

- Configuration nodes provide the REST API to the orchestrator and other applications. They "compile" high-level instructions coming "from above" into low-level configuration applicable to the specific network.

- Control nodes receive the configuration from the configuration nodes and program the vRouters (see below) and physical routers.

- Analytics nodes collect flow statistics, logs, and more.

- Database nodes (not shown in the picture) run the Cassandra database, which stores the configuration and the data collected by analytics.
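Applications can also talk to the configuration nodes directly. Below is a minimal sketch of reading the list of virtual networks from the Contrail configuration API. Assumptions: the API server runs on the lab's config node (10.10.10.230) on its usual port 8082; depending on the authentication settings it may also require a Keystone token in an `X-Auth-Token` header, which is omitted here; the response shape used in `network_names` is assumed, and the sample body is made up for the example.

```python
import json
import urllib.request

# Assumption: Contrail API server on the lab config node, usual port 8082.
CONFIG_API = "http://10.10.10.230:8082"


def list_virtual_networks_request() -> urllib.request.Request:
    """Build (not send) a GET for the virtual-networks collection."""
    # Add an X-Auth-Token header here if your API server requires Keystone auth.
    return urllib.request.Request(CONFIG_API + "/virtual-networks", method="GET")


def network_names(response_body: str) -> list:
    """Join each fq_name, assuming a {"virtual-networks": [{"fq_name": [...]}]}
    response shape."""
    data = json.loads(response_body)
    return [":".join(vn["fq_name"]) for vn in data.get("virtual-networks", [])]


# Made-up body in the assumed shape, for illustration only.
sample = json.dumps({"virtual-networks": [
    {"fq_name": ["default-domain", "admin", "blue"], "uuid": "example-uuid"},
]})
print(network_names(sample))  # ['default-domain:admin:blue']
```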

Several roles can run on one physical (or even virtual) server; in a lab you can even build an all-in-one controller (with separate compute nodes).

Now a little about forwarding. All traffic between virtual machines or containers in the system goes through tunnels terminated on a vRouter, a physical router, or an OVSDB switch (I don't cover the last option here). The vRouter is a software component (a Linux kernel module by default, or user space when DPDK is used) and the second key part of the Contrail solution (the first being the controller itself). vRouters are installed and run on the cluster's compute nodes (Virtualized Server in the picture), i.e. in the same place where the VMs/containers are started.

To repeat: the main task of the vRouter is to terminate overlay tunnels. Functionally, the vRouter corresponds to a PE (provider edge) router in an MPLS VPN.
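The PE analogy can be illustrated with a toy model: each virtual network has its own routing table (a VRF), and a lookup returns either a local VM interface or a remote compute node plus the label to put on the tunneled packet, much like a PE router forwards VPN traffic. This is a hypothetical, heavily simplified sketch: the VRF name, interface name, addresses, labels and the gateway entry are invented, and it does not reflect the vRouter's actual data structures.

```python
import ipaddress
from typing import Dict, Optional, Tuple, Union

LocalPort = str                  # a local VM interface, e.g. a tap device
RemoteNextHop = Tuple[str, int]  # (remote compute node IP, MPLS label)
NextHop = Union[LocalPort, RemoteNextHop]

# One routing table (VRF) per virtual network; all entries are invented.
vrfs: Dict[str, Dict[ipaddress.IPv4Network, NextHop]] = {
    "blue": {
        ipaddress.ip_network("192.168.1.3/32"): "tap-vm1",             # VM on this node
        ipaddress.ip_network("192.168.1.4/32"): ("10.10.10.234", 17),  # VM on compute-2
        ipaddress.ip_network("0.0.0.0/0"): ("10.10.10.250", 30),       # hypothetical gateway
    },
}


def lookup(vrf: str, dst: str) -> Optional[NextHop]:
    """Longest-prefix match for dst inside a single VRF."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in vrfs[vrf] if addr in net]
    if not matches:
        return None
    return vrfs[vrf][max(matches, key=lambda net: net.prefixlen)]


print(lookup("blue", "192.168.1.3"))  # tap-vm1
print(lookup("blue", "192.168.1.4"))  # ('10.10.10.234', 17)
print(lookup("blue", "8.8.8.8"))      # ('10.10.10.250', 30)
```

Looking up 192.168.1.3 returns the local interface even though the default route also matches, because the /32 is more specific. A remote destination yields the tunnel endpoint and the label, which are the pieces needed to build the MPLS over UDP/GRE encapsulation.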

Contrail Editions

Contrail is available in the following variants (the features and code are the same in all of them; only the support options differ):

- OpenContrail is the free option. Installation is described in the Quick Start Guide.

- Contrail Networking is a commercial version supported by Juniper TAC.

- Contrail Cloud is also a commercial version; it includes both Contrail itself and Canonical/Ubuntu OpenStack, both supported by Juniper TAC.

In addition, Mirantis offers support for OpenContrail.

In this article I will follow the path of least resistance and show you how to install Contrail Cloud.

Install Contrail Cloud

We will install Contrail Cloud 3.2.0, the latest version at the time of writing. I used a single ESXi 6.0 server with a 4-core Hyper-Threading CPU and 32 GB of RAM. This is more than enough for testing (there is still room to run a couple of vMX instances).

The virtual lab layout looks like this:

Note that in this lab the compute nodes (like the controller nodes) are themselves virtualized, so virtual machines will run inside other virtual machines. For each compute node, open the hypervisor settings and enable the option "Expose hardware assisted virtualization to the guest OS". As you can see from testbed.py, the first two compute nodes host virtual machines using KVM, while the third hosts Docker containers.
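Whether that option took effect can be checked from inside a compute node: the guest CPU should then advertise the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in /proc/cpuinfo. A minimal sketch of that check; the function name is arbitrary:

```python
import os


def has_hw_virtualization(cpuinfo: str) -> bool:
    """True if a 'flags' line in /proc/cpuinfo text lists vmx or svm."""
    for line in cpuinfo.splitlines():
        if line.startswith("flags") and ":" in line:
            flags = line.split(":", 1)[1].split()
            if "vmx" in flags or "svm" in flags:
                return True
    return False


if __name__ == "__main__" and os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as f:
        print("hardware virtualization visible:", has_hw_virtualization(f.read()))
```

If this prints False on a KVM compute node, KVM guests cannot use hardware acceleration there.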

We deploy all 5 VMs with the parameters shown in the diagram. For the compute nodes, the parameters really depend on how many and what kind of VMs/containers you plan to run, but for the controller they are close to the minimum acceptable.

On all machines we install a minimal Ubuntu 14.04.4 (ubuntu-14.04.4-server-i386.iso). Exactly this version, as specified in the Contrail 3.2.0 documentation: this is very important! Otherwise it is very easy to run into package incompatibilities. And a minimal install, for the same reason: the bare minimum plus only OpenSSH Server. For some reason many people don't take such simple instructions seriously, and then things don't work :)
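Since the exact release matters so much, it may be worth checking it on every node before going further. A minimal sketch, assuming the usual /etc/lsb-release format on Ubuntu (`DISTRIB_DESCRIPTION="Ubuntu 14.04.4 LTS"`); note that upgrading base packages may bump that string to a later point release. The helper names are hypothetical.

```python
def parse_lsb_release(text: str) -> dict:
    """Parse KEY=value lines (values may be double-quoted) into a dict."""
    info = {}
    for line in text.splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            info[key.strip()] = value.strip().strip('"')
    return info


def is_expected_release(text: str, expected: str = "Ubuntu 14.04.4") -> bool:
    """True if the lsb-release text describes the release this guide relies on."""
    info = parse_lsb_release(text)
    return (info.get("DISTRIB_ID") == "Ubuntu"
            and info.get("DISTRIB_DESCRIPTION", "").startswith(expected))
```

On a node, pass the contents of /etc/lsb-release to `is_expected_release()`.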

Next, configure the addresses in /etc/network/interfaces and, to avoid bothering with DNS, add the hosts to /etc/hosts:

```
10.10.10.230 openstack
10.10.10.231 control
10.10.10.233 compute-1
10.10.10.234 compute-2
10.10.10.235 compute-3
```

We will install Contrail using the Fabric scripts. This is the easiest option for labs; for production there is also Server Manager (with Puppet under the hood), but that is a topic for another time. Fabric needs root login over SSH, which can be enabled like so:

```shell
echo -e "contrail\ncontrail" | sudo passwd root
sudo sed -i.bak 's/PermitRootLogin without-password/PermitRootLogin yes/' /etc/ssh/sshd_config
sudo service ssh restart
```

It is also a good idea to enable NTP on all nodes:

```shell
sudo apt-get install ntp
```

Next, on the first node, copy the package contrail-install-packages_3.2.0.0-19-ubuntu-14-04mitaka_all.deb to /tmp and install it:

```shell
dpkg -i /tmp/contrail-install-packages_3.2.0.0-19-ubuntu-14-04mitaka_all.deb
```

Run the installation script:

```shell
cd /opt/contrail/contrail_packages
./setup.sh
```

Now an important point: we need to create the file /opt/contrail/utils/fabfile/testbeds/testbed.py, which describes our cluster. Here is a working example:

```python
from fabric.api import env

# FOR LAB ONLY, DEFAULT IS 250
minimum_diskGB = 10

# MANAGEMENT USERNAME/IP ADDRESSES
host1 = 'root@10.10.10.230'
host2 = 'root@10.10.10.231'
host3 = 'root@10.10.10.233'
host4 = 'root@10.10.10.234'
host5 = 'root@10.10.10.235'

# EXTERNAL ROUTER DEFINITIONS
ext_routers = []

# AUTONOMOUS SYSTEM NUMBER
router_asn = 64512

# HOST FROM WHICH THE FAB COMMANDS ARE TRIGGERED
# TO INSTALL AND PROVISION
host_build = 'root@10.10.10.230'

# ROLE DEFINITIONS
env.roledefs = {
    'all': [host1, host2, host3, host4, host5],
    'cfgm': [host1],
    'openstack': [host1],
    'control': [host2],
    'compute': [host3, host4, host5],
    'collector': [host1],
    'webui': [host1],
    'database': [host1],
    'build': [host_build]
}

# DOCKER
env.hypervisor = {
    host5: 'docker',
}

# NODE HOSTNAMES
env.hostnames = {
    'host1': ['openstack'],
    'host2': ['control'],
    'host3': ['compute-1'],
    'host4': ['compute-2'],
    'host5': ['compute-3'],
}

# OPENSTACK ADMIN PASSWORD
env.openstack_admin_password = 'contrail'

# NODE PASSWORDS
env.passwords = {
    host1: 'contrail',
    host2: 'contrail',
    host3: 'contrail',
    host4: 'contrail',
    host5: 'contrail',
    host_build: 'contrail',
}
```

I think the meaning of the various sections is clear without further explanation.
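Before running fab, a quick sanity check of the role definitions can save a failed run. Below is a hypothetical helper (not part of the Contrail Fabric utilities) that takes plain copies of `env.roledefs` and `env.passwords` and reports hosts missing from `'all'`, hosts without a password, and hosts in `'all'` that have no role.

```python
def check_testbed(roledefs: dict, passwords: dict) -> list:
    """Return human-readable problems found in testbed-style role data."""
    problems = []
    all_hosts = roledefs.get("all", [])
    for role, hosts in roledefs.items():
        if role in ("all", "build"):
            continue  # 'all' is the reference list; 'build' is the fab host
        for host in hosts:
            if host not in all_hosts:
                problems.append(f"{host} has role '{role}' but is not in 'all'")
    for host in all_hosts:
        if host not in passwords:
            problems.append(f"no password for {host}")
        if not any(host in hosts for role, hosts in roledefs.items()
                   if role not in ("all", "build")):
            problems.append(f"{host} is in 'all' but has no role")
    return problems


# Plain-Python copy of the lab data (no Fabric import needed).
h = ["root@10.10.10.%d" % n for n in (230, 231, 233, 234, 235)]
roledefs = {
    "all": h, "cfgm": [h[0]], "openstack": [h[0]], "control": [h[1]],
    "compute": h[2:], "collector": [h[0]], "webui": [h[0]],
    "database": [h[0]], "build": [h[0]],
}
passwords = {host: "contrail" for host in h}
print(check_testbed(roledefs, passwords))  # []
```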

Just a few more steps. Install the packages on the remaining nodes:

```shell
cd /opt/contrail/utils/
fab install_pkg_all:/tmp/contrail-install-packages_3.2.0.0-19-ubuntu-14-04mitaka_all.deb
```

Upgrade the kernel to the recommended version:

```shell
fab upgrade_kernel_all
```

(The kernel version will change from 4.2.0-27-generic to 3.13.0-85-generic, and the nodes will reboot.)
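Since the nodes reboot after the kernel upgrade, it helps to wait until they are reachable again before moving on. A minimal sketch that polls a TCP port (SSH by default) until it accepts connections; the helper name and default timings are arbitrary choices. Once a node is back, `uname -r` on it should report 3.13.0-85-generic.

```python
import socket
import time


def wait_for_port(host: str, port: int = 22, timeout: float = 600.0,
                  interval: float = 5.0) -> bool:
    """Poll host:port until a TCP connection succeeds (True) or the overall
    timeout expires (False)."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable or timed out
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                return False
            time.sleep(min(interval, remaining))
```

For example, `wait_for_port("10.10.10.233")` returns True once SSH on compute-1 answers, or False after ten minutes.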

Next, on the first node, run:

```shell
cd /opt/contrail/utils/
fab install_contrail
```

And finally, the last step (the longest one; in my case it took about an hour):

```shell
fab setup_all
```

That's basically it. However, in this form, with the VM sizes given above, Contrail Cloud is rather sluggish. A few tricks speed it up (use them in labs only):

```shell
echo 'export JAVA_OPTS="-Xms100m -Xmx500m"' > /etc/zookeeper/java.env
sed -i.bak 's/workers = 40/workers = 1/' /etc/nova/nova.conf
# one sed call for both edits, so cassandra-env.sh.bak keeps the untouched original
sed -i.bak -e 's/#MAX_HEAP_SIZE="4G"/MAX_HEAP_SIZE="1G"/' -e 's/#HEAP_NEWSIZE="800M"/HEAP_NEWSIZE="500M"/' /etc/cassandra/cassandra-env.sh
```

(After this, restart the server. Altogether, these changes reduce memory usage by about one and a half times and noticeably improve responsiveness for actions such as launching a VM.)
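The sed commands above are plain literal substitutions, so it is easy to preview their effect before editing the real files. A minimal Python sketch that reproduces `s/old/new/` (first match on each line) for the Cassandra and Nova edits and lists the lines that would change; the helper names are hypothetical:

```python
CASSANDRA_EDITS = [
    ('#MAX_HEAP_SIZE="4G"', 'MAX_HEAP_SIZE="1G"'),
    ('#HEAP_NEWSIZE="800M"', 'HEAP_NEWSIZE="500M"'),
]
NOVA_EDITS = [("workers = 40", "workers = 1")]


def sed_substitute(text: str, old: str, new: str) -> str:
    """Mimic sed 's/old/new/' for a literal pattern: replace the first
    occurrence on every line."""
    return "\n".join(line.replace(old, new, 1) for line in text.split("\n"))


def apply_edits(text: str, edits) -> str:
    """Apply each (old, new) substitution in order, like chained -e options."""
    for old, new in edits:
        text = sed_substitute(text, old, new)
    return text


def changed_lines(before: str, after: str) -> list:
    """(old, new) pairs for lines that differ, for a dry-run review."""
    return [(a, b) for a, b in zip(before.split("\n"), after.split("\n")) if a != b]
```

For example, read /etc/cassandra/cassandra-env.sh into a string `before` and look at `changed_lines(before, apply_edits(before, CASSANDRA_EDITS))`. An empty result means the default lines in your file differ from the patterns, a case in which sed silently changes nothing. Note that the Nova pattern also matches prefixed options such as `osapi_compute_workers = 40`, exactly as the sed command does.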

Now you can open the web interfaces. OpenStack Horizon should be available at 10.10.10.230/horizon, and the Contrail Web UI at 10.10.10.230:8080. With our settings, the login is admin and the password is contrail.
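To check both interfaces from a machine on the lab network, a small probe is enough. A minimal sketch using only the standard library, assuming both UIs run on the node that testbed.py assigns the `openstack` and `webui` roles (10.10.10.230). If the Web UI redirects to HTTPS with a self-signed certificate, certificate verification fails and the probe reports it as unreachable.

```python
import urllib.error
import urllib.request

# Assumption: Horizon and the Contrail Web UI run on host1 (10.10.10.230).
LAB_URLS = {
    "OpenStack Horizon": "http://10.10.10.230/horizon",
    "Contrail Web UI": "http://10.10.10.230:8080",
}


def probe(url: str, timeout: float = 5.0) -> str:
    """Return a short status string for url instead of raising."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return f"HTTP {resp.status}"
    except urllib.error.HTTPError as exc:  # server answered with 4xx/5xx
        return f"HTTP {exc.code}"
    except OSError as exc:  # URLError, timeouts, refused connections
        return f"unreachable ({exc})"
```

Calling `probe(url)` for each entry of `LAB_URLS` returns a string such as `HTTP 200`, or the reason the connection failed.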

Conclusions

I hope this article helps those interested to start understanding and working with Contrail 3.2. Full product documentation is available on the Juniper website. I am collecting some examples of working with Contrail through its API here.

Good luck with your work, and stay in a good mood!

Source: https://habr.com/ru/post/321656/
